Research on Polarized Multi-Spectral System and Fusion Algorithm for Remote Sensing of Vegetation Status at Night

Abstract

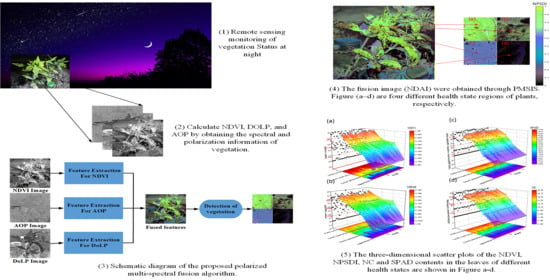

1. Introduction

2. Materials and Methods

2.1. Plant Materials and Experimental Design

2.2. Polarized Multispectral Low-Illumination-Level Imaging System

2.3. Measurement of Chlorophyll and Nitrogen Content

2.4. Image Acquisition and Analysis

2.4.1. The Normalized Vegetation Index

2.4.2. Polarization of Vegetation

2.4.3. Fusion Algorithm for Nighttime Plant Detection

3. Results

3.1. Experiments on Vegetation under Different Illumination Levels

3.2. Time Series Experiment of Vegetation

3.3. Outdoor Experiment at Night

4. Discussion

5. Conclusions

Author Contributions

Funding

Acknowledgments

Conflicts of Interest

| Level of Vegetation Health Status | Withered Leaf vs. Level-2 Stress Leaf | | | | Level-2 Stress Leaf vs. Level-1 Stress Leaf | | | | Level-1 Stress Leaf vs. Healthy Leaf | | | |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Index | Se | Sp | PPV | NPV | Se | Sp | PPV | NPV | Se | Sp | PPV | NPV |
| NPSDI | 1 | 0.91 | 0.93 | 1 | 0.91 | 0.87 | 0.88 | 0.91 | 0.89 | 0.92 | 0.91 | 0.90 |
| NDVI | 1 | 0.96 | 0.96 | 1 | 0.9 | 0.88 | 0.88 | 0.9 | 0.98 | 1 | 1 | 0.98 |
| NC | 1 | 1 | 1 | 1 | 0.98 | 0.96 | 0.96 | 0.98 | 0.96 | 1 | 1 | 0.96 |
| SPAD | 1 | 0.98 | 0.98 | 1 | 0.98 | 0.96 | 0.96 | 0.98 | 0.96 | 1 | 1 | 0.96 |
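
The table above reports four scores per pair of health levels. As a rough illustration of how such scores are usually derived, the sketch below computes them from binary confusion counts. It assumes that Se, Sp, PPV and NPV carry their usual meanings (sensitivity, specificity, positive and negative predictive value), which this page does not spell out. The function name `binary_metrics` and the example counts are hypothetical and are not taken from the paper.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class BinaryMetrics:
    se: float   # sensitivity: TP / (TP + FN)
    sp: float   # specificity: TN / (TN + FP)
    ppv: float  # positive predictive value: TP / (TP + FP)
    npv: float  # negative predictive value: TN / (TN + FN)


def binary_metrics(tp: int, fp: int, tn: int, fn: int) -> BinaryMetrics:
    """Compute Se, Sp, PPV and NPV from confusion-matrix counts."""

    def ratio(num: int, den: int) -> float:
        # Return NaN rather than raising when a class is empty.
        return num / den if den else float("nan")

    return BinaryMetrics(
        se=ratio(tp, tp + fn),
        sp=ratio(tn, tn + fp),
        ppv=ratio(tp, tp + fp),
        npv=ratio(tn, tn + fn),
    )


# Hypothetical counts for one pair of health levels (NOT data from the paper).
example = binary_metrics(tp=45, fp=5, tn=45, fn=5)
print(example)
```

Each column group in the table would correspond to one such two-class comparison, with the more stressed level presumably treated as the positive class; that choice is an assumption here.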

| No. | Acronym | English Full Name |
|---|---|---|
| 1 | PMSIS | Polarized multispectral low-illumination-level imaging system |
| 2 | NDVI | Normalized vegetation index |
| 3 | DoLP | Degree of linear polarization |
| 4 | AOP | Angle of polarization |
| 5 | NDAI | NDVI, DoLP and AOP fusion image |
| 6 | NPSDI | Night plant state detection index |
| 7 | SPAD | Chlorophyll content |
| 8 | NC | Nitrogen content |
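
For readers unfamiliar with the first three quantities in the acronym table, the following minimal sketch shows their textbook per-pixel definitions. It is not the authors' implementation. It assumes that reflectance is available in a red and a near-infrared band, and that intensities are measured behind a linear polarizer at 0°, 45°, 90° and 135°; the acquisition scheme is not described on this page. All function names are hypothetical. The NDAI fusion and the NPSDI index are not sketched because their formulas are not given here.

```python
import math


def ndvi(nir: float, red: float) -> float:
    """Standard NDVI: (NIR - Red) / (NIR + Red)."""
    denom = nir + red
    return (nir - red) / denom if denom else 0.0


def linear_stokes(i0: float, i45: float, i90: float, i135: float):
    """Linear Stokes components from four polarizer-angle intensities."""
    s0 = 0.5 * (i0 + i45 + i90 + i135)  # total intensity
    s1 = i0 - i90                       # horizontal vs. vertical
    s2 = i45 - i135                     # +45 deg vs. -45 deg
    return s0, s1, s2


def dolp(s0: float, s1: float, s2: float) -> float:
    """Degree of linear polarization: sqrt(S1^2 + S2^2) / S0."""
    return math.hypot(s1, s2) / s0 if s0 else 0.0


def aop(s1: float, s2: float) -> float:
    """Angle of polarization in radians: 0.5 * atan2(S2, S1)."""
    return 0.5 * math.atan2(s2, s1)


# Illustrative values only: ideal horizontally polarized light of unit intensity.
s0, s1, s2 = linear_stokes(1.0, 0.5, 0.0, 0.5)
print(ndvi(0.5, 0.1), dolp(s0, s1, s2), math.degrees(aop(s1, s2)))
```

The zero-denominator guards return 0.0 so that dark pixels, which are likely in low-illumination imagery, do not raise errors; a real pipeline might mask such pixels instead.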

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Li, S.; Jiao, J.; Wang, C. Research on Polarized Multi-Spectral System and Fusion Algorithm for Remote Sensing of Vegetation Status at Night. Remote Sens. 2021, 13, 3510. https://doi.org/10.3390/rs13173510
