1. Introduction

Single image super-resolution (SISR) aims to reconstruct a high-resolution (HR) image from its low-resolution (LR) counterpart. It has a wide range of real-world applications, such as medical imaging [1,2,3], video surveillance [4], remote sensing [5,6,7], high-definition display and imaging [8], super-resolution mapping [9], hyper-spectral imaging [10,11], iris recognition [12], and sign and number plate reading [13]. In general, this problem is inherently ill-posed because many HR images can be downsampled to an identical LR image. To address this problem, numerous super-resolution (SR) methods have been proposed, including early traditional methods [14,15,16,17] and recent learning-based methods [18,19,20]. Traditional methods include interpolation-based methods and regularization-based methods. Early interpolation methods such as bicubic interpolation are based on sampling theory but often produce blurry results with aliasing artifacts in natural images. Therefore, some regularization-based algorithms use machine learning to improve the performance of SR, mainly including projection onto convex sets (POCS) methods and maximum a posteriori (MAP) methods. Patti and Altunbasak [15] consider a scheme that uses a constraint to represent the prior belief about the structure of the recovered high-resolution image. The POCS method assumes that each LR image imposes prior knowledge on the final solution. Later work by Hardie et al. [17] uses the L2 norm of a Laplacian-style filter over the super-resolution image to regularize their MAP reconstruction.

Recently, a great number of convolutional neural network-based methods have been proposed to address the image SR problem. As a pioneering work, Dong et al. [21,22] propose a three-layer network (SRCNN) to learn the mapping function from an LR image to an HR image. Some methods focus mainly on designing a deeper or wider model to further improve the performance of SR, e.g., VDSR [23], DRCN [24], EDSR [25], and RCAN [18]. Although these methods achieve satisfactory results, the increase in model size and computational complexity limits their applications in the real world.

To reduce the computational burden or memory consumption, CARN-M [26] proposes a cascading network architecture for mobile devices, but the performance of this method drops significantly. IDN [27] aggregates current information with partially retained local short-path information by an information distillation network. IMDN [19] designs an information multi-distillation block to further improve the performance of IDN. RFDN [28] proposes a more lightweight and flexible residual feature distillation network. However, these methods are not lightweight enough and the performance of image SR can still be further improved. To build a faster and more lightweight SR model, we first propose a lightweight feature distillation pyramid residual group (FDPRG). Based on the enhanced residual feature distillation block (E-RFDB) of E-RFDN [28], the FDPRG is designed by introducing a dense shortcut (DS) connection and a cascaded feature pyramid block (CFPB). Thus, the FDPRG can effectively reuse the learned features with the DS and capture multi-scale information with the CFPB. Furthermore, we propose a lightweight asymmetric non-local residual block (ANRB) to capture the global context information and further improve the SISR performance. The ANRB is modified from the ANB [29] by redesigning the convolution layers and adding a residual shortcut connection. It not only captures non-local contextual information but also remains lightweight, benefitting from residual learning. By combining the FDPRG, ANRB, and E-RFDB, we build a more powerful lightweight U-shaped residual network (URNet) for fast and accurate image SR by using a step-by-step fusion strategy.

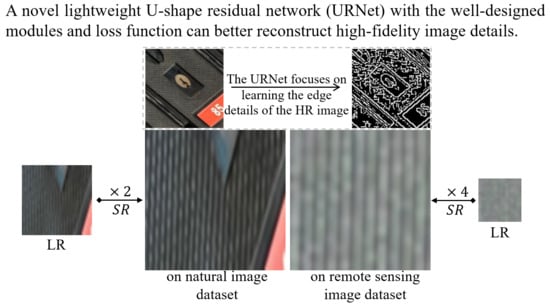

In the image SR field, L1 loss (i.e., mean absolute error) and L2 loss (i.e., mean square error) are usually used to measure the pixel-wise difference between the super-resolved image and its ground truth. However, using only pixel-wise loss will often cause the results to lack high-frequency details and be perceptually unsatisfying with over-smooth textures, as depicted in Figure 1. Subsequently, content loss [30], texture loss [8], adversarial loss [31], and cycle consistency loss [32] are proposed to address this problem. In particular, the content loss transfers the learned knowledge of hierarchical image features from a classification network to the SR network. For the texture loss, it is still empirical to determine the patch size to match textures. For the adversarial loss and cycle consistency loss, the training process of generative adversarial nets (GANs) is still difficult and unstable. In this work, we propose a simple but effective high-frequency loss to alleviate the problem of over-smoothed super-resolved images. Specifically, we first extract the detailed information from the ground truth by using an edge detection algorithm (e.g., Canny). Our model also predicts a response map of detail texture. The mean square error between the response map and detail information is taken as our high-frequency loss, which makes our network pay more attention to detailed textures.

The main contributions of this work can be summarized as follows:

- (1) We propose a lightweight feature distillation pyramid residual group to better capture the multi-scale information and reconstruct the high-frequency detailed information of the image.

- (2) We propose a lightweight asymmetric non-local residual block to capture the global contextual information and further improve the performance of SISR.

- (3) We design a simple but effective high-frequency loss function to alleviate the problem of over-smoothed super-resolved images.

Extensive experiments on multiple benchmark datasets demonstrate the superiority and effectiveness of our method in SISR tasks. It is worth mentioning that our designed modules and loss function can be combined with the numerous advancements in the image SR methods presented in the literature.

3. U-Shaped Residual Network

In this section, we first describe the overall structure of our proposed network. Then, we elaborate on the feature distillation pyramid residual group and the asymmetric non-local residual block, respectively. Finally, we introduce the loss function of our network, including reconstruction loss and the proposed high-frequency loss.

3.1. Network Structure

As shown in Figure 2, our proposed U-shaped residual network (URNet) consists of three parts: the shallow feature extraction, the deep feature extraction, and the final image reconstruction.

Shallow Feature Extraction. Almost all previous works use only a standard convolution as the first layer of the network to extract the shallow features from the input image. However, the extracted features are single scale and not rich enough. The importance of richer shallow features is ignored in subsequent deep learning methods. Inspired by the asymmetric convolution block (ACB) [50] for image classification, we adapt the ACB to the SR domain to extract richer shallow features from the LR image. Specifically, $3 \times 3$, $1 \times 3$, and $3 \times 1$ convolution kernels are used to extract features from the input image in parallel. Then, the extracted features are fused by using an element-wise addition operation to generate richer shallow features. Compared with the standard convolution, the ACB can enrich the feature space and significantly improve the performance of SR at the cost of only a few additional parameters and computations.
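The parallel branches can be illustrated with a minimal single-channel sketch. It assumes the kernel shapes of the original ACB design (one square 3×3 kernel plus a horizontal 1×3 and a vertical 3×1 kernel), omits biases and normalization, and uses hypothetical helper names (`conv2d_same`, `acb_forward`, `fuse_kernels`); it is not the authors' implementation. Because convolution is linear, summing the three branch outputs gives the same result as one convolution whose kernel is the sum of the three kernels placed on a 3×3 grid, which suggests why the extra branches add little cost at inference.

```python
def conv2d_same(image, kernel):
    """Zero-padded 'same' 2D cross-correlation for a single-channel image."""
    h, w = len(image), len(image[0])
    kh, kw = len(kernel), len(kernel[0])
    ph, pw = kh // 2, kw // 2
    out = [[0.0] * w for _ in range(h)]
    for i in range(h):
        for j in range(w):
            acc = 0.0
            for a in range(kh):
                for b in range(kw):
                    y, x = i + a - ph, j + b - pw
                    if 0 <= y < h and 0 <= x < w:
                        acc += image[y][x] * kernel[a][b]
            out[i][j] = acc
    return out


def acb_forward(image, k_square, k_hor, k_ver):
    """Run the three branches in parallel and fuse them by element-wise addition."""
    branches = [conv2d_same(image, k) for k in (k_square, k_hor, k_ver)]
    h, w = len(image), len(image[0])
    return [[sum(b[i][j] for b in branches) for j in range(w)] for i in range(h)]


def fuse_kernels(k_square, k_hor, k_ver):
    """Add the 1x3 kernel to the centre row and the 3x1 kernel to the centre column."""
    fused = [row[:] for row in k_square]
    for b in range(3):
        fused[1][b] += k_hor[0][b]
    for a in range(3):
        fused[a][1] += k_ver[a][0]
    return fused


# Toy input and kernels (arbitrary illustrative values).
image = [[1.0, 2.0, 0.0, 1.0],
         [0.0, 1.0, 3.0, 2.0],
         [2.0, 0.0, 1.0, 1.0]]
k_square = [[1.0, 0.0, -1.0], [2.0, 1.0, 0.0], [0.0, -1.0, 1.0]]
k_hor = [[1.0, 2.0, 1.0]]
k_ver = [[-1.0], [0.0], [1.0]]

parallel = acb_forward(image, k_square, k_hor, k_ver)
single = conv2d_same(image, fuse_kernels(k_square, k_hor, k_ver))
print(parallel == single)
```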

Deep Feature Extraction. We use a U-shaped structure to extract deep features. In the downward flow of the U-shaped framework, we use the enhanced residual feature distillation block (E-RFDB) of E-RFDN [28] to extract features because the E-RFDN has shown its excellent performance in the super-resolution challenge of AIM 2020. In the early stage of deep feature extraction, there is no need for complex modules to extract features. Therefore, we only stack $N$ E-RFDBs in the downward flow. The number of channels of the extracted feature map is halved by using a convolution for each E-RFDB (except the last one).

Similarly, the upward flow of the U-shaped framework is composed of $N$ basic blocks, including $N-1$ feature distillation pyramid residual groups (FDPRG, see Section 3.2) and an E-RFDB. Based on the U-shaped structure, we utilize a step-by-step fusion strategy to fuse the features of the downward flow and the upward flow by using a concatenation operation and the FDPRG. Specifically, the output features of each module in the downward flow are fused into the modules in the upward part in a back-to-front manner. This strategy transfers the information from a low level to a high level and allows the network to fuse the features of different receptive fields, which effectively improves the performance of SR. The number of channels of the feature map increases with each concatenation operation. Especially for the last concatenation, using the FDPRG would greatly increase the model complexity. Therefore, only one E-RFDB is used to extract features in the last upward flow.
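The back-to-front wiring can be sketched as follows. This is only an illustration of the fusion order under stated assumptions: the blocks are placeholders rather than real E-RFDBs or FDPRGs, the fusion operator is passed in as a hypothetical `concat` callable, and the first upward block is assumed to consume the deepest downward output directly. Feature maps are represented as tuples of channel tags so that the order of fusion is visible.

```python
def u_shaped_fusion(x, down_blocks, up_blocks, concat):
    """Wire N downward blocks to N upward blocks with back-to-front skip fusion."""
    if len(down_blocks) != len(up_blocks):
        raise ValueError("both flows are assumed to contain N blocks")
    skips = []
    for block in down_blocks:              # downward flow
        x = block(x)
        skips.append(x)
    # Upward flow: the deepest downward output is consumed first,
    # then each earlier downward output is fused in reverse order.
    y = up_blocks[0](skips[-1])
    for block, skip in zip(up_blocks[1:], reversed(skips[:-1])):
        y = block(concat(y, skip))
    return y


# Toy run with N = 3: each downward block emits one tagged "channel",
# each upward block is the identity, and fusion is tuple concatenation.
down = [lambda f, k=k: ("d%d" % k,) for k in (1, 2, 3)]
up = [lambda f: f] * 3
fused = u_shaped_fusion(("lr",), down, up, lambda a, b: a + b)
print(fused)  # ('d3', 'd2', 'd1')
```

In this toy run the fused tuple grows by one tag per fusion step, mirroring the statement that the channel count increases as more downward features are merged.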

Image Reconstruction. After the deep feature extraction stage, a simple convolution is used to smooth the learned features. Then, the smoothed features are further fused with the shallow features (extracted by the ACB) by an element-wise addition operation. In addition, the regression value of each pixel is closely related to the global context information in the image SR task. Therefore, we propose a lightweight asymmetric non-local residual block (ANRB, described in Section 3.3) to model the global context information and further refine the learned features. Finally, a learnable convolution and a non-parametric sub-pixel [51] operation are used to reconstruct the HR image. Similar to [19,25,28], L1 loss is used to optimize our network. In particular, we propose a high-frequency loss function (see Section 3.4) to make our network pay more attention to learning high-frequency information.
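The non-parametric sub-pixel step can be illustrated with a small sketch. It rearranges a feature map with $C \cdot r^2$ channels of size $H \times W$ into $C$ channels of size $rH \times rW$, where $r$ is the upscaling factor. The function name `pixel_shuffle` and the channel ordering (the one commonly used by deep learning frameworks) are assumptions for illustration, not details taken from the paper.

```python
def pixel_shuffle(features, r):
    """Rearrange a (C*r*r, H, W) nested list into (C, H*r, W*r)."""
    channels_in = len(features)
    h, w = len(features[0]), len(features[0][0])
    if channels_in % (r * r) != 0:
        raise ValueError("channel count must be divisible by r*r")
    c_out = channels_in // (r * r)
    out = [[[0.0] * (w * r) for _ in range(h * r)] for _ in range(c_out)]
    for c in range(c_out):
        for i in range(r):
            for j in range(r):
                # Channel (c, i, j) fills the sub-pixel offset (i, j) of every r x r cell.
                src = features[c * r * r + i * r + j]
                for y in range(h):
                    for x in range(w):
                        out[c][y * r + i][x * r + j] = src[y][x]
    return out


# Four 1x2 channels become one 2x4 channel for r = 2.
lr_features = [[[0, 1]], [[10, 11]], [[20, 21]], [[30, 31]]]
hr = pixel_shuffle(lr_features, 2)
print(hr[0])  # [[0, 10, 1, 11], [20, 30, 21, 31]]
```

The operation has no learnable parameters; all learning happens in the convolution that produces the $C \cdot r^2$ channels.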

3.2. Feature Distillation Pyramid Residual Group

In the upward flow of the U-shaped structure, we propose a more effective feature distillation pyramid residual group (FDPRG) to extract the deep features. As shown in Figure 3, the FDPRG consists of two main parts: a dense shortcut (DS) part based on three E-RFDBs and a cascaded feature pyramid block (CFPB). After the CFPB, a convolution is used to refine the learned features.

Dense Shortcut. The residual shortcut (RS) connection is an important technique in various vision tasks. Benefitting from the RS, many SR methods have greatly improved the performance of image SR. RFDN also uses the RS between each RFDB. Although the RS can transfer the information from the input layer of the RFDB to the output layer of the RFDB, it lacks flexibility and simply adds the features of two layers. We then considered introducing a dense concatenation [52] to reuse the information of all previous layers. However, this dense connection is extremely GPU memory intensive. Inspired by the dense shortcut (DS) [53] for image classification, we adapt the DS to our SR model by removing its normalization, because the DS combines the efficiency of the RS with the performance of the dense connection. As shown in Figure 3, the DS is used to connect the $M$ E-RFDBs in a learnable manner for better feature extraction. In addition, our experiments show that adding the DS reduces memory consumption and computation while slightly improving performance.
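A minimal sketch of the idea, assuming the dense shortcut is realised as a learnable weighted sum of all earlier features (with normalization removed, as described above). The function name `dense_shortcut_forward`, the use of scalar weights, and the toy blocks are hypothetical; the actual parameterisation in the network may differ.

```python
def dense_shortcut_forward(x, blocks, weights):
    """x: list of floats; blocks: list of callables on such lists.

    weights[l][k] is the (learnable) scalar applied to the k-th earlier
    feature when forming the output of block l.
    """
    history = [x]                          # features of all previous layers
    for l, block in enumerate(blocks):
        out = block(history[-1])
        for k, prev in enumerate(history):
            out = [o + weights[l][k] * p for o, p in zip(out, prev)]
        history.append(out)
    return history[-1]


def double(v):
    """Placeholder block standing in for an E-RFDB."""
    return [2.0 * t for t in v]


x0 = [1.0, 2.0]
# Dense shortcut: the second block also receives half of the group input.
dense = dense_shortcut_forward(x0, [double, double], [[1.0], [0.5, 1.0]])
# Setting that extra weight to zero recovers an ordinary residual shortcut.
residual = dense_shortcut_forward(x0, [double, double], [[1.0], [0.0, 1.0]])
print(dense, residual)  # [9.5, 19.0] [9.0, 18.0]
```

Unlike dense concatenation, the feature width stays constant here, since earlier features are added rather than stacked along the channel axis.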

Cascaded Feature Pyramid Block. For the image SR task, the low-frequency information (e.g., simple texture) of an LR input image does not need to be reconstructed by a complex network, so the low-level feature maps contain more low-frequency information. High-frequency information (e.g., edges or corners) needs to be reconstructed by a deeper network, so the deep feature maps contain more high-frequency information. Hence, features of different scales contribute differently to image SR reconstruction. Most previous methods do not utilize multi-scale information, which limits the improvement of image SR performance. Atrous spatial pyramid pooling (ASPP) [54] is an effective multi-scale feature extraction module, which adopts a parallel branch structure of convolutions with different dilation rates to extract multi-scale features, as shown in Figure 4a. However, the ASPP structure depends strongly on the setting of the dilation rates, and each branch of the ASPP is independent of the others.

Different from the ASPP, we propose a more effective multi-scale cascaded feature pyramid block (CFPB) to learn information at different scales, as shown in Figure 4b. The CFPB is designed by cascading convolution layers of different scales in a parallel manner. Then, the features of the different branches are fused. The CFPB uses the idea of convolution cascading so that the multi-scale features of the next layer are built on top of the receptive field of the previous layer. Even if the dilation rate is small, it can still represent a larger receptive field. Additionally, the multi-scale features in the parallel branches are no longer independent, which makes it easier for our network to learn multi-scale high-frequency information.
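The receptive-field argument can be checked with simple arithmetic. For stride-1 convolutions with kernel size $k$ and dilation $d$, each layer enlarges the receptive field by $(k-1)d$. The sketch below compares independent parallel branches (ASPP style) with cascaded ones; the dilation rates `(1, 2, 3)` and the helper name `receptive_field` are hypothetical and are not the settings of the CFPB.

```python
def receptive_field(dilations, kernel_size=3):
    """Receptive field of a stack of stride-1 dilated convolutions."""
    rf = 1
    for d in dilations:
        rf += (kernel_size - 1) * d
    return rf


rates = (1, 2, 3)  # illustrative dilation rates

# ASPP style: each branch applies a single dilated convolution to the input.
parallel_rf = [receptive_field([d]) for d in rates]

# Cascaded style: each branch builds on the output of the previous one.
cascaded_rf = [receptive_field(rates[:i + 1]) for i in range(len(rates))]

print(parallel_rf)  # [3, 5, 7]
print(cascaded_rf)  # [3, 7, 13]
```

With the same small dilation rates, the last cascaded branch covers a 13×13 region instead of 7×7, which matches the statement that a small dilation rate can still yield a large receptive field.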

3.3. Asymmetric Non-Local Residual Block

The non-local mechanism [45] is an attention model that can effectively capture long-range dependencies by modeling the relationship between a pixel position and all other positions. Image SR is an image-to-image learning task. Most existing works focus only on learning detailed information while ignoring the long-range feature-wise similarities in natural images, which may produce globally incorrect textures. For the image “img092” (see Figure 8), other SR methods have learned the details of the texture (dark lines in the picture), but the direction of these lines is completely wrong at the global scale. The global texture learned by the proposed URNet after adding the non-local module is consistent with the GT image.

However, the classic non-local module is expensive in both computation and memory consumption, so it cannot be directly applied to a lightweight SR network. Inspired by the asymmetric non-local block (ANB) [29] for semantic segmentation, we propose a more lightweight asymmetric non-local residual block (ANRB, shown in Figure 5) for fast and lightweight image SR. Specifically, let $X \in \mathbb{R}^{C \times H \times W}$ represent a feature map, where $C$ and $H \times W$ are the number of channels and the spatial size of $X$. We use three $1 \times 1$ convolutions to compress the multi-channel features $X$ into single-channel features $X_{\phi}$, $X_{\theta}$, and $X_{\gamma}$, respectively. Afterwards, similar to the ANB, we use the pyramid pooling sampling algorithm [55] to sample only $S$ representative feature points from the Key and Value branches. We perform four average pooling operations to obtain four feature maps with sizes of $1 \times 1$, $3 \times 3$, $6 \times 6$, and $8 \times 8$, respectively. Subsequently, we flatten the four maps and concatenate them to obtain a sampled feature map with a length of $S = 1 + 9 + 36 + 64 = 110$. Then, the non-local attention can be calculated as follows:

$$X_{\phi} = f_{\phi}(X), \quad X_{\theta} = f_{\theta}(X), \quad X_{\gamma} = f_{\gamma}(X),$$

$$\theta_{P} = P_{\theta}(X_{\theta}), \quad \gamma_{P} = P_{\gamma}(X_{\gamma}),$$

$$Y = \mathrm{Softmax}\left(X_{\phi}^{T} \otimes \theta_{P}\right) \otimes \gamma_{P}^{T},$$

where $f_{\phi}$, $f_{\theta}$, and $f_{\gamma}$ are $1 \times 1$ convolutions. $P_{\theta}$ and $P_{\gamma}$ represent the pyramid pooling sampling for generating the sampled features $\theta_{P}$ and $\gamma_{P}$. ⊗ is matrix multiplication and $Y$ is a feature map containing contextual information.
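The sampling step can be sketched in a few lines. The sketch assumes the pooling output sizes 1, 3, 6, and 8 used by the ANB, which is consistent with the stated sampled length because $1 + 9 + 36 + 64 = 110$, and it uses the common adaptive-pooling bin rule (floor for the start index, ceiling for the end index). The helper names `adaptive_avg_pool` and `pyramid_sample` are hypothetical.

```python
import math


def adaptive_avg_pool(fmap, n):
    """Average-pool a single-channel H x W map to n x n and return it flattened."""
    h, w = len(fmap), len(fmap[0])
    out = []
    for i in range(n):
        r0, r1 = (i * h) // n, math.ceil((i + 1) * h / n)
        for j in range(n):
            c0, c1 = (j * w) // n, math.ceil((j + 1) * w / n)
            vals = [fmap[r][c] for r in range(r0, r1) for c in range(c0, c1)]
            out.append(sum(vals) / len(vals))
    return out


def pyramid_sample(fmap, sizes=(1, 3, 6, 8)):
    """Concatenate the flattened pooled maps of every pyramid level."""
    sampled = []
    for n in sizes:
        sampled.extend(adaptive_avg_pool(fmap, n))
    return sampled


# Toy 24 x 24 single-channel map.
H = W = 24
fmap = [[r * W + c for c in range(W)] for r in range(H)]
samples = pyramid_sample(fmap)

full_attention = (H * W) * (H * W)          # entries in a classic non-local map
sampled_attention = (H * W) * len(samples)  # entries after sampling
print(len(samples), full_attention, sampled_attention)  # 110 331776 63360
```

The sampled length is independent of the input size, so the saving over the classic non-local block grows with the resolution of the feature map.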

The last step of an attention mechanism generally multiplies the generated attention weight feature map $Y$ element-wise with the original feature map to apply the attention. However, a large number of elements in $Y$ are close to zero because of the Softmax operation, whose outputs lie in $(0, 1)$ and sum to one. If we directly used element-wise multiplication for attention weighting, the values in the weighted feature map would inevitably become too small and the gradients would vanish, which would prevent the network from being trained effectively.

To solve this problem, we use an addition operation to generate the final attention-weighted feature map, which allows the network to converge more easily. Before the addition, a convolution converts the single-channel feature map $Y$ into a $C$-channel feature map for the subsequent element-wise sum. Benefitting from the channel compression and the sampling operation, the ANRB is a lightweight non-local block. The ANRB is used to capture global context information for fast and accurate image SR.
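The complete block can be sketched end to end under several assumptions: channel compression and expansion are modelled as $1 \times 1$ convolutions (per-channel scalar weights without bias), the attention normalization is Softmax, and the `sample` argument is a simple stand-in for pyramid pooling. All names (`anrb_sketch`, `pair_average`, the weight vectors) are hypothetical, and the code illustrates the data flow rather than the authors' implementation.

```python
import math


def softmax(row):
    m = max(row)
    exps = [math.exp(v - m) for v in row]
    total = sum(exps)
    return [e / total for e in exps]


def anrb_sketch(x, w_q, w_k, w_v, w_out, sample):
    """x: C x P nested list, where P = H*W flattened positions."""
    positions = len(x[0])

    def squeeze(weights):
        # 1x1 convolution from C channels to one channel: weighted channel sum.
        return [sum(wc * x[c][p] for c, wc in enumerate(weights))
                for p in range(positions)]

    q, k, v = squeeze(w_q), squeeze(w_k), squeeze(w_v)
    k_s, v_s = sample(k), sample(v)        # only Key and Value are sampled
    y = []
    for q_p in q:
        attn = softmax([q_p * k_j for k_j in k_s])
        y.append(sum(a * v_j for a, v_j in zip(attn, v_s)))
    # Expand Y back to C channels, then add the input (residual shortcut).
    return [[w_out[c] * y[p] + x[c][p] for p in range(positions)]
            for c in range(len(x))]


def pair_average(f):
    """Stand-in sampler: average neighbouring pairs of positions."""
    return [(f[i] + f[i + 1]) / 2 for i in range(0, len(f), 2)]


x = [[1.0, 2.0, 3.0, 4.0],
     [0.0, 1.0, 0.0, 1.0]]
out = anrb_sketch(x, [0.0, 0.0], [1.0, 0.0], [1.0, 1.0], [1.0, 2.0], pair_average)
identity = anrb_sketch(x, [0.0, 0.0], [1.0, 0.0], [1.0, 1.0], [0.0, 0.0], pair_average)
print(out)       # [[4.0, 5.0, 6.0, 7.0], [6.0, 7.0, 6.0, 7.0]]
print(identity)  # equals x: with zero output weights only the shortcut remains
```

The second call shows the benefit of the additive design: even when the attention branch contributes nothing, the input features pass through unchanged instead of being scaled toward zero.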

3.4. Loss Function

In the SR domain, L1 loss (i.e., mean absolute error) and L2 loss (i.e., mean squared error) are the most frequently used loss functions. Similar to [18,19,25,51], we adopt L1 loss as the main reconstruction loss function to measure the differences between the SR images and the ground truth. Specifically, the L1 loss is defined as

$$L_{1} = \frac{1}{N} \sum_{i=1}^{N} \left\| I_{i}^{SR} - I_{i}^{HR} \right\|_{1},$$

where $I_{i}^{SR}$ and $I_{i}^{HR}$ denote the $i$-th SR image generated by URNet and the corresponding $i$-th HR image used as ground truth, and $N$ is the total number of training samples.
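As a small worked example of the reconstruction loss, the sketch below averages the absolute pixel differences over each image pair and then over the batch. Averaging over pixels within an image is an assumption (a common normalization); the function name `l1_loss` and the toy images are illustrative only.

```python
def l1_loss(sr_batch, hr_batch):
    """Mean over N image pairs of the per-image mean absolute pixel difference."""
    total = 0.0
    for sr, hr in zip(sr_batch, hr_batch):
        diffs = [abs(a - b)
                 for row_s, row_h in zip(sr, hr)
                 for a, b in zip(row_s, row_h)]
        total += sum(diffs) / len(diffs)
    return total / len(sr_batch)


# One 2x2 image pair: absolute differences are 0, 2, 0, 4.
sr_images = [[[1.0, 2.0], [3.0, 4.0]]]
hr_images = [[[1.0, 0.0], [3.0, 8.0]]]
print(l1_loss(sr_images, hr_images))  # 1.5
```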

For the image SR task, only using L1 loss or L2 loss will cause the super-resolved images to lack high-frequency details, presenting unsatisfying results with over-smooth textures. As depicted in Figure 6, comparing the natural image and the SR images generated by SR methods (e.g., RCAN [18] and IMDN [19]), we can see the reconstructed image is over-smooth in detailed texture areas. By applying edge detection algorithms to natural images and SR images, the difference is more obvious.

Therefore, we propose a simple but effective high-frequency loss to alleviate this problem. Specifically, we first use the edge detection algorithm to extract the detailed texture maps of the HR and the SR images. Then, we adopt mean absolute error to measure the detailed differences between the SR image and the HR image. This process can be formulated as follows:

$$L_{hf} = \frac{1}{N} \sum_{i=1}^{N} \left\| E(I_{i}^{SR}) - E(I_{i}^{HR}) \right\|_{1},$$

where $E(\cdot)$ denotes the edge detection algorithm applied to the $i$-th SR image $I_{i}^{SR}$ and the $i$-th HR image $I_{i}^{HR}$. In this work, we use Canny to extract detailed information from the SR images and the ground truth, respectively. Therefore, the training objective of our network is $L = \alpha L_{1} + \beta L_{hf}$, where $L_{1}$ is the reconstruction loss and $\alpha$ and $\beta$ are weights used to adjust these two loss functions.
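The combined objective can be illustrated with a short sketch. To keep it self-contained, a Sobel gradient magnitude is swapped in for the Canny detector used in this work, and the weights `alpha` and `beta` passed below are arbitrary placeholders rather than the values used for training. The function names are hypothetical.

```python
def sobel_magnitude(img):
    """Edge map |gx| + |gy| on interior pixels; border pixels are left at 0."""
    h, w = len(img), len(img[0])
    out = [[0.0] * w for _ in range(h)]
    for y in range(1, h - 1):
        for x in range(1, w - 1):
            gx = (img[y - 1][x + 1] + 2 * img[y][x + 1] + img[y + 1][x + 1]
                  - img[y - 1][x - 1] - 2 * img[y][x - 1] - img[y + 1][x - 1])
            gy = (img[y + 1][x - 1] + 2 * img[y + 1][x] + img[y + 1][x + 1]
                  - img[y - 1][x - 1] - 2 * img[y - 1][x] - img[y - 1][x + 1])
            out[y][x] = abs(gx) + abs(gy)
    return out


def mean_abs_diff(a, b):
    diffs = [abs(p - q) for ra, rb in zip(a, b) for p, q in zip(ra, rb)]
    return sum(diffs) / len(diffs)


def total_loss(sr, hr, alpha, beta, edge_fn=sobel_magnitude):
    """Return (weighted total, reconstruction term, high-frequency term)."""
    rec = mean_abs_diff(sr, hr)
    hf = mean_abs_diff(edge_fn(sr), edge_fn(hr))
    return alpha * rec + beta * hf, rec, hf


# A sharp vertical edge (HR) and an over-smoothed version of it (SR).
hr_img = [[0.0, 0.0, 1.0, 1.0] for _ in range(4)]
sr_img = [[0.0, 0.25, 0.75, 1.0] for _ in range(4)]
loss, rec, hf = total_loss(sr_img, hr_img, alpha=1.0, beta=0.25)
print(loss, rec, hf)  # 0.1875 0.125 0.25
```

In this toy case the edge term is twice the pixel-wise term, so the blurred edge is penalized more strongly than the pixel-wise loss alone would suggest.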

5. Conclusions

In this paper, we introduce a novel lightweight U-shaped residual network (URNet) for fast and accurate image SR. Specifically, we design an effective feature distillation pyramid residual group (FDPRG) to extract deep features from an LR image based on the E-RFDB. The FDPRG can effectively reuse the shallow features with dense shortcut connections and capture multi-scale information with a cascaded feature pyramid block. Based on the U-shaped structure, we utilize a step-by-step fusion strategy to fuse the features of different blocks and further refine the learned features. In addition, we introduce a lightweight asymmetric non-local residual block to capture the global context information and further improve the performance of image SR. In particular, to alleviate the problem of smoothing image details caused by pixel-wise loss, we design a simple but effective high-frequency loss to help optimize our model. Extensive experiments indicate that the URNet achieves a better trade-off between image SR performance and model complexity than other state-of-the-art SR methods. In the future, we will apply our method to image SR under blurred or even real-world degradation models. At the same time, we will also consider depthwise separable convolutions or other lightweight convolutions as an alternative to standard convolutions to further reduce the number of parameters and computations.