1. Introduction

With the rapid development of remote sensing imaging technology, hyperspectral image (HSI) has drawn more attention in recent years. HSI can be viewed as a three-dimensional cube constructed by plenty of bands. Each sample of HSI contains reflection information of hundreds of different spectral bands, which makes this kind of image suitable for many practical applications, such as precision agriculture [

1], food analysis [

2], anomaly detection [

3], geological exploration [

4,

5], etc. In the past decade, hyperspectral image processing technology has become increasingly popular due to the development of machine learning. However, there are still some challenges in the field of HSI classification: (1) the training of deep learning model depends on a great quantity of labeled sample data, while the number of labeled samples in hyperspectral data is insufficient; (2) because HSI contains rich spectral spatial information, the problem that the spectral spatial features of HSI are not effectively extracted still exists [

6]. In addition, the phenomenon of different spectral curves of the same substance and different substances of the same spectral curves often occur.

Many of the traditional machine learning-based HSI classification approaches use hand-crafted features to train the classifier [

7]. Obviously, feature extraction and classifier training are separated. Representative methods of hand-craft features include local binary patterns (LBPs) [

8], directional gradient histogram (HOG) [

9], global invariant scalable transform (GIST) [

10], random forest [

11], and so on. Representative classifiers include logistic regression [

12], limit learning machine [

13], and support vector machine (SVM) [

14]. The method of hand-craft features (they generally rely on utilizing engineering skills and domain expertise to design several human-engineering features, such as shape, texture, color, spectral, and spatial details [

7]) can effectively represent various attributes of images. However, the best feature sets of different data are very different. Moreover, manual involvement in designing the features considerably affects the classification process, as it requires a high level of domain expertise to design hand-crafted features [

7]. Due to the above limitations of hand-craft features, many automatic feature extraction methods have emerged. For instance, deep learning is an automatic feature extraction method which has achieved great success in recent years. More and more researchers apply deep learning technology to HSI classification tasks.

HSI classification methods based on deep learning framework can be divided into supervised classification methods and unsupervised/semi-supervised classification methods [

15]. Unsupervised classification methods only rely on the spectral or texture information of different ground objects of HSI for feature extraction, and then use the differences of features to achieve the purpose of classification. There are some classification methods improved by automatic encoders (AE) [

16,

17] for hyperspectral image classification. For example, in [

18], the feature representation is adaptively learned from unlabeled data by learning the feature mapping function based on stacked sparse autoencoder. Zhang et al. proposed an unsupervised HSI classification feature learning method based on recursive autoencoder (RAE) network [

19]. Mou et al. proposed an end-to-end complete convolution deconvolution network based on the so-called encoder–decoder paradigm for unsupervised spectral spatial feature learning [

20]. Moreover, the proposal of generative adversarial network (GAN) further promotes the development of unsupervised classification methods [

21]. For instance, Zhu et al. proposed an HSI classification method based on GAN. The generator provides the false input close to the real input, and the discriminator classifies the real input and false input and obtains high classification accuracy [

22]. Supervised classification is the process of using the samples of the known category to judge the samples of other unknown categories. Mou et al. proposed a recurrent neural network (RNN) model which can effectively analyze hyperspectral samples into sequence data, and then determine the sample category according to network reasoning [

23].

The HSI classification method based on CNN is also a typical supervised classification method. As a powerful neural network, CNN has strong ability to automatically extract features. At present, most CNN-based hyperspectral classification methods focus on joint spectral spatial feature extraction. According to different implementation types, they can be divided into two categories: (1) extract spectral and spatial features, respectively, and fuse them for classification; (2) extract spectral spatial features at the same time for classification. There are many methods to extract spatial spectral features respectively. For example, Zhang et al. proposed a dual-channel CNN (DCCNN) model, which uses one-dimensional CNN and two-dimensional CNN to extract spectral and spatial features, respectively [

24]. In [

25], a dual-stream architecture is introduced, in which one stream encodes spectral features through a stacked noise reduction automatic encoder, and the other stream extracts spatial features through deep CNN. In [

26], a three-layer CNN is constructed to extract spectral spatial features by cascading spectral features and two-scale spatial features from shallow to deep layers. Then, multilayer spatial spectral features are fused to achieve complementary information. Finally, the fused features and classifiers are integrated into a unified network and optimized end-to-end. Yang et al. proposed a deep convolution neural network with double-branch structure to extract the joint spectral spatial features of HSI [

27]. The above methods were able to extract spatial and spectral features but ignored the integrity of HSI. In this case, the method of extracting spatial spectral features at the same time showed its advantages in combining the spectral spatial context and preserving the integrity of HSI information. For example, Chen et al. proposed a three-dimensional CNN (3D-CNN) architecture based on kernel sampling to extract the spectral spatial features of HSI simultaneously [

28]. In [

29], a fast spectral space model with dense connectivity for HSI classification is introduced. Zhong et al. proposed a spectral spatial residual network. The spectral and spatial residual blocks continuously learn the main features from HSI to improve the classification performance [

30]. Wang et al. proposed an end-to-end alternating updating spectral and spatial convolution network with cyclic feedback structure to learn the spectral and spatial features of HSI [

31]. Fang et al. proposed a 3D-CNN model combining the dense connection and spectral attention mechanism and obtained good classification results [

32]. Attention thoughts in deep learning are essentially similar to human selective visual attention mechanism [

33,

34,

35]. The core goal is to select more critical information from a large amount of data. Nowadays, attention mechanism is also widely used in HSI classification tasks. For example, inspired by squeeze-and-excitation (SE) block [

33], in [

36], two bilinear squeeze-and-excitation network, (SENet,) with different compression strategies are used to improve the performance of HSI classification. However, deep learning models usually need a large amount of training samples to achieve optimal performance. In order to solve the problem of limited labeled samples of HSI and avoid overfitting, researchers have carried out a lot of research. Geometric transformation method and pixel transformation method were commonly used in the early stage. Later, some other methods were proposed. For instance, GAN can generate samples similar to real data [

37]. Wu et al. proposed the CRNN model, where first, all training data and their pseudo-labels were used to pretrain the model, and then, the model was fine-tuned with limited labeled data [

38]. In addition to these two models, CNNs have also been used to alleviate the small sample problem of HSI. Li et al. proposed a pixel pair (PPF) method. The trained 1D-CNN classifies the pixel pairs composed of test center pixels and surrounding pixels and determines the final label by the voting strategy [

39]. Later, in [

40], a pixel block pair (PBP) method was proposed to extract PBP features with a depth CNN model. Haut et al. introduced a random erasing method to increase the number of labeled data [

41]. In [

6], Zhang et al. proposed a data balance augmentation method, which can solve the problems of limited labeled data and unbalanced categories.

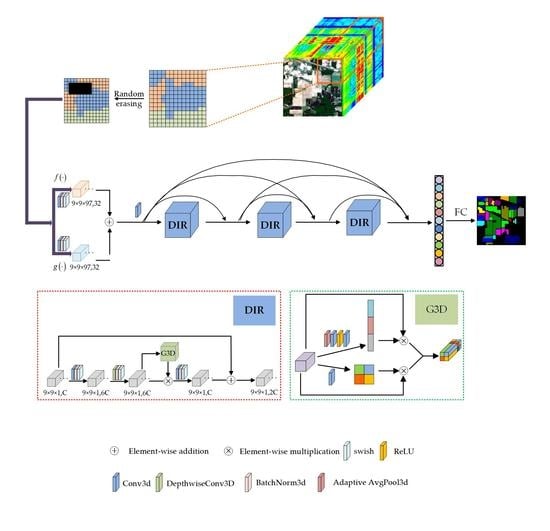

The training of a deep learning model depends on a large number of labeled sample data. Data augmentation can alleviate the problem of limited labeled samples in a hyperspectral image dataset. Random erasing data augmentation has been applied to many scene tasks. Nevertheless, in some articles [

41] that apply it to hyperspectral image classification, the scale of model space is too large, the complexity is large, and the classification accuracy is not high. There is also the problem that spectral spatial features have not been effectively extracted. Because 3D-CNN is more suitable for hyperspectral image classification tasks, it can realize spectral and spatial feature representation at the same time. To address challenges mentioned above, a deep spectral spatial inverted residual network (DSSIRNet) based on data block augmentation is proposed. The network is divided into three stages. In the first stage, the random erasing strategy is designed to enhance the data of the original input 3D cube. The two components of the second stage realize effective joint spectral spatial feature extraction. In the third stage, hyperspectral image classification is realized by using the high-level semantic features learned previously.

The main contributions of this paper are as follows:

In order to make full use of the rich information in HSI, a DIR module is proposed. In this module, the low dimensional representation of the input data is extended to the high dimension, and the depthwise separable convolution is utilized for filtering. Then, the features are projected back to the low dimensional representation by standard convolution. This design is more suitable for high-dimensional feature extraction of HSI.

A global 3D attention module is designed and embedded into the DIR module, which fully considers the global context information of spectral and spatial dimension to further improve the classification performance.

The proposed DSSIRNet is based on 3D-CNN model. With considering the computational complexity, a random erasing strategy based on small spatial blocks is introduced to increase the number of available labeled samples.

The rest of this paper is arranged as follows. The details of the proposed DSSIRNet algorithm are described in

Section 2. In

Section 3, the experimental results and analysis are provided. In

Section 4, some conclusions are drawn.

2. Materials and Methods

The overall framework of the proposed method is shown in

Figure 1. Set

is the input of the model, where

is the 3D HSI cube with height

, width

, and the number of spectral channel

, and

is the label vector of HSI data. Firstly,

is randomly divided into some 3D blocks, which are composed of marked central samples and adjacent samples, and are recorded as a new sample set

, where

,

, and

represent the height, width, and spectral dimension of the new 3D cube, respectively.

and are set to the same value. Then, the training set is randomly sampled from the new sample set according to a certain proportion , and then the validation set is randomly sampled from the rest according to the same proportion. Finally, the remaining proportion is used as the test set . Next, the DSSIRNet is trained with the training set to obtain the initial parameters of the model, and the parameters are continuously updated through the validation set until the optimal parameters are obtained. Finally, the test set is input into the optimal model to obtain the final prediction results .

2.1. The Overall Framework of the Proposed DSSIRNet

Figure 2 shows the structure of the proposed DSSIRNet. The framework is divided into three stages: data augmentation based on random erasing, deep feature extraction with dual-input fusion and DIR module, and classification. The first stage is designed to solve the problem of limited labeled samples of hyperspectral images. Through block random erasing of training samples, the spatial distribution of the dataset can be fully changed, and the number of available training samples can be effectively increased without parameter increase. In order to improve the robustness of the model, it is necessary to solve the problem of insufficient spectral spatial information extraction of hyperspectral images. The second stage of a deep feature extraction network can solve this problem. The second stage mainly includes two parts: (1) dual-input fusion part; (2) DIR dense connection part. In the second part of this stage, three DIR modules are densely connected to enhance the ability of feature representation. This paper also designs a global 3D attention module in the DIR module to achieve more refined and sufficient global context feature extraction. Finally, the spectral spatial features fully extracted in the second stage are input into the third stage to realize classification.

2.2. Block Random Erasing Strategy for Data Augmentation

In the imaging process, hyperspectral images may be occluded by clouds, shadows or other objects due to the influence of the atmosphere, resulting in the loss of information in the occluded area. The most common interference is cloud cover. Therefore, in the first stage, this paper designs a block random erasing strategy. Before model training, first, the image scene is simulated under cloud erasing conditions, and then the space block with added interference is input into the model for training. It realizes the change of spatial information, increases the available samples, and then improves the classification accuracy. Different from [

40], the feature extraction classification model proposed in this paper is three-dimensional. In order to avoid the high complexity of the model, the random erasing under small spatial blocks (i.e., 9 × 9) is studied.

For the training sample set

,

is the spatial area of the original input 3D cube, the erasing probability is set to

, the area of the random initialization erasing area is set to

, the ratio

is set between

and

, and the ratio

of height and width of

is set between

and

. First, a probability

is randomly obtained. If

is satisfied, erasure is implemented; otherwise, it will not be processed. In order to obtain the position of the erasing area, first the initial erasing area

is calculated according to the randomly erasing proportion, and then the length and width of the erasing area is calculated according to the random height to width ratio:

Then, according to the randomly height and width value, the coordinate of the upper left corner of the erasing area can be obtained. According to the height and width obtained by Formulas (1)–(3), the boundary coordinates of the occluded area can be obtained. If the coordinates exceed the boundary of the original space, the above process is repeated. For each iteration of training, random erasing is performed to generate a changing 3D cube, which enables the model to learn more abundant spatial information.

2.3. Dual-Input Fusion Part

Let

be the data processed by random erasing. After spectral processing

and spatial processing

, two groups of feature maps are obtained as the dual input of DIR module. In fact,

and

are the combination functions of three-dimensional convolution, batch normalization (BN), and swish activation functions. The difference between

and

is that the convolution kernel of three-dimensional convolution is different, and the corresponding feature map is

where

and

are the feature maps obtained by spectral processing and spatial processing, respectively,

represents swish activation function operation,

represents three-dimensional convolution operation,

and

are the weight and bias of two convolution, respectively, and

and

represent the trainable parameters of BN operation, respectively. The three-dimensional convolution kernels of spectral processing and spatial processing are

and

, respectively. The number of convolution kernels is 32, the convolution stride is

, and the paddings are

and

, respectively. Therefore, the size and number of the two groups of feature maps are the same. Then, the obtained two groups of feature maps are directly added and fused element by element, which can be represented as

where

represents the element by element addition operation, and

represents the fused feature map.

2.4. DIR Module

The DIR module designed in this paper is inspired by Mobilenet v3 [

42] and Efficientnet [

43]. We expand the 2D inverted residual model to 3D inverted residual model. Through a large number of experiments and parameter adjustment, a 3D general module suitable for hyperspectral image classification is designed.

Figure 3 shows the schematic diagram of the DIR module.

The main idea of deep extraction of spectral spatial features in a DIR module is to expand the low-dimensional representation of input data to high-dimensional representation and filter the feature maps with depthwise separable convolution (DSC). The filtered feature maps are also transmitted to the global 3D attention module for deeper filtering. Following this, the two groups of filtered features are multiplied to enhance feature representation, and then these features are projected back to the low-dimensional representation by standard convolution. Finally, the residual branch is utilized to avoid network degradation. The implementation details of DIR module are described in Algorithm 1.

| Algorithm 1 DIR module. |

| Input: The feature map set obtained after dual-input fusion. |

| 1: Ascend the dimension of input data by Conventional 3D convolution. The number of feature maps after convolution is , and then the feature maps are activated by BN and swish. |

| 2: DSC is performed on the feature maps in two steps: depthwise process and pointwise process. The convolution kernels of the two convolution processes are set to and , respectively. The number of feature maps before and after DSC remains the same. Following this, BN and swish activation are performed on these feature maps and then the filtered feature map set is obtained. |

| 3: Input into the global 3D attention module for depth filtering and obtain the feature map set . |

| 4: Calculate the product of feature maps . |

| 5: Reduce the dimension of the multiplied feature map through conventional three-dimensional convolution, and then perform the BN and swish to obtain the reduced feature map set . |

| 6: Add the input feature map and the reduced dimension feature map, and then activate them with swish to obtain the output feature map set . |

| Output: Feature map set with the same size and number as the input feature map. |

2.5. Global 3D Attention Module

Inspired by csSE [

44], this paper designs the global 3D attention module, as shown in

Figure 4. The global 3D attention module fully considers the global information and effectively extracts the spectral and spatial context features, so as to enhance the ability of feature representation.

The 3D spectral part: firstly, the input feature map

as a combination of spectral channels

, is processed by adaptive average pooling (AAP), and the tensor of element

is obtained:

Then, two linear layers with size

and

are trained to find the correlation between features and classification, and the

-dimensional tensor is obtained. Next, the sigmoid function is used for normalization to obtain the spectral attention map. Finally, the obtained spectral attention map is multiplied by the input feature map. The process can be represented as

where

represents the combination function of two linear layers, ReLU operation and sigmoid activation operation, and

is the spectral attention map.

The 3D spatial part: firstly, the image features are extracted through C convolution layers, then the spatial attention map is activated by sigmoid function, and finally the obtained spatial attention map is multiplied by the input feature map. The process can be represented as

where

is the combination function of one-layer three-dimensional convolution and sigmoid activation operation, and

is the spatial attention map.

Finally, the feature maps obtained from the two parts are compared element by element, and the maximum value is returned to generate the final global 3D attention map.

3. Experimental Setting and Results

3.1. Experimental Setting

- (1)

Datasets

In order to verify the performance of DSSIRNet, four classical datasets are used in the experiment.

The Indian Pines (IN) dataset was obtained from the airborne visible infrared imaging spectrometer (AVIRIS) sensor in northwestern Indiana. The data size is 145 × 145, a total of 220 continuous imaging bands for ground objects. After excluding 20 bands of 104–108, 150–163, and 200 that cannot be reflected by water, the remaining 200 effective bands are taken as the research object. The spatial resolution is 20 m per pixel and the spectral coverage is 0.4~2.5 μm. There are 16 kinds of crops.

The Pavia University (UP) dataset was collected by ROSIS sensor. It continuously images 115 bands in the wavelength range of 0.43~0.86 μm, with a spatial resolution of 1.3 m. After eliminating the noise influence band, the size of UP is 610 × 340 × 103, including nine types of land cover.

The Salinas Valley (SV) dataset was captured by AVIRIS sensors in Salinas Valley, California. The spatial resolution of the data is 3.7 m, and the size is 512 × 217. The original data is 224 bands. After removing 20 bands of 108–112, 154–167, and 224 with serious water vapor absorption, 204 effective bands are retained. The dataset contains 16 crop categories.

The Botswana (BS) dataset was gathered from the NASA EO-1 satellite over Okavango Delta, Botswana, with a spatial resolution of 30 m and spectral coverage of 0.4~2.5 μm. After excluding the uncalibrated bands covering the water absorption characteristics and noise bands, 145 bands of 10–55, 82–97, 102–119, 134–164, and 187–220 are left for research. The data size is 1476 × 256. The ground truth map can be divided into 14 categories.

In the follow-up experiment, the IN, UP, SV, and BS datasets are divided into training set, validation set, and test set, respectively. The proportion of training, validation, and test randomly selected from each category is the same. The training proportion is equal to the ratio of the number of training samples obtained by random sampling to the total number of samples. The principle of validation proportion and test proportion is similar. Here, in order to avoid missing training samples of some categories in the IN dataset, we randomly select 5% for training, 5% for validation, and the remaining 90% for testing. The proportion of training set, validation set, and test set randomly selected from UP dataset, SV dataset, and BS dataset is the same, which are 3%, 3%, and 94% respectively. The detailed division information of the four datasets is listed in

Table 1 and

Table 2 respectively.

- (2)

Experimental Setting and Evaluation Index

In the experiment, the batch size of each dataset was set to 16 and the input spatial size was 9 × 9. In this paper, Adam optimizer was adopted. The initial learning rate was set to 0.0003, and the patience value was set to 15 with cosine attenuation. In addition, the maximum training epoch was set to 200. The experimental hardware platform is a server with Intel (R) Core (TM) i9-9900k CPU, NVIDIA GeForce RTX 2080 Ti GPU and 32 G memory. The experimental software platform is based on Windows 10 Visual Studio Code operating system, and adopts CUDA 10.0, Pytorch 1.2.0, and Python 3.7.4. The classification results of all the experiments were the average classification accuracy ± standard deviation through experiments more than 20 times. In order to provide a quantitative evaluation, this paper uses overall accuracy (OA), average accuracy (AA), and kappa coefficient (kappa) as the measures of classification performance. OA represents the ratio of the number of correctly classified samples to the total number of samples. AA indicates the classification accuracy of each category. Kappa coefficient measures the consistency between the results and the real ground map, which is an index to measure the accuracy of classification. Its calculation is based on the confusion matrix. The lower the Kappa value, the more imbalanced the confusion matrix, and the worse the classification effect.

3.2. Classification Results Compared with Other Methods

This paper compares the proposed DSSIRNet with some state-of-the-art classification methods, including SVM_RBF [

14], DRCNN [

45], ROhsi [

40], SSAN [

46], SSRN [

29], and A

2S

2K-ResNet [

47]. SVM_RBF is a pixel based classification method, DRCNN and ROhsi are 2D-CNN based methods, and SSRN is a method based on RNN and 2D-CNN. The remaining two methods and the proposed DSSIRNet are 3D-CNN based methods.

Table 3,

Table 4,

Table 5 and

Table 6 list the average classification accuracy of the seven methods on the four datasets. The 2–17 lines record the classification accuracy of specific categories, and the last three lines record OA, AA, and kappa of all categories. In addition, for these experimental results, the best results are highlighted in bold.

As can be seen from

Table 3, the classification results of the proposed DSSIRNet method on the IN dataset are obviously superior to those of other state-of-the-art methods, and the best OA, AA, and kappa values are achieved. Because the frameworks based on deep learning (including DRCNN, ROhsi, SSAN, SSRN, A

2S

2K-ResNet, and DSSIRNet) have excellent nonlinear representation and automatic hierarchical feature extraction capabilities, their classification performances are better than that of SVM_RBF. For the model based on 2D-CNN, the model structures of ROhsi and SSAN are too simple, and the extraction of spectral spatial feature is not sufficient. Therefore, the OAs of the above two methods are 9.63% and 9.08% lower than those of DRCNN, respectively. For the 3D-CNN model, the learning of spectral spatial features of SSRN is separated, and the learned advanced features are not fused. Thus, the OAs of SSRN are lower than that of A

2S

2K-ResNet and the proposed DSSIRNet method. The proposed DSSIRNet method firstly performed block data augmentation on the input 3D cube to increase the available samples, and then the designed DIR module fully extracted the spectral spatial features. The global 3D attention module also effectively realized the selection and extraction of global context information. The final dense connection operation effectively integrated the joint spectral spatial features learned by the DIR module. Therefore, the OA value of the proposed method on the IN dataset is 0.8% higher than that of A

2S

2K-ResNet. In particular, the proposed DSSIRNet method also provides a 100% prediction rate for the wheat category.

For the UP dataset and SV dataset, as shown in

Table 4 and

Table 5, the classification results of all methods exceed 90%. The DRCNN uses multiple input spatial windows, so the OA values are higher than that of SVM_RBF, ROhsi, and SSAN. A

2S

2K-ResNet can adaptively select 3D convolution kernel to jointly extract spectral spatial features, so its OA values are higher than that of SSRN. Compared with these methods, the OA values obtained by DSSIRNet on the UP dataset are 7.82%, 3.52%, 4.74%, 4.96%, 1.69%, and 1.09% higher than that of SVM-RBF, DRCNN, ROhsi, SSAN, SSRN, and A

2S

2K-ResNet, respectively. The OA values obtained on the SV dataset are 8.61%, 2.72%, 5.07%, 4.71%, 2.55%, and 0.99% higher than that of SVM-RBF, DRCNN, ROhsi, SSAN, SSRN, and A

2S

2K-ResNet, respectively. In particular, the proposed DSSIRNet method achieved 100% prediction rate in the three categories of grassland, painted metal plate, and bare soil of UP dataset; on the SV dataset, seven categories achieved 100% prediction rate.

Table 6 shows the classification results of different methods on the BS dataset. Compared with the other three datasets, the BS dataset has the highest spatial resolution, so the classification model is very important for the effective extraction of spatial context information. Obviously, the classification performances based on 3D-CNN (SSRN, A

2S

2K-ResNet, and DSSIRNet) are higher than other methods, because 3D-CNN can extract spectral and spatial features at the same time. A

2S

2K-ResNet extracts joint spectral spatial features directly, which may lose spatial context information, so its OA values are lower than that of SSRN. The proposed DSSIRNet method utilized the DIR module to realize the joint extraction of spectral spatial features, and combined the global 3D attention module to focus on the spectral and spatial context features that contribute greatly to the classification, so it achieved the best classification performance.

Figure 5,

Figure 6,

Figure 7 and

Figure 8 show the visual classification results obtained by seven methods on four datasets. Taking

Figure 5 as an example, the classification maps obtained by SVM_RBF, DRCNN, ROhsi, and SSAN have some noise, especially in the corn-notill, grass-pasture, oats, and soybean-mintill classes. The 3DCNN-based methods (including SSRN, A2S2KResNet and DSSIRNet) extract the spectral spatial features more effectively. Compared with other methods, DSSIRNet greatly improves the regional consistency and make some categories more separable, such as grass-trees, hay-windrowed, and wheat. As can be seen, the probability of misclassification among the categories of SVM_RBF, DRCNN, ROhsi, and SSAN is large, and the misclassification rate among other methods is small. In particular, the proposed DSSIRNet method has the smallest misclassification rate and a clear category boundary, which is closest to the ground real map, which shows the effectiveness of the proposed DSSIRNet method.