An Improved Fmask Method for Cloud Detection in GF-6 WFV Based on Spectral-Contextual Information

Abstract

1. Introduction

2. Materials and Methods

2.1. Fmask Version 3.2 Cloud Detection

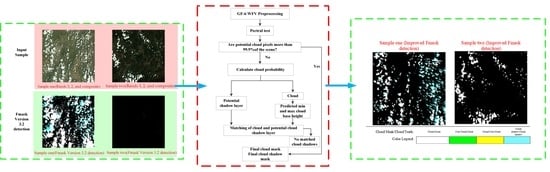

2.2. An Improved Fmask Algorithm for GF-6 WFV Cloud Detection

2.2.1. Data Introduction

2.2.2. Identification of PCPs

1. Basic Test
2. HOT Test
3. Rock and Bare Soil Test
4. Building Test
5. Stratus Test
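The first two PCP tests can be sketched as follows. This sketch assumes top-of-atmosphere reflectance inputs and the band assignment implied by the GF-6 WFV band table (Band1, 0.45-0.52 µm, as blue; Band3, 0.63-0.69 µm, as red; Band4, 0.77-0.89 µm, as NIR). It uses the Fmask 3.2 thresholds, which are not necessarily the ones tuned in this study. The Fmask 3.2 basic test also includes NDSI, SWIR2 and brightness-temperature conditions that GF-6 WFV cannot supply, so only its NDVI < 0.8 condition is kept. The HOT test is blue − 0.5 × red − 0.08 > 0. The rock and bare soil, building and stratus tests are specific to this study and are not reproduced here. All function names are hypothetical.

```python
import numpy as np

# Hypothetical band mapping taken from the band table: Band1 = blue, Band3 = red, Band4 = NIR.


def ndvi(red, nir):
    """NDVI = (NIR - red) / (NIR + red), set to 0 where the denominator is 0."""
    denom = nir + red
    safe = np.where(denom != 0, denom, 1.0)
    return np.where(denom != 0, (nir - red) / safe, 0.0)


def basic_test(red, nir, ndvi_max=0.8):
    """NDVI part of the Fmask 3.2 basic test; its NDSI, SWIR2 and BT parts need bands GF-6 WFV lacks."""
    return ndvi(red, nir) < ndvi_max


def hot_test(blue, red, offset=0.08):
    """Fmask 3.2 haze-optimized transformation (HOT) test: blue - 0.5 * red - 0.08 > 0."""
    return blue - 0.5 * red - offset > 0


def potential_cloud_pixels(blue, red, nir):
    """Hypothetical PCP mask: pixels that pass both the basic test and the HOT test."""
    return basic_test(red, nir) & hot_test(blue, red)


# Toy TOA reflectances: a bright, spectrally flat pixel and a vegetated pixel.
blue = np.array([0.40, 0.05])
red = np.array([0.38, 0.04])
nir = np.array([0.42, 0.35])
print(potential_cloud_pixels(blue, red, nir))  # [ True False]
```

The vegetated pixel narrowly passes the NDVI condition (NDVI ≈ 0.79) but fails the HOT test, which is why the tests are combined rather than used alone.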

2.2.3. Cloud Pixel Probability Calculation

Because clouds in remote sensing images are complex, cloud recognition is not an all-or-nothing decision. The distribution of cloud pixels can therefore be determined more reliably by calculating a cloud probability for each PCP. Water pixels and land pixels behave very differently, so their cloud probabilities are calculated following the Fmask 3.2 and Fmask 4.0 algorithms, respectively.

1. Cloud probability for water

   - Brightness probability for water:

2. Cloud probability for land

   1) LHOT:

   2) The variability probability for land:
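The probability components listed above can be sketched as follows. This is a hedged illustration and does not reproduce the exact formulas of this study. Two pieces come from Fmask 3.2:

- The water brightness probability, min(x, 0.11)/0.11. Fmask 3.2 applies it to SWIR1, which GF-6 WFV does not have, so the choice of input band is an assumption left to the caller.
- The variability probability, 1 − max(|NDVI|, |NDSI|, whiteness). It is reduced here to 1 − max(|NDVI|, whiteness) because NDSI needs SWIR1.

The LHOT term is modeled as a linear ramp on HOT = blue − 0.5 × red, with hypothetical bounds. The land cloud probability is assumed to be the product of the two land terms, mirroring the temperature × variability product in Fmask 3.2. Fmask 3.2's saturation handling of NDVI is omitted.

```python
import numpy as np


def water_brightness_probability(band, cap=0.11):
    """Fmask 3.2 form min(band, 0.11) / 0.11; which GF-6 band replaces SWIR1 is an assumption.
    Negative inputs are clipped to 0 as an added guard."""
    return np.clip(band, 0.0, cap) / cap


def whiteness(blue, green, red):
    """Fmask 3.2 whiteness: sum of |band - visible mean| over blue, green, red, divided by that mean."""
    mean_vis = (blue + green + red) / 3.0
    safe = np.where(mean_vis > 0, mean_vis, 1.0)
    dev = np.abs(blue - mean_vis) + np.abs(green - mean_vis) + np.abs(red - mean_vis)
    return np.where(mean_vis > 0, dev / safe, 1.0)  # non-positive mean treated as "not white"


def variability_probability(blue, green, red, nir):
    """1 - max(|NDVI|, whiteness); Fmask 3.2's NDSI term is dropped (needs SWIR1). Clipped to [0, 1]."""
    denom = nir + red
    ndvi = np.where(denom != 0, (nir - red) / np.where(denom != 0, denom, 1.0), 0.0)
    return np.clip(1.0 - np.maximum(np.abs(ndvi), whiteness(blue, green, red)), 0.0, 1.0)


def lhot_probability(blue, red, low=0.0, high=0.1):
    """Linear ramp on HOT = blue - 0.5 * red; `low` and `high` are hypothetical placeholders."""
    hot = blue - 0.5 * red
    return np.clip((hot - low) / (high - low), 0.0, 1.0)


def land_cloud_probability(blue, green, red, nir):
    """Assumed combination: LHOT probability multiplied by the variability probability."""
    return lhot_probability(blue, red) * variability_probability(blue, green, red, nir)
```

A bright, spectrally flat pixel (all bands 0.5) scores 1.0 over land. A dark vegetated pixel scores near 0 because its whiteness and NDVI both push the variability probability down.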

3. Results and Discussion

3.1. Experimental Results

3.2. Qualitative Analysis

3.2.1. Bright Building

3.2.2. Water Surface

3.2.3. Cultivated Land, Woodland, Bare Soil

3.2.4. Others

3.3. Quantitative Analysis and Evaluation

4. Conclusions

- Snow and ice regions were not detected in this study because the GF-6 WFV data used contain no snow or ice regions;

- Some bias in the accuracy evaluation is inevitable. The reference cloud masks were obtained by manual vectorization based on visual interpretation, which is partly subjective;

- An effective band for detecting thin clouds was not explored because GF-6 WFV data cover a narrow wavelength range. In particular, detection is poor when an image contains many isolated thin clouds.

- If snow and ice areas appear in subsequent GF-6 WFV data, clouds and snow can still be roughly distinguished, even though the data contain no SWIR bands suitable for snow and ice detection. The distinction relies on the fact that clouds and their shadows occur in pairs, whereas snow occurs without an accompanying shadow;

- For isolated thin clouds, detection could be attempted by combining the improved Fmask algorithm with spatial texture features.
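The cloud-shadow pairing rule described in the list above (clouds come with shadows, snow does not) could be sketched as follows. The sketch projects each bright object away from the sun over a range of candidate cloud heights, which is a simplified, Fmask-like idea and not a method from this study. Everything in it is assumed:

- Candidate bright and dark masks are already available.
- The imagery is north-up, and the sun azimuth is measured clockwise from north.
- Candidate heights run from 200 m to 12 km in 200 m steps.
- The pixel size defaults to 16 m, the nominal GF-6 WFV resolution.
- An object counts as cloud when its projection overlaps dark pixels over at least 30% of its area.

All function names are hypothetical.

```python
import math
import numpy as np


def shift_mask(mask, drow, dcol):
    """Shift a boolean mask by whole pixels; uncovered cells become False (no wrap-around)."""
    out = np.zeros_like(mask)
    rows, cols = mask.shape
    if abs(drow) >= rows or abs(dcol) >= cols:
        return out  # shifted entirely outside the image
    out[max(0, drow):rows + min(0, drow), max(0, dcol):cols + min(0, dcol)] = \
        mask[max(0, -drow):rows - max(0, drow), max(0, -dcol):cols - max(0, dcol)]
    return out


def label_components(mask):
    """4-connected component labelling (scipy.ndimage.label does the same; kept dependency-free here)."""
    labels = np.zeros(mask.shape, dtype=np.int32)
    rows, cols = mask.shape
    n = 0
    for r0, c0 in zip(*np.nonzero(mask)):
        if labels[r0, c0]:
            continue
        n += 1
        labels[r0, c0] = n
        stack = [(r0, c0)]
        while stack:
            r, c = stack.pop()
            for rr, cc in ((r - 1, c), (r + 1, c), (r, c - 1), (r, c + 1)):
                if 0 <= rr < rows and 0 <= cc < cols and mask[rr, cc] and not labels[rr, cc]:
                    labels[rr, cc] = n
                    stack.append((rr, cc))
    return labels, n


def has_paired_shadow(obj, dark, sun_azimuth_deg, sun_zenith_deg,
                      heights_m=range(200, 12001, 200), pixel_size_m=16.0, min_overlap=0.3):
    """True if the object, projected away from the sun for some candidate cloud height,
    covers dark pixels over at least `min_overlap` of its area."""
    area = int(obj.sum())
    if area == 0:
        return False
    az = math.radians(sun_azimuth_deg)
    tan_zen = math.tan(math.radians(sun_zenith_deg))
    for h in heights_m:
        d = h * tan_zen / pixel_size_m          # horizontal shadow offset in pixels
        drow = int(round(d * math.cos(az)))     # rows increase southward
        dcol = int(round(-d * math.sin(az)))    # columns increase eastward
        if (shift_mask(obj, drow, dcol) & dark).sum() / area >= min_overlap:
            return True
    return False


def label_cloud_or_snow(bright, dark, sun_azimuth_deg, sun_zenith_deg, **kwargs):
    """Per bright object: 1 = cloud (paired shadow found), 2 = snow (no shadow); 0 elsewhere."""
    labels, n = label_components(bright)
    out = np.zeros(bright.shape, dtype=np.uint8)
    for i in range(1, n + 1):
        obj = labels == i
        paired = has_paired_shadow(obj, dark, sun_azimuth_deg, sun_zenith_deg, **kwargs)
        out[obj] = 1 if paired else 2
    return out
```

Matching whole objects rather than single pixels keeps the decision tolerant of a noisy dark mask.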

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest


| Band | Wavelength/µm | FWHM/µm | Gain/(W·m⁻²·sr⁻¹·µm⁻¹) | Offset/(W·m⁻²·sr⁻¹·µm⁻¹) |
|---|---|---|---|---|
| Band1 | 0.45-0.52 | 0.07 | 0.0667 | 0.0 |
| Band2 | 0.52-0.59 | 0.07 | 0.0517 | 0.0 |
| Band3 | 0.63-0.69 | 0.06 | 0.0485 | 0.0 |
| Band4 | 0.77-0.89 | 0.12 | 0.0298 | 0.0 |
| Band5 | 0.69-0.73 | 0.04 | 0.0530 | 0.0 |
| Band6 | 0.73-0.77 | 0.04 | 0.0445 | 0.0 |
| Band7 | 0.40-0.45 | 0.05 | 0.0814 | 0.0 |
| Band8 | 0.59-0.63 | 0.04 | 0.0559 | 0.0 |
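The gains and offsets in the band table support a linear radiometric calibration, L = Gain × DN + Offset. This can be followed by the standard conversion to top-of-atmosphere reflectance, ρ = π·L·d² / (ESUN·cos θs). The sketch below assumes that calibration form. The table does not give the per-band mean exoatmospheric solar irradiance ESUN, so `esun` is a caller-supplied placeholder. The Earth-Sun distance d uses a common approximation in astronomical units. Function names are hypothetical.

```python
import math

# Gains from the band table, in W/(m^2·sr·µm) per DN; all listed offsets are 0.0.
GF6_WFV_GAIN = {1: 0.0667, 2: 0.0517, 3: 0.0485, 4: 0.0298,
                5: 0.0530, 6: 0.0445, 7: 0.0814, 8: 0.0559}
GF6_WFV_OFFSET = {band: 0.0 for band in GF6_WFV_GAIN}


def dn_to_radiance(dn, band):
    """Assumed linear calibration: L = gain * DN + offset (works on scalars or NumPy arrays)."""
    return GF6_WFV_GAIN[band] * dn + GF6_WFV_OFFSET[band]


def earth_sun_distance_au(day_of_year):
    """Common approximation of the Earth-Sun distance, in astronomical units."""
    return 1.0 - 0.01672 * math.cos(math.radians(0.9856 * (day_of_year - 4)))


def toa_reflectance(radiance, esun, sun_zenith_deg, day_of_year):
    """rho = pi * L * d^2 / (ESUN * cos(theta_s)); `esun` is a hypothetical, caller-supplied value."""
    d = earth_sun_distance_au(day_of_year)
    return math.pi * radiance * d ** 2 / (esun * math.cos(math.radians(sun_zenith_deg)))
```

For example, `dn_to_radiance(1000, 4)` gives about 29.8 W·m⁻²·sr⁻¹·µm⁻¹ for the NIR band (Band4).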

| Surface type | Detection method | TPR/% | PPV/% | TNR/% | F1 Score/% |
|---|---|---|---|---|---|
| Bright Building | OTSU | 91.37 | 79.33 | 87.43 | 84.93 |
| | MMOTSU | 70.90 | 87.00 | 78.70 | 78.13 |
| | SVM | 74.80 | 100.00 | 100.00 | 85.58 |
| | K_MEANS | 78.17 | 91.00 | 67.97 | 84.10 |
| | FMASK(Improved) | 96.27 | 99.67 | 100.00 | 97.94 |
| Woodland | OTSU | 83.40 | 100.00 | 100.00 | 90.95 |
| | MMOTSU | 66.33 | 97.00 | 95.83 | 77.36 |
| | SVM | 88.67 | 99.00 | 98.97 | 93.55 |
| | K_MEANS | 81.57 | 99.00 | 98.87 | 89.44 |
| | FMASK(Improved) | 96.60 | 94.37 | 94.53 | 95.47 |
| Cultivated land | OTSU | 81.37 | 100.00 | 100.00 | 89.73 |
| | MMOTSU | 67.70 | 94.00 | 90.60 | 78.71 |
| | SVM | 74.87 | 100.00 | 100.00 | 85.63 |
| | K_MEANS | 86.67 | 99.67 | 99.60 | 92.72 |
| | FMASK(Improved) | 93.83 | 99.67 | 99.67 | 99.66 |
| Bare Soil | OTSU | 81.73 | 100.00 | 100.00 | 89.95 |
| | MMOTSU | 69.77 | 81.33 | 71.17 | 75.11 |
| | SVM | 73.40 | 100.00 | 100.00 | 84.66 |
| | K_MEANS | 73.73 | 99.00 | 98.90 | 84.52 |
| | FMASK(Improved) | 92.07 | 99.67 | 99.63 | 95.72 |
| Water | OTSU | 73.10 | 98.33 | 97.73 | 83.86 |
| | MMOTSU | 54.50 | 98.33 | 96.60 | 70.13 |
| | SVM | 76.10 | 98.33 | 97.73 | 85.80 |
| | K_MEANS | 70.57 | 98.33 | 97.27 | 82.17 |
| | FMASK(Improved) | 98.80 | 81.33 | 84.17 | 89.22 |
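The table reports TPR, PPV, TNR and F1 score. Under the standard confusion-matrix definitions, these are:

- TPR = TP/(TP+FN)
- PPV = TP/(TP+FP)
- TNR = TN/(TN+FP)
- F1 = 2·TPR·PPV/(TPR+PPV)

They can be computed from a predicted cloud mask and a manually vectorized reference mask as sketched below. The function names are hypothetical. How the scores were aggregated across test scenes in this study is not stated here, so the sketch scores a single mask pair.

```python
import numpy as np


def f1_from_rates(tpr, ppv):
    """F1 score as the harmonic mean of TPR (recall) and PPV (precision), both in %."""
    return 2.0 * tpr * ppv / (tpr + ppv) if tpr + ppv else 0.0


def cloud_mask_scores(predicted, reference):
    """TPR, PPV, TNR and F1 (in %) for one predicted cloud mask against a reference mask (cloud = True)."""
    pred = np.asarray(predicted, dtype=bool)
    ref = np.asarray(reference, dtype=bool)
    tp = int(np.sum(pred & ref))    # cloud detected as cloud
    fp = int(np.sum(pred & ~ref))   # clear pixel detected as cloud
    fn = int(np.sum(~pred & ref))   # cloud pixel missed
    tn = int(np.sum(~pred & ~ref))  # clear pixel detected as clear
    tpr = 100.0 * tp / (tp + fn) if tp + fn else 0.0
    ppv = 100.0 * tp / (tp + fp) if tp + fp else 0.0
    tnr = 100.0 * tn / (tn + fp) if tn + fp else 0.0
    return {"TPR": tpr, "PPV": ppv, "TNR": tnr, "F1": f1_from_rates(tpr, ppv)}
```

For example, `f1_from_rates(91.37, 79.33)` returns about 84.93, which matches the F1 score listed for OTSU on bright buildings.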

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Yang, X.; Sun, L.; Tang, X.; Ai, B.; Xu, H.; Wen, Z. An Improved Fmask Method for Cloud Detection in GF-6 WFV Based on Spectral-Contextual Information. Remote Sens. 2021, 13, 4936. https://doi.org/10.3390/rs13234936


Yang, Xiaomeng, Lin Sun, Xinming Tang, Bo Ai, Hanwen Xu, and Zhen Wen. 2021. "An Improved Fmask Method for Cloud Detection in GF-6 WFV Based on Spectral-Contextual Information." Remote Sensing 13, no. 23: 4936. https://doi.org/10.3390/rs13234936