1. Introduction

Hyperspectral images (HSIs) are widely used in various fields [1,2,3,4] because of characteristics such as high spectral resolution, the unity of spectral and spatial images, and rapid non-destructive testing. HSI classification is one of the important tasks of HSI applications. Early studies used only spectral features for classification. However, spectral information is easily affected by factors such as illumination, noise, and sensors, so the phenomenon of “the same object with different spectra and different objects with the same spectrum” often appears. This increases the difficulty of object recognition and seriously reduces classification accuracy. Researchers therefore began to combine spectral and spatial features to improve classification accuracy.

The spectral feature extraction of HSIs can be realized by unsupervised [5,6], supervised [7,8], and semi-supervised methods [7,9,10]. Representative unsupervised methods include principal component analysis (PCA) [11], independent component analysis (ICA) [12], and locality preserving projections (LPP) [13]. Some well-known unsupervised feature extraction methods are based on PCA and ICA. Several supervised feature extraction techniques for HSIs [14,15] are built on the well-known linear discriminant analysis (LDA). Semi-supervised methods of spectral feature extraction often combine supervised and unsupervised methods to classify HSIs using limited labeled samples together with unlabeled samples. For example, Cai et al. [16] proposed semi-supervised discriminant analysis (SDA), which adds a graph Laplacian-based regularization constraint to LDA to capture the local manifold structure of unlabeled samples and to avoid overfitting when labeled samples are scarce. Sugiyama et al. [17] introduced semi-supervised local Fisher discriminant analysis (SELF), which combines a supervised method (local Fisher discriminant analysis [8]) with PCA. These feature extraction methods (PCA, ICA, and LDA) cannot describe the complex structure of HSI spectral features. LPP, which is essentially a linear approximation of Laplacian eigenmaps, can describe the nonlinear manifold structure of data and is widely used for spectral feature extraction from HSIs [18,19]. He et al. [20] applied multiscale superpixel-wise LPP to HSI classification. Deng et al. [21] proposed the tensor locality preserving projection (TLPP) algorithm to reduce the dimensionality of HSIs. However, in LPP it is difficult to choose the number of nearest neighbors used to construct the adjacency graph [22]. Moreover, all of the above spectral feature extraction methods rely on dimensionality reduction, which loses some spectral information. The Gaussian filter [23], in contrast, can smooth the spectral information without reducing the number of bands, thereby removing noise from HSI data. Because it removes noise and is a linear operation, the Gaussian filter is widely used in HSI classification [24,25]. Tu et al. [26] used a Gaussian pyramid to capture features at different scales by stepwise filtering and down-sampling. Shao et al. [27] used the Gaussian filter to fit the trigger and echo signal waveforms for coal/rock classification, where the spectra of four types of coal/rock specimens were captured by a 91-channel hyperspectral light detection and ranging (LiDAR) system (HSL).
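The band-wise smoothing described above can be sketched in NumPy as follows. This is a minimal illustration, not the authors' MATLAB code: it assumes the HSI cube is stored as a (rows, cols, bands) array, a square window of `size` pixels and a standard deviation `sigma` (hypothetical parameter names), and edge replication at the image borders. The same 2-D kernel is applied to every band, so the number of bands is unchanged.

```python
import numpy as np


def gaussian_kernel(size, sigma):
    """Normalized 2-D Gaussian kernel of shape (size, size)."""
    ax = np.arange(size) - (size - 1) / 2.0  # symmetric coordinates; also valid for even sizes
    g = np.exp(-(ax ** 2) / (2.0 * sigma ** 2))
    k = np.outer(g, g)
    return k / k.sum()


def gaussian_smooth_bands(cube, size, sigma):
    """Smooth every band of an HSI cube (rows, cols, bands) with the same Gaussian kernel.

    Borders are handled by replicating edge pixels (an assumption for this sketch).
    """
    k = gaussian_kernel(size, sigma)
    before, after = (size - 1) // 2, size // 2
    padded = np.pad(cube, ((before, after), (before, after), (0, 0)), mode="edge")
    rows, cols, _ = cube.shape
    out = np.zeros(cube.shape, dtype=float)
    # Weighted sum of shifted copies: one shift per kernel tap, applied to all bands at once.
    for i in range(size):
        for j in range(size):
            out += k[i, j] * padded[i:i + rows, j:j + cols, :]
    return out
```

The window size and σ in this sketch correspond to the two Gaussian parameters tuned in Section 3.2.1; the output keeps the full spectral dimension, which is the property contrasted above with dimensionality-reduction methods.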

In terms of spatial feature extraction, a Markov model was initially adopted to capture spatial features [28]. However, it has two disadvantages: intractable computation and too few samples to describe the desired object. The morphological profile (MP) model [29] was then put forward. Although MP has a strong ability to extract spatial features, it lacks flexibility in the structuring element (SE) shape, cannot characterize the grey-level features of regions, and has high computational complexity [30]. Benediktsson et al. [31] proposed the extended morphological profile (EMP) to classify high-spatial-resolution HSIs of urban areas. To solve the problems of MP, Mura et al. [32] proposed the morphological attribute profile (MAP) as a generalization of MP. Extended morphological profiles with partial reconstruction (EMPP) [33] were introduced to classify high-resolution HSIs of urban areas. Subsequently, extended morphological attribute profiles (EMAP) [34] were adopted to reduce the redundancy of MAP. The framework of morphological attribute profiles with partial reconstruction [35] achieved better performance in the classification of high-resolution HSIs. Geiss et al. [36] proposed object-based MPs, which greatly improved classification accuracy compared with standard MPs. Samat et al. [37] used extra-trees and the maximally stable extremal region-guided morphological profile (MSER_MP) to achieve good classification results. A broad learning system (BLS) classification architecture based on LPP and the local binary pattern (LBP), named LPP_LBP_BLS [19], was proposed to achieve high-precision classification. However, LBP uses only the local features of pixels and requires an adjacency matrix, which involves a large amount of computation. In recent years, the guided filter [38,39,40,41,42] has attracted considerable interest because of its low computational complexity and edge-preserving ability. The hierarchical guidance filtering-based ensemble classification for hyperspectral images (HiFi-We) [42] obtains individual learners from spectral-spatial joint features generated at different scales. Its ensemble model, which combines hierarchical guidance filtering (HGF) and the matrix of spectral angle distance (mSAD), is built via a weighted ensemble strategy.

Researchers have devoted a great deal of work to building classifiers that improve HSI classification accuracy [43], such as random forests [44], neural networks [45], support vector machines (SVM) [46,47], deep learning [48], reinforcement learning [49], and broad learning systems [50]. Among these classifiers, BLS [51,52] has attracted increasing research attention because of its simple structure, few training parameters, and fast training process. Ye et al. [53] proposed a novel regularized deep cascade broad learning system (DCBLS) for large-scale data, which has been applied successfully to image denoising. The discriminative locality preserving broad learning system (DPBLS) [54] was used to capture the manifold structure between neighboring pixels of HSIs. Wang et al. [55] proposed an HSI classification method based on domain adaptation broad learning (DABL) to address the scarcity or absence of labeled samples. Kong et al. [56] proposed a semi-supervised BLS (SBLS), which first uses HGF to preprocess the HSI data and then uses the class-probability structure (CP) and BLS for classification, achieving semi-supervised classification with small samples.
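To make the BLS structure concrete, here is a minimal NumPy sketch of a generic broad learning system under stated simplifications: mapped feature nodes are plain random linear projections grouped into windows (the sparse-autoencoder fine-tuning used in BLS is omitted), enhancement nodes apply tanh to a random projection of all mapped nodes, and the output weights are solved in closed form by ridge regression. The class and parameter names (`TinyBLS`, `n_windows`, `nodes_per_window`, `n_enhance`, `reg`) are hypothetical.

```python
import numpy as np


class TinyBLS:
    """Minimal broad learning system sketch (simplified; not the SSBLS authors' code)."""

    def __init__(self, n_windows, nodes_per_window, n_enhance, reg=1e-3, seed=0):
        self.n_windows = n_windows
        self.nodes_per_window = nodes_per_window
        self.n_enhance = n_enhance
        self.reg = reg  # ridge penalty on the output weights (assumed value)
        self.rng = np.random.default_rng(seed)

    @staticmethod
    def _with_bias(x):
        return np.hstack([x, np.ones((x.shape[0], 1))])

    def _hidden(self, x):
        """Concatenate mapped feature nodes Z and enhancement nodes H for inputs x (n, d)."""
        xb = self._with_bias(x)
        # Mapped feature nodes: one random linear projection per window.
        z = np.hstack([xb @ w for w in self.map_w])
        # Enhancement nodes: tanh of a random projection of all mapped nodes.
        h = np.tanh(self._with_bias(z) @ self.enh_w)
        return np.hstack([z, h])

    def fit(self, x, y):
        x, y = np.asarray(x, dtype=float), np.asarray(y)
        d = x.shape[1]
        self.classes = np.unique(y)
        self.map_w = [self.rng.standard_normal((d + 1, self.nodes_per_window)) / np.sqrt(d + 1)
                      for _ in range(self.n_windows)]
        n_mapped = self.n_windows * self.nodes_per_window
        self.enh_w = self.rng.standard_normal((n_mapped + 1, self.n_enhance)) / np.sqrt(n_mapped + 1)
        a = self._hidden(x)
        t = (y[:, None] == self.classes[None, :]).astype(float)  # one-hot targets
        # Closed-form ridge solution: W = (A^T A + reg * I)^-1 A^T T
        self.out_w = np.linalg.solve(a.T @ a + self.reg * np.eye(a.shape[1]), a.T @ t)
        return self

    def predict_scores(self, x):
        """Per-class output scores (not calibrated probabilities)."""
        return self._hidden(np.asarray(x, dtype=float)) @ self.out_w

    def predict(self, x):
        return self.classes[np.argmax(self.predict_scores(x), axis=1)]
```

Training is a single linear solve over the concatenated nodes, which is why BLS trains quickly; the per-class scores from `predict_scores` can serve as initial (uncalibrated) class-probability maps once reshaped to the image grid.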

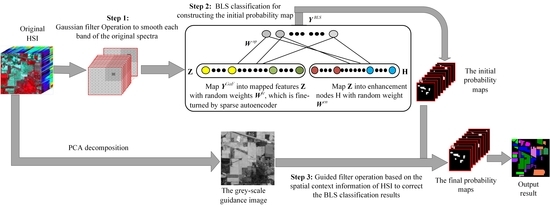

To make full use of spectral-spatial joint features for improving HSI classification performance, we propose the SSBLS method, which consists of three parts. First, the Gaussian filter smooths each band of the original HSI based on the spatial information to remove noise. This extracts the inherent spectral characteristics of pixels and realizes the first fusion of spectral and spatial information. Second, the pixel vectors of spectral-spatial joint features are fed into BLS, which extracts sparse and compact features through random weight matrices fine-tuned by a sparse autoencoder and predicts the labels of the test samples; the initial probability maps are constructed from these predictions. Finally, a guided filter corrects the initial probability maps under the guidance of a grey-scale image, which is obtained by reducing the spectral dimensionality of the original HSI to one via PCA. The guided filter makes full use of spatial context information. In SSBLS, spatial information is used in the first and third steps; in the second step, BLS classifies using the spectral-spatial joint features; and in the third step, the first principal component of the spectral information provides the grey-scale guidance image. This full use of spectral-spatial joint features contributes to better classification performance. The major contributions of our work are summarized as follows:
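The third step can be sketched as follows, assuming NumPy, an HSI cube of shape (rows, cols, bands), and initial class-probability maps of shape (rows, cols, classes) produced by the classifier. The guided filter uses the standard local linear (box-filter) formulation with window radius `r` and penalty `eps`; the min-max scaling of the guidance image and all function names are our assumptions, not details given in the text.

```python
import numpy as np


def first_pc_image(cube):
    """Project an HSI cube (rows, cols, bands) onto its first principal component, scaled to [0, 1]."""
    rows, cols, bands = cube.shape
    x = cube.reshape(-1, bands).astype(float)
    x -= x.mean(axis=0)
    _, _, vt = np.linalg.svd(x, full_matrices=False)  # vt[0] is the first principal axis
    pc1 = (x @ vt[0]).reshape(rows, cols)
    span = pc1.max() - pc1.min()
    return (pc1 - pc1.min()) / span if span > 0 else np.zeros_like(pc1)


def box_mean(img, r):
    """Mean over a (2r+1) x (2r+1) window, counting only pixels inside the image."""
    rows, cols = img.shape
    s = np.zeros((rows + 1, cols + 1))
    s[1:, 1:] = img.cumsum(axis=0).cumsum(axis=1)  # integral image
    r0 = np.clip(np.arange(rows) - r, 0, rows)
    r1 = np.clip(np.arange(rows) + r + 1, 0, rows)
    c0 = np.clip(np.arange(cols) - r, 0, cols)
    c1 = np.clip(np.arange(cols) + r + 1, 0, cols)
    total = s[np.ix_(r1, c1)] - s[np.ix_(r0, c1)] - s[np.ix_(r1, c0)] + s[np.ix_(r0, c0)]
    return total / np.outer(r1 - r0, c1 - c0)


def guided_filter(guide, src, r, eps):
    """Edge-preserving filtering of `src` with a grey-scale `guide` (local linear model)."""
    mean_i, mean_p = box_mean(guide, r), box_mean(src, r)
    var_i = box_mean(guide * guide, r) - mean_i ** 2
    cov_ip = box_mean(guide * src, r) - mean_i * mean_p
    a = cov_ip / (var_i + eps)
    b = mean_p - a * mean_i
    return box_mean(a, r) * guide + box_mean(b, r)


def refine_labels(cube, prob_maps, r=2, eps=1e-3):
    """Filter each class probability map with the PCA guide, then take the per-pixel argmax."""
    guide = first_pc_image(cube)
    filtered = np.stack(
        [guided_filter(guide, prob_maps[:, :, k], r, eps) for k in range(prob_maps.shape[2])],
        axis=2,
    )
    return np.argmax(filtered, axis=2)
```

The defaults `r=2` and `eps=1e-3` mirror the fixed values used while tuning the enhancement nodes in Section 3.2.3 and are only illustrative. An isolated pixel whose initial probabilities favor the wrong class is pulled toward its neighbors' labels, while class boundaries that coincide with edges in the guidance image are preserved.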

- (1) We found that the organic combination of the Gaussian filter and BLS enhances classification accuracy. The Gaussian filter captures the inherent spectral information of each pixel based on the HSI spatial information. During feature mapping, BLS extracts sparse and compact features using random weights fine-tuned by a sparse autoencoder. Sparse features can represent low-level structures such as edges and high-level structures such as local curvatures and shapes [57], which contributes to higher classification accuracy. Feeding the inherent spectral features into BLS for training and prediction therefore improves the classification accuracy of the proposed method, as the experimental results confirm.

- (2) We take full advantage of spectral-spatial features in SSBLS. The Gaussian filter first smooths each spectral band based on the spatial information of the HSI, achieving the first fusion of spectral and spatial information. The guided filter then corrects the BLS classification results based on spatial context information. The grey-scale guidance image of the guided filter is the first principal component obtained by PCA from the original HSI. These three operations fully combine spectral and spatial information, which helps improve the accuracy of SSBLS.

- (3) SSBLS uses the guided filter to rectify misclassified hyperspectral pixels based on spatial contextual information, thereby improving its overall accuracy. The experimental results also support this point.

The rest of this paper is organized as follows. Section 2 describes the proposed method in detail. Section 3 presents the experiments and analysis. Section 4 discusses the proposed method. Section 5 concludes the paper.

3. Experimental Results

We evaluated the proposed SSBLS through extensive experiments. All experiments were performed in MATLAB R2014a on a Windows 10 computer with a 2.90 GHz Intel Core i7-7500U central processing unit (CPU) and 32 GB of memory.

3.1. Hyperspectral Image Datasets

The Indian Pines dataset was acquired by the Airborne Visible/Infrared Imaging Spectrometer (AVIRIS) sensor over the Indian Pines test site in northwestern Indiana. The scene has 21,025 pixels and 200 bands, with wavelengths from 0.4 to 2.5 μm. About two-thirds of the image is agriculture and one-third is forest or other perennial natural vegetation. The image also contains two major dual-lane highways, a railway line, some low-density housing, other built structures, and pathways. The scene contains 16 classes; in our experiments, we used the nine classes with more than 400 samples each. The original hyperspectral image and the ground truth are shown in Figure 2.

The Salinas scene was acquired by the 224-band AVIRIS sensor over the Salinas Valley, California, USA, with a high spatial resolution of 3.7 m. The dataset has 512 × 217 pixels and 204 bands after the 20 water absorption bands were discarded. We used samples from all 16 classes in the scene. The original hyperspectral image and the ground truth are shown in Figure 3.

The Pavia University dataset was collected by the Reflective Optics System Imaging Spectrometer (ROSIS) sensor over Pavia, northern Italy. The image has 610 × 340 pixels and 103 bands; pixels containing no information were removed. Nine sample categories were used in our experiments. Figure 4 shows the original hyperspectral image, the category names with their labeled samples, and the ground truth.

3.2. Parameter Analysis

Our analysis of SSBLS showed that the adjustable parameters are the size of the Gaussian filter window, the standard deviation of the Gaussian function, the number of mapped feature windows in BLS, the number of mapped feature nodes per window in BLS, the number of enhancement nodes, the radius of the guided filter window, and the penalty parameter of the guided filter. These parameters were analyzed using the overall accuracy (OA) as the measure of SSBLS performance.
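The tuning protocol used throughout this section (vary one or two parameters, hold the rest fixed, average OA over ten random runs, keep the best setting) can be sketched as below. `evaluate` is a hypothetical callback that would train and test SSBLS once for a given setting and random seed and return its OA; it is not part of the original work, and the toy function only stands in for a real experiment.

```python
import itertools
import statistics


def select_by_mean_oa(evaluate, grid_a, grid_b, n_runs=10):
    """Grid-search two parameters by mean OA over `n_runs` seeds; return (a, b, mean_oa)."""
    best = None
    for a, b in itertools.product(grid_a, grid_b):
        mean_oa = statistics.fmean(evaluate(a, b, seed) for seed in range(n_runs))
        if best is None or mean_oa > best[2]:
            best = (a, b, mean_oa)
    return best


# Toy stand-in for a real experiment: OA peaks at (18, 7) with small run-to-run variation.
def toy_evaluate(window, sigma, seed):
    return 0.99 - 1e-4 * ((window - 18) ** 2 + (sigma - 7) ** 2) + 1e-5 * (seed % 3)


print(select_by_mean_oa(toy_evaluate, range(10, 31, 2), range(1, 11)))
```

For the single-parameter sweeps (enhancement nodes, guided filter radius, penalty parameter), the second grid can simply hold one fixed value.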

3.2.1. Influence of the Gaussian Window Size and Standard Deviation on OA

In this section, the influence of the Gaussian filter window size and standard deviation on OA was analyzed on the three datasets. These two parameters took different values while the other parameters were held fixed. On the Indian Pines and Salinas datasets, the window size and the standard deviation were each varied over a range of candidate values; on the Pavia University dataset, they were varied over dataset-specific ranges. The mean OAs of ten experiments are shown in Figure 5. As the window size and the standard deviation increased, the OA first rose gradually and then declined after reaching a peak. If the window is too small, a large target is split into parts that fall in different Gaussian filter windows; if it is too large, one window contains several small targets. Both cause misclassification. When the standard deviation is too small, the weights change sharply from the center of the window to its boundary; as it grows, the weights change more smoothly and become nearly uniform across the window, so the filter approaches a mean filter. Therefore, the optimal values of the two parameters differ between HSI datasets. On the Indian Pines dataset, the OA was highest with a window size of 18 and a standard deviation of 7, so these values were used in the subsequent experiments. On the Salinas dataset, SSBLS performed best with a window size of 24 and a standard deviation of 7, which were adopted in the later experiments. Similarly, the best values were 21 and 4, respectively, on the Pavia University dataset.
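The effect of the standard deviation described above can be checked numerically. The plain-Python sketch below (function names are ours) computes normalized 1-D Gaussian weights across a window and the ratio between the largest (central) weight and the weight at the window edge. Because a 2-D Gaussian kernel is the outer product of two such 1-D profiles, the same trend holds in 2-D.

```python
import math


def gaussian_weights_1d(size, sigma):
    """Normalized 1-D Gaussian weights for a window of `size` taps centred on the window."""
    center = (size - 1) / 2
    w = [math.exp(-((i - center) ** 2) / (2 * sigma ** 2)) for i in range(size)]
    total = sum(w)
    return [v / total for v in w]


def center_to_edge_ratio(size, sigma):
    """How much more the central pixel counts than the pixel at the window edge."""
    w = gaussian_weights_1d(size, sigma)
    return max(w) / w[0]


for sigma in (1.0, 2.0, 7.0, 50.0):
    print(f"sigma={sigma:5.1f}  center/edge weight ratio={center_to_edge_ratio(7, sigma):8.3f}")
```

For a 7-tap window, the ratio is e^4.5 (about 90) at σ = 1 but only about 1.1 at σ = 7, and it approaches 1 as σ grows further, which is the "close to the mean filter" behavior noted above.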

3.2.2. Influence of the Number of Mapped Feature Windows and Nodes per Window on OA

The experiments were carried out on the three datasets. The Gaussian window size and standard deviation were set to the optimal values obtained above, and the remaining parameters were held fixed. The number of mapped feature windows and the number of nodes per window were varied over candidate ranges that were shared by the Indian Pines and Pavia University datasets and different for the Salinas dataset. As shown in Figure 6, the OA of SSBLS gradually increased as both parameters became larger. When they were too small, less feature information was extracted and the mean OA of ten experiments was lower. When they were too large, the performance of SSBLS still improved, but the computation and running time also increased. Therefore, in the subsequent experiments, the number of mapped feature windows and the number of nodes per window were set to 6 and 34 on the Indian Pines dataset, 12 and 36 on the Salinas dataset, and 8 and 26 on the Pavia University dataset, respectively.

3.2.3. Influence of the Number of Enhancement Nodes on OA

On the three datasets, the Gaussian window size, the standard deviation, the number of mapped feature windows, and the number of nodes per window were set to the optimal values obtained in the above experiments, and the radius and penalty parameter of the guided filter were set to 2 and 10⁻³, respectively. The number of enhancement nodes was varied over one candidate range on the Indian Pines dataset and over another on the Salinas and Pavia University datasets. As shown in Figure 7, on all three datasets the average OA of ten experiments showed an upward trend as the number of enhancement nodes increased. As this number grew, BLS extracted more features, but the computation and running time also increased. Therefore, the number of enhancement nodes was set to 1050 on the Indian Pines dataset and 700 on both the Salinas and Pavia University datasets.
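The selected settings can be put in perspective with a quick count. Assuming SSBLS follows the standard BLS layout, in which all mapped feature nodes and all enhancement nodes are concatenated before the output weights are solved, the width of that concatenated layer is windows × nodes per window + enhancement nodes. The product does not depend on which of the two feature-mapping values is the window count, so the totals hold either way.

```python
# Settings selected in Sections 3.2.2 and 3.2.3:
# (mapped feature windows, nodes per window, enhancement nodes).
SETTINGS = {
    "Indian Pines": (6, 34, 1050),
    "Salinas": (12, 36, 700),
    "Pavia University": (8, 26, 700),
}


def hidden_width(n_windows, nodes_per_window, n_enhance):
    """Width of the concatenated [mapped | enhancement] layer in a standard BLS."""
    return n_windows * nodes_per_window + n_enhance


for name, cfg in SETTINGS.items():
    print(f"{name}: {hidden_width(*cfg)} hidden units")
```

This gives 1254, 1132, and 908 hidden units, respectively. In a standard BLS, the ridge-regression solve for the output weights involves a square matrix of that size, which is one reason the running time grows with these parameters.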

3.2.4. Influence of the Guided Filter Radius on OA

The experiments were carried out on the three datasets. The Gaussian window size, the standard deviation, the number of mapped feature windows, the number of nodes per window, and the number of enhancement nodes were set to the optimal values obtained previously, the penalty parameter of the guided filter was held fixed, and the radius of the guided filter window was varied over a candidate range. Figure 8 indicates that as the radius grew, the average OA of ten experiments first increased and then decreased. The mean OA was largest with a radius of 3 on the Indian Pines dataset, 5 on the Salinas dataset, and 3 on the Pavia University dataset, so these values were adopted.

3.2.5. Influence of the Guided Filter Penalty Parameter on OA

On the three datasets, the Gaussian window size, the standard deviation, the number of mapped feature windows, the number of nodes per window, the number of enhancement nodes, and the guided filter radius were set to the optimal values obtained in the above experiments, and the penalty parameter was varied over a candidate range. As shown in Figure 9, on the Indian Pines and Salinas datasets, the mean OA first increased and then decreased as the penalty parameter increased. On the Indian Pines dataset, the average OA was largest when the penalty parameter was 10⁻³, so this value was used in the subsequent comparison experiments. On the Salinas dataset, SSBLS performed best with a penalty parameter of 10⁻¹. On the Pavia University dataset, the penalty parameter was likewise set to the value that gave the best classification performance.

3.3. Ablation Studies on SSBLS

We conducted several ablation experiments to investigate the behavior of SSBLS on the three datasets. In these experiments, we randomly selected 200 labeled samples from each class as training samples and used the remaining labeled samples as test samples. We used OA, average accuracy (AA), and the kappa coefficient (Kappa) to measure the performance of the different methods, as shown in Table 2, where the highest values are shown in bold.
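For reference, the three metrics can be computed from a confusion matrix as in the NumPy sketch below (the function name is ours). OA is the fraction of correctly labeled test samples, AA is the mean of the per-class accuracies (which we take to be the individual classification accuracies reported later), and Kappa corrects OA for the agreement expected by chance.

```python
import numpy as np


def classification_scores(y_true, y_pred):
    """Return (OA, AA, Kappa, per-class accuracies) for arrays of class labels."""
    y_true, y_pred = np.asarray(y_true), np.asarray(y_pred)
    classes = np.unique(np.concatenate([y_true, y_pred]))
    index = {c: i for i, c in enumerate(classes)}
    cm = np.zeros((len(classes), len(classes)))
    for t, p in zip(y_true, y_pred):
        cm[index[t], index[p]] += 1  # rows: true class, columns: predicted class
    n = cm.sum()
    oa = np.trace(cm) / n
    support = cm.sum(axis=1)
    present = support > 0  # classes that actually occur in y_true
    per_class = np.diag(cm)[present] / support[present]
    aa = per_class.mean()
    pe = (cm.sum(axis=0) * support).sum() / n ** 2  # expected agreement by chance
    kappa = (oa - pe) / (1 - pe)
    return oa, aa, kappa, per_class
```

For example, with true labels `[0, 0, 0, 0, 1, 1, 2, 2]` and predictions `[0, 0, 0, 1, 1, 1, 2, 0]`, OA and AA are both 0.75 while Kappa is 0.6, because part of the raw agreement is attributed to chance.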

First, we used BLS alone to classify the original hyperspectral data. On the Salinas dataset, the result was good, with the OA reaching 91.98%. However, the results on the Indian Pines and Pavia University datasets were unsatisfactory.

Second, we isolated the influence of the Gaussian filter on the classification results. We used the Gaussian filter to smooth the original HSI and then used BLS for classification; we call this method BLS based on the Gaussian filter (GBLS). Its OA was about 20% higher than that of BLS on the Indian Pines dataset, about 7% higher on the Salinas dataset, and about 17% higher on the Pavia University dataset. These results show that the Gaussian filter helps improve classification accuracy.

Next, we used BLS to classify the original hyperspectral data and then applied the guided filter to rectify the pixels misclassified by BLS. The resulting OA, AA, and Kappa were also better than those of BLS, which shows that the guided filter also helps improve classification performance.

Finally, we used the proposed method for HSI classification. It first uses the Gaussian filter to smooth the original spectral features based on the spatial information of the HSI, then classifies with BLS, and finally applies the guided filter to correct the pixels misclassified by BLS. It achieved the best results among the four methods, which shows that both the Gaussian filter and the guided filter contribute to better classification performance.

The above analysis shows that combining the Gaussian filter with BLS greatly improves classification performance, especially on the Indian Pines and Pavia University datasets. Although the accuracy of GBLS was already relatively high, adding the guided filter to GBLS improved it further, indicating that the guided filter also helps improve classification accuracy.

3.4. Experimental Comparison

To demonstrate the advantages of SSBLS on the three real datasets, we compared SSBLS with SVM [65], HiFi-We [42], SSG [66], spectral-spatial hyperspectral image classification with edge-preserving filtering (EPF) [41], a support vector machine based on the Gaussian filter (GSVM), feature extraction of hyperspectral images with image fusion and recursive filtering (IFRF) [67], LPP_LBP_BLS [19], BLS [50], and GBLS. All methods take the original HSI data as input, and their parameters are set to the optimal values. In each experiment, 200 labeled samples are randomly selected from each class as the training set, and the remaining labeled samples form the test set. We report the individual classification accuracy (ICA), OA, AA, Kappa, overall running time (t), and test time (tt). All results are the mean values of ten experiments, as shown in Table 3, Table 4 and Table 5, where the highest values are shown in bold.
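The sampling protocol above (200 random training samples per class, the rest for testing, repeated ten times) can be written as a short NumPy helper. The function name and the use of one seed per repetition are our assumptions; `labels` holds the class ids of labeled pixels only.

```python
import numpy as np


def split_per_class(labels, n_train=200, seed=0):
    """Pick `n_train` random samples per class for training; all other samples are test samples.

    Returns (train_indices, test_indices) into `labels`.
    """
    labels = np.asarray(labels)
    rng = np.random.default_rng(seed)
    train_idx, test_idx = [], []
    for c in np.unique(labels):
        idx = rng.permutation(np.flatnonzero(labels == c))
        if len(idx) <= n_train:
            raise ValueError(f"class {c} has only {len(idx)} samples")
        train_idx.append(idx[:n_train])
        test_idx.append(idx[n_train:])
    return np.concatenate(train_idx), np.concatenate(test_idx)
```

Calling it with seeds 0 to 9 yields ten independent splits whose metrics can then be averaged. The explicit check also reflects why only classes with more than 400 samples were kept for Indian Pines: each class must leave test samples after 200 are drawn for training.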

(1) Comparison with the conventional classifier SVM. The results of BLS are close to those of SVM on the Indian Pines and Salinas datasets. However, when BLS and SVM use the HSI data filtered by the Gaussian filter, GBLS performs clearly better than GSVM. On the Pavia University dataset, the OA of BLS was 16.56% lower than that of SVM, whereas after the data were filtered by the Gaussian filter, the OA of GBLS was about 3% higher than that of GSVM. SSBLS had the best performance. The experimental results in Table 3, Table 4 and Table 5 illustrate that the combination of the Gaussian filter and BLS contributes to improving classification accuracy.

(2) HiFi-We first extracts different spatial context information of the samples by HGF, which generates diverse sample sets. As the hierarchy level increases, the pixel spectral features tend to become smooth and the pixel spatial features are enhanced. Based on the outputs of HGF, a series of classifiers is obtained. Next, the matrix of spectral angle distance is defined to measure the diversity among training samples in each hierarchy. Finally, an ensemble strategy combines the obtained individual classifiers and mSAD. This method achieved good performance, but its OA, AA, and Kappa were lower than those of SSBLS. The main reason is that SSBLS fully exploits spectral-spatial joint features in its three operations (the Gaussian filter, BLS, and the guided filter), which helps improve its accuracy.

(3) SSG assigns labels to unlabeled samples with a graph-based method, integrates spatial information, spectral information, and the cross-information between them through a complete composite kernel, forms a huge kernel matrix of labeled and unlabeled samples, and finally applies the Nyström method for classification. The computational complexity of the huge kernel matrix is high, which increases the running time of the classification. In contrast, SSBLS not only achieves a higher OA than SSG but also takes less time.

(4) The EPF method uses SVM for classification to construct the initial probability maps and then applies the bilateral filter or the guided filter to correct the initial probability maps, improving the final classification accuracy. It performed very well on the three real hyperspectral datasets. However, SSBLS performed better than EPF, mainly because SSBLS first uses the Gaussian filter to extract inherent spectral features based on spatial information and then applies the guided filter to rectify the pixels misclassified by BLS based on spatial context information.

(5) IFRF divides the hyperspectral bands into multiple subsets of adjacent bands, fuses each subset by averaging, and finally uses transform-domain recursive filtering to extract features from each fused subset for classification with SVM. This method works very well, but SSBLS performed better. Specifically, the mean OA of SSBLS was 1.03% higher than that of IFRF on the Indian Pines dataset, 0.24% higher on the Salinas dataset, and 1.5% higher on the Pavia University dataset. There are three reasons for these results. First, when SSBLS uses the Gaussian filter to smooth the HSI spectral features based on spatial information, the weight of each neighboring pixel in the Gaussian filter window decreases with its distance from the center pixel, and this operation removes noise. Second, in SSBLS, the integration of the Gaussian filter and BLS helps extract sparse and compact spectral features fused with spatial features, which yields outstanding classification accuracy. Third, SSBLS applies the guided filter based on spatial context information to rectify misclassified hyperspectral pixels, improving the final classification accuracy.
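The band-fusion step of IFRF can be sketched as follows. This covers only the partition-and-average stage; the recursive filtering and the SVM classifier are omitted, and splitting the bands into near-equal contiguous groups with `np.array_split` is our assumption about how the subsets are formed.

```python
import numpy as np


def fuse_adjacent_bands(cube, n_subsets):
    """Average contiguous groups of bands: (rows, cols, bands) -> (rows, cols, n_subsets)."""
    # Indices of adjacent bands, split into near-equal contiguous groups.
    groups = np.array_split(np.arange(cube.shape[2]), n_subsets)
    # One fused image per group: the per-pixel mean over that group's bands.
    return np.stack([cube[:, :, g].mean(axis=2) for g in groups], axis=2)
```

With 200 bands and, say, 20 subsets, each fused image would average 10 adjacent bands, so the feature dimension passed to the recursive filter drops from 200 to 20.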

(6) The LPP_LBP_BLS method uses LPP to reduce the dimensionality of HSIs in the spectral domain, then uses LBP to extract spatial features in the spatial domain, and finally uses BLS for classification. LPP_LBP_BLS performed very well, but it has two disadvantages. First, the LBP operation greatly increases the number of spectral-spatial features to be processed. For example, each pixel had 50 spectral bands after dimensionality reduction but 2950 spectral-spatial features after the LBP operation. Second, LPP_LBP_BLS worked very well on the Indian Pines and Salinas datasets, but its mean OA reached only 97.14% on the Pavia University dataset, which indicates that the method depends on the data and is not robust enough. The average OAs of SSBLS on the three datasets are all above 99.49%. On the Indian Pines dataset, the mean OA is 99.83%, and the highest OA obtained during the experiments is 99.97%. On the Salinas dataset, the average OA is 99.96%, and the highest OA sometimes reaches 100%. These results show that SSBLS is more robust, especially on the Pavia University dataset. As the parameters change, the OAs change regularly, as shown in Figure 5c and Figure 6c.
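The growth from 50 to 2950 features per pixel is exactly a factor of 59. One plausible explanation, which is our assumption since the text does not state the LBP variant, is a uniform LBP histogram with 8 neighbors computed on each of the 50 reduced bands: there are 58 uniform 8-bit patterns (at most two circular 0/1 transitions) plus one shared bin for all other patterns. The count can be checked in a few lines:

```python
def circular_transitions(pattern, n_bits=8):
    """Number of 0/1 changes when walking once around an n-bit circular pattern."""
    bits = [(pattern >> i) & 1 for i in range(n_bits)]
    return sum(bits[i] != bits[(i + 1) % n_bits] for i in range(n_bits))


def uniform_lbp_bins(n_bits=8):
    """Histogram length of the 'uniform' LBP: one bin per uniform pattern plus one shared bin."""
    uniform = sum(1 for p in range(2 ** n_bits) if circular_transitions(p, n_bits) <= 2)
    return uniform + 1


bins = uniform_lbp_bins(8)  # 58 uniform patterns + 1 non-uniform bin
features = 50 * bins        # 50 reduced bands, one histogram per band
print(bins, features)
```

Under this assumption, the LBP step multiplies the per-pixel feature count by 59, which illustrates why the text describes the increase as large.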

(7) Comparison with BLS and GBLS. Table 3, Table 4 and Table 5 show that BLS had an unsatisfactory classification effect when using only the original HSI data; however, when GBLS used the spectral features smoothed by the Gaussian filter, its OA was greatly improved. This indicates that the combination of the Gaussian filter and BLS contributed to the improvement of classification accuracy. The classification accuracy of SSBLS was higher than those of BLS and GBLS because SSBLS applied the guided filter based on spatial contextual information to rectify the misclassified pixels, further improving the classification accuracy.

In summary, on the three datasets, the OA, AA, and Kappa of SSBLS were better than those of the nine other comparison methods, as can be clearly seen from Figure 10, Figure 11 and Figure 12. Table 3, Table 4 and Table 5 show that the execution time of SSBLS was shorter than that of SVM, HiFi-We, SSG, EPF, GSVM, IFRF, and LPP_LBP_BLS, and that the preprocessing and training time of SSBLS was shorter than that of HiFi-We, SSG, EPF, IFRF, and LPP_LBP_BLS.

5. Conclusions

To take full advantage of spectral-spatial joint features for improving HSI classification accuracy, we proposed the SSBLS method in this paper. The method has three parts. First, the Gaussian filter smooths each spectral band to remove noise in the spectral domain based on the spatial information of the HSI, fusing spectral and spatial information. Second, the optimal BLS models are obtained by training BLS on the spectral features smoothed by the Gaussian filter, and the predicted labels of the test samples are used to construct the initial probability maps. Finally, the guided filter rectifies the pixels misclassified by BLS based on the HSI spatial context information to improve the classification accuracy. Experimental results on three public datasets show that the proposed method outperforms the other methods (SVM, HiFi-We, SSG, EPF, GSVM, IFRF, LPP_LBP_BLS, BLS, and GBLS) in terms of OA, AA, and Kappa.

The proposed method is a supervised classification method that requires a relatively large number of labeled samples. However, labeled HSI samples are usually very limited, and labeling unlabeled samples is costly. Therefore, our next step is to study a semi-supervised classification method that improves HSI classification accuracy when labeled samples are limited.