Multiscale Weighted Adjacent Superpixel-Based Composite Kernel for Hyperspectral Image Classification

Abstract

1. Introduction

2. Related Work

2.1. CK with SVM
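
As background for this subsection, here is a minimal sketch of the weighted-summation composite kernel of Camps-Valls et al. (listed in the references). It computes K = μ·K_spec + (1 − μ)·K_spat with 0 ≤ μ ≤ 1, where K_spec compares pixel spectra and K_spat compares spatial features of the same pixels. The sketch makes several assumptions. Both kernels are Gaussian RBF kernels with a shared `gamma`. The spatial feature is left abstract (for example, a local or superpixel mean spectrum). The helper names `rbf` and `composite_kernel` are hypothetical. This is not the exact kernel used by WASCK or MWASCK.

```python
import math
from typing import List, Sequence

Vector = Sequence[float]


def rbf(x: Vector, z: Vector, gamma: float) -> float:
    """Gaussian RBF kernel exp(-gamma * ||x - z||^2)."""
    sq_dist = sum((a - b) ** 2 for a, b in zip(x, z))
    return math.exp(-gamma * sq_dist)


def composite_kernel(
    spectral: List[Vector],
    spatial: List[Vector],
    mu: float = 0.5,
    gamma: float = 1.0,
) -> List[List[float]]:
    """Weighted-summation composite kernel K = mu * K_spec + (1 - mu) * K_spat.

    spectral[i] is the spectrum of sample i and spatial[i] a spatial feature of
    the same sample (hypothetically, a local or superpixel mean spectrum).
    The same gamma is used for both kernels purely for brevity.
    """
    if not 0.0 <= mu <= 1.0:
        raise ValueError("mu must lie in [0, 1]")
    if len(spectral) != len(spatial):
        raise ValueError("spectral and spatial features must describe the same samples")
    n = len(spectral)
    return [
        [
            mu * rbf(spectral[i], spectral[j], gamma)
            + (1.0 - mu) * rbf(spatial[i], spatial[j], gamma)
            for j in range(n)
        ]
        for i in range(n)
    ]


if __name__ == "__main__":
    spec = [[0.10, 0.20], [0.10, 0.25], [0.90, 0.80]]
    spat = [[0.12, 0.22], [0.12, 0.22], [0.85, 0.80]]
    for row in composite_kernel(spec, spat, mu=0.4, gamma=2.0):
        print(["%.3f" % v for v in row])
```

Because a non-negative weighted sum of two valid kernels is itself a valid kernel, the resulting matrix can be passed to any SVM that accepts a precomputed kernel. Examples include LIBSVM with the `-t 4` option and scikit-learn's `SVC(kernel="precomputed")`. At prediction time, the kernel between the test and training samples must be built in the same way.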

2.2. Superpixel Multiscale Segmentation

3. The Proposed Method

3.1. Weighted Adjacent Superpixel-Based Composite Kernel (WASCK)

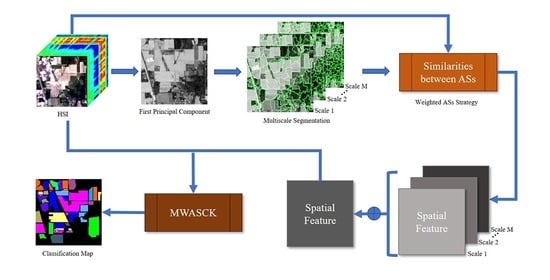
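
WASCK draws on superpixels that are adjacent to one another. The sketch below illustrates only that generic building block. It takes a 2-D superpixel label map (assumed to come from a segmentation such as entropy rate superpixels, which appears in the references) and computes two things: the mean spectrum of every superpixel, and the set of superpixels that share a border with it. The function names and the 4-connectivity adjacency rule are hypothetical choices. The way WASCK actually weights adjacent superpixels is not reproduced here.

```python
from typing import Dict, List, Set

LabelMap = List[List[int]]          # labels[r][c] = superpixel id of pixel (r, c)
Cube = List[List[List[float]]]      # cube[r][c] = spectrum of pixel (r, c)


def superpixel_means(labels: LabelMap, cube: Cube) -> Dict[int, List[float]]:
    """Mean spectrum of every superpixel in the label map."""
    sums: Dict[int, List[float]] = {}
    counts: Dict[int, int] = {}
    for r, row in enumerate(labels):
        for c, sp in enumerate(row):
            spectrum = cube[r][c]
            acc = sums.setdefault(sp, [0.0] * len(spectrum))
            for b, value in enumerate(spectrum):
                acc[b] += value
            counts[sp] = counts.get(sp, 0) + 1
    return {sp: [v / counts[sp] for v in acc] for sp, acc in sums.items()}


def superpixel_adjacency(labels: LabelMap) -> Dict[int, Set[int]]:
    """Superpixels sharing at least one 4-connected pixel border (assumed rule)."""
    adj: Dict[int, Set[int]] = {}
    rows, cols = len(labels), len(labels[0])
    for r in range(rows):
        for c in range(cols):
            a = labels[r][c]
            adj.setdefault(a, set())  # isolated superpixels still get an (empty) entry
            # Looking only right and down visits every horizontal/vertical pixel pair once.
            for rr, cc in ((r, c + 1), (r + 1, c)):
                if rr < rows and cc < cols:
                    b = labels[rr][cc]
                    if b != a:
                        adj[a].add(b)
                        adj.setdefault(b, set()).add(a)
    return adj


if __name__ == "__main__":
    labels = [[0, 0, 1, 1],
              [2, 2, 3, 3]]
    cube = [[[1.0], [3.0], [10.0], [20.0]],
            [[5.0], [7.0], [0.0], [2.0]]]
    print(superpixel_means(labels, cube))  # {0: [2.0], 1: [15.0], 2: [6.0], 3: [1.0]}
    adj = superpixel_adjacency(labels)
    print({k: sorted(v) for k, v in sorted(adj.items())})  # {0: [1, 2], 1: [0, 3], 2: [0, 3], 3: [1, 2]}
```

In the toy map, superpixels 0 and 3 touch only diagonally, so they are not neighbours under 4-connectivity. Switching to 8-connectivity would change that, and it is one of the design choices a full implementation would need to fix.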

3.2. Multiscale Weighted Adjacent Superpixel-Based Composite Kernel (MWASCK)
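
MWASCK works across several superpixel segmentation scales. One simple way to merge kernel matrices computed at different scales is a convex combination, and that is the approach assumed here. Non-negative combinations of positive semidefinite kernels remain positive semidefinite, so the fused matrix is still a valid SVM kernel. The `fuse_kernels` helper and its uniform default weights are hypothetical. They are not claimed to be the fusion rule used by MWASCK.

```python
from typing import List, Optional, Sequence

Matrix = List[List[float]]


def fuse_kernels(kernels: Sequence[Matrix], weights: Optional[Sequence[float]] = None) -> Matrix:
    """Convex combination K = sum_s w_s * K_s of equally sized kernel matrices.

    Each K_s is assumed to be an n x n kernel computed at one superpixel scale.
    """
    if not kernels:
        raise ValueError("need at least one kernel")
    if weights is None:
        # Hypothetical default: every scale contributes equally.
        weights = [1.0 / len(kernels)] * len(kernels)
    if len(weights) != len(kernels):
        raise ValueError("need exactly one weight per kernel")
    if any(w < 0 for w in weights) or abs(sum(weights) - 1.0) > 1e-9:
        raise ValueError("weights must be non-negative and sum to 1")
    n = len(kernels[0])
    fused = [[0.0] * n for _ in range(n)]
    for w, k in zip(weights, kernels):
        if len(k) != n or any(len(row) != n for row in k):
            raise ValueError("all kernels must be n x n with the same n")
        for i in range(n):
            for j in range(n):
                fused[i][j] += w * k[i][j]
    return fused


if __name__ == "__main__":
    k_fine = [[1.0, 0.5], [0.5, 1.0]]
    k_coarse = [[1.0, 0.1], [0.1, 1.0]]
    print(fuse_kernels([k_fine, k_coarse]))
    print(fuse_kernels([k_fine, k_coarse], weights=[0.25, 0.75]))
```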

4. Experimental Results

4.1. Datasets

4.2. Experimental Results

4.3. Discussion

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

- Bioucas-Dias, J.M.; Plaza, A.; Camps-Valls, G.; Scheunders, P.; Nasrabadi, N. Hyperspectral Remote Sensing Data Analysis and Future Challenges. IEEE Geosci. Remote Sens. Mag. 2013, 1, 6–36.

- Tu, B.; Zhou, C.; He, D.; Huang, S.; Plaza, A. Hyperspectral Classification with Noisy Label Detection via Superpixel-to-Pixel Weighting Distance. IEEE Trans. Geosci. Remote Sens. 2020, 58, 4116–4131.

- Jin, Q.; Ma, Y.; Mei, X.; Dai, X.; Huang, J. Gaussian Mixture Model for Hyperspectral Unmixing with Low-Rank Representation. In Proceedings of the IGARSS 2019 IEEE International Geoscience and Remote Sensing Symposium, Yokohama, Japan, 28 July–2 August 2019; pp. 294–297.

- Zhang, L.; Zhang, L.; Tao, D.; Huang, X.; Du, B. Hyperspectral Remote Sensing Image Subpixel Target Detection Based on Supervised Metric Learning. IEEE Trans. Geosci. Remote Sens. 2014, 52, 4955–4965.

- Hou, Y.; Zhu, W.; Wang, E. Hyperspectral Mineral Target Detection Based on Density Peak. Intell. Autom. Soft Comput. 2019, 25, 805–814.

- Melgani, F.; Bruzzone, L. Classification of Hyperspectral Remote Sensing Images with Support Vector Machines. IEEE Trans. Geosci. Remote Sens. 2004, 42, 1778–1790.

- Jia, X.; Richards, J.A. Segmented principal components transformation for efficient hyperspectral remote-sensing image display and classification. IEEE Trans. Geosci. Remote Sens. 1999, 37, 538–542.

- Liu, J.; Xiao, Z.; Chen, Y.; Yang, J. Spatial-Spectral Graph Regularized Kernel Sparse Representation for Hyperspectral Image Classification. ISPRS Int. J. Geo-Inf. 2017, 6, 258.

- Ye, Q.; Yang, J.; Liu, F.; Zhao, C.; Ye, N.; Yin, T. L1-Norm Distance Linear Discriminant Analysis Based on an Effective Iterative Algorithm. IEEE Trans. Circuits Syst. Video Technol. 2018, 28, 114–129.

- Ye, Q.; Zhao, H.; Li, Z.; Yang, X.; Gao, S.; Yin, T.; Ye, N. L1-norm Distance Minimization Based Fast Robust Twin Support Vector k-plane Clustering. IEEE Trans. Neural Netw. Learn. Syst. 2018, 29, 4494–4503.

- Ma, Y.; Li, C.; Li, H.; Mei, X.; Ma, J. Hyperspectral Image Classification with Discriminative Kernel Collaborative Representation and Tikhonov Regularization. IEEE Geosci. Remote Sens. Lett. 2018, 15, 587–591.

- Zhao, G.; Tu, B.; Fei, H.; Li, N.; Yang, X. Spatial-Spectral Classification of Hyperspectral Image via Group Tensor Decomposition. Neurocomputing 2018, 316, 68–77.

- Quesada-Barriuso, P.; Arguello, F.; Heras, D.B. Spectral–Spatial Classification of Hyperspectral Images Using Wavelets and Extended Morphological Profiles. IEEE J. Sel. Top. Appl. Earth Observ. Remote Sens. 2014, 7, 1177–1185.

- Li, W.; Du, Q. Gabor-Filtering-Based Nearest Regularized Subspace for Hyperspectral Image Classification. IEEE J. Sel. Top. Appl. Earth Observ. Remote Sens. 2014, 7, 1012–1022.

- Shen, L.; Jia, S. Three-Dimensional Gabor Wavelets for Pixel-Based Hyperspectral Imagery Classification. IEEE Trans. Geosci. Remote Sens. 2011, 49, 5039–5046.

- Benediktsson, J.A.; Palmason, J.A.; Sveinsson, J.R. Classification of Hyperspectral Data from Urban Areas Based on Extended Morphological Profiles. IEEE Trans. Geosci. Remote Sens. 2005, 43, 480–491.

- Tarabalka, Y.; Fauvel, M.; Chanussot, J.; Benediktsson, J.A. SVM- and MRF-Based Method for Accurate Classification of Hyperspectral Images. IEEE Geosci. Remote Sens. Lett. 2010, 7, 736–740.

- Li, J.; Bioucas-Dias, J.M.; Plaza, A. Spectral–Spatial Hyperspectral Image Segmentation Using Subspace Multinomial Logistic Regression and Markov Random Fields. IEEE Trans. Geosci. Remote Sens. 2012, 50, 809–823.

- Sun, L.; Wu, Z.; Liu, J.; Xiao, L.; Wei, Z. Supervised Spectral–Spatial Hyperspectral Image Classification with Weighted Markov Random Fields. IEEE Trans. Geosci. Remote Sens. 2015, 53, 1490–1503.

- Chen, Y.; Nasrabadi, N.M.; Tran, T.D. Hyperspectral Image Classification Using Dictionary-Based Sparse Representation. IEEE Trans. Geosci. Remote Sens. 2011, 49, 3973–3985.

- Li, W.; Du, Q. Joint Within-Class Collaborative Representation for Hyperspectral Image Classification. IEEE J. Sel. Top. Appl. Earth Observ. Remote Sens. 2014, 7, 2200–2208.

- Pan, C.; Li, J.; Wang, Y.; Gao, X. Collaborative learning for hyperspectral image classification. Neurocomputing 2017, 275, 2512–2524.

- Fu, L.; Li, Z.; Ye, Q.; Yin, H.; Liu, Q.; Chen, X.; Fan, X.; Yang, W.; Yang, G. Learning Robust Discriminant Subspace Based on Joint L2,p- and L2,s-Norm Distance Metrics. IEEE Trans. Neural Netw. Learn. Syst. 2020.

- Ye, Q.; Li, Z.; Fu, L.; Yang, W.; Yang, G. Nonpeaked Discriminant Analysis for Data Representation. IEEE Trans. Neural Netw. Learn. Syst. 2019, 30, 3818–3832.

- He, Z.; Wang, Y.; Hu, J. Joint Sparse and Low-Rank Multitask Learning with Laplacian-Like Regularization for Hyperspectral Classification. Remote Sens. 2018, 10, 322.

- Du, L.; Wu, Z.; Xu, Y.; Liu, W.; Wei, Z. Kernel Low-Rank Representation for Hyperspectral Image Classification. In Proceedings of the 2016 IEEE International Geoscience and Remote Sensing Symposium (IGARSS), Beijing, China, 10–15 July 2016; pp. 477–480.

- Yuebin, W.; Jie, M.; Liqiang, Z.; Bing, Z.; Anjian, L.; Yibo, Z. Self-Supervised Low-rank Representation (SSLRR) for Hyperspectral Image Classification. IEEE Trans. Geosci. Remote Sens. 2018, 56, 5658–5672.

- Sun, L.; Ma, C.; Chen, Y.; Zheng, Y.; Jeon, B. Low rank component induced spatial-spectral kernel method for hyperspectral image classification. IEEE Trans. Circuits Syst. Video Technol. 2020, 30, 3829–3842.

- Sun, L.; He, C.; Zheng, Y.; Tang, S. SLRL4D: Joint restoration of subspace low-rank learning and non-local 4-D transform filtering for hyperspectral image. Remote Sens. 2020, 12, 2979.

- Sun, L.; Wu, F.; He, C.; Zhan, T.; Liu, W.; Zhang, D. Weighted Collaborative Sparse and L1/2 Low-Rank Regularizations With Superpixel Segmentation for Hyperspectral Unmixing. IEEE Geosci. Remote Sens. Lett. 2020.

- Camps-Valls, G.; Gomez-Chova, L.; Munoz-Mari, J.; Vila-Frances, J.; Calpe-Maravilla, J. Composite Kernels for Hyperspectral Image Classification. IEEE Geosci. Remote Sens. Lett. 2006, 3, 93–97.

- Zhou, Y.; Peng, J.; Chen, C.L.P. Extreme Learning Machine with Composite Kernels for Hyperspectral Image Classification. IEEE J. Sel. Top. Appl. Earth Observ. Remote Sens. 2015, 8, 2351–2360.

- Li, H.; Ye, Z.; Xiao, G. Hyperspectral Image Classification Using Spectral–Spatial Composite Kernels Discriminant Analysis. IEEE J. Sel. Top. Appl. Earth Observ. Remote Sens. 2015, 8, 2341–2350.

- Zhang, Y.; Prasad, S. Locality Preserving Composite Kernel Feature Extraction for Multi-Source Geospatial Image Analysis. IEEE J. Sel. Top. Appl. Earth Observ. Remote Sens. 2015, 8, 1385–1392.

- Gomez-Chova, L.; Camps-Valls, G.; Bruzzone, L.; Calpe-Maravilla, J. Mean Map Kernel Methods for Semisupervised Cloud Classification. IEEE Trans. Geosci. Remote Sens. 2010, 48, 207–220.

- Camps-Valls, G.; Shervashidze, N.; Borgwardt, K.M. Spatio-Spectral Remote Sensing Image Classification with Graph Kernels. IEEE Geosci. Remote Sens. Lett. 2010, 7, 741–745.

- Wang, J.; Jiao, L.; Liu, H.; Yang, S.; Liu, F. Hyperspectral Image Classification by Spatial–Spectral Derivative-Aided Kernel Joint Sparse Representation. IEEE J. Sel. Top. Appl. Earth Observ. Remote Sens. 2015, 8, 2485–2500.

- Zhang, H.; Li, J.; Huang, Y.; Zhang, L. A Nonlocal Weighted Joint Sparse Representation Classification Method for Hyperspectral Imagery. IEEE J. Sel. Top. Appl. Earth Observ. Remote Sens. 2014, 7, 2056–2065.

- Li, J.; Zhang, H.; Huang, Y.; Zhang, L. Hyperspectral Image Classification by Nonlocal Joint Collaborative Representation with a Locally Adaptive Dictionary. IEEE Trans. Geosci. Remote Sens. 2014, 52, 3707–3719.

- Wang, J.; Jiao, L.; Wang, S.; Hou, B.; Liu, F. Adaptive Nonlocal Spatial–Spectral Kernel for Hyperspectral Imagery Classification. IEEE J. Sel. Top. Appl. Earth Observ. Remote Sens. 2016, 9, 4086–4101.

- Mori, G.; Ren, X.; Efros, A.A.; Malik, J. Recovering human body configurations: Combining segmentation and recognition. In Proceedings of the 2004 IEEE Computer Society Conference on Computer Vision and Pattern Recognition, Washington, DC, USA, 27 June–2 July 2004; pp. 326–333.

- Caliskan, A.; Bati, E.; Koza, A.; Alatan, A.A. Superpixel based hyperspectral target detection. In Proceedings of the 2016 IEEE International Geoscience and Remote Sensing Symposium (IGARSS), Beijing, China, 10–15 July 2016; pp. 7010–7013.

- Liu, M.Y.; Tuzel, O.; Ramalingam, S.; Chellappa, R. Entropy rate superpixel segmentation. In Proceedings of the 2011 IEEE Computer Society Conference on Computer Vision and Pattern Recognition, Colorado Springs, CO, USA, 20–25 June 2011; pp. 2097–2104.

- Duan, W.; Li, S.; Fang, L. Superpixel-based composite kernel for hyperspectral image classification. In Proceedings of the 2015 IEEE International Geoscience and Remote Sensing Symposium (IGARSS), Milan, Italy, 26–31 July 2015; pp. 1698–1701.

- Fang, L.; Li, S.; Duan, W.; Ren, J.; Benediktsson, J.A. Classification of Hyperspectral Images by Exploiting Spectral–Spatial Information of Superpixel via Multiple Kernels. IEEE Trans. Geosci. Remote Sens. 2015, 53, 6663–6674.

- Liu, J.; Wu, Z.; Xiao, Z.; Yang, J. Region-based relaxed multiple kernel collaborative representation for hyperspectral image classification. IEEE Access 2017, 5, 20921–20933.

- Sun, L.; Ma, C.; Chen, Y.; Shim, H.J.; Wu, Z.; Jeon, B. Adjacent superpixel-based multiscale spatial-spectral kernel for hyperspectral classification. IEEE J. Sel. Top. Appl. Earth Observ. Remote Sens. 2019, 12, 1905–1919.

- Aizerman, M.A.; Braverman, È.M.; Rozonoèr, L.I. Theoretical foundation of potential functions method in pattern recognition. Avtomat. Telemekh. 1964, 25, 917–936.

- Abdi, H.; Williams, L.J. Principal component analysis. Wiley Interdiscip. Rev. Comput. Stat. 2010, 2, 433–459.

- Nemhauser, G.L.; Wolsey, L.A.; Fisher, M.L. An analysis of approximations for maximizing submodular set functions—I. Math. Program. 1978, 14, 265–294.

- Chang, C.-C.; Lin, C.-J. LIBSVM: A Library for Support Vector Machines. ACM Trans. Intell. Syst. Technol. 2011, 2, 27.

- Hu, W.; Huang, Y.; Wei, L.; Zhang, F.; Li, H. Deep convolutional neural networks for hyperspectral image classification. J. Sens. 2015, 2015, 1–12.

- Lee, H.; Kwon, H. Going Deeper with Contextual CNN for Hyperspectral Image Classification. IEEE Trans. Image Process. 2017, 26, 4843–4855.

- Zhang, M.; Li, W.; Du, Q. Diverse Region-Based CNN for Hyperspectral Image Classification. IEEE Trans. Image Process. 2018, 27, 2623–2634.

- Meng, Z.; Li, L.; Jiao, L.; Feng, Z.; Liang, M. Fully dense multiscale fusion network for hyperspectral image classification. Remote Sens. 2019, 11, 2718.

- Xu, F.; Zhang, X.; Xin, Z.; Yang, A. Investigation on the Chinese Text Sentiment Analysis Based on Convolutional Neural Networks in Deep Learning. Comput. Mater. Contin. 2019, 58, 697–709.

- Guo, Y.; Li, C.; Liu, Q. R2N: A Novel Deep Learning Architecture for Rain Removal from Single Image. Comput. Mater. Contin. 2019, 58, 829–843.

- Wu, H.; Liu, Q.; Liu, X. A Review on Deep Learning Approaches to Image Classification and Object Segmentation. Comput. Mater. Contin. 2019, 60, 575–597.

- Zhang, X.; Lu, W.; Li, F.; Peng, X.; Zhang, R. Deep Feature Fusion Model for Sentence Semantic Matching. Comput. Mater. Contin. 2019, 61, 601–616.

- Mohanapriya, N.; Kalaavathi, B. Adaptive Image Enhancement Using Hybrid Particle Swarm Optimization and Watershed Segmentation. Intell. Autom. Soft Comput. 2019, 25, 663–672.

- Hung, C.; Mao, W.; Huang, H. Modified PSO Algorithm on Recurrent Fuzzy Neural Network for System Identification. Intell. Autom. Soft Comput. 2019, 25, 329–341.

| Class (Indian Pines) | Name | Train | Test | Class (University of Pavia) | Name | Train | Test |
|---|---|---|---|---|---|---|---|
| C01 | Alfalfa | 2 | 52 | C1 | Asphalt | 30 | 6601 |
| C02 | Corn-no till | 44 | 1390 | C2 | Meadows | 30 | 18,619 |
| C03 | Corn-min till | 26 | 808 | C3 | Gravel | 30 | 2069 |
| C04 | Corn | 8 | 226 | C4 | Trees | 30 | 3034 |
| C05 | Grass/pasture | 15 | 482 | C5 | Metal sheets | 30 | 1315 |
| C06 | Grass/trees | 23 | 724 | C6 | Bare soil | 30 | 4999 |
| C07 | Grass/pasture-mowed | 2 | 24 | C7 | Bitumen | 30 | 1300 |
| C08 | Hay-windrowed | 15 | 474 | C8 | Bricks | 30 | 3652 |
| C09 | Oats | 2 | 18 | C9 | Shadows | 30 | 917 |
| C10 | Soybeans-no till | 30 | 938 | | | | |
| C11 | Soybeans-min till | 75 | 2393 | | | | |
| C12 | Soybean-clean | 19 | 595 | | | | |
| C13 | Wheat | 7 | 205 | | | | |
| C14 | Woods | 39 | 1255 | | | | |
| C15 | Building-Grass-Trees-Drives | 12 | 368 | | | | |
| C16 | Stone-Steel-Towers | 3 | 92 | | | | |
| Total | | 322 | 10,044 | Total | | 270 | 42,506 |

| Class (Indian Pines) | SVM-RBF | SVMCK | SCMK | RMK | ASMGSSK | WASCK (proposed) | MWASCK (proposed) |
|---|---|---|---|---|---|---|---|
| C01 | 57.12 | 31.35 | 87.69 | 100 | 95.77 | 88.85 | 94.42 |
| C02 | 74.06 | 81.27 | 85.27 | 94.32 | 97.18 | 93.7 | 97.14 |
| C03 | 65.61 | 78.49 | 83.86 | 98.09 | 99.01 | 97.49 | 98.9 |
| C04 | 46.95 | 66.64 | 81.11 | 92.48 | 94.34 | 89.42 | 94.42 |
| C05 | 85.56 | 82.86 | 86.85 | 91.58 | 92.55 | 91.95 | 92.51 |
| C06 | 94.65 | 95.03 | 96.42 | 97.73 | 98.48 | 98.19 | 98.67 |
| C07 | 72.92 | 66.25 | 92.5 | 96.25 | 96.25 | 94.58 | 96.25 |
| C08 | 95.11 | 94.81 | 98.78 | 99.49 | 99.87 | 99.94 | 99.7 |
| C09 | 86.11 | 74.44 | 100 | 100 | 97.22 | 84.44 | 96.67 |
| C10 | 66.59 | 78.9 | 91.34 | 93.14 | 95.54 | 94.21 | 95.7 |
| C11 | 79.64 | 86.46 | 93.17 | 98.25 | 98.54 | 98.09 | 98.53 |
| C12 | 70.94 | 70.24 | 85.48 | 95.9 | 97.5 | 95.66 | 97.66 |
| C13 | 98.49 | 91.71 | 95.85 | 99.07 | 99.02 | 97.32 | 99.02 |
| C14 | 95.41 | 95.85 | 98.1 | 99.69 | 99.19 | 99.8 | 99.94 |
| C15 | 39.59 | 68.59 | 88.37 | 97.99 | 98.1 | 96.03 | 97.93 |
| C16 | 84.78 | 86.09 | 90.43 | 95.65 | 93.91 | 96.63 | 95.87 |
| OA (%) | 78.18 | 84.09 | 91.08 | 96.82 | 97.73 | 96.56 | 97.85 |
| OA std (%) | 0.99 | 1.52 | 1.11 | 0.48 | 0.36 | 0.47 | 0.4 |
| AA (%) | 75.84 | 78.06 | 90.95 | 96.85 | 97.03 | 94.77 | 97.09 |
| AA std (%) | 2.52 | 2.22 | 0.93 | 0.48 | 0.81 | 1.17 | 0.74 |
| Kappa | 0.7507 | 0.8187 | 0.8982 | 0.9637 | 0.9742 | 0.9608 | 0.9755 |
| Kappa std | 0.0111 | 0.0175 | 0.0127 | 0.0054 | 0.0041 | 0.0053 | 0.0046 |
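
The accuracy tables report overall accuracy (OA), average accuracy (AA), and the kappa coefficient, each followed by a standard-deviation row. For reference, the sketch below computes these three standard metrics from a single confusion matrix. The row/column convention (rows are true classes, columns are predictions) and the helper name `accuracy_metrics` are assumptions. This is not the authors' evaluation code. As in the tables, OA and AA are returned in percent and kappa as a fraction.

```python
from typing import List, Tuple


def accuracy_metrics(confusion: List[List[int]]) -> Tuple[float, float, float]:
    """Return (OA in %, AA in %, kappa) from a square confusion matrix.

    confusion[i][j] counts test samples of true class i predicted as class j;
    every class is assumed to have at least one test sample.
    """
    k = len(confusion)
    total = sum(sum(row) for row in confusion)
    row_totals = [sum(row) for row in confusion]
    col_totals = [sum(confusion[i][j] for i in range(k)) for j in range(k)]

    # OA: fraction of all test samples that are correctly classified.
    oa = sum(confusion[i][i] for i in range(k)) / total
    # AA: unweighted mean of the per-class accuracies, so small classes count fully.
    aa = sum(confusion[i][i] / row_totals[i] for i in range(k)) / k
    # Kappa: (p_o - p_e) / (1 - p_e), with p_e the agreement expected by chance.
    p_e = sum(r * c for r, c in zip(row_totals, col_totals)) / total ** 2
    kappa = (oa - p_e) / (1.0 - p_e)
    return 100.0 * oa, 100.0 * aa, kappa


if __name__ == "__main__":
    # Toy 2-class example: 10 test samples of class 0, 40 of class 1.
    oa, aa, kappa = accuracy_metrics([[9, 1], [10, 30]])
    print(f"OA = {oa:.2f}%, AA = {aa:.2f}%, kappa = {kappa:.4f}")
```

In the toy example, OA is 78% but AA is 82.5%. The small class (9 of 10 correct) is classified better than the large one (30 of 40 correct), and AA weights every class equally regardless of size. This is why classes with very few test samples, such as C01, C07, and C09 in Indian Pines, can move AA noticeably while barely affecting OA.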

| Class (University of Pavia) | SVM-RBF | SVMCK | SCMK | RMK | ASMGSSK | WASCK (proposed) | MWASCK (proposed) |
|---|---|---|---|---|---|---|---|
| C1 | 69.21 | 86.14 | 90.83 | 95.98 | 97.9 | 98.93 | 97.23 |
| C2 | 72.49 | 92.06 | 92.6 | 97.52 | 97.83 | 97.85 | 98.15 |
| C3 | 72.57 | 80.12 | 92.64 | 99.31 | 99.73 | 99.57 | 99.86 |
| C4 | 92.41 | 94.34 | 92.36 | 96.25 | 91.82 | 95.34 | 97.82 |
| C5 | 99.33 | 99.48 | 98.71 | 99.03 | 98.73 | 98.82 | 99.43 |
| C6 | 74.47 | 88.93 | 94.04 | 99.05 | 99.06 | 99.24 | 99.93 |
| C7 | 89.65 | 93.99 | 98.78 | 99.6 | 99.04 | 98.9 | 99.72 |
| C8 | 77.74 | 82.26 | 96.38 | 99.41 | 98.61 | 97.89 | 99.47 |
| C9 | 97.67 | 99.54 | 94.58 | 97.84 | 98.55 | 99.21 | 98.99 |
| OA (%) | 75.99 | 89.96 | 93.23 | 97.74 | 97.8 | 98.18 | 98.5 |
| OA std (%) | 2.16 | 1.9 | 1.89 | 0.81 | 0.77 | 0.67 | 0.48 |
| AA (%) | 82.84 | 90.76 | 94.55 | 98.22 | 97.92 | 98.42 | 98.96 |
| AA std (%) | 0.8 | 0.61 | 0.75 | 0.40 | 0.56 | 0.23 | 0.32 |
| Kappa | 0.6953 | 0.8684 | 0.9112 | 0.9701 | 0.9709 | 0.9759 | 0.9801 |
| Kappa std | 0.0239 | 0.0236 | 0.0240 | 0.0106 | 0.0101 | 0.0088 | 0.0063 |

| Samples | CNN (Indian Pines) | CD-CNN (Indian Pines) | DR-CNN (Indian Pines) | MWASCK (Indian Pines) | CNN (University of Pavia) | CD-CNN (University of Pavia) | DR-CNN (University of Pavia) | MWASCK (University of Pavia) |
|---|---|---|---|---|---|---|---|---|
| 50 | 80.43 | 84.43 | 88.87 | 98.82 | 86.39 | 92.19 | 96.91 | 99.02 |
| 100 | 84.32 | 88.27 | 94.94 | 99.19 | 88.53 | 93.55 | 98.67 | 99.48 |
| 200 | 87.01 | 94.24 | 98.54 | 99.49 | 92.27 | 96.73 | 99.56 | 99.62 |

| Metric | FDMFN (Indian Pines) | MWASCK (Indian Pines) | FDMFN (Kennedy Space Center) | MWASCK (Kennedy Space Center) |
|---|---|---|---|---|
| OA (%) | 96.72 | 98.17 | 99.66 | 99.98 |
| AA (%) | 95.06 | 97.53 | 99.41 | 99.97 |
| Kappa | 0.9626 | 0.9791 | 0.9962 | 0.9998 |

| Time cost | WASCK (Indian Pines) | MWASCK (Indian Pines) | WASCK (University of Pavia) | MWASCK (University of Pavia) |
|---|---|---|---|---|
| Superpixel segmentation | 0.09 | 0.45 | 1.15 | 6.84 |
| Kernel computation | 0.82 | 4.46 | 4.28 | 61.76 |
| SVM training | 2.05 | 2.18 | 1.06 | 0.87 |
| SVM testing | 0.11 | 0.13 | 0.75 | 0.84 |
| Total | 3.07 | 7.22 | 7.24 | 70.31 |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Zhang, Y.; Chen, Y. Multiscale Weighted Adjacent Superpixel-Based Composite Kernel for Hyperspectral Image Classification. Remote Sens. 2021, 13, 820. https://doi.org/10.3390/rs13040820