Factors Influencing the Accuracy of Shallow Snow Depth Measured Using UAV-Based Photogrammetry

Abstract

1. Introduction

2. Methodology

2.1. Study Area

2.2. Survey Instrument Specification (UAV and GNSS Receiver)

2.3. Acquisition of the Snow Depth Map

2.3.1. GCPs

2.3.2. UAV Surveying Campaigns

2.3.3. Photogrammetric Processing to Obtain the DSM

- Camera calibration: this is the process of estimating the camera’s intrinsic parameters, such as its focal length, skew, lens distortion, and image center (principal point). First, features whose real-world 3D coordinates are precisely known (such as GCPs) are identified in multiple photographs. Then, a set of equations [48] is solved to obtain the intrinsic parameters. Some of these parameters (e.g., the focal length) are provided precisely by the camera manufacturer and can be adopted directly. Because the intrinsic parameters apply to all photographs, they are used to correct the distortion of every photograph, so accurate 2D pixel coordinates are obtained for all photographs in this step.

- Structure from Motion (SfM): in this process, tie-points, which are another set of features that coexist in a series of photographs, are first identified. Then, an optimization problem whose objective function is composed of a set of collinearity equations [37,49,50] is solved to obtain the extrinsic parameters of the camera (i.e., its position and orientation for each photograph) and the 3D coordinates of the tie-points. The key to a successful implementation is the automated tie-point detection algorithm, which requires comparing all input photographs with one another [51].

- Bundle Adjustment: unlike tie-points, the GCPs have precisely known pairs of 2D image coordinates and 3D ground coordinates, and these are also included in the objective function of the optimization. Therefore, the accuracy and spatial configuration of the GCPs strongly influence the accuracy of the photogrammetric reconstruction.

- DSM construction: in this step, a triangulated irregular network (TIN) is constructed from the tie-points, and textures extracted from the photographs are applied to it to obtain the DSM. Here, the accuracy of the DSM may be estimated by a pixel-wise correlation analysis.
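The collinearity condition used in the SfM and bundle adjustment steps can be illustrated with a small pinhole-camera sketch. It assumes zero skew, a single focal length in pixels, and a two-coefficient radial (Brown) distortion model with coefficients k1 and k2; these modelling choices, the function names `project` and `reprojection_cost`, and all numeric values are hypothetical illustrations, not the internal model of any particular photogrammetry package. The projection is written in the computer-vision form p = R(P − C), which expresses the same condition as the photogrammetric collinearity equations: object point, projection centre, and image point lie on one ray. The cost sums squared image residuals over tie-points (whose 3D coordinates are unknowns) and GCPs (whose 3D coordinates stay fixed at their surveyed values). A real bundle adjustment would minimize this cost with a nonlinear least-squares solver such as Levenberg–Marquardt, which is not shown here.

```python
from typing import Dict, List, Sequence, Tuple

Vec3 = Sequence[float]
Mat3 = Sequence[Sequence[float]]


def project(point: Vec3, center: Vec3, rot: Mat3, f: float, cx: float, cy: float,
            k1: float = 0.0, k2: float = 0.0) -> Tuple[float, float]:
    """Project a 3D world point to pixel coordinates (pinhole model + radial distortion).

    rot maps world axes to camera axes; the camera looks along its +z axis.
    f, cx, cy are in pixels; k1, k2 are assumed radial distortion coefficients.
    """
    # Vector from the projection centre to the point, expressed in the camera frame.
    d = [point[i] - center[i] for i in range(3)]
    xc, yc, zc = (sum(rot[r][c] * d[c] for c in range(3)) for r in range(3))
    if zc <= 0:
        raise ValueError("point lies behind the camera")
    # Collinearity: object point, projection centre and image point lie on one ray.
    xn, yn = xc / zc, yc / zc
    # Radial lens distortion applied to the normalized image coordinates.
    r2 = xn * xn + yn * yn
    scale = 1.0 + k1 * r2 + k2 * r2 * r2
    return f * xn * scale + cx, f * yn * scale + cy


def reprojection_cost(observations: List[Tuple[str, str, float, float]],
                      points3d: Dict[str, Vec3],
                      cameras: Dict[str, Tuple[Vec3, Mat3]],
                      intrinsics: Tuple[float, float, float, float, float]) -> float:
    """Sum of squared reprojection residuals, the quantity bundle adjustment minimizes.

    observations: (camera_id, point_id, u, v) measured in the photographs.
    points3d: tie-point coordinates (unknowns to be estimated) and GCP
        coordinates (held fixed at their GNSS-surveyed values).
    cameras: camera_id -> (projection centre, rotation), i.e. extrinsic parameters.
    intrinsics: (f, cx, cy, k1, k2), shared by all photographs.
    """
    f, cx, cy, k1, k2 = intrinsics
    total = 0.0
    for cam_id, pt_id, u_obs, v_obs in observations:
        center, rot = cameras[cam_id]
        u, v = project(points3d[pt_id], center, rot, f, cx, cy, k1, k2)
        total += (u - u_obs) ** 2 + (v - v_obs) ** 2
    return total


# Hypothetical example: a nadir camera 50 m above flat ground (world Z points up).
NADIR = [[1, 0, 0], [0, -1, 0], [0, 0, -1]]
cameras = {"img1": ((0.0, 0.0, 50.0), NADIR)}
points3d = {"tie1": (5.0, 0.0, 0.0), "GCP1": (0.0, 5.0, 0.0)}
intrinsics = (3650.0, 2736.0, 1824.0, 0.0, 0.0)  # illustrative values only
observations = [("img1", "tie1", 3101.0, 1824.0), ("img1", "GCP1", 2736.0, 1459.0)]
print(reprojection_cost(observations, points3d, cameras, intrinsics))
```

In this example the observations were constructed to match the projections exactly, so the cost is zero; moving the tie-point observation by (3, 4) px raises it to 25 px². Because a GCP's 3D position is not allowed to move during the optimization, any error in its surveyed coordinates is propagated into the estimated camera poses.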
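The elevation model implied by a TIN can be illustrated by its linear interpolation rule: each grid cell takes the elevation of the plane through the three vertices of the triangle that contains the cell centre. The sketch below assumes the triangles are already available (for example, from a Delaunay triangulation of the tie-points, which is an assumption not stated in the text), ignores texturing, and uses hypothetical function names; it is not the meshing procedure of any specific software.

```python
from typing import List, Optional, Sequence, Tuple

Vertex = Tuple[float, float, float]  # (x, y, z): planimetric position and elevation


def tin_elevation(x: float, y: float, tri: Sequence[Vertex]) -> Optional[float]:
    """Linearly interpolate the elevation at (x, y) on one TIN facet.

    Uses barycentric weights; returns None if (x, y) lies outside the triangle.
    """
    (x1, y1, z1), (x2, y2, z2), (x3, y3, z3) = tri
    det = (y2 - y3) * (x1 - x3) + (x3 - x2) * (y1 - y3)
    if det == 0:
        return None  # degenerate (collinear) triangle
    w1 = ((y2 - y3) * (x - x3) + (x3 - x2) * (y - y3)) / det
    w2 = ((y3 - y1) * (x - x3) + (x1 - x3) * (y - y3)) / det
    w3 = 1.0 - w1 - w2
    if min(w1, w2, w3) < -1e-12:  # small tolerance so points on shared edges count
        return None
    return w1 * z1 + w2 * z2 + w3 * z3


def rasterize_tin(triangles: Sequence[Sequence[Vertex]], x0: float, y0: float,
                  cell: float, ncols: int, nrows: int) -> List[List[Optional[float]]]:
    """Sample a TIN at grid-cell centres; (x0, y0) is the grid's upper-left corner.

    Cells not covered by any triangle stay None (no-data). A brute-force search
    over triangles is used for clarity; production code would use a spatial index.
    """
    grid: List[List[Optional[float]]] = [[None] * ncols for _ in range(nrows)]
    for r in range(nrows):
        y = y0 - (r + 0.5) * cell  # row index increases southwards
        for c in range(ncols):
            x = x0 + (c + 0.5) * cell
            for tri in triangles:
                z = tin_elevation(x, y, tri)
                if z is not None:
                    grid[r][c] = z
                    break
    return grid


# Hypothetical example: two facets of the plane z = 10 + x + 2y covering a 10 m square.
tins = [
    [(0.0, 0.0, 10.0), (10.0, 0.0, 20.0), (0.0, 10.0, 30.0)],
    [(10.0, 0.0, 20.0), (10.0, 10.0, 40.0), (0.0, 10.0, 30.0)],
]
dsm = rasterize_tin(tins, x0=0.0, y0=10.0, cell=5.0, ncols=2, nrows=2)
print(dsm)
```

Because every facet here lies on one plane, the rasterized cells reproduce that plane exactly; on real terrain the TIN is only piecewise planar, so the DSM can be no more accurate than the density and accuracy of the tie-points allow.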

2.4. In Situ Snow Depth Measurement and Validation

3. Results

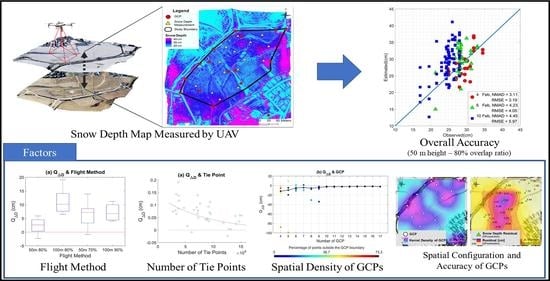

3.1. Overall Accuracy of the Snow Depth Map

3.2. Influence of the UAV Flight Altitude and the Photograph Overlap Ratio

3.3. Influence of Number of Identified Tie-Points

3.4. Influence of Spatial Density of GCPs

3.5. Influence of the Spatial Configuration and the Accuracy of the GCPs

4. Discussions

4.1. Influence of the Surveying Time

4.2. Issues with Image Saturation

5. Conclusions

Author Contributions

Funding

Acknowledgments

Conflicts of Interest

References

1. Zhao, Y.; Huang, M.; Horton, R.; Liu, F.; Peth, S.; Horn, R. Influence of Winter Grazing on Water and Heat Flow in Seasonally Frozen Soil of Inner Mongolia. Vadose Zone J. 2013, 12, 1–11.
2. Øygarden, L. Rill and Gully Development during an Extreme Winter Runoff Event in Norway. Catena 2003, 50, 217–242.
3. Su, J.; van Bochove, E.; Thériault, G.; Novotna, B.; Khaldoune, J.; Denault, J.; Zhou, J.; Nolin, M.; Hu, C.; Bernier, M. Effects of Snowmelt on Phosphorus and Sediment Losses from Agricultural Watersheds in Eastern Canada. Agric. Water Manag. 2011, 98, 867–876.
4. Hu, J.; Moore, D.J.; Burns, S.P.; Monson, R.K. Longer Growing Seasons Lead to Less Carbon Sequestration by a Subalpine Forest. Glob. Chang. Biol. 2010, 16, 771–783.
5. Friggens, M.M.; Williams, M.I.; Bagne, K.E.; Wixom, T.T.; Cushman, S.A. Effects of Climate Change on Terrestrial Animals [Chapter 9]. In Climate Change Vulnerability and Adaptation in the Intermountain Region [Part 2]; Gen.Tech.Rep.RMRS-GTR-375; Halofsky, J.E., Peterson, D.L., Ho, J.J., Little, N.J., Joyce, L.A., Eds.; US Department of Agriculture, Forest Service, Rocky Mountain Research Station: Fort Collins, CO, USA, 2018; Volume 375, pp. 264–315.
6. Nelson, M.E.; Mech, L.D. Relationship between Snow Depth and Gray Wolf Predation on White-Tailed Deer. J. Wildlife Manag. 1986, 50, 471–474.
7. Wipf, S.; Stoeckli, V.; Bebi, P. Winter Climate Change in Alpine Tundra: Plant Responses to Changes in Snow Depth and Snowmelt Timing. Clim. Chang. 2009, 94, 105–121.
8. Dickinson, W.; Whiteley, H. A Sampling Scheme for Shallow Snowpacks. Hydrol. Sci. J. 1972, 17, 247–258.
9. Doesken, N.J.; Robinson, D.A. The challenge of snow measurements. In Historical Climate Variability and Impacts in North America; Springer International Publishing: New York, NY, USA, 2009; pp. 251–273.
10. Gascoin, S.; Lhermitte, S.; Kinnard, C.; Bortels, K.; Liston, G.E. Wind Effects on Snow Cover in Pascua-Lama, Dry Andes of Chile. Adv. Water Resour. 2013, 55, 25–39.
11. Peck, E.L. Snow Measurement Predicament. Water Resour. Res. 1972, 8, 244–248.
12. Hall, D.; Sturm, M.; Benson, C.; Chang, A.; Foster, J.; Garbeil, H.; Chacho, E. Passive Microwave Remote and in Situ Measurements of Arctic and Subarctic Snow Covers in Alaska. Remote Sens. Environ. 1991, 38, 161–172.
13. Erxleben, J.; Elder, K.; Davis, R. Comparison of Spatial Interpolation Methods for Estimating Snow Distribution in the Colorado Rocky Mountains. Hydrol. Process. 2002, 16, 3627–3649.
14. Tarboton, D.; Blöschl, G.; Cooley, K.; Kirnbauer, R.; Luce, C. Spatial Snow Cover Processes at Kühtai and Reynolds Creek. Spat. Patterns Catchment Hydrol. Obs. Model. 2000, 158–186.
15. Dietz, A.J.; Kuenzer, C.; Gessner, U.; Dech, S. Remote Sensing of snow—A Review of Available Methods. Int. J. Remote Sens. 2012, 33, 4094–4134.
16. Chang, A.; Rango, A. Algorithm Theoretical Basis Document (ATBD) for the AMSR-E Snow Water Equivalent Algorithm. In NASA/GSFC; November 2000. Available online: https://eospso.gsfc.nasa.gov/sites/default/files/atbd/atbd-lis-01.pdf (accessed on 24 August 2020).
17. Kelly, R.E.; Chang, A.T.; Tsang, L.; Foster, J.L. A Prototype AMSR-E Global Snow Area and Snow Depth Algorithm. IEEE Trans. Geosci. Remote Sens. 2003, 41, 230–242.
18. Kelly, R. The AMSR-E Snow Depth Algorithm: Description and Initial Results. J. Remote Sens. Soc. Jpn. 2009, 29, 307–317.
19. Lucas, R.M.; Harrison, A. Snow Observation by Satellite: A Review. Remote Sens. Rev. 1990, 4, 285–348.
20. Rosenthal, W.; Dozier, J. Automated Mapping of Montane Snow Cover at Subpixel Resolution from the Landsat Thematic Mapper. Water Resour. Res. 1996, 32, 115–130.
21. Hall, D.K.; Riggs, G.A.; Salomonson, V.V. Development of Methods for Mapping Global Snow Cover using Moderate Resolution Imaging Spectroradiometer Data. Remote Sens. Environ. 1995, 54, 127–140.
22. Hall, D.K.; Riggs, G.A.; Salomonson, V.V.; DiGirolamo, N.E.; Bayr, K.J. MODIS Snow-Cover Products. Remote Sens. Environ. 2002, 83, 181–194.
23. Maxson, R.; Allen, M.; Szeliga, T. Theta-Image Classification by Comparison of Angles Created between Multi-Channel Vectors and an Empirically Selected Reference Vector. 1996 North American Airborne and Satellite Snow Data CD-ROM. 1996. Available online: https://www.nohrsc.noaa.gov/technology/papers/theta/theta.html (accessed on 24 August 2020).
24. Pepe, M.; Brivio, P.; Rampini, A.; Nodari, F.R.; Boschetti, M. Snow Cover Monitoring in Alpine Regions using ENVISAT Optical Data. Int. J. Remote Sens. 2005, 26, 4661–4667.
25. Foster, J.L.; Hall, D.K.; Eylander, J.B.; Riggs, G.A.; Nghiem, S.V.; Tedesco, M.; Kim, E.; Montesano, P.M.; Kelly, R.E.; Casey, K.A. A Blended Global Snow Product using Visible, Passive Microwave and Scatterometer Satellite Data. Int. J. Remote Sens. 2011, 32, 1371–1395.
26. König, M.; Winther, J.; Isaksson, E. Measuring Snow and Glacier Ice Properties from Satellite. Rev. Geophys. 2001, 39, 1–27.
27. Romanov, P.; Gutman, G.; Csiszar, I. Automated Monitoring of Snow Cover Over North America with Multispectral Satellite Data. J. Appl. Meteorol. 2000, 39, 1866–1880.
28. Simic, A.; Fernandes, R.; Brown, R.; Romanov, P.; Park, W. Validation of VEGETATION, MODIS, and GOES SSM/I snow-cover Products Over Canada Based on Surface Snow Depth Observations. Hydrol. Process. 2004, 18, 1089–1104.
29. McKay, G. Problems of Measuring and Evaluating Snow Cover. In Proceedings of the Workshop Seminar of Snow Hydrology, Ottawa, ON, Canada, 28–29 February 1968; pp. 49–63.
30. Deems, J.S.; Painter, T.H.; Finnegan, D.C. Lidar Measurement of Snow Depth: A Review. J. Glaciol. 2013, 59, 467–479.
31. Jacobs, J.M.; Hunsaker, A.G.; Sullivan, F.B.; Palace, M.; Burakowski, E.A.; Herrick, C.; Cho, E. Shallow Snow Depth Mapping with Unmanned Aerial Systems Lidar Observations: A Case Study in Durham, New Hampshire, United States. Cryosphere Discuss. 2020, 1–20.
32. Remondino, F.; Barazzetti, L.; Nex, F.; Scaioni, M.; Sarazzi, D. UAV Photogrammetry for Mapping and 3d modeling–current Status and Future Perspectives. Int. Arch. Photogramm. 2011, 38, C22.
33. Uysal, M.; Toprak, A.S.; Polat, N. DEM Generation with UAV Photogrammetry and Accuracy Analysis in Sahitler Hill. Measurement 2015, 73, 539–543.
34. Bühler, Y.; Marty, M.; Egli, L.; Veitinger, J.; Jonas, T.; Thee, P.; Ginzler, C. Snow Depth Mapping in High-Alpine Catchments using Digital Photogrammetry. Cryosphere 2015, 9, 229–243.
35. Nolan, M.; Larsen, C.; Sturm, M. Mapping Snow-Depth from Manned-Aircraft on Landscape Scales at Centimeter Resolution using Structure-from-Motion Photogrammetry. Cryosphere Discuss. 2015, 9, 1445–1463.
36. Redpath, T.A.; Sirguey, P.; Cullen, N.J. Mapping Snow Depth at very High Spatial Resolution with RPAS Photogrammetry. Cryosphere 2018, 12, 3477–3497.
37. Vander Jagt, B.; Lucieer, A.; Wallace, L.; Turner, D.; Durand, M. Snow Depth Retrieval with UAS using Photogrammetric Techniques. Geosciences 2015, 5, 264–285.
38. De Michele, C.; Avanzi, F.; Passoni, D.; Barzaghi, R.; Pinto, L.; Dosso, P.; Ghezzi, A.; Gianatti, R.; Della Vedova, G. Using a fixed-wing UAS to map snow depth distribution: An evaluation at peak accumulation. Cryosphere 2016, 10, 511–522.
39. Marti, R.; Gascoin, S.; Berthier, E.; De Pinel, M.; Houet, T.; Laffly, D. Mapping snow depth in open alpine terrain from stereo satellite imagery. Cryosphere 2016, 10, 1361–1380.
40. Lendzioch, T.; Langhammer, J.; Jenicek, M. Tracking Forest and Open Area Effects on Snow Accumulation by Unmanned Aerial Vehicle Photogrammetry. Int. Arch. Photogramm. Sci. 2016, 41, 917.
41. Harder, P.; Schirmer, M.; Pomeroy, J.; Helgason, W. Accuracy of Snow Depth Estimation in Mountain and Prairie Environments by an Unmanned Aerial Vehicle. Cryosphere 2016, 10, 2559–2571.
42. Bühler, Y.; Adams, M.S.; Bösch, R.; Stoffel, A. Mapping Snow Depth in Alpine Terrain with Unmanned Aerial Systems (UASs): Potential and Limitations. Cryosphere 2016, 10, 1075–1088.
43. Miziński, B.; Niedzielski, T. Fully-Automated Estimation of Snow Depth in Near Real Time with the use of Unmanned Aerial Vehicles without Utilizing Ground Control Points. Cold Reg. Sci. Technol. 2017, 138, 63–72.
44. Goetz, J.; Brenning, A. Quantifying Uncertainties in Snow Depth Mapping from Structure from Motion Photogrammetry in an Alpine Area. Water Resour. Res. 2019, 55, 7772–7783.
45. DJI. Available online: https://www.dji.com/phantom-4-pro-v2?site=brandsite&from=nav (accessed on 24 August 2020).
46. Trimble. Available online: https://geospatial.trimble.com/products-and-solutions/r10 (accessed on 24 August 2020).
47. Bentley Systems, I. ContextCapture: Quick Start Guide. 2017. Available online: https://www.bentley.com/-/media/724EEE70E12747C69BB4EEFC1F1CD517.ashx (accessed on 24 August 2020).
48. Heikkila, J.; Silvén, O. A Four-Step Camera Calibration Procedure with Implicit Image Correction. In Proceedings of the IEEE Computer Society Conference on Computer Vision and Pattern Recognition, San Juan, PR, USA, 17–19 June 1997; pp. 1106–1112.
49. Duane, C.B. Close-Range Camera Calibration. Photogramm. Eng. 1971, 37, 855–866.
50. Triggs, B.; McLauchlan, P.F.; Hartley, R.I.; Fitzgibbon, A.W. Bundle adjustment—a Modern Synthesis. In Proceedings of the International Workshop on Vision Algorithms, Corfu, Greece, 20–25 September 1999; pp. 298–372.
51. Rumpler, M.; Daftry, S.; Tscharf, A.; Prettenthaler, R.; Hoppe, C.; Mayer, G.; Bischof, H. Automated End-to-End Workflow for Precise and Geo-Accurate Reconstructions using Fiducial Markers. ISPRS Ann. Photogramm. Remote Sens. Spat. Inf. Sci. 2014, 3, 135–142.
52. Lee, G.; Kim, D.; Kwon, H.; Choi, E. Estimation of Maximum Daily Fresh Snow Accumulation using an Artificial Neural Network Model. Adv. Meteorol. 2019, 2019, 1–11.
53. Kim, J.; Lee, J.; Kim, D.; Kang, B. The Role of Rainfall Spatial Variability in Estimating Areal Reduction Factors. J. Hydrol. 2019, 568, 416–426.
54. Höhle, J.; Höhle, M. Accuracy Assessment of Digital Elevation Models by Means of Robust Statistical Methods. ISPRS J. Photogramm. 2009, 64, 398–406.
55. Sanz-Ablanedo, E.; Chandler, J.H.; Rodríguez-Pérez, J.R.; Ordóñez, C. Accuracy of Unmanned Aerial Vehicle (UAV) and SfM Photogrammetry Survey as a Function of the Number and Location of Ground Control Points used. Remote Sens. 2018, 10, 1606.
56. Deschamps-Berger, C.; Gascoin, S.; Berthier, E.; Deems, J.; Gutmann, E.; Dehecq, A.; Shean, D.; Dumont, M. Snow depth mapping from stereo satellite imagery in mountainous terrain: Evaluation using airborne laser-scanning data. Cryosphere 2020, 14, 2925–2940.
57. Hirschmuller, H. Accurate and Efficient Stereo Processing by Semi-Global Matching and Mutual Information. In Proceedings of the 2005 IEEE Computer Society Conference on Computer Vision and Pattern Recognition (CVPR’05), San Diego, CA, USA, 20–25 June 2005; pp. 807–814.

| Author(s) | Area (km²) | UAV / Camera | Use of RTK | Flight Method (Altitude, Overlap) | GSD (cm) | GCPs | Average Depth | MP | Result (RMSE) |
|---|---|---|---|---|---|---|---|---|---|
| Vander Jagt et al. [37] | 0.007 | SkyJiB2 | - | ~29 m, 85–90% | 0.6 | NaN / 20 | 0.6 cm | 20 | 18.4 cm / 9.6 cm |
| De Michele et al. [38] | 0.03 | Swinglet CAM | - | 130 m, 80% | 4.5 | 13 | 180 cm | 12 | 14.3 cm |
| Marti et al. [39] | 3.1 | eBee | RTK | 150 m, 70% | 10–40 | NaN | 0–320 cm | 343 | NMAD 38 cm |
| Lendzioch et al. [40] | 0.26 / 0.005 | Phantom 2 | - | 35 m, 60–80% | ~1 | 10 | 27 cm / 67 cm | 10 | 22 cm / 42 cm |
| Harder et al. [41] | 0.65 / 0.32 | eBee | RTK | 90 m, 70% / 90 m, 85% | 3 | 10 / 11 | 0–5 m | 34 / 83 | 8–12 cm / 8–9 cm |
| Bühler et al. [42] | 0.29 | Falcon 8, Sony NEX-7 | - | 157 m, 70% | 3.9 | 9 | 0–3 m | 22 | 15 cm |
| Miziński and Niedzielski [43] | 0.005 | eBee | - | 151 m | 5.3 | NaN | 42 cm | 42 | 41 cm |
| Goetz and Brenning [44] | 0.05 | Phantom 4 | - | 65 m, 75% | 2.1 | 19 | 0–3 m | 80 | 15 cm |

| Date | Flight Method | Starting Time (GMT+9): 9 a.m. Slot | 11 a.m. Slot | 1 p.m. Slot | 3 p.m. Slot |
|---|---|---|---|---|---|
| 1/29 | 50 m, 80% | 09:42 | - | - | - |
| | 100 m, 80% | 10:00 | - | - | - |
| | 50 m, 70% | - | - | - | - |
| | 100 m, 90% | - | - | - | - |
| 2/1 | 50 m, 80% | 08:16 | - | - | - |
| | 100 m, 80% | 08:33 | - | - | - |
| | 50 m, 70% | 08:43 | - | - | - |
| | 100 m, 90% | - | - | - | - |
| 2/4 | 50 m, 80% | 09:10 | 11:16 | - | - |
| | 100 m, 80% | 09:27 | 11:32 | - | - |
| | 50 m, 70% | 09:37 | 11:42 | - | - |
| | 100 m, 90% | 09:48 | 11:54 | - | - |
| 2/6 | 50 m, 80% | - | - | 13:36 | 15:32 |
| | 100 m, 80% | - | - | 13:53 | 15:53 |
| | 50 m, 70% | - | - | 14:03 | 16:02 |
| | 100 m, 90% | - | - | 14:13 | 16:13 |
| 2/10 | 50 m, 80% | 09:26 | 11:46 | 13:31 | 14:48 |
| | 100 m, 80% | 09:43 | 12:03 | 13:48 | 15:05 |
| | 50 m, 70% | 09:52 | 12:12 | 14:01 | 15:14 |
| | 100 m, 90% | 10:03 | 12:23 | 14:11 | 15:25 |
| 2/21 (Snow-free) | 50 m, 80% | 10:49 | - | - | - |

| Flight Method | GSDh (cm/px) | GSDv (cm/px) | Flight Duration | Number of Photos | Processing Time | Model Size |
|---|---|---|---|---|---|---|
| 50 m, 80% | 1.62 | 1.37 | 14 min 16 s | 338 | 1 h 58 min | 189 MB |
| 100 m, 80% | 3.24 | 2.74 | 5 min 58 s | 98 | 33 min | 70 MB |
| 50 m, 70% | 1.62 | 1.37 | 8 min 44 s | 180 | 1 h | 162 MB |
| 100 m, 90% | 3.24 | 2.74 | 11 min 54 s | 260 | 1 h 18 min | 86 MB |

| Date | Proxy for Uncertainties | 9 a.m. (cm) | 11 a.m. (cm) | 1 p.m. (cm) | 3 p.m. (cm) |
|---|---|---|---|---|---|
| 4 February 2020 (15 points, 25–35 cm) | NMAD RMSE | 1.78 −2.30 2.85 −1.19 2.68 | 3.1 10.30 3.49 −0.80 3.51 | - | - |
| 6 February 2020 (18 points, 21–31 cm) | NMAD RMSE | - | - | 4.52 0.40 4.17 0.80 4.21 | 2.97 2.85 3.93 2.63 3.00 |
| 10 February 2020 (21 points, 17–28 cm) | NMAD RMSE | 3.41 5.60 6.95 5.80 3.92 | 4.89 2.40 5.35 2.59 4.80 | 3.26 3.40 4.24 2.99 3.08 | 4.45 5.90 6.90 5.62 4.09 |
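The uncertainty proxies named in the table, NMAD and RMSE, can be computed from paired UAV-derived and in situ snow depths as sketched below. The sketch assumes that snow depth at a point is the snow-covered DSM elevation minus the snow-free DSM elevation (as the snow-free flight on 21 February suggests), and it uses the NMAD definition of Höhle and Höhle [54], NMAD = 1.4826 × median(|Δh − median(Δh)|), where Δh is the signed error. The function names and sample values are hypothetical, not the study's data.

```python
import math
from statistics import median
from typing import Dict, Sequence


def snow_depth(dsm_snow: float, dsm_snow_free: float) -> float:
    """Snow depth at one location: snow-covered minus snow-free surface elevation."""
    return dsm_snow - dsm_snow_free


def error_stats(estimated: Sequence[float], observed: Sequence[float]) -> Dict[str, float]:
    """Accuracy measures of UAV-derived snow depths against in situ measurements."""
    if len(estimated) != len(observed) or len(estimated) == 0:
        raise ValueError("need two non-empty sequences of equal length")
    dh = [e - o for e, o in zip(estimated, observed)]  # signed errors
    n = len(dh)
    med = median(dh)
    return {
        "mean_error": sum(dh) / n,
        "rmse": math.sqrt(sum(d * d for d in dh) / n),
        # NMAD (Höhle and Höhle [54]): 1.4826 x median absolute deviation,
        # a robust estimate of the standard deviation that down-weights blunders.
        "nmad": 1.4826 * median([abs(d - med) for d in dh]),
    }


# Hypothetical values in cm (not the study's data).
est = [30.0, 28.0, 33.0, 25.0, 31.0]
obs = [29.0, 30.0, 31.0, 27.0, 30.0]
stats = error_stats(est, obs)
print(stats)
```

Reporting NMAD alongside RMSE is useful because a single large error can dominate the RMSE (every error is squared), whereas the median-based NMAD changes much less.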

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Lee, S.; Park, J.; Choi, E.; Kim, D. Factors Influencing the Accuracy of Shallow Snow Depth Measured Using UAV-Based Photogrammetry. Remote Sens. 2021, 13, 828. https://doi.org/10.3390/rs13040828

Lee S, Park J, Choi E, Kim D. Factors Influencing the Accuracy of Shallow Snow Depth Measured Using UAV-Based Photogrammetry. Remote Sensing. 2021; 13(4):828. https://doi.org/10.3390/rs13040828

Chicago/Turabian Style: Lee, Sangku, Jeongha Park, Eunsoo Choi, and Dongkyun Kim. 2021. "Factors Influencing the Accuracy of Shallow Snow Depth Measured Using UAV-Based Photogrammetry" Remote Sensing 13, no. 4: 828. https://doi.org/10.3390/rs13040828