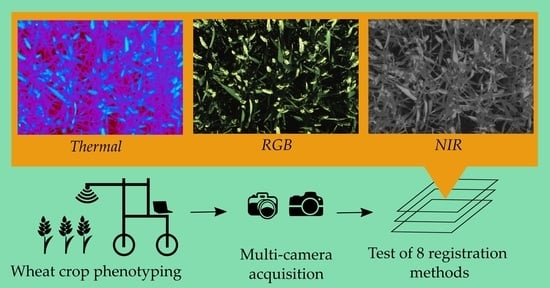

Registration and Fusion of Close-Range Multimodal Wheat Images in Field Conditions

Abstract

1. Introduction

2. Materials and Methods

2.1. Cameras Set-Up

2.2. In-Field Image Acquisition

2.3. Calibration-Based Registration Method

2.4. Image-Based Registration Methods

2.5. Validation of the Registration Methods

- The percentage of plausible alignments. This indicator assessed the number of images that appeared visually aligned. It was computed by a human operator who examined the registered images in a viewer, each beside its master image, one by one. Bad automatic registrations were characterized by aberrant global transformations that were easy to identify (Figure 6). For the local transformation, alignments were considered aberrant when deformed black borders appeared in the frame or when objects were warped illogically, such as leaves curving in complete spirals. This indicator was computed for all the acquired images, i.e., a total of 3968 images for each camera.

- The average distance between control points in aligned slave and master images (control points error) [23]. The control points were visually selected on the leaves and ears by a human operator. The points had to be placed on recognizable pixels. Care was taken to select them in all image regions, at all canopy layers and at different positions on the leaves (edges, center, tip, etc.). It was assumed that registration performance might differ depending on the scene content (only leaves or leaves + ears). Thus, two sets of validation images were created. The vegetative set consisted of 12 images from both trials acquired at the six dates before ear emergence. The ears set consisted of 12 images from both trials acquired at the 12 dates after ear emergence. Ten control points were selected for each image. Firstly, this indicator was computed only for the 900 nm images, as their intensity content was close enough to that of the 800 nm master image to allow human selection of control points. Additionally, the other types of images would not have allowed errors to be quantified for all the registration methods, because some of those methods generated aberrant alignments. Secondly, the control points error indicator was also computed for the RGB images, but only for the ECC and B-SPLINE methods. Those methods were chosen because they were the two best methods for the 900 nm images and because they provided plausible alignments for all of the RGB images of the two validation sets.

- The overlap between the plant masks in the registered slave images and the master image. Contrary to the two other indicators, this indicator could be computed automatically. However, it required isolating plants from the background in both the slave and master images. A comparable segmentation could only be obtained for the 900 nm slave and the 800 nm master. The segmentation algorithm relied on a threshold at the first local minimum in the intensity range 20–60 of the image histogram. Then, the plant masks were compared to compute the percentage of plant pixels in the aligned slave image that were not plant pixels in the master image (plant mask error). That plant mask error indicator was computed for all acquired images. For the presentation of the results, averaged scores are given for the two sets of images acquired before and after ear emergence.
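The two quantitative indicators above can be sketched in a few lines. This is a minimal illustration under stated assumptions, not the authors' implementation: the function names, the `mm_per_pixel` scale factor and the nested-list representation of masks are hypothetical, and the threshold search simply returns the first histogram bin in the 20–60 range that is lower than its left neighbour and no higher than its right neighbour.

```python
from math import hypot


def control_points_error(slave_pts, master_pts, mm_per_pixel=1.0):
    """Mean Euclidean distance between paired control points.

    `mm_per_pixel` is an assumed scale factor used to express the result in mm.
    """
    if len(slave_pts) != len(master_pts) or not slave_pts:
        raise ValueError("need two non-empty point lists of equal length")
    dists = [hypot(xs - xm, ys - ym)
             for (xs, ys), (xm, ym) in zip(slave_pts, master_pts)]
    return mm_per_pixel * sum(dists) / len(dists)


def first_local_minimum_threshold(histogram, lo=20, hi=60):
    """First intensity in [lo, hi] whose count is a local minimum, else None."""
    for i in range(max(lo, 1), min(hi, len(histogram) - 2) + 1):
        if histogram[i] < histogram[i - 1] and histogram[i] <= histogram[i + 1]:
            return i
    return None


def plant_mask_error(slave_mask, master_mask):
    """Percentage of plant pixels in the slave mask that are background in the master mask."""
    plant = 0
    mismatched = 0
    for slave_row, master_row in zip(slave_mask, master_mask):
        for s, m in zip(slave_row, master_row):
            if s:
                plant += 1
                if not m:
                    mismatched += 1
    return 100.0 * mismatched / plant if plant else 0.0
```

For example, `plant_mask_error([[1, 1], [0, 1]], [[1, 0], [0, 1]])` counts three plant pixels in the slave mask, one of which is background in the master mask, giving an error of about 33%.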

3. Results

3.1. Plausible Alignments

3.2. Registration Accuracy and Computation Time

3.3. Parametrization of the B-SPLINE Method

3.4. Plant Mask Erosion

3.5. Suggested Registration—Fusion Strategies

4. Discussion

4.1. Considerations on the Matching Step

4.2. Nature of Distortion and Choice of the Transformation Model

- Differences of optical distortion between the images. The two types of optical distortion are radial and tangential distortions. Radial distortion is due to the spherical shape of the lenses. Tangential distortion is due to misalignment between the lens and the image plane. If the images are acquired by two different cameras with different optical distortions, this causes a distortion between the images.

- Differences of perspective. Those differences appear if the cameras that acquired the two images are at different distances from the scene. For cameras at the same distance, images present the same perspective, whatever the lens. However, two cameras with different fields of view (determined by focal length and sensor size) must be placed at different distances to capture the same scene. For this reason, differences of fields of view are intuitively perceived as responsible for differences in perspective distortion.

- Differences of point of view. The cameras that acquire the slave and the master image are not at the same position. This results in two different effects. Firstly, due to the relief of the scene, some elements may be observed in one image and not in the other. This is called the occlusion effect. Secondly, the relative position of the objects becomes distance-dependent (that property is especially exploited for stereovision). This is referred to as the parallax effect. It grows as the distance between the cameras increases relative to the distance between the cameras and the objects of interest.

- Scene motion. If the acquisition of the images is not perfectly synchronous, a relative displacement of scene objects with respect to the sensors causes distortion between the images. Objects such as wheat leaves are liable to be moved by the wind.

- Differences of scene illumination.

- Differential impact of heat waves (some images may be blurry).
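The parallax effect listed above can be made concrete with the standard pinhole stereo relation, in which the shift of an object between two parallel cameras is proportional to the baseline divided by the object distance. The sketch below is illustrative only: the function names and the numeric values (focal length in pixels, baseline, depths) are assumptions, not the set-up used in this study.

```python
def disparity_px(focal_px, baseline_m, depth_m):
    """Shift (in pixels) of a point between two parallel pinhole cameras."""
    if depth_m <= 0:
        raise ValueError("depth must be positive")
    return focal_px * baseline_m / depth_m


def parallax_spread_px(focal_px, baseline_m, near_m, far_m):
    """Difference in shift between the nearest and farthest objects of a scene.

    A single global transformation cannot cancel this spread, which is why
    scenes with strong relief are harder to register.
    """
    return (disparity_px(focal_px, baseline_m, near_m)
            - disparity_px(focal_px, baseline_m, far_m))


# Hypothetical values: 1000 px focal length, 5 cm baseline,
# canopy top at 1 m and soil at 2 m from the cameras.
spread = parallax_spread_px(1000.0, 0.05, 1.0, 2.0)
```

With these assumed values the canopy top shifts by about 50 px and the soil by about 25 px, so roughly 25 px of misalignment remains whichever depth the global transformation is tuned for. Halving the baseline or doubling both distances halves that residual.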

4.3. Critical Look on the Validation Methods

- To visually assess the success of registration (aligned slave and master images look similar) [23].

- To verify that the values of the transformation parameters fall in the range of plausible values [31]. This method is comparable to the previous one but has the advantage of being automatic.

- To test the algorithm on a target of known pattern [9].

- To manually select control points and assess the distances between their positions in aligned slave and master images [23].
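The automatic plausibility check mentioned in the list above could look like the following sketch for a 3 × 3 homography. It is a hedged illustration, not the procedure of [31]: the function name and all thresholds (maximum shift, scale range, maximum rotation, maximum perspective terms) are hypothetical and would have to be tuned for a given camera set-up.

```python
from math import atan2, degrees, hypot


def is_plausible_homography(H, max_shift_px=100.0, scale_range=(0.8, 1.25),
                            max_rotation_deg=10.0, max_perspective=1e-3):
    """Return True if the parameters of homography H stay within assumed bounds.

    H is a 3x3 nested list. All default bounds are illustrative assumptions.
    """
    if H[2][2] == 0:
        return False
    # Normalise so that the bottom-right element equals 1.
    h = [[value / H[2][2] for value in row] for row in H]
    scale = hypot(h[0][0], h[1][0])            # approximate isotropic scale
    rotation = degrees(atan2(h[1][0], h[0][0]))  # in-plane rotation
    shift = hypot(h[0][2], h[1][2])            # translation magnitude
    perspective = max(abs(h[2][0]), abs(h[2][1]))
    return (scale_range[0] <= scale <= scale_range[1]
            and abs(rotation) <= max_rotation_deg
            and shift <= max_shift_px
            and perspective <= max_perspective)
```

Under these assumed bounds the identity matrix passes, while a 500-pixel translation or a 90° rotation is flagged as aberrant without any human inspection.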

4.4. Visualization of Successful Image Registrations

4.5. Extending the Tests to Other Registration Methods

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

1. Kirchgessner, N.; Liebisch, F.; Yu, K.; Pfeifer, J.; Friedli, M.; Hund, A.; Walter, A. The ETH field phenotyping platform FIP: A cable-suspended multi-sensor system. Funct. Plant Biol. 2017, 44, 154–168.

2. Shafiekhani, A.; Kadam, S.; Fritschi, F.; DeSouza, G. Vinobot and Vinoculer: Two Robotic Platforms for High-Throughput Field Phenotyping. Sensors 2017, 17, 214.

3. Virlet, N.; Sabermanesh, K.; Sadeghi-Tehran, P.; Hawkesford, M.J. Field Scanalyzer: An automated robotic field phenotyping platform for detailed crop monitoring. Funct. Plant Biol. 2017, 44, 143–153.

4. Jiang, Y.; Li, C.; Robertson, J.S.; Sun, S.; Xu, R.; Paterson, A.H. GPhenoVision: A ground mobile system with multi-modal imaging for field-based high throughput phenotyping of cotton. Sci. Rep. 2018, 8, 1–15.

5. Bai, G.; Ge, Y.; Scoby, D.; Leavitt, B.; Stoerger, V.; Kirchgessner, N.; Irmak, S.; Graef, G.; Schnable, J.; Awada, T. NU-Spidercam: A large-scale, cable-driven, integrated sensing and robotic system for advanced phenotyping, remote sensing, and agronomic research. Comput. Electron. Agric. 2019, 160, 71–81.

6. Beauchêne, K.; Leroy, F.; Fournier, A.; Huet, C.; Bonnefoy, M.; Lorgeou, J.; de Solan, B.; Piquemal, B.; Thomas, S.; Cohan, J.-P. Management and Characterization of Abiotic Stress via PhénoField®, a High-Throughput Field Phenotyping Platform. Front. Plant Sci. 2019, 10, 1–17.

7. Pérez-Ruiz, M.; Prior, A.; Martinez-Guanter, J.; Apolo-Apolo, O.E.; Andrade-Sanchez, P.; Egea, G. Development and evaluation of a self-propelled electric platform for high-throughput field phenotyping in wheat breeding trials. Comput. Electron. Agric. 2020, 169, 105237.

8. Leinonen, I.; Jones, H.G. Combining thermal and visible imagery for estimating canopy temperature and identifying plant stress. J. Exp. Bot. 2004, 55, 1423–1431.

9. Jerbi, T.; Wuyts, N.; Cane, M.A.; Faux, P.-F.; Draye, X. High resolution imaging of maize (Zea mays) leaf temperature in the field: The key role of the regions of interest. Funct. Plant Biol. 2015, 42, 858.

10. Huang, P.; Luo, X.; Jin, J.; Wang, L.; Zhang, L.; Liu, J.; Zhang, Z. Improving high-throughput phenotyping using fusion of close-range hyperspectral camera and low-cost depth sensor. Sensors 2018, 18, 2711.

11. Khanna, R.; Schmid, L.; Walter, A.; Nieto, J.; Siegwart, R.; Liebisch, F. A spatio temporal spectral framework for plant stress phenotyping. Plant Methods 2019, 15, 1–18.

12. Roitsch, T.; Cabrera-Bosquet, L.; Fournier, A.; Ghamkhar, K.; Jiménez-Berni, J.; Pinto, F.; Ober, E.S. Review: New sensors and data-driven approaches—A path to next generation phenomics. Plant Sci. 2019, 282, 2–10.

13. Mishra, P.; Asaari, M.S.M.; Herrero-Langreo, A.; Lohumi, S.; Diezma, B.; Scheunders, P. Close range hyperspectral imaging of plants: A review. Biosyst. Eng. 2017, 164, 49–67.

14. Busemeyer, L.; Mentrup, D.; Möller, K.; Wunder, E.; Alheit, K.; Hahn, V.; Maurer, H.P.; Reif, J.C.; Würschum, T.; Müller, J.; et al. Breedvision—A multi-sensor platform for non-destructive field-based phenotyping in plant breeding. Sensors 2013, 13, 2830–2847.

15. Deery, D.; Jimenez-Berni, J.; Jones, H.; Sirault, X.; Furbank, R. Proximal Remote Sensing Buggies and Potential Applications for Field-Based Phenotyping. Agronomy 2014, 5, 349–379.

16. Behmann, J.; Acebron, K.; Emin, D.; Bennertz, S.; Matsubara, S.; Thomas, S.; Bohnenkamp, D.; Kuska, M.T.; Jussila, J.; Salo, H.; et al. Specim IQ: Evaluation of a new, miniaturized handheld hyperspectral camera and its application for plant phenotyping and disease detection. Sensors 2018, 18, 441.

17. Whetton, R.L.; Waine, T.W.; Mouazen, A.M. Hyperspectral measurements of yellow rust and fusarium head blight in cereal crops: Part 2: On-line field measurement. Biosyst. Eng. 2018, 167, 144–158.

18. Leemans, V.; Marlier, G.; Destain, M.-F.; Dumont, B.; Mercatoris, B. Estimation of leaf nitrogen concentration on winter wheat by multispectral imaging. In Proceedings of the Hyperspectral Imaging Sensors: Innovative Applications and Sensor Standards 2017, Anaheim, CA, USA, 12 April 2017; SPIE—International Society for Optics and Photonics: Bellingham, WA, USA, 2017.

19. Bebronne, R.; Carlier, A.; Meurs, R.; Leemans, V.; Vermeulen, P.; Dumont, B.; Mercatoris, B. In-field proximal sensing of septoria tritici blotch, stripe rust and brown rust in winter wheat by means of reflectance and textural features from multispectral imagery. Biosyst. Eng. 2020, 197, 257–269.

20. Genser, N.; Seiler, J.; Kaup, A. Camera Array for Multi-Spectral Imaging. IEEE Trans. Image Process. 2020, 29, 9234–9249.

21. Jiménez-Bello, M.A.; Ballester, C.; Castel, J.R.; Intrigliolo, D.S. Development and validation of an automatic thermal imaging process for assessing plant water status. Agric. Water Manag. 2011, 98, 1497–1504.

22. Möller, M.; Alchanatis, V.; Cohen, Y.; Meron, M.; Tsipris, J.; Naor, A.; Ostrovsky, V.; Sprintsin, M.; Cohen, S. Use of thermal and visible imagery for estimating crop water status of irrigated grapevine. J. Exp. Bot. 2007, 58, 827–838.

23. Wang, X.; Yang, W.; Wheaton, A.; Cooley, N.; Moran, B. Efficient registration of optical and IR images for automatic plant water stress assessment. Comput. Electron. Agric. 2010, 74, 230–237.

24. Zitová, B.; Flusser, J. Image registration methods: A survey. Image Vis. Comput. 2003, 21, 977–1000.

25. Rabatel, G.; Labbé, S. Registration of visible and near infrared unmanned aerial vehicle images based on Fourier-Mellin transform. Precis. Agric. 2016, 17, 564–587.

26. Klein, S.; Staring, M.; Murphy, K.; Viergever, M.A.; Pluim, J. Elastix: A Toolbox for Intensity-Based Medical Image Registration. IEEE Trans. Med. Imaging 2010, 29, 196–205.

27. Sotiras, A.; Davatzikos, C.; Paragios, N. Deformable medical image registration: A survey. IEEE Trans. Med. Imaging 2013, 32, 1153–1190.

28. De Vylder, J.; Douterloigne, K.; Vandenbussche, F.; Van Der Straeten, D.; Philips, W. A non-rigid registration method for multispectral imaging of plants. Sens. Agric. Food Qual. Saf. IV 2012, 8369, 836907.

29. Raza, S.E.A.; Sanchez, V.; Prince, G.; Clarkson, J.P.; Rajpoot, N.M. Registration of thermal and visible light images of diseased plants using silhouette extraction in the wavelet domain. Pattern Recognit. 2015, 48, 2119–2128.

30. Henke, M.; Junker, A.; Neumann, K.; Altmann, T.; Gladilin, E. Comparison of feature point detectors for multimodal image registration in plant phenotyping. PLoS ONE 2019, 14, 1–16.

31. Henke, M.; Junker, A.; Neumann, K.; Altmann, T.; Gladilin, E. Comparison and extension of three methods for automated registration of multimodal plant images. Plant Methods 2019, 15, 1–15.

32. Meier, U. Growth Stages of Mono and Dicotyledonous Plants. BBCH Monograph 2nd Edition; Federal Biological Research Centre for Agriculture and Forestry: Quedlinburg, Germany, 2001; ISBN 9783955470715.

33. Berenstein, R.; Hočevar, M.; Godeša, T.; Edan, Y.; Ben-Shahar, O. Distance-dependent multimodal image registration for agriculture tasks. Sensors 2015, 15, 20845–20862.

34. Dandrifosse, S.; Bouvry, A.; Leemans, V.; Dumont, B.; Mercatoris, B. Imaging wheat canopy through stereo vision: Overcoming the challenges of the laboratory to field transition for morphological features extraction. Front. Plant Sci. 2020, 11, 1–15.

35. Bradski, G.; Kaehler, A. Learning OpenCV; O’Reilly Media, Inc.: Newton, MA, USA, 2008; ISBN 978-1-4493-1465-1.

36. Hirschmüller, H. Stereo Processing by Semi-Global Matching and Mutual Information. IEEE Trans. Pattern Anal. Mach. Intell. 2007, 30, 328–341.

37. Xiong, Z.; Zhang, Y. A critical review of image registration methods. Int. J. Image Data Fusion 2010, 1, 137–158.

38. Lowe, D.G. Distinctive image features from scale-invariant keypoints. Int. J. Comput. Vis. 2004, 60, 91–110.

39. Bay, H.; Ess, A.; Tuytelaars, T.; Van Gool, L. Speeded-Up Robust Features (SURF). Comput. Vis. Image Underst. 2008, 110, 346–359.

40. Rublee, E.; Rabaud, V.; Konolige, K.; Bradski, G. ORB: An efficient alternative to SIFT or SURF. In Proceedings of the IEEE International Conference on Computer Vision; IEEE: Manhattan, NY, USA, 2011; pp. 2564–2571.

41. Alcantarilla, P.F.; Nuevo, J.; Bartoli, A. Fast explicit diffusion for accelerated features in nonlinear scale spaces. In Proceedings of the BMVC 2013—Electronic Proceedings of the British Machine Vision Conference 2013, Bristol, UK, 9–13 September 2013; BMVA Press: Swansea, UK, 2013.

42. Srinivasa Reddy, B.; Chatterji, B.N. An FFT-based technique for translation, rotation, and scale-invariant image registration. IEEE Trans. Image Process. 1996, 5, 1266–1271.

43. Evangelidis, G.D.; Psarakis, E.Z. Parametric image alignment using enhanced correlation coefficient maximization. IEEE Trans. Pattern Anal. Mach. Intell. 2008, 30, 1858–1865.

44. Rueckert, D.; Sonoda, L.I.; Hayes, C.; Hill, D.L.; Leach, M.O.; Hawkes, D.J. Nonrigid Registration Using Free-Form Deformations: Application to Breast MR Images. IEEE Trans. Med. Imaging 1999, 18, 712–721.

45. Studholme, C.; Hill, D.L.G.; Hawkes, D.J. An overlap invariant entropy measure of 3D medical image alignment. Pattern Recognit. 1999, 32, 71–86.

46. Keszei, A.P.; Berkels, B.; Deserno, T.M. Survey of Non-Rigid Registration Tools in Medicine. J. Digit. Imaging 2017, 30, 102–116.

47. Yang, W.P.; Wang, X.Z.; Wheaton, A.; Cooley, N.; Moran, B. Automatic Optical and IR Image Fusion for Plant Water Stress Analysis. In Proceedings of the 12th International Conference on Information Fusion, Seattle, WA, USA, 6–9 July 2009; IEEE: Manhattan, NY, USA, 2009; pp. 1053–1059.

48. Yang, W.; Wang, X.; Moran, B.; Wheaton, A.; Cooley, N. Efficient registration of optical and infrared images via modified Sobel edging for plant canopy temperature estimation. Comput. Electr. Eng. 2012, 38, 1213–1221.

49. Rohlfing, T. Image Similarity and Tissue Overlaps as Surrogates for Image Registration Accuracy: Widely Used but Unreliable. IEEE Trans. Med. Imaging 2012, 31, 153–163.

50. Feng, R.; Du, Q.; Li, X.; Shen, H. Robust registration for remote sensing images by combining and localizing feature- and area-based methods. ISPRS J. Photogramm. Remote Sens. 2019, 151, 15–26.

51. Zach, C.; Pock, T.; Bischof, H. A Duality Based Approach for Realtime TV-L1 Optical Flow. In Proceedings of the Pattern Recognition; Hamprecht, F.A., Schnörr, C., Jähne, B., Eds.; Springer: Berlin/Heidelberg, Germany, 2007; pp. 214–223.

52. Sánchez Pérez, J.; Meinhardt-Llopis, E.; Facciolo, G. TV-L1 Optical Flow Estimation. Image Process. Line 2013, 1, 137–150.

53. Nguyen, T.; Chen, S.W.; Shivakumar, S.S.; Taylor, C.J.; Kumar, V. Unsupervised Deep Homography: A Fast and Robust Homography Estimation Model. IEEE Robot. Autom. Lett. 2018, 3, 2346–2353.

54. Balakrishnan, G.; Zhao, A.; Sabuncu, M.R.; Guttag, J.; Dalca, A.V. An Unsupervised Learning Model for Deformable Medical Image Registration. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition 2018, Salt Lake City, UT, USA, 18–23 June 2018; IEEE: Manhattan, NY, USA, 2018.

| Method | Matching | Transformation | Resampling | Library | Origin |
|---|---|---|---|---|---|
| SIFT | Features-based | Homography | Bilinear | OpenCV [35] | [38] |
| SURF | Features-based | Homography | Bilinear | OpenCV | [39] |
| ORB | Features-based | Homography | Bilinear | OpenCV | [40] |
| A-KAZE | Features-based | Homography | Bilinear | OpenCV | [41] |
| DDTM | / | Homography | Bilinear | / | [33] |
| DFT | Area-based | Similarity | Bilinear | imreg_dft | [42] |
| ECC | Area-based | Homography | Bilinear | OpenCV | [43] |
| B-SPLINE | Area-based | B-spline | Bilinear | Elastix [26] | [44] |
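Most methods in the table above end with the same two steps: a homography maps pixel coordinates between the images, and the slave image is resampled with bilinear interpolation. The following dependency-free sketch shows those two steps on a nested-list image. It is an illustration under stated assumptions rather than the code used in the study: the function name is hypothetical, `H_inv` is assumed to map output (master) coordinates to input (slave) coordinates, and pixels that fall outside the source image receive a fill value. In practice a library routine would normally be used.

```python
def warp_homography_bilinear(image, H_inv, fill=0.0):
    """Resample `image` through a homography with bilinear interpolation.

    image: list of rows of grey levels.
    H_inv: 3x3 nested list mapping output pixel (x, y) to source coordinates.
    """
    height, width = len(image), len(image[0])
    out = [[fill] * width for _ in range(height)]
    for y in range(height):
        for x in range(width):
            w = H_inv[2][0] * x + H_inv[2][1] * y + H_inv[2][2]
            if w == 0:
                continue
            # Projective division gives the source position of this pixel.
            sx = (H_inv[0][0] * x + H_inv[0][1] * y + H_inv[0][2]) / w
            sy = (H_inv[1][0] * x + H_inv[1][1] * y + H_inv[1][2]) / w
            if not (0 <= sx <= width - 1 and 0 <= sy <= height - 1):
                continue  # outside the source image: keep the fill value
            x0, y0 = int(sx), int(sy)
            x1, y1 = min(x0 + 1, width - 1), min(y0 + 1, height - 1)
            ax, ay = sx - x0, sy - y0
            # Interpolate along x on the two neighbouring rows, then along y.
            top = (1 - ax) * image[y0][x0] + ax * image[y0][x1]
            bottom = (1 - ax) * image[y1][x0] + ax * image[y1][x1]
            out[y][x] = (1 - ay) * top + ay * bottom
    return out
```

With a pure half-pixel shift along x as `H_inv`, each output value is the mean of two horizontal neighbours of the source image, and the last column, which has no right-hand neighbour, keeps the fill value. Such unfilled regions correspond to the black borders that appear in registered images.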

| Method | Average Time (s) | Control Points Error (mm), Vegetative Set, 900 nm | Control Points Error (mm), Vegetative Set, RGB | Control Points Error (mm), Ears Set, 900 nm | Control Points Error (mm), Ears Set, RGB | Plant Mask Error (%), Vegetative Set, 900 nm | Plant Mask Error (%), Ears Set, 900 nm |
|---|---|---|---|---|---|---|---|
| SIFT | 4.0 | 3.7 | NA | 3.4 | NA | 9.7 | 9.7 |
| SURF | 6.2 | 3.6 | NA | 3.4 | NA | 9.5 | 9.5 |
| ORB | 1.0 | 5.5 | NA | 3.6 | NA | 10.6 | 10.3 |
| A-KAZE | 2.7 | 3.4 | NA | 3.7 | NA | 10.1 | 9.7 |
| DDTM | 2.6 | 5.2 | NA | 4.2 | NA | 11.7 | 10.5 |
| DFT | 41.3 | 3.9 | NA | 4.1 | NA | 9.7 | 9.8 |
| ECC | 21.9 | 3.2 | 3.0 | 3.0 | 3.0 | 9.8 | 9.7 |
| B-SPLINE | 176.7 | 1.9 | 2.0 | 2.0 | 1.6 | 7.0 | 6.5 |

| Strategy Name | Registration | Plant Mask Erosion | Intensity Averaging | Computation Time | Scope |
|---|---|---|---|---|---|
| REAL-TIME | DDTM | Wide | Wide window | Instantaneous | Suitable for multimodal images |
| FAST | DDTM + ECC | Medium | Medium window | Moderate | Suitable for multimodal images |
| ACCURATE | DDTM + B-SPLINE (coarse grid) | Medium | Small window | Slow | Not suitable for thermal images (if the master is NIR) |
| HIGHLY ACCURATE | DDTM + B-SPLINE (fine grid) | Tiny or none | None | Extremely slow | Limited to mono-modal images |
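The "Plant Mask Erosion" column of the table above can be read as a binary erosion whose width is matched to the expected registration error: shrinking the plant mask discards border pixels, where a residual misalignment is most likely to mix plant and background. The sketch below illustrates that reading with a square window. The function name, the square structuring element and the choice of treating pixels outside the image as background are assumptions, and the radii standing for "wide", "medium" or "tiny" are not specified here.

```python
def erode_mask(mask, radius):
    """Binary erosion of a nested-list mask with a (2*radius+1) square window.

    A pixel stays in the mask only if every pixel of its window is plant.
    Pixels outside the image are treated as background (an assumption).
    """
    height, width = len(mask), len(mask[0])
    out = [[0] * width for _ in range(height)]
    for y in range(height):
        for x in range(width):
            keep = True
            for dy in range(-radius, radius + 1):
                for dx in range(-radius, radius + 1):
                    yy, xx = y + dy, x + dx
                    inside = 0 <= yy < height and 0 <= xx < width
                    if not inside or not mask[yy][xx]:
                        keep = False
            out[y][x] = 1 if keep else 0
    return out
```

On a 5 × 5 mask filled with ones, a radius of 1 keeps only the inner 3 × 3 block, and a radius of 0 leaves the mask unchanged. A wider erosion tolerates larger registration errors at the cost of fewer usable plant pixels, which matches the trade-off between the fast and accurate strategies in the table.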

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Dandrifosse, S.; Carlier, A.; Dumont, B.; Mercatoris, B. Registration and Fusion of Close-Range Multimodal Wheat Images in Field Conditions. Remote Sens. 2021, 13, 1380. https://0-doi-org.brum.beds.ac.uk/10.3390/rs13071380
