Graph Convolutional Networks by Architecture Search for PolSAR Image Classification

Abstract

1. Introduction

2. Background

2.1. The Classification Methods of PolSAR Data

2.2. Neural Architecture Search

2.3. Graph Neural Networks

- (1) SemiGCN [9]: A spectral-based graph convolutional network. Its layer-wise propagation rule is a first-order approximation of spectral convolution on graphs.

- (2) Max-Relative GCN (MRGCN) [51]: It brings residual/dense connections and dilated convolutions to GCNs to mitigate the vanishing-gradient and over-smoothing problems, which allows the network to grow from a few layers to dozens of layers.

- (3) EdgeConv [52]: An edge convolution module proposed for constructing the dynamic graph CNN, which models the relationships between the points of a point cloud. It concatenates the feature of the center point with the feature difference between the two points of an edge, and then feeds the result into an MLP. In this way, the edge features integrate both the local relationship between the points and the global information of the points.

- (4) Graph Attention Network (GAT) [53]: GAT uses attention coefficients to aggregate the features of neighboring vertices into the central vertex. Its basic idea is to update the features of each node according to the attention weights of its adjacent nodes. GAT uses masked self-attention layers to handle inductive problems.

- (5) Graph Isomorphism Network (GIN) [54]: After each hop of aggregating the features of the adjacent nodes, this network mixes the aggregated features with the original features of the central node. In this mixing step, a learnable parameter rescales the central node's own features, and the rescaled features are added to the aggregated neighbor features.

- (6) TopKPooling [55]: A graph pooling method used in graph U-Net. Its main idea is to project the node embeddings onto a one-dimensional space and retain the K nodes with the highest projected values.
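The operators listed above can be illustrated with a minimal NumPy sketch. It is not the implementation used in this work: the function names (`gcn_layer`, `gin_aggregate`, `edgeconv_input`, `topk_pool`) and the dense adjacency representation are assumptions made for illustration, the MLPs of EdgeConv and GIN are omitted, and the TopKPooling sketch omits the gating step applied to the retained features in graph U-Net.

```python
import numpy as np

def gcn_layer(adj, feats, weight):
    """First-order spectral rule: ReLU(D^-1/2 (A + I) D^-1/2 X W)."""
    a_hat = adj + np.eye(adj.shape[0])            # add self-connections
    d_inv_sqrt = 1.0 / np.sqrt(a_hat.sum(axis=1)) # degrees of A + I
    a_norm = a_hat * d_inv_sqrt[:, None] * d_inv_sqrt[None, :]
    return np.maximum(a_norm @ feats @ weight, 0.0)

def gin_aggregate(adj, feats, eps):
    """GIN mixing step: (1 + eps) * x_v + sum of neighbor features."""
    return (1.0 + eps) * feats + adj @ feats

def edgeconv_input(feats, center, neighbor):
    """EdgeConv MLP input for one edge: [x_i, x_j - x_i]."""
    return np.concatenate([feats[center], feats[neighbor] - feats[center]])

def topk_pool(adj, feats, proj, k):
    """Project nodes to 1-D scores and keep the k highest-scoring nodes."""
    scores = feats @ proj / np.linalg.norm(proj)
    keep = np.sort(np.argsort(scores)[-k:])       # indices of retained nodes
    return adj[np.ix_(keep, keep)], feats[keep], keep

# Toy path graph 0 - 1 - 2 with two features per node (hypothetical data).
adj = np.array([[0, 1, 0], [1, 0, 1], [0, 1, 0]], dtype=float)
feats = np.array([[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]])

hidden = gcn_layer(adj, feats, np.eye(2))
mixed = gin_aggregate(adj, feats, eps=0.0)
edge_in = edgeconv_input(feats, center=0, neighbor=1)
sub_adj, sub_feats, kept = topk_pool(adj, feats, np.array([1.0, 0.5]), k=2)
```

In a search space such as the one considered here, each of these functions would correspond to one candidate operation; a practical implementation would more likely rely on sparse operators such as those of PyTorch Geometric [60].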

3. Proposed Method

3.1. Graph Construction

3.2. Weight-Based Mini-Batch for Large-Scale Graph

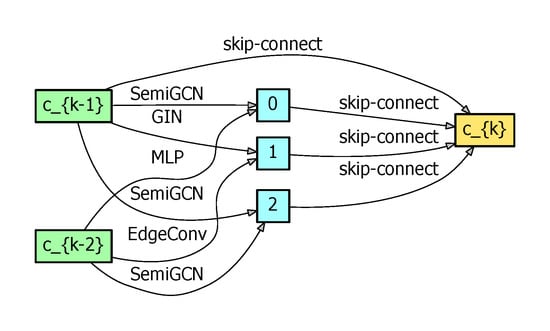

3.3. The Architecture of Our ASGCN

3.4. Comparison on Methods of Graph Partition

- (1) Fixed method: A common approach is to use the same partitioned subgraphs for training in every epoch; this is called the fixed method.

- (2) Shuffle: In each epoch, q nodes are randomly selected to constitute a subgraph for training.

- (3) ClusterGCN [58]: This method proposes a strategy called stochastic multiple partitions. It first uses the graph clustering algorithm METIS [56] to group the nodes into clusters. Then, in each epoch, it randomly samples multiple clusters and their between-cluster links to form a new batch, which addresses the unbalanced label distribution problem.
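The three batching strategies compared above can be sketched as follows. This is a simplified illustration under stated assumptions, not the code used in the experiments: all function names are hypothetical, the adjacency matrix is dense, and the METIS clustering step is replaced by a precomputed array `cluster_of` that assigns each node to a cluster.

```python
import numpy as np

def fixed_subgraphs(parts):
    """Fixed method: the same node partition is reused in every epoch."""
    return [np.asarray(p) for p in parts]

def shuffle_subgraph(num_nodes, q, rng):
    """Shuffle: draw q distinct nodes at random to form one subgraph."""
    return np.sort(rng.choice(num_nodes, size=q, replace=False))

def cluster_batch(cluster_of, n_sample, rng):
    """ClusterGCN-style batch: nodes of n_sample randomly chosen clusters."""
    cluster_ids = np.unique(cluster_of)
    chosen = rng.choice(cluster_ids, size=n_sample, replace=False)
    return np.flatnonzero(np.isin(cluster_of, chosen))

def induced_adjacency(adj, nodes):
    """Keep every edge with both endpoints in `nodes`.

    For a batch built from several clusters, this retains the
    between-cluster links as well as the within-cluster ones.
    """
    return adj[np.ix_(nodes, nodes)]

# Toy example: 6 nodes on a ring, pre-assigned to 3 clusters (hypothetical).
rng = np.random.default_rng(0)
adj = np.zeros((6, 6))
for i in range(6):
    adj[i, (i + 1) % 6] = adj[(i + 1) % 6, i] = 1.0
cluster_of = np.array([0, 0, 1, 1, 2, 2])

fixed = fixed_subgraphs([[0, 1, 2], [3, 4, 5]])
shuffled = shuffle_subgraph(num_nodes=6, q=3, rng=rng)
batch = cluster_batch(cluster_of, n_sample=2, rng=rng)
batch_adj = induced_adjacency(adj, batch)
```

The fixed partition never changes between epochs, whereas the shuffle and cluster-based variants redraw their node sets each time they are called, so a training loop would call them once per epoch.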

4. Experiments and Analysis

- SVM [24]: It was implemented with LibSVM (https://www.csie.ntu.edu.tw/~cjlin/libsvm/, accessed on 1 April 2021). The kernel function was the Radial Basis Function with and penalty coefficient for the Flevoland dataset, and and penalty coefficient for the San Francisco dataset.

- FS [22]: The number of super-pixels K was chosen from the interval [500, 3000], and the compactness of the super-pixels mpol was selected from the interval [20, 60].

- W-DBN [23]: W-DBN had two hidden layers, with 50 and 100 nodes, respectively. The threshold was chosen in the interval [0.95, 0.99]. The learning rate was set to 0.01. was set to 0, and the window size was set to 3 or 5.

- CNN [30]: The network included two convolution layers, two max-pooling layers and one fully connected layer. The sizes of the filters in two convolutional layers were 3 × 3 and 2 × 2, respectively, and the pooling size was 2 × 2. The momentum parameter was 0.9, and the weight decay rate was set to .

- DSMR [46]: The number of nearest neighbors and the regularization parameter were chosen from the intervals [10, 20] and [1 × , 1 × ], respectively. The weight decay rate was chosen in [, ].

- SemiGCN [9]: The number of hidden units was set to 32 or 64. The number of layers in the network was 3 or 4. Both normalization and self-connections were used. Learning rate and weight decay were set to and , respectively.

- ASGCN (ours): The coefficient of distance weighting was in the range [0, 1]. The number of subgraphs p was in the range [2000, 3000]. Learning rate and weight decay were and , respectively. The numbers of cells and hidden units are discussed in Section 4.1.

4.1. Architecture and Parameter Discussion

4.2. Results on the Flevoland Dataset

4.3. Results on the San Francisco Dataset

5. Conclusions

Author Contributions

Funding

Acknowledgments

Conflicts of Interest

References

- Lee, J.S.; Ainsworth, T.L. An overview of recent advances in polarimetric SAR information extraction: Algorithms and applications. In Proceedings of the IEEE International Geoscience and Remote Sensing Symposium, Honolulu, HI, USA, 25–30 July 2010; pp. 851–854.

- Cloude, S.R.; Pottier, E. An entropy based classification scheme for land applications of polarimetric SAR. IEEE Trans. Geosci. Remote Sens. 1997, 35, 68–78.

- Lee, J.S.; Pottier, E. Polarimetric Radar Imaging: From Basics to Applications; CRC Press: Boca Raton, FL, USA, 2009.

- Zhang, Z.; Wang, H.; Xu, F.; Jin, Y.Q. Complex-valued convolutional neural network and its application in polarimetric SAR image classification. IEEE Trans. Geosci. Remote Sens. 2017, 55, 7177–7188.

- McNairn, H.; Brisco, B. The application of C-band polarimetric SAR for agriculture: A review. Can. J. Remote Sens. 2004, 30, 525–542.

- Freeman, A. Fitting a two-component scattering model to polarimetric SAR data from forests. IEEE Trans. Geosci. Remote Sens. 2007, 45, 2583–2592.

- Ulaby, F.T.; Elachi, C. Radar Polarimetry for Geoscience Applications; Artech House, Inc.: Norwood, MA, USA, 1990.

- Zhou, J.; Cui, G.; Zhang, Z.; Yang, C.; Liu, Z.; Wang, L.; Li, C.; Sun, M. Graph Neural Networks: A Review of Methods and Applications. arXiv 2018, arXiv:1812.08434.

- Kipf, T.N.; Welling, M. Semi-supervised classification with graph convolutional networks. In Proceedings of the 5th International Conference on Learning Representations, Toulon, France, 24–26 April 2017.

- Zoph, B.; Le, Q.V. Neural Architecture Search with Reinforcement Learning. In Proceedings of the 5th International Conference on Learning Representations, Toulon, France, 24–26 April 2017.

- Lee, J.S.; Grunes, M.R.; Ainsworth, T.L.; Du, L.J.; Schuler, D.L.; Cloude, S.R. Unsupervised classification using polarimetric decomposition and the complex Wishart classifier. IEEE Trans. Geosci. Remote Sens. 1999, 37, 2249–2258.

- Lee, J.S.; Grunes, M.R.; Pottier, E.; Ferro-Famil, L. Unsupervised terrain classification preserving polarimetric scattering characteristics. IEEE Trans. Geosci. Remote Sens. 2004, 42, 722–731.

- Freeman, A.; Durden, S.L. A three-component scattering model for polarimetric SAR data. IEEE Trans. Geosci. Remote Sens. 1998, 36, 963–973.

- Silva, W.B.; Freitas, C.C.; Sant’Anna, S.J.; Frery, A.C. Classification of segments in PolSAR imagery by minimum stochastic distances between Wishart distributions. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2013, 6, 1263–1273.

- Du, L.; Lee, J. Fuzzy classification of earth terrain covers using complex polarimetric SAR data. Int. J. Remote Sens. 1996, 17, 809–826.

- Kersten, P.R.; Lee, J.S.; Ainsworth, T.L. Unsupervised classification of polarimetric synthetic aperture radar images using fuzzy clustering and EM clustering. IEEE Trans. Geosci. Remote Sens. 2005, 43, 519–527.

- Vasile, G.; Ovarlez, J.P.; Pascal, F.; Tison, C. Coherency Matrix Estimation of Heterogeneous Clutter in High-Resolution Polarimetric SAR Images. IEEE Geosci. Remote Sens. Lett. 2010, 48, 1809–1826.

- Pallotta, L.; Clemente, C.; De Maio, A.; Soraghan, J.J. Detecting Covariance Symmetries in Polarimetric SAR Images. IEEE Geosci. Remote Sens. Lett. 2017, 55, 80–95.

- Pallotta, L.; Maio, A.D.; Orlando, D. A Robust Framework for Covariance Classification in Heterogeneous Polarimetric SAR Images and Its Application to L-Band Data. IEEE Geosci. Remote Sens. Lett. 2019, 57, 104–119.

- Pallotta, L.; Orlando, D. Polarimetric covariance eigenvalues classification in SAR images. IEEE Geosci. Remote Sens. Lett. 2018, 16, 746–750.

- Eltoft, T.; Doulgeris, A.P. Model-Based Polarimetric Decomposition With Higher Order Statistics. IEEE Geosci. Remote Sens. Lett. 2019, 16, 992–996.

- Guo, Y.; Jiao, L.; Wang, S.; Wang, S.; Liu, F.; Hua, W. Fuzzy superpixels for polarimetric SAR images classification. IEEE Trans. Fuzzy Syst. 2018, 26, 2846–2860.

- Liu, F.; Jiao, L.; Hou, B.; Yang, S. POL-SAR Image Classification Based on Wishart DBN and Local Spatial Information. IEEE Trans. Geosci. Remote Sens. 2016, 54, 1–17.

- Fukuda, S.; Hirosawa, H. Polarimetric SAR image classification using support vector machines. IEICE Trans. Electron. 2001, 84, 1939–1945.

- Lardeux, C.; Frison, P.L.; Tison, C.; Souyris, J.C.; Stoll, B.; Fruneau, B.; Rudant, J.P. Support vector machine for multifrequency SAR polarimetric data classification. IEEE Trans. Geosci. Remote Sens. 2009, 47, 4143–4152.

- She, X.; Yang, J.; Zhang, W. The boosting algorithm with application to polarimetric SAR image classification. In Proceedings of the 1st Asian and Pacific Conference on Synthetic Aperture Radar, Huangshan, China, 5–9 November 2007; pp. 779–783.

- Zou, T.; Yang, W.; Dai, D.; Sun, H. Polarimetric SAR image classification using multifeatures combination and extremely randomized clustering forests. EURASIP J. Adv. Signal Proc. 2009, 2010, 1–9.

- He, C.; Li, S.; Liao, Z.; Liao, M. Texture classification of PolSAR data based on sparse coding of wavelet polarization textons. IEEE Trans. Geosci. Remote Sens. 2013, 51, 4576–4590.

- Salehi, M.; Sahebi, M.R.; Maghsoudi, Y. Improving the accuracy of urban land cover classification using Radarsat-2 PolSAR data. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2013, 7, 1394–1401.

- Zhou, Y.; Wang, H.; Xu, F.; Jin, Y.Q. Polarimetric SAR image classification using deep convolutional neural networks. IEEE Geosci. Remote Sens. Lett. 2016, 13, 1935–1939.

- Mohammadimanesh, F.; Salehi, B.; Mahdianpari, M.; Gill, E.; Molinier, M. A new fully convolutional neural network for semantic segmentation of polarimetric SAR imagery in complex land cover ecosystem. ISPRS J. Photo. Remote Sens. 2019, 155, 223–236.

- Bi, H.; Xu, F.; Wei, Z.; Xue, Y.; Xu, Z. An active deep learning approach for minimally supervised PolSAR image classification. IEEE Trans. Geosci. Remote Sens. 2019, 57, 9378–9395.

- Hänsch, R.; Hellwich, O. Semi-supervised learning for classification of polarimetric SAR-data. In Proceedings of the IEEE International Geoscience and Remote Sensing Symposium, Cape Town, South Africa, 12–17 July 2009; Volume 3, pp. 987–990.

- Uhlmann, S.; Kiranyaz, S.; Gabbouj, M. Semi-supervised learning for ill-posed polarimetric SAR classification. Remote Sens. 2014, 6, 4801–4830.

- Niu, X.; Ban, Y. An adaptive contextual SEM algorithm for urban land cover mapping using multitemporal high-resolution polarimetric SAR data. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2012, 5, 1129–1139.

- Zhu, X.J. Semi-Supervised Learning Literature Survey; University of Wisconsin-Madison: Madison, WI, USA, 2005.

- Subramanya, A.; Talukdar, P.P. Graph-based semi-supervised learning. Synth. Lect. Artif. Intell. Mach. Learn. 2014, 8, 1–125.

- Liu, W.; He, J.; Chang, S.F. Large graph construction for scalable semi-supervised learning. In Proceedings of the 27th International Conference on Machine Learning (ICML 2010), Haifa, Israel, 21–24 June 2010.

- Liu, H.; Wang, Y.; Yang, S.; Wang, S.; Feng, J.; Jiao, L. Large polarimetric SAR data semi-supervised classification with spatial-anchor graph. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2016, 9, 1439–1458.

- Liu, H.; Zhu, D.; Yang, S.; Hou, B.; Gou, S.; Xiong, T.; Jiao, L. Semisupervised feature extraction with neighborhood constraints for polarimetric SAR classification. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2016, 9, 3001–3015.

- Liu, H.; Yang, S.; Gou, S.; Zhu, D.; Wang, R.; Jiao, L. Polarimetric SAR feature extraction with neighborhood preservation-based deep learning. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2016, 10, 1456–1466.

- Liu, H.; Yang, S.; Gou, S.; Chen, P.; Wang, Y.; Jiao, L. Fast classification for large polarimetric SAR data based on refined spatial-anchor graph. IEEE Geosci. Remote Sens. Lett. 2017, 14, 1589–1593.

- Liu, H.; Yang, S.; Gou, S.; Liu, S.; Jiao, L. Terrain Classification based on Spatial Multi-attribute Graph using Polarimetric SAR Data. Appl. Soft Comput. 2018, 68, 24–38.

- Liu, H.; Wang, Z.; Shang, F.; Yang, S.; Gou, S.; Jiao, L. Semi-supervised tensorial locally linear embedding for feature extraction using PolSAR data. IEEE J. Sel. Top. Signal Proc. 2018, 12, 1476–1490.

- Liu, H.; Wang, F.; Yang, S.; Hou, B.; Jiao, L.; Yang, R. Fast Semisupervised Classification Using Histogram-Based Density Estimation for Large-Scale Polarimetric SAR Data. IEEE Geosci. Remote Sens. Lett. 2019, 16, 1844–1848.

- Liu, H.; Shang, F.; Yang, S.; Gong, M.; Zhu, T.; Jiao, L. Sparse Manifold-Regularized Neural Networks for Polarimetric SAR Terrain Classification. IEEE Trans. Neural Netw. Learn. Syst. 2019, 31, 3007–3016.

- Bi, H.; Sun, J.; Xu, Z. A graph-based semisupervised deep learning model for PolSAR image classification. IEEE Geosci. Remote Sens. Lett. 2018, 57, 2116–2132.

- Yao, Q.; Wang, M.; Chen, Y.; Dai, W.; Yi-Qi, H.; Yu-Feng, L.; Wei-Wei, T.; Qiang, Y.; Yang, Y. Taking human out of learning applications: A survey on automated machine learning. arXiv 2018, arXiv:1810.13306.

- Elsken, T.; Metzen, J.H.; Hutter, F. Neural architecture search: A survey. J. Mach. Learn. Res. 2019, 20, 1–21.

- Liu, H.; Simonyan, K.; Yang, Y. DARTS: Differentiable Architecture Search. In Proceedings of the 7th International Conference on Learning Representations, New Orleans, LA, USA, 6–9 May 2019.

- Li, G.; Xiong, C.; Thabet, A.; Ghanem, B. DeeperGCN: All You Need to Train Deeper GCNs. arXiv 2020, arXiv:2006.07739.

- Wang, Y.; Sun, Y.; Liu, Z.; Sarma, S.E.; Bronstein, M.M.; Solomon, J.M. Dynamic Graph CNN for Learning on Point Clouds. ACM Trans. Graph. 2019, 38.

- Veličković, P.; Cucurull, G.; Casanova, A.; Romero, A.; Liò, P.; Bengio, Y. Graph Attention Networks. In Proceedings of the International Conference on Learning Representations, Vancouver, BC, Canada, 30 April–3 May 2018.

- Xu, K.; Hu, W.; Leskovec, J.; Jegelka, S. How Powerful are Graph Neural Networks? In Proceedings of the International Conference on Learning Representations, New Orleans, LA, USA, 6–9 May 2019.

- Gao, H.; Ji, S. Graph U-Nets. In Proceedings of the 36th International Conference on Machine Learning, Long Beach, CA, USA, 9–15 June 2019; pp. 2083–2092.

- Karypis, G.; Kumar, V. A fast and high quality multilevel scheme for partitioning irregular graphs. SIAM J. Sci. Comput. 1998, 20, 359–392.

- Dwivedi, V.P.; Joshi, C.K.; Laurent, T.; Bengio, Y.; Bresson, X. Benchmarking Graph Neural Networks. arXiv 2020, arXiv:2003.00982.

- Chiang, W.; Liu, X.; Si, S.; Li, Y.; Bengio, S.; Hsieh, C. Cluster-GCN: An Efficient Algorithm for Training Deep and Large Graph Convolutional Networks. In Proceedings of the 25th ACM SIGKDD International Conference on Knowledge Discovery & Data Mining, KDD 2019, Anchorage, AK, USA, 4–8 August 2019; pp. 257–266.

- Paszke, A.; Gross, S.; Chintala, S.; Chanan, G.; Yang, E.; DeVito, Z.; Lin, Z.; Desmaison, A.; Antiga, L.; Lerer, A. Automatic differentiation in PyTorch. In Proceedings of the 31st Conference on Neural Information Processing Systems (NIPS 2017), Long Beach, CA, USA, 4–9 December 2017.

- Fey, M.; Lenssen, J.E. Fast Graph Representation Learning with PyTorch Geometric. In Proceedings of the ICLR Workshop on Representation Learning on Graphs and Manifolds, New Orleans, LA, USA, 6–9 May 2019.

- Lee, J.S.; Grunes, M.R.; De Grandi, G. Polarimetric SAR speckle filtering and its implication for classification. IEEE Trans. Geosci. Remote Sens. 1999, 37, 2363–2373.

- Fortunato, S. Community detection in graphs. Phys. Rep. 2010, 486, 75–174.

- Fortunato, S.; Hric, D. Community detection in networks: A user guide. Phys. Rep. 2016, 659, 1–44.

| Number of Cells | Hidden Units | Params. (MB) | Test OA (%) |
|---|---|---|---|
| 2 | 16 | 0.0115 | 94.31 |
| 2 | 32 | 0.0388 | 97.21 |
| 2 | 64 | 0.1412 | 95.51 |
| 2 | 128 | 0.5362 | 95.98 |
| 3 | 16 | 0.0161 | 95.19 |
| 3 | 32 | 0.0568 | 99.31 |
| 3 | 64 | 0.2118 | 99.01 |
| 3 | 128 | 0.8168 | 98.37 |
| 4 | 16 | 0.0207 | 94.77 |
| 4 | 32 | 0.0747 | 98.96 |
| 4 | 64 | 0.2825 | 98.65 |
| 4 | 128 | 1.0974 | 97.47 |

| Methods | SVM [24] | FS [22] | W-DBN [23] | CNN [30] | DSMR [46] | SemiGCN [9] | ASGCN (Ours) |
|---|---|---|---|---|---|---|---|
| Stembeans | 83.22 ± 1.893 | 85.13 ± 1.952 | 90.73 ± 2.331 | 93.33 ± 2.132 | 99.82 ± 1.568 | 97.67 ± 1.512 | 97.77 ± 1.231 |
| Rapeseed | 89.01 ± 1.251 | 79.23 ± 1.614 | 90.57 ± 2.451 | 97.45 ± 2.270 | 90.28 ± 1.412 | 97.73 ± 1.486 | 94.71 ± 1.529 |
| Bare Soil | 88.16 ± 1.810 | 76.39 ± 1.642 | 91.71 ± 2.953 | 97.73 ± 2.245 | 86.67 ± 1.356 | 97.29 ± 1.302 | 99.23 ± 0.831 |
| Potatoes | 95.28 ± 1.833 | 83.18 ± 1.846 | 85.67 ± 2.851 | 91.27 ± 2.315 | 99.79 ± 1.632 | 92.78 ± 1.230 | 99.34 ± 0.911 |
| Beet | 86.35 ± 1.917 | 95.23 ± 1.832 | 99.86 ± 2.135 | 99.69 ± 2.412 | 99.76 ± 1.237 | 95.54 ± 1.759 | 98.91 ± 0.938 |
| Wheat2 | 84.47 ± 1.905 | 76.35 ± 1.512 | 89.34 ± 2.412 | 84.91 ± 2.561 | 86.21 ± 1.242 | 94.62 ± 1.637 | 98.74 ± 1.579 |
| Peas | 87.56 ± 1.258 | 85.31 ± 1.831 | 92.81 ± 2.137 | 85.09 ± 2.418 | 98.47 ± 1.122 | 94.06 ± 1.448 | 98.94 ± 0.422 |
| Wheat3 | 76.73 ± 2.018 | 85.11 ± 1.677 | 89.92 ± 2.168 | 99.79 ± 2.111 | 95.56 ± 1.193 | 99.59 ± 1.649 | 99.22 ± 1.144 |
| Lucerne | 87.18 ± 1.316 | 85.81 ± 1.525 | 89.05 ± 2.144 | 70.23 ± 2.104 | 83.84 ± 1.258 | 86.73 ± 1.857 | 96.70 ± 1.700 |
| Barley | 82.26 ± 1.643 | 86.18 ± 1.984 | 88.73 ± 2.587 | 33.73 ± 2.139 | 98.36 ± 1.269 | 97.89 ± 1.268 | 99.34 ± 0.621 |
| Wheat | 81.38 ± 1.700 | 85.12 ± 1.713 | 90.52 ± 2.584 | 95.21 ± 2.516 | 92.03 ± 1.144 | 92.57 ± 1.372 | 98.93 ± 1.137 |
| Grasses | 94.88 ± 1.676 | 82.56 ± 1.656 | 89.43 ± 2.691 | 45.32 ± 2.547 | 64.51 ± 1.581 | 86.75 ± 1.592 | 99.08 ± 1.356 |
| Forest | 81.09 ± 1.748 | 91.63 ± 1.872 | 89.22 ± 2.687 | 99.87 ± 2.138 | 98.50 ± 1.343 | 99.11 ± 1.337 | 98.93 ± 1.483 |
| Water | 83.59 ± 1.420 | 79.20 ± 1.757 | 91.19 ± 2.783 | 88.26 ± 2.147 | 83.16 ± 1.214 | 98.93 ± 1.441 | 99.98 ± 0.473 |
| Buildings | 80.73 ± 1.627 | 76.16 ± 1.729 | 90.73 ± 2.553 | 87.42 ± 2.159 | 99.73 ± 1.231 | 96.43 ± 1.022 | 92.58 ± 1.650 |
| OA | 85.50 ± 1.907 | 84.02 ± 1.738 | 90.33 ± 2.486 | 88.15 ± 2.279 | 92.31 ± 1.311 | 95.55 ± 1.036 | 99.31 ± 1.732 |

| Methods | Water | Vegetation | Low-Density Urban | High-Density Urban | Developed | OA |
|---|---|---|---|---|---|---|
| SVM [24] | 98.69 ± 1.988 | 84.45 ± 1.486 | 50.74 ± 1.364 | 73.53 ± 1.659 | 60.92 ± 1.422 | 85.39 ± 1.907 |
| FS [22] | 90.63 ± 1.776 | 79.77 ± 1.675 | 61.33 ± 1.987 | 80.13 ± 1.888 | 68.21 ± 1.811 | 83.25 ± 1.803 |
| W-DBN [23] | 99.77 ± 2.780 | 89.56 ± 2.913 | 57.64 ± 2.761 | 83.72 ± 2.661 | 65.21 ± 2.703 | 89.75 ± 2.770 |
| CNN [30] | 98.56 ± 2.257 | 81.71 ± 2.164 | 53.24 ± 2.538 | 85.03 ± 2.252 | 62.35 ± 2.290 | 87.73 ± 2.267 |
| DSMR [46] | 99.89 ± 1.464 | 91.63 ± 1.634 | 69.34 ± 1.656 | 87.78 ± 1.552 | 74.91 ± 1.589 | 92.40 ± 1.529 |
| SemiGCN [9] | 98.93 ± 1.469 | 92.03 ± 1.730 | 92.23 ± 1.489 | 91.21 ± 1.349 | 91.09 ± 1.147 | 94.57 ± 1.469 |
| ASGCN (ours) | 99.87 ± 0.881 | 93.77 ± 1.258 | 93.64 ± 1.109 | 93.92 ± 1.529 | 82.33 ± 1.178 | 96.80 ± 1.393 |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Liu, H.; Xu, D.; Zhu, T.; Shang, F.; Liu, Y.; Lu, J.; Yang, R. Graph Convolutional Networks by Architecture Search for PolSAR Image Classification. Remote Sens. 2021, 13, 1404. https://0-doi-org.brum.beds.ac.uk/10.3390/rs13071404