1. Introduction

Vegetation phenology describes the life cycle events that occur throughout the year and has been identified as a way of studying ecosystem processes [1]. In particular, monitoring phenology dynamics over the years at various ecological scales helps in understanding how plants are responding to climate change [2,3,4].

The study of phenology has a long history [5,6] and is founded on field observations of phenological events (leaf shooting, flowering, leaf fall, etc.) that respond to internal molecular mechanisms of plants driven by photoperiod, temperature and other factors [7]. Hence, systematic observations of these events provide important evidence of changes and trends in climatic variables [8,9,10]. Traditionally, plant phenology relied on human observations of these events, made on a limited number of individual plant species across a small geographic extent [5,6]. Vegetation phenology has also been studied at larger scales using remote sensing [5,11]. Between these two approaches lies ‘near-surface’ remote sensing of phenology. A sensor designed specifically for this purpose is the phenocamera (also known as PhenoCam): a digital camera configured to capture time-lapse images of the environment over years or even longer, which can provide a permanent and continuous visual record of vegetation status within that environment [12]. PhenoCams can be used to monitor foliage and canopy changes and environmental conditions and, by means of spectral indices, to quantify phenology [5,12]. Other remote sensors can also monitor phenology, such as multispectral ground sensors (Spectral Reflectance Sensors, SRS), Unmanned Aerial Vehicles (UAVs) and satellites [12]. These are not designed specifically for phenology monitoring but can be used for that purpose. In particular, satellites cover a larger area than PhenoCams, providing insights into phenology at regional and global scales [13,14].

Near-surface remote sensors collect data close to the ground to supplement satellite remote sensing data [15]. To detect the status of vegetation, the digital numbers (DN) of the captured data are usually converted into spectral reflectance, which quantifies the reflected radiation in different wavelength bands of the electromagnetic spectrum, typically red (R), green (G), blue (B) and near infrared (NIR). This reflectance is then used to compute vegetation indices (VIs) [16]. UAVs can carry multispectral cameras whose images are combined, by means of photogrammetric techniques, into orthomosaics that show the vegetation canopy from a zenith viewpoint. The reliability of vegetation indices derived from consumer-grade RGB (red, green, blue) and multispectral cameras mounted on UAVs has been demonstrated in some case studies [17,18]. Several studies have already shown the enormous potential of UAVs for monitoring phenology dynamics in various tree species across forest communities [2,3,4]. In most cases, PhenoCams capture vegetation information in the RGB bands, and the DN values are used directly to compute RGB VIs without conversion to reflectance. SRS [19] are used in fixed installations and continuously measure incident and reflected radiation in one or a few wavelength bands, each recorded as a single signal [20]. The measured incoming and reflected radiation signals are converted to reflectance and used to calculate VI products.
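The two routes to a VI described above, reflectance from paired SRS signals and raw DN from PhenoCam images, can be sketched in Python as follows. This is a minimal illustration under stated assumptions, not the processing chain used in this study: the function names are hypothetical, SRS reflectance is taken as the ratio of reflected to incident signal scaled by a hypothetical intercalibration factor `cal_factor` (1.0 if the up- and down-looking sensors are already intercalibrated), and the PhenoCam region of interest (ROI) is assumed to be supplied as a boolean mask.

```python
import numpy as np

def srs_reflectance(reflected, incident, cal_factor=1.0):
    """Per-band reflectance from paired down-looking (reflected) and
    up-looking (incident) SRS signals.

    cal_factor is a hypothetical intercalibration coefficient between the
    two sensors; 1.0 assumes they are already intercalibrated.
    """
    reflected = np.asarray(reflected, dtype=float)
    incident = np.asarray(incident, dtype=float)
    # Suppress warnings for zero incident light (night); those samples become NaN.
    with np.errstate(divide="ignore", invalid="ignore"):
        rho = cal_factor * reflected / incident
    return np.where(incident > 0, rho, np.nan)

def roi_mean_dn(image, mask):
    """Mean raw digital number per channel inside a PhenoCam ROI.

    image: array of shape (rows, cols, 3) with R, G, B DNs (no reflectance conversion).
    mask:  boolean array of shape (rows, cols), True inside the ROI.
    """
    image = np.asarray(image, dtype=float)
    return image[mask].mean(axis=0)  # array([mean_R, mean_G, mean_B])

# Hypothetical example: red and NIR signals at a single time step.
rho = srs_reflectance(reflected=[0.9, 7.2], incident=[18.0, 16.0])
```

Keeping the SRS reflectance and the PhenoCam DN means as separate steps mirrors the text: only the SRS path involves a reflectance conversion, while the PhenoCam path feeds channel means straight into an RGB index.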

These near-surface platforms operate at different temporal and spatial scales and view the vegetation from different angles, which affects the observations. For instance, UAV cameras image large areas from above (nadir view), whereas PhenoCams view smaller areas and are usually oriented towards the horizon. Therefore, UAVs capture the canopy from above and can also see the understory of ecosystems (e.g., a forest), while PhenoCams capture a lateral view of ecosystems (i.e., in a forest, they see the tree crowns in profile). Furthermore, SRS and PhenoCams have a much higher temporal resolution (sub-hourly) than UAVs, which makes them better suited to building phenology time series.

Upscaling of phenology from field plots to regional and global scales can be achieved by means of satellite remote sensing [21,22,23]. However, differences in spatial and temporal scale, as well as in observation angles, between field and satellite sensors make upscaling and interpretation difficult. Fortunately, recent years have seen an increase in the availability of low-cost, high-quality sensor systems (i.e., UAVs and SRS) that can facilitate the step from ground to satellite. These new systems offer new possibilities for expanding ecological understanding [12] and can be chosen according to the user’s specific needs [24]. Inter-comparison of, and upscaling from, near-ground to satellite data is challenging for the same reasons as among the near-surface sensors described above, and additionally because satellites have different spatial and temporal resolutions and require substantially different pre-processing, including atmospheric correction of large tiles. Several studies have found good agreement between satellite-based remote sensing and PhenoCams, based on different greenness indices [1,13,14,25]. However, to our knowledge, there is very little research comparing PhenoCam RGB-based vegetation indices to SRS-, UAV- and satellite-based VIs, especially in evergreen forests.

Some commonly used indices from near-surface remote sensing instruments include the Normalized Difference Vegetation Index (NDVI), the Green Chromatic Coordinate (GCC) and the Normalized Difference of Green and Red (VIgreen). The NDVI is the normalized difference between the near-infrared and red portions of the electromagnetic spectrum, (NIR - R)/(NIR + R), and is a measure of the state of plant health [16]. The GCC is a very common VI in PhenoCam studies [26,27], developed as a measure of vegetation greenness relative to overall image brightness, G/(R + G + B) [28], while VIgreen uses the green band in place of the near-infrared band in the NDVI formula, (G - R)/(G + R) [29], to mimic NDVI values. These VIs have been found to correlate with phenology parameters [30,31,32]. The indices have been widely shown to relate to vegetation productivity, biomass and phenology [14,18,33], and have also been used to estimate the gross primary productivity of some vegetation types [34]. Furthermore, they can show how the seasonal cycles of a particular vegetation type influence the carbon budget of an ecosystem, or how such cycles differ from individual species to landscapes [26].
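The three index definitions above translate directly into code. The sketch below is illustrative: the function names are our own, inputs are assumed to be NumPy arrays or scalars of band values, and the same functions accept either reflectance (SRS, UAV, satellite) or raw DN (PhenoCam GCC), as described in the text.

```python
import numpy as np

def ndvi(nir, red):
    """NDVI = (NIR - R) / (NIR + R)."""
    nir, red = np.asarray(nir, dtype=float), np.asarray(red, dtype=float)
    return (nir - red) / (nir + red)

def gcc(red, green, blue):
    """GCC = G / (R + G + B); for PhenoCams usually computed from raw DN."""
    red, green, blue = (np.asarray(b, dtype=float) for b in (red, green, blue))
    return green / (red + green + blue)

def vigreen(green, red):
    """VIgreen = (G - R) / (G + R): the NDVI formula with G in place of NIR."""
    green, red = np.asarray(green, dtype=float), np.asarray(red, dtype=float)
    return (green - red) / (green + red)

# Hypothetical reflectances for a single vegetated pixel (not data from this study).
r, g, b, nir = 0.04, 0.08, 0.03, 0.40
values = {"NDVI": ndvi(nir, r), "GCC": gcc(r, g, b), "VIgreen": vigreen(g, r)}
```

Because all three are ratios, they work element-wise on whole images or time series arrays as well as on single values.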

Comparison of data and VIs derived from different satellite platforms is a frequently studied topic [35,36,37]. These studies point to the challenge of sensor inter-comparison, as even sensors with similar spatial resolution and band configuration do not generate the same reflectance and VI values [36,38]. However, only a few studies have compared near-surface sensors with each other, or near-surface with satellite sensors. Not only do satellite-derived VI values differ from sensor to sensor, but the satellites also have different footprints, especially when compared to SRS. The challenge therefore lies in making time series of VIs from different sensors comparable.

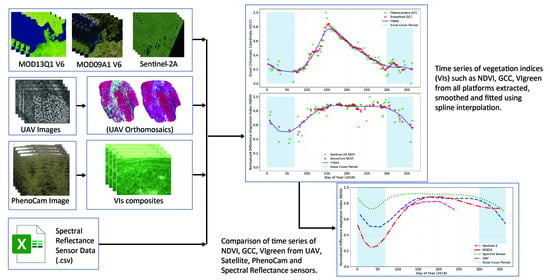

In recent years, the end-to-end (i.e., user to product) development strategy of different near-surface remote sensing systems has simplified data processing and VI calculation, making it easier for end-users to choose the most suitable system. Building on previous research, and with a view to further exploring the performance of such sensors, this study aims to test the ability of each sensor to track year-round phenology patterns. The main objective of this research is to explore different aspects of vegetation phenology based on observations from different near-surface and satellite sensors, and to experimentally test and compare how consistent the VI time series obtained from the different sensors are. Furthermore, the comparison provides insights into the reliability of the VIs calculated from each sensor, the capability of each sensor to monitor seasonal dynamics, and the similarities and limitations of each index and platform, together with their causes. This research considered three near-surface sensors (UAV, PhenoCam and SRS) and two satellite sensors of different spatial resolution (MODIS and Sentinel-2).

6. Discussion

Using remote sensors to study vegetation phenology at different scales opens up the opportunity to gather information on different aspects of the plant life cycle and to better understand the interaction between climate change and the biosphere. While different near-surface and satellite sensors are available for vegetation phenology monitoring, few studies provide sensor overviews and inter-comparisons [1,4,24,25]. In this research, we compared the performance of different remote sensors in characterizing the phenology of a forest. Combining UAV photogrammetry, phenocamera data analysis and multispectral sensor data analysis (SRS and satellite), we explored the differences between the phenological time series obtained from these platforms by means of spectral vegetation indices, namely NDVI, VIgreen and GCC.

In this study, we observed that the data from the studied remote sensors are complementary for characterizing vegetation phenology in an evergreen coniferous forest. PhenoCams and SRS offer the flexibility of defining regions of interest within data of very fine spatio-temporal resolution (hourly, tree level), and thus allow researchers to model the phenology dynamics of the observed ecosystem, for either individual species or groups of species within the landscape. Satellite observations, on the other hand, cover large regions for global observation [5], at coarser spatial (decametres to kilometres) and temporal (days to months) resolutions. UAVs have the benefit of a fine pixel size (nominally, a few centimetres), can be operated frequently, and avoid atmospheric effects [4].

Our results show that the VIs of the different studied sensors are similar in terms of the shape of the phenology curves, with Pearson’s correlation and RMSD indicating good agreement across the studied sensors. However, there are significant differences in slopes and offsets when comparing the time series of different sensors for a given VI. As expected, the time series of satellite data (i.e., MODIS and Sentinel-2) are closely correlated and follow a similar shape. Depending on the VI (NDVI, VIgreen or GCC), however, we observed different behaviours among sensors. For instance, UAVNDVI data show a similar pattern to MODISNDVI and Sentinel-2NDVI during the summertime (April to August). Additionally, there is good agreement between PhenoCamGCC and satelliteGCC in the green-up season (April to May). Regarding VIgreen, there is a relatively good match in determining the peak of the growing season (DOY ~150) between PhenoCamVIgreen, MODISVIgreen and Sentinel-2VIgreen, while the green-up slopes of UAVVIgreen and satelliteVIgreen also follow similar patterns. Apart from these agreements, all sensors differ in the slopes of green-up and senescence, the maximum VI value at the peak of the season, and the phenology transition dates (i.e., beginning, end and length of the growing season). A remarkable difference was observed in the behaviour of the SRS sensors: SRSNDVI and SRSVIgreen show significantly higher values than any other sensor, and the length of the growing season is notably longer than for the rest of the sensors. Additionally, the RMSD values between SRS and the other sensors were generally large.
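The agreement statistics mentioned above can be computed as sketched below. This assumes both series are already on the same dates, and the function name is hypothetical. It reports Pearson’s r (similarity of curve shape), RMSD (difference in level), and an ordinary least-squares slope and offset of one series against the other, which is one possible way, not necessarily the one used in this study, to quantify the slope and offset differences described here.

```python
import numpy as np

def compare_series(a, b):
    """Agreement between two VI series sampled on the same dates."""
    a = np.asarray(a, dtype=float)
    b = np.asarray(b, dtype=float)
    keep = ~(np.isnan(a) | np.isnan(b))        # dates valid in both series
    a, b = a[keep], b[keep]
    r = np.corrcoef(a, b)[0, 1]                # Pearson's r: similarity of shape
    rmsd = np.sqrt(np.mean((a - b) ** 2))      # RMSD: difference in level
    slope, offset = np.polyfit(a, b, 1)        # OLS fit: b ~ slope * a + offset
    return {"r": float(r), "rmsd": float(rmsd),
            "slope": float(slope), "offset": float(offset)}

# Hypothetical series: b has exactly the same shape as a but is stretched and shifted.
a = np.array([0.10, 0.20, 0.30, 0.40])
stats = compare_series(a, 2.0 * a + 0.1)
```

In this example r is 1 even though the RMSD, slope and offset are clearly not those of identical series, which is why correlation alone cannot establish that two sensors are quantitatively interchangeable.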

There are many reasons for the differences between the phenology time series described by the different VIs and sensor types. First, the visual seasonal cycle is considerably weaker in evergreen forests than in deciduous forests, and the low annual production of green buds in boreal coniferous forests, in combination with the influence of snow, makes it considerably difficult to detect the green-up and senescence phases using remote sensing [60]. Further reasons for the different performances of the sensors are differences in spatial resolution, spectral band configuration, sensor calibration, viewing angle and data processing [14,24,25,32]. In addition, the spectral sensors have different spectral response functions, which could cause systematic deviations in the time series of the spectral VIs derived from them [24,61]. One important factor that might cause differences in the VIs is the viewing angle. In particular, the PhenoCam points towards the horizon and views the canopies of the studied trees from the side (i.e., profile view), while the other studied sensors view from the zenith, seeing the tree canopies from above together with the understory vegetation, soil and snow visible through forest clearings. Because reflectance depends on the viewing and illumination geometry, as described by the bidirectional reflectance distribution function (BRDF) [16], the different viewing angles of the studied sensors sample the BRDF differently. This affects the spectral and VI values captured by each sensor type [4]. Moreover, the sun-target-sensor geometry differs between the near-ground sensors and the satellites. If we consider a typical spruce forest as a collection of conical trees, the shadows cast by the trees influence the observed reflectance of the forest very differently depending on the position of the observer (the sensor) [62,63]. If the sensor and the sun are on the same side of the target, the sunlit side of the trees is in the field of view, whereas if the target lies between the sensor and the sun, the shaded side of the trees is in the field of view [62].

Another potential reason for the offset in VIs is the difference in the spectral range and bandwidth of each sensor. The largest differences in bandwidth are found in the NIR band (Sequoia: 40 nm; MODIS: 35 nm; Sentinel-2: 106 nm; SRS: 10 nm), and the central wavelengths of the NIR bands also differ (Table 2). Even though the central wavelengths of the G and R bands are close for all sensors, there are considerable differences in their bandwidths (Sequoia: 40/40 nm, MODIS: 20/50 nm, Sentinel-2: 36/31 nm, and SRS: 10/10 nm for G and R, respectively). These differences affect the values of the spectral VIs (NDVI, GCC and VIgreen) across sensors. Another reason for deviations in the VI time series could be the mixed reflectance caused by the different spatial resolutions of the sensors. In addition, the specific days used for image compositing, the observation time and the solar elevation angle might introduce differences in the measured spectral values [64]. In this study, the spatial resolutions of the sensors differed greatly (MODIS: 250 m and 500 m, Sentinel-2: 10 m, UAV: ~8 cm, PhenoCam: ~17 m and SRS: ~8 m). As the pixel size changes, the ground targets contained within a single pixel differ considerably from sensor to sensor. Coarser pixels are more likely to be mixed pixels [16], whose signal combines several surface types and therefore differs from the signal of the same objects in finer-resolution images. Despite the relative homogeneity of the analysed spruce forest, data from the satellite sensors are likely affected by mixed targets, e.g., understory vegetation and soil. In addition, even though we selected the same observation time for UAV, SRS and PhenoCam for inter-comparison, the acquisition times of the satellite sensors differed.
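The mixed-pixel effect can be illustrated with a linear mixing model, in which a pixel’s reflectance in each band is the area-weighted sum of the reflectances of its pure cover types. Both the model and the red and NIR values below are assumptions chosen only for illustration, not measurements from this study. The point is that the NDVI of a mixed pixel is not the area-weighted average of the NDVIs of its components, so sensors with different pixel sizes can report different VI values over the same forest.

```python
import numpy as np

def mix_reflectance(fractions, endmembers):
    """Linear mixing: pixel reflectance = sum of cover fraction * endmember reflectance.

    fractions:  shape (k,), cover fractions that must sum to 1.
    endmembers: shape (k, n_bands), reflectance of each pure cover type.
    """
    f = np.asarray(fractions, dtype=float)
    e = np.asarray(endmembers, dtype=float)
    if not np.isclose(f.sum(), 1.0):
        raise ValueError("cover fractions must sum to 1")
    return f @ e

def ndvi(nir, red):
    return (nir - red) / (nir + red)

# Hypothetical (red, NIR) reflectances of two pure cover types.
canopy = [0.04, 0.30]
soil = [0.20, 0.30]
fractions = [0.7, 0.3]

pixel_red, pixel_nir = mix_reflectance(fractions, [canopy, soil])
ndvi_of_mixed_pixel = ndvi(pixel_nir, pixel_red)
weighted_ndvi = fractions[0] * ndvi(canopy[1], canopy[0]) + fractions[1] * ndvi(soil[1], soil[0])
```

Mixing happens in reflectance space, before the non-linear NDVI ratio is applied, which is why the two quantities differ.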

Despite the different performance of the selected VIs and studied platforms, we can conclude that different sensors address different aspects of the vegetation. These platforms are complementary for characterizing phenology and should be used for different purposes. We found strong correlation and similar fitted curves between UAVNDVI and satelliteNDVI. Therefore, the UAV can be a solid tool for upscaling from near-ground observations to satellite data. Despite the differences in pixel size, the Parrot Sequoia has a band configuration similar to that of MODIS and Sentinel-2 [41]. That is not the case for UAVVIgreen versus MODISVIgreen and Sentinel-2VIgreen, where the time series offsets and slopes are very different. Our results coincide with the findings of [59], where the VIgreen index from multiple cameras had the lowest correlation with MODIS compared to GCC. Despite VIgreen being a normalized index like NDVI, the NIR band seems to play an important role in differentiating vegetation productivity [65,66]. We also observed good agreement between PhenoCamGCC and satelliteGCC, especially in the green-up phase, as was also observed by [57,67], despite GCC not using the NIR band and the two sensor types having different viewing angles and pixel sizes [14]. This finding validates MODIS and Sentinel-2 as useful sensors for characterizing vegetation phenology at the landscape scale. In summary, the results suggest that VIgreen is not a good proxy for phenology, while NDVI and GCC are, as was previously observed by [2,4,13].

We observed significantly different time series patterns for NDVI and GCC (Figure 9). NDVI saturates at a high value throughout the growing season, with short green-up and senescence phases. Moreover, SRSNDVI does not show a significant increase between the green and non-green seasons. In contrast, GCC presents a sharper peak in the middle of the growing season, with steep slopes in the senescence phase and especially in the green-up phase. We therefore hypothesize that NDVI, through its sensitivity to red light absorption, is more sensitive to the overall albedo change from a snowy landscape to a fully developed green canopy. The sensitivity of NDVI is well documented [60,68,69]. The GCC, on the other hand, normalizes for albedo and thereby amplifies the green signal of the canopy, which relates more directly to the phenology phases. These VIs thus relate to different aspects of the changing landscape during spring. Notably, both VIs work well at near-surface and landscape scales, as shown by the agreement between UAVNDVI and satelliteNDVI and between PhenoCamGCC and satelliteGCC. Therefore, PhenoCams and UAVs have important and complementary roles in satellite data validation and upscaling [26,30,57,59,70].
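The albedo normalization in GCC comes from its denominator: dividing G by the total R + G + B cancels any change that scales all three channels by the same factor. The sketch below uses hypothetical DN values to show that a uniformly darker view of the same canopy gives the same GCC, and that any spectrally flat (grey or white) target gives GCC = 1/3 regardless of its brightness. Treating snow as perfectly flat and darkening as perfectly uniform is an idealization assumed only for this illustration.

```python
import numpy as np

def gcc(red, green, blue):
    """Green Chromatic Coordinate: G / (R + G + B)."""
    red, green, blue = (np.asarray(b, dtype=float) for b in (red, green, blue))
    return green / (red + green + blue)

# Hypothetical mean ROI digital numbers (R, G, B) for a sunlit canopy view,
# and the same view uniformly darkened by 50% in every channel.
sunlit = np.array([80.0, 120.0, 60.0])
darker = 0.5 * sunlit
gcc_sunlit = gcc(*sunlit)
gcc_darker = gcc(*darker)      # same value: the common factor cancels

# Idealized spectrally flat targets (e.g. dim and bright snow): GCC = 1/3.
gcc_flat = [gcc(v, v, v) for v in (50.0, 250.0)]
```

Real canopies and snow are not spectrally flat and shadows do not darken all channels equally, so in practice GCC reduces, rather than removes, the influence of brightness changes.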

An important observation of this study is the difference in offset between platforms. As mentioned, the shapes of the time series were similar and the correlations among VIs across sensors were strong, especially in summertime. However, we found significant differences in the slopes of the green-up and senescence phases, as well as significant offsets in VI values, across sensor types. This suggests that these remote sensors can be used interchangeably for the qualitative characterization of vegetation phenology, especially of the growing season, but not for quantitative studies or the accurate determination of phenology phases [4,59,70]. Further support for this is that the phenology transition dates (SOS, MOS and EOS) calculated statistically from fitted VI time series curves show biases relative to the actual dates determined by visual inspection of the PhenoCam images. The bias in estimating SOS and EOS was around three days for GCC, but up to 8–10 days for NDVI. For VIgreen, the bias ranged between 3 and 8 days. This supports the conclusion that NDVI and VIgreen may be less appropriate estimators of phenology phases than GCC [4,14,59].
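The paragraph above does not specify the curve-fitting or date-extraction method. As a hedged illustration only, the sketch below assumes a double-logistic seasonal curve (a common choice, not necessarily the one used here) and defines SOS and EOS as the days on which the curve crosses 50% of its seasonal amplitude on the rising and falling limbs, with linear interpolation between days. The function names, curve parameters and visual reference dates are hypothetical; bias is computed as estimated date minus visually observed date.

```python
import numpy as np

def double_logistic(t, base, amp, sos_mid, eos_mid, k_up, k_down):
    """Seasonal VI curve: a rising and a falling logistic on top of a baseline."""
    t = np.asarray(t, dtype=float)
    rise = 1.0 / (1.0 + np.exp(-k_up * (t - sos_mid)))
    fall = 1.0 / (1.0 + np.exp(-k_down * (t - eos_mid)))
    return base + amp * (rise - fall)

def transition_dates(doy, curve, frac=0.5):
    """SOS/EOS as the first upward and last downward crossing of
    min + frac * (max - min), linearly interpolated between days."""
    doy = np.asarray(doy, dtype=float)
    curve = np.asarray(curve, dtype=float)
    thr = curve.min() + frac * (curve.max() - curve.min())
    above = curve >= thr
    i = int(np.argmax(above))                         # first day at or above threshold
    j = len(above) - 1 - int(np.argmax(above[::-1]))  # last day at or above threshold
    if i == 0 or j == len(curve) - 1:
        raise ValueError("threshold is not crossed inside the series")
    # Rising limb: curve[i-1] < thr <= curve[i], so xp is increasing.
    sos = np.interp(thr, curve[i - 1:i + 1], doy[i - 1:i + 1])
    # Falling limb: reverse the pair so xp is increasing for np.interp.
    eos = np.interp(thr, curve[j:j + 2][::-1], doy[j:j + 2][::-1])
    return float(sos), float(eos)

# Hypothetical fitted GCC-like curve and hypothetical visually observed dates.
doy = np.arange(1, 366)
fitted = double_logistic(doy, base=0.33, amp=0.05, sos_mid=130, eos_mid=260,
                         k_up=0.1, k_down=0.1)
sos, eos = transition_dates(doy, fitted)
visual_sos, visual_eos = 127, 263
bias_sos, bias_eos = sos - visual_sos, eos - visual_eos   # in days
```

The choice of threshold (here 50% of amplitude) directly shifts the estimated dates, so biases are only comparable across VIs and sensors when the same extraction rule is applied to all of them.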

A second conclusion of our study is that there are large uncertainties and differences in VI performance among sensors during the winter season, when there is snow on the ground and on the trees. Snow beneath the forest canopy is assumed to persist longer than snow on the canopy, mostly during spring, and it can be detected differently by near-surface and satellite sensors [71]. This eventually affects the SOS derived from time series obtained from different platforms [72]. While the VI time series are somewhat comparable when only vegetation is present, the behaviour of the VIs varies erratically from sensor to sensor in the presence of snow. Complex light scattering and viewing geometry, as well as the likelihood of snow covering up-looking sensors, affect the different VI signals observed for snow [16,73,74].

7. Conclusions

In the present paper, near-surface and satellite remote sensors at different scales were inter-compared for the purpose of characterizing vegetation phenology. We derived phenology time series from multispectral ground sensors (SRS), PhenoCam, UAV, Sentinel-2 and MODIS data, described by three spectral vegetation indices (VIs): NDVI, GCC and VIgreen. These platforms differ significantly in spatial and temporal resolution, viewing angle and observation scale. The aim of this study was therefore to explore how the phenology time series curves differ from sensor to sensor and between VIs, and how well each performs in determining phenology transition dates throughout the year.

Based on the results, we conclude that the studied remote sensing platforms (SRS, UAV, PhenoCam, MODIS and Sentinel-2) and VIs (NDVI, GCC and VIgreen) all produce useful data for phenology research, although the platforms and indices serve different purposes. GCC is the recommended VI for characterizing phenology phases and transition dates, whereas NDVI is suitable for detecting when the landscape changes from winter to summer following snow-melt and canopy green-up. The near-surface remote sensors validated the tested satellite data as useful tools for phenology and productivity studies: PhenoCamGCC and satelliteGCC correlate well, as do UAVNDVI and satelliteNDVI. This demonstrates the potential for upscaling vegetation phenology from near-surface to landscape level by combining sensor systems.