TE-SAGAN: An Improved Generative Adversarial Network for Remote Sensing Super-Resolution Images

Abstract

1. Introduction

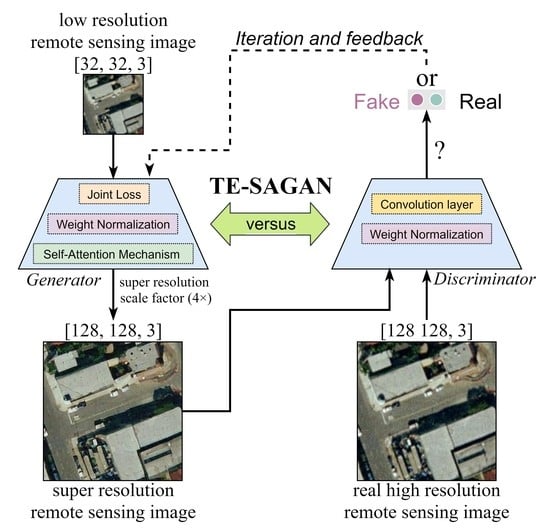

2. Method

2.1. Principle of the Proposed Method

2.2. Generator of TE-SAGAN

2.2.1. Self-Attention Mechanism
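
As a generic illustration of the technique named in this heading, the following minimal sketch implements a SAGAN-style self-attention block in plain Python. Every spatial position of a C-channel feature map attends to every other position through query, key, and value projections (1×1 convolutions), and the attended result is added back to the input scaled by a learnable scalar `gamma`. The function and parameter names (`self_attention`, `w_q`, `w_k`, `w_v`, `gamma`) are hypothetical, the value projection maps straight back to C channels (omitting SAGAN's extra output 1×1 convolution), and nothing here is taken from the TE-SAGAN code.

```python
import math
from typing import List

# A feature map flattened to N = H * W positions, each holding C channel values.
Matrix = List[List[float]]


def project(x: Matrix, w: Matrix) -> Matrix:
    """1x1 convolution: apply the same C_in x C_out linear map at every position."""
    return [[sum(xi[c] * w[c][k] for c in range(len(w))) for k in range(len(w[0]))]
            for xi in x]


def softmax(scores: List[float]) -> List[float]:
    """Numerically stable softmax over one row of attention scores."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def self_attention(x: Matrix, w_q: Matrix, w_k: Matrix, w_v: Matrix,
                   gamma: float) -> Matrix:
    """SAGAN-style self-attention with a gamma-scaled residual connection (sketch)."""
    channels = len(x[0])
    if len(w_v[0]) != channels:
        raise ValueError("w_v must map back to the input channel count")
    q, k, v = project(x, w_q), project(x, w_k), project(x, w_v)
    n = len(x)
    out: Matrix = []
    for j in range(n):
        # Similarity of position j to every position i, normalized over i.
        beta = softmax([sum(a * b for a, b in zip(q[j], k[i])) for i in range(n)])
        # Attention output at j: weighted sum of the value vectors of all positions.
        o_j = [sum(beta[i] * v[i][c] for i in range(n)) for c in range(channels)]
        # Residual: gamma starts at 0 in SAGAN, so the block is initially an identity.
        out.append([gamma * o + xc for o, xc in zip(o_j, x[j])])
    return out


if __name__ == "__main__":
    x = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0], [0.5, 0.5]]  # a 2x2 map with 2 channels
    eye = [[1.0, 0.0], [0.0, 1.0]]
    print(self_attention(x, eye, eye, eye, gamma=0.5))
```

Because every position attends to every other one, the cost grows with N², which is why such blocks are usually placed on lower-resolution feature maps.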

2.2.2. Weight Normalization
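
Weight normalization, introduced by Salimans and Kingma, reparameterizes each weight vector as w = g · v / ‖v‖, so that the length of w is carried by the scalar g and its direction by v. The plain-Python sketch below applies this per output unit of a weight matrix; the function names are hypothetical, and it illustrates the general technique rather than how TE-SAGAN wires it into its generator.

```python
import math
from typing import List


def weight_norm(v: List[float], g: float) -> List[float]:
    """Return w = g * v / ||v||: direction taken from v, length |g|."""
    norm = math.sqrt(sum(x * x for x in v))
    if norm == 0.0:
        raise ValueError("v must be non-zero")
    return [g * x / norm for x in v]


def weight_norm_layer(v_rows: List[List[float]], gains: List[float]) -> List[List[float]]:
    """Normalize each output unit's weight vector (one row of v) with its own gain."""
    if len(v_rows) != len(gains):
        raise ValueError("need one gain per output unit")
    return [weight_norm(row, g) for row, g in zip(v_rows, gains)]


# Rescaling v leaves w unchanged, which decouples the weight's scale from its direction.
w1 = weight_norm([3.0, 4.0], g=2.0)
w2 = weight_norm([30.0, 40.0], g=2.0)
print(w1, w2)
```

Unlike batch normalization, this reparameterization does not depend on mini-batch statistics. In PyTorch the same idea is available as `torch.nn.utils.weight_norm` (superseded by `torch.nn.utils.parametrizations.weight_norm` in recent releases), which splits a layer's weight into a gain and a direction parameter.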

2.3. Discriminator of TE-SAGAN

2.4. Loss Function

2.4.1. Content Loss

2.4.2. Adversarial Loss

2.4.3. Perceptual Loss

2.4.4. Texture Loss

2.4.5. Total Loss Function

3. Experiment and Analysis

3.1. Evaluation Indexes
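
The result tables report PSNR (in dB), SSIM, and FID. The sketch below computes the standard PSNR definition, 10 · log10(MAX² / MSE), and a simplified SSIM evaluated over one global window with the constants of Wang et al. (C1 = (0.01 · L)², C2 = (0.03 · L)²). The reference SSIM instead averages the index over local Gaussian-weighted windows, and the color channel, border cropping, and data range behind the paper's numbers are not stated here, so values from this sketch are not directly comparable to the tables. Images are assumed to be flat lists of 8-bit intensities; the function names are hypothetical, and FID is omitted because it requires a pretrained Inception network.

```python
import math
from typing import Sequence


def psnr(reference: Sequence[float], estimate: Sequence[float],
         max_value: float = 255.0) -> float:
    """Peak signal-to-noise ratio in dB: 10 * log10(MAX^2 / MSE)."""
    if len(reference) != len(estimate) or not reference:
        raise ValueError("inputs must be non-empty and the same size")
    mse = sum((r - e) ** 2 for r, e in zip(reference, estimate)) / len(reference)
    if mse == 0.0:
        return math.inf  # identical images
    return 10.0 * math.log10(max_value ** 2 / mse)


def global_ssim(x: Sequence[float], y: Sequence[float],
                data_range: float = 255.0) -> float:
    """SSIM over a single window covering the whole image (a simplification)."""
    if len(x) != len(y) or not x:
        raise ValueError("inputs must be non-empty and the same size")
    n = len(x)
    c1 = (0.01 * data_range) ** 2
    c2 = (0.03 * data_range) ** 2
    mu_x, mu_y = sum(x) / n, sum(y) / n
    var_x = sum((a - mu_x) ** 2 for a in x) / n
    var_y = sum((b - mu_y) ** 2 for b in y) / n
    cov_xy = sum((a - mu_x) * (b - mu_y) for a, b in zip(x, y)) / n
    return ((2 * mu_x * mu_y + c1) * (2 * cov_xy + c2)) / (
        (mu_x ** 2 + mu_y ** 2 + c1) * (var_x + var_y + c2))


hr = [0.0, 0.0, 0.0, 0.0]
sr = [0.0, 0.0, 0.0, 255.0]
print(psnr(hr, sr))  # MSE = 255**2 / 4, so PSNR = 10 * log10(4)
```

Higher PSNR and SSIM indicate closer agreement with the high-resolution reference, whereas lower FID indicates feature statistics closer to those of real images.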

3.2. Experiment Preparation

3.2.1. Data Set

3.2.2. Experimental Settings

3.3. Results and Analysis

3.3.1. Quantitative Evaluation

3.3.2. Qualitative Evaluation

4. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest


| Dataset | Metric | Pre-ESRGAN | Pre-TE-SAGAN |
|---|---|---|---|
| Harbor | SSIM | 0.800 | 0.798 |
| | PSNR (dB) | 23.250 | 23.154 |
| Runway | SSIM | 0.819 | 0.821 |
| | PSNR (dB) | 30.574 | 30.724 |
| Airplane | SSIM | 0.819 | 0.821 |
| | PSNR (dB) | 28.748 | 28.800 |
| Buildings | SSIM | 0.805 | 0.806 |
| | PSNR (dB) | 26.124 | 26.138 |

| Dataset | Metric | Bicubic | ESPCN | EDSR | SRGAN | ESRGAN | RFDNet | TE-SAGAN |
|---|---|---|---|---|---|---|---|---|
| Test-10000 | SSIM | 0.558 | 0.500 | 0.564 | 0.539 | 0.575 | 0.600 | 0.583 |
| | PSNR (dB) | 22.854 | 22.141 | 22.855 | 22.695 | 23.500 | 23.650 | 23.700 |
| | FID | 136.312 | 148.199 | 95.226 | 55.252 | 32.587 | 81.866 | 23.771 |
| Harbor | SSIM | 0.716 | 0.635 | 0.668 | 0.618 | 0.659 | 0.749 | 0.707 |
| | PSNR (dB) | 20.314 | 19.339 | 19.302 | 18.814 | 19.765 | 21.760 | 20.770 |
| | FID | 147.225 | 169.257 | 205.605 | 227.557 | 135.349 | 115.247 | 121.527 |
| Runway | SSIM | 0.704 | 0.668 | 0.678 | 0.708 | 0.698 | 0.756 | 0.748 |
| | PSNR (dB) | 26.010 | 25.393 | 26.863 | 26.794 | 26.959 | 28.069 | 28.467 |
| | FID | 146.718 | 191.391 | 132.028 | 116.549 | 105.031 | 106.095 | 83.282 |
| Airplane | SSIM | 0.713 | 0.662 | 0.709 | 0.677 | 0.669 | 0.752 | 0.733 |
| | PSNR (dB) | 25.421 | 23.861 | 24.561 | 23.992 | 24.849 | 26.53 | 26.296 |
| | FID | 179.435 | 201.591 | 158.942 | 143.521 | 89.532 | 119.773 | 82.393 |
| Buildings | SSIM | 0.699 | 0.625 | 0.670 | 0.643 | 0.656 | 0.746 | 0.720 |
| | PSNR (dB) | 23.226 | 21.695 | 22.137 | 21.787 | 22.559 | 24.21 | 24.005 |
| | FID | 127.26 | 140.786 | 149.487 | 122.78 | 81.284 | 118.870 | 70.704 |
| Runtime (min) | | / | 107.8 | 3225.6 | 2292.0 | 3072.9 | 251.8 | 2288.7 |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Xu, Y.; Luo, W.; Hu, A.; Xie, Z.; Xie, X.; Tao, L. TE-SAGAN: An Improved Generative Adversarial Network for Remote Sensing Super-Resolution Images. Remote Sens. 2022, 14, 2425. https://doi.org/10.3390/rs14102425


Chicago/Turabian Style: Xu, Yongyang, Wei Luo, Anna Hu, Zhong Xie, Xuejing Xie, and Liufeng Tao. 2022. "TE-SAGAN: An Improved Generative Adversarial Network for Remote Sensing Super-Resolution Images." Remote Sensing 14, no. 10: 2425. https://doi.org/10.3390/rs14102425