1. Introduction

As an essential category of China’s rural land, rural homesteads ensure the basic welfare of farmers and have attracted worldwide attention. A comprehensive understanding of fundamental information such as the scale, layout, ownership, and utilization status of homesteads can support deeper reform of the rural homestead system. Statistics on rural homesteads have mainly depended on traditional methods such as field surveys and surveying and mapping [1]. Because these methods involve a large workload, long cycles, and low efficiency, they struggle to meet the country’s needs for homestead statistics and management [2]. In recent years, the development of high-resolution remote sensing image technology has provided new means of obtaining homestead information. Low-altitude unmanned aerial vehicle (UAV) images, one kind of remote sensing image, have a higher resolution than satellite images, which helps obtain large-scale and detailed information on the distribution of homesteads in rural areas without intensive labor. However, relying on manual visual interpretation alone to interpret remotely sensed images also involves great manpower and time costs, which poses serious challenges when thousands of rural homestead surveys must be processed. Fast and accurate automated techniques are therefore required to interpret rural homesteads in remote sensing images.

The essence of rural homestead recognition is to extract rural buildings and related areas from images. Automatic building extraction from high-resolution remote sensing images has long been an active research topic, and it is of great significance for urban and rural planning, map updating, disaster assessment, geographic information system database updating, and other applications [3,4,5]. With the development of deep learning technologies, fast and accurate building extraction has become possible. Because buildings have irregular shapes and boundaries, semantic segmentation appears to be the most promising approach for precisely extracting buildings from high-resolution remote sensing images, and the extracted targets can also be used for subsequent analysis and calculations. Fully convolutional networks (FCNs), the pioneering deep learning work in semantic segmentation, employ an end-to-end convolutional neural network and use deconvolution for up-sampling [6]. Furthermore, skip connections are used to fuse the deep and shallow information of the network. U-Net then added channel-wise feature fusion on the basis of FCN, fusing low-level and high-level information in the channel dimension, which is more helpful for obtaining boundary information [7]. As the latest version of the DeepLab series proposed by Google, DeepLabV3+ employs an encoder–decoder architecture and an atrous spatial pyramid pooling module to obtain multi-scale spatial information and integrate low-level features into high-level semantic information [8]. DANet combines a channel attention mechanism and a spatial attention mechanism to enhance the representation of the foreground and make the network focus more on the target pixels [9]. Given the excellent performance of semantic segmentation algorithms, many scholars have adapted existing models to urban building extraction. For instance, Xu et al. combined an attention mechanism module and a multi-scale nesting module to improve the U-Net algorithm [10]. Zhang et al. proposed the JointNet algorithm with atrous convolution to extract urban buildings and roads [11]. Ye et al. studied the semantic gap between features at different stages in semantic segmentation and re-weighted the attention mechanism to extract buildings [12]. To address the difficulty of recognizing building boundaries with semantic segmentation, Xia et al. proposed the Dense D-LinkNet architecture for precise building extraction [13].

Buildings in rural areas are generally scattered and disordered, but in some areas rural houses are closely connected. To calculate quantitative indicators such as the number and area of rural homesteads, a high-precision algorithm is required to extract rural homesteads from satellite images. Recently, semantic segmentation methods have been successfully applied to extracting and detecting rural buildings. Pan et al. employed U-Net to extract and recognize urban village buildings based on Worldview satellite images [14]. Ye et al. combined Dilated-Resnet and SE-Module with FCN to discriminate rural settlements using Gaofen-2 images [15]. Sun et al. used a two-stage CNN model to detect rural buildings [16]. Li et al. focused on the problem of distinguishing old and new rural buildings and improved the instance segmentation algorithm Mask-RCNN in combination with the threshold mask method to recognize old and new buildings in rural areas [17].

Despite satisfactory performance in the extraction of buildings in urban areas [17,18], the biggest challenge in accurately calculating the relevant indicators of rural homesteads is refining the extraction of closely connected rural buildings. There are many types of features in rural areas, and some homesteads resemble farmlands, roads, waters, and other background categories in color, brightness, and texture. This greatly interferes with the extraction of foreground targets. A potential solution is to build an effective semantic segmentation model with strong feature extraction and scene understanding capabilities, so that the relationship between foreground and background can be better discriminated by aggregating contextual information, which reduces the interference of non-foreground factors with foreground targets. In addition, since most homesteads are closely adjacent with little spacing, it is difficult to extract their boundaries, which biases homestead statistics. The most desirable approach is therefore to extract and optimize the homestead boundary in a second pass to refine the initial segmentation results. Benefitting from the development of deep learning technologies, edge detection methods based on deep learning have begun to emerge. For instance, HED [19] is an end-to-end edge detection network based on VGG-16 [20], which is characterized by multi-scale feature extraction, multi-level deep supervision, and fusion. DecoupleSegNet [21] decouples the high-frequency edge part and the low-frequency body part of an image and supervises the body and the edge separately, explicitly sampling different parts to optimize the boundary. CED [22] was proposed to solve the problem of insufficiently crisp edge extraction and can generate a thinner edge map than HED. Therefore, there is an urgent need to build a precise and intelligent rural homestead recognition system using a multi-task joint framework.

Previous research has proven that multi-task architecture is an effective method for improving learning efficiency, prediction accuracy, and generalization for computer vision tasks [21,23,24,25]. In this study, a multi-task learning architecture (named MBNet) is proposed with the purpose of obtaining detailed information and high-level semantic information from feature maps and constraining the consistency of predicted binary map points on the boundary with ground truth. Specifically, the network is composed of a detail branch, a semantic branch, and a boundary branch. The detail branch maintains high-resolution parallelism, which is used to extract the low-level detail information of the feature map and interact with the semantic branch. A mixed-scale spatial attention module is added at the top of the semantic branch to obtain contextual weighted information, which makes the network pay more attention to the foreground targets. A boundary branch is designed on the detail branch to extract and optimize the boundary of the extraction target. In addition, a multi-task joint loss function that includes the weighted binary cross-entropy loss and Dice loss is designed to generate accurate semantic masks and clear boundary predictions. It should be noted that, inspired by PointRend [26], a novel point-based refinement module (named PTPM) is proposed, which uses boundary information to refine semantic information, enabling multi-task interaction.
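To make the idea of a joint loss built from weighted binary cross-entropy and Dice terms more concrete, the following is a minimal pure-Python sketch on flattened probability lists. It is only an illustration under stated assumptions: the function names, the `pos_weight` and `bnd_weight` parameters, and the choice to apply both terms to both the segmentation and boundary outputs are hypothetical and are not taken from the paper.

```python
import math


def weighted_bce(probs, targets, pos_weight=1.0, eps=1e-7):
    """Mean binary cross-entropy with an extra weight on foreground pixels."""
    total = 0.0
    for p, t in zip(probs, targets):
        p = min(max(p, eps), 1.0 - eps)  # clamp to avoid log(0)
        total += -(pos_weight * t * math.log(p) + (1 - t) * math.log(1.0 - p))
    return total / len(probs)


def dice_loss(probs, targets, smooth=1.0):
    """One minus the soft Dice coefficient."""
    intersection = sum(p * t for p, t in zip(probs, targets))
    denominator = sum(probs) + sum(targets)
    return 1.0 - (2.0 * intersection + smooth) / (denominator + smooth)


def joint_loss(seg_probs, seg_gt, bnd_probs, bnd_gt,
               pos_weight=1.0, bnd_weight=1.0):
    """Sum of segmentation and boundary losses (hypothetical weighting)."""
    seg = weighted_bce(seg_probs, seg_gt, pos_weight) + dice_loss(seg_probs, seg_gt)
    bnd = weighted_bce(bnd_probs, bnd_gt, pos_weight) + dice_loss(bnd_probs, bnd_gt)
    return seg + bnd_weight * bnd
```

The Dice term is less sensitive to the foreground/background imbalance than cross-entropy alone, which is one common reason for combining the two; the relative weights would need to be tuned for a real dataset.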

3. Results

In this section, we describe the method of processing the dataset in experiments and then provide experimental parameter settings and quantitative evaluation methods. Finally, we compare the quantitative evaluation results of the proposed method with those of other state-of-the-art methods and analyze the reasons leading to these results.

3.1. Dataset

The dataset in this study covers the whole of Deqing County, and the remote sensing images contain rich landform types, including mountainous, hilly, and plain landforms. The distribution and characteristics of homesteads vary greatly across landforms, so the data are divided into separate datasets by landform for analysis and comparison. In addition, suburban homesteads differ from the above: they are larger, relatively regular, and closely arranged, so they are added as a separate dataset.

The original remote sensing images are labeled with LabelMe to generate masks. To train the boundary branch, we generate the boundary ground truth through the distance map transform method [47]. Specifically, on the binarized segmentation mask, we calculate the Euclidean distance between each pixel and the nearest background point (pixel value 0) and then set a threshold; points whose distance is smaller than the threshold are considered boundary points. Here, we set the threshold to be

.
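The boundary-label generation described above can be sketched in a few lines. This is a brute-force, pure-Python illustration intended for small masks only; the function name `boundary_from_mask` is hypothetical, and the `threshold` argument is left to the caller because the value used in the paper is not stated in this text.

```python
import math


def boundary_from_mask(mask, threshold):
    """Mark foreground pixels whose distance to the nearest background
    pixel (value 0) is smaller than `threshold` as boundary pixels."""
    height, width = len(mask), len(mask[0])
    background = [(r, c) for r in range(height) for c in range(width)
                  if mask[r][c] == 0]
    boundary = [[0] * width for _ in range(height)]
    for r in range(height):
        for c in range(width):
            if mask[r][c] == 0 or not background:
                continue  # background pixels are never boundary points
            nearest = min(math.hypot(r - br, c - bc) for br, bc in background)
            if nearest < threshold:
                boundary[r][c] = 1
    return boundary


# A 3 x 3 building in a 5 x 5 tile: only its outer ring is within 1.5 px
# of the background, so the centre pixel is not a boundary point.
tile = [[0, 0, 0, 0, 0],
        [0, 1, 1, 1, 0],
        [0, 1, 1, 1, 0],
        [0, 1, 1, 1, 0],
        [0, 0, 0, 0, 0]]
ring = boundary_from_mask(tile, threshold=1.5)
```

For full-size tiles, a library distance transform such as `scipy.ndimage.distance_transform_edt` would be a far more efficient way to obtain the same distances.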

Since the GPU memory cannot accommodate large-sized remote sensing images and labels, we crop the image to 512 × 512 pixels. Considering that there are data imbalance and multi-scale problems in the dataset, and in order to expand the dataset, the cropping strategy is divided into two steps:

(1) Starting from the upper left corner of the image, the image is cropped with a sliding window whose initial stride is 256 pixels. If the ratio of background pixels to total pixels in the cropped area is ≤ 0.92, the stride is halved; otherwise, the stride is multiplied by 0.9 and rounded up.

(2) The image is then cropped randomly; a random crop is kept if its ratio of background pixels to total pixels is ≤ 0.9 and discarded otherwise.
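The two cropping rules above can be expressed as small helper functions. This is a sketch under assumptions: the function names are hypothetical, the stride adjustment is applied to the base stride of 256 rather than cumulatively (the text does not say which), and masks are plain nested lists in which 0 marks background.

```python
import math


def background_ratio(mask_patch):
    """Fraction of pixels in the patch that are background (value 0)."""
    total = sum(len(row) for row in mask_patch)
    background = sum(1 for row in mask_patch for value in row if value == 0)
    return background / total


def next_stride(ratio, base_stride=256, bg_threshold=0.92):
    """Step 1: halve the stride over homestead-rich areas, otherwise
    use 0.9 times the stride, rounded up."""
    if ratio <= bg_threshold:
        return base_stride // 2
    return math.ceil(base_stride * 0.9)


def keep_random_crop(mask_patch, max_background=0.9):
    """Step 2: keep a random crop only if it is not almost all background."""
    return background_ratio(mask_patch) <= max_background
```

The effect of the first rule is that regions containing homesteads are sampled with more overlap, which oversamples the foreground class and partly offsets the imbalance mentioned above.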

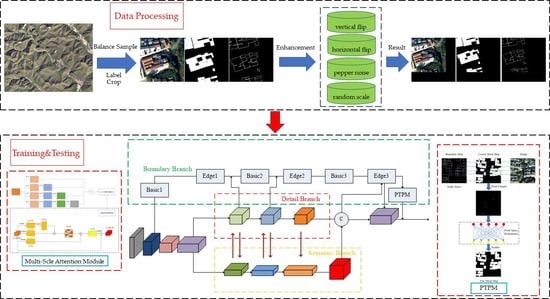

As shown in Figure 5, each cropped image is augmented with a certain probability during training, using random scaling, random horizontal and vertical flipping, random salt-and-pepper noise, random color jittering, etc.
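One practical detail of such augmentation is that geometric transforms must be applied to the image and its mask together, while photometric noise should touch the image only. The sketch below illustrates this with flipping and salt-and-pepper noise on single-channel nested lists. The probabilities, the noise amount, and all function names are hypothetical, since the text does not give the values used.

```python
import random


def hflip(grid):
    """Mirror a 2D grid left to right."""
    return [row[::-1] for row in grid]


def vflip(grid):
    """Mirror a 2D grid top to bottom."""
    return grid[::-1]


def salt_and_pepper(image, amount, rng):
    """Set roughly `amount` of the pixels to 0 (pepper) or 255 (salt)."""
    noisy = [row[:] for row in image]
    for r, row in enumerate(noisy):
        for c in range(len(row)):
            u = rng.random()
            if u < amount / 2:
                noisy[r][c] = 0
            elif u < amount:
                noisy[r][c] = 255
    return noisy


def augment(image, mask, rng, p_flip=0.5, p_noise=0.5, noise_amount=0.02):
    """Apply flips to image and mask jointly; add noise to the image only."""
    if rng.random() < p_flip:
        image, mask = hflip(image), hflip(mask)
    if rng.random() < p_flip:
        image, mask = vflip(image), vflip(mask)
    if rng.random() < p_noise:
        image = salt_and_pepper(image, noise_amount, rng)
    return image, mask
```

Passing a seeded `random.Random` instance as `rng` keeps the augmentation reproducible across runs.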

3.2. Experimental Setting

All of the experiments in this paper were implemented based on the PyTorch framework. The network was initialized with He_normal [48] instead of using pretrained weights. We chose the SGD algorithm with momentum as the optimizer and set the momentum to 0.9 and the initial learning rate to $1 \times 10^{-2}$. The learning rate decay follows the rule

$$lr = base\_lr \times \left(1 - \frac{cur\_iters}{max\_iters}\right)^{power}$$

where base_lr is the learning rate of the last update, cur_iters is the current number of steps, max_iters is the total number of training steps, and the value of power is set to 0.9.
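The quantities named above (base_lr, cur_iters, max_iters, and power) match the widely used polynomial ("poly") decay policy, which the following sketch implements. It assumes that standard form and treats `base_lr` as the rate the decay factor is applied to; the value 0.01 in the example is illustrative.

```python
def poly_lr(base_lr, cur_iters, max_iters, power=0.9):
    """Polynomial decay: base_lr * (1 - cur_iters / max_iters) ** power."""
    return base_lr * (1.0 - cur_iters / max_iters) ** power


# Learning rate at the start, midpoint, and end of a 1000-step run.
schedule = [poly_lr(0.01, step, 1000) for step in (0, 500, 1000)]
```

With a power below 1, the rate stays comparatively high for most of training and falls quickly only near the end.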

All experiments were processed on a Linux server with 128 GB RAM and 6 × 16 GB Tesla P100 GPUs. The batch size was 4 on each GPU, and the batch size for each training was 16.

3.3. Evaluation Metric

To evaluate the performance of the proposed method accurately, we selected five evaluation metrics commonly used in the field of semantic segmentation, namely OA, precision, recall, F1-score, and IoU. F1-score is also used to evaluate the quality of the boundary. Their definitions are as follows:

$$OA = \frac{TP + TN}{TP + FP + FN + TN}$$

$$precision = \frac{TP}{TP + FP}$$

$$recall = \frac{TP}{TP + FN}$$

$$F1\text{-}score = \frac{2 \times precision \times recall}{precision + recall}$$

$$IoU = \frac{TP}{TP + FP + FN}$$

where TP (true positive) denotes the correctly identified homestead pixels, FP (false positive) denotes background pixels incorrectly predicted as homestead, FN (false negative) denotes homestead pixels incorrectly predicted as background, and TN (true negative) denotes the correctly identified background pixels.
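The five metrics can be computed directly from the four pixel counts using their standard definitions. The function name and the zero-division handling below are choices made for this sketch, and the counts in the example are invented purely for illustration.

```python
def segmentation_metrics(tp, fp, fn, tn):
    """Return OA, precision, recall, F1-score and IoU from pixel counts."""
    oa = (tp + tn) / (tp + fp + fn + tn)
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    if precision + recall:
        f1 = 2 * precision * recall / (precision + recall)
    else:
        f1 = 0.0
    iou = tp / (tp + fp + fn) if (tp + fp + fn) else 0.0
    return {"OA": oa, "precision": precision, "recall": recall,
            "F1": f1, "IoU": iou}


# Illustrative counts for a 1000-pixel tile (not results from the paper).
scores = segmentation_metrics(tp=80, fp=20, fn=20, tn=880)
```

In this example OA is 0.96 while IoU is only about 0.67: when background dominates, OA is inflated by the many true negatives, which is why IoU and F1-score are the more informative figures for sparse targets such as homesteads.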

3.4. Comparisons and Analysis

To verify the performance of MBNet, we contrasted the performance and also completed ablation experiments on the dataset. First, we compared the performance of MBNet with several state-of-the-art (SOTA) algorithms. Then, we performed boundary branch ablation experiments to compare the performance of the network with and without the boundary branch. In addition, we conducted refined loss ablation experiments to compare the performance of the network under different losses.

In this study, we compared MBNet with six SOTA algorithms, namely FCN-ResNet [6], UNet-ResNet [7], DeepLabV3+ [8], HRNetV2 [49], MAP-Net [50], and DMBC-Net [51]. The first four are general SOTA models in the field of computer vision, and the latter two are the latest building semantic segmentation models for remote sensing images. Considering the fairness of the comparison, the backbones of the models we choose to compare are almost all based on the ResNet paradigm. As the pioneering work of semantic segmentation, FCN has been widely applied in many aspects. U-Net is a typical encoding–decoding structure model that integrates the underlying information and is more friendly to boundary processing. DeepLabV3+ is the latest version of the DeepLab series, using ResNet-50 as the backbone, in which the ASPP structure can fully learn multi-scale information. HRNetV2 is a recent SOTA model in the field of convolutional neural networks; it learns multi-scale semantic information while maintaining resolution. MAP-Net learns spatially localized preserved multi-scale features through multiple parallel paths and uses an attention module to optimize multi-scale fusion. DMBC-Net learns semantic information and boundary information through multi-task learning and uses boundary information to optimize the segmentation effect.

For a fair comparison, we adopted the same initial learning rate, number of training steps, optimizer, and other hyperparameter setting strategies during training. In addition, we also analyze the results at the end of this section.

3.4.1. Comparison with SOTA Methods on Mountain Landforms

As shown in Table 1, the proposed method achieves the best results of all methods. All quantitative evaluation indicators of MBNet surpass those of the other methods, and the visual effect of its segmentation is also the best. Compared with HRNetV2, which is the best general algorithm in the computer vision field, MBNet is 1.66%, 1.61%, and 1.37% higher in IoU, recall, and F1-score, respectively. Compared with DMBC-Net, which is specially designed for building extraction, MBNet is 0.89%, 1.15%, and 0.60% higher in IoU, recall, and F1-score, respectively.

Figure 6, Figure 7 and Figure 8 show some example results of extracting rural homesteads on mountainous landforms by different methods. The extraction of homesteads on mountainous landforms is simpler than on other landforms, and thus HRNetV2, MAP-Net, and DMBC-Net extract homesteads well, but their results have some defects at the boundaries. Although HRNetV2 always maintains high resolution, it lacks fine processing of boundaries, so some complex boundaries cannot be correctly identified. Although MAP-Net and DMBC-Net use average pooling and larger atrous rates to extract contextual information, detailed homestead information is easily ignored, which destroys the continuity of texture structure and geometric information. This is more evident in DeepLabV3+, resulting in more FNs. In contrast, the MBNet extraction results have more correct and complete boundaries. The interaction between the detail branch and the semantic branch of MBNet makes the high-resolution low-level feature maps contain rich semantic information. At the same time, the boundary branch extracts fine boundaries from the detail branch, which is rich in semantic information, to improve the boundary quality of the segmentation results. Since FCN and U-Net lack the ability to aggregate multi-scale semantic information from context and have a weak semantic understanding of images, some shadows and roads similar in color and texture to homesteads are misidentified as homesteads, which leads to more FPs.

3.4.2. Comparison with SOTA Methods on Plain Landforms

Table 2 shows the quantitative evaluation results of rural homestead extraction on plain landforms. Due to the complexity of the plain landform scene, the variety of ground objects, and the diversity of homestead texture structures, homestead extraction on plain landforms is more difficult than on other landform types; nevertheless, MBNet is still the best in terms of visual effects and in quantitative evaluations. Compared with HRNetV2 and DMBC-Net, MBNet achieves higher IoU and F1-score.

Figure 9, Figure 10 and Figure 11 show some example results of extracting rural homesteads on plain landforms by different methods. Plain landforms contain a large number of non-homestead objects whose spectrum is similar to that of homesteads, including shadows of buildings, farmland, and roads. These background objects can easily be misidentified as homesteads. In addition, the buildings have a complex texture structure and are closely arranged, so two adjacent homesteads can easily be identified as the same object. Because FCN and U-Net cannot aggregate contextual multi-scale information, these misleading background objects are easily marked as homesteads, so a large number of FPs appear in the results. DeepLabV3+ ignores many details of homesteads due to its large atrous rate. DMBC-Net suffers from the same problem, but its boundary constraints improve the homestead extraction. The ability of HRNet to extract and fuse multi-scale information is insufficient, and some background context is easily identified as homesteads. Furthermore, due to the multi-scale average pooling in MAP-Net, the details and small objects at the boundary are blurred. By comparison, the proposed MBNet addresses these issues using multiple branches. The mixed-scale spatial attention module of the semantic branch aggregates the multi-scale information of the context and weights the background and objects to highlight the more important foreground objects. The detail branch and the boundary branch are used to extract the refined semantic boundary of the homestead, which helps improve the segmentation results.

3.4.3. Comparison with SOTA Methods on Hilly Landforms

Table 3 shows the quantitative evaluation results of rural homestead extraction on hilly landforms. The hilly landform scene is similar to the plain area, and there are many types of homestead texture structures. However, the remote sensing images are not as clear as those of plain landforms due to clouds and fog. This creates a new challenge for extracting homesteads. In the quantitative evaluation of the various methods, MAP-Net and MBNet are the least affected by cloud disturbance, and MBNet has the best quantitative evaluation result. Compared with HRNetV2, MBNet is 1.37% and 1.02% higher in IoU and F1-score, respectively. Furthermore, compared with MAP-Net, MBNet is 0.72% and 0.24% higher in IoU and F1-score, respectively.

Figure 12, Figure 13 and Figure 14 show some example results of extracting rural homesteads on hilly landforms by different methods. Although the hilly landform is similar to the plain landform scene, the overall evaluation result is inferior to that of the plain landform due to the presence of clouds and fog in the images of some hilly landforms. This helps evaluate the robustness of the proposed algorithm in different complex scenarios. As can be seen from Figure 12 and Table 3, the segmentation results of FCN and U-Net are greatly affected by the clouds and fog, and their IoU values drop significantly. This can be attributed to the lack of multi-scale aggregation modules, without which the semantic information of images cannot be fully understood. MAP-Net, however, is not affected, and its IoU value instead increases. This is because of the channel attention squeeze module in the network. Specifically, this module weights the foreground objects in the channel dimension and is insensitive to changes in the color channels of the image. In comparison, the mixed-scale spatial attention module in the proposed MBNet applies its weighting in the spatial dimension. When the pixel information under the clouds and fog cannot be understood, the semantic information can be inferred from surrounding and long-range pixels, which reduces the influence of clouds and fog on the image. The limited influence of clouds and fog on its results shows that the proposed model has strong robustness. The precise boundary results predicted by the detail branch and the boundary branch improve the segmentation effect, making the prediction results of MBNet the best.

3.4.4. Comparison with SOTA Methods on Suburban Landforms

Table 4 shows the quantitative evaluation results of rural homestead extraction on suburban landforms. Compared with the above three landforms, homesteads in the suburbs are distinct, and they are more like buildings in urban areas, which are regular in shape, densely arranged, and numerous. However, there are many types of homesteads, and there are a large number of background objects with a similar spectrum. All quantitative evaluation metrics of the proposed MBNet are the highest on the test set, which is consistent with the conclusion reached for the other three landforms. Compared with HRNetV2, MBNet is 2.37%, 1.06%, and 1.29% higher in IoU, recall, and F1-score, respectively. Compared with DMBC-Net, MBNet is 0.89%, 0.46%, and 0.61% higher in IoU, recall, and F1-score, respectively.

Figure 15, Figure 16 and Figure 17 show some example results of extracting rural homesteads on suburban images by different methods. Homesteads occupy a large proportion of the suburban images, which provides more targets for training the model. However, there are many types of homesteads in the suburbs, and there are a large number of non-homestead background objects with a similar spectrum and texture to homesteads, such as the ceiling in Figure 15, which can easily be misidentified as homesteads. Moreover, the houses have various contours and irregular shapes, so the predicted boundaries are not accurate enough. For the FCN and U-Net methods, the lack of contextual multi-scale modules makes them weak in understanding image semantic information, and they are likely to identify shadows and ceilings as homesteads. The atrous rate design of DeepLabV3+ makes it easily ignore many details, resulting in the omission of many homestead objects. The multi-scale aggregation ability of HRNet is also insufficient, and it lacks a deep understanding of semantic information, which produces many misidentifications. Owing to its mixed-scale spatial attention module, the receptive field of MBNet covers the whole image, and the network pays more attention to the foreground area. Therefore, its understanding of context semantics is better than that of MAP-Net and DMBC-Net, and there are fewer misidentifications. Compared with other models, the boundary improvement module of MBNet has an obvious effect on the extraction of homesteads in the suburbs. Figure 15 and Figure 16 demonstrate the boundary extraction ability of MBNet, and it can be seen that the boundary of MBNet is the most complete with the highest accuracy.

5. Conclusions

In this paper, we propose a multi-task learning framework, MBNet, with a detail branch, a semantic branch, and a boundary branch for rural homestead extraction from high-resolution remote sensing images. Usually, semantic segmentation tasks need to learn high-level semantic features through continuous down-sampling, but this will reduce image resolution and destroy image details. In order to keep the detail information, this paper designs a detail branch, which maintains the resolution of the image after down-sampling by 4 times. At the same time, for obtaining multi-scale spatial aggregation information, this paper designs a semantic branch and adds a mixed-scale spatial attention module at the end of the semantic branch to extract multi-scale information and enhance information representation. In addition, this paper also designs a boundary branch to maintain the consistency of the boundary and ground truth. This branch obtains boundary information and optimizes the boundary information of the segmentation map through the point-to-point module (PTPM). We conducted experiments on UAV images in Deqing County, Zhejiang Province, to verify the effectiveness of the proposed method. Deqing County is divided into mountainous landforms, plain landforms, and hilly landforms. Considering the differences in homesteads, we added the suburbs in the densely populated areas as one of the experimental objects, and the complex terrain environment as well as the dense rural buildings can help verify the applicability and robustness of the proposed method. The experimental results show that our method achieves better results than other advanced methods and can extract the homesteads accurately from the remote sensing images, which lays a foundation for the quantitative research of homesteads. Our research has verified the effectiveness of MBNet in experiments. MBNet can be applied to end-to-end extraction of dense rural homesteads on satellite or UAV images. 
Furthermore, MBNet can also be used to extract dense and difficult-to-recognize features in the image. In future work, we will consider the instance objects of rural homesteads from the perspectives of self-supervision and weak supervision, and we will describe each instance object with the method of instance segmentation.