Extraction of Broad-Leaved Tree Crown Based on UAV Visible Images and OBIA-RF Model: A Case Study for Chinese Olive Trees

Abstract

1. Introduction

2. Materials and Methods

2.1. Study Area

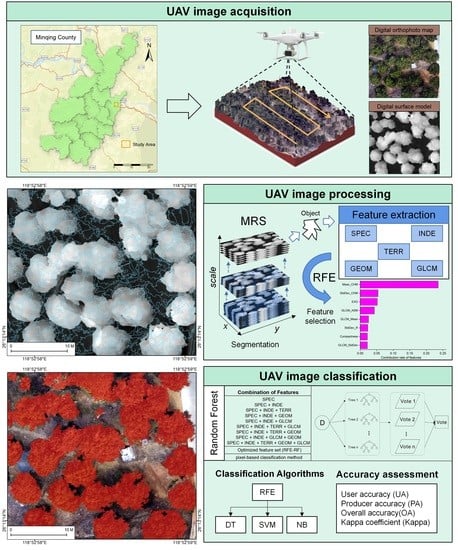

2.2. UAV Image Acquisition and Pre-Processing

2.2.1. Data Acquisition

2.2.2. UAV Image Segmentation

2.3. Crown Feature Extraction and Selection

2.3.1. Crown Elevation Information Extraction

2.3.2. Crown Segmentation Object Feature Extraction

- (1) SPEC: Eight subclass features derived from the three bands of the visible image, i.e., the mean of the red band (Mean_R), mean of the green band (Mean_G), mean of the blue band (Mean_B), red band standard deviation (StdDev_R), green band standard deviation (StdDev_G), blue band standard deviation (StdDev_B), band maximum difference (Max_diff), and brightness.
- (2) INDE: Seven vegetation index subclass features (Table 2), i.e., excess green index (EXG), excess red index (EXR), modified green-red vegetation index (MGRVI), red-green-blue vegetation index (RGBVI), normalized green-blue difference index (NGBDI), normalized green-red difference index (NGRDI), and excess green minus excess red (EXGR).
- (3) GLCM: Twelve subclass features derived from seven texture factors (Table 3). They comprise the mean, entropy, angular second moment (ASM), and contrast of the gray-level co-occurrence matrix (GLCM) and of the gray-level difference vector (GLDV), as well as the correlation, dissimilarity, and homogeneity of the GLCM, all computed in all directions.
- (4) GEOM: Seventeen subclass features, i.e., border index, border length, area, volume, width, length, length/width, compactness, shape index, density, roundness, asymmetry, number of pixels, ellipse fitting, rectangle fitting, radius of largest enclosed ellipse, and radius of smallest enclosing ellipse.
- (5) TERR: Two subclass features, i.e., the mean (Mean_CHM) and standard deviation (StdDev_CHM) of the relative crown elevation (presented in Equation (2)).
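As a rough illustration of how the eight SPEC features could be computed for a single segmented object, the sketch below works on a plain list of (R, G, B) pixel values. The function name `spec_features` is hypothetical, and two definitions are assumptions rather than statements from the paper: brightness is taken as the mean of the three band means, and Max_diff as the largest absolute difference between band means divided by brightness (a convention common in OBIA software). The population standard deviation is also an assumption.

```python
from itertools import combinations
from statistics import mean, pstdev


def spec_features(pixels):
    """Return the eight SPEC features for one object.

    pixels: list of (R, G, B) tuples for the pixels inside the object.
    Brightness and Max_diff follow assumed definitions (see lead-in).
    """
    reds, greens, blues = zip(*pixels)
    bands = {"R": reds, "G": greens, "B": blues}

    features = {}
    for name, values in bands.items():
        features[f"Mean_{name}"] = mean(values)
        # Population standard deviation is an assumption.
        features[f"StdDev_{name}"] = pstdev(values)

    band_means = [features[f"Mean_{name}"] for name in bands]
    brightness = mean(band_means)
    largest_gap = max(abs(a - b) for a, b in combinations(band_means, 2))

    features["Brightness"] = brightness
    # Guard against an all-black object to avoid division by zero.
    features["Max_diff"] = largest_gap / brightness if brightness else 0.0
    return features


# Toy object made of two pixels (made-up values, not data from the study).
example = spec_features([(100, 150, 50), (110, 170, 70)])
```

The same per-object mean and standard deviation pattern would apply to the TERR features if the pixel values were relative crown elevations instead of band values.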

| Vegetation Index | Full Name | Equation | Reference |
|---|---|---|---|
| EXG | excess green index | 2G − R − B | [46] |
| EXR | excess red index | 1.4R − G | [47] |
| MGRVI | modified green-red vegetation index | (G² − R²)/(G² + R²) | [48] |
| RGBVI | red-green-blue vegetation index | (G² − B × R)/(G² + B × R) | [49] |
| NGBDI | normalized green-blue difference index | (G − B)/(G + B) | [50] |
| NGRDI | normalized green-red difference index | (G − R)/(G + R) | [51] |
| EXGR | excess green minus excess red | 2G − R − B − (1.4R − G) | [52] |
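The seven index formulas in the table translate directly into code. The following is a minimal sketch, assuming R, G, and B are per-object (or per-pixel) band values on a common scale; whether the study used raw digital numbers or normalized values is not stated here, so the inputs in the example are arbitrary. The names `vegetation_indices` and `_ratio` are illustrative only.

```python
def _ratio(numerator, denominator):
    """Normalized-difference helper that returns 0.0 when the denominator is zero."""
    return numerator / denominator if denominator else 0.0


def vegetation_indices(r, g, b):
    """Compute the seven visible-band vegetation indices listed in the table."""
    exg = 2 * g - r - b          # excess green index
    exr = 1.4 * r - g            # excess red index
    return {
        "EXG": exg,
        "EXR": exr,
        "MGRVI": _ratio(g ** 2 - r ** 2, g ** 2 + r ** 2),
        "RGBVI": _ratio(g ** 2 - b * r, g ** 2 + b * r),
        "NGBDI": _ratio(g - b, g + b),
        "NGRDI": _ratio(g - r, g + r),
        "EXGR": exg - exr,       # excess green minus excess red
    }


# Arbitrary example values for a green-dominated object.
indices = vegetation_indices(r=0.2, g=0.5, b=0.1)
```

For a green-dominated object such as this one, all indices except EXR come out positive, which is the behaviour that makes them useful for separating vegetation from other ground objects.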

2.4. RF Parameter Configuration and Feature Selection

2.4.1. RF Model Introduction

2.4.2. RF Parameter Configuration

2.4.3. Feature Optimization

2.5. Research Scheme Design

- (1) To study how different feature combinations affect Chinese olive tree crown (COTC) extraction, the spectral features of the visible images were first taken as scheme S1. Second, because vegetation indices are the most commonly used features and can effectively distinguish between different vegetation types, as well as between vegetation and other ground object types [60], the spectral and constructed vegetation index features were used together as scheme S2. The remaining three feature types were then added to S2 in every possible combination, giving nine experimental schemes in total (S1–S9).
- (2) To study how different algorithms perform on COTC extraction and to assess the extraction accuracy after feature dimensionality reduction, the top eight features ranked by importance were selected as the features of the selected samples. Four commonly used machine learning (ML) classifiers, namely random forest (RF), decision tree (DT), support vector machine (SVM), and naive Bayes (NB), were then trained on them to construct schemes S10–S13.
- (3) To compare single pixel-based (PB) classification with the multi-feature fusion OBIA method for COTC extraction, we compared the OBIA and traditional PB classification methods using the RF algorithm, which gave scheme S14.
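The construction of S1–S9 can be checked with a short enumeration. The sketch below assumes the group sizes given in Section 2.3.2 (SPEC 8, INDE 7, GLCM 12, GEOM 17, TERR 2) and builds the schemes as SPEC alone plus SPEC + INDE extended by every subset of the three remaining groups. The generated order does not necessarily follow the paper's S-numbering, and the helper names are hypothetical.

```python
from itertools import combinations

# Number of subclass features per feature group (from Section 2.3.2).
GROUP_SIZES = {"SPEC": 8, "INDE": 7, "GLCM": 12, "GEOM": 17, "TERR": 2}

BASE = ("SPEC", "INDE")             # common to every scheme except S1
OPTIONAL = ("TERR", "GEOM", "GLCM")  # added in all possible combinations


def build_schemes():
    """Return the feature-group tuples for the nine OBIA-RF schemes."""
    schemes = [("SPEC",)]  # spectral features only
    for size in range(len(OPTIONAL) + 1):
        for extra in combinations(OPTIONAL, size):
            schemes.append(BASE + extra)
    return schemes


def feature_count(groups):
    """Total number of features for one combination of groups."""
    return sum(GROUP_SIZES[group] for group in groups)


schemes = build_schemes()
counts = sorted(feature_count(groups) for groups in schemes)
```

The sorted counts are 8, 15, 17, 27, 29, 32, 34, 44, and 46, which agrees with the numbers of features listed for S1–S9 in the scheme table.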

2.6. Selecting Sample Point and Evaluating Accuracy

2.6.1. Selecting Study Area Sample

2.6.2. Accuracy Evaluation Index

3. Results

3.1. Accuracy Evaluation of Different Feature Combination Schemes

3.2. Accuracy Evaluation of Different Classification Algorithms

- (1) The RF algorithm can also obtain high-precision classification results when working with a feature set after dimensionality reduction.
- (2) The OBIA method can describe the attributes of ground objects more accurately, and its extraction accuracy is higher, because an image object carries richer shape, texture, and spatial-relationship information than a single pixel.

4. Discussion

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

References

- Watanabe, Y.; Hinata, K.; Qu, L.; Kitaoka, S.; Watanabe, M.; Kitao, M.; Koike, T. Effects of Elevated CO2 and Nitrogen Loading on the Defensive Traits of Three Successional Deciduous Broad-Leaved Tree Seedlings. Forests 2021, 12, 939.
- Yao, L.; Wang, Z.; Zhan, X.; Wu, W.; Jiang, B.; Jiao, J.; Yuan, W.; Zhu, J.; Ding, Y.; Li, T.; et al. Assessment of Species Composition and Community Structure of the Suburban Forest in Hangzhou, Eastern China. Sustainability 2022, 14, 4304.
- Xu, R.; Wang, L.; Zhang, J.; Zhou, J.; Cheng, S.; Tigabu, M.; Ma, X.; Wu, P.; Li, M. Growth Rate and Leaf Functional Traits of Four Broad-Leaved Species Underplanted in Chinese Fir Plantations with Different Tree Density Levels. Forests 2022, 13, 308.
- Siljeg, A.; Panda, L.; Domazetovic, F.; Maric, I.; Gasparovic, M.; Borisov, M.; Milosevic, R. Comparative Assessment of Pixel and Object-Based Approaches for Mapping of Olive Tree Crowns Based on UAV Multispectral Imagery. Remote Sens. 2022, 14, 757.
- Ye, Z.; Wei, J.; Lin, Y.; Guo, Q.; Zhang, J.; Zhang, H.; Deng, H.; Yang, K. Extraction of Olive Crown Based on UAV Visible Images and the U2-Net Deep Learning Model. Remote Sens. 2022, 14, 1523.
- Tian, Y.; Wu, B.; Su, X.; Qi, Y.; Chen, Y.; Min, Z. A Crown Contour Envelope Model of Chinese Fir Based on Random Forest and Mathematical Modeling. Forests 2020, 12, 48.
- Majasalmi, T.; Rautiainen, M. The impact of tree canopy structure on understory variation in a boreal forest. For. Ecol. Manag. 2020, 466, 118100.
- Yurtseven, H.; Akgul, M.; Coban, S.; Gulci, S. Determination and accuracy analysis of individual tree crown parameters using UAV based imagery and OBIA techniques. Measurement 2019, 145, 651–664.
- Ferreira, M.P.; Almeida, D.R.A.d.; Papa, D.d.A.; Minervino, J.B.S.; Veras, H.F.P.; Formighieri, A.; Santos, C.A.N.; Ferreira, M.A.D.; Figueiredo, E.O.; Ferreira, E.J.L. Individual tree detection and species classification of Amazonian palms using UAV images and deep learning. For. Ecol. Manag. 2020, 475, 118397.
- Dong, T.; Zhang, X.; Ding, Z.; Fan, J. Multi-layered tree crown extraction from LiDAR data using graph-based segmentation. Comput. Electron. Agric. 2020, 170, 105213.
- Pu, R.; Landry, S. A comparative analysis of high spatial resolution IKONOS and WorldView-2 imagery for mapping urban tree species. Remote Sens. Environ. 2012, 124, 516–533.
- Jiang, Z.; Wen, Y.; Zhang, G.; Wu, X. Water Information Extraction Based on Multi-Model RF Algorithm and Sentinel-2 Image Data. Sustainability 2022, 14, 3797.
- Gyawali, A.; Aalto, M.; Peuhkurinen, J.; Villikka, M.; Ranta, T. Comparison of Individual Tree Height Estimated from LiDAR and Digital Aerial Photogrammetry in Young Forests. Sustainability 2022, 14, 3720.
- Wang, Y.; Xu, X.; Huang, L.; Yang, G.; Fan, L.; Wei, P.; Chen, G. An Improved CASA Model for Estimating Winter Wheat Yield from Remote Sensing Images. Remote Sens. 2019, 11, 1088.
- Liu, W.; Liu, S.; Zhao, J.; Duan, J.; Chen, Z.; Guo, R.; Chu, J.; Zhang, J.; Li, X.; Liu, J. A remote sensing data management system for sea area usage management in China. Ocean. Coast. Manag. 2018, 152, 163–174.
- Miraki, M.; Sohrabi, H.; Fatehi, P.; Kneubuehler, M. Individual tree crown delineation from high-resolution UAV images in broadleaf forest. Ecol. Inform. 2021, 61, 101207.
- Kolanuvada, S.R.; Ilango, K.K. Automatic Extraction of Tree Crown for the Estimation of Biomass from UAV Imagery Using Neural Networks. J. Indian Soc. Remote Sens. 2020, 49, 651–658.
- Sarabia, R.; Aquino, A.; Ponce, J.M.; López, G.; Andújar, J.M. Automated Identification of Crop Tree Crowns from UAV Multispectral Imagery by Means of Morphological Image Analysis. Remote Sens. 2020, 12, 748.
- Sarron, J.; Malézieux, É.; Sané, C.; Faye, É. Mango Yield Mapping at the Orchard Scale Based on Tree Structure and Land Cover Assessed by UAV. Remote Sens. 2018, 10, 1900.
- Ahmadi, P.; Mansor, S.; Farjad, B.; Ghaderpour, E. Unmanned Aerial Vehicle (UAV)-Based Remote Sensing for Early-Stage Detection of Ganoderma. Remote Sens. 2022, 14, 1239.
- Sharma, P.; Leigh, L.; Chang, J.; Maimaitijiang, M.; Caffe, M. Above-Ground Biomass Estimation in Oats Using UAV Remote Sensing and Machine Learning. Sensors 2022, 22, 601.
- Aeberli, A.; Johansen, K.; Robson, A.; Lamb, D.W.; Phinn, S. Detection of Banana Plants Using Multi-Temporal Multispectral UAV Imagery. Remote Sens. 2021, 13, 2123.
- Han, R.; Liu, P.; Wang, G.; Zhang, H.; Wu, X.; Hong, S.-H. Advantage of Combining OBIA and Classifier Ensemble Method for Very High-Resolution Satellite Imagery Classification. J. Sens. 2020, 2020, 1–15.
- Hossain, M.D.; Chen, D. Segmentation for Object-Based Image Analysis (OBIA): A review of algorithms and challenges from remote sensing perspective. ISPRS J. Photogramm. Remote Sens. 2019, 150, 115–134.
- Nuijten, R.J.G.; Kooistra, L.; De Deyn, G.B. Using Unmanned Aerial Systems (UAS) and Object-Based Image Analysis (OBIA) for Measuring Plant-Soil Feedback Effects on Crop Productivity. Drones 2019, 3, 54.
- Belgiu, M.; Csillik, O. Sentinel-2 cropland mapping using pixel-based and object-based time-weighted dynamic time warping analysis. Remote Sens. Environ. 2018, 204, 509–523.
- Zheng, X.; Wang, Y.; Gan, M.; Zhang, J.; Teng, L.; Wang, K.; Shen, Z.; Zhang, L. Discrimination of Settlement and Industrial Area Using Landscape Metrics in Rural Region. Remote Sens. 2016, 8, 845.
- Fu, G.; Zhao, H.; Li, C.; Shi, L. Segmentation for High-Resolution Optical Remote Sensing Imagery Using Improved Quadtree and Region Adjacency Graph Technique. Remote Sens. 2013, 5, 3259–3279.
- Wang, F.; Yang, W.; Ren, J. Adaptive scale selection in multiscale segmentation based on the segmented object complexity of GF-2 satellite image. Arab. J. Geosci. 2019, 12, 699.
- Phiri, D.; Morgenroth, J.; Xu, C.; Hermosilla, T. Effects of pre-processing methods on Landsat OLI-8 land cover classification using OBIA and random forests classifier. Int. J. Appl. Earth Obs. Geoinf. 2018, 73, 170–178.
- Luciano, A.C.d.S.; Picoli, M.C.A.; Rocha, J.V.; Duft, D.G.; Lamparelli, R.A.C.; Leal, M.R.L.V.; Le Maire, G. A generalized space-time OBIA classification scheme to map sugarcane areas at regional scale, using Landsat images time-series and the random forest algorithm. Int. J. Appl. Earth Obs. Geoinf. 2019, 80, 127–136.
- Wang, X.; Wang, Y.; Zhou, C.; Yin, L.; Feng, X. Urban forest monitoring based on multiple features at the single tree scale by UAV. Urban For. Urban Green. 2021, 58, 126958.
- Zollini, S.; Alicandro, M.; Dominici, D.; Quaresima, R.; Giallonardo, M. UAV Photogrammetry for Concrete Bridge Inspection Using Object-Based Image Analysis (OBIA). Remote Sens. 2020, 12, 3180.
- Marcial-Pablo, M.d.J.; Ontiveros-Capurata, R.E.; Jiménez-Jiménez, S.I.; Ojeda-Bustamante, W. Maize Crop Coefficient Estimation Based on Spectral Vegetation Indices and Vegetation Cover Fraction Derived from UAV-Based Multispectral Images. Agronomy 2021, 11, 668.
- Deur, M.; Gašparović, M.; Balenović, I. An Evaluation of Pixel- and Object-Based Tree Species Classification in Mixed Deciduous Forests Using Pansharpened Very High Spatial Resolution Satellite Imagery. Remote Sens. 2021, 13, 1868.
- Rashid, H.; Yang, K.; Zeng, A.; Ju, S.; Rashid, A.; Guo, F.; Lan, S. The Influence of Landcover and Climate Change on the Hydrology of the Minjiang River Watershed. Water 2021, 13, 3554.
- Lagogiannis, S.; Dimitriou, E. Discharge Estimation with the Use of Unmanned Aerial Vehicles (UAVs) and Hydraulic Methods in Shallow Rivers. Water 2021, 13, 2808.
- Lu, H.; Liu, C.; Li, N.; Fu, X.; Li, L. Optimal segmentation scale selection and evaluation of cultivated land objects based on high-resolution remote sensing images with spectral and texture features. Environ. Sci. Pollut. Res. 2021, 28, 27067–27083.
- Rana, M.; Kharel, S. Feature Extraction for Urban and Agricultural Domains Using Ecognition Developer. Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci. 2019, XLII-3/W6, 609–615.
- Zarco-Tejada, P.J.; Diaz-Varela, R.; Angileri, V.; Loudjani, P. Tree height quantification using very high resolution imagery acquired from an unmanned aerial vehicle (UAV) and automatic 3D photo-reconstruction methods. Eur. J. Agron. 2014, 55, 89–99.
- Zhang, H.; Wang, Y.; Shang, J.; Liu, M.; Li, Q. Investigating the impact of classification features and classifiers on crop mapping performance in heterogeneous agricultural landscapes. Int. J. Appl. Earth Obs. Geoinf. 2021, 102, 102388.
- Liu, J.; Qin, Q.; Li, J.; Li, Y. Rural Road Extraction from High-Resolution Remote Sensing Images Based on Geometric Feature Inference. ISPRS Int. J. Geo-Inf. 2017, 6, 314.
- Shao, G.; Han, W.; Zhang, H.; Liu, S.; Wang, Y.; Zhang, L.; Cui, X. Mapping maize crop coefficient Kc using random forest algorithm based on leaf area index and UAV-based multispectral vegetation indices. Agric. Water Manag. 2021, 252, 106906.
- Martins, P.H.A.; Baio, F.H.R.; Martins, T.H.D.; Fontoura, J.V.P.F.; Teodoro, L.P.R.; Silva Junior, C.A.d.; Teodoro, P.E. Estimating spray application rates in cotton using multispectral vegetation indices obtained using an unmanned aerial vehicle. Crop Prot. 2021, 140, 105407.
- Olmos-Trujillo, E.; González-Trinidad, J.; Júnez-Ferreira, H.; Pacheco-Guerrero, A.; Bautista-Capetillo, C.; Avila-Sandoval, C.; Galván-Tejada, E. Spatio-Temporal Response of Vegetation Indices to Rainfall and Temperature in A Semiarid Region. Sustainability 2020, 12, 1939.
- Sánchez-Sastre, L.F.; Alte da Veiga, N.M.S.; Ruiz-Potosme, N.M.; Carrión-Prieto, P.; Marcos-Robles, J.L.; Navas-Gracia, L.M.; Martín-Ramos, P. Assessment of RGB Vegetation Indices to Estimate Chlorophyll Content in Sugar Beet Leaves in the Final Cultivation Stage. AgriEngineering 2020, 2, 128–149.
- Qiu, Z.; Ma, F.; Li, Z.; Xu, X.; Ge, H.; Du, C. Estimation of nitrogen nutrition index in rice from UAV RGB images coupled with machine learning algorithms. Comput. Electron. Agric. 2021, 189, 106421.
- Bendig, J.; Yu, K.; Aasen, H.; Bolten, A.; Bennertz, S.; Broscheit, J.; Gnyp, M.L.; Bareth, G. Combining UAV-based plant height from crop surface models, visible, and near infrared vegetation indices for biomass monitoring in barley. Int. J. Appl. Earth Obs. Geoinf. 2015, 39, 79–87.
- Possoch, M.; Bieker, S.; Hoffmeister, D.; Bolten, A.; Schellberg, J.; Bareth, G. Multi-Temporal Crop Surface Models Combined with the Rgb Vegetation Index from Uav-Based Images for Forage Monitoring in Grassland. ISPRS-Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci. 2016, XLI-B1, 991–998.
- Du, M.; Noguchi, N. Monitoring of Wheat Growth Status and Mapping of Wheat Yield’s within-Field Spatial Variations Using Color Images Acquired from UAV-camera System. Remote Sens. 2017, 9, 289.
- Kim, E.-J.; Nam, S.-H.; Koo, J.-W.; Hwang, T.-M. Hybrid Approach of Unmanned Aerial Vehicle and Unmanned Surface Vehicle for Assessment of Chlorophyll-a Imagery Using Spectral Indices in Stream, South Korea. Water 2021, 13, 1930.
- Yang, B.; Wang, M.; Sha, Z.; Wang, B.; Chen, J.; Yao, X.; Cheng, T.; Cao, W.; Zhu, Y. Evaluation of Aboveground Nitrogen Content of Winter Wheat Using Digital Imagery of Unmanned Aerial Vehicles. Sensors 2019, 19, 4416.
- Gurunathan, A.; Krishnan, B. A Hybrid CNN-GLCM Classifier For Detection And Grade Classification Of Brain Tumor. Brain Imaging Behav. 2022, 16, 1410–1427.
- Karhula, S.S.; Finnila, M.A.J.; Rytky, S.J.O.; Cooper, D.M.; Thevenot, J.; Valkealahti, M.; Pritzker, K.P.H.; Haapea, M.; Joukainen, A.; Lehenkari, P.; et al. Quantifying Subresolution 3D Morphology of Bone with Clinical Computed Tomography. Ann. Biomed. Eng. 2020, 48, 595–605.
- Shafi, U.; Mumtaz, R.; Haq, I.U.; Hafeez, M.; Iqbal, N.; Shaukat, A.; Zaidi, S.M.H.; Mahmood, Z. Wheat Yellow Rust Disease Infection Type Classification Using Texture Features. Sensors 2021, 22, 146.
- Pantic, I.; Dacic, S.; Brkic, P.; Lavrnja, I.; Jovanovic, T.; Pantic, S.; Pekovic, S. Discriminatory ability of fractal and grey level co-occurrence matrix methods in structural analysis of hippocampus layers. J. Theor. Biol. 2015, 370, 151–156.
- Zhao, W.; Duan, S.-B. Reconstruction of daytime land surface temperatures under cloud-covered conditions using integrated MODIS/Terra land products and MSG geostationary satellite data. Remote Sens. Environ. 2020, 247, 111931.
- Belgiu, M.; Drăguţ, L. Random forest in remote sensing: A review of applications and future directions. ISPRS J. Photogramm. Remote Sens. 2016, 114, 24–31.
- Zhou, X.; Wen, H.; Zhang, Y.; Xu, J.; Zhang, W. Landslide susceptibility mapping using hybrid random forest with GeoDetector and RFE for factor optimization. Geosci. Front. 2021, 12, 101211.
- Ayala-Izurieta, J.; Márquez, C.; García, V.; Recalde-Moreno, C.; Rodríguez-Llerena, M.; Damián-Carrión, D. Land Cover Classification in an Ecuadorian Mountain Geosystem Using a Random Forest Classifier, Spectral Vegetation Indices, and Ancillary Geographic Data. Geosciences 2017, 7, 34.
- Zhang, X.; Xu, J.; Chen, Y.; Xu, K.; Wang, D. Coastal Wetland Classification with GF-3 Polarimetric SAR Imagery by Using Object-Oriented Random Forest Algorithm. Sensors 2021, 21, 3395.
- Bogale Aynalem, S. Flood Plain Mapping and Hazard Assessment of Muga River by Using ArcGIS and HEC-RAS Model Upper Blue Nile Ethiopia. Landsc. Archit. Reg. Plan. 2020, 5, 74.
- Stehman, S.V. Model-assisted estimation as a unifying framework for estimating the area of land cover and land-cover change from remote sensing. Remote Sens. Environ. 2009, 113, 2455–2462.
- Sun, Y.; Li, X.; Shi, H.; Cui, J.; Wang, W.; Ma, H.; Chen, N. Modeling salinized wasteland using remote sensing with the integration of decision tree and multiple validation approaches in Hetao irrigation district of China. Catena 2022, 209, 105854.
- Wang, Z.; Xu, L.; Ji, Q.; Song, W.; Wang, L. A Multi-Level Non-Uniform Spatial Sampling Method for Accuracy Assessment of Remote Sensing Image Classification Results. Appl. Sci. 2020, 10, 5568.
- Guo, Z.; Shi, Y.; Huang, F.; Fan, X.; Huang, J. Landslide susceptibility zonation method based on C5.0 decision tree and K-means cluster algorithms to improve the efficiency of risk management. Geosci. Front. 2021, 12, 101249.
- Zhou, C.; Yang, G.; Liang, D.; Hu, J.; Yang, H.; Yue, J.; Yan, R.; Han, L.; Huang, L.; Xu, L. Recognizing black point in wheat kernels and determining its extent using multidimensional feature extraction and a naive Bayes classifier. Comput. Electron. Agric. 2021, 180, 105919.
- Farhadi, H.; Najafzadeh, M. Flood Risk Mapping by Remote Sensing Data and Random Forest Technique. Water 2021, 13, 3115.
- Kim, J.; Grunwald, S. Assessment of Carbon Stocks in the Topsoil Using Random Forest and Remote Sensing Images. J. Environ. Qual. 2016, 45, 1910–1918.
- Bian, C.; Shi, H.; Wu, S.; Zhang, K.; Wei, M.; Zhao, Y.; Sun, Y.; Zhuang, H.; Zhang, X.; Chen, S. Prediction of Field-Scale Wheat Yield Using Machine Learning Method and Multi-Spectral UAV Data. Remote Sens. 2022, 14, 1474.
- Chauhan, N.K.; Singh, K. Performance Assessment of Machine Learning Classifiers Using Selective Feature Approaches for Cervical Cancer Detection. Wirel. Pers. Commun. 2022.
- Appiah-Badu, N.K.A.; Missah, Y.M.; Amekudzi, L.K.; Ussiph, N.; Frimpong, T.; Ahene, E. Rainfall Prediction Using Machine Learning Algorithms for the Various Ecological Zones of Ghana. IEEE Access 2022, 10, 5069–5082.
- Adugna, T.; Xu, W.; Fan, J. Comparison of Random Forest and Support Vector Machine Classifiers for Regional Land Cover Mapping Using Coarse Resolution FY-3C Images. Remote Sens. 2022, 14, 574.
- Liu, R.; Li, L.; Pirasteh, S.; Lai, Z.; Yang, X.; Shahabi, H. The performance quality of LR, SVM, and RF for earthquake-induced landslides susceptibility mapping incorporating remote sensing imagery. Arab. J. Geosci. 2021, 14, 1–15.
- Ma, L.; Fu, T.; Blaschke, T.; Li, M.; Tiede, D.; Zhou, Z.; Ma, X.; Chen, D. Evaluation of Feature Selection Methods for Object-Based Land Cover Mapping of Unmanned Aerial Vehicle Imagery Using Random Forest and Support Vector Machine Classifiers. ISPRS Int. J. Geo-Inf. 2017, 6, 51.
- Wijesingha, J.; Astor, T.; Schulze-Brüninghoff, D.; Wachendorf, M. Mapping Invasive Lupinus polyphyllus Lindl. in Semi-natural Grasslands Using Object-Based Image Analysis of UAV-borne Images. PFG–J. Photogramm. Remote Sens. Geoinf. Sci. 2020, 88, 391–406.
- Li, Z.; Ding, J.; Zhang, H.; Feng, Y. Classifying Individual Shrub Species in UAV Images—A Case Study of the Gobi Region of Northwest China. Remote Sens. 2021, 13, 4995.

| Parameter | Value |
|---|---|
| UAV Model | Phantom 4 Multi-spectral |
| Wheelbase | 350 mm |
| Weight | 1487 g |
| Max Ascent Speed | 6 m/s (Sport Mode), 5 m/s (Manual Mode) |
| Max Flight Speed | 72 km/h (Sport Mode), 50 km/h (Position Mode) |
| Max Flight Time | 27 min |
| Hover Accuracy | Vertical: ±0.5 m, Horizontal: ±1.5 m |
| Positioning Module | GPS + BeiDou + Galileo |

| Classification Method | Scheme | Classification Algorithm | Combination of Features | Number of Features |
|---|---|---|---|---|
| Object-based image analysis | S1 | Random Forest | SPEC | 8 |
| Object-based image analysis | S2 | Random Forest | SPEC + INDE | 15 |
| Object-based image analysis | S3 | Random Forest | SPEC + INDE + TERR | 17 |
| Object-based image analysis | S4 | Random Forest | SPEC + INDE + GEOM | 32 |
| Object-based image analysis | S5 | Random Forest | SPEC + INDE + GLCM | 27 |
| Object-based image analysis | S6 | Random Forest | SPEC + INDE + TERR + GLCM | 29 |
| Object-based image analysis | S7 | Random Forest | SPEC + INDE + TERR + GEOM | 34 |
| Object-based image analysis | S8 | Random Forest | SPEC + INDE + GLCM + GEOM | 44 |
| Object-based image analysis | S9 | Random Forest | SPEC + INDE + TERR + GEOM + GLCM | 46 |
| Object-based image analysis | S10 | Random Forest | Mean_CHM, SD_CHM, EXG, Angular second moment, Mean_GLCM, SD_R, Compactness, SD_GLCM | 8 |
| Object-based image analysis | S11 | Decision Tree | Mean_CHM, SD_CHM, EXG, Angular second moment, Mean_GLCM, SD_R, Compactness, SD_GLCM | 8 |
| Object-based image analysis | S12 | Support Vector Machine | Mean_CHM, SD_CHM, EXG, Angular second moment, Mean_GLCM, SD_R, Compactness, SD_GLCM | 8 |
| Object-based image analysis | S13 | Naive Bayesian | Mean_CHM, SD_CHM, EXG, Angular second moment, Mean_GLCM, SD_R, Compactness, SD_GLCM | 8 |
| Pixel-based classification | S14 | Random Forest | SPEC, EXG, CHM | 5 |

| (a) Class value | Other | COTC | Total | UA/% |
|---|---|---|---|---|
| Other | 218 | 11 | 229 | 95.20 |
| COTC | 3 | 168 | 171 | 98.25 |
| Total | 221 | 179 | 400 | |
| PA/% | 98.64 | 93.85 | | |

| (b) Class value | Other | COTC | Total | UA/% |
|---|---|---|---|---|
| Other | 219 | 29 | 248 | 88.31 |
| COTC | 2 | 150 | 152 | 98.68 |
| Total | 221 | 179 | 400 | |
| PA/% | 99.10 | 83.80 | | |

| (c) Class value | Other | COTC | Total | UA/% |
|---|---|---|---|---|
| Other | 209 | 18 | 227 | 92.07 |
| COTC | 12 | 161 | 173 | 93.06 |
| Total | 221 | 179 | 400 | |
| PA/% | 94.57 | 89.94 | | |

| (d) Class value | Other | COTC | Total | UA/% |
|---|---|---|---|---|
| Other | 218 | 17 | 235 | 92.77 |
| COTC | 3 | 162 | 165 | 98.18 |
| Total | 221 | 179 | 400 | |
| PA/% | 98.64 | 90.50 | | |

| (e) Class value | Other | COTC | Total | UA/% |
|---|---|---|---|---|
| Other | 204 | 1 | 205 | 99.51 |
| COTC | 17 | 178 | 195 | 91.28 |
| Total | 221 | 179 | 400 | |
| PA/% | 92.31 | 99.44 | | |
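The accuracy indices in these confusion matrices can be reproduced with a few lines of arithmetic. The sketch below is a generic implementation of overall accuracy (OA), the kappa coefficient, user's accuracy (UA), and producer's accuracy (PA), not the authors' own code. It assumes rows hold the classified class and columns the reference class, which is the orientation implied by the UA column and PA row. The function name `accuracy_metrics` is hypothetical.

```python
def accuracy_metrics(matrix):
    """Compute OA, kappa, UA, and PA from a square confusion matrix.

    matrix[i][j] = number of samples classified as class i whose
    reference class is j (rows: classified, columns: reference).
    """
    size = len(matrix)
    total = sum(sum(row) for row in matrix)
    correct = sum(matrix[i][i] for i in range(size))
    row_totals = [sum(row) for row in matrix]
    col_totals = [sum(matrix[i][j] for i in range(size)) for j in range(size)]

    observed = correct / total
    # Chance agreement expected from the row and column marginals.
    expected = sum(r * c for r, c in zip(row_totals, col_totals)) / total ** 2

    return {
        "OA": observed,
        "kappa": (observed - expected) / (1 - expected),
        "UA": [matrix[i][i] / row_totals[i] for i in range(size)],
        "PA": [matrix[j][j] / col_totals[j] for j in range(size)],
    }


# Panel (a): class order is [Other, COTC].
panel_a = accuracy_metrics([[218, 11], [3, 168]])
```

For panel (a) this gives an overall accuracy of 96.50% and a kappa of about 0.93, with UA and PA values that round to those shown in the panel. These are the same overall accuracy and kappa reported for scheme S10.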

| Scheme | Classification Method | Classification Algorithm | Overall Accuracy/% | Kappa Coefficient | Time Used/s |
|---|---|---|---|---|---|
| S10 | Object-based image analysis | Random Forest | 96.50 | 0.93 | 358 |
| S11 | Object-based image analysis | Decision Tree | 92.25 | 0.84 | 362 |
| S12 | Object-based image analysis | Support Vector Machine | 92.50 | 0.85 | 761 |
| S13 | Object-based image analysis | Naive Bayesian | 95.00 | 0.90 | 351 |
| S14 | Pixel-based classification | Random Forest | 95.50 | 0.91 | 205 |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Yang, K.; Zhang, H.; Wang, F.; Lai, R. Extraction of Broad-Leaved Tree Crown Based on UAV Visible Images and OBIA-RF Model: A Case Study for Chinese Olive Trees. Remote Sens. 2022, 14, 2469. https://0-doi-org.brum.beds.ac.uk/10.3390/rs14102469
