Integration and Comparison Methods for Multitemporal Image-Based 2D Annotations in Linked 3D Building Documentation

Abstract

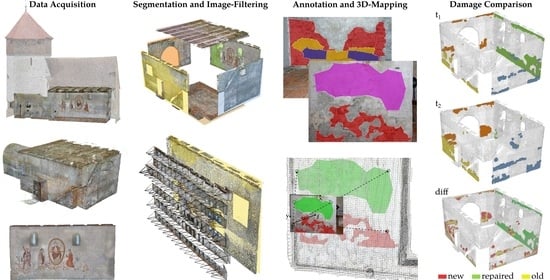

1. Introduction

2. Materials and Methods

2.1. Case Study

- Which point cloud section belongs to a specific building element?

- Which images contain parts of a specific building element?

- How are 2D annotations transferred to 3D geometries?

- Is a specific building element affected by annotations, and if so, by which ones?

- Which annotation was already part of a previously processed dataset?

- Where are the geometrical changes of the annotations compared to previous datasets located, and what are their dimensions?

- How much deterioration was detected on a specific building element based on this comparison?

2.2. Methodological Approach

2.3. Dataset

2.3.1. Images and Point Cloud

- A collection of 1881 images ( ) captured at a mean distance of ( for the wall painting);

- A sparse point cloud with from the photogrammetric reconstruction (approximately /);

- The estimated extrinsic parameters of the camera for each image (see Figure 3);

- A computed orthophoto of the wall painting with a size of approximately 36 and a resolution of / (see Figure 1).
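The estimated extrinsic parameters are what allow a 3D damage geometry to be related back to the images that show it. The following is a minimal sketch of that relation, assuming an undistorted pinhole camera with the convention X_cam = R·X + t. This convention is chosen here for illustration and is not necessarily the one used by the photogrammetric software. The camera record layout, the image ids, and the function names are hypothetical.

```python
from typing import Dict, List, Optional, Sequence, Tuple

Vec3 = Tuple[float, float, float]
# Hypothetical camera record: (R, t, f, cx, cy) with R a 3x3 row-major rotation.
Camera = Tuple[Sequence[Sequence[float]], Vec3, float, float, float]


def project_point(X: Vec3, R: Sequence[Sequence[float]], t: Vec3,
                  f: float, cx: float, cy: float) -> Optional[Tuple[float, float]]:
    """Project a world point to pixel coordinates with an undistorted pinhole model.

    Assumed convention: X_cam = R @ X + t, the camera looks along +z,
    u = f * x / z + cx and v = f * y / z + cy. Returns None behind the camera.
    """
    xc, yc, zc = (sum(R[i][j] * X[j] for j in range(3)) + t[i] for i in range(3))
    if zc <= 0:
        return None
    return (f * xc / zc + cx, f * yc / zc + cy)


def images_containing(points: List[Vec3], cameras: Dict[str, Camera],
                      width: int, height: int) -> List[str]:
    """Ids of images in which at least one of the points projects inside the frame.

    Occlusion is ignored; only the side of the camera and the image bounds are tested.
    """
    hits = []
    for image_id, (R, t, f, cx, cy) in cameras.items():
        for p in points:
            uv = project_point(p, R, t, f, cx, cy)
            if uv is not None and 0 <= uv[0] < width and 0 <= uv[1] < height:
                hits.append(image_id)
                break
    return hits
```

A real implementation would also have to handle lens distortion and occlusion by other building parts, which this sketch deliberately leaves out.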

2.3.2. Structured Segmentation

- Points within a user-defined distance threshold of 20 ;

- Images whose camera viewing direction forms an angle of no more than 20° with the facet normal.
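The two selection criteria can be sketched as simple geometric filters for a single planar facet. The plane representation (a point p0 and a normal n), the function names, and the assumption that facet normals point towards the cameras are illustrative choices. The distance threshold is given in the units of the point cloud and, like the angle, is passed in as a parameter.

```python
import math
from typing import Dict, List, Tuple

Vec3 = Tuple[float, float, float]


def _dot(a: Vec3, b: Vec3) -> float:
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]


def _norm(a: Vec3) -> float:
    return math.sqrt(_dot(a, a))


def segment_points(points: List[Vec3], p0: Vec3, n: Vec3, max_dist: float) -> List[Vec3]:
    """Keep points whose orthogonal distance to the facet plane (p0, n) is <= max_dist."""
    ln = _norm(n)
    return [p for p in points
            if abs(_dot((p[0] - p0[0], p[1] - p0[1], p[2] - p0[2]), n)) / ln <= max_dist]


def segment_images(view_dirs: Dict[str, Vec3], n: Vec3, max_angle_deg: float) -> List[str]:
    """Keep images whose viewing direction lies within max_angle_deg of the facet normal.

    Assumes the normal points towards the cameras, so the reversed viewing
    direction is compared with it.
    """
    selected = []
    for image_id, d in view_dirs.items():
        cos_a = -_dot(d, n) / (_norm(d) * _norm(n))
        angle = math.degrees(math.acos(max(-1.0, min(1.0, cos_a))))  # clamp rounding noise
        if angle <= max_angle_deg:
            selected.append(image_id)
    return selected
```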

2.3.3. Annotations

2.4. Methods

2.4.1. Mapping of 2D Annotations

1. Triangulation of the segmented part of the sparse point cloud to generate a target surface for the ray casting;

2. Projection of the vertices of each polygon or polyline onto this surface along the viewing direction (for polygons, followed by triangulation into a surface);

3. Storage of the resulting 3D information, including characteristic dimensions (e.g., crack length, discoloured area, or spalling volume), the bounding box, and the annotation semantics.
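The mapping steps can be sketched for a single annotation as follows. The ray–triangle test is the Möller–Trumbore algorithm, a common textbook technique chosen here for illustration rather than taken from the paper. The pinhole convention (X_cam = R·X + t, no distortion), the function names, and the dimension formulas are further assumptions. Specifically, the sketch uses polyline length for cracks and the vector-area formula for planar polygons in place of an explicit triangulation, and it does not cover spalling volume.

```python
import math
from typing import List, Optional, Sequence, Tuple

Vec3 = Tuple[float, float, float]
Triangle = Tuple[Vec3, Vec3, Vec3]


def sub(a: Vec3, b: Vec3) -> Vec3:
    return (a[0] - b[0], a[1] - b[1], a[2] - b[2])


def cross(a: Vec3, b: Vec3) -> Vec3:
    return (a[1] * b[2] - a[2] * b[1], a[2] * b[0] - a[0] * b[2], a[0] * b[1] - a[1] * b[0])


def dot(a: Vec3, b: Vec3) -> float:
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]


def pixel_ray(R: Sequence[Sequence[float]], t: Vec3, f: float, cx: float, cy: float,
              u: float, v: float) -> Tuple[Vec3, Vec3]:
    """Camera centre and world-space direction of the ray through pixel (u, v)."""
    Rt = [[R[j][i] for j in range(3)] for i in range(3)]  # transpose = inverse rotation
    centre = tuple(-sum(Rt[i][j] * t[j] for j in range(3)) for i in range(3))
    d_cam = (u - cx, v - cy, f)
    direction = tuple(sum(Rt[i][j] * d_cam[j] for j in range(3)) for i in range(3))
    return centre, direction


def ray_triangle(orig: Vec3, d: Vec3, tri: Triangle, eps: float = 1e-12) -> Optional[float]:
    """Möller–Trumbore intersection; returns the ray parameter of the hit or None."""
    v0, v1, v2 = tri
    e1, e2 = sub(v1, v0), sub(v2, v0)
    p = cross(d, e2)
    det = dot(e1, p)
    if abs(det) < eps:  # ray (nearly) parallel to the triangle plane
        return None
    inv = 1.0 / det
    s = sub(orig, v0)
    u = dot(s, p) * inv
    if u < 0.0 or u > 1.0:
        return None
    q = cross(s, e1)
    v = dot(d, q) * inv
    if v < 0.0 or u + v > 1.0:
        return None
    t = dot(e2, q) * inv
    return t if t > eps else None


def map_annotation(pixels, R, t, f, cx, cy, mesh: List[Triangle]) -> List[Optional[Vec3]]:
    """Cast a ray through every annotation vertex and keep the nearest surface hit."""
    mapped: List[Optional[Vec3]] = []
    for u, v in pixels:
        o, d = pixel_ray(R, t, f, cx, cy, u, v)
        hits = [h for h in (ray_triangle(o, d, tri) for tri in mesh) if h is not None]
        if not hits:
            mapped.append(None)  # vertex misses the triangulated target surface
            continue
        s = min(hits)
        mapped.append((o[0] + s * d[0], o[1] + s * d[1], o[2] + s * d[2]))
    return mapped


def polyline_length(pts: List[Vec3]) -> float:
    """Length of a mapped polyline, e.g. a crack."""
    return sum(math.dist(a, b) for a, b in zip(pts, pts[1:]))


def planar_polygon_area(pts: List[Vec3]) -> float:
    """Area of a simple planar 3D polygon from its vector area (3D shoelace formula)."""
    total = (0.0, 0.0, 0.0)
    for a, b in zip(pts, pts[1:] + pts[:1]):
        c = cross(a, b)
        total = (total[0] + c[0], total[1] + c[1], total[2] + c[2])
    return 0.5 * math.sqrt(dot(total, total))
```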

2.4.2. Assignment of Damages to Building Elements

- An octree depth, which is applied to each object and therefore adapts dv to the object dimensions;

- A target accuracy, used as a global dv valid for all geometries of the dataset, which must account for the registration error;

- A combined approach, in which the octree depth is defined together with a maximum dv to avoid overly large voxels for large objects.
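The three options for choosing dv, together with a voxel-overlap test that could use it, might be sketched as follows. Several details are assumptions made for illustration: a damage is assigned to every element that shares at least one voxel with it; both geometries are sampled as points and voxelized on a shared grid with a single dv; and neighbouring voxels are not considered. The element ids are hypothetical.

```python
import math
from typing import Dict, Iterable, List, Optional, Set, Tuple

Vec3 = Tuple[float, float, float]
Voxel = Tuple[int, int, int]


def voxel_size(extent: float, octree_depth: Optional[int] = None,
               global_dv: Optional[float] = None, max_dv: Optional[float] = None) -> float:
    """Voxel edge length dv for one object.

    octree_depth only     -> dv = extent / 2**depth, so dv grows with the object;
    global_dv only        -> one target accuracy for all geometries;
    octree_depth + max_dv -> octree-derived dv, capped to avoid overly large voxels.
    """
    if octree_depth is not None:
        dv = extent / 2 ** octree_depth
        return min(dv, max_dv) if max_dv is not None else dv
    if global_dv is not None:
        return global_dv
    raise ValueError("either octree_depth or global_dv must be given")


def voxelize(points: Iterable[Vec3], dv: float,
             origin: Vec3 = (0.0, 0.0, 0.0)) -> Set[Voxel]:
    """Integer indices of the grid cells (edge length dv) occupied by the points."""
    return {(math.floor((p[0] - origin[0]) / dv),
             math.floor((p[1] - origin[1]) / dv),
             math.floor((p[2] - origin[2]) / dv)) for p in points}


def assign_damage(damage_points: List[Vec3], elements: Dict[str, List[Vec3]],
                  dv: float) -> List[str]:
    """Ids of all elements that share at least one voxel with the damage."""
    damage_voxels = voxelize(damage_points, dv)
    return [eid for eid, pts in elements.items() if damage_voxels & voxelize(pts, dv)]
```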

2.4.3. Assignment of Damages to Images

2.4.4. Damage Comparison and Evaluation

- Damages that did not occur in the previous inspection and are therefore new to the dataset;

- Damages that no longer occur in the current inspection and therefore were either repaired during restoration works or need to be surveyed in detail;

- Damages that were separate regions in previous inspections but have fused into one connected damage in the current survey.
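One way to derive these categories, together with an ancestor count and a growth percentage like those reported in the results, is sketched below. Damages are represented as voxel sets on a shared grid, and a previous damage counts as an "ancestor" of a current one if their voxels overlap. The growth formula compares the current size with the summed size of all ancestors. Both definitions are assumptions for illustration, not the paper's confirmed definitions, and the damage ids are hypothetical.

```python
from typing import Dict, List, Set, Tuple

Voxel = Tuple[int, int, int]


def compare_inspections(prev: Dict[str, Set[Voxel]], curr: Dict[str, Set[Voxel]]):
    """Relate damages of two inspections through shared voxels.

    Returns the ancestors of every current damage plus the three categories:
    new (no ancestor), merged (two or more ancestors), and no longer present.
    """
    ancestors = {cid: [pid for pid, pv in prev.items() if cv & pv]
                 for cid, cv in curr.items()}
    linked = {pid for ids in ancestors.values() for pid in ids}
    new = [cid for cid, ids in ancestors.items() if not ids]
    merged = [cid for cid, ids in ancestors.items() if len(ids) > 1]
    vanished = [pid for pid in prev if pid not in linked]
    return ancestors, new, merged, vanished


def growth_percent(current_size: float, ancestor_sizes: List[float]) -> float:
    """Relative growth against the summed size (e.g. area) of all ancestors."""
    base = sum(ancestor_sizes)
    if base <= 0:
        raise ValueError("a damage without ancestors has no defined growth")
    return (current_size - base) / base * 100.0
```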

2.4.5. Integration with Building Information Models

3. Results

3.1. Enriched Building Elements

3.2. Damage Assignments

3.3. Derivation of Damage Progression

4. Discussion

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest


| Building Element | # of Damages | # of Damages | # of Damages |
|---|---|---|---|
| Wall 01 | 5 | 10 | 21 |
| Wall 02 | 5 | 14 | 14 |
| Wall 03 | 6 | 7 | 17 |
| Wall 04 | 7 | 7 | 8 |

| ID | Type | # of Images | # of Ancestors from | Growth [%] | # of Ancestors | Growth [%] |
|---|---|---|---|---|---|---|
| tnMdPf | discolouration | 80 | - | - | 5 | 23.5 |
| b8e3vm | missing plaster | 39 | - | - | 1 | 50.5 |
| FXy3KV | missing plaster | 37 | - | - | - | - |
| kk70r3 | missing plaster | 42 | - | - | 1 | 147.6 |
| kZuwv6 | missing plaster | 49 | - | - | 1 | 419.5 |
| lDGeVw | missing plaster | 36 | 1 | 259.1 | 1 | 33.7 |
| Mgg7YP | missing plaster | 57 | 1 | 227.9 | 2 | 143.8 |
| N7ZS4g | missing plaster | 40 | 1 | 51.8 | 1 | 72.8 |
| Q6RCFX | missing plaster | 74 | - | - | 1 | 116.2 |
| whq94c | missing plaster | 104 | 2 | 168.1 | 1 | 152.7 |
| yRCMdN | missing plaster | 36 | - | - | - | - |
| 0H1SWJ | missing plaster | 73 | - | - | - | - |
| 1fKgjk | missing plaster | 40 | - | - | - | - |
| 3AeWJN | missing plaster | 42 | - | - | - | - |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Taraben, J.; Morgenthal, G. Integration and Comparison Methods for Multitemporal Image-Based 2D Annotations in Linked 3D Building Documentation. Remote Sens. 2022, 14, 2286. https://doi.org/10.3390/rs14092286
