A Python-Based Open Source System for Geographic Object-Based Image Analysis (GEOBIA) Utilizing Raster Attribute Tables

Abstract

1. Introduction

2. Software Libraries

- GDAL; raster data model and input and output (I/O) of common image formats.

- RSGISLib; segmentation and attribution of objects.

- Raster I/O Simplification (RIOS); used to read, write and classify attributed objects.

- TuiView; used to view data and to provide a GUI for rule development.

- KEA image format; used to store image objects and associated attributes.

2.1. GDAL

2.2. RSGISLib

2.3. RIOS

2.4. TuiView

2.5. KEA Image Format

3. Typical Workflow

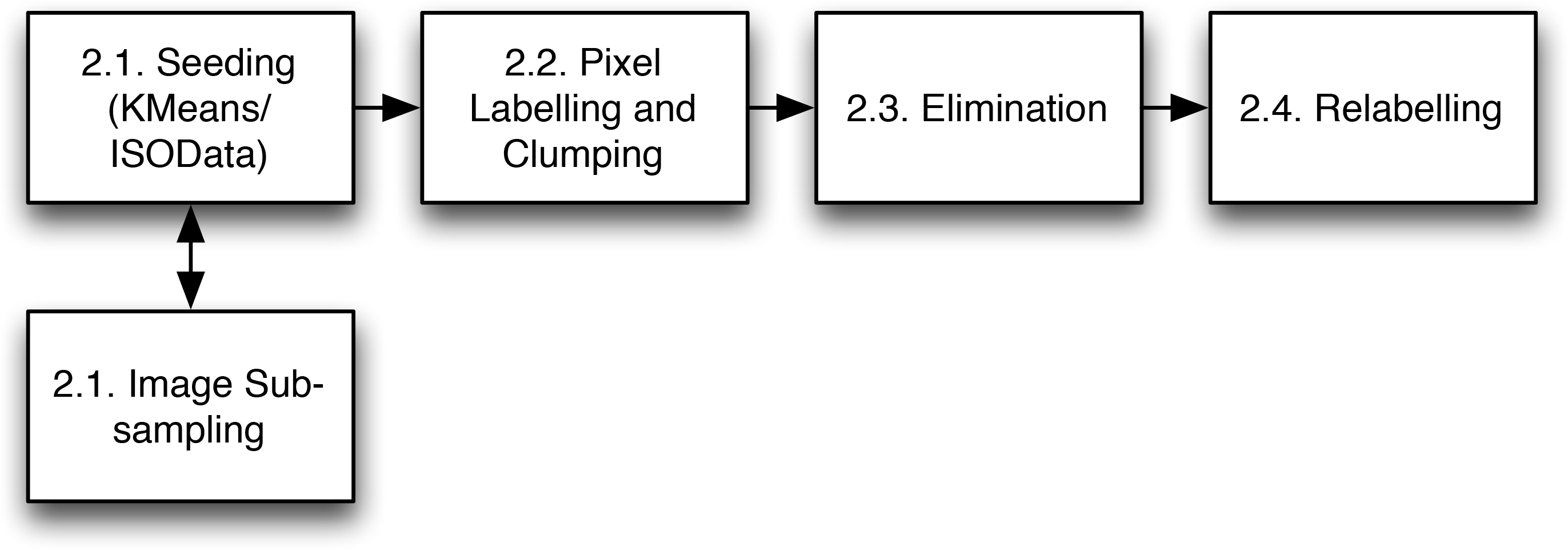

3.1. Segmentation

3.2. Attribute Table Creation

3.3. Rule-Based Classification

3.4. Supervised Classification

4. Comparison with Other Packages

4.1. Features Overview

4.2. Segmentation Comparison

5. Examples of Use

5.1. Change in Mangroves Extent

- (1) Segmentation, using data from all three years.

- (2) Rule-based classification of the 1996 data.

- (3) Identification of change in the 2007 and 2010 data, relative to the 1996 baseline.

5.1.1. Segmentation

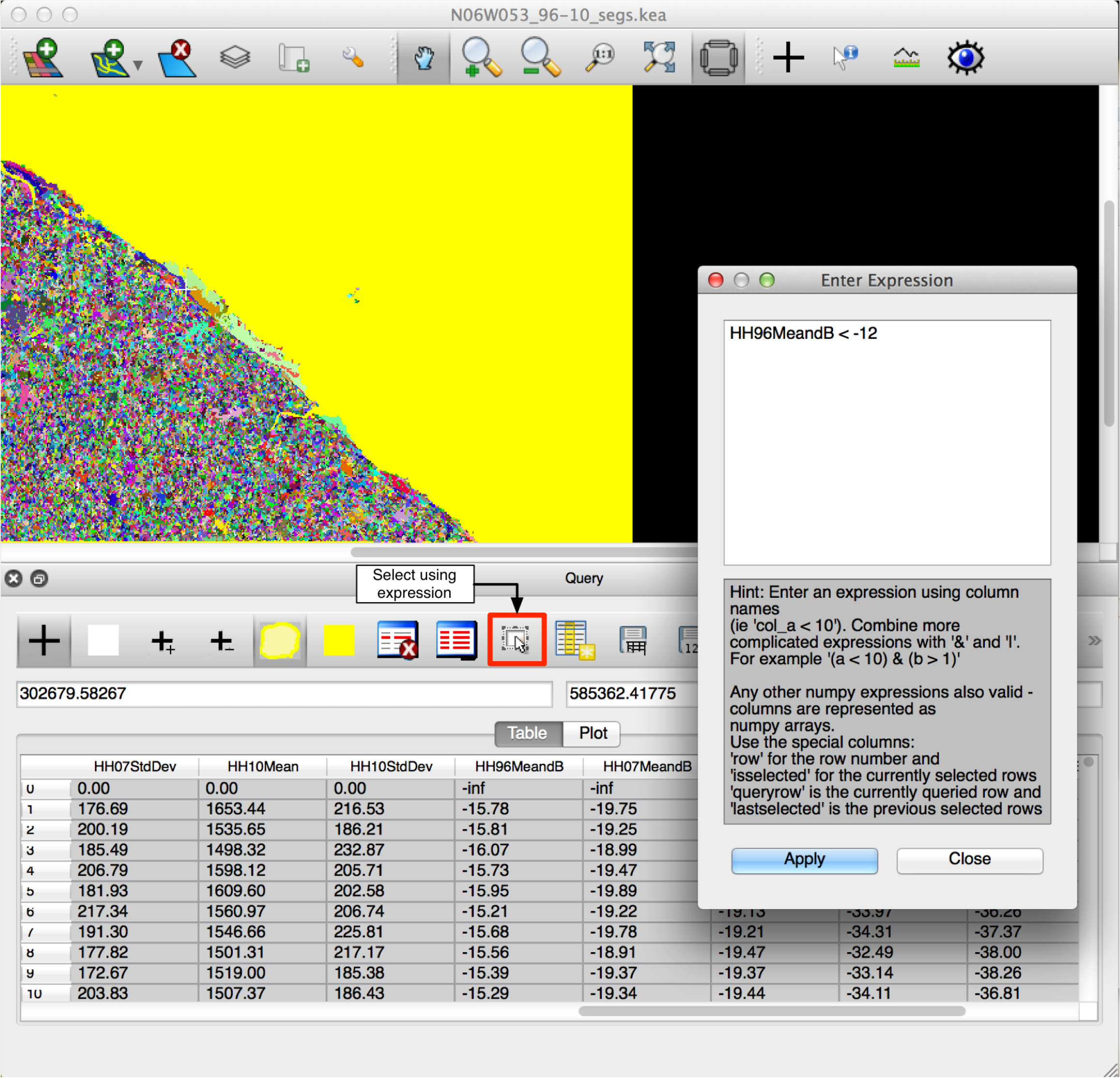

5.1.2. Rule-Based Classification

- (1) Populate objects with SAR pixel statistics, with backscatter expressed as linear power.

- (2) Convert the mean SAR backscatter of each object to dB.

- (3) Classify water using a threshold of < −12 dB, providing an initial water mask.

- (4) Calculate the proximity to regions classified as water, to provide context for the coastal region.

- (5) Classify the scene into broad categories:

  - Water (ocean); defined by selecting the largest connected water region.

  - Coastal strip; defined as objects within 3 km of the coast.

  - Other; remaining objects not within the water or coastal strip classes.

- (6) Classify within the “coastal strip” class to identify mangroves, using a backscatter threshold.
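Steps (2) to (6) can be sketched in plain Python on an in-memory attribute table. This is a minimal illustration under stated assumptions, not the RSGISLib/RIOS code used in the study: step (1) is assumed already done (each object carries its mean linear power), the table layout (object ID mapped to a dict of attributes), the `neighbours` adjacency map and the use of centroid distances in km to approximate proximity are all hypothetical, and `mangrove_db` is a placeholder because the text does not state the mangrove threshold. Backscatter is converted as dB = 10 · log10(power).

```python
import math
from collections import deque


def power_to_db(power):
    """Convert linear backscatter power to decibels: 10 * log10(power)."""
    return 10.0 * math.log10(power)


def largest_connected(members, neighbours):
    """Largest connected group of the object IDs in `members`, where
    `neighbours` maps each object ID to the set of adjacent object IDs."""
    unvisited = set(members)
    best = set()
    while unvisited:
        seed = unvisited.pop()
        component, queue = {seed}, deque([seed])
        while queue:  # breadth-first search restricted to `members`
            for nb in neighbours.get(queue.popleft(), ()):
                if nb in unvisited:
                    unvisited.discard(nb)
                    component.add(nb)
                    queue.append(nb)
        if len(component) > len(best):
            best = component
    return best


def classify(objects, neighbours, mangrove_db, water_db=-12.0, coast_km=3.0):
    """Label each object as water, mangrove, coastal strip or other.

    `objects` maps object ID -> {"mean_power": linear backscatter,
    "x_km": centroid x, "y_km": centroid y} (hypothetical layout).
    `mangrove_db` is a hypothetical threshold: coastal-strip objects
    brighter than it are labelled mangrove.
    """
    # (2) Mean backscatter of each object in dB.
    db = {oid: power_to_db(o["mean_power"]) for oid, o in objects.items()}
    # (3) Initial water mask.
    water = {oid for oid, value in db.items() if value < water_db}
    # (5) The ocean is the largest connected water region.
    ocean = largest_connected(water, neighbours)
    classes = {}
    for oid, o in objects.items():
        if oid in ocean:
            classes[oid] = "water"
            continue
        # (4) Proximity, approximated here as the centroid distance
        # to the nearest ocean object (an assumption of this sketch).
        dist = min((math.hypot(o["x_km"] - objects[w]["x_km"],
                               o["y_km"] - objects[w]["y_km"])
                    for w in ocean), default=math.inf)
        if dist <= coast_km:
            # (6) Threshold backscatter within the coastal strip.
            classes[oid] = "mangrove" if db[oid] > mangrove_db else "coastal strip"
        else:
            classes[oid] = "other"
    return classes
```

Calling `classify(objects, neighbours, mangrove_db=-10.0)` on a toy table returns one label per object ID; the -10 dB value is only a placeholder. Note that small water bodies not connected to the ocean are not labelled water in this sketch, which follows the "largest connected water region" rule in step (5).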

5.1.3. Change Detection

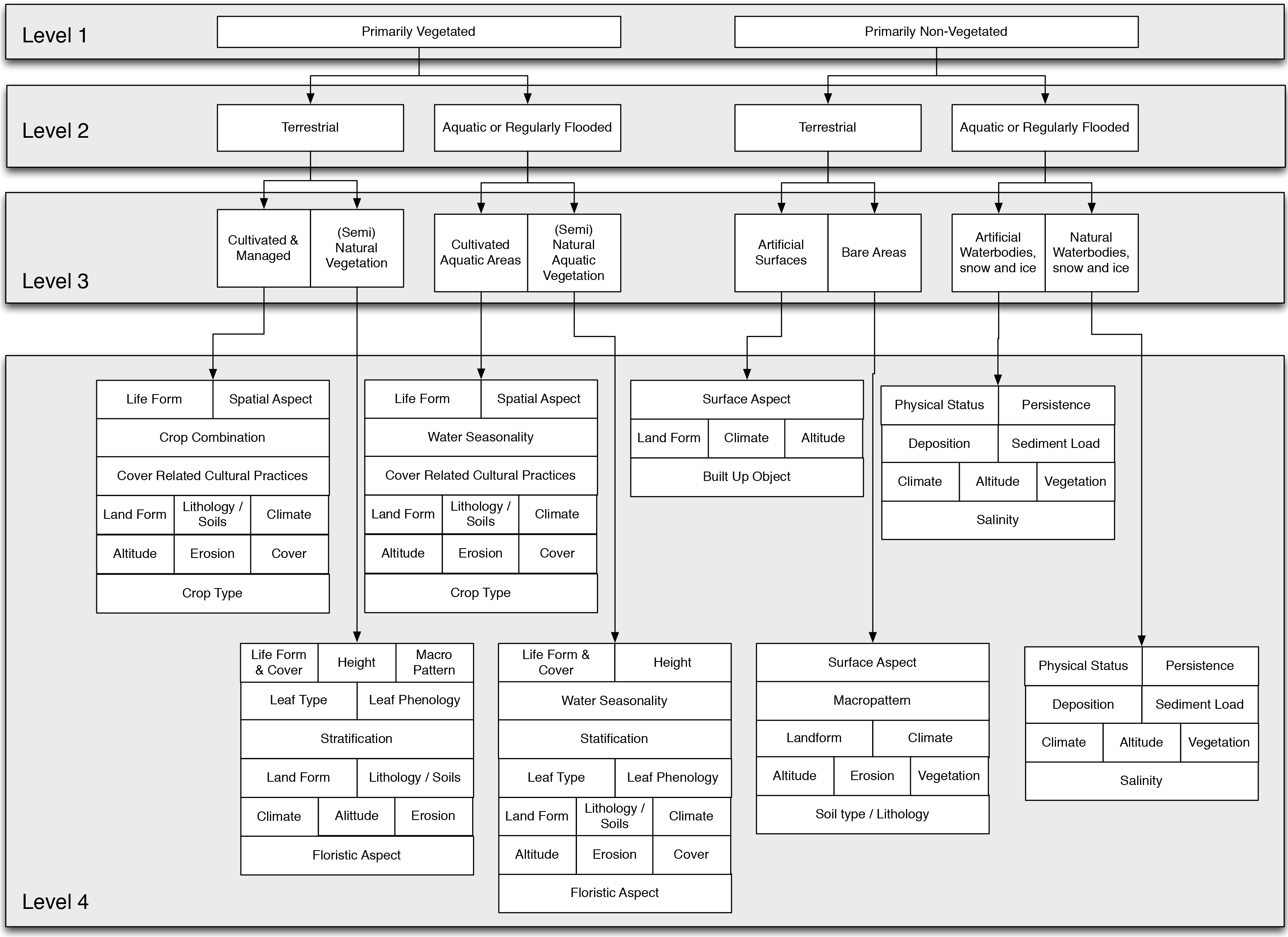

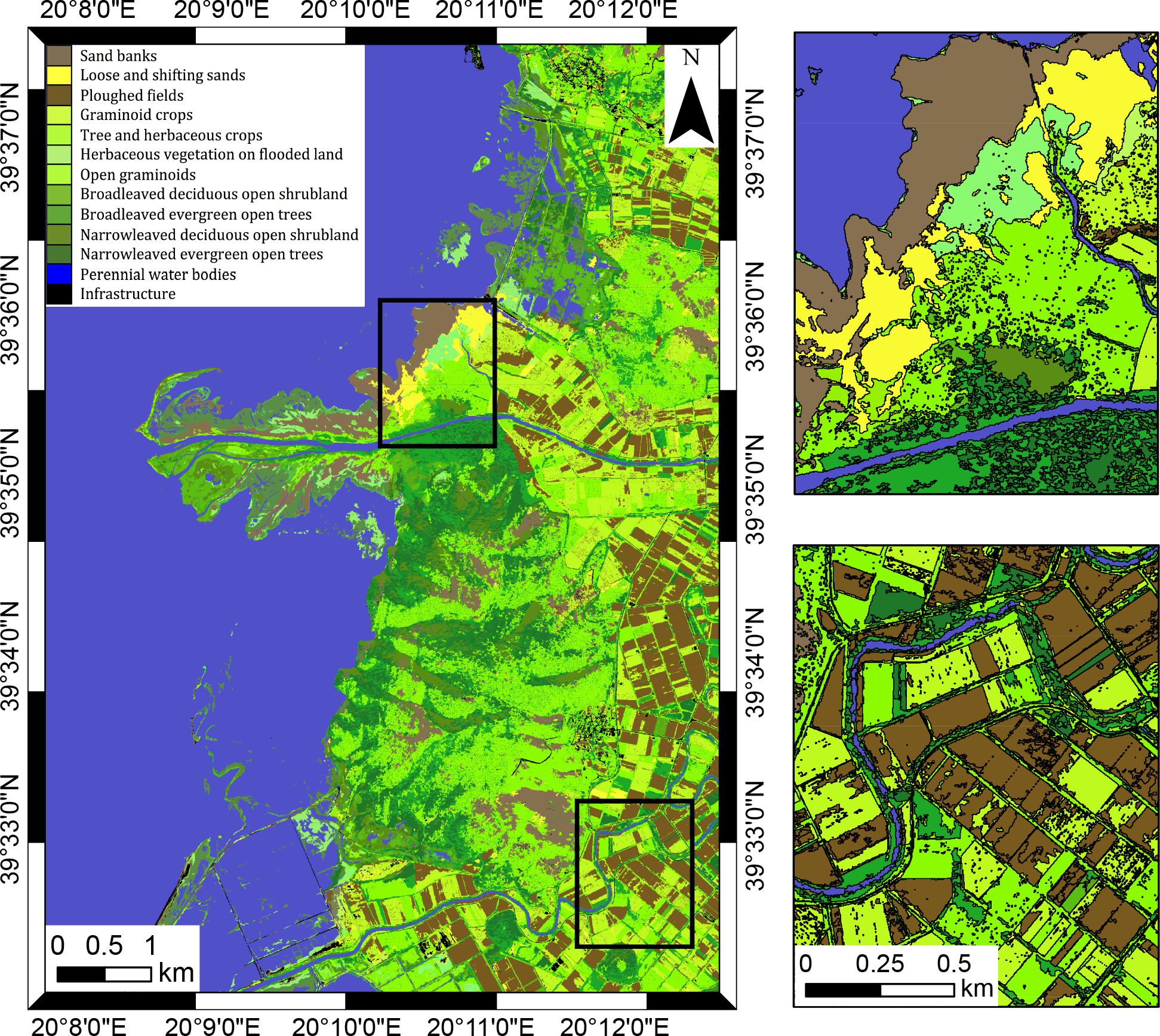

5.2. Land Cover and Habitat Classification

- Feature extraction; identification and segmentation of buildings and trees using [32].

- Segmentation; application of the Shepherd et al. [24] algorithm to the scene.

- Segmentation Fusion; extracted feature boundaries, segmentation and thematic layers (e.g., roads) are fused to provide the segments to be used for the classification steps.

- Level 1: assignment of objects to vegetated or non-vegetated classes.

- Level 2: assignment of objects to terrestrial or aquatic classes.

- Level 3: assignment of objects to cultivated and managed, artificial, or natural and semi-natural classes.

- Level 4: description of position (e.g., soils) and type (e.g., woody).

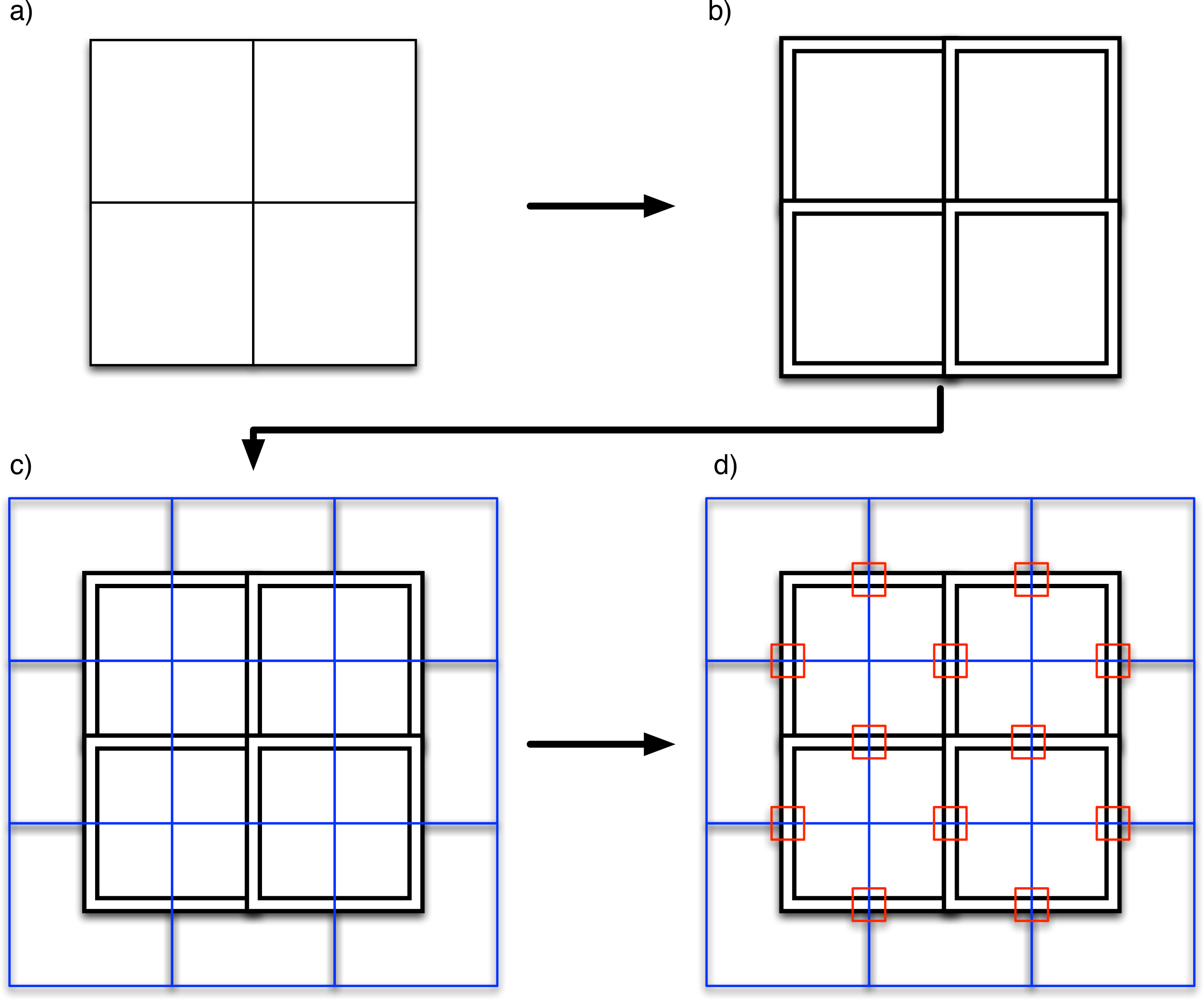

5.3. Scalable Image Segmentation

- Split the data into tiles with an overlap between the tiles; tiles of 10,000 × 10,000 pixels were used, including a 500-pixel overlap.

- Segment each tile independently, using multiple cores on a high-performance computing (HPC) system.

- Merge the tiles, removing any segments that touch the tile boundaries; these segments contain artifacts caused by the tiling.

- Split the merged segmentation into tiles again, but with a half-tile offset relative to the original tiles; the half-tile offset places the intersection of four tiles from the original segmentation at the center of the new tiles.

- Segment the regions on the tile boundaries using the same parameters as the first segmentation. After this step, most of the scene is correctly segmented with no boundary artifacts; this relies on the segmentation being deterministic, i.e., always producing the same result given the same parameters.

- Merge the segments generated at the tile boundaries into the original segmentation; during merging, a segment ID offset is applied so that all segments have unique IDs.

- Finally, merge the independent regions at the boundaries of the second set of tiles, re-segment them and copy the result into the main segmentation (Figure 8d); this produces the final result and eliminates artifacts due to the tiling process.
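The tiling bookkeeping in the steps above can be sketched with a few small helpers. These functions are hypothetical, written for illustration only, and are not part of RSGISLib: rasters are plain lists of rows, ID 0 means "no segment", the 10,000-pixel size is treated as the core tile with the 500-pixel overlap added on each side (the text does not say exactly how the overlap is counted), and the segment ID offset is assumed to be the current maximum ID.

```python
def core_windows(size, tile, offset=0):
    """Half-open [start, end) core intervals of length `tile` along one axis.
    A non-zero `offset` (0 < offset < tile) shifts the grid so that the first
    interval is the partial tile [0, offset)."""
    first = offset - tile if offset > 0 else 0
    return [(max(s, 0), min(s + tile, size)) for s in range(first, size, tile)]


def tile_windows(width, height, tile, overlap, offset=0):
    """Pixel windows (x0, y0, x1, y1) for each tile: the core interval grown
    by `overlap` pixels on every side and clipped to the image extent."""
    windows = []
    for y0, y1 in core_windows(height, tile, offset):
        for x0, x1 in core_windows(width, tile, offset):
            windows.append((max(x0 - overlap, 0), max(y0 - overlap, 0),
                            min(x1 + overlap, width), min(y1 + overlap, height)))
    return windows


def edge_segments(labels):
    """IDs of segments touching the edge of a tile; these carry tiling
    artifacts and are discarded when the tiles are merged."""
    ids = set(labels[0]) | set(labels[-1])
    ids |= {row[0] for row in labels} | {row[-1] for row in labels}
    ids.discard(0)  # 0 = no segment
    return ids


def merge_with_offset(main, patch, mask):
    """Copy re-segmented IDs from `patch` into `main` wherever `mask` is True,
    adding the current maximum ID of `main` so the new IDs are unique.
    Modifies `main` in place and returns it."""
    id_offset = max(max(row) for row in main)
    for main_row, patch_row, mask_row in zip(main, patch, mask):
        for c, (p, keep) in enumerate(zip(patch_row, mask_row)):
            if keep and p > 0:
                main_row[c] = p + id_offset
    return main
```

For a 30,000 × 30,000 pixel scene, `tile_windows(30000, 30000, 10000, 500)` gives a 3 × 3 grid, while the half-tile pass with `offset=5000` gives a 4 × 4 grid whose interior cores are centered on the points where four original tiles meet.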

6. Discussion

6.1. Expansion of the System

6.2. License

6.3. Future Work

7. Conclusions

- Built entirely on software released under open source (GPL-compatible) licenses.

- Modular, allowing the system to be customized and expanded.

- All functions are accessed through Python scripts, allowing a fully automated process to be developed.

- Allows access to all the functionality of the Python language and associated libraries (e.g., SciPy, Scikit-learn).

- Scales well to complex rule sets and large datasets.

Acknowledgments

Author Contributions

Conflicts of Interest

References

1. Blaschke, T. Object based image analysis for remote sensing. ISPRS J. Photogramm. Remote Sens. 2010, 65, 2–16.

2. Gibbes, C.; Adhikari, S.; Rostant, L.; Southworth, J.; Qiu, Y. Application of object based classification and high resolution satellite imagery for Savanna ecosystem analysis. Remote Sens. 2010, 2, 2748–2772.

3. Lucas, R.M.; Medcalf, K.; Brown, A.; Bunting, P.J.; Breyer, J.; Clewley, D.; Keyworth, S.; Blackmore, P. Updating the Phase 1 habitat map of Wales, UK, using satellite sensor data. ISPRS J. Photogramm. Remote Sens. 2011, 66, 81–102.

4. Heumann, B.W. An object-based classification of Mangroves using a hybrid decision tree—Support vector machine approach. Remote Sens. 2011, 3, 2440–2460.

5. Definiens. eCognition Version 9 Object Oriented Image Analysis User Guide; Technical Report; Trimble: Munich, Germany, 2014.

6. Vu, T. Object-Based Remote Sensing Image Analysis with OSGeo Tools. Proceedings of FOSS4G Southeast Asia 2012, Johor Bahru, Malaysia, 18–19 July 2012; pp. 79–84.

7. Moreno-Sanchez, R. Free and Open Source Software for Geospatial Applications (FOSS4G): A mature alternative in the geospatial technologies arena. Trans. GIS 2012, 16, 81–88.

8. Steiniger, S.; Hay, G. Free and open source geographic information tools for landscape ecology. Ecol. Inf. 2009, 4, 183–195.

9. Christophe, E.; Inglada, J. Open source remote sensing: Increasing the usability of cutting-edge algorithms. IEEE Geosci. Remote Sens. Soc. Newsl. 2009, 150, 9–15.

10. Inglada, J.; Christophe, E. The Orfeo Toolbox Remote Sensing Image Processing Software. Proceedings of the 2009 IEEE International Geoscience and Remote Sensing Symposium, Cape Town, South Africa, 12–17 July 2009; pp. IV-733–IV-736.

11. Huth, J.; Kuenzer, C.; Wehrmann, T.; Gebhardt, S.; Tuan, V.Q.; Dech, S. Land cover and land use classification with TWOPAC: Towards automated processing for pixel- and object-based image classification. Remote Sens. 2012, 4, 2530–2553.

12. InterImage. InterImage User Manual, Version 1.41; Laboratório de Visão Computacional: Rio de Janeiro, Brazil, 2014.

13. Gillingham, S.; Bunting, P. RFC40. Available online: http://trac.osgeo.org/gdal/wiki/rfc40_enhanced_rat_support (accessed on 24 March 2014).

14. Bunting, P.; Clewley, D.; Lucas, R.M.; Gillingham, S. The Remote Sensing and GIS Software Library (RSGISLib). Comput. Geosci. 2014, 62, 216–226.

15. Bunting, P. ARCSI. Available online: https://bitbucket.org/petebunting/arcsi (accessed on 25 January 2014).

16. Wilson, R.T. Py6S: A Python interface to the 6S radiative transfer model. Comput. Geosci. 2013, 51, 166–171.

17. Vermote, E.; Tanre, D.; Deuze, J.; Herman, M.; Morcrette, J. Second simulation of the satellite signal in the solar spectrum, 6S: An overview. IEEE Trans. Geosci. Remote Sens. 1997, 35, 675–686.

18. Gillingham, S.; Flood, N. RIOS. Available online: https://bitbucket.org/chchrsc/rios/ (accessed on 25 January 2014).

19. NumPy. Available online: http://www.numpy.org (accessed on 25 January 2014).

20. Jones, E.; Oliphant, T.; Peterson, P. SciPy: Open source scientific tools for Python. 2001. Available online: http://www.scipy.org (accessed on 25 June 2014).

21. Bunting, P.; Gillingham, S. The KEA image file format. Comput. Geosci. 2013, 57, 54–58.

22. Zlib. Available online: http://www.zlib.net (accessed on 9 May 2014).

23. Baatz, M.; Hoffmann, C.; Willhauck, G. Progressing from Object-Based to Object-Oriented Image Analysis. In Object-Based Image Analysis; Blaschke, T., Lang, S., Hay, G., Eds.; Springer: Berlin/Heidelberg, Germany, 2008; pp. 29–42.

24. Shepherd, J.D.; Bunting, P.; Dymond, J.R. Operational large-scale segmentation of imagery based on iterative elimination. J. Appl. Remote Sens. 2014, in press.

25. Baatz, M.; Schäpe, A. Multiresolution segmentation: An optimization approach for high quality multi-scale image segmentation. J. Photogramm. Remote Sens. 2000, 58, 12–23.

26. Johnson, B.; Xie, Z. Unsupervised image segmentation evaluation and refinement using a multi-scale approach. ISPRS J. Photogramm. Remote Sens. 2011, 66, 473–483.

27. Christophe, E.; Inglada, J. Robust Road Extraction for High Resolution Satellite Images. Proceedings of the IEEE International Conference on Image Processing (ICIP 2007), San Antonio, TX, USA, 16–19 September 2007.

28. Pedregosa, F.; Varoquaux, G.; Gramfort, A.; Michel, V.; Thirion, B.; Grisel, O.; Blondel, M.; Prettenhofer, P.; Weiss, R.; Dubourg, V.; et al. Scikit-learn: Machine learning in Python. J. Mach. Learn. Res. 2011, 12, 2825–2830.

29. Gislason, P.O.; Benediktsson, J.A.; Sveinsson, J.R. Random forests for land cover classification. Pattern Recognit. Lett. 2006, 27, 294–300.

30. Di Gregorio, A.; Jansen, L. Land Cover Classification System (LCCS): Classification Concepts and User Manual for Software, Version 2; Technical Report 8; FAO Environment and Natural Resources Service Series: Rome, Italy, 2005.

31. Lucas, R.; Bunting, P.; Jones, G.; Arias, M.; Inglada, J.; Kosmidou, V.; Petrou, Z.; Manakos, I.; Adamo, M.; Tarantino, C.; et al. The Earth Observation Data for Habitat Monitoring (EODHaM) system. JAG Int. J. Appl. Earth Obs. Geoinforma. Special Issue Earth Obs, 2014; in press.

32. Arias, M.; Inglada, J.; Lucas, R.; Blonda, P. Hedgerow Segmentation on VHR Optical Satellite Images for Habitat Monitoring. Proceedings of the 2013 IEEE International Geoscience and Remote Sensing Symposium (IGARSS), Melbourne, Australia, 21–26 July 2013; pp. 3301–3304.

33. Kosmidou, V.; Petrou, Z.; Bunce, R.G.; Mücher, C.A.; Jongman, R.H.; Bogers, M.M.; Lucas, R.M.; Tomaselli, V.; Blonda, P.; Padoa-Schioppa, E.; et al. Harmonization of the Land Cover Classification System (LCCS) with the General Habitat Categories (GHC) classification system. Ecol. Indic. 2014, 36, 290–300.

34. Gill, T.; Johansen, K.; Scarth, P.; Armston, J.; Trevithick, R.; Flood, N. AusCover Good Practice Guidelines: A Technical Handbook Supporting Calibration and Validation Activities of Remotely Sensed Data Products. Available online: http://data.auscover.org.au/xwiki/bin/view/Good+Practice+Handbook/PersistentGreenVegetation (accessed on 25 June 2014).

35. Scarth, P. Persistent Green-Vegetation Fraction and Wooded Mask—Landsat, Australia Coverage. Available online: http://www.auscover.org.au/xwiki/bin/view/Product+pages/Persistent+Green-Vegetation+Fraction (accessed on 15 February 2013).

36. McKinney, W. Data Structures for Statistical Computing in Python. Proceedings of the 9th Python in Science Conference, Austin, TX, USA, 28 June–3 July 2010; pp. 51–56.

37. Seabold, S.; Perktold, J. Statsmodels: Econometric and Statistical Modeling with Python. Proceedings of the 9th Python in Science Conference, Austin, TX, USA, 28 June–3 July 2010.

38. Peterson, P. F2PY: A tool for connecting Fortran and Python programs. Int. J. Comput. Sci. Eng. 2009, 4, 296–305.

39. GNU. GNU General Public License (GPL) Version 3. 2007. Available online: http://www.gnu.org/copyleft/gpl.html (accessed on 10 April 2014).

40. Institute for Legal Questions on Free and Open Source Software. Available online: http://www.ifross.org/en/what-difference-between-gplv2-and-gplv3 (accessed on 10 April 2014).

41. Free Software Foundation. Available online: http://www.gnu.org/licenses/license-list.html (accessed on 26 January 2014).

| Image Statistics | Shape | Position | Categorical |
|---|---|---|---|
| Minimum | Area | Spatial Location | Proportion |
| Maximum | Length | Border | Majority (string) |
| Sum | Width | Distance to Feature | |
| Mean | Distance to Neighbours | | |
| Standard Deviation | | | |
| Median | | | |
| Count | | | |
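The image statistics in the first column of the table can be illustrated with a small pure-Python sketch that groups pixel values by segment ID, producing one attribute-table row per object. In the system described here this attribution is performed by RSGISLib, so the function below is a hypothetical stand-in: it assumes the segment raster and a single image band are given as equally sized lists of rows, treats ID 0 as no-data, and uses the population standard deviation (which form RSGISLib uses is not stated here).

```python
import statistics
from collections import defaultdict


def object_statistics(labels, values, no_data_id=0):
    """Per-object image statistics from a segment-ID raster and one band.

    Returns a dict keyed by object ID; each value is one attribute-table
    row holding the statistics listed under "Image Statistics".
    """
    pixels = defaultdict(list)
    for label_row, value_row in zip(labels, values):
        for oid, v in zip(label_row, value_row):
            if oid != no_data_id:  # skip pixels outside any object
                pixels[oid].append(v)
    table = {}
    for oid, vals in sorted(pixels.items()):
        table[oid] = {
            "Minimum": min(vals),
            "Maximum": max(vals),
            "Sum": sum(vals),
            "Mean": statistics.mean(vals),
            "StdDev": statistics.pstdev(vals),  # population form (assumption)
            "Median": statistics.median(vals),
            "Count": len(vals),
        }
    return table
```

Because every statistic is keyed by object ID, the output maps directly onto the row-per-object layout of a raster attribute table, with one column per statistic.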

| Feature | RSGISLib | OTB + Spatial Database | InterIMAGE | eCognition |
|---|---|---|---|---|
| Interface | Python | Command Line Interface (CLI) / Python / Graphical User Interface (GUI) | GUI | GUI |
| Installation | Source (Windows binaries for TuiView only) | Windows, Linux and OS X binaries, source | Windows binaries and source | Windows binaries |
| License | GNU General Public License (GPL) / GPL-compatible | CEA CNRS INRIA Logiciel Libre (CeCILL; similar to GPL) | GPL | Commercial |
| Rule-based classification | Yes | Yes | Yes | Yes |
| Machine-learning classification | Through external libraries | Through external libraries | Yes | Yes |
| Fuzzy classification | Not currently | Not currently | Yes | Yes |
| Method for storing object attributes | RAT | SpatiaLite | Internal | Internal |
| Batch processing | Python | Python/Bash, etc. | Command line | Engine (add-on) |

| Package | Small scene: time (s) | Small scene: segments | Large scene: time (min) | Large scene: segments |
|---|---|---|---|---|
| RSGISLib (Linux) | 11 | 6395 | 140 | 422,745 |
| OTB (Linux) | 24 | 2172 | 50 | 313,551 |
| InterIMAGE (Windows) | 50 ¹ | 16,416 | - | - |
| eCognition (Windows) | 5 ¹ | 22,400 | - | 1,960,447 ² |

© 2014 by the authors; licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution license (http://creativecommons.org/licenses/by/3.0/).

Clewley, D.; Bunting, P.; Shepherd, J.; Gillingham, S.; Flood, N.; Dymond, J.; Lucas, R.; Armston, J.; Moghaddam, M. A Python-Based Open Source System for Geographic Object-Based Image Analysis (GEOBIA) Utilizing Raster Attribute Tables. Remote Sens. 2014, 6, 6111–6135. https://doi.org/10.3390/rs6076111
