In this paper, we mainly focus on two types of roads: suburban roads and highways. Suburban roads are narrow and have very low traffic density, whereas highways are wide and have high traffic density. Since vehicles maintain high speeds on highways, there is generally an appropriate distance between consecutive vehicles.

2.2. Vehicle Candidate Location Extraction

Given the road regions of the WV2 image, the first step is to locate potential vehicles. In this research, we use the PAN image to extract vehicle candidate locations. To extract vehicles embedded in a cluttered background simply and efficiently, the image is first enhanced by Perona–Malik anisotropic diffusion. This step is important because it reduces noise without removing significant vehicle information. After that, an improved top-hat transformation based on the appearance properties of vehicles is used to extract moving vehicle candidate locations.

Perona–Malik diffusion is a nonlinear diffusion filtering technique. Nonlinear diffusion filtering describes the evolution of image luminance through increasing scale levels as the divergence of a flow function that controls the diffusion process [15]. The classic nonlinear diffusion formulation is:

$$\frac{\partial I}{\partial t} = \operatorname{div}\big(c(x,y,t)\,\nabla I\big)$$

where div denotes the divergence operator, ∇ denotes the gradient operator and c(x, y, t) is the conductivity (diffusion) function. c(x, y, t) controls the rate of diffusion and is usually chosen as a function of the image gradient so that edges in the image are preserved. The time t is the scale parameter, and larger values lead to simpler image representations.

Perona and Malik [16] pioneered the idea of nonlinear diffusion and made c(x, y, t) dependent on the gradient magnitude, which reduces diffusion at edge locations and encourages smoothing within regions instead. The diffusion function is defined as:

$$c(x,y,t) = g\big(\lvert \nabla I_\sigma(x,y,t) \rvert\big)$$

where ∇Iσ is the gradient of a Gaussian-smoothed version of the original image I. Perona and Malik proposed two different formulations for g:

where the parameter k controls the sensitivity to edges. The function g1 favors wide regions over smaller ones, and the function g2 favors high-contrast edges over low-contrast ones.

In this paper, we chose g1 as the diffusion function, since we want to remove undesirable noise without blurring or dislocating meaningful vehicle edges. As can be seen from Figure 4, the image is smoothed and the vehicle boundaries are well preserved.
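As an illustration of this smoothing step, the following is a minimal NumPy/SciPy sketch of explicit Perona–Malik diffusion. It assumes an explicit Euler time step, finite differences with zero-flux borders, and illustrative parameter values (n_iter, k, dt and sigma are hypothetical choices, not the settings behind Figure 4). By default it uses the rational Perona–Malik conductance 1/(1 + (|∇Iσ|/k)²), the form that favors wide regions over smaller ones, matching the description of g1 above; the exponential form is included for comparison.

```python
import numpy as np
from scipy.ndimage import gaussian_filter


def g_rational(grad_mag, k):
    """Perona-Malik conductance favouring wide regions over smaller ones."""
    return 1.0 / (1.0 + (grad_mag / k) ** 2)


def g_exponential(grad_mag, k):
    """Perona-Malik conductance favouring high-contrast edges."""
    return np.exp(-((grad_mag / k) ** 2))


def perona_malik(image, n_iter=10, k=10.0, dt=0.2, sigma=1.0, g=g_rational):
    """Explicit Perona-Malik diffusion dI/dt = div(g(|grad I_sigma|) grad I).

    n_iter, k, dt and sigma are illustrative values; dt <= 0.25 keeps this
    4-neighbour explicit scheme stable because g <= 1.
    """
    img = np.asarray(image, dtype=np.float64).copy()
    for _ in range(n_iter):
        # Conductance from the gradient of a Gaussian-smoothed copy (I_sigma).
        gy, gx = np.gradient(gaussian_filter(img, sigma))
        c = g(np.hypot(gx, gy), k)
        # Forward differences; the last column/row gets zero flux.
        dx = np.diff(img, axis=1, append=img[:, -1:])
        dy = np.diff(img, axis=0, append=img[-1:, :])
        # Divergence of the flux via backward differences (zero flux at borders).
        div = np.diff(c * dx, axis=1, prepend=0.0) + np.diff(c * dy, axis=0, prepend=0.0)
        img += dt * div
    return img
```

Because the flux vanishes at the borders, the scheme conserves the mean grey level while reducing small-scale variance; k should be set relative to the radiometric range of the PAN data.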

In the PAN image, vehicles appear as elliptical blobs, and blob detection algorithms have already been applied to vehicle detection [5,7]. In Zheng's work [5], the classical top-hat transformation is used to identify moving vehicles in very high resolution aerial images (0.15 m). The classical top-hat transformation is based on two morphological operations, opening and closing, which are defined as:

$$f \circ b = (f \ominus b) \oplus b$$

$$f \bullet b = (f \oplus b) \ominus b$$

where f is the original image, b is the structuring element, ∘ denotes the grayscale opening transformation and • denotes the grayscale closing transformation. ⊖ and ⊕ denote the erosion and dilation operators, respectively. The white top-hat and black top-hat transformations, denoted by WTH and BTH, respectively, are then defined as:

$$T = \mathrm{WTH}(f) = f - f \circ b$$

$$B = \mathrm{BTH}(f) = f \bullet b - f$$

where T is the image after the WTH transformation and B is the image after the BTH transformation.
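The classical transformations map directly onto SciPy's grey-scale morphology. The sketch below computes T and B from the opening and closing definitions and checks them against scipy.ndimage.white_tophat and black_tophat on a toy scene; the flat rectangular structuring element and its 5 × 9 size are illustrative assumptions.

```python
import numpy as np
from scipy import ndimage


def classical_top_hat(f, size=(5, 9)):
    """White (T) and black (B) top-hat with a flat rectangular element of `size`."""
    f = np.asarray(f, dtype=np.float64)
    opened = ndimage.grey_opening(f, size=size)  # (f erosion b) dilation b
    closed = ndimage.grey_closing(f, size=size)  # (f dilation b) erosion b
    T = f - opened   # bright details smaller than b
    B = closed - f   # dark details smaller than b
    return T, B


# Toy scene: flat road with one bright and one dark 3 x 6 "vehicle".
f = np.full((40, 60), 50.0)
f[10:13, 10:16] = 120.0
f[25:28, 30:36] = 10.0
T, B = classical_top_hat(f)
# SciPy's built-ins implement the same definitions.
assert np.allclose(T, ndimage.white_tophat(f, size=(5, 9)))
assert np.allclose(B, ndimage.black_tophat(f, size=(5, 9)))
```

Any bright (dark) structure smaller than b responds, which is exactly why road clutter of vehicle-like size also survives the classical transformation.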

WTH finds the bright regions in the image, while BTH finds dark regions. Vehicles usually appear as elliptical bright (or dark) blobs in panchromatic images, so WTH and BTH can be used directly to find vehicles. However, the classical top-hat transformation cannot distinguish heavy clutter from real vehicle regions: in a cluttered background, most of the clutter also produces responses in the result image. In Bai's work [17], an improved top-hat transformation is proposed for infrared dim small target detection. We adopt this transformation and select the structuring elements according to the properties of vehicles. If the structuring elements are properly chosen, the difference between vehicles and the background is enhanced, and vehicle detection performance improves significantly. On this basis, a new moving vehicle detector is proposed.

Let Bi and Bo represent two elliptical structuring elements with the same shape. As shown in Figure 5, Bo is called the outer structuring element and Bi is called the inner structuring element. Bb represents a structuring element whose size lies between those of Bo and Bi. The margin structuring element ΔB = Bo − Bi is the margin region between Bi and Bo. The relationship among Bi, Bo, ΔB and Bb is illustrated in Figure 5.

Operations f ■ Boi and f □ Boi are defined as follows:

where Boi indicates that the operation involves both Bo and Bi. Then, the new top-hat transformation can be defined as follows:

where NT is the image after the new WTH transformation (NWTH), and B is the image after the new BTH transformation (NBTH). In this way, the new top-hat transformations use two correlated structuring elements, and the margin structuring element ΔB exploits the difference between vehicles and their surrounding regions.

In NWTH, if the processed region is not a target region, the relationship between the pixel values in the processed region and those in the surrounding region is undetermined, so NWTH may take negative values. To avoid this, NWTH can be modified as follows:

Similarly, the modified NBTH can be defined as:

To apply the proposed method, the road is first divided into several smaller, partially overlapping sub-segments. Then, each sub-segment is rotated so that it is horizontally aligned, as shown in Figure 6. This step is essential because moving vehicles are oriented along the road; after rotation, the elliptical structure of the vehicles is better captured by NWTH and NBTH.
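The segmentation and alignment step can be sketched as follows, assuming the road is parameterized by its length in pixels and that each sub-segment's road direction is known (road_angle_deg is a hypothetical input, for example derived from a road vector layer). The sub-segment length and overlap are illustrative values, not those used in this paper, and the angle is expressed in the sign convention of scipy.ndimage.rotate, so rotating by its negative undoes the tilt.

```python
import numpy as np
from scipy import ndimage


def split_overlapping(length, seg_len=100, overlap=20):
    """(start, end) offsets of partially overlapping sub-segments along a road.

    seg_len and overlap (in pixels) are illustrative values.
    """
    if not 0 <= overlap < seg_len:
        raise ValueError("overlap must satisfy 0 <= overlap < seg_len")
    step = seg_len - overlap
    return [(s, min(s + seg_len, length))
            for s in range(0, max(length - overlap, 1), step)]


def align_horizontally(patch, road_angle_deg, order=1):
    """Rotate a sub-segment by -road_angle_deg so the road runs horizontally.

    road_angle_deg is a hypothetical input, expressed in the same sign
    convention that scipy.ndimage.rotate uses.
    """
    return ndimage.rotate(patch, -road_angle_deg, reshape=True,
                          order=order, mode="nearest")
```

Bilinear interpolation (order=1) is used here to avoid the overshoot of higher-order splines around small bright or dark vehicles.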

A priori, moving vehicles in WorldView-2 images usually measure 6–10 pixels in length and 3–5 pixels in width. In this paper, ΔB represents the surrounding region of moving vehicles, and Bb represents the vehicle region. To detect moving vehicles effectively, the inner size of ΔB should be larger than the vehicle size. To be efficient and robust, we set the size of Bb to 13 × 7, Bo to 15 × 9 and Bi to 11 × 5 pixels.
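The new top-hat with these structuring-element sizes can be sketched in NumPy/SciPy as follows. As we read Bai's formulation, the bright-target operation is (f ⊕ ΔB) ⊖ Bb and the dark-target operation is (f ⊖ ΔB) ⊕ Bb, and the modified NWTH and NBTH avoid negative values by taking min(·, f) and max(·, f), respectively; this mapping, the flat elliptical footprints and the helper names are assumptions for illustration. Sizes are given as (length, width), with the road aligned horizontally.

```python
import numpy as np
from scipy import ndimage


def ellipse(length, width):
    """Flat elliptical footprint: `width` rows by `length` columns (road horizontal)."""
    r = np.arange(width) - (width - 1) / 2.0
    c = np.arange(length) - (length - 1) / 2.0
    rr, cc = np.meshgrid(r, c, indexing="ij")
    return (rr / (width / 2.0)) ** 2 + (cc / (length / 2.0)) ** 2 <= 1.0


def pad_to(fp, shape):
    """Centre a smaller odd-sized footprint inside a larger frame."""
    dr, dc = (shape[0] - fp.shape[0]) // 2, (shape[1] - fp.shape[1]) // 2
    return np.pad(fp, ((dr, dr), (dc, dc)))


def new_top_hat(f, outer=(15, 9), between=(13, 7), inner=(11, 5)):
    """Modified NWTH/NBTH with elliptical Bo, Bb, Bi given as (length, width)."""
    f = np.asarray(f, dtype=np.float64)
    b_o, b_b = ellipse(*outer), ellipse(*between)
    delta_b = b_o & ~pad_to(ellipse(*inner), b_o.shape)  # margin ring Bo - Bi
    # Bright vehicles: maximum over the ring, then minimum over Bb.
    bright_ref = ndimage.grey_erosion(
        ndimage.grey_dilation(f, footprint=delta_b), footprint=b_b)
    # Dark vehicles: minimum over the ring, then maximum over Bb.
    dark_ref = ndimage.grey_dilation(
        ndimage.grey_erosion(f, footprint=delta_b), footprint=b_b)
    nwth = f - np.minimum(bright_ref, f)  # never negative
    nbth = np.maximum(dark_ref, f) - f    # never negative
    return nwth, nbth
```

On a toy scene, a compact bright blob that fits inside Bi keeps its full contrast in NWTH, whereas a bright stripe running the full width of the segment (similar to a lane marking) is suppressed, because the ring around it always contains stripe pixels.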

Furthermore, the vehicle candidate extraction method depends on the vehicle's polarity. In Bar's work [12], positive polarity means that a vehicle in the panchromatic image is brighter than its surrounding region, whereas negative polarity means that it is darker than its surrounding region. Since vehicles with positive polarity are relatively brighter than the surrounding region, the NWTH operation extracts such vehicles, while the NBTH operation extracts vehicles with negative polarity.

Examples of vehicle candidate location extraction results are shown in Figures 7 and 8. In both figures, (a) is the original image, (b) is the result of the proposed method, (c) is the 3D distribution of the vehicle and its surroundings within the square box in the original image, and (d) is the 3D distribution of the vehicle and its surroundings within the square box in the resulting image.

Figure 7 shows an example of vehicles with positive polarity. After NWTH and NBTH, the cluttered backgrounds are well suppressed, and the vehicle regions turn into bright spots.

Figure 8 shows an example of vehicles with negative polarity. After NWTH and NBTH, noise and cluttered backgrounds are suppressed, and the vehicle regions are more clearly delineated.

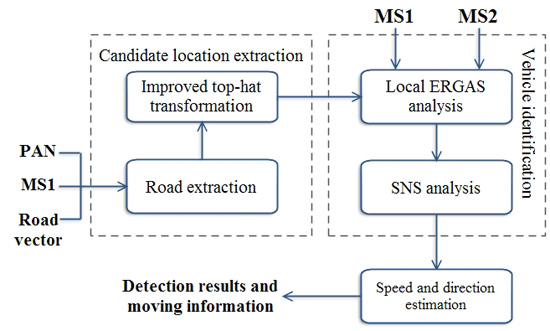

2.3. Moving Vehicles Identification

After moving vehicle candidate extraction, the result image may still contain false alarms, such as concrete road dividers and oil stains on the road. Since some road dividers and oil stains look similar to vehicles, such false alarms are hard to eliminate on the basis of appearance. Recent studies, however, have observed a spatial displacement of moving targets in WV2 images [1,12], which stationary objects do not exhibit. Hence, the spatial displacement is a reliable cue for moving vehicle identification.

The WV2 satellite carries one PAN sensor and two MS sensors (MS1 and MS2) onboard, with the PAN sensor located between MS1 and MS2. MS1 comprises the red, green, blue and NIR1 bands, and MS2 comprises the red edge, yellow, coastal and NIR2 bands. The focal plane layout of WV2 [18] is shown in Figure 9. Due to this hardware arrangement, the images are collected in the sequence MS1, PAN and MS2, with a time lag of approximately 0.13 s between each MS image and the PAN image. Therefore, the time lag between MS1 and MS2 is approximately 0.26 s [1].

Moving targets show colorful fringes in the WV2 fused image, which have mostly been treated as a nuisance. These fringes arise because the satellite observes a moving target at three different positions, as shown in Figure 10. The dark and bright spots correspond to the dark and bright vehicles, respectively. The bright vehicle is moving toward the top of the image, while the dark vehicle is moving toward the bottom of the image.

Salehi et al. use standard PCA to detect moving vehicles [1]. Bar et al. proposed a method based on spectral and spatial information (SNS) to identify moving vehicles [12]. These methods assume that the influence of the spectral difference between spectrally neighboring bands is smaller than the temporal effect, and they use all eight spectral bands of WV2 to analyze moving vehicles. However, some spectrally neighboring bands may differ considerably, and these spectral differences may reduce the accuracy of moving vehicle identification. In this paper, we perform band selection by visual inspection and quantitative analysis, and select three spectrally neighboring band pairs for moving vehicle identification. Furthermore, we propose the ERGAS-SNS method to identify moving vehicles. By analyzing the local ERGAS index, the dominant changed regions can be extracted. Consequently, most spectrally unchanged regions are eliminated, which improves the accuracy of moving vehicle identification.

2.3.1. Spectral Band Selection

Ten image clips of 500 × 500 pixels each are randomly taken from the WV2 imagery of San Francisco for spectral band selection. We use image differencing for visual inspection: change detection maps between the MS1 and MS2 spectral bands (C-B, Y-G, RE-R and NIR2-NIR1) are calculated. Sample change detection maps are shown in Figure 11. From (c–f), visual inspection shows that the change detection map generated by RE-R contains dominant differences. Furthermore, (g–j) show enlarged views of the red rectangles in (c–f), respectively, with one moving vehicle located at the center of each enlarged image. In the C-B, Y-G and NIR2-NIR1 maps, the vehicle is clearly visible. However, the vehicle in the RE-R map cannot be easily perceived, since the spectral differences generate heavy background clutter.

Besides visual inspection, we performed a quantitative analysis using the root mean squared error (RMSE). RMSE measures the global spectral distortion between two spectral bands and tends to decrease as the spectral difference between spectrally neighboring bands decreases. As shown in Figure 12, the RMSE values of RE-R are markedly high, whereas the RMSE values of the other spectral band pairs are low. Based on this analysis, we conclude that the RE-R band pair is not suitable for identifying moving vehicles.

Both the visual inspection and the quantitative analysis indicate that the spectrally neighboring band pairs C-B, Y-G and NIR2-NIR1 are beneficial for moving vehicle identification. We therefore create new composite MS1 and MS2 images. The composite MS1 image consists of the blue, green and NIR1 bands, and the composite MS2 image consists of the coastal, yellow and NIR2 bands. Both composite images are passed to the subsequent ERGAS-SNS analysis.
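The band selection step can be reproduced on other scenes with a short script. The sketch below assumes each MS image is given as a dict of co-registered 2-D arrays keyed by hypothetical band names ("coastal", "blue", "red_edge", and so on); it computes the RMSE for each spectrally neighboring MS2/MS1 pair and builds the two composites so that corresponding bands share the same index.

```python
import numpy as np

# Hypothetical band keys; each pair is (MS2 band, MS1 band).
PAIRS = {
    "C-B": ("coastal", "blue"),
    "Y-G": ("yellow", "green"),
    "RE-R": ("red_edge", "red"),
    "NIR2-NIR1": ("nir2", "nir1"),
}


def band_pair_rmse(ms2, ms1):
    """RMSE between each spectrally neighboring MS2/MS1 band pair."""
    out = {}
    for name, (b2, b1) in PAIRS.items():
        diff = ms2[b2].astype(np.float64) - ms1[b1].astype(np.float64)
        out[name] = float(np.sqrt(np.mean(diff ** 2)))
    return out


def composites(ms1, ms2):
    """Composite MS1 (blue, green, NIR1) and MS2 (coastal, yellow, NIR2) stacks."""
    m1 = np.stack([ms1["blue"], ms1["green"], ms1["nir1"]])
    m2 = np.stack([ms2["coastal"], ms2["yellow"], ms2["nir2"]])
    return m1, m2
```

A pair whose RMSE stands out from the others, as RE-R does in Figure 12, is the one to drop before building the composites.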

2.3.2. ERGAS-SNS Analysis

Inspired by the SNS approach proposed by Bar [12], we investigate extending reliable change detection techniques to moving vehicle identification. The Erreur Relative Globale Adimensionnelle de Synthèse (ERGAS) index proposed by Wald [19] measures the spectral difference between two spectral images. Following this insight, we incorporate ERGAS analysis into the SNS approach to detect the spatial displacement of moving vehicles.

The ERGAS index was originally designed to estimate the overall spectral quality of image fusion by comparing a fused image with a reference image:

$$\mathrm{ERGAS} = 100\,\frac{h}{l}\sqrt{\frac{1}{N}\sum_{k=1}^{N}\left(\frac{\mathrm{RMSE}(B_k)}{\mu(k)}\right)^{2}}$$

where h and l denote the spatial resolutions of the high-resolution and low-resolution images, respectively, N is the number of spectral bands, k is the band index, RMSE(Bk) denotes the root mean square error of band k between the fused image and the reference image, and μ(k) denotes the mean of band k in the reference image. Renza et al. [20] use a local version of ERGAS for change detection, given by:

$$\mathrm{ERGAS}(x,y) = 100\,\frac{h}{l}\sqrt{\frac{1}{N}\sum_{k=1}^{N}\left(\frac{f_k(x,y)}{g_k}\right)^{2}}$$

where fk(x, y) is the local RMSE and gk is the mean of each spectral band.

Both composite images are first normalized to reduce the overall difference between them, and local ERGAS is then applied to the composite MS1 and MS2 images for local change detection. A 5 × 5 window is moved over every pixel of the candidate locations, and bright spots are generated around moving vehicles.
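The global and local ERGAS computations can be sketched in NumPy/SciPy for (N, rows, cols) band stacks. Two choices here are assumptions rather than details given in the text: the normalization is a hypothetical per-band moment matching (each MS2 band rescaled to the mean and standard deviation of the corresponding MS1 band), and gk is taken from the first stack. The local RMSE fk(x, y) is the square root of the 5 × 5 moving average of the squared band difference, and h/l defaults to 1 because both composites share the same resolution.

```python
import numpy as np
from scipy.ndimage import uniform_filter


def ergas(fused, reference, h_over_l):
    """Global ERGAS between (N, rows, cols) stacks; 0 means identical."""
    fused = np.asarray(fused, dtype=np.float64)
    reference = np.asarray(reference, dtype=np.float64)
    rmse = np.sqrt(np.mean((fused - reference) ** 2, axis=(1, 2)))  # RMSE(B_k)
    mu = reference.mean(axis=(1, 2))                                # mu(k)
    return 100.0 * h_over_l * np.sqrt(np.mean((rmse / mu) ** 2))


def match_moments(src, ref):
    """Hypothetical normalization: give each band of src the mean/std of ref."""
    src = np.asarray(src, dtype=np.float64)
    ref = np.asarray(ref, dtype=np.float64)
    mu_s = src.mean(axis=(1, 2), keepdims=True)
    sd_s = src.std(axis=(1, 2), keepdims=True)
    mu_r = ref.mean(axis=(1, 2), keepdims=True)
    sd_r = ref.std(axis=(1, 2), keepdims=True)
    return (src - mu_s) / np.where(sd_s > 0, sd_s, 1.0) * sd_r + mu_r


def local_ergas(m1, m2, window=5, h_over_l=1.0):
    """Per-pixel ERGAS map between co-registered, normalized (N, rows, cols) stacks."""
    m1 = np.asarray(m1, dtype=np.float64)
    m2 = np.asarray(m2, dtype=np.float64)
    # f_k(x, y): RMSE of band k inside a window x window neighbourhood.
    local_mse = uniform_filter((m1 - m2) ** 2, size=(1, window, window), mode="reflect")
    f = np.sqrt(local_mse)
    # g_k: mean of band k (taken from m1 here, an assumption).
    g = m1.mean(axis=(1, 2))[:, None, None]
    return 100.0 * h_over_l * np.sqrt(np.mean((f / g) ** 2, axis=0))
```

A typical call would be local_ergas(m1, match_moments(m2, m1)), after which only pixels at candidate locations need to be inspected.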

Figures 13 and 14 show example results of the local ERGAS analysis, with image values normalized to [0, 255]. Each moving vehicle turns into a pair of bright spots, and these bright spots are passed to the SNS analysis.

In the SNS analysis [12], a change score is calculated between spectral bands. In this paper, the change score (CS) is defined as:

where med denotes the standard median operator, p is the pixel position, M1 denotes the composite MS1 band group (blue, green and NIR1) and M2 denotes the composite MS2 band group (coastal, yellow and NIR2). After the change score calculation, the bright spot pair generated by the ERGAS analysis turns into a positive-negative pair in the change score map, as shown in Figures 13 and 14. This pair is considered a moving vehicle if and only if:

where L is the vehicle's length and D is the feasible displacement of the fastest moving object in the scene during the time gap. A priori, vehicles in WV2 images are usually 6–10 pixels long; therefore, Dmin is set to 6 pixels in our implementation. In addition, the maximum speed of moving vehicles is generally about 160 km/h, which corresponds to a displacement of about 22 pixels. Hence, Dmax is set to 22 pixels in our implementation.
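The displacement bound and the pair test can be illustrated with a short sketch. It assumes a 0.5 m pixel size for the images in which displacements are measured and uses the approximate 0.26 s MS1–MS2 lag quoted above; it also assumes the acceptance rule amounts to checking that the Euclidean distance between the centroids of the positive and negative spots lies in [Dmin, Dmax]. The helper names are hypothetical.

```python
import math

D_MIN_PX = 6   # shortest vehicle length quoted in the text (pixels)
D_MAX_PX = 22  # displacement of a ~160 km/h vehicle quoted in the text (pixels)


def displacement_px(speed_kmh, time_lag_s=0.26, gsd_m=0.5):
    """Pixels travelled during the MS1-MS2 lag; gsd_m = 0.5 m is an assumption."""
    return speed_kmh / 3.6 * time_lag_s / gsd_m


def is_moving_pair(pos_spot, neg_spot, d_min=D_MIN_PX, d_max=D_MAX_PX):
    """Accept a positive/negative spot pair whose centroid distance is in [d_min, d_max]."""
    d = math.hypot(pos_spot[0] - neg_spot[0], pos_spot[1] - neg_spot[1])
    return d_min <= d <= d_max


print(round(displacement_px(160), 1))  # 23.1
```

Under these assumptions, 160 km/h gives about 23 pixels, close to the 22 pixels adopted for Dmax; the exact figure depends on the pixel size and time lag used.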

One moving vehicle generates a positive-negative pair (one bright spot and one dark spot) in the ERGAS-SNS analysis. Interestingly, which spot belongs to the MS1 image and which to the MS2 image depends on the vehicle's polarity. If the vehicle has positive polarity, the bright spot denotes the vehicle's position in the MS1 image, while the dark spot denotes its position in the MS2 image. Conversely, if the vehicle has negative polarity, the dark spot denotes the vehicle's position in the MS1 image, while the bright spot denotes its position in the MS2 image. This phenomenon can be observed in Figures 13 and 14. As mentioned in Section 2.2, the NWTH operation extracts candidate locations of vehicles with positive polarity, and the NBTH operation extracts candidate locations of vehicles with negative polarity. Combining these two observations, the vehicle's position in both the MS1 and MS2 images can be extracted, which is important information for estimating the speed and direction of moving vehicles.
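The polarity rule above reduces to a small lookup. The sketch below also shows one straightforward way to turn the two positions into a speed and heading, assuming (row, col) spot centroids, a 0.5 m pixel size and the approximate 0.26 s lag from MS1 to MS2; it is an illustration with hypothetical helper names, not necessarily the authors' estimation procedure. The heading is measured clockwise from the image "up" direction.

```python
import math


def ms_positions(bright_spot, dark_spot, polarity):
    """Assign the spot pair to MS1/MS2 according to vehicle polarity.

    polarity: "positive" (found by NWTH) or "negative" (found by NBTH).
    Returns (position_in_ms1, position_in_ms2) as (row, col) tuples.
    """
    if polarity == "positive":
        return bright_spot, dark_spot
    if polarity == "negative":
        return dark_spot, bright_spot
    raise ValueError("polarity must be 'positive' or 'negative'")


def speed_and_heading(p_ms1, p_ms2, time_lag_s=0.26, gsd_m=0.5):
    """Speed (km/h) and heading (degrees clockwise from image up), MS1 -> MS2."""
    drow = p_ms2[0] - p_ms1[0]
    dcol = p_ms2[1] - p_ms1[1]
    dist_m = math.hypot(drow, dcol) * gsd_m
    speed_kmh = dist_m / time_lag_s * 3.6
    heading_deg = math.degrees(math.atan2(dcol, -drow)) % 360.0
    return speed_kmh, heading_deg
```

Because MS1 is acquired before MS2, the vector from the MS1 position to the MS2 position points in the direction of travel.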