Energy Analysis of Road Accidents Based on Close-Range Photogrammetry

Abstract

1. Introduction

| | Photogrammetry | Laser Scanning |
|---|---|---|
| Automation of spatial data retrieval | Semi-automated | Automated |
| Spatial data accuracy | Accurate | Most accurate |
| Spatial data resolution | Medium–high | High |
| Equipment cost | Low (hundreds) | High (thousands) |
| Equipment portability | Lightweight | Non-portable |
| Data acquisition time | Low (seconds per image) | High (minutes per scan) |
| Range | Medium | Long |
| Operating conditions | Sensitive to light | Operates day and night but sensitive to rain |

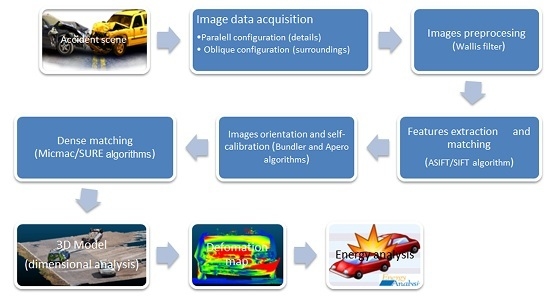

2. Materials and Methods

2.1. Photographic Sensors

| Camera | Sensor type | Sensor size | Effective pixels | Image size (px) | Shutter speed | Weight |
|---|---|---|---|---|---|---|
| OLYMPUS E-PM2 | 4/3 CMOS | 17.3 × 13 mm | 17.2 MP | 4608 × 3456 | 2–1/4000 s | 269 g |
| NOKIA LUMIA 1020 | BSI CMOS | 8.8 × 6.6 mm | 40.1 MP | 7136 × 5360 | | 158 g |

| E-PM2 lens | Focal length | Crop factor | Field of view | Maximum aperture | Minimum aperture | Weight |
|---|---|---|---|---|---|---|
| M.ZUIKO DIGITAL 14–42 mm f3.5-5.6 II R | 14–42 mm | 2× | 75°–29° | f/3.5–5.6 | f/22 | 113 g |
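The crop-factor and field-of-view entries in the lens table follow from the sensor size and focal length. The sketch below recomputes them for the Olympus camera. It also adds the object-space pixel size (ground sample distance, GSD) at a working distance. The sketch makes the following assumptions, which are illustrative and not taken from the paper:

- a rectilinear lens focused at infinity;
- the 36 × 24 mm format as the crop-factor reference;
- a hypothetical 3 m working distance;
- hypothetical helper names.

```python
import math

# Olympus values taken from the sensor and lens tables above.
SENSOR_W_MM = 17.3      # sensor width
SENSOR_H_MM = 13.0      # sensor height
IMAGE_W_PX = 4608       # image width in pixels
# Assumption: crop factor is referenced to the 36 x 24 mm (35 mm) format diagonal.
FULL_FRAME_DIAG_MM = math.hypot(36.0, 24.0)


def sensor_diagonal(w_mm: float, h_mm: float) -> float:
    """Sensor diagonal in millimetres."""
    return math.hypot(w_mm, h_mm)


def crop_factor(w_mm: float, h_mm: float) -> float:
    """Ratio of the 35 mm format diagonal to the sensor diagonal."""
    return FULL_FRAME_DIAG_MM / sensor_diagonal(w_mm, h_mm)


def field_of_view_deg(extent_mm: float, focal_mm: float) -> float:
    """Angle subtended by a sensor extent (rectilinear lens focused at infinity)."""
    return math.degrees(2.0 * math.atan(extent_mm / (2.0 * focal_mm)))


def gsd_mm(distance_m: float, focal_mm: float, sensor_w_mm: float, image_w_px: int) -> float:
    """Object-space size of one pixel (ground sample distance) in millimetres."""
    pixel_pitch_mm = sensor_w_mm / image_w_px
    return distance_m * 1000.0 * pixel_pitch_mm / focal_mm


if __name__ == "__main__":
    diag = sensor_diagonal(SENSOR_W_MM, SENSOR_H_MM)
    print(f"crop factor: {crop_factor(SENSOR_W_MM, SENSOR_H_MM):.2f}")
    for f in (14.0, 42.0):
        fov = field_of_view_deg(diag, f)
        gsd = gsd_mm(3.0, f, SENSOR_W_MM, IMAGE_W_PX)  # hypothetical 3 m working distance
        print(f"f = {f:.0f} mm: diagonal FOV {fov:.1f} deg, GSD at 3 m {gsd:.2f} mm/px")
```

With these inputs the crop factor comes out at 2.00. The diagonal field of view is about 75.4° at 14 mm and 28.9° at 42 mm, which suggests the tabulated 75°–29° are diagonal angles. The GSD at 3 m is roughly 0.80 mm/px at the short end of the zoom.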

2.2. Additional Equipment

2.3. Methodology

2.3.1. Image Data Acquisition Protocol

- Parallel protocol. Ideal for detailed reconstructions of specific areas of the vehicle or the accident scene (e.g., skid marks or crash debris). The agent captures five images in a cross pattern (Figure 3, left), with an overlap of at least 80% between images. The master (central) image, shown in red, captures the area of interest. The remaining four images are complementary and are taken to the left and right (shown in purple) and above and below (shown in green) the central image. These images should be taken obliquely, turning the camera towards the center of the area of interest. For a complete reconstruction, each image must capture the whole area of interest.

- Convergent protocol. Ideal for reconstructing 360° 3D point clouds of the accident scene and of the whole vehicles. The agent captures the images along a ring path around the object, keeping a constant distance to it. The overlap between consecutive images must exceed 80% (Figure 3, right). Where the object cannot be captured with a single full ring, a similar procedure based on a half ring can be adopted.
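The 80% overlap requirement of both protocols can be turned into camera positions with the usual parallel-image relation. An image taken at distance D with field of view θ covers a width W = 2D·tan(θ/2). Shifting the camera by (1 − o)·W then keeps an overlap o between the two images.

The sketch below applies this relation to the five-image cross. As a planar-object approximation, it also applies it to the spacing of stations on a ring. The following are assumptions for illustration, not the authors' procedure:

- the helper names and the 3 m distance;
- the yaw and pitch sign conventions;
- the use of the parallel relation for convergent rings.

```python
import math
from typing import List, Tuple


def footprint_width(distance_m: float, fov_deg: float) -> float:
    """Width covered by one image on a plane facing the camera at distance_m."""
    return 2.0 * distance_m * math.tan(math.radians(fov_deg) / 2.0)


def baseline_for_overlap(distance_m: float, fov_deg: float, overlap: float) -> float:
    """Camera shift that leaves the requested overlap between two parallel images."""
    return (1.0 - overlap) * footprint_width(distance_m, fov_deg)


def cross_stations(distance_m: float, fov_h_deg: float, fov_v_deg: float,
                   overlap: float = 0.8) -> List[Tuple[str, float, float, float, float]]:
    """Parallel protocol: master image plus left/right/top/bottom complementary shots.

    Returns (name, x_m, y_m, yaw_deg, pitch_deg). Offsets are measured from the
    master station; positive yaw turns the camera towards +x and positive pitch
    towards +y, so every complementary shot looks back at the centre.
    """
    bx = baseline_for_overlap(distance_m, fov_h_deg, overlap)
    by = baseline_for_overlap(distance_m, fov_v_deg, overlap)
    yaw = math.degrees(math.atan2(bx, distance_m))
    pitch = math.degrees(math.atan2(by, distance_m))
    return [
        ("master", 0.0, 0.0, 0.0, 0.0),
        ("left", -bx, 0.0, yaw, 0.0),
        ("right", bx, 0.0, -yaw, 0.0),
        ("top", 0.0, by, 0.0, -pitch),
        ("bottom", 0.0, -by, 0.0, pitch),
    ]


def ring_stations(radius_m: float, fov_h_deg: float, overlap: float = 0.8,
                  half_ring: bool = False) -> List[Tuple[float, float]]:
    """Convergent protocol: (x, y) camera positions around the object centre (origin).

    Every camera points at the origin. The angular step is chosen so that the chord
    between neighbouring stations does not exceed the parallel baseline for the
    requested overlap (planar-object approximation).
    """
    chord = baseline_for_overlap(radius_m, fov_h_deg, overlap)
    max_step = 2.0 * math.asin(min(1.0, chord / (2.0 * radius_m)))
    arc = math.pi if half_ring else 2.0 * math.pi
    n = math.ceil(arc / max_step)          # number of angular intervals
    step = arc / n
    count = n + 1 if half_ring else n      # a half ring keeps both end stations
    return [(radius_m * math.cos(k * step), radius_m * math.sin(k * step))
            for k in range(count)]


if __name__ == "__main__":
    # Horizontal / vertical field of view of the Olympus at 14 mm (sensor 17.3 x 13 mm).
    fov_h = math.degrees(2 * math.atan(17.3 / 28.0))
    fov_v = math.degrees(2 * math.atan(13.0 / 28.0))
    for name, x, y, yaw, pitch in cross_stations(3.0, fov_h, fov_v):
        print(f"{name:6s} offset=({x:+.2f}, {y:+.2f}) m  yaw={yaw:+.1f}  pitch={pitch:+.1f}")
    print(len(ring_stations(3.0, fov_h)), "stations on a full ring at 3 m")
```

For the Olympus at 14 mm and 3 m, this gives side offsets of about 0.74 m and 26 stations on a full ring. Because the planar approximation ignores object depth, it is best read as an upper bound on spacing rather than a prescription.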

2.3.2. Image Pre-Processing

2.3.3. Feature Extraction and Matching

2.3.4. Image Orientation and Self-calibration

2.3.5. Dense Matching

- Detailed 3D point cloud: a high-resolution point cloud of the damaged areas of the vehicle. The deformation suffered during the crash is obtained by comparing this deformed model with a theoretical (initial, undeformed) model of the vehicle. The theoretical model may be supplied by the vehicle manufacturer or, as in this case study, obtained by measuring undamaged vehicles of the same model with the laser scanner.

- General 3D point cloud: a point cloud of the whole accident scene. It supports the dimensional analysis of the road accident and records the final positions of the vehicles involved.
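The comparison between the deformed model and the theoretical model can be sketched in two steps. First, compute a cloud-to-cloud (nearest-neighbour) distance from each deformed point to the undeformed reference. Second, sample the crush depth at equally spaced stations across the damaged width. Under the usual CRASH3 convention, this is the layout of the L and C1–C6 columns in the energy table.

This is a minimal sketch with the following assumptions:

- Both clouds are already registered in a common vehicle frame.
- x runs across the damaged width.
- The function names and slab half-width are hypothetical.
- Nearest-neighbour distance is a swapped-in technique, not necessarily the authors' comparison method.

```python
import math
from typing import List, Sequence, Tuple

Point = Tuple[float, float, float]


def cloud_to_cloud(deformed: Sequence[Point], reference: Sequence[Point]) -> List[float]:
    """Distance from every deformed point to its nearest reference point (brute force)."""
    return [min(math.dist(p, q) for q in reference) for p in deformed]


def crush_profile(deformed: Sequence[Point], reference: Sequence[Point],
                  x0: float, width: float, n: int = 6, slab: float = 0.03) -> List[float]:
    """Sample n crush depths C1..Cn at equally spaced stations along x in [x0, x0 + width].

    Each Ci is the largest cloud-to-cloud deviation among deformed points whose
    x coordinate lies within +/- slab of the station (NaN if no point falls there).
    """
    dists = cloud_to_cloud(deformed, reference)
    profile = []
    for i in range(n):
        xs = x0 + i * width / (n - 1)
        near = [d for p, d in zip(deformed, dists) if abs(p[0] - xs) <= slab]
        profile.append(max(near) if near else float("nan"))
    return profile


if __name__ == "__main__":
    # Synthetic panel: flat 1.0 x 0.5 m reference, 0.1 m dent in the middle of the width.
    ref = [(i * 0.05, 0.0, k * 0.05) for i in range(21) for k in range(11)]
    dented = [(x, -0.1 if abs(x - 0.5) < 0.21 else 0.0, z) for x, _, z in ref]
    print(crush_profile(dented, ref, x0=0.0, width=1.0))
```

The brute-force search is O(N·M). For real scans, a spatial index such as a k-d tree would normally replace the inner `min`.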

2.3.6. Energy Analysis of the Road Accident

3. Experimental Results

3.1. Data Acquisition Protocol

| Vehicle | Wheelbase (m) | Length (m) | Width (m) | Track (m) | Mass (kg) | NHTSA category |
|---|---|---|---|---|---|---|
| Nissan Serena SLX | 2.735 | 4.320 | 1.695 | 1.463 | 1480 | 3 |
| Fiat Scudo Combi | 3.000 | 4.800 | 1.900 | 1.574 | 1722 | 4 |

3.2. Photogrammetric Processing

3.3. Energy Analysis of the Accident

| Vehicle | L (m) | C1 (m) | C2 (m) | C3 (m) | C4 (m) | C5 (m) | C6 (m) | d0 | d1 | Ed (J) |
|---|---|---|---|---|---|---|---|---|---|---|
| Nissan Serena SLX | 1.62 | 0.05 | 0.1 | 0.12 | 0.11 | 0.05 | 0.03 | 89.31 | 621.16 | 14011.56 |
| Fiat Scudo Combi | 1.05 | 0.02 | 0.04 | 0.06 | 0.05 | 0.06 | 0.03 | 42.64 | 586.94 | 3787.23 |
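The columns of this table are the inputs of a crush-energy calculation: damage width L, six crush depths C1–C6, stiffness coefficients d0 and d1, and the resulting deformation energy Ed. The sketch below implements the textbook CRASH3 six-point expression, written with Prasad-style coefficients: A = d0·d1, B = d1², G = d0²/2.

This is an assumption rather than the authors' computation. The section does not state the exact formula or the units of d0 and d1, and the sketch is not claimed to reproduce the Ed column.

```python
from typing import Sequence


def crash3_energy(width_m: float, crush_m: Sequence[float], d0: float, d1: float) -> float:
    """Six-point CRASH3 crush energy in joules (assumed textbook form).

    E = (L/5) * [ A/2 * (C1 + 2C2 + 2C3 + 2C4 + 2C5 + C6)
                + B/6 * (C1^2 + 2C2^2 + 2C3^2 + 2C4^2 + 2C5^2 + C6^2
                         + C1C2 + C2C3 + C3C4 + C4C5 + C5C6)
                + 5G ]
    with A = d0*d1 and B = d1**2 (force per unit width F/w = A + B*C) and
    G = d0**2 / 2 (energy per unit width absorbed before permanent crush).
    """
    if len(crush_m) != 6:
        raise ValueError("expected six crush measurements C1..C6")
    c1, c2, c3, c4, c5, c6 = crush_m
    a, b, g = d0 * d1, d1 ** 2, d0 ** 2 / 2.0
    linear = c1 + 2 * (c2 + c3 + c4 + c5) + c6
    quadratic = (c1 ** 2 + 2 * (c2 ** 2 + c3 ** 2 + c4 ** 2 + c5 ** 2) + c6 ** 2
                 + c1 * c2 + c2 * c3 + c3 * c4 + c4 * c5 + c5 * c6)
    return width_m / 5.0 * (a / 2.0 * linear + b / 6.0 * quadratic + 5.0 * g)
```

For a uniform crush C, the expression reduces to L·(d0 + d1·C)²/2. This gives a quick consistency check on any implementation of the formula.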

4. Conclusions

Acknowledgments

Author Contributions

Conflicts of Interest

© 2015 by the authors; licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution license (http://creativecommons.org/licenses/by/4.0/).

Morales, A.; Gonzalez-Aguilera, D.; Gutiérrez, M.A.; López, A.I. Energy Analysis of Road Accidents Based on Close-Range Photogrammetry. Remote Sens. 2015, 7, 15161–15178. https://doi.org/10.3390/rs71115161