Automatic Detection and Segmentation of Columns in As-Built Buildings from Point Clouds

Abstract

1. Introduction

2. Methodology

2.1. Building Rasterization

2.2. Column Detection

2.3. Column Segmentation and Parameterization

3. Results and Discussion

3.1. Data and Instruments

| Technical characteristic | Value |
|---|---|
| Measurement range | 0.6 m to 330 m |
| Ranging error (at 25 m, one sigma) | ±2 mm |
| Step size (vertical/horizontal) | 0.009°/0.009° |
| Field of view (vertical/horizontal) | 300°/360° |
| Beam divergence | 0.011° |
| Measurement rate (points per second) | 122,000–976,000 |
| Laser wavelength | 1550 nm |
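To relate the scanner specification to the 0.08 m raster used in Section 3.2, a small-angle estimate of the spacing between neighbouring points is range × angular step (in radians). The sketch below is illustrative only: it assumes a surface perpendicular to the beam and ignores beam divergence and incidence angle, and the helper name `point_spacing` is hypothetical.

```python
import math

# Angular step size from the specification table (vertical and horizontal).
STEP_DEG = 0.009


def point_spacing(range_m: float, step_deg: float = STEP_DEG) -> float:
    """Small-angle estimate of the spacing (m) between adjacent points
    on a surface perpendicular to the beam at the given range."""
    return range_m * math.radians(step_deg)


for r in (5.0, 10.0, 25.0):
    print(f"range {r:4.1f} m -> spacing {point_spacing(r) * 1000:.2f} mm")
```

Under these assumptions the spacing is about 0.8 mm at 5 m, 1.6 mm at 10 m and 3.9 mm at 25 m, i.e. at 10 m roughly fifty times finer than a 0.08 m raster cell.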

3.2. Building Rasterization

| Round cross-section | Case study 1.a | Case study 1.b | Case study 1.c | Case study 1.d |
|---|---|---|---|---|
| Point cloud size (points) | 790,527 | 380,995 | 252,067 | 454,849 |
| Raster resolution (m) | 0.08 | 0.08 | 0.08 | 0.08 |
| Image size (pixels) | 603 × 707 | 557 × 594 | 511 × 544 | 572 × 707 |
| Rectangular cross-section | Case study 2.a | Case study 2.b | Case study 2.c | Case study 2.d |
| Point cloud size (points) | 488,333 | 247,832 | 211,011 | 288,571 |
| Raster resolution (m) | 0.08 | 0.08 | 0.08 | 0.08 |
| Image size (pixels) | 494 × 461 | 484 × 430 | 536 × 379 | 447 × 398 |
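This excerpt does not describe how the XY raster is built. As an illustration only, the sketch below assumes the simplest variant: points are projected onto the XY plane and each pixel stores the number of points that fall in its 0.08 m cell. The function name `rasterize_xy` and the point-count pixel value are assumptions, not the authors' method. Under this scheme the image size in pixels is roughly the XY extent of the cloud divided by the resolution.

```python
import numpy as np


def rasterize_xy(points: np.ndarray, resolution: float = 0.08) -> np.ndarray:
    """Project an (N, 3) point cloud onto the XY plane and count points per cell.

    Assumption: the pixel value is the number of points in the cell; any height
    filtering or other pixel attribute used by the authors is not modelled here.
    """
    xy = points[:, :2]
    origin = xy.min(axis=0)
    # Column (x) and row (y) index of every point relative to the minimum corner.
    idx = np.floor((xy - origin) / resolution).astype(int)
    n_cols, n_rows = idx.max(axis=0) + 1
    image = np.zeros((n_rows, n_cols), dtype=np.int64)
    # np.add.at accumulates correctly when several points share the same cell.
    np.add.at(image, (idx[:, 1], idx[:, 0]), 1)
    return image


# Usage: a synthetic 4 m x 2 m x 3 m box of random points.
rng = np.random.default_rng(0)
pts = rng.uniform([0.0, 0.0, 0.0], [4.0, 2.0, 3.0], size=(10_000, 3))
img = rasterize_xy(pts)
print(img.shape, img.sum())
```

Counting points per cell keeps the raster cheap to compute even for clouds of several hundred thousand points; other pixel attributes (e.g. height range per cell) could be swapped in at the `np.add.at` step.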

3.3. Column Detection

| Round cross-section | Case study 1.a | Case study 1.b | Case study 1.c | Case study 1.d |
|---|---|---|---|---|
| Recall | 0.95 | 0.68 | 0.63 | 0.89 |
| Precision | 1 | 1 | 1 | 1 |
| F1 score | 0.97 | 0.81 | 0.77 | 0.94 |
| Rectangular cross-section | Case study 2.a | Case study 2.b | Case study 2.c | Case study 2.d |
| Recall | 0.9 | 0.9 | 0.7 | 0.8 |
| Precision | 0.69 | 0.64 | 0.53 | 0.67 |
| F1 score | 0.78 | 0.75 | 0.61 | 0.72 |
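The scores in the table follow the standard definitions: recall = TP/(TP + FN), precision = TP/(TP + FP), and F1 is their harmonic mean. The sketch below computes them and recomputes F1 from the reported round-column values; the true/false positive counts behind the table are not given in this excerpt, so the counts in the test are hypothetical.

```python
def f1_score(precision: float, recall: float) -> float:
    """Harmonic mean of precision and recall (0 when both are 0)."""
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)


def detection_scores(tp: int, fp: int, fn: int) -> tuple[float, float, float]:
    """Recall, precision and F1 from true positives, false positives and false negatives."""
    recall = tp / (tp + fn) if tp + fn else 0.0
    precision = tp / (tp + fp) if tp + fp else 0.0
    return recall, precision, f1_score(precision, recall)


# Recompute F1 from the (rounded) recall and precision reported for round columns.
reported = {"1.a": (0.95, 1.0), "1.b": (0.68, 1.0), "1.c": (0.63, 1.0), "1.d": (0.89, 1.0)}
for case, (r, p) in reported.items():
    print(case, round(f1_score(p, r), 2))
```

This reproduces the F1 row for case studies 1.a to 1.d (0.97, 0.81, 0.77, 0.94). For the rectangular cases, recomputing from the two-decimal values can differ from the table in the second decimal (e.g. 0.60 for 2.c), since the inputs are themselves rounded.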

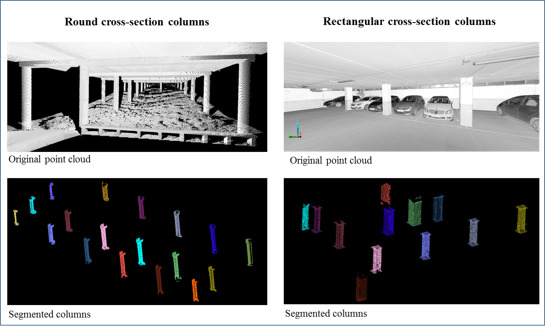

3.4. Column Segmentation

4. Conclusions

- The proposed methodology detects columns robustly without requiring manual cleaning of the data, which minimizes processing time.

- The detection step operates under different levels of data completeness and is therefore robust to partial occlusions and clutter, which are very frequent in indoor environments.

- False positives occur, especially when other elements with the same shape and size as columns are present in the XY raster. For rectangular cross-sections, false positives such as wall corners could be identified using information from other elements of the scene, such as walls.

- Because the methodology is robust to incomplete data, acquiring the columns from every viewpoint is unnecessary, which minimizes acquisition time.

- A coarse raster resolution (0.08 m in all case studies) is sufficient for column detection.

Acknowledgments

Author Contributions

Conflicts of Interest


© 2015 by the authors; licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution license (http://creativecommons.org/licenses/by/4.0/).


Díaz-Vilariño, L.; Conde, B.; Lagüela, S.; Lorenzo, H. Automatic Detection and Segmentation of Columns in As-Built Buildings from Point Clouds. Remote Sens. 2015, 7, 15651–15667. https://doi.org/10.3390/rs71115651
