Mapping Urban Impervious Surface by Fusing Optical and SAR Data at the Decision Level

Abstract

1. Introduction

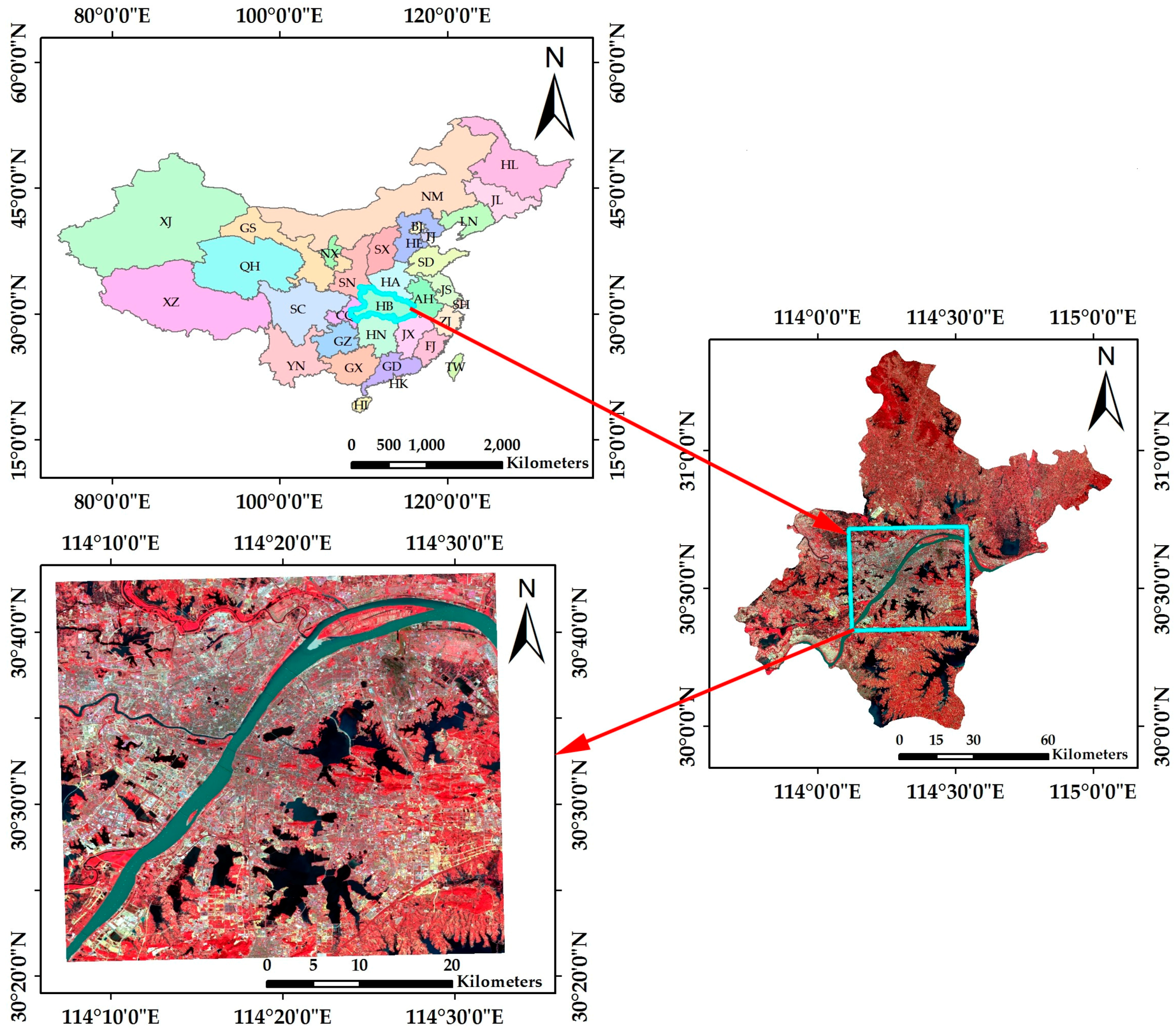

2. Data and the Study Area

2.1. The Study Area

2.2. Data Sources and Preprocessing

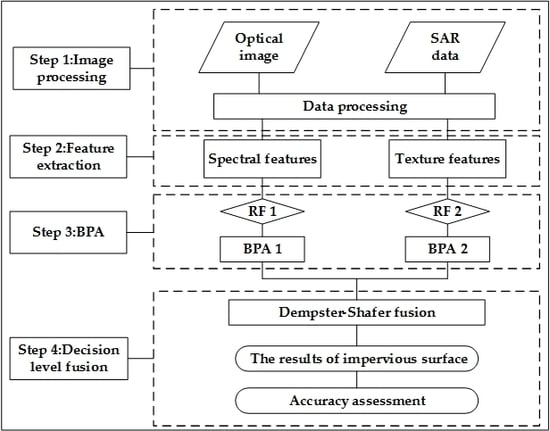

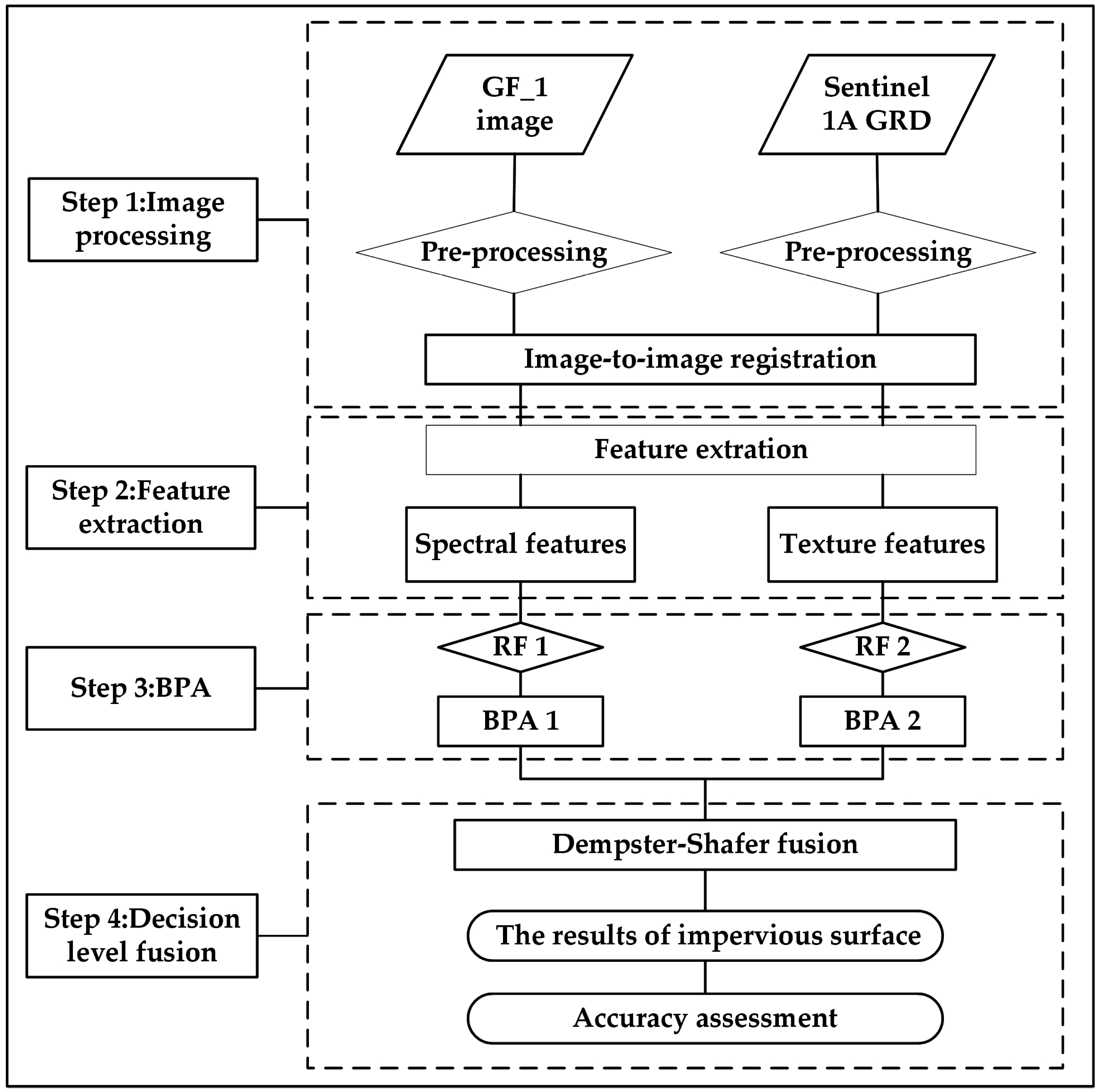

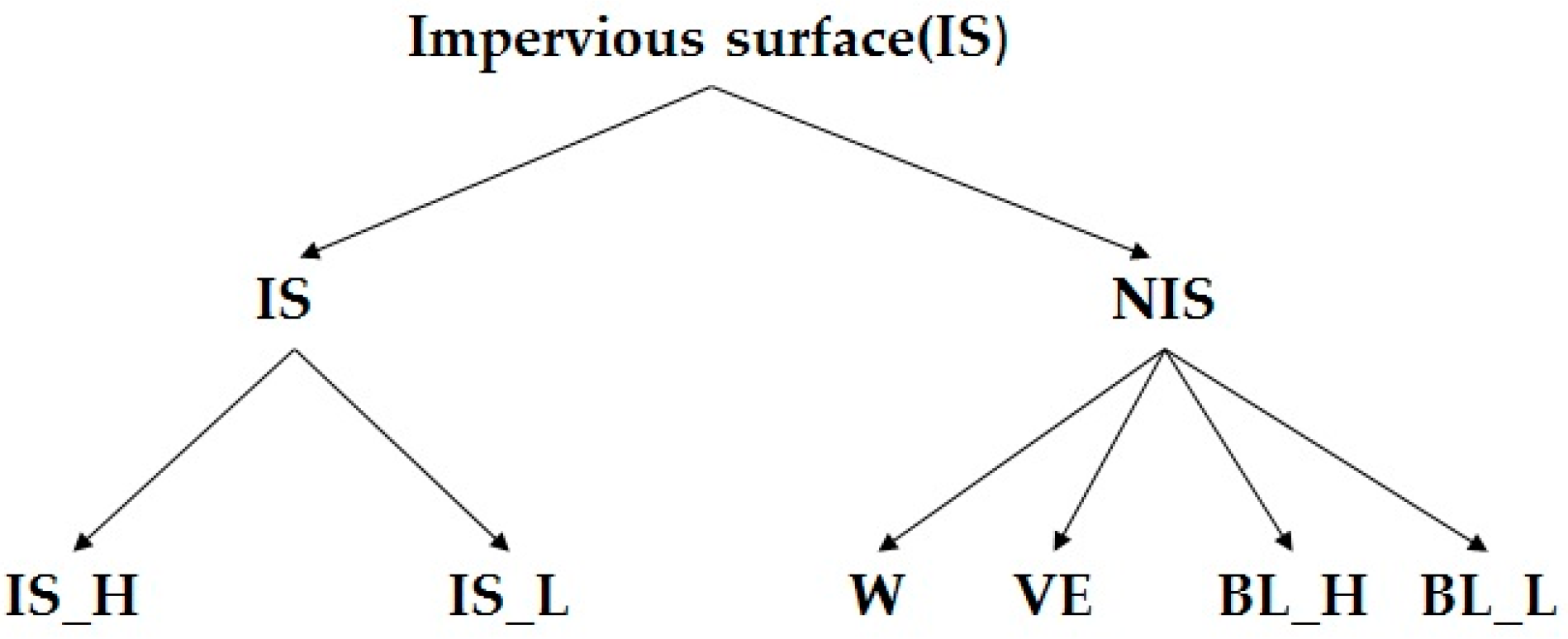

3. Methodology

3.1. Feature Extraction

3.2. Random Forest

- Adding more trees does not cause the RF to over-fit the training set.

- Compared with other classification algorithms, the RF is relatively robust to noise in the dataset.

- The RF can handle high-dimensional data without prior feature selection. It can process discrete as well as continuous data, and the data do not need to be standardized.
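These properties follow from how the RF is built. Each tree is grown on a bootstrap sample of the training data (bagging, as introduced by Breiman), each split considers only a random subset of the features (the random subspace idea of Ho, adopted in Breiman's random forests), and the forest predicts by majority vote. The following is a minimal, from-scratch Python sketch of that procedure for illustration only. It is not the implementation used in this study, and the Gini split criterion, the square-root rule for the feature subset size, the tree depth, the number of trees and the toy data are all assumptions. Class labels are assumed to be strings, because tuples are used internally for tree nodes.

```python
import random
from collections import Counter


def gini(labels):
    """Gini impurity of a list of class labels."""
    n = len(labels)
    if n == 0:
        return 0.0
    return 1.0 - sum((k / n) ** 2 for k in Counter(labels).values())


def best_split(X, y, features):
    """Lowest weighted-Gini (score, feature, threshold) over the given feature subset."""
    best = None
    for f in features:
        for t in sorted({row[f] for row in X}):
            left = [lab for row, lab in zip(X, y) if row[f] <= t]
            right = [lab for row, lab in zip(X, y) if row[f] > t]
            if not left or not right:
                continue
            score = (len(left) * gini(left) + len(right) * gini(right)) / len(y)
            if best is None or score < best[0]:
                best = (score, f, t)
    return best


def build_tree(X, y, m_try, depth, max_depth, rng):
    """Leaves are class labels; internal nodes are tuples (feature, threshold, left, right)."""
    majority = Counter(y).most_common(1)[0][0]
    if depth >= max_depth or len(set(y)) == 1:
        return majority
    # Random subspace: only m_try randomly drawn features are searched at this node.
    split = best_split(X, y, rng.sample(range(len(X[0])), m_try))
    if split is None:
        return majority
    _, f, t = split
    left = [i for i, row in enumerate(X) if row[f] <= t]
    right = [i for i, row in enumerate(X) if row[f] > t]
    return (f, t,
            build_tree([X[i] for i in left], [y[i] for i in left], m_try, depth + 1, max_depth, rng),
            build_tree([X[i] for i in right], [y[i] for i in right], m_try, depth + 1, max_depth, rng))


def predict_tree(node, row):
    """Walk from the root to a leaf and return its class label."""
    while isinstance(node, tuple):
        f, t, left, right = node
        node = left if row[f] <= t else right
    return node


def fit_forest(X, y, n_trees=25, max_depth=5, seed=0):
    """Bagging: every tree is grown on a bootstrap sample drawn with replacement."""
    rng = random.Random(seed)
    m_try = max(1, int(len(X[0]) ** 0.5))  # assumption: sqrt(number of features) per split
    forest = []
    for _ in range(n_trees):
        idx = [rng.randrange(len(X)) for _ in range(len(X))]
        forest.append(build_tree([X[i] for i in idx], [y[i] for i in idx],
                                 m_try, 0, max_depth, rng))
    return forest


def predict_proba(forest, row):
    """Fraction of trees voting for each class."""
    votes = Counter(predict_tree(tree, row) for tree in forest)
    return {label: n / len(forest) for label, n in votes.items()}


def predict(forest, row):
    """Majority vote across the trees."""
    return Counter(predict_tree(tree, row) for tree in forest).most_common(1)[0][0]


# Toy usage (hypothetical data): two well-separated classes described by two features.
X = [[0.10, 0.20], [0.20, 0.10], [0.15, 0.25], [0.90, 0.80], [0.80, 0.90], [0.85, 0.95]]
y = ["W", "W", "W", "IS", "IS", "IS"]
forest = fit_forest(X, y, n_trees=25, seed=1)
print(predict(forest, [0.05, 0.05]), predict_proba(forest, [0.05, 0.05]))
```

On this separable toy set, `predict(forest, [0.05, 0.05])` returns `"W"`. The vote fractions from `predict_proba` are one possible source of soft, per-class evidence for decision-level fusion; that is an assumption here, not necessarily how the BPAs in Section 3.3.1 were constructed.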

3.3. The Dempster–Shafer (D-S) Theory

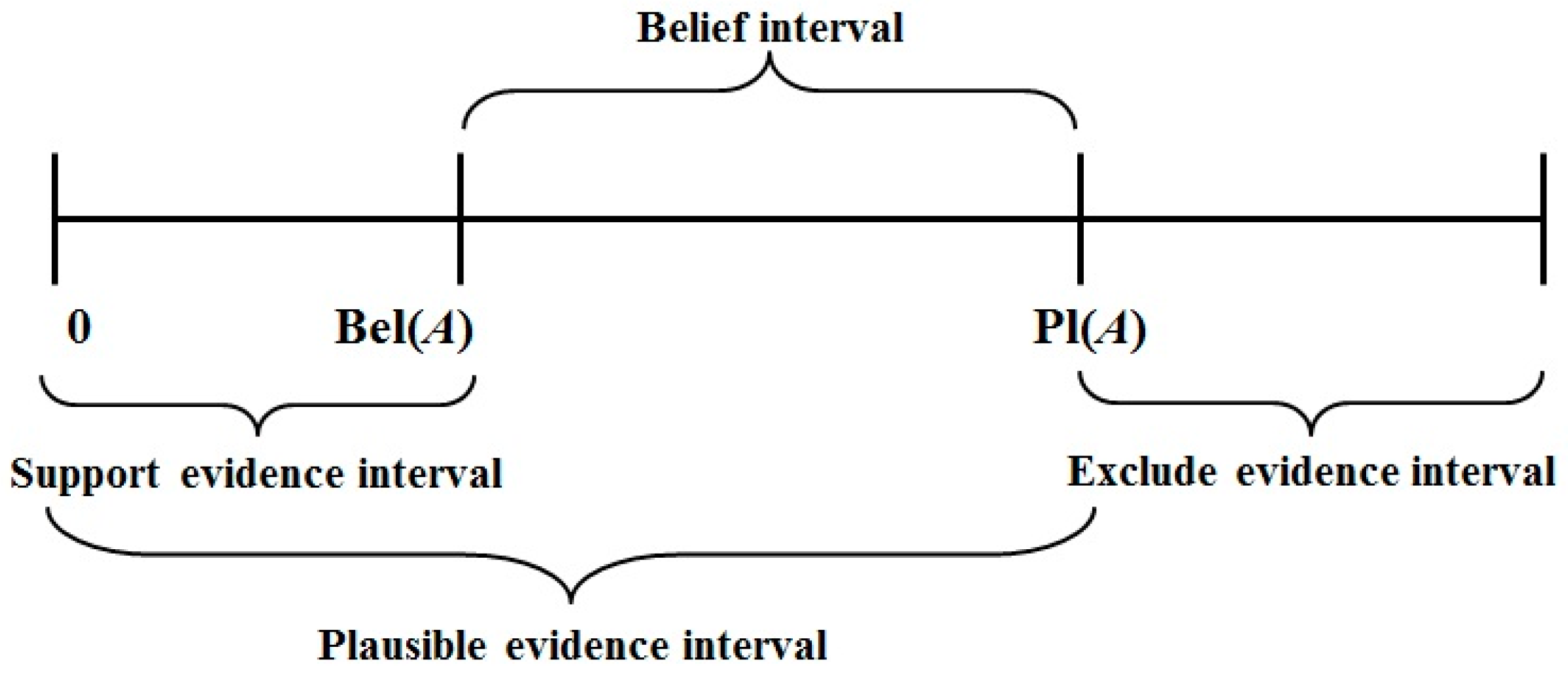

3.3.1. The Construction of the Basic Probability Assignment (BPA) and Uncertainty Interval

3.3.2. Dempster’s Rule of Combination

3.4. Accuracy Assessment

4. Results

4.1. Land Cover Classification from the GF-1/Sentinel-1A Image/DS-Fusion

4.1.1. Land Cover Classification from the GF-1/Sentinel-1A Image

4.1.2. Fusion of Land Covers Derived from the GF-1 Image and the Sentinel-1A Image

4.2. Land Cover Classification from the GF-1 Image/Sentinel-1A Image with Features/D-S Fusion

4.2.1. Land Cover Classification from the GF-1 Image/Sentinel-1A Image with Features

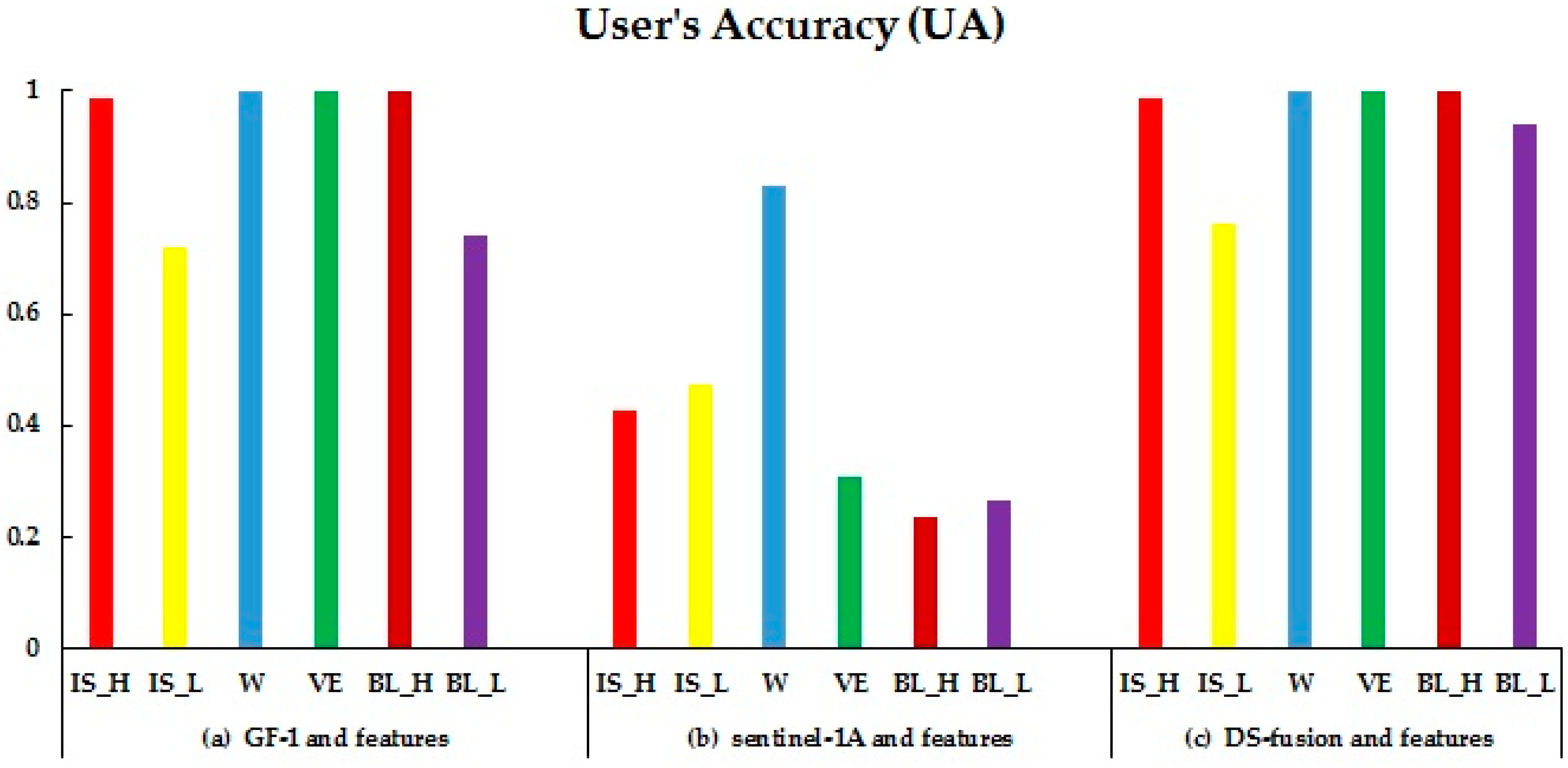

4.2.2. Fusion of Land Covers Derived from the GF-1 and Sentinel-1A Images with Features

5. Discussion

5.1. The Classification Accuracy for Impervious Surfaces and Uncertainty Analysis

5.1.1. The Classification Accuracy for Impervious Surfaces

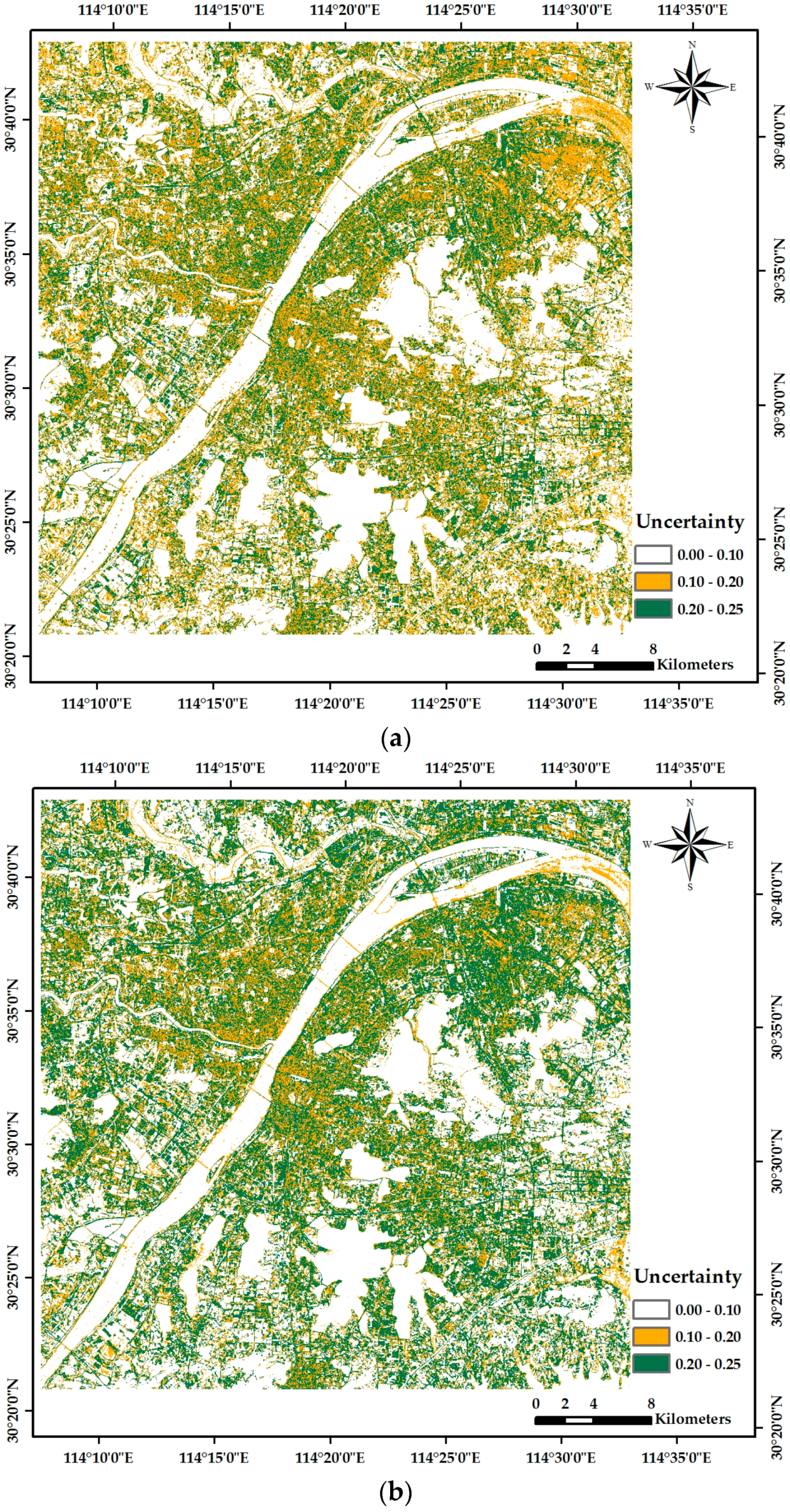

5.1.2. Uncertainty Analysis

5.2. Future Work

6. Conclusions

Acknowledgments

Author Contributions

Conflicts of Interest

References

- Slonecker, E.T.; Jennings, D.B.; Garofalo, D. Remote sensing of impervious surfaces: A review. Remote Sens. Rev. 2001, 20, 227–255.

- Weng, Q. Remote sensing of impervious surfaces in the urban areas: Requirements, methods, and trends. Remote Sens. Environ. 2012, 117, 34–49.

- Arnold, C.L.; Gibbons, C.J. Impervious Surface Coverage: The Emergence of a Key Environmental Indicator. J. Am. Plan. Assoc. 1996, 62, 243–258.

- Li, J.; Song, C.; Cao, L.; Zhu, F.; Meng, X.; Wu, J. Impacts of landscape structure on surface urban heat islands: A case study of Shanghai, China. Remote Sens. Environ. 2011, 115, 3249–3263.

- Hurd, J.D.; Civco, D.L. Temporal Characterization of impervious surfaces for the State of Connecticut. In Proceedings of the ASPRS Annual Conference, Denver, CO, USA, 23–28 May 2004.

- Yang, L.; Huang, C.; Homer, C.G.; Wylie, B.K.; Coan, M.J. An approach for mapping large-area impervious surfaces: Synergistic use of Landsat 7 ETM+ and high spatial resolution imagery. Can. J. Remote Sens. 2003, 29, 230–240.

- Brabec, E.; Schulte, S.; Richards, P.L. Impervious Surfaces and Water Quality: A Review of Current Literature and Its Implications for Watershed Planning. J. Plan. Lit. 2002, 16, 499–514.

- Lu, D.; Li, G.; Kuang, W.; Moran, E. Methods to extract impervious surface areas from satellite images. Int. J. Digit. Earth 2014, 7, 93–112.

- Weng, Q.; Hu, X. Medium spatial resolution satellite imagery for estimating and mapping urban impervious surfaces using LSMA and ANN. IEEE Trans. Geosci. Remote Sens. 2008, 46, 2397–2406.

- Zhang, L.; Weng, Q. Annual dynamics of impervious surface in the Pearl River Delta, China, from 1988 to 2013, using time series Landsat imagery. ISPRS J. Photogramm. Remote Sens. 2016, 113, 86–96.

- Shao, Z.; Liu, C. The integrated use of DMSP-OLS nighttime light and MODIS data for monitoring large-scale impervious surface dynamics: A case study in the Yangtze River Delta. Remote Sens. 2014, 6, 9359–9378.

- Ridd, M.K. Exploring a V-I-S (vegetation-impervious surface-soil) model for urban ecosystem analysis through remote sensing: Comparative anatomy for cities. Int. J. Remote Sens. 1995, 16, 2165–2185.

- Wu, C.; Murray, A.T. Estimating impervious surface distribution by spectral mixture analysis. Remote Sens. Environ. 2003, 84, 493–505.

- Lu, D.; Weng, Q. Use of impervious surface in urban land-use classification. Remote Sens. Environ. 2006, 102, 146–160.

- Deng, C.; Wu, C. The use of single-date MODIS imagery for estimating large-scale urban impervious surface fraction with spectral mixture analysis and machine learning techniques. ISPRS J. Photogramm. Remote Sens. 2013, 86, 100–110.

- Kuang, W.; Liu, J.; Zhang, Z.; Lu, D.; Xiang, B. Spatiotemporal dynamics of impervious surface areas across China during the early 21st century. Chin. Sci. Bull. 2012, 58, 1691–1701.

- Wu, C. Normalized spectral mixture analysis for monitoring urban composition using ETM+ imagery. Remote Sens. Environ. 2004, 93, 480–492.

- Deng, C.; Wu, C. A spatially adaptive spectral mixture analysis for mapping subpixel urban impervious surface distribution. Remote Sens. Environ. 2013, 133, 62–70.

- Xu, H. Analysis of Impervious Surface and its Impact on Urban Heat Environment using the Normalized Difference Impervious Surface Index (NDISI). Photogramm. Eng. Remote Sens. 2010, 76, 557–565.

- Liu, C.; Shao, Z.; Chen, M.; Luo, H. MNDISI: A multi-source composition index for impervious surface area estimation at the individual city scale. Remote Sens. Lett. 2013, 4, 803–812.

- Wang, Z.; Gang, C.; Li, X.; Chen, Y.; Li, J. Application of a normalized difference impervious index (NDII) to extract urban impervious surface features based on Landsat TM images. Int. J. Remote Sens. 2015, 36, 1055–1069.

- Chabaeva, A.; Civco, D.; Prisloe, S. Development of a population density and land use based regression model to calculate the amount of imperviousness. In Proceedings of the ASPRS Annual Conference, Denver, CO, USA, 23–28 May 2004.

- Elvidge, C.D.; Tuttle, B.T.; Sutton, P.C.; Baugh, K.E.; Howard, A.T.; Milesi, C.; Bhaduri, B.; Nemani, R. Global Distribution and Density of Constructed Impervious Surfaces. Sensors 2007, 7, 1962–1979.

- Bauer, M.E.; Loffelholz, B.; Wilson, B. Estimating and mapping impervious surface area by regression analysis of Landsat imagery. In Remote Sensing of Impervious Surfaces; CRC Press: Boca Raton, FL, USA, 2008.

- Lu, D.; Tian, H.; Zhou, G.; Ge, H. Regional mapping of human settlements in southeastern China with multisensor remotely sensed data. Remote Sens. Environ. 2008, 112, 3668–3679.

- Hodgson, M.E.; Jensen, J.R.; Tullis, J.A.; Riordan, K.D.; Archer, C.M. Synergistic Use of Lidar and Color Aerial Photography for Mapping Urban Parcel Imperviousness. Photogramm. Eng. Remote Sens. 2003, 69, 973–980.

- Powell, S.L.; Cohen, W.B.; Yang, Z.; Pierce, J.D.; Alberti, M. Quantification of impervious surface in the Snohomish Water Resources Inventory Area of Western Washington from 1972–2006. Remote Sens. Environ. 2008, 112, 1895–1908.

- Wu, C. Quantifying high-resolution impervious surfaces using spectral mixture analysis. Int. J. Remote Sens. 2009, 30, 2915–2932.

- Lu, D.; Weng, Q. Extraction of urban impervious surfaces from an IKONOS image. Int. J. Remote Sens. 2009, 30, 1297–1311.

- Mohapatra, R.P.; Wu, C. Subpixel Imperviousness Estimation with IKONOS Imagery: An Artificial Neural Network Approach. In Remote Sensing of Impervious Surfaces; CRC Press: Boca Raton, FL, USA, 2008; pp. 21–35.

- Zhang, X.; Xiao, P.; Feng, X. Impervious surface extraction from high-resolution satellite image using pixel- and object-based hybrid analysis. Int. J. Remote Sens. 2013, 34, 4449–4465.

- Weng, Q.; Hu, X.; Liu, H. Estimating impervious surfaces using linear spectral mixture analysis with multitemporal ASTER images. Int. J. Remote Sens. 2009, 30, 4807–4830.

- Im, J.; Lu, Z.; Rhee, J.; Quackenbush, L.J. Impervious surface quantification using a synthesis of artificial immune networks and decision/regression trees from multi-sensor data. Remote Sens. Environ. 2012, 117, 102–113.

- Jiang, L.; Liao, M.; Lin, H.; Yang, L. Synergistic use of optical and InSAR data for urban impervious surface mapping: A case study in Hong Kong. Int. J. Remote Sens. 2009, 30, 2781–2796.

- Leinenkugel, P.; Esch, T.; Kuenzer, C. Settlement detection and impervious surface estimation in the Mekong Delta using optical and SAR remote sensing data. Remote Sens. Environ. 2011, 115, 3007–3019.

- Yang, L.M.; Jiang, L.M.; Lin, H.; Liao, M.S. Quantifying Sub-pixel Urban Impervious Surface through Fusion of Optical and InSAR Imagery. Gisci. Remote Sens. 2009, 46, 161–171.

- Zhang, H.; Zhang, Y.; Lin, H. A comparison study of impervious surfaces estimation using optical and SAR remote sensing images. Int. J. Appl. Earth Obs. Geoinform. 2012, 18, 148–156.

- Zhang, Y.; Zhang, H.; Lin, H. Improving the impervious surface estimation with combined use of optical and SAR remote sensing images. Remote Sens. Environ. 2014, 141, 155–167.

- Zhang, J.; Yang, J.; Zhao, Z.; Li, H.; Zhang, Y. Block-regression based fusion of optical and SAR imagery for feature enhancement. Int. J. Remote Sens. 2010, 31, 2325–2345.

- Dempster, A.P. Upper and lower probabilities induced by a multivalued mapping. Ann. Math. Stat. 1967, 38, 325–339.

- Shafer, G. A Mathematical Theory of Evidence; Princeton University Press: Princeton, NJ, USA, 1976.

- Lein, J.K. Applying evidential reasoning methods to agricultural land cover classification. Int. J. Remote Sens. 2003, 24, 4161–4180.

- Cayuela, L.; Golicher, D.; Salas Rey, J.; Rey Benayas, J.M. Classification of a complex landscape using Dempster-Shafer theory of evidence. Int. J. Remote Sens. 2006, 27, 1951–1971.

- Chust, G.; Ducrot, D.; Pretus, J.L. Land cover discrimination potential of radar multitemporal series and optical multispectral images in a Mediterranean cultural landscape. Int. J. Remote Sens. 2004, 25, 3513–3528.

- Ran, Y.; Li, X.; Lu, L.; Li, Z. Large-scale land cover mapping with the integration of multi-source information based on the Dempster-Shafer theory. Int. J. Geogr. Inf. Sci. 2012, 26, 169–191.

- Lu, L.; Xie, W.; Zhang, J.; Huang, G.; Li, Q.; Zhao, Z. Woodland extraction from high-resolution CASMSAR data based on Dempster-Shafer evidence theory fusion. Remote Sens. 2015, 7, 4068–4091.

- GF-1 Images. Geospatial Data Cloud. Available online: http://www.gscloud.cn/ (accessed on 6 October 2015).

- Sentinel-1A Images. Sentinels Scientific Data Hub. Available online: https://scihub.copernicus.eu/ (accessed on 11 October 2015).

- Haralick, R.M.; Shanmugam, K.; Dinstein, I. Textural features for image classification. IEEE Trans. Syst. Man Cybern. 1973, 3, 610–621.

- Ouma, Y.O.; Tateishi, R. Optimization of Second-Order Grey-Level Texture in High-Resolution Imagery for Statistical Estimation of Above-Ground Biomass. J. Environ. Inform. 2006, 8, 70–85.

- Dye, M.; Mutanga, O.; Ismail, R. Combining spectral and textural remote sensing variables using random forests: Predicting the age of Pinus patula forests in KwaZulu-Natal, South Africa. J. Spat. Sci. 2012, 57, 193–211.

- Wang, H.; Zhao, Y.; Pu, R.; Zhang, Z. Mapping Robinia pseudoacacia forest health conditions by using combined spectral, spatial, and textural information extracted from IKONOS imagery and random forest classifier. Remote Sens. 2015, 7, 9020–9044.

- Breiman, L. Bagging Predictors. Mach. Learn. 1996, 24, 123–140.

- Breiman, L. Random Forests. Mach. Learn. 2001, 45, 5–32.

- Ho, T.K. The random subspace method for constructing decision forests. IEEE Trans. Pattern Anal. Mach. Intell. 1998, 20, 832–844.

- Deng, C.; Wu, C. BCI: A biophysical composition index for remote sensing of urban environments. Remote Sens. Environ. 2012, 127, 247–259.

- Shao, Z.; Tian, Y.; Shen, X. BASI: A new index to extract built-up areas from high-resolution remote sensing images by visual attention model. Remote Sens. Lett. 2014, 5, 305–314.

| Texture | Equations | Description |
|---|---|---|
| Mean | | Mean is the average value in the local window [50]. |
| Correlation | | Correlation measures the linear dependency of gray levels in the image; it depends on the variance values in the local window [50,51]. |
| Variance | | Variance measures the spread of gray levels in the local window [51,52]. |
| Homogeneity | | Homogeneity measures the smoothness of the image texture [50,51]. |
| Contrast | | Contrast measures the variations in the GLCM [50,51]. |
| Dissimilarity | | Dissimilarity is similar to the contrast measurement [50,51]. |
| Entropy | | Entropy measures the degree of disorder in an image [50,52]. |
| Angular Second Moment (ASM) | | ASM is a measure of textural uniformity [50,52]. |
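The table describes the texture measures without formulas. A minimal Python sketch of the eight measures, computed from a normalized grey-level co-occurrence matrix (GLCM) P(i, j) with common Haralick-style definitions (for example, contrast = Σ P(i, j)(i − j)² and entropy = −Σ P(i, j) ln P(i, j)), is given below. These definitions may differ in detail from the exact forms used in the paper. The one-pixel horizontal offset, the symmetric counting, the number of grey levels, and computing the measures for a single window rather than a moving window over the image are assumptions; Mean and Variance are taken over the row index i.

```python
import math


def glcm(img, levels, dx=1, dy=0, symmetric=True):
    """Normalized co-occurrence matrix P[i][j] for the pixel offset (dx, dy).

    img holds integer grey levels in [0, levels). The offset and the symmetric
    counting are assumptions, not parameters taken from the paper.
    """
    P = [[0.0] * levels for _ in range(levels)]
    rows, cols = len(img), len(img[0])
    for r in range(rows):
        for c in range(cols):
            r2, c2 = r + dy, c + dx
            if 0 <= r2 < rows and 0 <= c2 < cols:
                i, j = img[r][c], img[r2][c2]
                P[i][j] += 1
                if symmetric:
                    P[j][i] += 1
    total = sum(map(sum, P))
    return [[v / total for v in row] for row in P]


def texture_features(P):
    """Haralick-style measures computed from a normalized GLCM."""
    cells = [(i, j, p) for i, row in enumerate(P) for j, p in enumerate(row)]
    mu_i = sum(i * p for i, _, p in cells)
    mu_j = sum(j * p for _, j, p in cells)
    var_i = sum(p * (i - mu_i) ** 2 for i, _, p in cells)
    var_j = sum(p * (j - mu_j) ** 2 for _, j, p in cells)
    cov = sum(p * (i - mu_i) * (j - mu_j) for i, j, p in cells)
    # Convention (assumption): correlation is set to 1 when a variance is zero.
    corr = cov / math.sqrt(var_i * var_j) if var_i > 0 and var_j > 0 else 1.0
    return {
        "mean": mu_i,
        "variance": var_i,
        "correlation": corr,
        "homogeneity": sum(p / (1 + (i - j) ** 2) for i, j, p in cells),
        "contrast": sum(p * (i - j) ** 2 for i, j, p in cells),
        "dissimilarity": sum(p * abs(i - j) for i, j, p in cells),
        "entropy": -sum(p * math.log(p) for _, _, p in cells if p > 0),
        "asm": sum(p * p for _, _, p in cells),
    }


# A small 4 x 4 test window with grey levels 0 to 3.
img = [[0, 0, 1, 1], [0, 0, 1, 1], [0, 2, 2, 2], [2, 2, 3, 3]]
P = glcm(img, levels=4)
feats = texture_features(P)
print({k: round(v, 3) for k, v in feats.items()})
```

For this window the symmetric horizontal GLCM has 24 pair counts, giving a contrast of 14/24 ≈ 0.583; a constant window gives contrast 0, homogeneity 1 and ASM 1, as expected from the descriptions in the table.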

| Source | IS_H | IS_L | W | VE | BL_H | BL_L | m(Θ) |
|---|---|---|---|---|---|---|---|
| Optical, m1(·) | 0 | 0 | 0.91 | 0 | 0 | 0 | 0.09 |
| SAR, m2(·) | 0.02 | 0 | 0.29 | 0.02 | 0.03 | 0.42 | 0.22 |
| Combined, (m1 ⊕ m2)(·) | 0 | 0 | 0.89 | 0 | 0 | 0.07 | 0.04 |
| Combination result | A = W | | | | | | |
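The combined row can be reproduced with Dempster's rule. When each source assigns mass only to single classes and to the whole frame Θ, as here, the combined mass of a class A is m(A) = [m1(A)m2(A) + m1(A)m2(Θ) + m1(Θ)m2(A)] / (1 − K), where the conflict K is the total of the products m1(B)m2(C) over pairs of different single classes B ≠ C. The Python sketch below implements only this restricted case (general focal sets would require set intersections). The `THETA` key and the maximum-mass decision rule are assumptions.

```python
from itertools import product

THETA = "THETA"  # hypothetical key standing for the whole frame of discernment


def combine(m1, m2):
    """Dempster's rule for BPAs whose focal elements are single classes or THETA.

    Returns the normalized combined BPA and the conflict K.
    """
    raw, conflict = {}, 0.0
    for (a, wa), (b, wb) in product(m1.items(), m2.items()):
        if a == b or b == THETA:      # intersection is a (THETA if both are THETA)
            key = a
        elif a == THETA:              # THETA intersected with b is b
            key = b
        else:                         # two different classes: empty intersection
            conflict += wa * wb
            continue
        raw[key] = raw.get(key, 0.0) + wa * wb
    if conflict >= 1.0:
        raise ValueError("total conflict: Dempster's rule is undefined")
    return {k: v / (1.0 - conflict) for k, v in raw.items()}, conflict


# Masses for the example pixel in the table (zero masses omitted).
m1 = {"W": 0.91, THETA: 0.09}  # optical
m2 = {"IS_H": 0.02, "W": 0.29, "VE": 0.02, "BL_H": 0.03, "BL_L": 0.42, THETA: 0.22}  # SAR
m12, conflict = combine(m1, m2)
# Assumed decision rule: the single class with the largest combined mass.
decision = max((k for k in m12 if k != THETA), key=m12.get)
print(decision, round(conflict, 4), {k: round(v, 3) for k, v in m12.items()})
```

With the rounded inputs from the table, K = 0.91 × 0.49 = 0.4459 and m(W) = 0.4902 / 0.5541 ≈ 0.8847, which agrees with the tabulated 0.89 to within the rounding of the two-decimal inputs; m(BL_L) ≈ 0.07 and m(Θ) ≈ 0.04 match, and W is the decision.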

| Classes | IS_H | IS_L | W | VE | BL_H | BL_L |
|---|---|---|---|---|---|---|
| (a) GF-1 | | | | | | |
| IS_H | 67 | 0 | 0 | 0 | 0 | 0 |
| IS_L | 9 | 75 | 6 | 0 | 4 | 17 |
| W | 0 | 0 | 49 | 0 | 0 | 0 |
| VE | 0 | 0 | 0 | 56 | 0 | 0 |
| BL_H | 4 | 0 | 0 | 0 | 59 | 0 |
| BL_L | 0 | 11 | 0 | 0 | 4 | 46 |
| OA | 86.49% | Kappa | 0.84 | | | |
| (b) Sentinel-1A | | | | | | |
| IS_H | 23 | 9 | 3 | 7 | 12 | 12 |
| IS_L | 23 | 42 | 0 | 7 | 15 | 2 |
| W | 3 | 1 | 41 | 1 | 1 | 4 |
| VE | 14 | 15 | 1 | 16 | 7 | 10 |
| BL_H | 9 | 12 | 8 | 8 | 13 | 19 |
| BL_L | 8 | 7 | 2 | 17 | 19 | 16 |
| OA | 37.10% | Kappa | 0.24 | | | |
| (c) DS-fusion | | | | | | |
| IS_H | 70 | 2 | 0 | 0 | 3 | 3 |
| IS_L | 7 | 81 | 0 | 0 | 5 | 10 |
| W | 0 | 0 | 55 | 0 | 0 | 0 |
| VE | 1 | 0 | 0 | 56 | 0 | 0 |
| BL_H | 2 | 0 | 0 | 0 | 54 | 0 |
| BL_L | 0 | 3 | 0 | 0 | 5 | 50 |
| OA | 89.93% | Kappa | 0.88 | | | |
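Overall accuracy (OA) is the fraction of test samples on the diagonal, and Cohen's kappa corrects it for chance agreement: κ = (p_o − p_e) / (1 − p_e), with p_e = Σ_k (row total_k × column total_k) / N². The column totals are identical in all three matrices (80, 86, 55, 56, 67 and 63 samples, N = 407), so the Python sketch below takes columns as reference classes and rows as classified classes; that orientation is an assumption, and kappa is unchanged if the matrix is transposed.

```python
def overall_accuracy_and_kappa(cm):
    """cm[r][c]: samples classified as class r whose reference class is c (assumed orientation)."""
    n = sum(map(sum, cm))
    p_o = sum(cm[k][k] for k in range(len(cm))) / n  # observed agreement (OA)
    row_totals = [sum(row) for row in cm]
    col_totals = [sum(col) for col in zip(*cm)]
    p_e = sum(r * c for r, c in zip(row_totals, col_totals)) / n ** 2  # chance agreement
    return p_o, (p_o - p_e) / (1 - p_e)


# Matrix (a), GF-1; class order IS_H, IS_L, W, VE, BL_H, BL_L.
gf1 = [
    [67, 0, 0, 0, 0, 0],
    [9, 75, 6, 0, 4, 17],
    [0, 0, 49, 0, 0, 0],
    [0, 0, 0, 56, 0, 0],
    [4, 0, 0, 0, 59, 0],
    [0, 11, 0, 0, 4, 46],
]
oa, kappa = overall_accuracy_and_kappa(gf1)
print(f"OA = {oa:.2%}, kappa = {kappa:.2f}")
```

Applied to matrix (a), the sketch reproduces OA = 86.49% (352 of 407 samples) and κ = 0.84; matrix (c) gives 89.93% and 0.88.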

| Classes | IS_H | IS_L | W | VE | BL_H | BL_L |
|---|---|---|---|---|---|---|
| (a) GF-1 and features | | | | | | |
| IS_H | 71 | 0 | 0 | 0 | 0 | 1 |
| IS_L | 9 | 75 | 0 | 0 | 4 | 16 |
| W | 0 | 0 | 55 | 0 | 0 | 0 |
| VE | 0 | 0 | 0 | 56 | 0 | 0 |
| BL_H | 0 | 0 | 0 | 0 | 58 | 0 |
| BL_L | 0 | 11 | 0 | 0 | 5 | 46 |
| OA | 88.70% | Kappa | 0.86 | | | |
| (b) Sentinel-1A and features | | | | | | |
| IS_H | 20 | 4 | 2 | 4 | 9 | 8 |
| IS_L | 34 | 58 | 0 | 10 | 19 | 1 |
| W | 6 | 0 | 53 | 0 | 0 | 5 |
| VE | 9 | 15 | 0 | 17 | 6 | 8 |
| BL_H | 7 | 9 | 0 | 17 | 20 | 32 |
| BL_L | 4 | 0 | 0 | 8 | 13 | 9 |
| OA | 43.49% | Kappa | 0.32 | | | |
| (c) DS-fusion and features | | | | | | |
| IS_H | 71 | 0 | 0 | 0 | 0 | 1 |
| IS_L | 9 | 86 | 0 | 0 | 4 | 14 |
| W | 0 | 0 | 55 | 0 | 0 | 0 |
| VE | 0 | 0 | 0 | 56 | 0 | 0 |
| BL_H | 0 | 0 | 0 | 0 | 60 | 0 |
| BL_L | 0 | 0 | 0 | 0 | 3 | 48 |
| OA | 92.38% | Kappa | 0.91 | | | |
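The same matrices also yield per-class producer's accuracy (correct samples divided by the reference, i.e. column, total) and user's accuracy (correct samples divided by the classified, i.e. row, total). A short Python sketch follows; it assumes rows are classified classes and columns are reference classes, which is consistent with the column totals being identical in every matrix.

```python
LABELS = ["IS_H", "IS_L", "W", "VE", "BL_H", "BL_L"]


def producer_user_accuracy(cm, labels):
    """Per class: (producer's, user's) accuracy; rows = classified, columns = reference (assumed)."""
    result = {}
    for k, name in enumerate(labels):
        reference_total = sum(row[k] for row in cm)  # column total
        classified_total = sum(cm[k])                # row total
        pa = cm[k][k] / reference_total if reference_total else float("nan")
        ua = cm[k][k] / classified_total if classified_total else float("nan")
        result[name] = (pa, ua)
    return result


# Matrix (c), DS fusion with features.
fused_features = [
    [71, 0, 0, 0, 0, 1],
    [9, 86, 0, 0, 4, 14],
    [0, 0, 55, 0, 0, 0],
    [0, 0, 0, 56, 0, 0],
    [0, 0, 0, 0, 60, 0],
    [0, 0, 0, 0, 3, 48],
]
pa_ua = producer_user_accuracy(fused_features, LABELS)
for name, (pa, ua) in pa_ua.items():
    print(f"{name}: PA = {pa:.1%}, UA = {ua:.1%}")
```

For matrix (c), IS_L reaches a producer's accuracy of 86/86 = 100%, but its user's accuracy is 86/113 ≈ 76%, because 27 samples of other classes (9 IS_H, 4 BL_H and 14 BL_L) are labelled IS_L.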

| Classes | GF-1: IS | GF-1: NIS | Sentinel-1A: IS | Sentinel-1A: NIS | DS-Fusion: IS | DS-Fusion: NIS | GF-1 and Features: IS | GF-1 and Features: NIS | Sentinel-1A and Features: IS | Sentinel-1A and Features: NIS | DS Fusion and Features: IS | DS Fusion and Features: NIS |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| IS | 151 | 27 | 97 | 58 | 160 | 21 | 155 | 21 | 116 | 53 | 166 | 19 |
| NIS | 15 | 214 | 69 | 183 | 6 | 220 | 11 | 220 | 50 | 188 | 0 | 222 |
| Kappa | 0.79 | | 0.35 | | 0.87 | | 0.84 | | 0.48 | | 0.91 | |
| OA | 89.68% | | 68.80% | | 93.37% | | 92.14% | | 74.70% | | 95.33% | |
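The two-class (IS/NIS) counts follow from the six-class matrices by summing blocks of cells. Treating IS_H and IS_L as impervious surface (IS) and W, VE, BL_H and BL_L as non-impervious surface (NIS) reproduces the GF-1 counts exactly (151, 27, 15, 214), which supports that grouping. The Python sketch below performs the merge; the merge itself does not depend on whether rows or columns hold the reference classes.

```python
LABELS = ["IS_H", "IS_L", "W", "VE", "BL_H", "BL_L"]
IS_CLASSES = {"IS_H", "IS_L"}  # impervious; all other classes count as NIS


def collapse_to_binary(cm, labels):
    """Merge a multi-class confusion matrix into a 2 x 2 one.

    out[a][b] counts samples whose row class falls in group a and whose column
    class falls in group b (0 = IS, 1 = NIS).
    """
    out = [[0, 0], [0, 0]]
    for r, row_label in enumerate(labels):
        for c, col_label in enumerate(labels):
            a = 0 if row_label in IS_CLASSES else 1
            b = 0 if col_label in IS_CLASSES else 1
            out[a][b] += cm[r][c]
    return out


# Six-class matrix (a), GF-1.
gf1 = [
    [67, 0, 0, 0, 0, 0],
    [9, 75, 6, 0, 4, 17],
    [0, 0, 49, 0, 0, 0],
    [0, 0, 0, 56, 0, 0],
    [4, 0, 0, 0, 59, 0],
    [0, 11, 0, 0, 4, 46],
]
binary = collapse_to_binary(gf1, LABELS)
print(binary, f"OA = {(binary[0][0] + binary[1][1]) / sum(map(sum, binary)):.2%}")
```

The OA for GF-1 is then (151 + 214)/407 ≈ 89.68%, as tabulated.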

| Fusion scheme | Minimum | Maximum | Mean | Standard Deviation |
|---|---|---|---|---|
| Fusing the GF-1 and Sentinel-1A images | 0 | 0.25 | 0.11 | 0.088 |
| Fusing the GF-1 and Sentinel-1A images and their features | 0 | 0.25 | 0.11 | 0.093 |
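In D-S theory the evidence for a class A is bounded below by the belief Bel(A) = Σ_{B ⊆ A} m(B) and above by the plausibility Pl(A) = Σ_{B ∩ A ≠ ∅} m(B), and the width Pl(A) − Bel(A) of this interval indicates how undecided the evidence is. Assuming that the per-pixel uncertainty summarized in this table is that width for the assigned class (an assumption), a minimal Python sketch is shown below. Focal elements are represented as frozensets, and the frame of discernment is taken to be the six classes used in this study.

```python
CLASSES = frozenset({"IS_H", "IS_L", "W", "VE", "BL_H", "BL_L"})  # frame of discernment


def belief(m, A):
    """Bel(A): total mass of focal elements B that are subsets of A."""
    return sum(v for B, v in m.items() if B <= A)


def plausibility(m, A):
    """Pl(A): total mass of focal elements B that intersect A."""
    return sum(v for B, v in m.items() if B & A)


# Combined BPA of the example pixel, using the rounded values from the BPA table.
m = {frozenset({"W"}): 0.89, frozenset({"BL_L"}): 0.07, CLASSES: 0.04}
A = frozenset({"W"})  # assigned class
bel, pl = belief(m, A), plausibility(m, A)
uncertainty = pl - bel  # assumed per-pixel uncertainty: width of [Bel(A), Pl(A)]
print(f"Bel = {bel:.2f}, Pl = {pl:.2f}, uncertainty = {uncertainty:.2f}")
```

When the focal elements are single classes plus Θ, the width reduces to m(Θ): for the example pixel, Bel(W) = 0.89, Pl(W) = 0.93, and the uncertainty is 0.04.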

| Uncertainty Value Range | Number of Pixels: Fusing the GF-1 and Sentinel-1A Images | Number of Pixels: Fusing the GF-1 and Sentinel-1A Images and Their Features |
|---|---|---|
| 0.00–0.10 | 2,888,513 | 2,944,986 |
| 0.10–0.20 | 2,546,273 | 2,297,449 |
| 0.20–0.25 | 1,098,349 | 1,290,700 |
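Both columns cover the same total number of pixels, so they can be compared as proportions. A short Python sketch of that arithmetic, using the counts from the table:

```python
def shares(counts):
    """Total pixel count and the percentage of pixels in each uncertainty range."""
    total = sum(counts)
    return total, [100.0 * c / total for c in counts]


RANGES = ["0.00-0.10", "0.10-0.20", "0.20-0.25"]
COUNTS = {
    "GF-1 and Sentinel-1A images": [2_888_513, 2_546_273, 1_098_349],
    "GF-1 and Sentinel-1A images and their features": [2_944_986, 2_297_449, 1_290_700],
}
for scheme, counts in COUNTS.items():
    total, pct = shares(counts)
    print(f"{scheme}: {total:,} pixels; " + ", ".join(f"{r}: {p:.1f}%" for r, p in zip(RANGES, pct)))
```

Both schemes sum to 6,533,135 pixels. Adding features raises the share of pixels in the lowest range (about 44.2% to 45.1%) and in the highest range (about 16.8% to 19.8%), while the middle range shrinks (about 39.0% to 35.2%), which is consistent with the slightly larger standard deviation (0.093 versus 0.088) reported for the fused result with features.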

© 2016 by the authors; licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC-BY) license (http://creativecommons.org/licenses/by/4.0/).


Shao, Z.; Fu, H.; Fu, P.; Yin, L. Mapping Urban Impervious Surface by Fusing Optical and SAR Data at the Decision Level. Remote Sens. 2016, 8, 945. https://doi.org/10.3390/rs8110945
