A Flexible, Generic Photogrammetric Approach to Zoom Lens Calibration

Abstract

1. Introduction

1.1. Background

1.2. Related Work

2. Materials and Methods

2.1. Overview

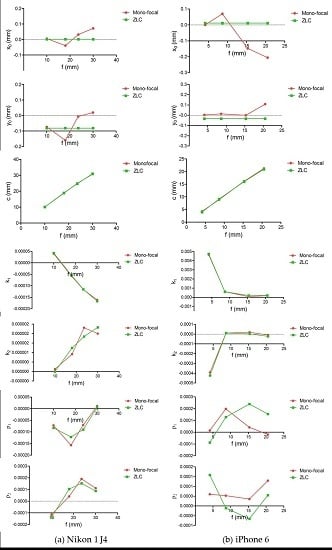

2.2. Zoom-Dependent Models

2.3. Collinearity Equations with Zoom-Related Intrinsic Parameters

2.4. Bundle Adjustment Model and Solution

2.5. Zoom Lens Devices

2.6. Planar Board for Calibration

2.7. Image Geometry Configuration

3. Results

4. Discussion

4.1. Accuracy Assessment

4.2. Demonstration

5. Conclusions

- (1) Experiments on a Nikon 1 J4 and an iPhone 6 mobile phone camera support the feasibility and validity of the proposed method for calibrating both optical- and digital-zoom cameras. In other words, the fundamental mathematical model, i.e., the collinearity condition equations with zoom-related intrinsic parameters, is feasible for zoom lens calibration. The proposed camera model is more generally applicable than zoom- and focus-dependent models, because the latter cannot be applied to zoom lens cameras that do not record focus information.

- (2) The relative accuracies of a set of mono-focal triangulations, computed with the intrinsic-parameter model coefficients obtained from the zoom lens calibrations, are 1:6300 or better for the two devices employed. The multi-focal triangulation accuracy achieved in this study is 1:11,300 for the Nikon 1 J4 and 1:16,100 for the iPhone 6. This demonstrates that a zoom lens camera can practicably be used either at a single zoom setting or at any combination of multiple settings, which improves its flexibility in practice.

- (3) The results from the optical zoom lens camera did not exhibit the extent of accuracy degradation reported by Zheng et al. [16], since the Nikon 1 J4 with its 3× optical-zoom lens has a higher EXIF recording sensitivity than any of the three zoom lens cameras used by Zheng et al. [16]. This suggests that EXIF recording ability varies among devices, and that a zoom-dependent camera model based on a more sensitive EXIF focal length record can be accurate and avoid serious photogrammetric accuracy degradation. In addition, using the EXIF focal length avoids the inconvenience of manual recording with artificial rulers [16].

- (4) The practical application of texturing a TLS point cloud with two calibrated optical zoom lens images demonstrated the advantages and flexibility of adjusting spatial resolution with an optical zoom lens, rather than a digital zoom lens, for photorealistic modelling.

Acknowledgments

Author Contributions

Conflicts of Interest

References

1. Schmidt, A.; Sun, L.; Aragon-Camarasa, G.; Siebert, J.P. The Calibration of the Pan-Tilt Units for the Active Stereo Head. In Image Processing and Communications Challenges 7; Springer: Cham, Switzerland, 2016; pp. 213–221.

2. Taketomi, T.; Okada, K.; Yamamoto, G.; Miyazaki, J.; Kato, H. Camera pose estimation under dynamic intrinsic parameter change for augmented reality. Comput. Graph. 2014, 44, 11–19.

3. Salvagnini, P.; Pernici, F.; Cristani, M.; Lisanti, G.; Del Bimbo, A.; Murino, V. Non-myopic information theoretic sensor management of a single pan–tilt–zoom camera for multiple object detection and tracking. Comput. Vis. Image Underst. 2015, 134, 74–88.

4. Fraser, C.S. Digital camera self-calibration. ISPRS J. Photogramm. Remote Sens. 1997, 52, 149–159.

5. Tsai, R.Y. A versatile camera calibration technique for high-accuracy 3D machine vision metrology using off-the-shelf TV cameras and lenses. IEEE J. Robot. Autom. 1987, 3, 323–344.

6. Schenk, T. Introduction to Photogrammetry; The Ohio State University: Columbus, OH, USA, 2005.

7. McGlone, J.C. Manual of Photogrammetry; American Society of Photogrammetry: Bethesda, MD, USA, 2013.

8. Remondino, F.; Fraser, C. Digital camera calibration methods: Considerations and comparisons. Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci. 2006, 36, 266–272.

9. Kannala, J.; Heikkilä, J.; Brandt, S.S. Geometric Camera Calibration. In Wiley Encyclopedia of Computer Science and Engineering; John Wiley and Sons, Inc.: Somerset, NJ, USA, 2008.

10. Clarke, T.A.; Fryer, J.G. The development of camera calibration methods and models. Photogramm. Rec. 1998, 16, 51–66.

11. Fraser, C. Multiple focal setting self-calibration of close-range metric cameras. Photogramm. Eng. Remote Sens. 1980, 46, 1161–1171.

12. Lichti, D.D.; Qi, X.J.; Ahmed, T. Range camera self-calibration with scattering compensation. ISPRS J. Photogramm. Remote Sens. 2012, 74, 101–109.

13. Brown, D.C. Close-range camera calibration. Photogramm. Eng. 1971, 37, 855–866.

14. Läbe, T.; Förstner, W. Geometric Stability of Low-Cost Digital Consumer Cameras. In Proceedings of the 20th ISPRS Congress, Istanbul, Turkey, 12–23 July 2004; pp. 528–535.

15. Shortis, M.; Bellman, C.; Robson, S.; Johnston, G.; Johnson, G. Stability of Zoom and Fixed Lenses Used with Digital SLR Cameras. In Proceedings of the ISPRS Commission V Symposium of Image Engineering and Vision Metrology, Dresden, Germany, 25–27 September 2006; pp. 285–290.

16. Zheng, S.; Wang, Z.; Huang, R. Zoom lens calibration with zoom- and focus-related intrinsic parameters applied to bundle adjustment. ISPRS J. Photogramm. Remote Sens. 2015, 102, 62–72.

17. Chen, J.; Dowman, I.; Li, S.; Li, Z.; Madden, M.; Mills, J.; Paparoditis, N.; Rottensteiner, F.; Sester, M.; Toth, C. Information from imagery: ISPRS scientific vision and research agenda. ISPRS J. Photogramm. Remote Sens. 2016, 115, 3–21.

18. Sobel, I. On calibrating computer controlled cameras for perceiving 3-D scenes. Artif. Intell. 1974, 5, 185–198.

19. Krotkov, E.P. Exploratory Visual Sensing for Determining Spatial Layout with an Agile Stereo Camera System; University of Pennsylvania: Philadelphia, PA, USA, 1987.

20. Fraser, C.; Al-Ajlouni, S. Zoom-dependent camera calibration in digital close-range photogrammetry. Photogramm. Eng. Remote Sens. 2006, 72, 1017–1026.

21. Kim, D.; Oh, J.; Sohn, K.; Shin, H. Automatic radial distortion correction in zoom lens video camera. J. Electron. Imaging 2010, 19.

22. Alvarez, L.; Gómez, L.; Henríquez, P. Zoom dependent lens distortion mathematical models. J. Math. Imaging Vis. 2012, 44, 480–490.

23. Galego, R.; Bernardino, A.; Gaspar, J. Auto-Calibration of Pan-Tilt Cameras Including Radial Distortion and Zoom. In Advances in Visual Computing; Springer: Berlin/Heidelberg, Germany, 2012; pp. 169–178.

24. Wu, Z.; Radke, R.J. Keeping a pan-tilt-zoom camera calibrated. IEEE Trans. Pattern Anal. Mach. Intell. 2013, 35, 1994–2007.

25. Sarkis, M.; Senft, C.T.; Diepold, K. Calibrating an automatic zoom camera with moving least squares. IEEE Trans. Autom. Sci. Eng. 2009, 6, 492–503.

26. Willson, R.G. Modeling and Calibration of Automated Zoom Lenses; Photonics for Industrial Applications; International Society for Optics and Photonics: Bellingham, WA, USA, 1994; pp. 170–186.

27. Tarabanis, K.; Tsai, R.Y.; Goodman, D.S. Modeling of a Computer-Controlled Zoom Lens. In Proceedings of the International Conference on Robotics and Automation, Nice, France, 12–14 May 1992; pp. 1545–1551.

28. Burner, A.W. Zoom lens calibration for wind tunnel measurements. Video Metrics IV 1995, 2598, 19–33.

29. Wiley, A.G.; Wong, K.W. Geometric calibration of zoom lenses for computer vision metrology. Photogramm. Eng. Remote Sens. 1995, 61, 69–74.

30. Li, M.; Lavest, J.-M. Some aspects of zoom lens camera calibration. IEEE Trans. Pattern Anal. Mach. Intell. 1996, 18, 1105–1110.

31. Chen, Y.-S.; Shih, S.-W.; Hung, Y.-P.; Fuh, C.-S. Simple and efficient method of calibrating a motorized zoom lens. Image Vis. Comput. 2001, 19, 1099–1110.

32. Ahmed, M.T.; Farag, A.A. A neural approach to zoom-lens camera calibration from data with outliers. Image Vis. Comput. 2002, 20, 619–630.

33. Fraser, C.; Cronk, S.; Stamatopoulos, C. Implementation of zoom-dependent camera calibration in close-range photogrammetry. ISPRS-Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci. 2012, 1, 15–19.

34. Wu, B.; Hu, H.; Zhu, Q.; Zhang, Y. A flexible method for zoom lens calibration and modeling using a planar checkerboard. Photogramm. Eng. Remote Sens. 2013, 79, 555–571.

35. Fraser, C.S. Automatic camera calibration in close range photogrammetry. Photogramm. Eng. Remote Sens. 2013, 79, 381–388.

36. Agapito, L.; Hayman, E.; Reid, I. Self-calibration of rotating and zooming cameras. Int. J. Comput. Vis. 2001, 45, 107–127.

37. Tang, R.F.; Fritsch, D. Correlation analysis of camera self-calibration in close range photogrammetry. Photogramm. Rec. 2013, 28, 86–95.

38. Zhang, Y.; Zhang, Z.; Zhang, J. Camera Calibration Technique with Planar Scenes; Electronic Imaging 2003; International Society for Optics and Photonics: Bellingham, WA, USA, 2003; pp. 291–296.

39. Malacara, D.; Malacara, Z. Handbook of Lens Design; Marcel Dekker: New York, NY, USA, 1994; Volume 1.

40. Freeman, M. The Complete Guide to Digital Photography; Sterling Publishing Company, Incorporated: New York, NY, USA, 2003.

41. Brown, D.C. Advanced Methods for the Calibration of Metric Cameras; DTIC Document: Fort Belvoir, VA, USA, 1968.

42. Leberl, F.; Irschara, A.; Pock, T.; Meixner, P.; Gruber, M.; Scholz, S.; Wiechert, A. Point clouds: Lidar versus 3D vision. Photogramm. Eng. Remote Sens. 2010, 76, 1123–1134.

43. Ackermann, F. Airborne laser scanning present status and future expectations. ISPRS J. Photogramm. Remote Sens. 1999, 54, 64–67.

44. Abdel-Aziz, Y. Direct Linear Transformation from Comparator Coordinates in Close-Range Photogrammetry. In ASP Symposium on Close-Range Photogrammetry; University of Illinois: Champaign, IL, USA, 1971.

45. Heikkila, J.; Silven, O. A Four-Step Camera Calibration Procedure with Implicit Image Correction. In Proceedings of the IEEE Computer Society Conference on Computer Vision and Pattern Recognition, San Juan, PR, USA, 17–19 June 1997; pp. 1106–1112.

46. Luhmann, T.; Robson, S.; Kyle, S.; Harley, I. Close Range Photogrammetry: Principles, Methods and Applications; Whittles: Dunbeath, UK, 2006.

47. Fryer, J.; Mitchell, H.; Chandler, J.H. Applications of 3D Measurement from Images; Whittles Publishing: Dunbeath, UK, 2007.

| Camera | Resolution (pixels) | Pixel size | Zoom lens | Zoom type | f35 (mm) | f (mm) | Max aperture |
|---|---|---|---|---|---|---|---|
| Nikon 1 J4 | 5232 × 3488 | 2.52 µm | Nikkor kit lens | Optical | 27–81 | 10–30 | F/3.5–5.6 |
| iPhone 6 | 3264 × 2448 | 1.5 µm | Apple iSight | Digital | 29–145 | 4.1–20.5 | F/2.2 |
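The f35 and f columns of the device table can be cross-checked from the resolution and pixel size alone. The sketch below assumes that f35 denotes the 35 mm equivalent focal length and that the crop factor is the ratio of the 36 mm × 24 mm film-frame diagonal to the sensor diagonal; neither definition is stated in the table itself, and all function names are illustrative.

```python
import math

# Diagonal of a 36 mm x 24 mm film frame (about 43.27 mm).
FULL_FRAME_DIAGONAL_MM = math.hypot(36.0, 24.0)

# (width in pixels, height in pixels, pixel size in micrometres), from the table.
CAMERAS = {
    "Nikon 1 J4": (5232, 3488, 2.52),
    "iPhone 6": (3264, 2448, 1.5),
}


def sensor_diagonal_mm(width_px, height_px, pixel_size_um):
    """Sensor diagonal in mm, assuming square pixels of the given size."""
    width_mm = width_px * pixel_size_um / 1000.0
    height_mm = height_px * pixel_size_um / 1000.0
    return math.hypot(width_mm, height_mm)


def crop_factor(width_px, height_px, pixel_size_um):
    """Ratio of the 35 mm frame diagonal to the sensor diagonal (assumed definition)."""
    return FULL_FRAME_DIAGONAL_MM / sensor_diagonal_mm(width_px, height_px, pixel_size_um)


def equivalent_focal_length_mm(f_mm, camera):
    """35 mm equivalent focal length for a focal length f on the named camera."""
    return f_mm * crop_factor(*CAMERAS[camera])


if __name__ == "__main__":
    for name, f_range in (("Nikon 1 J4", (10.0, 30.0)), ("iPhone 6", (4.1, 20.5))):
        equivalents = [equivalent_focal_length_mm(f, name) for f in f_range]
        print(name, round(crop_factor(*CAMERAS[name]), 2), [round(v, 1) for v in equivalents])
```

Under these assumptions the crop factors come out at roughly 2.7 for the Nikon 1 J4 and 7.1 for the iPhone 6, which is consistent with the tabulated f35 ranges to within about 1 mm.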

| Camera | Zoom settings (f, mm) | Images per setting | Total images |
|---|---|---|---|
| Nikon 1 J4 | {10.0, 18.0, 23.6, 30.0} | 8 | 32 |
| iPhone 6 | {4.1, 8.6, 15.3, 20.5} | 7 | 28 |

| Camera | Calibration | f (mm) | H (mm) | RMS of image residuals (pixel) | RMSE of XY coordinates (mm) | RMSE of Z coordinates (mm) | Relative accuracy (1:x) |
|---|---|---|---|---|---|---|---|
| Nikon 1 J4 | Mono-focal | 15.7 | 800 | 0.76 | 0.050 | 0.057 | 10,500 |
| Nikon 1 J4 | Mono-focal | 21.0 | 1030 | 0.53 | 0.043 | 0.092 | 10,200 |
| Nikon 1 J4 | Mono-focal | 26.0 | 1180 | 0.90 | 0.045 | 0.078 | 13,100 |
| Nikon 1 J4 | ZLC | 15.7 | 800 | 1.27 | 0.085 | 0.093 | 6300 |
| Nikon 1 J4 | ZLC | 21.0 | 1030 | 0.73 | 0.055 | 0.086 | 10,100 |
| Nikon 1 J4 | ZLC | 26.0 | 1180 | 0.93 | 0.043 | 0.088 | 12,100 |
| iPhone 6 | Mono-focal | 6.7 | 900 | 0.39 | 0.048 | 0.073 | 10,300 |
| iPhone 6 | Mono-focal | 12.0 | 1520 | 0.39 | 0.044 | 0.070 | 18,400 |
| iPhone 6 | Mono-focal | 18.1 | 1990 | 0.51 | 0.050 | 0.071 | 22,900 |
| iPhone 6 | ZLC | 6.7 | 900 | 0.92 | 0.065 | 0.074 | 9100 |
| iPhone 6 | ZLC | 12.0 | 1520 | 0.44 | 0.045 | 0.068 | 18,400 |
| iPhone 6 | ZLC | 18.1 | 1990 | 0.66 | 0.051 | 0.102 | 17,500 |

| Camera | f (mm) | H (mm) | RMS of image residuals (pixel) | RMSE of XY coordinates (mm) | RMSE of Z coordinates (mm) | Relative accuracy (1:x) |
|---|---|---|---|---|---|---|
| Nikon 1 J4_ZLC | {15.7, 21.0, 26.0} | 1000 | 1.00 | 0.047 | 0.075 | 11,300 |
| iPhone 6_ZLC | {6.7, 12.0, 18.1} | 1510 | 0.71 | 0.048 | 0.081 | 16,100 |
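The relative accuracy column can be related to the other columns with a short calculation. The sketch below assumes that relative accuracy is H divided by a 3D RMSE formed as the root sum of squares of the XY and Z RMSE values; this definition is inferred from the tabulated numbers, which it reproduces to within rounding, and is not taken from a stated formula. The function names are illustrative.

```python
import math

# (H in mm, RMSE of XY in mm, RMSE of Z in mm, reported relative accuracy 1:x),
# copied from the multi-focal triangulation table.
MULTI_FOCAL = {
    "Nikon 1 J4_ZLC": (1000.0, 0.047, 0.075, 11300.0),
    "iPhone 6_ZLC": (1510.0, 0.048, 0.081, 16100.0),
}


def rmse_3d(rmse_xy_mm, rmse_z_mm):
    """Combine planimetric and depth RMSE into one 3D figure (assumed definition)."""
    return math.hypot(rmse_xy_mm, rmse_z_mm)


def relative_accuracy(h_mm, rmse_xy_mm, rmse_z_mm):
    """Return x in the ratio 1:x, i.e. H divided by the 3D RMSE (assumed definition)."""
    return h_mm / rmse_3d(rmse_xy_mm, rmse_z_mm)


if __name__ == "__main__":
    for name, (h, xy, z, reported) in MULTI_FOCAL.items():
        computed = relative_accuracy(h, xy, z)
        print(f"{name}: computed 1:{computed:,.0f}, reported 1:{reported:,.0f}")
```

Because the tabulated RMSE values are rounded to three decimals, the computed ratios agree with the reported ones only approximately, typically within about one percent.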

© 2017 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license ( http://creativecommons.org/licenses/by/4.0/).


Wang, Z.; Mills, J.; Xiao, W.; Huang, R.; Zheng, S.; Li, Z. A Flexible, Generic Photogrammetric Approach to Zoom Lens Calibration. Remote Sens. 2017, 9, 244. https://0-doi-org.brum.beds.ac.uk/10.3390/rs9030244
