Design and Evaluation of Anthropomorphic Robotic Hand for Object Grasping and Shape Recognition

Abstract

1. Introduction

2. Methods

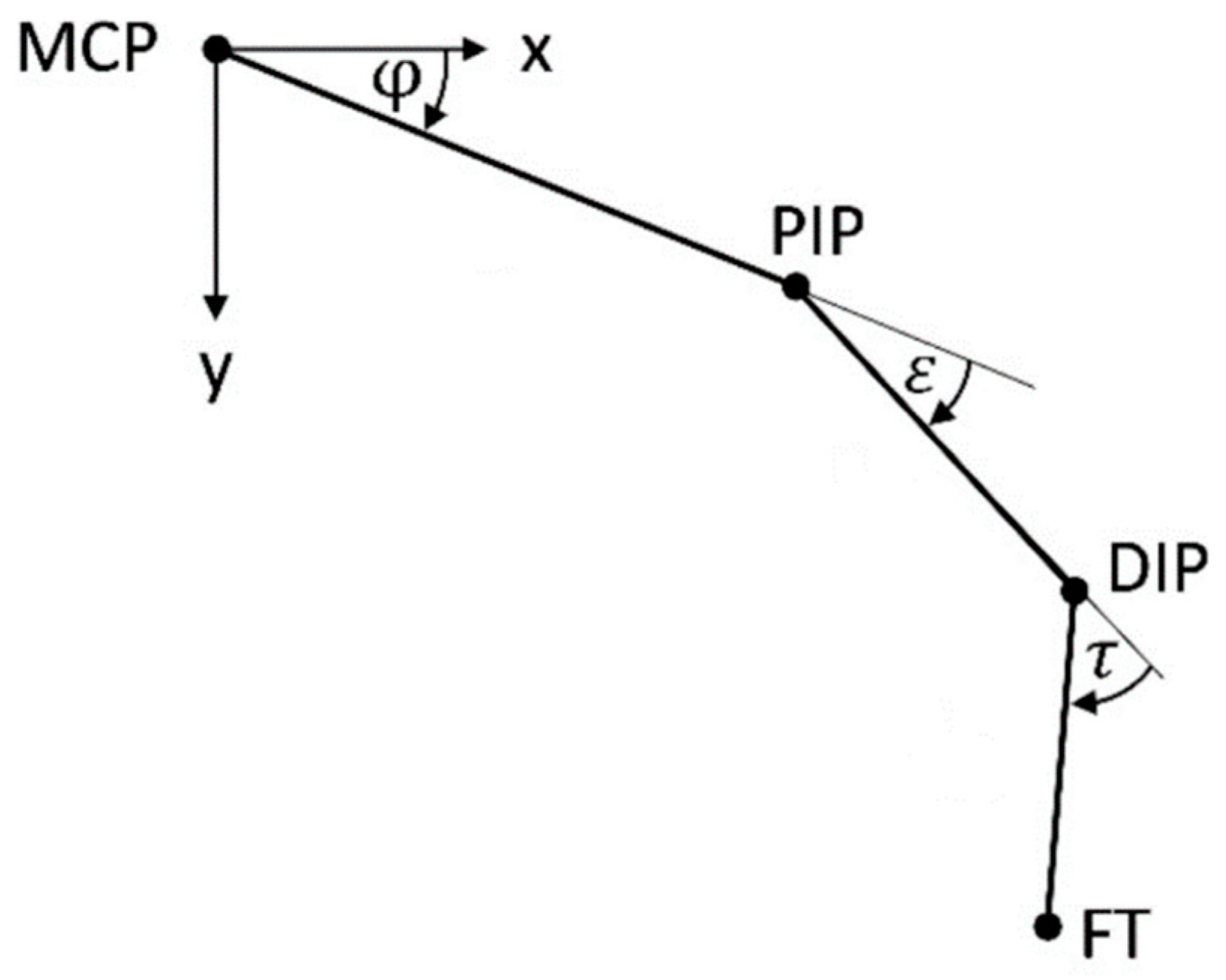

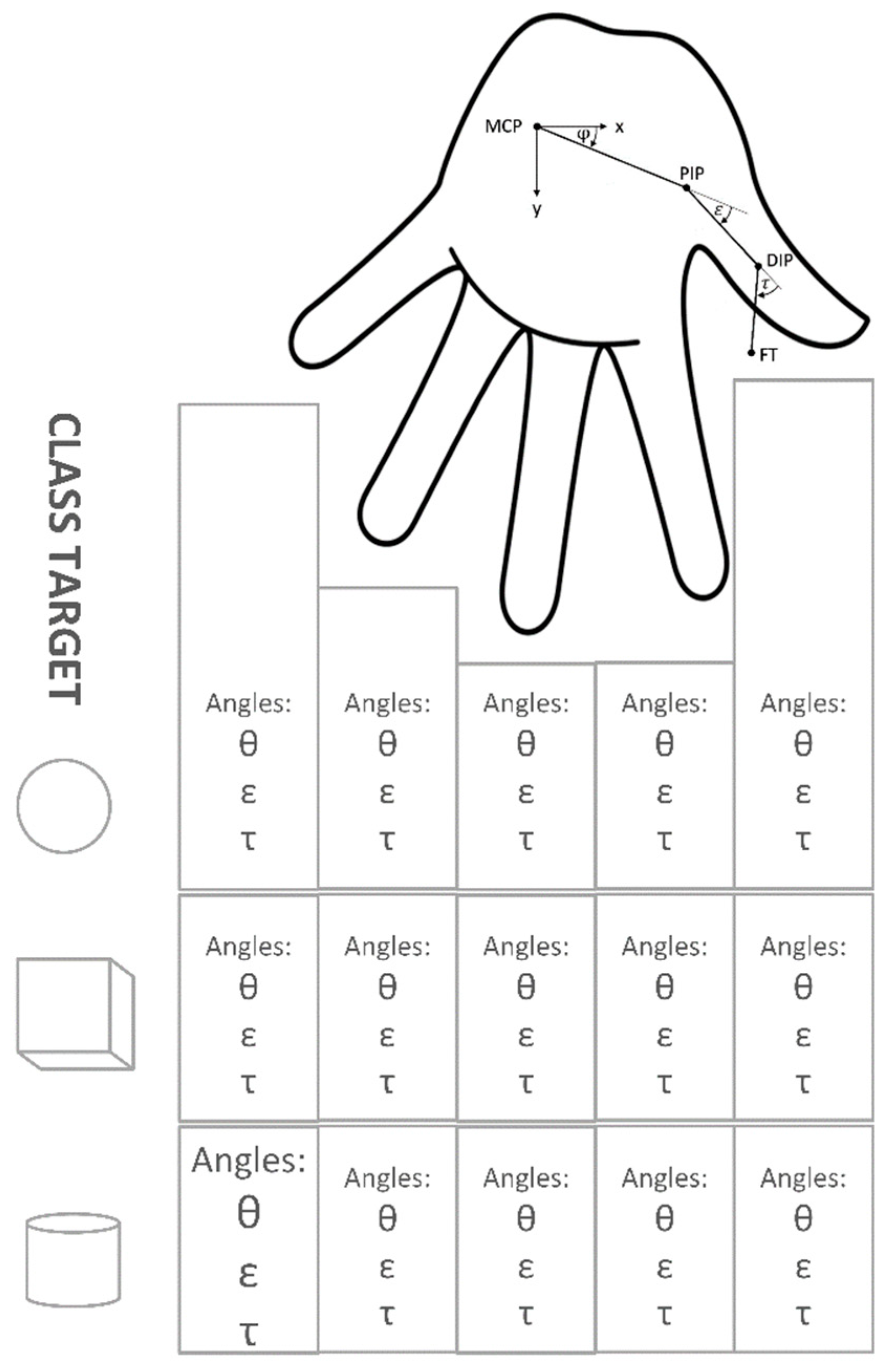

2.1. Formal Definition of the Problem

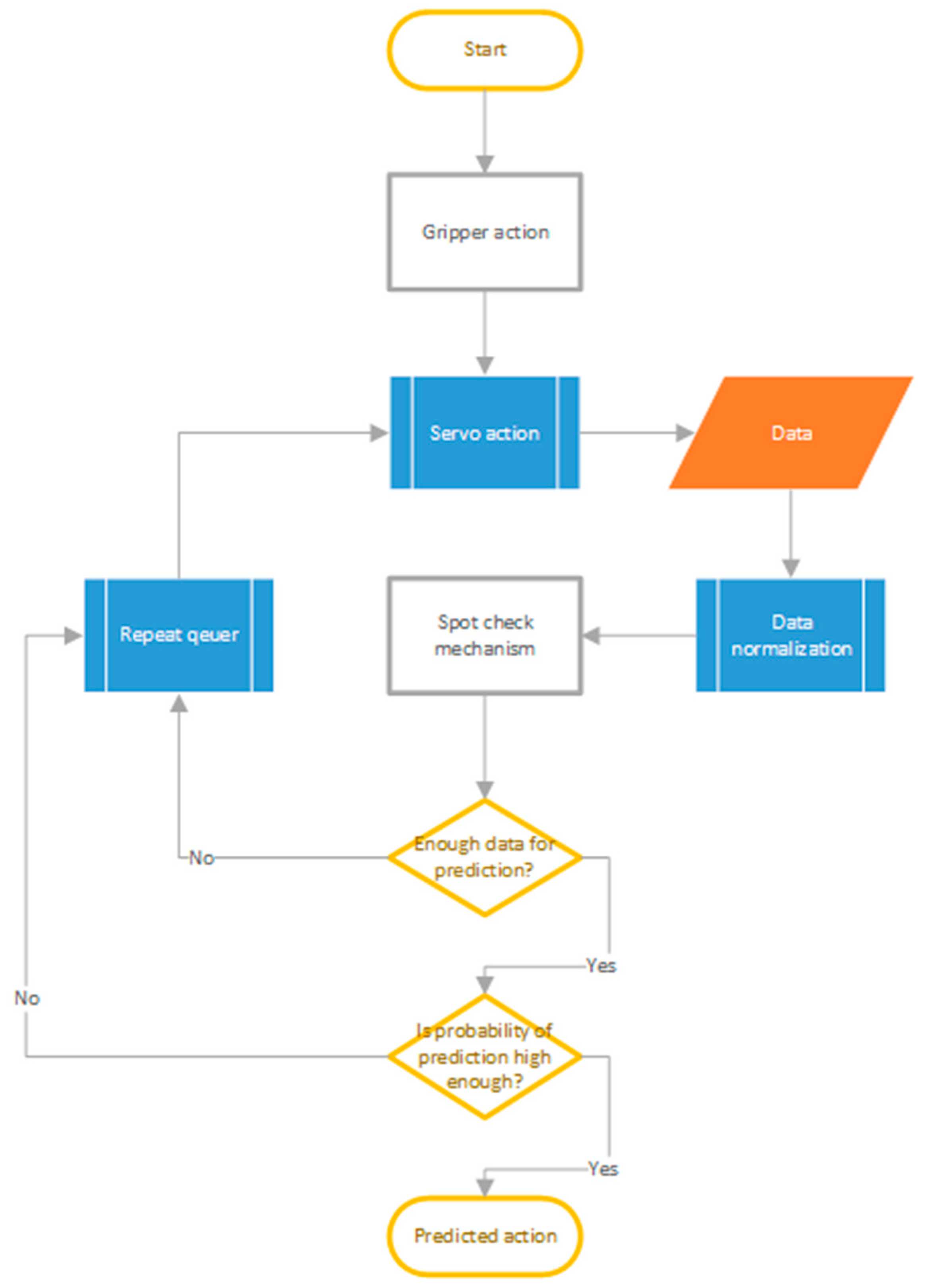

2.2. Outline

2.3. Architecture of the Classification Model

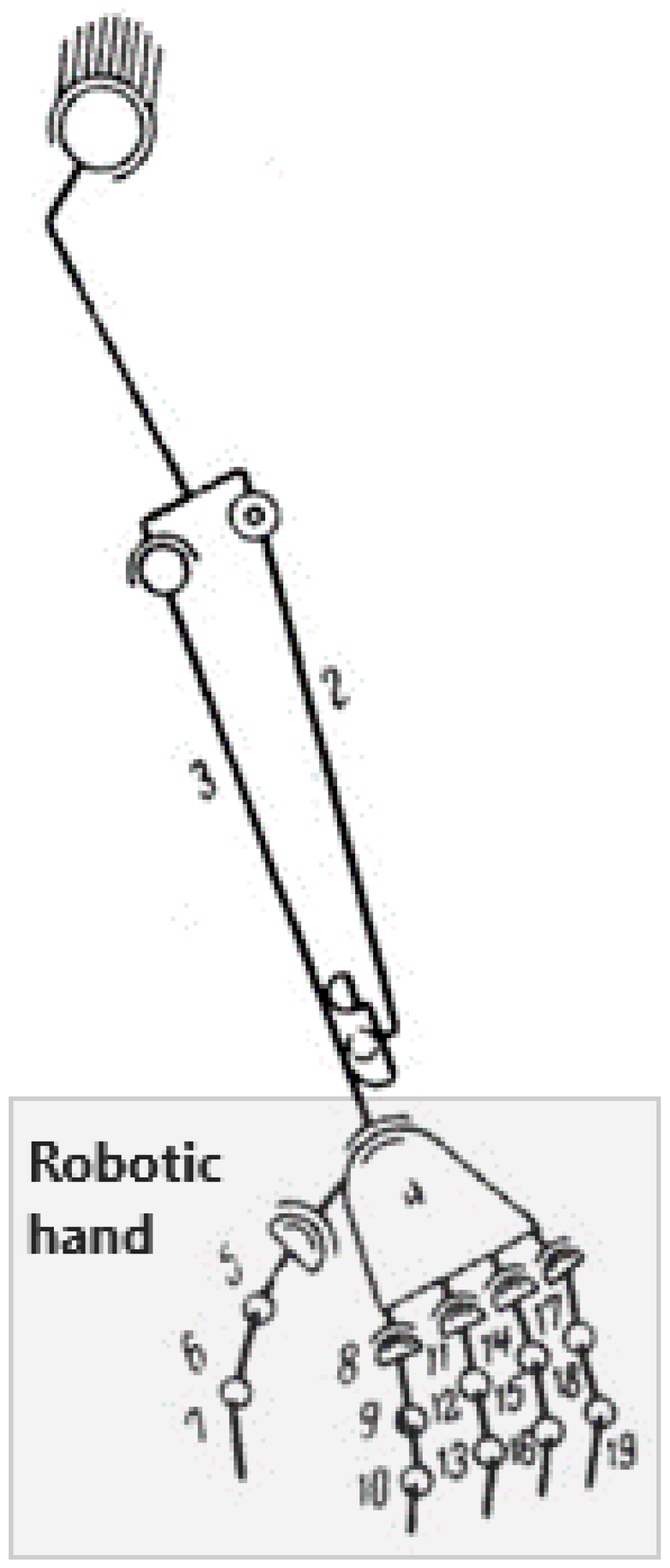

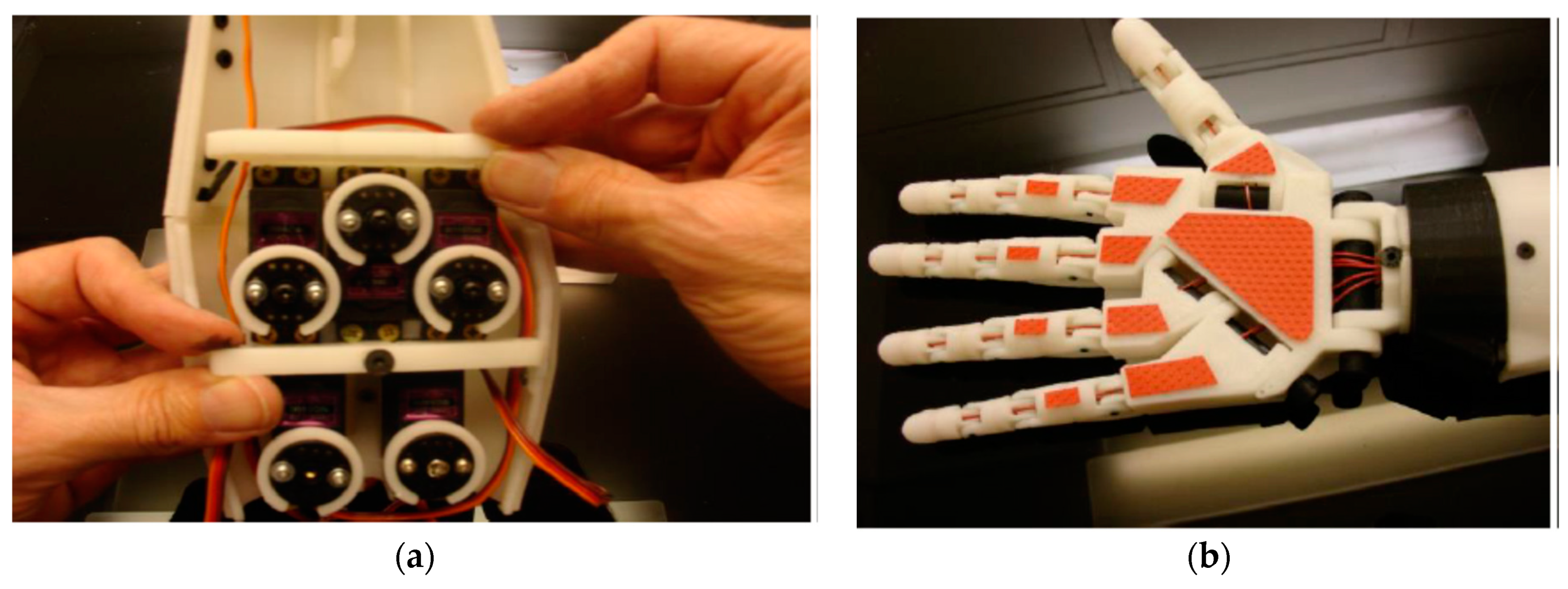

3. Implementation of Robotic Hand

4. Data Collection and Results

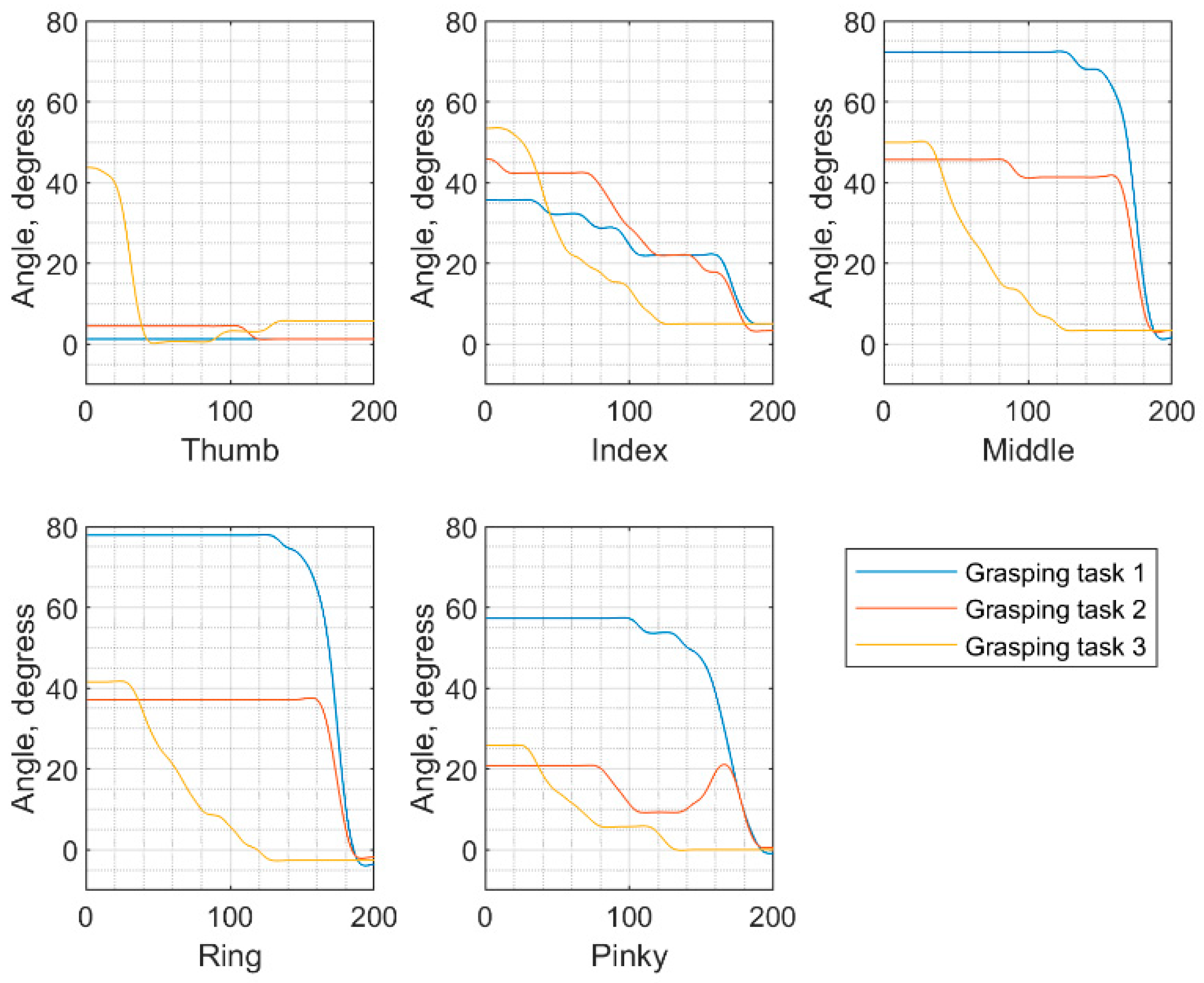

4.1. Data Collection

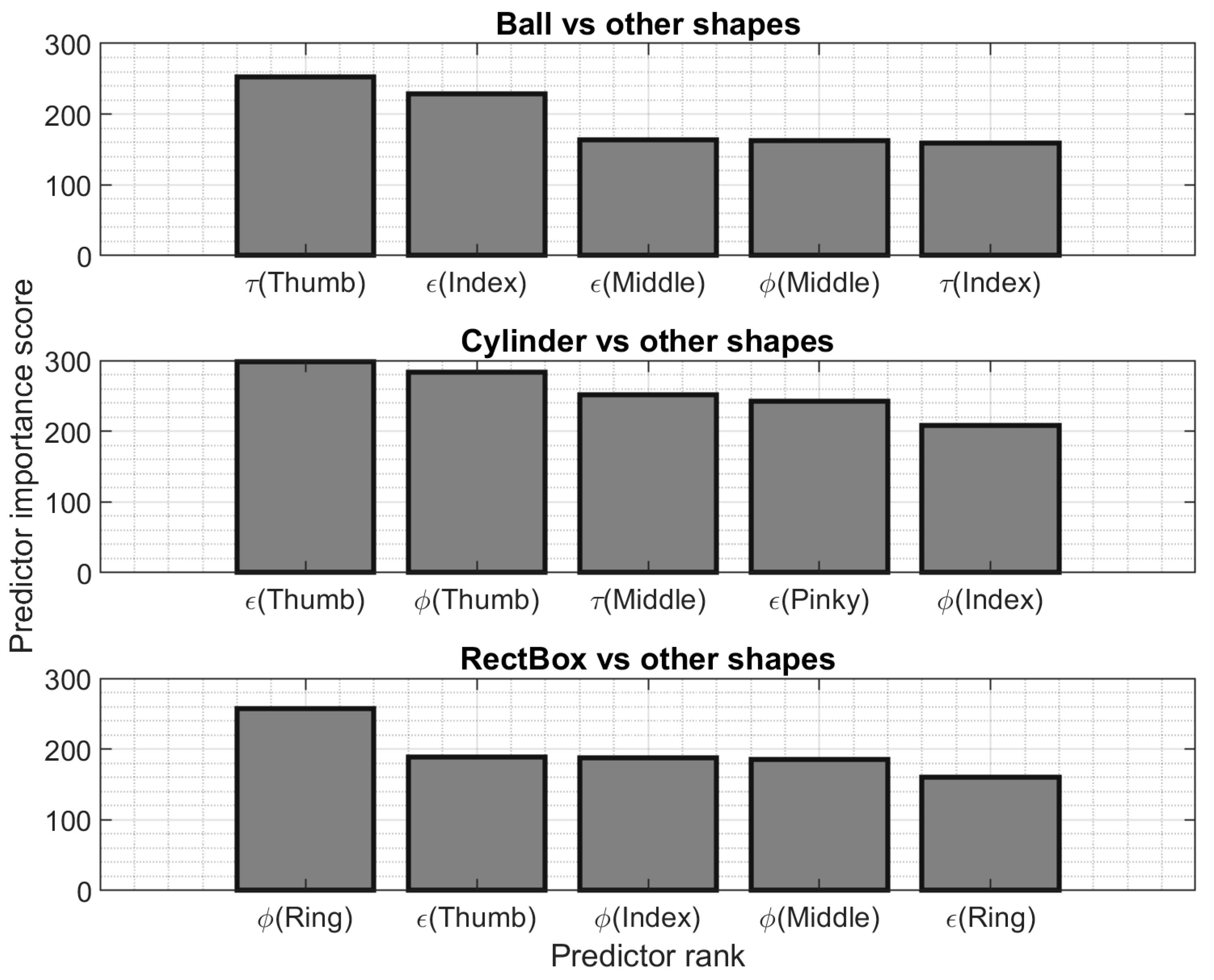

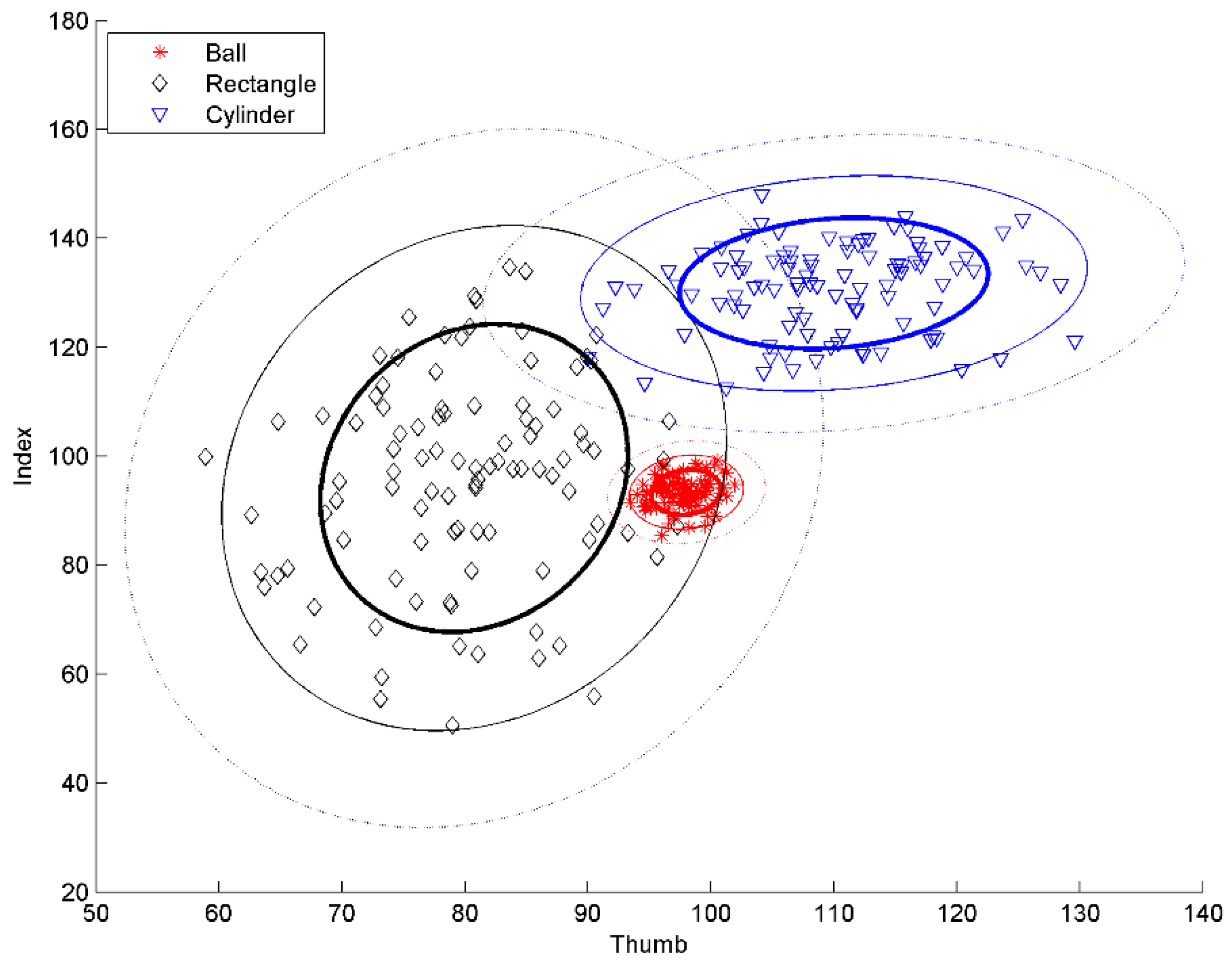

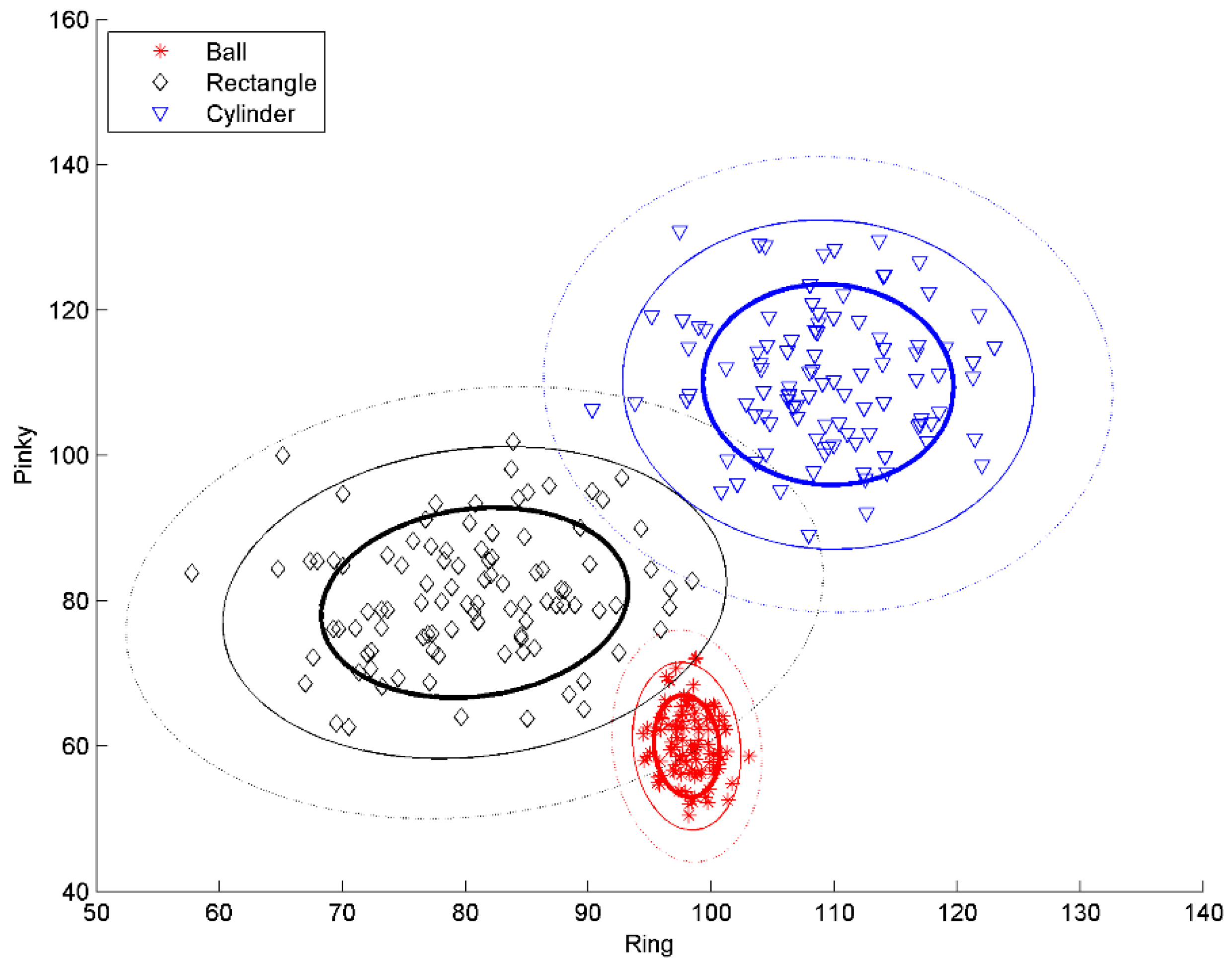

4.2. Analysis of Features

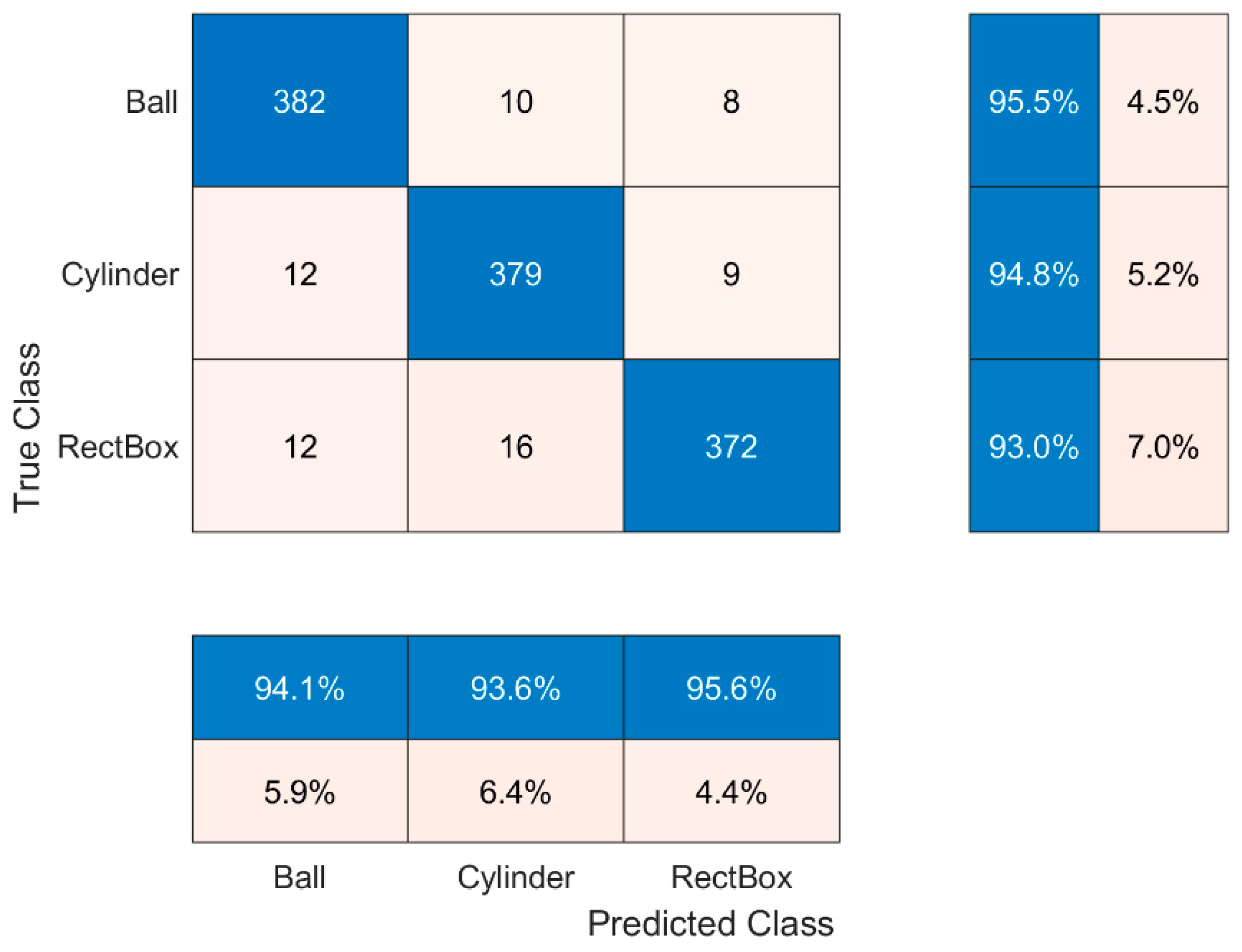

4.3. Evaluation of Results

5. Discussion and Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest


Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Devaraja, R.R.; Maskeliūnas, R.; Damaševičius, R. Design and Evaluation of Anthropomorphic Robotic Hand for Object Grasping and Shape Recognition. Computers 2021, 10, 1. https://doi.org/10.3390/computers10010001
