1. Introduction

During the Covid-19 pandemic, primary health care services and call centers have been criticized for their decisions on whether a patient needs to be hospitalized. The medical staff working in these call centers have to estimate the condition of the callers remotely, based on vague descriptions of their symptoms. Missing information about the patients, congestion and constantly changing medical protocols, combined with the subjective opinion of the medical practitioners, often lead to incorrect decisions. Sensitive populations with a weak immune system (cancer, kidney failure, heart disease, etc.) also need to be monitored remotely, and the same holds for Covid-19 patients in their rehabilitation phase.

The diagnosis of Covid-19 or other similar infections that will appear in the near future (e.g., the Covid-19 variants that recently appeared in the United Kingdom or South Africa) is a major challenge since reliable molecular tests have to be performed on a large fraction of the population. Covid-19 is caused by the Severe Acute Respiratory Syndrome Coronavirus 2 (SARS-CoV-2) [1] and is characterized by high infection and case-fatality rates [2]. There is a large number of asymptomatic carriers and a high infection rate even when there are no symptoms [3]. In [4], a number of molecular tests and immunoassays for the Covid-19 management life cycle are presented. This life cycle consists of the preventive, preparedness, response and recovery phases. The main molecular test categories are the Nucleic Acid Amplification Test (e.g., Reverse Transcription Quantitative Polymerase Chain Reaction (RT-qPCR)) [5], immunoassays (such as immune colloidal gold strips) [6] and sequencing.

The molecular tests and immunoassays are currently applied to a small portion of the population, based on symptom assessment. The classification of the lesions appearing in pneumonia chest Computed Tomography (CT) scans has recently been proposed in [7,8]. Image processing applied to X-ray scans has also been examined in [9]. Lung ultrasound images are analyzed in [10] for the estimation of the infection progress. Several imaging techniques for the detection of Covid-19 are reviewed in [11]. Sound processing of either respiratory [12,13] or cough sounds can also be used for early Covid-19 diagnosis. Speech modeling and signal processing are applied in [14] for tracking the asymptomatic and symptomatic stages of Covid-19. The neuromotor coordination of the speech subsystems involved in respiration, phonation and articulation is affected by Covid-19 infection.

In [15], ten major telemedicine applications are reviewed. These applications can minimize the required visits to doctors while providing a suitable treatment option. Robots can undertake human-like activities that could otherwise favor the spread of Covid-19 [16]. For example, they can deliver medicine, food and other essential items to patients who are under quarantine. Since mild respiratory symptoms appear in the early stage of Covid-19 infection, it is important to continuously monitor the respiratory rate with a non-contact method. A device-free real-time respiratory rate monitoring system using wireless signals is presented in [13], where a standard joint unscented Kalman filter is modified for real-time monitoring. A portable health screening device for infections of the respiratory system is presented in [12].

Several Machine Learning (ML) techniques have been employed [10] for the diagnosis of Covid-19 infection with high accuracy based on its symptoms [17]. The 4th medical evolution (Medical 4.0) concerns applications supported by Information Technology, microsystems, personalized therapy and Artificial Intelligence (AI). Ten Medical 4.0 applications are reviewed in [18]. Generative Adversarial Networks, Extreme Learning Machine and Long Short-Term Memory (LSTM) deep learning methods have been studied in [19], while user-friendly platforms are offered for physicians and researchers. In [20], a research-focused decision support system is presented for the management of patients in intensive care. Internet of Things (IoT) devices are also employed, while the physiologic data are exploited by the ML models developed in [20].

The major weapons for preventing the spread of the infection are social distancing and tracing [21]. Several approaches have been proposed that exploit smart phone facilities and social networks. The control of information publishing strategies based on information mined from social media is presented in [22]. A detailed model of the topology of the contact network is sufficient in [23] to capture the salient dynamical characteristics of Covid-19 spread and take appropriate decisions. The user location and contacts can be traced through a smart phone’s Global Positioning System (GPS), cellular networks, Wi-Fi and Bluetooth. In [24], the impact of contact tracing precision on the spread of Covid-19 is studied. An epidemic model has been created in [24] for the evaluation of the relation between the efficiency and the number of people quarantined. In [25], the contact tracing applications in Australia and Singapore are described.

Additional medical parameters should be taken into consideration when the overall condition of a patient has to be assessed [18]. These additional medical data may have to be acquired from eHealth sensors. In [26], wearable devices suitable for monitoring the sensitive population and individuals in quarantine are presented. Unobtrusive sensing systems for disease detection and for monitoring patients with relatively mild symptoms, as well as telemetry for the remote monitoring and diagnosis of Covid-19, are studied. A Wireless Medical Sensor Network (WMSN) can benefit those who risk their lives treating Covid-19 patients. In [27], the deployment of WMSN is studied for the accomplishment of quality healthcare while reducing the potential threat from Covid-19. A wearable device based on the Internet of Medical Things (IoMT) is presented in [28] for the protection from Covid-19 spread through contact tracing and social distancing.

The developed platform (henceforth called Coronario) aims at the reduction of the traffic in the call centers of primary healthcare units through remote symptom tracking. The functionality offered can be used to support the real-time screening of vulnerable populations (patients with a weak immune system, heart disease, cancer, transplants, etc.). Moreover, it can be used to monitor in real time the condition of patients who have recovered from Covid-19 infection. Research on the nature of Covid-19 requires an abundance of information about the pre- and post-hospitalization phases. An eHealth infrastructure, that is, a number of medical sensors on the side of the patient, is employed to support a more reliable diagnosis based on the overall condition of the patient and his environment [29]. The anonymous medical data that are used in the Coronario platform can also be exploited in the context of research on Covid-19.

The Coronario platform consists of a user mobile application (UserApp) and a number of medical IoT sensors that are connected to cloud services preferably through a sensor controller for higher security and efficiency. The information processed by the UserApp includes the description of the symptoms through questionnaires, the location of the user and the results of audio processing for the classification of cough and respiratory patterns. Cloud connection allows data privacy with user authentication, access permissions, encryption and other services. The sensor data (e.g., blood pressure, body temperature, etc.) are uploaded there and can be accessed by the supervisor doctor or authorized researchers. The diagnosis results are certified by authorized medical staff that access the data through the Supervisor application. The diagnosis decisions can be potentially assisted by Artificial Intelligence (AI) tools such as pre-trained TensorFlow neural network models. Finally, the researchers can access anonymous medical data, given after the consent of the patients, through the Scientific application.

Compared to similar symptom tracking applications, Coronario offers a flexible MP file format that allows the definition or modification of alert rules, sensor sampling scenarios and questionnaire structures in real time. In this way, several medical cases of Covid-19 or other infections can be supported. Moreover, the facilities of the Coronario platform can be exploited by various practitioners: physicians, primary healthcare units, hemodialysis centers, oncological clinics and so forth. An extensible platform where different sound classification methods can be tested is also offered to support research on Covid-19. The integration of various components in the Coronario platform offers extra services beyond symptom assessment and statistical processing. Specifically, user tracking, localization and social distancing are also supported.

The rest of the paper is organized as follows: the detailed description of the Coronario architecture is presented in Section 2. The dynamic medical protocol configuration is examined in Section 3. In Section 4, cough sound classification is examined for the demonstration of research experiments using the Coronario platform. A discussion on extensions and the security features follows in Section 5. Section 6 draws conclusions.

2. Architecture of the Coronario Platform

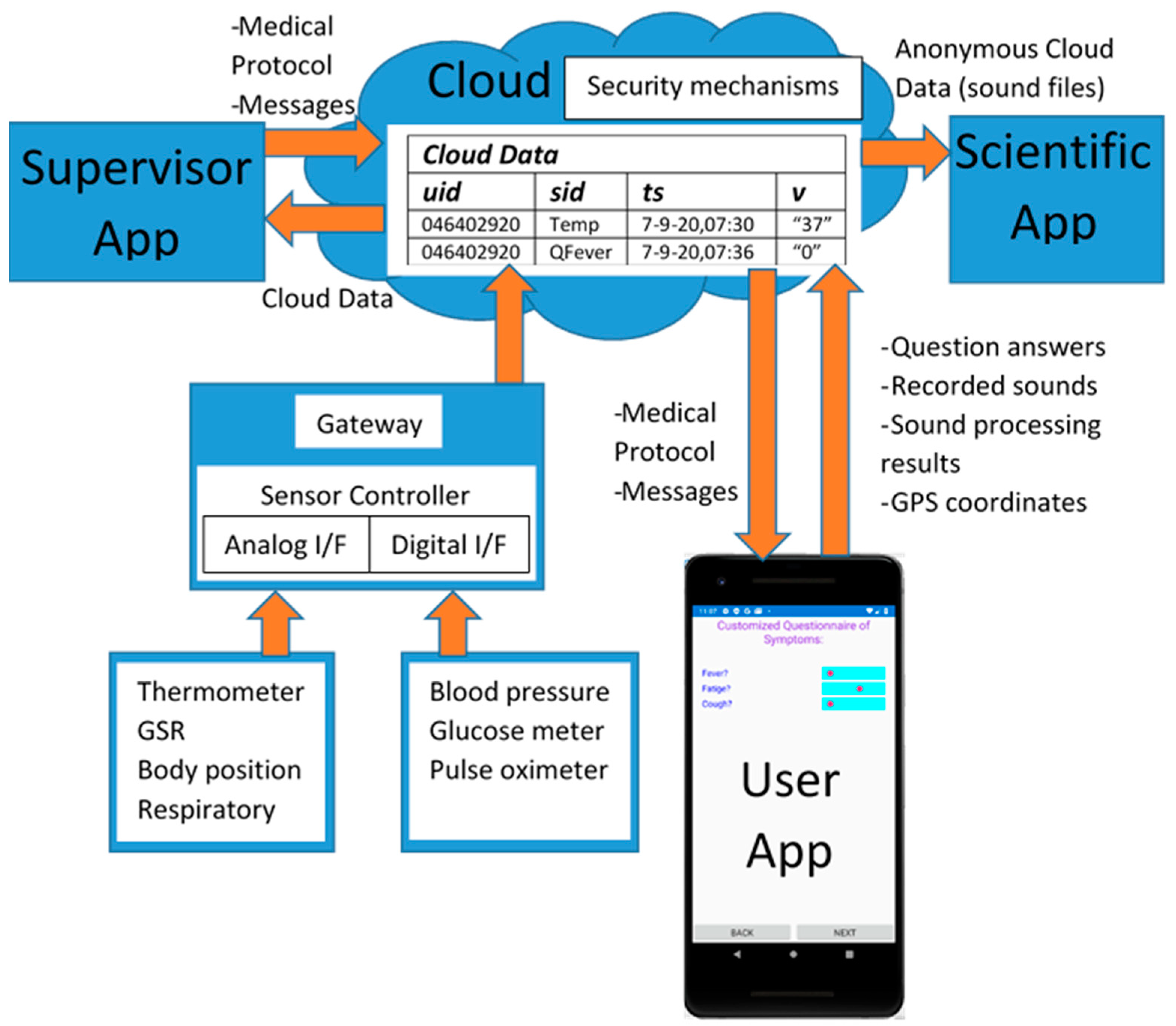

The general Coronario platform architecture appears in Figure 1. It consists of a mobile application (UserApp), two desktop applications (SupervisorApp and ScientificApp), a local eHealth sensor network and cloud storage. More details about the data structures exchanged between these modules are shown in Figure 2. The use and the features of each of these modules are described in the following paragraphs.

2.1. UserApp

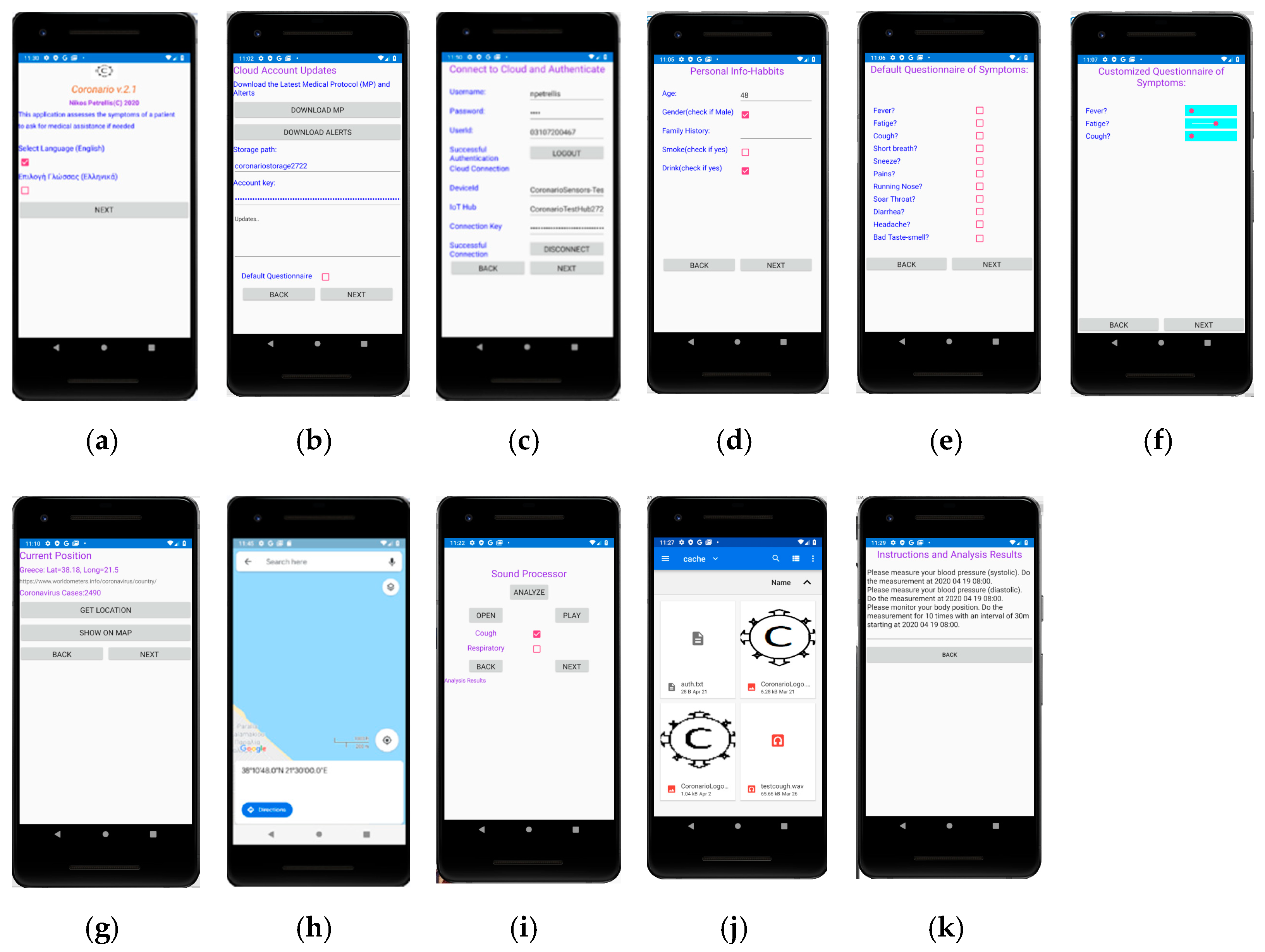

The UserApp is a mobile application used by the patient. It has been developed using Microsoft Visual Studio 2019/Xamarin so that it can be deployed as an Android or iOS application. An overview of the UserApp pages appears in Figure 3. The user selects the language of the interface from the initial page (Figure 3a), signs in (Figure 3b) and updates the MP file (Figure 3c). General information about the age, gender and habits of the user is requested (Figure 3d) and then the symptoms’ questionnaire appears, either the default one or the one customized by the MP file (Figure 3e,f). The answers can be given either as true/false using checkboxes or in an analog way through sliders (slider on the left: low symptom intensity, slider on the right: high intensity).

The current position of the user is detected through the Global Positioning System (GPS) of the cell phone in Figure 3g and displayed on the map (Figure 3h). In the current version, the country is detected through the GPS coordinates, and additional information such as the number of Covid-19 cases found so far in this country can also be retrieved from a web page that publishes such information. Narrower regions can also be used to trace the locations that the user has visited in case he is found to be infected by Covid-19.

The user can select a sound file with a recorded cough or respiratory sound as shown in Figure 3i,j. The sound file is then played and sound processing is performed, potentially sending the analysis results and the extracted features to the cloud. In the last page, shown in Figure 3k, several messages are displayed. For example, diagnosis results and alerts can appear. Guidelines are also displayed, showing the user how and when to perform tests with the medical sensors.

2.2. SupervisorApp

The SupervisorApp is used by authorized medical practitioners. A supervisor doctor can access the patient data through this application and exchange messages. He can log in to his account on the cloud, select the interface language and access the sensor data in readable format. The supervisor can also select or create an appropriate MP file where the conditions that generate alerts and instructions to the user are defined. The sensor sampling scheme that has to be followed and the guidelines about how the user will perform the medical tests with the available medical equipment are also defined in the MP file. Moreover, the specific questions that should be included in the customized questionnaire are determined in the MP file. The flexibility in determining these issues is owed to the employed MP file format described in Section 3.

The SupervisorApp has been developed in Visual Studio 2019 as a Universal Windows Platform (UWP) application. Its main page is shown in Figure 4. The values of specific sensors can also be viewed in this page.

2.3. IoT eHealth Sensor Infrastructure

The IoT eHealth sensor infrastructure is employed to monitor several parameters concerning the condition of the patient’s body and his environment. A non-expert should be able to handle the available sensors, and a different combination of these sensors can be employed for each medical case. Ordinary sensors that can be operated by inexperienced patients are the following:

- body position sensor, to monitor how long the patient was standing or walking, lying on the bed or whether he had a fall,
- blood pressure sensor,
- glucose meter,
- digital or analog thermometers for measuring the temperature of the body or the environment,
- pulse oximeter (SPO2),
- respiratory sensor to plot the patient’s breathing pattern,
- Galvanic Skin Response (GSR) sensor to monitor the sweat and consequently the stress,
- environmental temperature/humidity sensors.

Some of the sensors have an analog interface as shown in Figure 2 (temperature, GSR, body position, respiratory) and should be connected to Analog/Digital Conversion readout circuits. Others offer a digital interface or even network connectivity (e.g., blood pressure, glucose meter, SPO2) and are capable of uploading their measurements directly to a cloud in real time. A local controller (Gateway) may be used to connect both analog and digital sensors through appropriate readout circuits and upload data to the cloud through its wired or wireless network interface. The Gateway can also filter sensor values and upload only the information required for the diagnosis. Filtering can include smoothing through moving average windows, estimation of missing sensor values through Kalman filters and data mining that searches for strong patterns through Principal Component Analysis (PCA). Only the important sensor values are uploaded to the cloud for higher data privacy; they can be isolated, for example, through appropriate thresholds. The experiments conducted here were based on the sensor infrastructure described in [29]. After user consent, some sensor data may be used anonymously by the ScientificApp in the context of research on Covid-19 or other similar infections. Although more advanced tests such as ElectroCardioGrams (ECG) or ElectroMyoGrams (EMG) are also available, their operation is more complicated and the results they produce cannot be interpreted by a non-expert. Moreover, many of these sensors are not medically certified.
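The Gateway filtering step can be illustrated with a minimal sketch, assuming a trailing moving-average window followed by a simple threshold band. The function names, the window size and the temperature limits below are illustrative assumptions and are not taken from the Coronario implementation.

```python
from collections import deque
from typing import Iterable, List, Tuple


def moving_average(samples: Iterable[float], window: int) -> List[float]:
    """Smooth a sample stream with a trailing moving-average window."""
    if window < 1:
        raise ValueError("window must be at least 1")
    recent = deque(maxlen=window)  # holds at most `window` latest samples
    smoothed = []
    for value in samples:
        recent.append(value)
        smoothed.append(sum(recent) / len(recent))
    return smoothed


def select_for_upload(smoothed: List[float], low: float,
                      high: float) -> List[Tuple[int, float]]:
    """Keep only the (index, value) pairs that fall outside [low, high]."""
    return [(i, v) for i, v in enumerate(smoothed) if v < low or v > high]


# Hypothetical body temperature readings in degrees Celsius.
body_temp = [36.6, 36.7, 36.6, 38.9, 39.1, 39.0, 36.8]
smoothed = moving_average(body_temp, window=3)
# Assumed normal band; only smoothed values outside it would be uploaded.
to_upload = select_for_upload(smoothed, low=35.5, high=37.5)
print(to_upload)
```

In this sketch the smoothing suppresses isolated spikes before the threshold is applied, so fewer values leave the Gateway; the Kalman and PCA steps mentioned in the text are not covered.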

The UserApp is also employed to guide the user about how and when to operate an external sensor. The sensor sampling strategy can be defined in the MP file and the UserApp converts it to comprehensible guidelines for the patient.

2.4. Cloud Services

There were several options for the implementation of the communication between the Coronario modules. Dedicated links for any pair of modules would not be appropriate since multiple links would have higher cost, would be more difficult to synchronize and would require an overall larger buffering scheme to support asynchronous communication. Multiple copies of the same data travelling through different links could also cause incoherence. Private datacenters could be used to store the generated data. They can offer advanced storage security and speed but have higher initial (capital) and administrative cost. Moreover, they usually support only the customized services developed by their owners. On the contrary, public clouds support advanced services for data sharing, analysis and visualization, they allow ubiquitous uniform access while their cost is defined on a pay-as-you-go basis. However, the customers of the cloud services do not always trust them as far as the security of their data is concerned.

The information is exchanged between the various modules of the Coronario platform through general cloud services such as the ones offered by Google Cloud, Microsoft Azure, Amazon Web Services (AWS). These platforms support advanced security features guaranteed by Service Level Agreements (SLAs), data analysis and notification methods as well as storage of data in raw (blobs) or structured format (e.g., tables, databases). Advanced healthcare services such as Application Program Interfaces (APIs) and integration are offered by Google Cloud Healthcare API. Microsoft Cloud for Healthcare brings various new services for monitoring patients combining Microsoft 365, Dynamics, Power Platform, Azure cloud and IoT tools. The common data model used in this case, allows for easy data sharing and analysis. For example, medical data can be visualized using Microsoft Power BI. Microsoft Azure cloud and IoT services have been used by the Coronario platform although only the storage facilities have been exploited in order to make the developed application more portable. Several other even simpler cloud platforms (Ubidots, ThingSpeak, etc.), could have been employed in the place of Microsoft Azure. The data stored in the cloud can be visualized (e.g., plotted) outside the cloud and more specifically in the Supervisor App.

The following information is stored in the cloud:

MP files as well as messages can also be stored in the cloud as (uid, sid, ts, v) for uniform representation. A whole message or even an MP text file can be stored in the string field v. This field is intentionally defined as a string in order to incorporate a combination of one or more numerical values, text and additional meta-data needed besides uid, sid and ts.
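The uniform (uid, sid, ts, v) representation can be sketched as a small record type. The reading of uid, sid and ts as user identity, sensor identity and timestamp is an assumption drawn from the way these fields are used elsewhere in the text; the semicolon separator inside v and the example identifiers are purely illustrative.

```python
from dataclasses import dataclass
from typing import Iterable, List


@dataclass(frozen=True)
class Record:
    uid: str  # assumed: identity of the user the value belongs to
    sid: str  # assumed: identity of the sensor, question, message or MP file
    ts: str   # assumed: timestamp, written here in ISO 8601 form
    v: str    # payload, kept as a string as described in the text


def pack_values(values: Iterable[float]) -> str:
    """Join several numeric values into one string field (';' is assumed)."""
    return ";".join(repr(float(x)) for x in values)


def unpack_values(v: str) -> List[float]:
    """Recover the numeric values stored by pack_values."""
    return [float(x) for x in v.split(";")] if v else []


# Hypothetical record holding two numbers in the single string field v.
reading = Record(uid="patient-001", sid="BPress",
                 ts="2020-07-06T08:00:00", v=pack_values([120, 80]))
# A whole text message fits in the same structure.
message = Record(uid="patient-001", sid="Msg",
                 ts="2020-07-06T08:05:00", v="Please repeat the test at 20:00")
print(reading.v, unpack_values(reading.v))
```

Keeping v as a string means the storage schema does not change when a new kind of payload is introduced; the cost is that each consumer has to know how to parse the payload for a given sid.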

2.5. ScientificApp

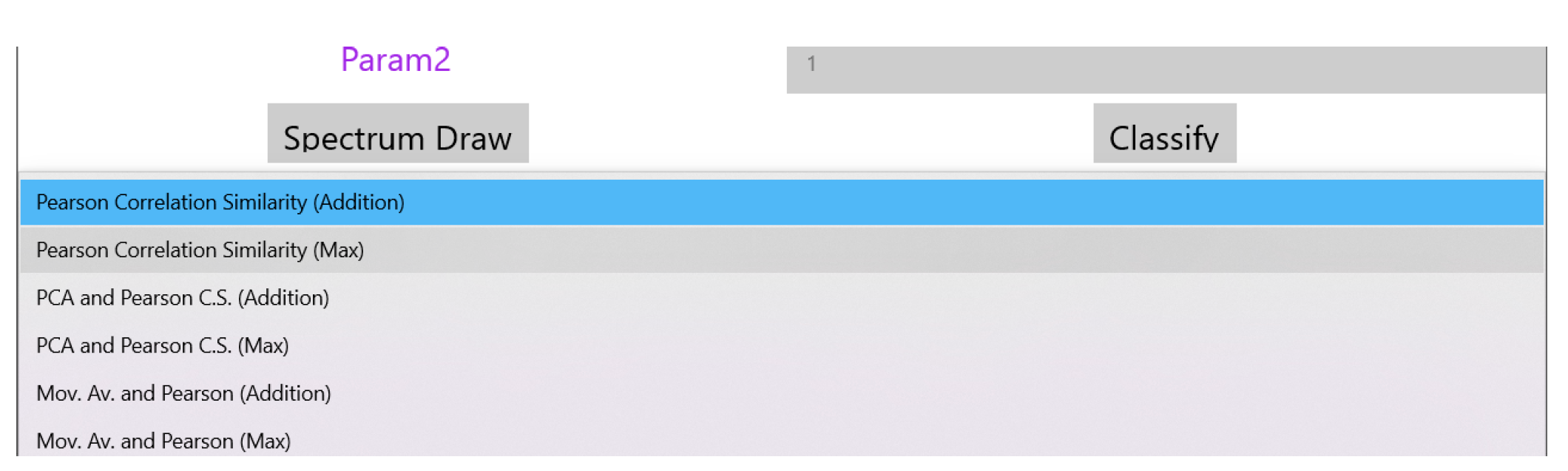

The ScientificApp is a variation of the UserApp compiled as a desktop application for a more ergonomic user interface. The details of this module are given in this paragraph in order to demonstrate how alternative classification methods and similarity metrics can be tested. Most of the pages are identical to those of the UserApp, except for the page that concerns the sound processor, shown in Figure 5. In this page the researcher can select and play a sound file (e.g., daf9.wav as shown in Figure 5). The type of the recorded sound (cough or respiratory) as well as the “Training” mode is defined using checkboxes.

Based on the employed classification methods, the SupervisorApp can determine the reference features in the frequency domain of a cough sound class, using a number (e.g., 5–10) of training files. Then, the features extracted from any new sound file that is analyzed are examined for similarity with the reference ones in order to classify it. Fast Fourier Transform (FFT) is applied to the input sound file in segments of, for example, 1024 samples (defined in the page shown in Figure 5). Subsampling can also be applied in order to cover a longer time interval with a single FFT.

The drop-down menu “Select Analysis Type” shown in the page of Figure 5 can be used to select the similarity method that will be used, as shown in Figure 6. The supported similarity methods will be explained in detail in Section 4. Some similarity analysis methods may need extra parameters to be set. The reserved fields “Param1” and “Param2” can be used for this purpose. The magnitudes of the FFT output values are the features used for similarity checking and are displayed at the bottom of Figure 4 after the analysis of the sound is completed. If training takes place, these values appear after all the training sound files have been analyzed. These values can be exported, for example, to a spreadsheet for statistical processing. Different FFT segments of the same sound file are combined either by (a) averaging them or (b) using the maximum power that appears among the different segments for the same frequency as the final bin value.
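The segment-based feature extraction can be sketched as follows, assuming non-overlapping segments, a plain radix-2 FFT and the two merging options described in the text (average or maximum per frequency bin). The function names and the default arguments are illustrative; the actual sound processor may window or overlap the segments differently.

```python
import cmath
import math
from typing import List, Sequence


def fft(x: Sequence[complex]) -> List[complex]:
    """Recursive radix-2 Cooley-Tukey FFT; len(x) must be a power of two."""
    n = len(x)
    if n == 1:
        return [complex(x[0])]
    if n % 2:
        raise ValueError("length must be a power of two")
    even = fft(x[0::2])
    odd = fft(x[1::2])
    out = [0j] * n
    for k in range(n // 2):
        twiddle = cmath.exp(-2j * cmath.pi * k / n) * odd[k]
        out[k] = even[k] + twiddle
        out[k + n // 2] = even[k] - twiddle
    return out


def segment_features(samples: Sequence[float], segment_size: int = 1024,
                     subsample: int = 1, combine: str = "avg") -> List[float]:
    """Magnitude spectrum per segment, merged bin by bin ('avg' or 'max')."""
    if combine not in ("avg", "max"):
        raise ValueError("combine must be 'avg' or 'max'")
    data = list(samples)[::subsample]  # keep every subsample-th sample
    half = segment_size // 2           # real input: upper half is a mirror
    spectra = []
    # Non-overlapping segments; trailing samples that do not fill one are dropped.
    for start in range(0, len(data) - segment_size + 1, segment_size):
        spectrum = fft(data[start:start + segment_size])
        spectra.append([abs(c) for c in spectrum[:half]])
    if not spectra:
        raise ValueError("not enough samples for one segment")
    if combine == "avg":
        return [sum(col) / len(col) for col in zip(*spectra)]
    return [max(col) for col in zip(*spectra)]


# Synthetic input: a tone in bin 4, twice as loud in the second segment.
tone = [math.sin(2 * math.pi * 4 * n / 64) for n in range(64)]
samples = tone + [2 * x for x in tone]
avg = segment_features(samples, segment_size=64, combine="avg")
peak = segment_features(samples, segment_size=64, combine="max")
print(avg.index(max(avg)), round(avg[4], 3), round(peak[4], 3))
```

With `subsample=4`, for instance, a 1024-point FFT spans four times as many original samples, at the price of a narrower frequency range.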

When a new sound file is analyzed, the FFT output is displayed at the bottom of the page shown in

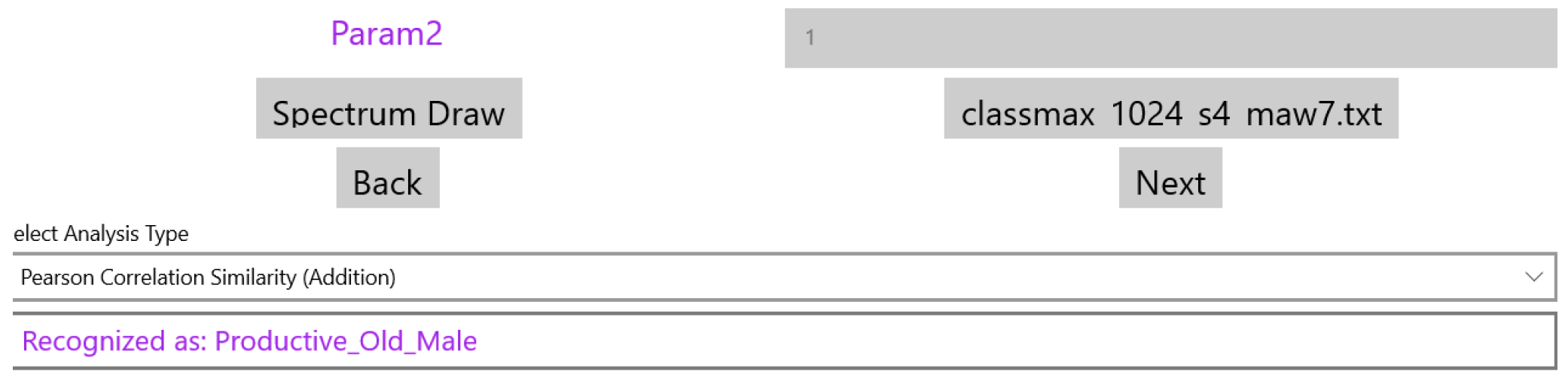

Figure 5 and the classification results can be generated after the user selects the file with the reference features of the supported classes. This set of classes can of course be extended if the system is trained with sounds from a new cough or respiratory category. In

Figure 7, the name of the “Classify” button is changed to the specific reference features’ filename (classmax_1024_s4_maw7.txt). The field holding the selected analysis type in

Figure 5 (Pearson Correlation Similarity), displays now the results of the classification (see

Figure 7).
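As an illustration of similarity-based classification, the following sketch scores a feature vector against per-class reference features with the Pearson correlation coefficient and picks the best match. The class names, the feature values and the function names are hypothetical; how the platform stores and loads reference feature files is not shown.

```python
import math
from typing import Dict, Sequence, Tuple


def pearson(a: Sequence[float], b: Sequence[float]) -> float:
    """Pearson correlation coefficient of two equally long vectors."""
    if len(a) != len(b) or len(a) < 2:
        raise ValueError("vectors must have the same length (at least 2)")
    mean_a = sum(a) / len(a)
    mean_b = sum(b) / len(b)
    cov = sum((x - mean_a) * (y - mean_b) for x, y in zip(a, b))
    var_a = sum((x - mean_a) ** 2 for x in a)
    var_b = sum((y - mean_b) ** 2 for y in b)
    if var_a == 0 or var_b == 0:
        return 0.0  # a flat vector carries no shape information
    return cov / math.sqrt(var_a * var_b)


def classify(features: Sequence[float],
             references: Dict[str, Sequence[float]]) -> Tuple[str, Dict[str, float]]:
    """Return the best matching class and the score of every class."""
    scores = {name: pearson(features, ref) for name, ref in references.items()}
    best = max(scores, key=scores.get)
    return best, scores


# Hypothetical reference spectra for two sound classes.
references = {
    "class_a": [0.0, 1.0, 4.0, 1.0, 0.0, 0.0],
    "class_b": [0.0, 0.0, 1.0, 4.0, 1.0, 0.0],
}
new_sound = [0.1, 0.9, 3.8, 1.2, 0.1, 0.0]
best, scores = classify(new_sound, references)
print(best, scores)
```

Because the coefficient is insensitive to overall scale, a louder or quieter recording of the same sound yields a similar score, which is one reason a correlation-based measure suits magnitude spectra.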

3. Dynamic MP File Configuration

The format of the MP file is studied in detail in this section in order to demonstrate that any alert rule, sensor sampling scenario or questionnaire structure can be supported. The JavaScript Object Notation (JSON) format is employed for the MP file. The configuration sections of an MP file are the following: (a) Questionnaire, (b) Sensor Sampling and (c) Alert Rules. A new MP file can be defined by the supervisor, who uploads it to the cloud through the SupervisorApp (see Section 2.2). This file can be downloaded from the cloud by the UserApp. Several fields in the UserApp pages are adapted according to the information stored in the MP file. Updating an MP file is performed in real time without requiring a recompilation of the Coronario modules. Each MP section is described in the following paragraphs.

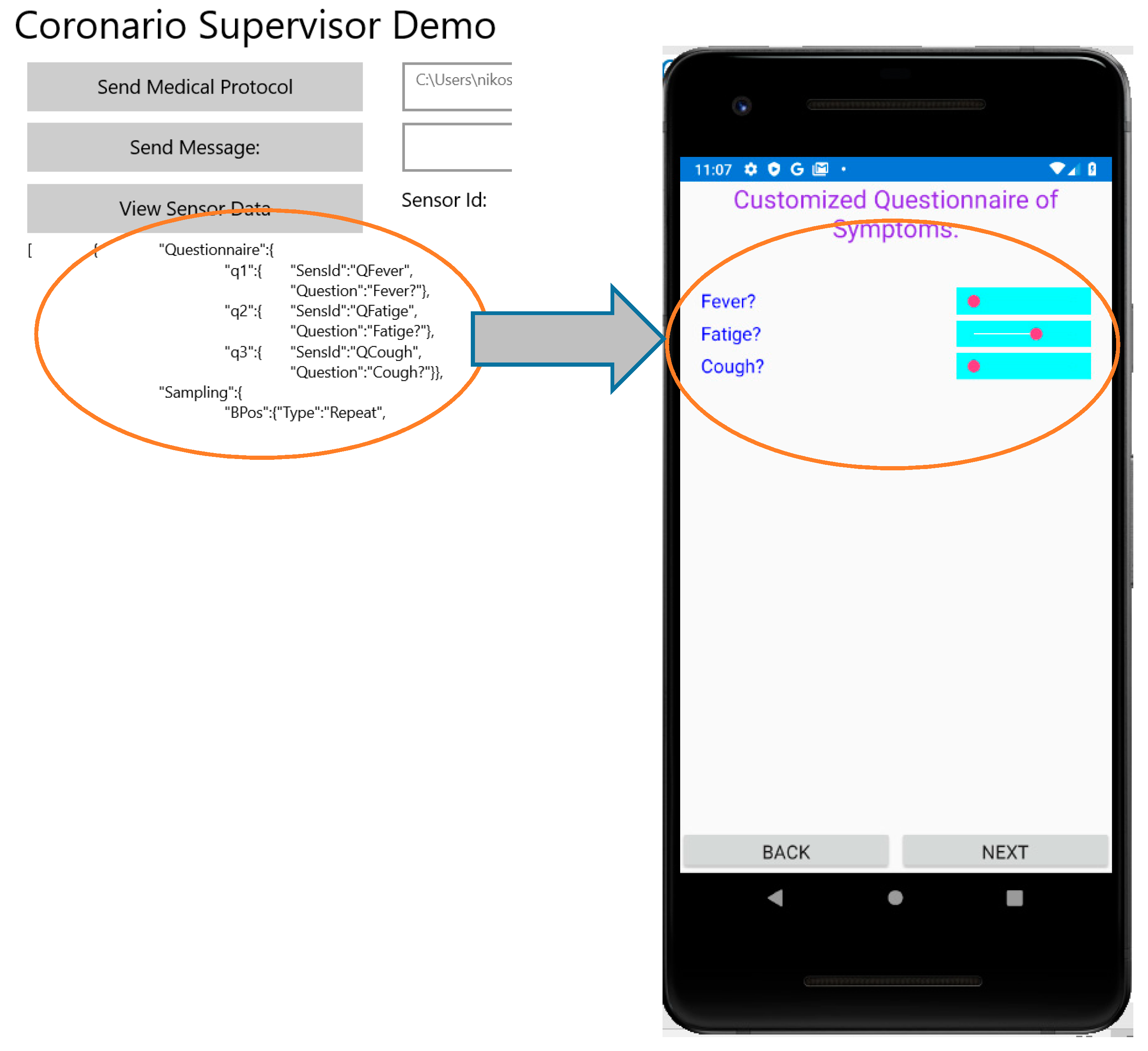

3.1. Questionnaire Configuration

The aim of the Questionnaire section is to select the appropriate questions about the patient symptoms that should appear in the corresponding form of the UserApp (see Section 2.1). These questions are selected from a pool that contains all the possible ones that are related to Covid-19 or other infections. Names such as q1, q2, q3 (see Figure 8) indicate that the definition of a question follows. Each question is defined by the fields SensId and Question. The value of SensId should be retrieved from the question pool so that it can be recognized by the UserApp. The text in the Question field can be customized (it is not predefined) and appears as a question in the UserApp. The default way of answering a question that is defined in the MP file is a percentage value determined by a slider control that appears in the UserApp questionnaire. In this way, the user can respond to a question with an analog indication between No (0%, slider to the left) and Yes (100%, slider to the right). For example, if a symptom appears with mild intensity, the patient may place the slider close to the middle (50%). If the answers to specific symptom questions should be a clear yes or no, checkboxes can be defined through the Questionnaire section of the MP file. For uniform handling of both the sliders and the checkboxes, a value of 100% is assumed when the checkbox is ticked, while 0% is returned when it is not ticked. Figure 8 shows how the questions defined in the MP file appear in the UserApp.
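The uniform handling of sliders and checkboxes can be sketched as below. The SensId values and question texts are hypothetical placeholders, and the sketch does not model how the MP file marks a question as a checkbox, since that detail is not given here.

```python
import json
from typing import Dict, List, Tuple, Union

# Hypothetical Questionnaire section; SensId values are placeholders only.
MP_TEXT = """
{"Questionnaire": {
    "q1": {"SensId": "Q_COUGH", "Question": "Do you have a dry cough?"},
    "q2": {"SensId": "Q_FEVER", "Question": "Have you had a fever today?"}
}}
"""

Answer = Union[bool, int, float]


def answer_to_percent(answer: Answer) -> float:
    """Checkbox answers become 100 or 0; slider answers are clamped to 0..100."""
    if isinstance(answer, bool):
        return 100.0 if answer else 0.0
    return max(0.0, min(100.0, float(answer)))


def collect_answers(mp: Dict, answers: Dict[str, Answer]) -> List[Tuple[str, float]]:
    """Pair the SensId of each answered question with its percentage value."""
    result = []
    for name, question in mp["Questionnaire"].items():
        if name in answers:
            result.append((question["SensId"], answer_to_percent(answers[name])))
    return result


mp = json.loads(MP_TEXT)
# q1 answered with a ticked checkbox, q2 with a slider in the middle.
values = collect_answers(mp, {"q1": True, "q2": 50})
print(values)
```

Reducing both input styles to one percentage scale lets the questionnaire answers travel through the same storage and alert logic as numeric sensor values.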

The values set in the questionnaire are uploaded to the cloud in the same format as the sensor values of the eHealth infrastructure (see Section 2.4): (uid, sid, ts, v). Moreover, additional information uploaded by the UserApp, such as the geolocation, is also stored in this format. The type of the parameter v is string, so that multiple values (for example, floating point numbers) or even whole messages/text files can be incorporated in a single field. All of these values, whether retrieved from the IoT eHealth sensor infrastructure or, for example, from the UserApp questionnaire, can be viewed by the supervisor, who can search for specific sensor values based on the sensor identity or other criteria (e.g., specific dates or users).

3.2. Sensor Sampling Configuration

The second section in the MP file concerns the sampling strategy that should be followed concerning the IoT medical sensors. The format of each entry in this section demands the declaration of the sensor name (e.g., BPos for body position, MPressH for systolic blood pressure and MPressL for the diastolic one), followed by 4 fields: Type, Date, Period, Repeat. The Type field can have one of the following values: Once, Repeat, Interval. If Once is selected, then the medical test should be performed once at the specific Date. The Period and Repeat fields are ignored in this case (can be 0). If Type = “Repeat”, then the patient must perform a routine medical test starting at the date indicated by the corresponding field. A time interval (determined in the Period field) should intervene between successive tests. The Repeat field indicates a maximum number of tests that should be performed although these tests can be terminated earlier by a newer MP file. If Type = “Interval” then the interpretation of the other 3 fields is different. More specifically, a medical test should be performed in a Period starting from Date, Repeat times.

Although this MP file section has been defined for the sensors of the eHealth infrastructure, it can also be used for sensor-like information retrieved through the UserApp (questionnaire answers, geolocation coordinates or even sound/image analysis). The sampling scenarios determined in this MP file section should be converted into comprehensible guidelines for the patient so that he can schedule his medical tests at the right time. Figure 9 shows how the UserApp converts the JSON format of the Sampling section into natural language.
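The three sampling types can be illustrated by expanding one Sampling entry into concrete test times. This is a sketch under stated assumptions: the date and period strings follow the style of the examples in this section ("8:00 6 July 2020", "12 h", "7 d"), only hours and days are recognized, and for the Interval type the tests are assumed to be spread evenly over the period, which the text does not specify. The function names and the `limit` argument are illustrative.

```python
from datetime import datetime, timedelta
from typing import Dict, List

# Only the two units that appear in the examples are handled.
UNITS = {"h": timedelta(hours=1), "d": timedelta(days=1)}


def parse_period(text: str) -> timedelta:
    """Turn a string such as '12 h' or '7 d' into a timedelta."""
    amount, unit = text.split()
    return int(amount) * UNITS[unit]


def expand(entry: Dict[str, str], limit: int = 5) -> List[datetime]:
    """List the first `limit` scheduled test times of one Sampling entry."""
    start = datetime.strptime(entry["Date"], "%H:%M %d %B %Y")
    kind = entry["Type"]
    if kind == "Once":
        return [start]  # Period and Repeat are ignored
    period = parse_period(entry["Period"])
    repeat = int(entry["Repeat"])
    if repeat < 1:
        raise ValueError("Repeat must be at least 1")
    count = min(repeat, limit)
    if kind == "Repeat":
        # One test every Period, at most Repeat times.
        return [start + i * period for i in range(count)]
    if kind == "Interval":
        # Assumption: Repeat tests spread evenly inside one Period window.
        step = period / repeat
        return [start + i * step for i in range(count)]
    raise ValueError("unknown Type: " + kind)


blood_pressure = {"Type": "Repeat", "Date": "8:00 6 July 2020",
                  "Period": "12 h", "Repeat": "1000"}
times = expand(blood_pressure, limit=3)
for t in times:
    print(t.strftime("%d %B %Y, %H:%M"))
```

A list of timestamps like this is one possible intermediate form from which natural-language guidelines for the patient could be generated.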

The sampling scenario for the screening of a patient with kidney failure is examined to demonstrate the flexibility of the MP file format in determining any usage of the medical sensors. More specifically, it demonstrates how different regular and irregular sampling rates can be supported. Sleep apnea, which is a breathing disorder, is highly prevalent in these patients. This disorder results in reduced blood oxygen saturation (hypoxemia), with oxygen saturation falling below 90%. It is also a cause of high blood pressure and can cause the cardiac diseases that are frequent in chronic kidney disease and dialysis patients [30]. The sensor sampling schedule of Figure 10 is implemented by the MP file “Sampling” section as shown in Algorithm 1.

| Algorithm 1 Sampling Section of the MP File for a Dialysis Patient |
| --- |
| [{"Questionnaire":{ |
| … |
| "Sampling":{ |
| "BPressH":{"Type":"Repeat", |
| "Date":"8:00 6 July 2020", |
| "Period":"12 h", |
| "Repeat":"1000"}, |
| "BPressL":{"Type":"Repeat", |
| "Date":"8:00 6 July 2020", |
| "Period":"12 h", |
| "Repeat":"1000"}, |
| "BTemp":{"Type":"Repeat", |
| "Date":"20:00 6 July 2020", |
| "Period":"7 d", |
| "Repeat":"1000"}, |
| "BTemp":{"Type":"Repeat", |
| "Date":"20:00 8 July 2020", |
| "Period":"7 d", |
| "Repeat":"1000"}, |
| "BTemp":{"Type":"Repeat", |
| "Date":"20:00 10 July 2020", |
| "Period":"7 d", |
| "Repeat":"1000"}, |
| "Quest":{"Type":"Repeat", |
| "Date":"20:30 6 July 2020", |
| "Period":"7 d", |
| "Repeat":"1000"}, |
| "Quest":{"Type":"Repeat", |
| "Date":"20:30 8 July 2020", |
| "Period":"7 d", |
| "Repeat":"1000"}, |
| "Quest":{"Type":"Repeat", |
| "Date":"20:30 10 July 2020", |
| "Period":"7 d", |
| "Repeat":"1000"}, |
| "SPO2":{"Type":"Repeat", |
| "Date":"9:00 6 July 2020", |
| "Period":"24 h", |
| "Repeat":"1000"}, |
| "Resp":{"Type":"Repeat", |
| "Date":"23:00 6 July 2020", |
| "Period":"7 d", |
| "Repeat":"1000"}, |
| … |
| }] |

As can be seen from Algorithm 1, blood pressure has to be measured every 12 h; thus, a single declaration can cover all days. Similarly, a single declaration can determine the SPO2 measurements to be performed every day at 9:00. The body temperature has to be measured and the questionnaire answered on the day before each hemodialysis session; thus, 3 declarations (each with a period of one week) are used for each of them. The respiratory pattern is monitored once every week. These measurements should be performed continuously; thus, a large number (e.g., 1000) is used in the “Repeat” field to cover a long time period until a new MP file is defined.

3.3. Alert Configuration

Local rule checks performed by the UserApp can be defined in the “Alert” section of the MP file. Whenever the condition in a rule is found true, an action is taken. In the current version this action is a message displayed to the user. Any logical condition $C$ is expressed as:

where $s_0$, $s_1$ are sensor values and $s_{0,\min}$, $s_{0,\max}$, $s_{1,\min}$, $s_{1,\max}$ are the allowed limits of the sensor values. The operators “·”, “+” and “$\overline{L}$” are the logical AND, OR and inversion of $L$, respectively. If ±∞ can be used in these limits then the inversion of a condition can be expressed with Equation (2). For example:

$$\overline{(s_0 > s_{0,\min}) \cdot (s_0 < s_{0,\max})} = ((s_0 > -\infty) \cdot (s_0 \le s_{0,\min})) + ((s_0 \ge s_{0,\max}) \cdot (s_0 < +\infty)) \qquad (2)$$

Since all the conditions in a rule must be true to perform the defined action (i.e., they are related with an AND operator), the OR operator (+) in Equation (2) can be implemented by defining the rule twice (with the same action): once for $((s_0 > -\infty) \cdot (s_0 \le s_{0,\min}))$ and once for $((s_0 \ge s_{0,\max}) \cdot (s_0 < +\infty))$. An example that shows that any complicated condition can be supported by the format of the Alerts section of the MP file is the following: let us assume that the action $A$ has to be taken if the following condition $C$ is true:

Equation (3) can be rearranged by splitting the condition $C$ into 3 separate conditions $C_1$, $C_2$, $C_3$, as follows:

This rule can be expressed in the Alerts section as shown in Algorithm 2.

| Algorithm 2 Example Alerts Section in the MP File for Implementing the Rule Defined in Equations (3)–(6) |
| --- |
| [{"Questionnaire":{… |
| "Sampling":{… |
| "Alerts":{ |
| "m1":{"A", |
| "Conditions":{ |
| "c1a": {"SensId":"s0", |
| "Date":"8:00 19 April 2020", |
| "Minimum":"s0_min", |
| "Maximum":"s0_max"}, |
| "c1b": {"SensId":"s3", |
| "Date":"8:00 19 April 2020", |
| "Minimum":"TRUE", |
| "Maximum":"TRUE"} |
| }}, |
| "m2":{"A", |
| "Conditions":{ |
| "c2a": {"SensId":"s1", |
| "Date":"8:00 19 April 2020", |
| "Minimum":"-Inf", |
| "Maximum":"s1_min"}, |
| "c2b": {"SensId":"s3", |
| "Date":"8:00 19 April 2020", |
| "Minimum":"TRUE", |
| "Maximum":"TRUE"} |
| }}, |
| "m3":{"A", |
| "Conditions":{ |
| "c3a": {"SensId":"s1", |
| "Date":"8:00 19 April 2020", |
| "Minimum":"s1_max", |
| "Maximum":"+Inf"}, |
| "c3b": {"SensId":"s3", |
| "Date":"8:00 19 April 2020", |
| "Minimum":"TRUE", |
| "Maximum":"TRUE"} |
| }} |
| … |
| }] |


As can be seen from Algorithm 2, “Inf” has been used to denote infinity, and the minimum and maximum sensor values have been declared as s0_min, s0_max, s1_min, s1_max. Conditions C1, C2, C3 have been implemented by the pairs (c1a, c1b), (c2a, c2b) and (c3a, c3b), respectively. Although three different rules have been defined (m1, m2, m3), they all trigger the same action “A”. Of course, the negation of, for example, s ≥ smin is s < smin and not s ≤ smin as used above in Algorithm 2, but this could be easily handled without modifying the MP file format if a small correction e is used: s ≤ smin − e.
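The way such rules could be evaluated locally is sketched below: the conditions inside a rule are combined with AND, and several rules carrying the same action play the role of OR. The numeric limits stand in for s0_min, s0_max, s1_min and s1_max and are hypothetical, the mapping of "TRUE" to 1.0 is an assumption, range checks are taken as inclusive, and the Date field of each condition is ignored in this sketch.

```python
from typing import Dict, List, Tuple

Condition = Tuple[str, float, float]  # (SensId, Minimum, Maximum)
Rule = Tuple[str, List[Condition]]    # (action, conditions joined by AND)


def parse_limit(text: str) -> float:
    """Convert a limit such as '-Inf', '+Inf', 'TRUE' or '37.5' to a number."""
    upper = text.strip().upper()
    if upper in ("TRUE", "FALSE"):
        return 1.0 if upper == "TRUE" else 0.0  # assumed boolean encoding
    return float(text)  # float() accepts '-Inf' and '+Inf'


def condition_holds(value: float, minimum: float, maximum: float) -> bool:
    """Inclusive range check on a single sensor value."""
    return minimum <= value <= maximum


def fired_actions(rules: List[Rule], readings: Dict[str, float]) -> List[str]:
    """Actions of every rule whose conditions are all true, without repeats."""
    actions = []
    for action, conditions in rules:
        if all(condition_holds(readings[sid], lo, hi) for sid, lo, hi in conditions):
            if action not in actions:
                actions.append(action)
    return actions


true = parse_limit("TRUE")
# Hypothetical limits: s0 in [10, 20]; s1 normally within [1, 5].
rules = [
    ("A", [("s0", 10.0, 20.0), ("s3", true, true)]),                # like m1
    ("A", [("s1", parse_limit("-Inf"), 1.0), ("s3", true, true)]),  # like m2
    ("A", [("s1", 5.0, parse_limit("+Inf")), ("s3", true, true)]),  # like m3
]
print(fired_actions(rules, {"s0": 25.0, "s1": 0.5, "s3": 1.0}))
```

To exclude the boundary value itself, the small correction mentioned in the text can be applied to the limit before it is passed in, for example by using `1.0 - e` as the maximum of the second rule.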

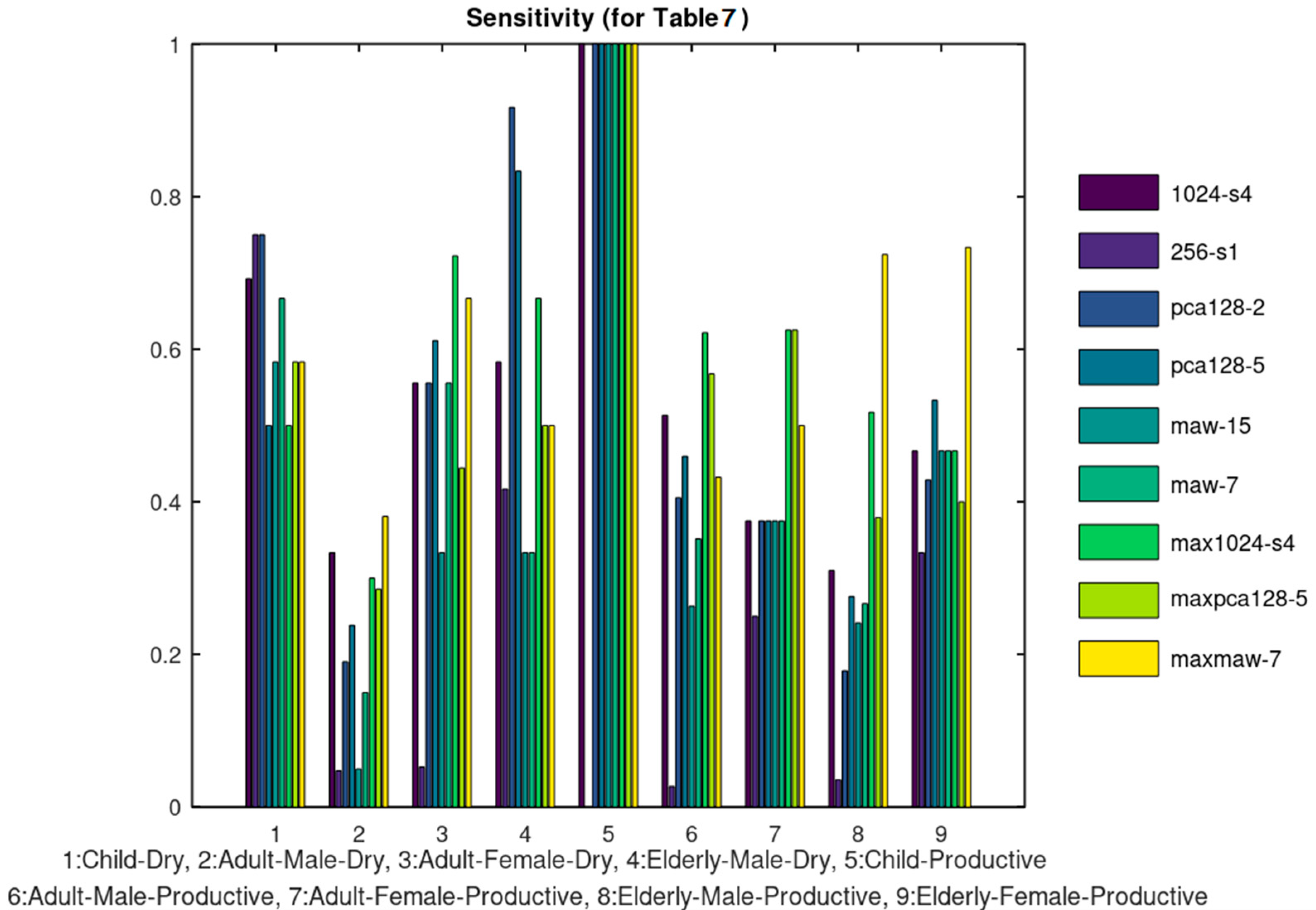

5. Discussion

The already implemented sound processor can be used to classify cough or respiratory sounds related to the infection. The aim of the experiments conducted in the previous section is to demonstrate how the developed platform can host several filtering, signal processing and classification methods in order to exploit the medical information exchanged. Nonetheless, the classification accuracy obtained in some cases is quite good compared to the referenced approaches listed in Table 8, taking into consideration that the referenced approaches concern binary decisions. The classification of a sound file into one of the multiple supported classes is a much harder problem. Furthermore, the classification accuracy is expected to be much higher if a larger portion of the dataset is used for training. Image processing will also be supported in the next version of the system to monitor, for example, skin disorders, as already studied in our previous work [39]. Additionally, the image processing methods that will be employed may be used to scan the results of, for example, Computed Tomography, X-ray or ultrasound imaging and extract useful features for the diagnosis of an infection.

In the current version, the supervisor doctor is responsible for the diagnosis based on the data retrieved from the UserApp and the IoT medical sensors. Nevertheless, deep learning frameworks such as TensorFlow or Caffe can be used to train models that support the automation and improve the reliability of the diagnosis. Such a neural network model can be trained using the information exchanged between the Coronario modules (sensor values, user information, geolocation data, etc.). The outputs of this model could be discrete decisions about the treatment or suggestions about the next steps that the patient has to follow. Such a trained neural network model can be attached either on the side of the supervisor doctor, where all the information is available, or on the side of the eHealth sensor infrastructure. The latter option is appropriate if some alerts have to be generated locally based on the sensor values. This process can complement the alert rules already defined in the MP file as discussed in Section 3.3.

Several privacy and security issues have been addressed in order to make the system compliant with the General Data Protection Regulation (GDPR). First of all, encryption is employed during both the exchange and the storage of data. The information exchanged can be decrypted at the edge of the Coronario platform by the users (e.g., patients, supervisor doctors or researchers) based on the certificates they own. The encrypted data are unreadable by anyone who does not own the appropriate keys/certificates (e.g., while they reside in the cloud). Only authenticated users have access to the stored information, with different permissions. Authorized doctors can download information that concerns only their own patients. Researchers can retrieve data from a pool of sensor values that are anonymized and accompanied by permissions for research use. Patients can download the MP files and the messages sent to them by their supervisor doctor. The cloud administrators are not able to decrypt and read the stored information, and the Service Level Agreement (SLA) between the cloud provider and the healthcare institutes prohibits such access in any case.
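
The permission model described above can be summarized with a short Python sketch. It only illustrates the access rules stated in this paragraph, not the platform's actual code: the `Record` and `User` types, the role names, the field names and the `may_read` helper are all hypothetical, and the sketch assumes that patients are limited to their own MP files and messages.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Record:
    kind: str                  # e.g., "sensor", "mp_file" or "message" (assumed labels)
    patient_id: str            # empty for anonymized research data
    anonymized: bool = False
    research_consent: bool = False


@dataclass(frozen=True)
class User:
    user_id: str
    role: str                  # "doctor", "researcher", "patient" or "cloud_admin"
    patients: frozenset = frozenset()  # patients supervised by a doctor


def may_read(user: User, record: Record) -> bool:
    """Return True if the user's role permits reading the record."""
    if user.role == "doctor":
        # Doctors see only data that concerns their own patients.
        return record.patient_id in user.patients
    if user.role == "researcher":
        # Researchers see only anonymized data released for research use.
        return record.anonymized and record.research_consent
    if user.role == "patient":
        # Patients download their own MP files and messages.
        return (record.patient_id == user.user_id
                and record.kind in ("mp_file", "message"))
    # Any other role, including cloud administrators, is denied.
    return False
```

Denying by default keeps roles that are not explicitly listed, such as the cloud administrators, without read access.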

Only the necessary information is stored. Local processing is encouraged in order to minimize the risk of data exposure during wireless or even wired communication. This is the reason why a local Sensor Controller (Gateway), such as the one used in [29], is proposed for the sensor infrastructure of the Coronario platform. The alerts defined in the medical protocol can check various parameters locally and perform certain actions without moving sensitive data to the cloud. Several cloud facilities have been employed to protect the stored data: different access privileges, alerts at the cloud level that warn about attempts by unauthorized personnel to access the data, deletion of the data from the cloud as soon as possible and so forth. For example, the sensor values can be deleted immediately after they are read by the authorized supervisor. The geolocation data show the places visited by the user and can be deleted after a safe interval of, for example, 14 days. Finally, the data used for research, such as audio or image files, are anonymous and are used only with the consent of the patient.
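
The deletion policy outlined above could be expressed, for instance, as the following Python sketch. It is an illustration under stated assumptions rather than the platform's implementation: the dictionary fields (`kind`, `created`, `read_by_supervisor`) and the helper names `should_delete` and `purge` are hypothetical, and the 14-day interval is the example value given in the text.

```python
from datetime import datetime, timedelta

GEO_RETENTION = timedelta(days=14)  # example interval mentioned in the text


def should_delete(item, now):
    """Decide whether a stored item can be removed from the cloud."""
    if item["kind"] == "sensor":
        # Sensor values can go as soon as the supervisor has read them.
        return item.get("read_by_supervisor", False)
    if item["kind"] == "geolocation":
        # Geolocation data are kept only for the retention interval.
        return now - item["created"] >= GEO_RETENTION
    return False


def purge(items, now):
    """Return only the items that must still be kept."""
    return [item for item in items if not should_delete(item, now)]
```

A periodic job could call `purge` on the stored items, so that sensor values already read by the supervisor and expired geolocation entries do not remain in the cloud.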