Toward Management of Uncertainty in Self-Adaptive Software Systems: IoT Case Study

Abstract

1. Introduction

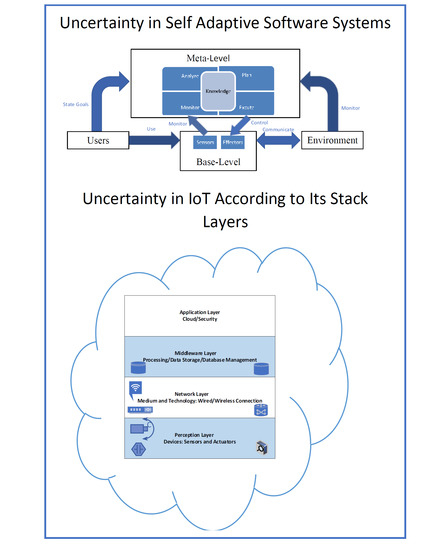

2. Scope of Uncertainty in SASS

3. Modeling Uncertainty in SASS

4. Sources of Uncertainty in SASS

4.1. Uncertainty Due to Simplifying Assumptions

4.2. Uncertainty Due to Model Drift

4.3. Uncertainty Due to Noise in Sensor

4.4. Uncertainty Due to Human User in the Loop

4.5. Uncertainty in the Objectives

4.6. Uncertainty Due to Decentralization

4.7. Uncertainty in the Execution Context

5. Characteristics of Uncertainty

5.1. Reducibility vs. Irreducibility

5.2. Variability vs. Lack of Knowledge

6. Mathematical Techniques for Representing Uncertainty

6.1. Probability Theory

6.2. Fuzzy Sets and Possibility Theory

7. Approaches for Handling Uncertainty in SASS

7.1. Rainbow

7.2. Possibilistic Self-Adaptation (POISED)

7.3. Anticipatory Dynamic Configuration (ADC)

7.4. Feature-Oriented Self-Adaptation (FUSION)

7.5. Resilient Situated Software System (RESIST)

7.6. RELAX

7.7. FLAGS

8. Case Study: Dealing with Uncertainty in IoT

8.1. Sources of Uncertainty in IoT

- Dynamics in the availability of resources (internal dynamics): Managing dynamics autonomously and correctly is especially important in highly critical IoT applications. For example, because the bandwidth of IoT terminals can range from Kbps for sensing simple values to Mbps for video streams, hardware requirements diverge [12,34,36].

- Dynamics in the environment (external dynamics): Examples of affected systems include unmanned underwater vehicles used for oceanic surveillance to monitor pollution levels, and supply chain systems that ensure sufficient, safe, and nutritious food for the global population. The dynamics of these systems introduce uncertainties that may be difficult or even impossible to anticipate before deployment. Hence, these systems need to resolve the uncertainties at runtime [34].

- Heterogeneity of edge nodes: An IoT deployment may include a large number of sensors, actuators, and gateways that differ widely in their processing and communication capabilities. One vital issue in IoT is integration and interoperability among these nodes (i.e., the ability of different systems to interconnect and communicate) to form a cost-effective and easy-to-implement ad hoc network [37]. Devices are connected through heterogeneous communication technologies such as Ethernet, Wi-Fi, Bluetooth, and ZigBee, which differ in metrics including data range and rate, network size, Radio Frequency (RF) channels, bandwidth, and power consumption. A heterogeneous technological approach is therefore needed to enable interoperable and secure communications in IoT. For instance, because the bandwidth of IoT terminals varies from Kbps for sensing simple values to Mbps for video streams, hardware requirements diverge [12].

- Scalability: IoT forms an ad hoc network that is set up quickly and changes rapidly, with interconnected nodes using network services in a distributed manner [38]. Because the number of connected things is growing rapidly, IoT systems will require the composition of many services into complex workflows. Accordingly, scalability in terms of the number of IoT nodes becomes a significant concern. Scalability is measured by the capability of the system to handle increasing workloads, for instance, the addition or removal of IoT nodes or the addition or removal of computing resources in a single IoT node (e.g., adding more memory to increase buffer size or adding more processor capacity to speed up processing).

- Transmission technology: Wired and wireless technologies are the main methods of data transfer [39]. Transmission issues concern transfer speeds and delays in the delivery of data [34]. For instance, in wireless sensor networks (WSN), apart from uncertainty emerging from redundancy in densely deployed neighboring sensors, a significant amount of data can be lost or corrupted during transmission from sensor nodes to the sink because of intrusion attacks, node failures, or battery depletion.

- Optimum energy use: Energy consumption is defined as the total energy consumed for running the components of the system architecture. Power use is a crucial issue [40]. The irregularities in energy consumption across nodes, battery capacity, and charging capability are challenging and require effective runtime decisions in IoT systems. Predicting energy use under uncertainty is critical in WSN applications such as forest monitoring or field surveillance systems, where IoT nodes are deployed statically in the field to perform a certain task. A continuous source of energy is required to drive these nodes, which presents a severe challenge in terms of cost and network lifetime. Moreover, their computational and storage capabilities do not support complex operations [41].

- User configuration capabilities: The number of users, the complexity of systems, and the continuously changing needs of users make it necessary to provide mechanisms that allow users to configure the systems by themselves. For instance, IoT in smart homes or smart buildings includes servomotors, mobile devices, televisions, thermostats, energy meters, lighting control systems, music streaming and control systems, remote video streaming boxes, pool systems, and irrigation systems, in addition to various types of sensors built into equipment. It also includes objects whose primary profiles did not include ICT functions (dishwashers, microwave ovens, refrigerators, doors, walls, furniture, windows, facades, elevators, ventilation modules, heating/cooling modules, water systems, roofs, electrical power systems, communication systems, office equipment, data storage systems, video monitoring and property control systems, and home appliances). Both young and elderly people must be able to cope with these devices and set configurations that meet their requirements [35].

- Data management: In IoT, it is crucial to use appropriate data models and semantic descriptions of their content, in an appropriate language and format. For instance, when big data are processed and managed, uncertainty causes severe liabilities with respect to the effectiveness and accuracy of the big data [42]. As an example, consider an environmental sensor network that continuously measures temperature and produces big data streams. Due to reading errors and possible transmission errors, temperature readings arrive at the receiver node with some uncertainty, which is modeled in terms of probability distribution functions. Another example is a smart city application that determines geographical coordinates. The collection of geographical coordinates represents big data defined in hierarchical models on top of the geography of the smart city, such as stores, streets, quarters, districts, areas, and cities. Missing data or processing errors cause smart city data to be processed without an exact association to the hierarchical level they refer to (e.g., the quarter where a certain store is located is known but the street of this store is not known).

- Reliability: Data loss may occur due to the inability of the system to handle errors. Many factors can cause errors that decrease reliability and safety in IoT nodes, namely (1) data distortion and corruption, where data are changed by imperfect software and/or hardware during processing, transmission, and storage; and (2) complete or partial data loss, where technological disasters, virus attacks, human-made errors, etc. lead to a complete or partial loss of data [9].

- Privacy protection: IoT nodes must ensure an appropriate level of security and privacy because of their close relationship with the real world [43]. For example, when unauthorized users try to obtain data, uncertainty in authentication stems from incomplete information about whether accepting an authentication request will lead to an incident. Moreover, uncertainty arises from a lack of precision (ambiguity) in the information supplied with the authentication request. For instance, assume that X attempts to authenticate to a system, and that authenticating X endangers the system (the access would expose an asset to a threat) with a probability of 50%. Uncertainty in authentication is formally defined as follows: given a set of authentication requests R = {r1, r2, r3, …, rn}, a set of possible access decisions D = {Access, Deny}, an access decision function F: R → D, and a set of possible outcomes for any access decision O = {Safe, Incident}, the uncertainty of an authentication request is defined by the probability.

- Security: Security in IoT ensures that access is controlled and only legitimate uses are authorized. Challenges and approaches have been proposed to overcome security issues in the different layers of IoT [9]. One example is Application Programming Interface (API) malfunctions: clouds provide a set of User Interfaces (UIs) and APIs to customers to manage and interact with cloud services, and the security and availability of services depend on the security of these APIs. Because they are used frequently, they are very likely to be attacked continuously. Therefore, adequate protection from attacks is necessary. These interfaces must protect against accidental and malicious attempts to circumvent security policies. Poorly designed, broken, exposed, and hacked APIs could lead to data ambiguity.

- Legal regulatory compliance: Governance strategies are a useful mechanism to address issues related to risk mitigation, compliance, and legal requirements [44]. However, supporting these strategies faces challenges, mostly due to uncertainties inherent in IoT infrastructure, such as the need to change sensor configurations (e.g., transfer rate) or node communication protocols in situations such as an emergency or the failure of multiple devices, which may lead to performance variability.

- Limitations of sensors in IoT: Because of sensor limitations and uncertain measurements, sensor readings require preprocessing. They induce uncertainty due to (1) missing readings (tag collisions, tag detuning, metal/liquid effect, tag misalignment); (2) inconsistent data: RFID tags can be read by several readers at the same time, so it is possible to obtain inconsistent data about the exact location of tags; (3) ghost data: radio frequencies are sometimes reflected in reading areas, so RFID readers might read those reflections; (4) redundant data: captured data may contain significant amounts of additional information; and (5) incomplete data: tagged objects might be stolen or forged and generate fake data [34].
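The formal definition of authentication uncertainty in the privacy protection item above can be illustrated with a short sketch. The text does not spell out the probability itself, so the sketch assumes one plausible reading: the uncertainty of granting a request is the conditional probability of an Incident outcome given an Access decision, estimated from past observations. The function name `incident_probability`, the history format, and the fallback value of 0.5 are hypothetical choices made for illustration.

```python
from collections import Counter
from typing import Iterable, Tuple

# Decision set D and outcome set O from the definition in the text.
ACCESS, DENY = "Access", "Deny"
SAFE, INCIDENT = "Safe", "Incident"


def incident_probability(history: Iterable[Tuple[str, str]]) -> float:
    """Estimate P(Incident | Access) from (decision, outcome) pairs.

    Assumption: the uncertainty of a request is read as the relative
    frequency of Incident outcomes among requests that were granted.
    With no granted requests observed, 0.5 is returned as a stand-in
    for maximal uncertainty (also an assumption).
    """
    outcomes = Counter(
        outcome for decision, outcome in history if decision == ACCESS
    )
    granted = outcomes[SAFE] + outcomes[INCIDENT]
    if granted == 0:
        return 0.5
    return outcomes[INCIDENT] / granted


# Hypothetical observation log: two of four granted requests ended badly.
history = [
    (ACCESS, SAFE),
    (ACCESS, INCIDENT),
    (DENY, SAFE),
    (ACCESS, SAFE),
    (ACCESS, INCIDENT),
]
print(incident_probability(history))
```

With this hypothetical log, half of the granted requests led to an incident, which corresponds to the 50% case described for user X.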

8.2. Classification of Sources of Uncertainty Based on Layers of IoT Stack

8.3. Proposed Solutions for Dealing with Uncertainty

- Heterogeneity of nodes: The selection of technology and node types is an important factor to consider for IoT infrastructure. Adopting shared standards helps cope with the diversity of devices and applications. Trust relationships need to be adapted at the following levels in order to guide IoT devices to use the most trustworthy information for decision making and to reduce the risk caused by malicious devices: IoT entities; data perception (sensor sensibility, preciseness, security, reliability, persistence, data collection efficiency); privacy preservation (user data and personal information); data aggregation, transmission, and communication; and human–computer interaction.

- Scalability: Service composition mechanisms are used to handle scalability requirements. A service composition mechanism defines a meaningful interaction between services by considering two functional dimensions: control flow and data flow. Control flow refers to the order in which interactions occur, and data flow defines how data are moved among services (the behavior of the workflow). As the number of devices increases, a data processing pipeline is required; it consists of a set of data and functions, chosen according to the IoT application, that can be applied to the data streams transferred between nodes. The system must be easy to expand according to future needs [38].

- Reliability: Several mechanisms are used to improve reliability and minimize the risks of data loss, distortion, and security violation. Ensuring that information generated by IoT is precise, authentic, up to date, and complete is very important. Comprehensive approaches are used to detect possible errors in the design phase of the system to avoid uncertainty due to partial information. Replication is another solution that helps to ensure data reliability, but it requires applying energy-efficient cryptographic algorithms, error correction codes, access structures, secret sharing schemes, etc. However, applying these techniques may increase data storage cost, load, processing for encryption and decryption, the transmission of secret keys, etc. [9].

- Privacy protection: Possibility theory and probability theory were discussed earlier. Probability theory was chosen to define and handle uncertainty in authentication because of IoT challenges such as scalability and the need for low complexity. Privacy protection can be handled by “trust” analysis methods in order to design lightweight security protocols and efficient cryptographic algorithms. Prediction models can also be used in trust analysis. Supervised machine learning algorithms (classification algorithms) may be applied to the dataset in order to build prediction models. For instance, authentication requests are classified into the “Access” or “Deny” class. Afterward, a behavior-based analysis algorithm checks the history profile of the user to improve the accuracy of the prediction models [43].

- Simulation and modeling uncertainty in IoT: Creating simulations and models of uncertainty phenomena is a big challenge in IoT [33]. Numerical or statistical model checking at runtime helps evaluate system properties and select a configuration that complies with self-adaptation and manages uncertainty in different IoT system components. For example, noise uncertainty severely degrades performance; in [45], the authors proposed a noise detector for IoT systems, which opens the door to techniques that improve power control and sensing accuracy in IoT devices.

- Legal regulation and standardization: Legal regulation, standardization, and security policies need to be implemented to overcome ethical and legal issues related to IoT. For example, in the data aggregation process, the relevant types of standards are technology standards (including network protocols, communication protocols, and data-aggregation standards) and regulatory standards (related to security and privacy of data).

- Transmission technology: In wireless data transfer, a large sample size requires more energy and bandwidth and increases latency. For instance, in WSN, traditional data compression techniques are not suitable, especially in dense networks. Avoiding oversampling beyond network capacity is necessary in a WSN to prevent excessive data transmission from sensors. Techniques that control transmission scheduling and queuing and that manage delay constraints need to be implemented for data transmission in IoT [39].

- Data management: In IoT, data management is handled by a layer between objects and devices that generate the data. Raw data or aggregated data will be transmitted via the network layer to data repositories. Users have access to these repositories to acquire the data [46].

- Optimum energy use: In most IoT applications, the energy consumption ratio is high. For instance, to reduce energy consumption, active/sleep scheduling algorithms are adopted to extend IoT network lifetime. Other techniques for efficient energy consumption include low-power radio, the use of self-sustaining sensors, network protocols that generate low traffic rates, and content caching [47].
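The effect of active/sleep scheduling on node lifetime, mentioned in the last item above, can be sketched with the usual duty-cycle approximation: the average current is the time-weighted mix of active and sleep current, and lifetime is battery capacity divided by that average. This is a minimal sketch and not the protocol of [47]; the function names and all numeric values are hypothetical, and effects such as wake-up overhead and battery self-discharge are ignored.

```python
def average_current_ma(duty_cycle: float, active_ma: float, sleep_ma: float) -> float:
    """Time-weighted average current draw of a duty-cycled node, in mA.

    duty_cycle is the fraction of time the node is active (0.0 to 1.0).
    """
    if not 0.0 <= duty_cycle <= 1.0:
        raise ValueError("duty_cycle must be between 0 and 1")
    return duty_cycle * active_ma + (1.0 - duty_cycle) * sleep_ma


def lifetime_hours(capacity_mah: float, duty_cycle: float,
                   active_ma: float, sleep_ma: float) -> float:
    """Idealized battery lifetime in hours: capacity / average current."""
    avg = average_current_ma(duty_cycle, active_ma, sleep_ma)
    if avg <= 0.0:
        raise ValueError("average current must be positive")
    return capacity_mah / avg


# Hypothetical node: 2000 mAh battery, 20 mA active, 0.02 mA asleep.
always_on = lifetime_hours(2000.0, 1.0, 20.0, 0.02)
one_percent = lifetime_hours(2000.0, 0.01, 20.0, 0.02)
print(f"always on: {always_on:.0f} h, 1% duty cycle: {one_percent:.0f} h")
```

Under these assumed figures, lowering the duty cycle lengthens the estimated lifetime because the sleep current is far smaller than the active current, which is the motivation for active/sleep scheduling.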

9. Conclusions and Future Research

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability

Conflicts of Interest

References

- Oreizy, P.; Gorlick, M.M.; Taylor, R.N.; Heimbigner, D.; Johnson, G.; Medvidovic, N.; Quilici, A.; Rosenblum, D.S.; Wolf, A.L. An architecture-based approach to self-adaptive software. In IEEE Intelligent Systems and Their Applications; IEEE: Piscataway, NJ, USA, 1999.

- Shevtsov, S.; Weyns, D.; Maggio, M. SimCA*: A Control-theoretic Approach to Handle Uncertainty in Self-adaptive Systems with Guarantees. ACM Trans. Auton. Adapt. Syst. 2019, 19, 1–34.

- Salehie, M.; Tahvildari, L. Self-adaptive software: Landscape and research challenges. ACM Trans. Auton. Adapt. Syst. 2009, 4, 1–42.

- Macías-Escrivá, F.D.; Haber, R.; Del Toro, R.; Hernandez, V. Self-adaptive systems: A survey of current approaches, research challenges and applications. Expert Syst. Appl. 2013, 40, 7267–7279.

- Weyns, D. Engineering self-adaptive software systems–An organized tour. In Proceedings of the 2018 IEEE 3rd International Workshops on Foundations and Applications of Self* Systems (FAS*W), Trento, Italy, 3–7 September 2018; pp. 1–2.

- Brun, Y.; Serugendo, G.D.; Gacek, C.; Giese, H.; Kienle, H.; Litoiu, M.; Müller, H.; Pezzè, M.; Shaw, M. Engineering self-adaptive systems through feedback loops. In Software Engineering for Self-Adaptive Systems 2009; Springer: Berlin/Heidelberg, Germany, 2009.

- Iftikhar, M.U.; Weyns, D. ActivFORMS: Active formal models for self-adaptation. In Proceedings of the 9th International Symposium on Software Engineering for Adaptive and Self-Managing Systems, Hyderabad, India, 2 June 2014; pp. 125–134.

- Andersson, J.; De Lemos, R.; Malek, S.; Weyns, D. Modeling dimensions of self-adaptive software systems. In Software Engineering for Self-Adaptive Systems; Springer: Berlin/Heidelberg, Germany, 2009; pp. 27–47.

- Tchernykh, A.; Babenko, M.; Chervyakov, N.; Miranda-López, V.; Avetisyan, A.; Drozdov, A.Y.; Rivera-Rodriguez, R.; Radchenko, G.; Du, Z. Scalable Data Storage Design for Nonstationary IoT Environment With Adaptive Security and Reliability. IEEE Internet Things J. 2020, 7, 10171–10188.

- Schmerl, B.; Kazman, R.; Ali, N.; Grundy, J.; Mistrik, I. Managing trade-offs in adaptable software architectures. In Managing Trade-Offs in Adaptable Software Architectures; Morgan Kaufmann: Burlington, MA, USA, 2017; pp. 1–13.

- Sawyer, P.; Bencomo, N.; Whittle, J.; Letier, E.; Finkelstein, A. Requirements-aware systems: A research agenda for RE for self-adaptive systems. In Proceedings of the 2010 18th IEEE International Requirements Engineering Conference, Sydney, NSW, Australia, 27 September–1 October 2010.

- Chen, S.; Xu, H.; Liu, D.; Hu, B.; Wang, H. A vision of IoT: Applications, challenges, and opportunities with China perspective. IEEE Internet Things J. 2014, 1, 349–359.

- Mahdavi-Hezavehi, S.; Avgeriou, P.; Weyns, D. A classification framework of uncertainty in architecture-based self-adaptive systems with multiple quality requirements. In Managing Trade-Offs in Adaptable Software Architectures; Morgan Kaufmann: Burlington, MA, USA, 2017; pp. 45–77.

- Esfahani, N. Management of Uncertainty in Self-Adaptive Software. Ph.D. Dissertation, George Mason University, Fairfax, VA, USA, 2014.

- Garlan, D. Software engineering in an uncertain world. In Proceedings of the FSE/SDP Workshop on Future of Software Engineering Research, Santa Fe, NM, USA, 7 November 2010; pp. 125–128.

- Esfahani, N.; Malek, S. Uncertainty in self-adaptive software systems. In Software Engineering for Self-Adaptive Systems II; Springer: Berlin/Heidelberg, Germany, 2013; pp. 214–238.

- Esfahani, N.; Kouroshfar, E.; Malek, S. Taming uncertainty in self-adaptive software. In Proceedings of the 19th ACM SIGSOFT Symposium and the 13th European Conference on Foundations of Software Engineering, Szeged, Hungary, 5–9 September 2011; pp. 234–244.

- Weyns, D.; Malek, S.; Andersson, J. FORMS: A formal reference model for self-adaptation. In Proceedings of the 7th International Conference on Autonomic Computing, Washington, DC, USA, 7–11 June 2010; pp. 205–214.

- Aughenbaugh, J.M. Managing Uncertainty in Engineering Design Using Imprecise Probabilities and Principles of Information Economics. Ph.D. Thesis, Georgia Institute of Technology, Atlanta, GA, USA, 2006.

- Bertsekas, D.P.; Tsitsiklis, J.N. Introduction to Probability, 2nd ed.; American Mathematical Society: Providence, RI, USA, 2008.

- Hoff, P.D. A First Course in Bayesian Statistical Methods; Springer: New York, NY, USA, 2009.

- Zadeh, L.A. Fuzzy sets as a basis for a theory of possibility. Fuzzy Sets Syst. 1978, 1, 3–28.

- Garlan, D.; Cheng, S.W.; Huang, A.C.; Schmerl, B.; Steenkiste, P. Rainbow: Architecture-based self-adaptation with reusable infrastructure. Computer 2004, 10, 46–54.

- Poladian, V.; Sousa, J.P.; Garlan, D.; Shaw, M. Dynamic configuration of resource-aware services. In Proceedings of the 26th International Conference on Software Engineering, Edinburgh, UK, 28 May 2004; pp. 604–613.

- Poladian, V.; Garlan, D.; Shaw, M.; Satyanarayanan, M.; Schmerl, B.; Sousa, J. Leveraging resource prediction for anticipatory dynamic configuration. In Proceedings of the First International Conference on Self-Adaptive and Self-Organizing Systems (SASO 2007), Cambridge, MA, USA, 9–11 July 2007; pp. 214–223.

- Narayanan, D.; Satyanarayanan, M. Predictive resource management for wearable computing. In Proceedings of the 1st International Conference on Mobile Systems, Applications and Services, San Francisco, CA, USA, 5 May 2003; pp. 113–128.

- Elkhodary, A.; Esfahani, N.; Malek, S. FUSION: A framework for engineering self-tuning self-adaptive software systems. In Proceedings of the Eighteenth ACM SIGSOFT International Symposium on Foundations of Software Engineering, Santa Fe, NM, USA, 7 November 2010; pp. 7–16.

- Cooray, D.; Malek, S.; Roshandel, R.; Kilgore, D. RESISTing reliability degradation through proactive reconfiguration. In Proceedings of the IEEE/ACM International Conference on Automated Software Engineering, Antwerp, Belgium, 20–24 September 2010; pp. 83–92.

- Whittle, J.; Sawyer, P.; Bencomo, N.; Cheng, B.H.; Bruel, J.M. RELAX: Incorporating uncertainty into the specification of self-adaptive systems. In Proceedings of the 2009 17th IEEE International Requirements Engineering Conference, Atlanta, GA, USA, 31 August–4 September 2009; pp. 79–88.

- Cheng, B.H.; Sawyer, P.; Bencomo, N.; Whittle, J. A goal-based modeling approach to develop requirements of an adaptive system with environmental uncertainty. In International Conference on Model Driven Engineering Languages and Systems; Springer: Berlin/Heidelberg, Germany, 2009; pp. 468–483.

- Fredericks, E.M.; DeVries, B.; Cheng, B.H. AutoRELAX: Automatically RELAXing a goal model to address uncertainty. Empir. Softw. Eng. 2014, 19, 1466–1501.

- Baresi, L.; Pasquale, L.; Spoletini, P. Fuzzy goals for requirements-driven adaptation. In Proceedings of the 2010 18th IEEE International Requirements Engineering Conference, Sydney, NSW, Australia, 27 September–1 October 2010; pp. 125–134.

- Iftikhar, M.U.; Ramachandran, G.S.; Bollansée, P.; Weyns, D.; Hughes, D. DeltaIoT: A self-adaptive internet of things exemplar. In Proceedings of the 2017 IEEE/ACM 12th International Symposium on Software Engineering for Adaptive and Self-Managing Systems (SEAMS), Buenos Aires, Argentina, 22 May 2017; pp. 76–82.

- Weyns, D.; Ramachandran, G.S.; Singh, R.K. Self-managing internet of things. In International Conference on Current Trends in Theory and Practice of Informatics; Springer: Berlin/Heidelberg, Germany, 2018.

- Magruk, A. The most important aspects of uncertainty in the Internet of Things field–context of smart buildings. Procedia Eng. 2015, 122, 220–227.

- Van Der Donckt, M.J.; Weyns, D.; Iftikhar, M.U.; Singh, R.K. Cost-Benefit Analysis at Runtime for Self-adaptive Systems Applied to an Internet of Things Application. In Proceedings of the 13th International Conference on Evaluation of Novel Approaches to Software Engineering, Madeira, Portugal, 23–24 March 2018; pp. 478–490.

- Sarkar, C.; Nambi, S.A.; Prasad, R.V.; Rahim, A. A scalable distributed architecture towards unifying IoT applications. In Proceedings of the 2014 IEEE World Forum on Internet of Things (WF-IoT), Seoul, Korea, 6–8 March 2014; pp. 508–513.

- Arellanes, D.; Lau, K.K. Evaluating IoT service composition mechanisms for the scalability of IoT systems. Future Gener. Comput. Syst. 2020, 108, 827–848.

- Mal-Sarkar, S.; Iftikhar, U.; Sikder, C.Y.; Vijay, K.K. Uncertainty-aware wireless sensor networks. Int. J. Mob. Commun. 2009, 7, 330–345.

- Vepsäläinen, J.; Ritari, A.; Lajunen, A.; Kivekäs, K.; Tammi, K. Energy uncertainty analysis of electric buses. Energies 2018, 11, 3267.

- Tissaoui, A.; Saidi, M. Uncertainty in IoT for Smart Healthcare: Challenges, and Opportunities. In International Conference on Smart Homes and Health Telematics; Springer: Cham, Switzerland, 2020; pp. 232–239.

- Cuzzocrea, A. Uncertainty and Imprecision in Big Data Management: Models, Issues, Paradigms, and Future Research Directions. In Proceedings of the 2020 4th International Conference on Cloud and Big Data Computing, Virtual, UK, 26–28 August 2020; pp. 6–9.

- Heydari, M. Indeterminacy-Aware Prediction Model for Authentication in IoT. Ph.D. Thesis, Bournemouth University, Poole, UK, 2020.

- Nastic, S.; Copil, G.; Truong, H.L.; Dustdar, S. Governing elastic IoT cloud systems under uncertainty. In Proceedings of the 2015 IEEE 7th International Conference on Cloud Computing Technology and Science (CloudCom), Vancouver, BC, Canada, 30 November–3 December 2015; pp. 131–138.

- Yao, J.; Jin, M.; Guo, Q.; Li, Y.; Xi, J. Effective energy detection for IoT systems against noise uncertainty at low SNR. IEEE Internet Things J. 2018, 6, 6165–6176.

- Diène, B.; Rodrigues, J.J.; Diallo, O.; Ndoye, E.H.; Korotaev, V.V. Data management techniques for Internet of Things. Mech. Syst. Signal Process. 2020, 138, 106564.

- Shah, B.; Abbas, A.; Ali, G.; Iqbal, F.; Khattak, A.M.; Alfandi, O.; Kim, K.I. Guaranteed lifetime protocol for IoT based wireless sensor networks with multiple constraints. Ad Hoc Netw. 2020, 104, 102158.

| Source of Uncertainty | Location: Structural | Location: Context | Location: Input Parameter | Nature: Aleatory | Nature: Epistemic |
|---|---|---|---|---|---|
| Simplifying assumptions |  |  |  |  |  |
| Model drift |  |  |  |  |  |
| Noise in sensing |  |  |  |  |  |
| Human in the loop |  |  |  |  |  |
| Objectives |  |  |  |  |  |
| Decentralization |  |  |  |  |  |
| Execution context |  |  |  |  |  |

| Source of Uncertainty | Application Layer | Middleware Layer | Network Layer | Perception Layer |
|---|---|---|---|---|
| Dynamics in the availability of resources (internal dynamics) |  |  |  |  |
| Dynamics in the environment (external dynamics) |  |  |  |  |
| Heterogeneity of edge nodes |  |  |  |  |
| Scalability |  |  |  |  |
| Transmission technology |  |  |  |  |
| Optimum energy use |  |  |  |  |
| User configuration capabilities |  |  |  |  |
| Data management |  |  |  |  |
| Reliability |  |  |  |  |
| Privacy protection |  |  |  |  |
| Security |  |  |  |  |
| Legal regulatory compliance |  |  |  |  |
| Limitations of sensors |  |  |  |  |

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Ismail, S.; Shah, K.; Reza, H.; Marsh, R.; Grant, E. Toward Management of Uncertainty in Self-Adaptive Software Systems: IoT Case Study. Computers 2021, 10, 27. https://0-doi-org.brum.beds.ac.uk/10.3390/computers10030027