Can We Trust Edge Computing Simulations? An Experimental Assessment

Abstract

1. Introduction

- implementation of EdgeBench, a reference benchmark in EC, in a simulation environment using the FogComputingSim tool, as a proof of concept that simulators can be useful in setting up EC environments;

- comparison of real-world and simulated implementations.

2. Related Work

3. Experimental Setup

3.1. Methodology

3.2. The EdgeBench Benchmark

- “Time_in_flight” is the time spent sending data from the Raspberry Pi to the cloud.

- “IoT_Hub_time” is the time spent by the cloud storing the results of the computation of each task in an application’s workload.

- “Compute_time” corresponds to the time spent computing each task in question.

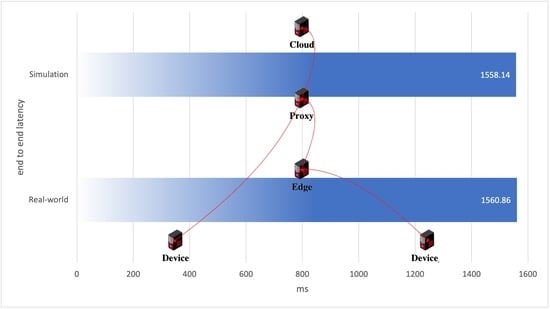

- “End_to_end_latency” is the total time spent solving the proposed problem, i.e., the sum of all times (sending, computing, and storing the result).

3.3. Real-World Environment

3.4. Simulation Environment

4. Experimental Evaluation

4.1. Metrics

- Time_in_flight (ms): time spent sending data from the Edge device (Raspberry Pi) to the cloud;

- IoT_Hub_time (ms): time spent within the cloud to save the results;

- End_to_end_time (ms): sum of “Time_in_flight” and “IoT_Hub_time”;

- Compute_time (ms): time spent computing the data;

- Payloadsize (bytes): size of the files uploaded to the cloud. If the computation runs on the Raspberry Pi, only the results file is sent; if it runs in the cloud, the full data file is sent;

- End_to_end_latency (ms): total time spent, i.e., the sum of “End_to_end_time” and “Compute_time”.
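The two derived metrics in this list are plain sums of the measured ones. The following is a minimal sketch of that arithmetic, not code from EdgeBench or FogComputingSim; the `Sample` class, its field names, and the input values are hypothetical and chosen only for illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Sample:
    """One measurement; fields mirror the measured metrics (all in ms)."""
    time_in_flight: float
    iot_hub_time: float
    compute_time: float

    @property
    def end_to_end_time(self) -> float:
        # End_to_end_time = Time_in_flight + IoT_Hub_time
        return self.time_in_flight + self.iot_hub_time

    @property
    def end_to_end_latency(self) -> float:
        # End_to_end_latency = End_to_end_time + Compute_time
        return self.end_to_end_time + self.compute_time


# Hypothetical values, not taken from the experiments.
sample = Sample(time_in_flight=35.0, iot_hub_time=570.0, compute_time=11.0)
print(sample.end_to_end_time, sample.end_to_end_latency)
```

Keeping the derived values as properties means they can never drift out of sync with the measured fields.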

4.2. Benchmark Comparison

4.3. Work Limitations

- Despite our changes to FogComputingSim to allow for some network fluctuations, it is not a network simulator and therefore ignores most network effects, which leads to minor differences in data transmission. Because network effects are a motivation for offloading in the first place, we took this limitation into account when assessing the results.

- The use of a single benchmark and setup limits the conclusions that can be drawn about the overall validity of the simulator. While it gives some clear indications, further tests and setups are needed for a clearer assessment.

5. Conclusions and Future Work

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest


| Device | CPU (MIPS) | RAM (MB) | Storage (MB) |
|---|---|---|---|
| Cloud | 180,000 | 64 | 12,288 |
| Proxy server | 100 | 100 | 0 |
| Raspberry Pi | 10,000 | 1024 | 4096 |

| Dataset | Dependencies | Processing Cost (MIPS) | Data Size (Bytes) |
|---|---|---|---|
| Audio | Raw Data | 2650 | 84,852 |
| Audio | Processed Data | 5200 | 162 |
| Image | Raw Data | 800 | 131,707 |
| Image | Processed Data | 5300 | 750 |
| Scalar | Raw Data | 30 | 240 |
| Scalar | Processed Data | 5100 | 233 |
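The data sizes in the dataset table show how much the payload shrinks when processing happens on the edge device before upload. The sketch below computes that reduction factor per dataset; the sizes are copied from the table, while the dictionary layout and the `payload_reduction` helper are hypothetical names introduced here for illustration.

```python
# Data sizes in bytes, copied from the dataset table above.
DATA_SIZE_BYTES = {
    "Audio": {"raw": 84_852, "processed": 162},
    "Image": {"raw": 131_707, "processed": 750},
    "Scalar": {"raw": 240, "processed": 233},
}


def payload_reduction(raw: int, processed: int) -> float:
    """Return how many times smaller the processed payload is than the raw one."""
    if processed <= 0:
        raise ValueError("processed size must be positive")
    return raw / processed


reductions = {
    name: payload_reduction(sizes["raw"], sizes["processed"])
    for name, sizes in DATA_SIZE_BYTES.items()
}
for name, factor in reductions.items():
    print(f"{name}: raw payload is {factor:.1f}x the processed payload")
```

For the scalar dataset the two sizes are nearly equal, so the choice of where to compute barely changes what is transmitted; for audio and images the difference spans orders of magnitude.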

| Benchmark | Environment | Time_in_Flight (ms) | IoT_Hub_Time (ms) | End_to_end_Time (ms) | Compute_Time (ms) | End_to_end_Latency (ms) | Payload Size (Bytes) |
|---|---|---|---|---|---|---|---|
| Scalar | Edge | 34.82 | 569.05 | 603.87 | 10.92 | 614.78 | 234.00 |
| Scalar | SimEdge | 32.27 | 540.27 | 572.53 | 15.69 | 588.22 | |
| Scalar | Cloud | 0.00 | 0.00 | 533.55 | 0.00 | 533.55 | 238.99 |
| Scalar | SimCloud | 32.63 | 679.48 | 475.64 | 0.00 | 475.64 | |
| Images | Edge | 34.52 | 610.11 | 644.64 | 242.72 | 887.35 | 751.35 |
| Images | SimEdge | 35.27 | 574.68 | 609.95 | 280.34 | 890.29 | |
| Images | Cloud | 0.00 | 0.00 | 544.97 | 162.66 | 707.63 | 131,707.42 |
| Images | SimCloud | 33.93 | 547.75 | 581.69 | 195.05 | 776.74 | |
| Audio | Edge | 35.94 | 588.11 | 624.04 | 4739.11 | 5363.15 | 162.07 |
| Audio | SimEdge | 26.23 | 626.91 | 653.14 | 4640.72 | 5293.86 | |
| Audio | Cloud | 0.00 | 0.00 | 542.05 | 716.63 | 1258.68 | 84,853.85 |
| Audio | SimCloud | 31.30 | 523.74 | 555.04 | 769.02 | 1324.06 | |
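One simple way to read the results table above is to express each simulated mean End_to_end_Latency as a signed percentage deviation from its real-world counterpart. The sketch below does this with the mean values copied from the table; the choice of relative deviation as the comparison measure, and the names `MEAN_LATENCY_MS` and `relative_deviation_pct`, are assumptions made here for illustration and are not necessarily the comparison used in the paper.

```python
# Mean End_to_end_Latency (ms) from the results table: (real, simulated).
MEAN_LATENCY_MS = {
    ("Scalar", "Edge"): (614.78, 588.22),
    ("Scalar", "Cloud"): (533.55, 475.64),
    ("Images", "Edge"): (887.35, 890.29),
    ("Images", "Cloud"): (707.63, 776.74),
    ("Audio", "Edge"): (5363.15, 5293.86),
    ("Audio", "Cloud"): (1258.68, 1324.06),
}


def relative_deviation_pct(real: float, simulated: float) -> float:
    """Signed deviation of the simulated value from the real one, in percent."""
    return (simulated - real) / real * 100.0


deviations = {
    key: relative_deviation_pct(real, simulated)
    for key, (real, simulated) in MEAN_LATENCY_MS.items()
}
for (benchmark, environment), deviation in deviations.items():
    print(f"{benchmark:6s} {environment:5s} {deviation:+.2f}%")
```

A negative value means the simulation underestimates the measured latency; a positive value means it overestimates it.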

End_to_end_Latency (ms):

| Benchmark | Environment | Minimum | Quartile 25% | Median | Quartile 75% | Maximum | Average | Std. Dev. |
|---|---|---|---|---|---|---|---|---|
| Scalar | Edge | 99.57 | 383.89 | 630.23 | 833.07 | 1179.98 | 614.78 | 277.78 |
| Scalar | SimEdge | 442.27 | 510.21 | 574.10 | 640.69 | 1083.18 | 588.22 | 123.51 |
| Scalar | Cloud | 28.33 | 307.46 | 533.75 | 767.12 | 1032.22 | 533.55 | 286.72 |
| Scalar | SimCloud | 201.95 | 251.13 | 321.65 | 622.05 | 1054.56 | 475.64 | 274.95 |
| Images | Edge | 293.92 | 598.59 | 878.80 | 1112.32 | 7583.10 | 887.35 | 548.48 |
| Images | SimEdge | 474.62 | 558.39 | 627.11 | 1370.62 | 1830.72 | 890.29 | 469.38 |
| Images | Cloud | 153.73 | 451.90 | 720.86 | 951.60 | 3327.76 | 707.63 | 332.92 |
| Images | SimCloud | 136.30 | 609.89 | 828.40 | 948.67 | 1174.24 | 776.74 | 239.76 |
| Audio | Edge | 2382.31 | 4245.45 | 4819.48 | 5780.76 | 19426.96 | 5363.15 | 2530.41 |
| Audio | SimEdge | 1240.63 | 4162.39 | 4895.30 | 6877.90 | 9530.80 | 5293.86 | 2051.92 |
| Audio | Cloud | 350.95 | 930.48 | 1230.97 | 1515.55 | 3126.76 | 1258.68 | 489.23 |
| Audio | SimCloud | 698.44 | 981.00 | 1255.43 | 1704.40 | 2021.79 | 1324.06 | 407.09 |
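The columns of the latency statistics table above can be produced from a list of per-run latencies with Python's standard `statistics` module. This is a minimal sketch under stated assumptions: the quartile method (`inclusive`) and the use of the sample standard deviation are choices made here, since the source does not say which definitions were used, and the input list is hypothetical rather than experimental data.

```python
import statistics


def summarize(latencies_ms):
    """Return the summary columns of the table for one list of latencies (ms)."""
    # quantiles(..., n=4) returns the three quartile cut points.
    q25, median, q75 = statistics.quantiles(latencies_ms, n=4, method="inclusive")
    return {
        "min": min(latencies_ms),
        "q25": q25,
        "median": median,
        "q75": q75,
        "max": max(latencies_ms),
        "mean": statistics.fmean(latencies_ms),
        # Sample standard deviation (n - 1 in the denominator); an assumption.
        "stdev": statistics.stdev(latencies_ms),
    }


# Hypothetical latencies, not taken from the experiments.
samples = [100.0, 200.0, 300.0, 400.0, 500.0]
summary = summarize(samples)
for name, value in summary.items():
    print(f"{name}: {value:.2f}")
```

Comparing the spread (quartiles and standard deviation) as well as the mean matters here, because a simulator can match the average latency while producing a much narrower or wider distribution than the real system.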

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Carvalho, G.; Magalhães, F.; Cabral, B.; Pereira, V.; Bernardino, J. Can We Trust Edge Computing Simulations? An Experimental Assessment. Computers 2022, 11, 90. https://0-doi-org.brum.beds.ac.uk/10.3390/computers11060090
