Understanding the Emotional Impact of GIFs on Instagram through Consumer Neuroscience

Abstract

1. Introduction

2. Literature Background

2.1. Conceptualization of Emotion Assessment

2.2. Sentiment Analysis and Emotional Engagement in Social Media

2.3. Neuroscience at the Service of the Study of Emotions: Implicit and Explicit Measures

2.4. The Use of GIFs in Social Media

3. Research Questions and Hypotheses

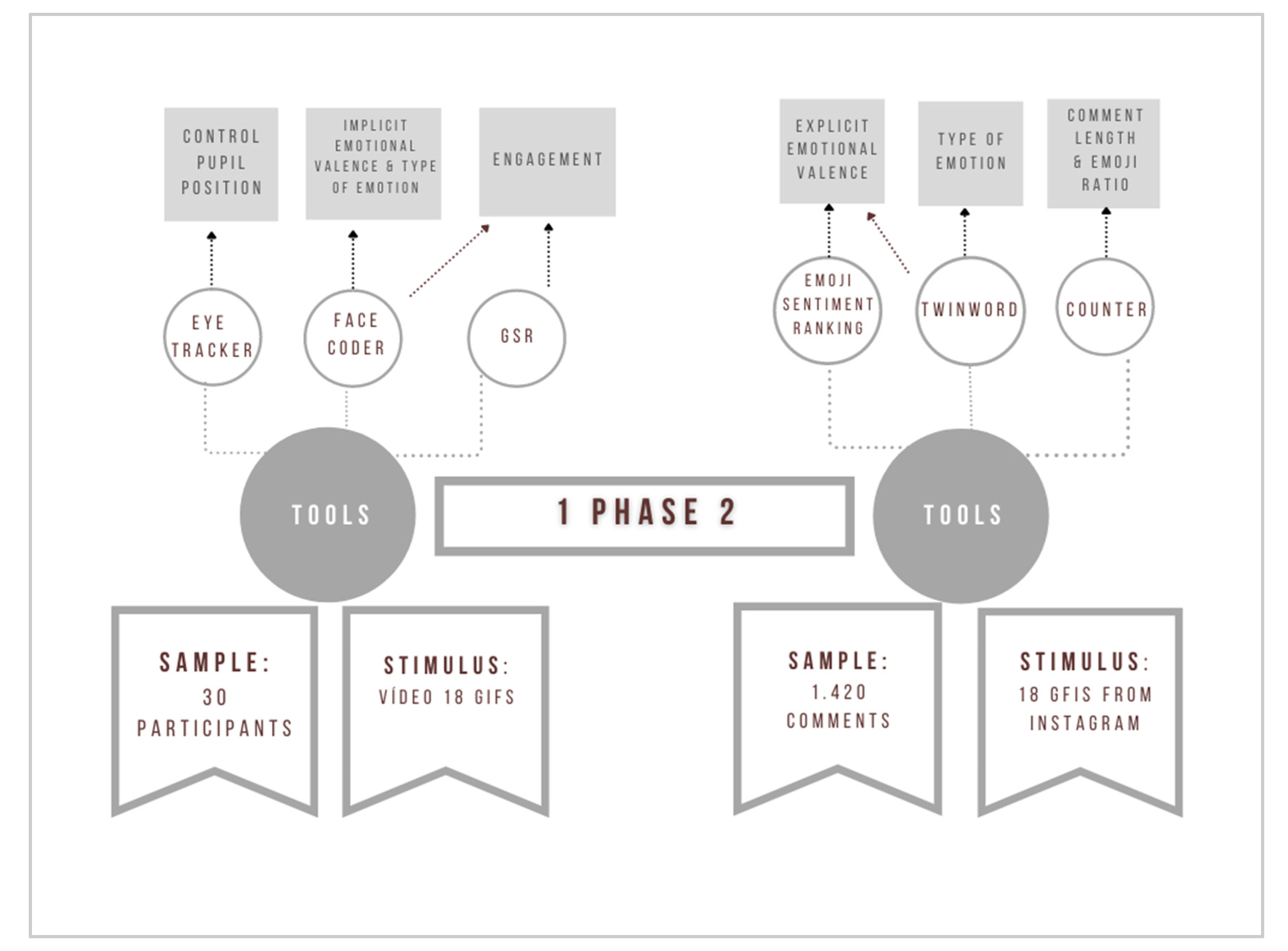

4. Materials, Methods and Results

4.1. Phase 1. Experimental Study of Neuromarketing Applied to GIFs

4.1.1. Participants

4.1.2. Stimuli

4.1.3. Devices

4.1.4. Measuring Tools

4.1.5. Data Analysis

4.1.6. Ethical Issues

4.1.7. Procedure

4.1.8. Results

4.2. Phase 2. Sentiment Analysis of GIF Content on Instagram

4.2.1. Stimuli

4.2.2. Measuring Tools

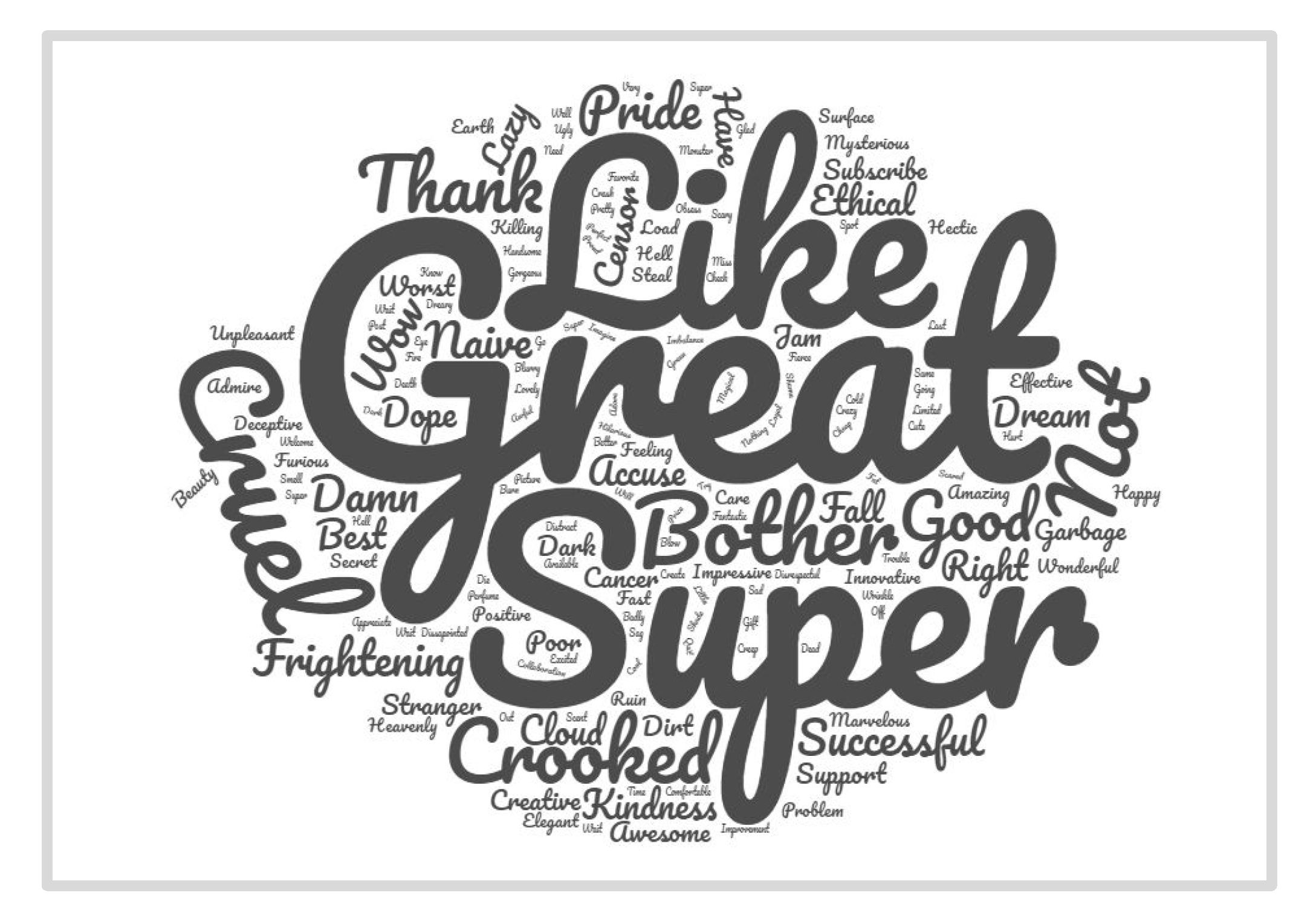

4.2.3. Procedure and Data Analysis

- (1) Compilation of comments on each GIF
- (2) Analysis of the explicit emotionality of each GIF and calculation of the variable VEE (Table 3)
- (3) Analysis of the composition of comments and calculation of the variables LgC and Pemj (Table 3)
- (4) Analysis of the differences between explicit and implicit emotionality and calculation of the variable VD (Table 3)

4.2.4. Results

Implicit Measure of Valence Versus Explicit Measure of Valence

Differences between Implicit and Explicit Measures of Emotional Valence for Each GIF

- (1) Relation between Valence Difference (VD) and Engagement (Eg)
- (2) Relation between Valence Difference (VD) and Comment Length (LgC)
- (3) Relation between Valence Difference (VD) and the Proportion of Emojis (Pemj)

5. Discussion

6. Implications of the Work

7. Limitations and Future Lines of Research

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

| Variable | Mean | Standard Deviation | Standard Error |
|---|---|---|---|
| Implicit emotional valence (VIE) | 0.1480 | 0.0356 | 0.0084 |
| Engagement (Eg) | 0.0025 | 0.0309 | 0.0073 |

| GIF | Happiness | Surprise | Anger | Disgust | Fear | Sadness |
|---|---|---|---|---|---|---|
| GIF1 | 0.0307 | 0.005 | 0.0914 | 0.0487 | 0.0097 | 0.1062 |
| GIF2 | 0.0579 | 0.005 | 0.0903 | 0.0310 | 0.0119 | 0.1499 |
| GIF3 | 0.0475 | 0.0121 | 0.0852 | 0.0193 | 0.0198 | 0.1304 |
| GIF4 | 0.0414 | 0.0042 | 0.083 | 0.0272 | 0.0059 | 0.1455 |
| GIF5 | 0.029 | 0.047 | 0.0793 | 0.0092 | 0.0162 | 0.1263 |
| GIF6 | 0.0415 | 0.0536 | 0.0827 | 0.0169 | 0.0093 | 0.1242 |
| GIF7 | 0.0459 | 0.0705 | 0.0649 | 0.0121 | 0.0053 | 0.123 |
| GIF8 | 0.0519 | 0.0628 | 0.0977 | 0.0200 | 0.0096 | 0.1499 |
| GIF9 | 0.0371 | 0.0059 | 0.0628 | 0.0391 | 0.0078 | 0.1013 |
| GIF10 | 0.0528 | 0.0008 | 0.0802 | 0.0238 | 0.0076 | 0.1395 |
| GIF11 | 0.0398 | 0.0665 | 0.0722 | 0.0206 | 0.0322 | 0.1245 |
| GIF12 | 0.0409 | 0.0383 | 0.0800 | 0.0242 | 0.0097 | 0.1415 |
| GIF13 | 0.0576 | 0.0027 | 0.0506 | 0.0189 | 0.0056 | 0.1445 |
| GIF14 | 0.0455 | 0.0029 | 0.0884 | 0.0428 | 0.0039 | 0.1305 |
| GIF15 | 0.0353 | 0.0129 | 0.0778 | 0.0361 | 0.0086 | 0.1136 |
| GIF16 | 0.0394 | 0.0343 | 0.0644 | 0.0083 | 0.0069 | 0.1248 |
| GIF17 | 0.0184 | 0.0328 | 0.0817 | 0.0290 | 0.0366 | 0.1396 |
| GIF18 | 0.0422 | 0.0152 | 0.1021 | 0.0347 | 0.0082 | 0.1379 |
| Mean | 0.0419 | 0.0263 | 0.0797 | 0.0257 | 0.0119 | 0.1307 |

| Variable | Dimension | Tool |
|---|---|---|
| Implicit Emotional Valence (VIE) | Value of emotion | Face coder |
| Engagement (Eg) | Emotional state | Face coder + GSR |
| Explicit Emotional Valence (VEE) | Value of emotion | Twinword |
| Difference between VIE and VEE (VD) | Difference between valences | VEE − VIE |
| Comment length (LgC) | Number of elements that appear in the comment | Element Counter (own design) |
| Proportion of Emojis (Pemj) | Percentage of emojis over total number of elements in a comment | Element Counter (own design) |
| Type of basic emotion | Identification of basic emotions | Twinword Emotion Analysis API (explicit method); Face coder (implicit method) |
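
The derived variables in the table above (LgC, Pemj and VD) can be illustrated with a minimal Python sketch. It is not the authors' Element Counter, whose implementation is not described here. It assumes that an "element" is either a word or a single emoji, and it uses a rough emoji range rather than the full Unicode emoji definition. The function names `count_elements`, `comment_length`, `emoji_proportion` and `valence_difference` are hypothetical.

```python
import re

# Assumption: approximate emoji ranges (pictographs, emoticons, dingbats).
# This is NOT a complete Unicode emoji definition.
EMOJI = re.compile("[\U0001F300-\U0001FAFF\u2600-\u27BF]")
WORD = re.compile(r"\w+")


def count_elements(comment):
    """Return (number of words, number of emojis) found in a comment."""
    emojis = EMOJI.findall(comment)
    # Replace emojis with spaces so that they are not glued to adjacent words.
    words = WORD.findall(EMOJI.sub(" ", comment))
    return len(words), len(emojis)


def comment_length(comment):
    """LgC: total number of elements (words plus emojis) in the comment."""
    words, emojis = count_elements(comment)
    return words + emojis


def emoji_proportion(comment):
    """Pemj: percentage of emojis over the total number of elements."""
    words, emojis = count_elements(comment)
    total = words + emojis
    return 100.0 * emojis / total if total else 0.0


def valence_difference(vee, vie):
    """VD: explicit valence minus implicit valence (VEE - VIE)."""
    return vee - vie
```

Under these assumptions, a comment such as "so cute 😍😍" has four elements, half of which are emojis.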

| Data Origin | Shapiro–Wilk Statistic | Sig. |
|---|---|---|
| Biometric tool (VIE) | 0.969 | 0.779 |
| Instagram comments (VEE) | 0.943 | 0.323 |

| Data Origin | Average | Standard Deviation | Standard Error |
|---|---|---|---|
| Biometric tool (VIE) | 0.1480 | 0.0356 | 0.0084 |
| Instagram comments (VEE) | 0.4068 | 0.0305 | 0.0072 |
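
As a consistency check, the standard errors in the table above can be reproduced from the standard deviations. The worked example assumes that each mean is computed over n = 18 observations, one per GIF; this sample size is inferred from the 18 GIFs listed in the tables and is not stated alongside this table.

```latex
\mathrm{SE} = \frac{\mathrm{SD}}{\sqrt{n}}, \qquad
\mathrm{SE}_{\mathrm{VIE}} = \frac{0.0356}{\sqrt{18}} \approx 0.0084, \qquad
\mathrm{SE}_{\mathrm{VEE}} = \frac{0.0305}{\sqrt{18}} \approx 0.0072

\overline{\mathrm{VD}} = \overline{\mathrm{VEE}} - \overline{\mathrm{VIE}} = 0.4068 - 0.1480 = 0.2588
```

The last line uses the definition VD = VEE − VIE: the mean of the per-GIF differences equals the difference of the two means.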

| | Engagement (Eg) | Implicit–Explicit Valence Difference (VD) |
|---|---|---|
| Engagement (Eg) | 1 | −0.546 * |
| Sig. | | 0.019 |
| Implicit–Explicit Valence Difference (VD) | −0.546 * | 1 |
| Sig. | 0.019 | |

| | | Percentage of Emojis in the Comment (Pemj) | Explicit–Implicit Valence Difference (VD) |
|---|---|---|---|
| Percentage of emojis in the comment (Pemj) | Pearson’s correlation | 1 | 0.631 * |
| | Sig. (two-tailed) | | 0.005 |
| Explicit–Implicit Valence Difference (VD) | Pearson’s correlation | 0.631 * | 1 |
| | Sig. (two-tailed) | 0.005 | |
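
The correlation coefficients reported above can be related to their significance levels with a short sketch. The code below is illustrative rather than the authors' analysis script: it computes Pearson's r from two equal-length samples and converts a coefficient into the t statistic with n − 2 degrees of freedom. The sample size n = 18 (one observation per GIF) is an assumption, and the function names are hypothetical.

```python
import math


def pearson_r(xs, ys):
    """Pearson's product-moment correlation of two equal-length samples."""
    n = len(xs)
    if n != len(ys) or n < 3:
        raise ValueError("need two samples of equal length with n >= 3")
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    return cov / math.sqrt(var_x * var_y)


def t_statistic(r, n):
    """t statistic for testing r against zero, with n - 2 degrees of freedom."""
    return r * math.sqrt((n - 2) / (1 - r * r))


# Assumed sample size: one observation per GIF.
N_GIFS = 18
t_pemj = t_statistic(0.631, N_GIFS)   # Pemj vs. VD
t_eg = t_statistic(-0.546, N_GIFS)    # Eg vs. VD
```

With n = 18, the two coefficients give t ≈ 3.25 and t ≈ −2.61. Both exceed in absolute value the two-tailed 5% critical value of 2.120 for 16 degrees of freedom, which agrees with the significance levels of 0.005 and 0.019 shown in the tables.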

| | Happiness | Surprise | Anger | Disgust | Fear | Sadness |
|---|---|---|---|---|---|---|
| Biometric tool | 18 | 18 | 18 | 18 | 18 | 18 |
| Instagram comments | 18 | 12 | 0 | 0 | 6 | 1 |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Rúa-Hidalgo, I.; Galmes-Cerezo, M.; Cristofol-Rodríguez, C.; Aliagas, I. Understanding the Emotional Impact of GIFs on Instagram through Consumer Neuroscience. Behav. Sci. 2021, 11, 108. https://0-doi-org.brum.beds.ac.uk/10.3390/bs11080108
