A Method Based on Multi-Network Feature Fusion and Random Forest for Foreign Objects Detection on Transmission Lines

Abstract

1. Introduction

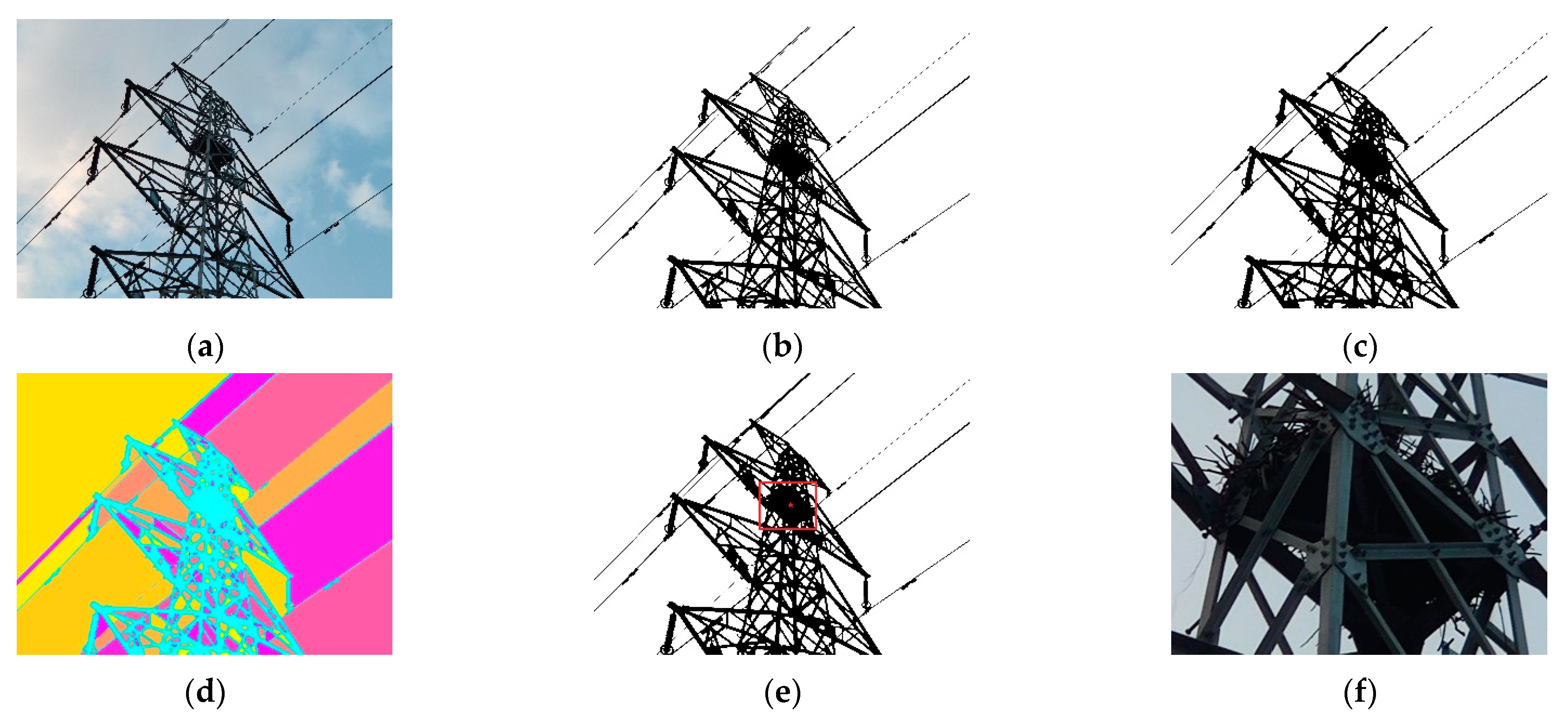

2. Target Region Extraction of Foreign Object Images

- Step 1: Convert the input foreign object image to grayscale and divide the gray range into N levels, i.e., (0, 1, 2, …, N − 1). Gi denotes the number of pixels with gray level i, and the total number of pixels in the image is Gsum = G0 + G1 + … + G(N−1);

- Step 2: Set an initial threshold T. Pixels whose gray levels lie in the interval [0, T] are defined as the target, and pixels in the interval [T + 1, N − 1] are defined as the background;

- Step 3: Calculate the between-class variance g of the target and background according to Equation (1), and vary T to maximize it. The value of T that maximizes g is the optimal threshold for Otsu adaptive threshold segmentation;

- Step 4: Apply a morphological opening to the segmented binary image: the image is first eroded to eliminate noise and then dilated to recover the connected regions, and the different connected regions are labeled with different colors;

- Step 5: For the connected region of interest, compute its centroid and bounding rectangle to mark the target region. Finally, crop the target region from the image.
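The five steps above can be sketched in NumPy as follows. This is a minimal illustration, not the authors' implementation, and it rests on several assumptions: 8-bit grayscale input (N = 256 levels), a 3 × 3 square structuring element for the opening, 4-connectivity, and that the "region of interest" is simply the largest connected region. The helper names (`otsu_threshold`, `binary_open`, `largest_region`, `extract_target`) are hypothetical. The between-class variance is Otsu's standard criterion g = ω0ω1(μ0 − μ1)², where ω0 and ω1 are the fractions of target and background pixels and μ0 and μ1 their mean gray levels; the code evaluates it in an equivalent cumulative form, and the paper's Equation (1) may be written differently.

```python
import numpy as np
from collections import deque


def otsu_threshold(gray: np.ndarray, levels: int = 256) -> int:
    """Steps 1-3: return the T maximizing the between-class variance g.

    Pixels with gray level <= T form the target, the rest the background.
    Assumption: integer gray levels in [0, levels - 1].
    """
    hist = np.bincount(gray.ravel(), minlength=levels).astype(float)  # G_i
    p = hist / hist.sum()                      # G_i / G_sum
    omega0 = np.cumsum(p)                      # fraction of target pixels, levels [0, T]
    mu_t = np.cumsum(p * np.arange(levels))    # first moment of levels [0, T]
    mu_total = mu_t[-1]                        # mean gray level of the whole image
    with np.errstate(divide="ignore", invalid="ignore"):
        # omega0*omega1*(mu0 - mu1)^2 rewritten with cumulative sums
        g = (mu_total * omega0 - mu_t) ** 2 / (omega0 * (1.0 - omega0))
    g = np.nan_to_num(g[:-1])                  # T = levels-1 would leave no background
    return int(np.argmax(g))


def _windows(mask: np.ndarray, k: int):
    """Yield the k*k shifted views of a zero-padded mask (a k x k square window)."""
    r = k // 2
    padded = np.pad(mask, r, mode="constant", constant_values=False)
    h, w = mask.shape
    for dy in range(k):
        for dx in range(k):
            yield padded[dy:dy + h, dx:dx + w]


def binary_open(mask: np.ndarray, k: int = 3) -> np.ndarray:
    """Step 4: erosion (AND over the window), then dilation (OR over the window)."""
    eroded = np.logical_and.reduce(list(_windows(mask, k)))
    return np.logical_or.reduce(list(_windows(eroded, k)))


def largest_region(mask: np.ndarray):
    """Step 5: find 4-connected regions by BFS; return the bounding box
    (top, left, bottom, right; bottom/right exclusive) and centroid of the
    largest one. Assumption: the largest region is the region of interest."""
    h, w = mask.shape
    seen = np.zeros((h, w), dtype=bool)
    best = None
    for y0, x0 in zip(*np.nonzero(mask)):
        if seen[y0, x0]:
            continue
        seen[y0, x0] = True
        queue, ys, xs = deque([(y0, x0)]), [], []
        while queue:
            y, x = queue.popleft()
            ys.append(y)
            xs.append(x)
            for ny, nx in ((y - 1, x), (y + 1, x), (y, x - 1), (y, x + 1)):
                if 0 <= ny < h and 0 <= nx < w and mask[ny, nx] and not seen[ny, nx]:
                    seen[ny, nx] = True
                    queue.append((ny, nx))
        if best is None or len(ys) > len(best[0]):
            best = (ys, xs)
    if best is None:
        return None
    ys, xs = best
    bbox = (int(min(ys)), int(min(xs)), int(max(ys)) + 1, int(max(xs)) + 1)
    return bbox, (float(np.mean(ys)), float(np.mean(xs)))


def extract_target(gray: np.ndarray):
    """Steps 1-5 chained: threshold, open, keep the largest region, crop it."""
    t = otsu_threshold(gray)
    region = largest_region(binary_open(gray <= t))  # target = levels [0, T]
    if region is None:
        return None
    (top, left, bottom, right), _centroid = region
    return gray[top:bottom, left:right]
```

In practice a library routine such as OpenCV's Otsu thresholding and connected-component labeling would replace the hand-written loops; they are spelled out here only to make each step visible.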

3. Foreign Objects Detection Algorithm

3.1. Feature Extraction Network

3.1.1. GoogLeNet

3.1.2. EfficientNet-B0

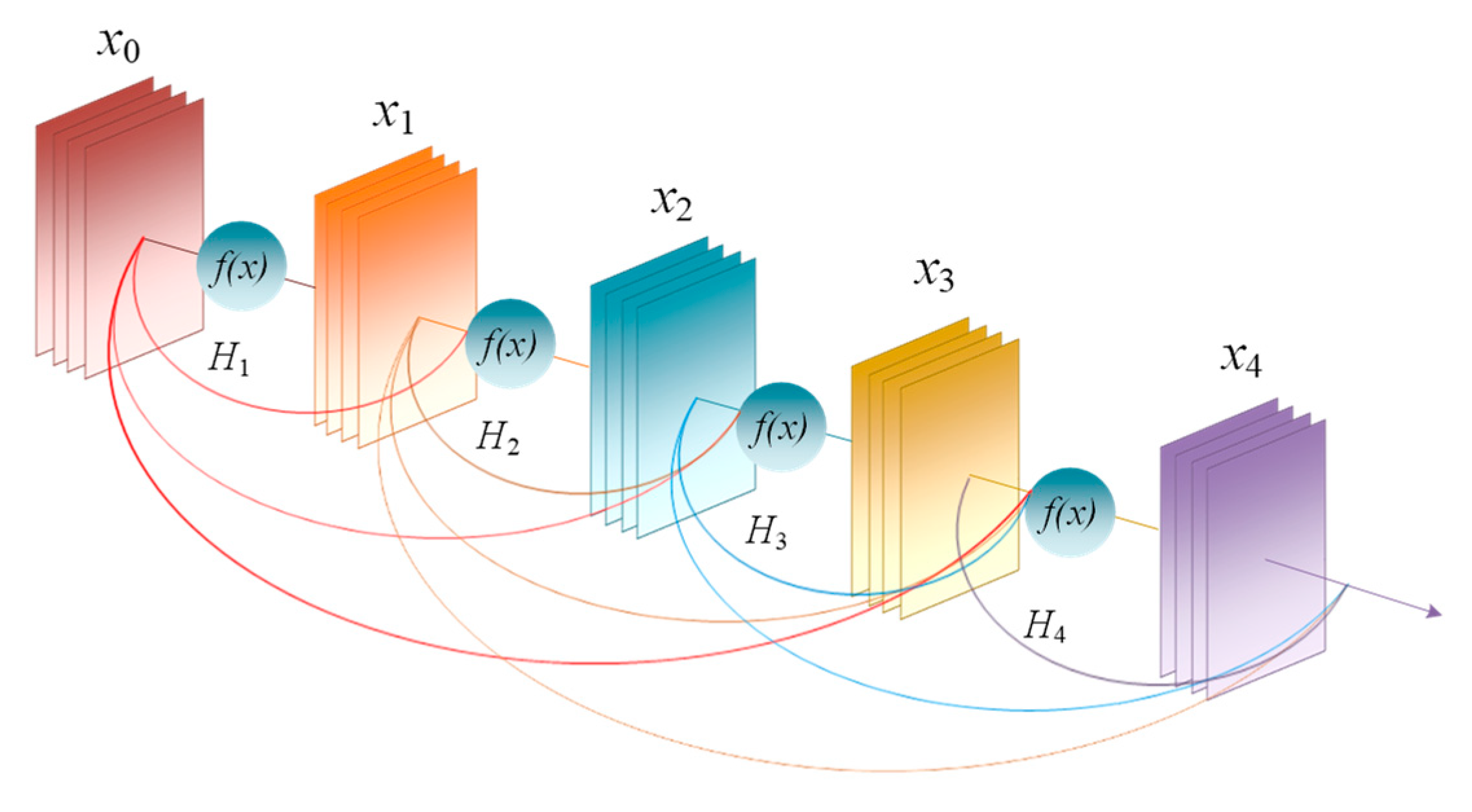

3.1.3. DenseNet-201

3.1.4. ResNet-101

3.1.5. AlexNet

3.2. Multi-Network Feature Fusion

3.3. Random Forest Classifier

4. Simulation and Analysis of Foreign Object Detection on Transmission Lines

4.1. Simulation Environment and Evaluation Indexes

4.2. Simulation Results and Analysis

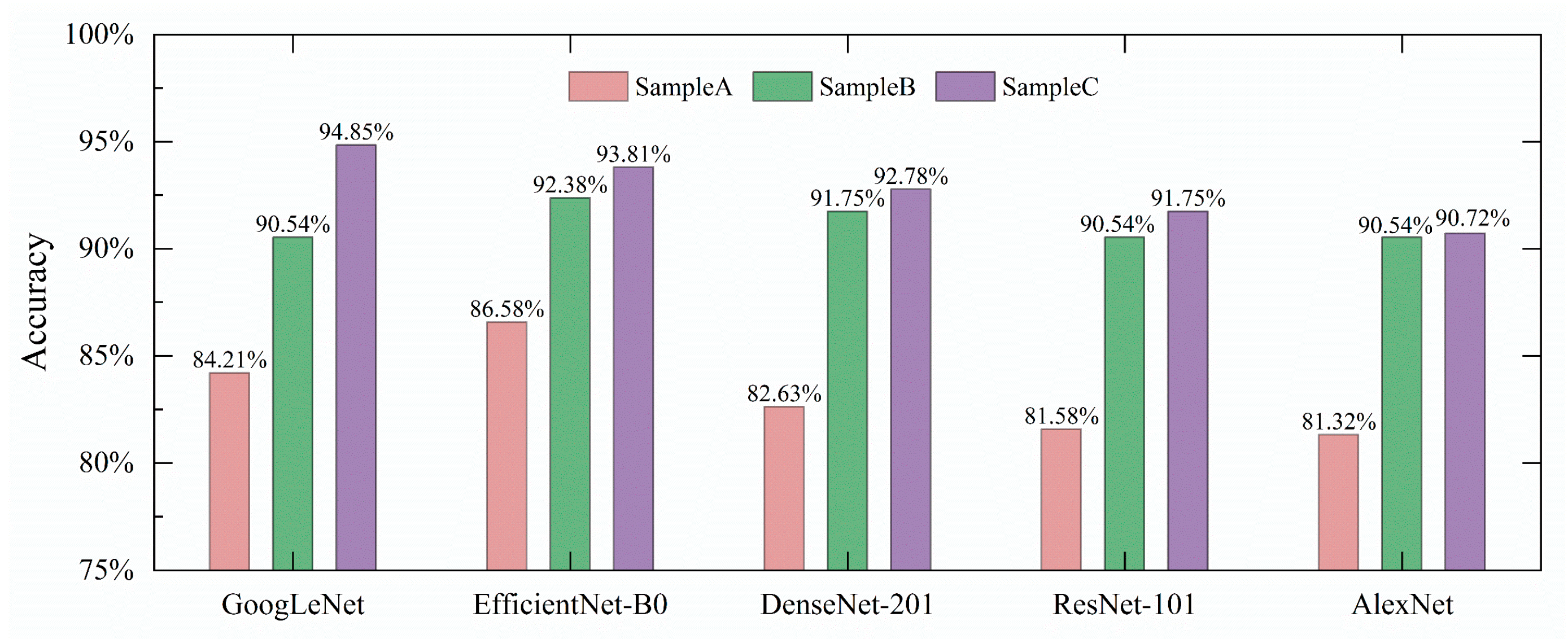

4.2.1. Results under Different Foreign Object Samples

4.2.2. Results under Different Feature Extraction Layers

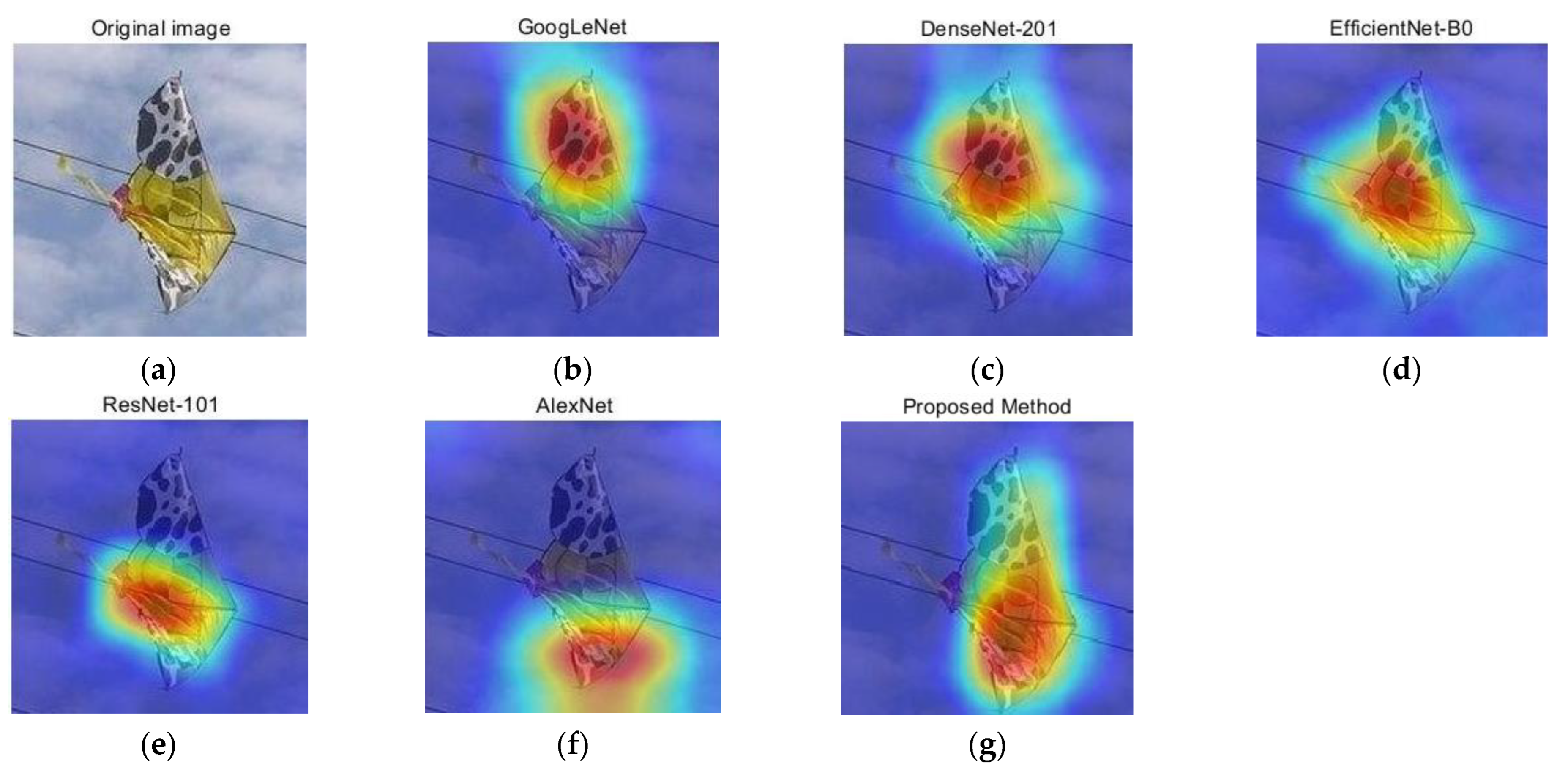

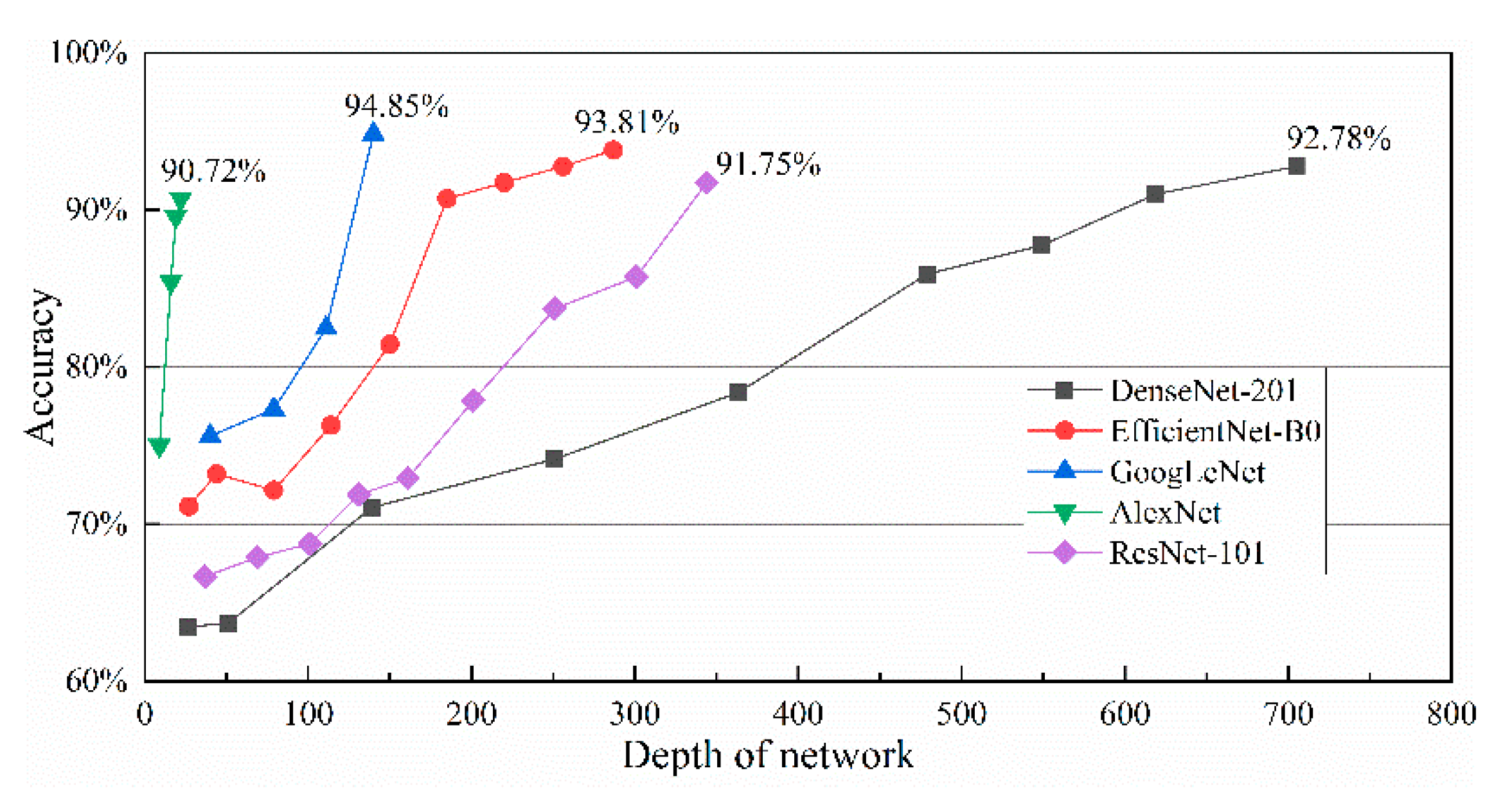

4.2.3. Comparison of Different Feature Extraction Networks

4.2.4. Comparison and Analysis of Different Feature Fusion Schemes

5. Discussion

6. Conclusions

- Target region extraction based on Otsu threshold segmentation and morphological processing reduces the interference of complex backgrounds in the inspection images and improves the detection accuracy.

- The optimal feature fusion scheme, which fuses the features extracted by GoogLeNet, EfficientNet-B0 and DenseNet-201, achieves the highest accuracy of 95.88%. Grad-CAM visualizations show that the fused features compensate for the differences between the individual networks and represent the foreign object images more fully, thus improving the generalization ability of the detection model.
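Grad-CAM, used above to compare what the networks attend to, weights each feature map A^k of a convolutional layer by the spatial average of the gradient of the class score y^c with respect to that map, sums the weighted maps and applies a ReLU. The sketch below shows only that combination step, under the assumption that the activations and gradients have already been obtained from a deep learning framework's automatic differentiation (not computed here); the function name `grad_cam` is hypothetical, and the usual final step of upsampling the map to the input image size is omitted.

```python
import numpy as np


def grad_cam(activations: np.ndarray, gradients: np.ndarray) -> np.ndarray:
    """Combine feature maps into a Grad-CAM heat map for one image and class c.

    activations: A^k, shape (K, H, W), from the chosen convolutional layer.
    gradients:   dy^c / dA^k, same shape; assumed to come from a framework's
                 autograd, since this sketch does not compute them.
    """
    A = np.asarray(activations, dtype=float)
    dY = np.asarray(gradients, dtype=float)
    alpha = dY.mean(axis=(1, 2))                            # alpha_k^c: averaged gradients
    cam = np.maximum(np.tensordot(alpha, A, axes=1), 0.0)   # ReLU(sum_k alpha_k^c A^k)
    peak = cam.max()
    return cam / peak if peak > 0 else cam                  # scale to [0, 1] for display
```

Maps with negative weights contribute only where they would lower the class score, and the ReLU discards those regions, which is why the heat map highlights evidence for the class rather than against it.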

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

- Zhang, W.; Liu, X.; Yuan, J.; Xu, L.; Sun, H.; Zhou, J.; Liu, X. RCNN-based foreign object detection for securing power transmission lines (RCNN4SPTL). Procedia Comput. Sci. 2019, 147, 331–337.

- Chen, W.; Li, Y.; Li, C. A Visual detection method for foreign objects in power lines based on Mask R-CNN. Int. J. Ambient. Comput. Intell. 2020, 11, 34–47.

- Pei, X.; Kang, Y. Short-Circuit fault protection strategy for High-Power Three-Phase Three-Wire inverter. IEEE Trans. Industr. Inform. 2012, 8, 545–553.

- Qin, X.; Wu, G.; Lei, J.; Fei, F.; Ye, Y.; Mei, Q. A novel method of autonomous inspection for transmission line based on cable inspection robot LiDAR data. Sensors 2018, 18, 596.

- Xia, P.; Yin, J.; He, J.; Gu, L.; Yang, K. Neural detection of foreign objects for transmission lines in power systems. J. Phys. Conf. Ser. 2019, 1267, 012043.

- Li, J.; Yan, D.; Luan, K.; Li, Z.; Liang, H. Deep learning-based bird’s nest detection on transmission lines using UAV imagery. Appl. Sci. 2020, 10, 6147.

- Liu, Y.; Huo, H.; Fang, J.; Mai, J.; Zhang, S. UAV Transmission line inspection object recognition based on Mask R-CNN. J. Phys. Conf. Ser. 2019, 1345, 062043.

- Guo, S.; Bai, Q.; Zhou, X. Foreign object detection of transmission lines based on Faster R-CNN. In Information Science and Applications; Springer: Berlin, Germany, 2019; pp. 269–275.

- Yao, N.; Zhu, L. A novel foreign object detection algorithm based on GMM and K-means for power transmission line Inspection. J. Phys. Conf. Ser. 2020, 1607, 012014.

- Jabid, T.; Uddin, M.Z. Rotation invariant power line insulator detection using local directional pattern and support vector machine. In Proceedings of the 2016 International Conference on Innovations in Science, Engineering and Technology, Dhaka, Bangladesh, 28–29 October 2016; pp. 1–4.

- Lu, J.; Xu, X.; Li, X.; Li, L.; Chang, C.; Feng, X.; Zhang, S. Detection of bird’s nest in high power lines in the vicinity of remote campus based on combination features and cascade classifier. IEEE Access 2018, 6, 39063–39071.

- Huang, X.; Shang, E.; Xue, J.; Ding, H.; Li, P. A multi-feature fusion-based deep learning for insulator image identification and fault detection. In Proceedings of the 2020 IEEE 4th Information Technology, Networking, Electronic and Automation Control Conference (ITNEC), Chongqing, China, 12–14 June 2020; pp. 1957–1960.

- Wang, B.; Wu, R.; Zheng, Z.; Zhang, W.; Guo, J. Study on the method of transmission line foreign body detection based on deep learning. In Proceedings of the 2017 IEEE Conference on Energy Internet and Energy System Integration (EI2), Beijing, China, 26–28 November 2017; pp. 1–5.

- Ni, H.; Wang, M.; Zhao, L. An improved Faster R-CNN for defect recognition of key components of transmission line. Math. Biosci. Eng. 2021, 18, 4679–4695.

- Zu, G.; Wu, G.; Zhang, C.; He, X. Detection of common foreign objects on power grid lines based on Faster R-CNN algorithm and data augmentation method. J. Phys. Conf. Ser. 2021, 1746, 012039.

- Xu, L.; Song, Y.; Zhang, W.; An, Y.; Wang, Y.; Ning, H. An efficient foreign objects detection network for power substation. Image Vis. Comput. 2021, 109, 104159.

- Zhai, Y.; Yang, X.; Wang, Q.; Zhao, Z.; Zhao, W. Hybrid knowledge R-CNN for transmission line multifitting detection. IEEE Trans. Instrum. Meas. 2021, 70, 5013312.

- Zhang, H.; Zhao, J.; Chen, Y.; Jiang, S.; Wang, D.; Du, H. Intelligent bird’s nest hazard detection of transmission line based on RetinaNet model. J. Phys. Conf. Ser. 2021, 2005, 012235.

- Li, J.; Nie, Y.; Cui, W.; Liu, R.; Zheng, Z. Power transmission line foreign object detection based on improved YOLOv3 and deployed to the chip. In Proceedings of the MLMI’20: 2020 the 3rd International Conference on Machine Learning and Machine Intelligence, Hangzhou, China, 18–20 September 2020; pp. 100–104.

- Chen, Z.; Xiao, Y.; Zhou, Y.; Li, Z.; Liu, Y. Insulator recognition method for distribution network overhead transmission lines based on modified YOLOv3. In Proceedings of the 2020 Chinese Automation Congress (CAC), Shanghai, China, 6–8 November 2020; pp. 2815–2820.

- Song, Y.; Zhou, Z.; Li, Q.; Chen, Y.; Xiang, P.; Yu, Q.; Zhang, L.; Lu, Y. Intrusion detection of foreign objects in high-voltage lines based on YOLOv4. In Proceedings of the 2021 6th International Conference on Intelligent Computing and Signal Processing (ICSP), Xi’an, China, 9–11 April 2021; pp. 1295–1300.

- Marvasti-Zadeh, S.M.; Ghanei-Yakhdan, H.; Kasaei, S. Adaptive exploitation of pre-trained deep convolutional neural networks for robust visual tracking. Multimed. Tools Appl. 2021, 80, 22027–22076.

- Marvasti-Zadeh, S.M.; Ghanei-Yakhdan, H.; Kasaei, S.; Nasrollahi, K.; Moeslund, T.B. Effective fusion of deep multitasking representations for robust visual tracking. Vis. Comput. 2021, 1–21.

- Otsu, N. A threshold selection method from gray-level histograms. IEEE Trans. Syst. Man Cybern. 1979, 9, 62–66.

- Szegedy, C.; Liu, W.; Jia, Y.; Sermanet, P.; Reed, S.; Anguelov, D.; Erhan, D.; Vanhoucke, V.; Rabinovich, A. Going deeper with convolutions. In Proceedings of the 2015 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Boston, MA, USA, 7–12 June 2015; pp. 1–9.

- Tan, M.; Le, Q.V. EfficientNet: Rethinking model scaling for convolutional neural networks. arXiv 2019, arXiv:1905.11946.

- Sandler, M.; Howard, A.; Zhu, M.; Zhmoginov, A.; Chen, L.-C. MobileNetV2: Inverted residuals and linear bottlenecks. In Proceedings of the 2018 IEEE/CVF Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 4510–4520.

- Hu, J.; Shen, L.; Albanie, S.; Sun, G.; Wu, E. Squeeze-and-Excitation networks. IEEE Trans. Pattern Anal. Mach. Intell. 2020, 42, 2011–2023.

- Huang, G.; Liu, Z.; van der Maaten, L.; Weinberger, K.Q. Densely connected convolutional networks. In Proceedings of the 2017 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Honolulu, HI, USA, 21–26 July 2017; pp. 2261–2269.

- He, K.; Zhang, X.; Ren, S.; Sun, J. Deep residual learning for image recognition. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 27–30 June 2016; pp. 770–778.

- Russakovsky, O.; Deng, J.; Su, H.; Krause, J.; Satheesh, S.; Ma, S.; Huang, Z.; Karpathy, A.; Khosla, A.; Bernstein, M.; et al. ImageNet large scale visual recognition challenge. Int. J. Comput. Vis. 2015, 115, 211–252.

- Wang, Y.; Lei, B.; Elazab, A.; Tan, E.; Wang, W. Breast cancer image classification via multi-network features and dual-network orthogonal low-rank learning. IEEE Access 2020, 8, 27779–27792.

- Chen, B.; Yuan, D.; Liu, C.; Qian, W. Loop closure detection based on multi-scale deep feature fusion. Appl. Sci. 2019, 9, 1120.

- Selvaraju, R.R.; Cogswell, M.; Das, A.; Vedantam, R.; Parikh, D.; Batra, D. Grad-CAM: Visual explanations from deep networks via gradient-based localization. In Proceedings of the 2017 IEEE International Conference on Computer Vision (ICCV), Venice, Italy, 22–29 October 2017; pp. 618–626.

- Ho, T.K. Random decision forests. In Proceedings of the 3rd International Conference on Document Analysis and Recognition, Montreal, QC, Canada, 14–16 August 1995; pp. 278–282.

| Stage | Operation | Input Resolution | Layers |
|---|---|---|---|
| 1 | Conv 3 × 3 | 224 × 224 | 1 |
| 2 | MBConv1, k3 × 3 | 112 × 112 | 1 |
| 3 | MBConv6, k3 × 3 | 112 × 112 | 2 |
| 4 | MBConv6, k5 × 5 | 56 × 56 | 2 |
| 5 | MBConv6, k3 × 3 | 28 × 28 | 3 |
| 6 | MBConv6, k5 × 5 | 14 × 14 | 3 |
| 7 | MBConv6, k5 × 5 | 14 × 14 | 4 |
| 8 | MBConv6, k3 × 3 | 7 × 7 | 1 |
| 9 | Conv 1 × 1, Pooling and FC | 7 × 7 | 1 |

| Layer | Output Size | Operation |
|---|---|---|
| Conv | 112 × 112 | 7 × 7 conv |
| Pooling | 56 × 56 | 3 × 3 max pool |
| Dense Block 1 | 56 × 56 | |
| Transition Layer 1 | 28 × 28 | |
| Dense Block 2 | 28 × 28 | |
| Transition Layer 2 | 14 × 14 | |
| Dense Block 3 | 14 × 14 | |
| Transition Layer 3 | 7 × 7 | |
| Dense Block 4 | 7 × 7 | |
| Classification Layer | 1 × 1 | 7 × 7 global average pool; 1000-D fully connected, softmax |

| Layer Name | Output Size | Operation |
|---|---|---|
| Conv1 | 112 × 112 | 7 × 7, 64 |
| Conv2_x | 56 × 56 | 3 × 3 max pool |
| Dense Block 1 | 56 × 56 | |
| Conv3_x | 28 × 28 | |
| Conv4_x | 14 × 14 | |
| Conv5_x | 7 × 7 | |
| | 1 × 1 | average pool, 1000-d fc, softmax |

| Sample | Balloons | Kites | Nests | Plastic | Total |
|---|---|---|---|---|---|
| Sample A | 40 | 67 | 95 | 44 | 246 |
| Sample B | 40 | 67 | 95 | 44 | 246 |
| Sample C | 60 | 100 | 143 | 66 | 369 |

| Network | Accuracy (%) | macro_P (%) | macro_R (%) | macro_F1 (%) |
|---|---|---|---|---|
| DenseNet-201 | 92.78 | 92.22 | 91.21 | 91.71 |
| GoogLeNet | 94.85 | 94.83 | 93.79 | 94.31 |
| AlexNet | 90.72 | 91.50 | 88.97 | 90.22 |
| ResNet-101 | 91.75 | 92.33 | 89.69 | 90.99 |
| EfficientNet-B0 | 93.81 | 94.81 | 92.41 | 93.59 |
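The exact definitions of the evaluation indexes are not reproduced in this excerpt, so the following is a sketch under assumptions: accuracy is the fraction of correctly classified images, macro_P and macro_R are the unweighted means of the per-class precision and recall, and macro_F1 is the harmonic mean of macro_P and macro_R. That last convention reproduces every macro_F1 value in the table above (for DenseNet-201, 2 × 92.22 × 91.21 / (92.22 + 91.21) ≈ 91.71). Note that scikit-learn's `f1_score(..., average="macro")` instead averages the per-class F1 scores, which generally gives a different number. The function name `evaluation_indexes` and the example counts are hypothetical.

```python
import numpy as np


def evaluation_indexes(confusion):
    """Accuracy, macro_P, macro_R and macro_F1 from a confusion matrix.

    confusion[i, j] counts samples of true class i predicted as class j.
    Assumes every class occurs and is predicted at least once.
    """
    cm = np.asarray(confusion, dtype=float)
    tp = np.diag(cm)
    accuracy = tp.sum() / cm.sum()
    macro_p = np.mean(tp / cm.sum(axis=0))   # per-class precision: TP / predicted count
    macro_r = np.mean(tp / cm.sum(axis=1))   # per-class recall: TP / true count
    # Harmonic mean of macro_P and macro_R (the convention the table appears to use)
    macro_f1 = 2 * macro_p * macro_r / (macro_p + macro_r)
    return accuracy, macro_p, macro_r, macro_f1


# Hypothetical counts for four classes (balloons, kites, nests, plastic).
cm = [[9, 1, 0, 0],
      [0, 14, 1, 0],
      [0, 1, 19, 0],
      [1, 0, 0, 8]]
print([round(100 * v, 2) for v in evaluation_indexes(cm)])
```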

| N1 | N2 | N3 | N4 | N5 | Accuracy (%) | macro_P (%) | macro_R (%) | macro_F1 (%) |
|---|---|---|---|---|---|---|---|---|
| √ | √ | | | | 93.81 | 92.52 | 94.31 | 93.41 |
| √ | √ | √ | | | 95.88 | 96.33 | 95.19 | 95.76 |
| √ | √ | √ | √ | | 92.78 | 95.40 | 90.43 | 92.85 |
| √ | √ | √ | √ | √ | 93.81 | 95.02 | 92.39 | 93.69 |
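The fusion operator itself is not described in this excerpt. A common choice, assumed here, is serial fusion: the feature vectors that each selected network extracts for the same image are concatenated into one longer vector. The sketch below shows that step and enumerates every candidate scheme with at least two of the five baseline networks. The feature widths are hypothetical (typical global-pooled output sizes of these ImageNet backbones), the layers actually used in the paper may differ, and no mapping between N1–N5 and specific networks is assumed.

```python
import numpy as np
from itertools import combinations

NETWORKS = ["GoogLeNet", "EfficientNet-B0", "DenseNet-201", "ResNet-101", "AlexNet"]


def fuse_features(features: dict, names) -> np.ndarray:
    """Serial (concatenation) fusion of the selected networks' features.

    features[name] has shape (n_images, d_name); rows must refer to the same
    images, in the same order, for every network.
    """
    blocks = [np.asarray(features[name], dtype=float) for name in names]
    n = blocks[0].shape[0]
    if any(b.shape[0] != n for b in blocks):
        raise ValueError("all networks must describe the same images")
    return np.concatenate(blocks, axis=1)


def candidate_schemes(networks=NETWORKS, min_size=2):
    """All fusion schemes that use at least `min_size` baseline networks."""
    return [c for k in range(min_size, len(networks) + 1)
            for c in combinations(networks, k)]


# Hypothetical feature widths and random stand-in features for 5 images.
dims = {"GoogLeNet": 1024, "EfficientNet-B0": 1280, "DenseNet-201": 1920}
rng = np.random.default_rng(0)
feats = {name: rng.random((5, d)) for name, d in dims.items()}
X = fuse_features(feats, ["GoogLeNet", "EfficientNet-B0", "DenseNet-201"])
print(X.shape, len(candidate_schemes()))  # (5, 4224) 26
```

The fused matrix `X`, together with the image labels, could then be passed to a random forest such as scikit-learn's `RandomForestClassifier().fit(X, y)`. Because each tree split thresholds a single feature, differences in numeric scale between the networks' features do not require normalization before a random forest.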

| Detection Method | Accuracy (%) |
|---|---|
| GMM + K-means clustering [9] | 78.00 |
| Combination features + cascade classifier [11] | 97.33 |
| SSD [13] | 85.20 |
| Faster R-CNN [15] | 93.09 |
| FODN4PS Net [16] | 85.00 |
| RetinaNet [18] | 94.10 |
| Improved YOLOv3 [19] | 92.60 |
| YOLOv4 [21] | 81.72 |
| The proposed method | 95.88 |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Yu, Y.; Qiu, Z.; Liao, H.; Wei, Z.; Zhu, X.; Zhou, Z. A Method Based on Multi-Network Feature Fusion and Random Forest for Foreign Objects Detection on Transmission Lines. Appl. Sci. 2022, 12, 4982. https://doi.org/10.3390/app12104982


Yu, Yanzhen, Zhibin Qiu, Haoshuang Liao, Zixiang Wei, Xuan Zhu, and Zhibiao Zhou. 2022. "A Method Based on Multi-Network Feature Fusion and Random Forest for Foreign Objects Detection on Transmission Lines." Applied Sciences 12, no. 10: 4982. https://doi.org/10.3390/app12104982