Building, Hosting and Recruiting: A Brief Introduction to Running Behavioral Experiments Online

Abstract

1. Introduction

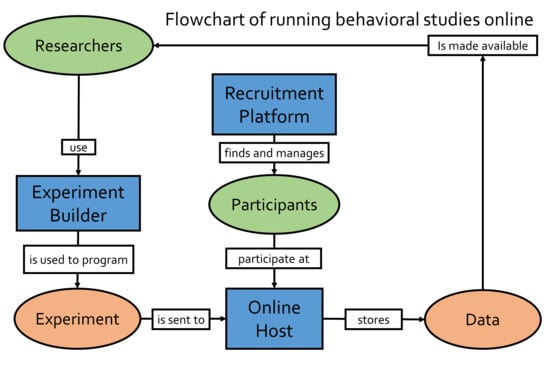

2. How to Run Behavioral Experiments Online

2.1. Experiment Builders

2.2. Hosting and Study Management

2.3. Recruitment of Participants

2.4. How to Choose an Ecosystem?

3. Data Quality Concerns

4. Considerations for Successful Online Studies

Author Contributions

Funding

Conflicts of Interest

| Platform | Features and guided integrations: Building | Features and guided integrations: Hosting | Features and guided integrations: Recruiting | Platform cost per month | Platform cost per participant | Backend |
|---|---|---|---|---|---|---|
| A. Integrated-Service Providers | | | | | | |
| Gorilla.sc | ✓ | ✓, jsP | MTurk, Prolific, SONA, any | - | ~$1 | visual |
| Inquisit Web | ✓ | ✓ | ✓ | ~$200 | - | visual |
| Labvanced | ✓ | ✓ | ✓ | ~$387 | ~$1.5 | visual |
| testable | ✓ | ✓ | ✓ | n.a. [5] | n.a. [5] | visual |
| B. Experiment Builders | | | | | | |
| jsPsych (jsP) | ✓ | JATOS [1], Pavlovia | MTurk | free | free | JS |
| lab.js | ✓ | JATOS [1], Open Lab, Pavlovia, Qualtrics | MTurk | free | free | visual/JS |
| OpenSesame Web (OSWeb) | ✓ | JATOS [1] | - | free | free | visual/JS |
| PsychoPy Builder (PPB) | ✓ | Pavlovia [2] | - | free | free | visual/JS |
| PsyToolkit (PsyT) | ✓ | ✓ | SONA, MTurk | free | free | visual/JS |
| tatool Web | ✓ | ✓ | MTurk | free | free | visual/JS |
| C. Hosts and Study Management | | | | | | |
| JATOS | lab.js, jsP, PsyT, OSWeb | (✓) [1] | MTurk, Prolific, [3] | free [1] | free | website |
| Pavlovia | lab.js, jsP, PPB | ✓ | SONA, Prolific, [3] | ~$145 | ~$0.30 | website |
| Open Lab | lab.js | ✓ | any [3] | ~$17 | free [6] | website |
| (inst.) webserver | lab.js, jsP, OSWeb, PPB | JATOS or none | any [3] | free | free | - |
| D. Recruitment Services | | | | | | |
| Amazon MTurk | any | - | ✓ | - | 40% | website |
| ORSEE | any | - | (✓) [4] | free | free | website |
| SONA | any | - | (✓) [4] | - | n.a. [7] | website |
| Prolific Academic | any | - | ✓ | - | 33% | website |
| Qualtrics Panel | Qualtrics/any | - | ✓ | - | n.a. [7] | website |
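
The percentage entries in the per-participant cost column can be turned into a rough budget estimate. The sketch below is illustrative only. It assumes that the listed percentage is a commission charged on top of each participant's payment, which the table itself does not spell out. The names `RecruitmentService` and `total_cost`, the sample size, and the payment amount are hypothetical and chosen only for this example. Actual fees depend on each provider's current pricing.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class RecruitmentService:
    """A recruitment service with a percentage fee (hypothetical helper type)."""
    name: str
    fee_rate: float  # commission as a fraction of the participant payment


def total_cost(n_participants: int, payment: float, service: RecruitmentService) -> float:
    """Estimate total cost: participant payments plus the service commission.

    Assumes the commission is added on top of the payments (an assumption,
    not something stated in the table).
    """
    payments = n_participants * payment
    commission = payments * service.fee_rate
    return round(payments + commission, 2)


# Fee rates taken from the table rows for MTurk (40%) and Prolific (33%).
MTURK = RecruitmentService("Amazon MTurk", 0.40)
PROLIFIC = RecruitmentService("Prolific Academic", 0.33)

if __name__ == "__main__":
    # Hypothetical study: 100 participants, each paid 2.00 currency units.
    for service in (MTURK, PROLIFIC):
        print(service.name, total_cost(100, 2.00, service))
```

Under these assumptions, fixed monthly platform costs (for example for hosting) would have to be added separately to obtain the full budget of a study.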

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Sauter, M.; Draschkow, D.; Mack, W. Building, Hosting and Recruiting: A Brief Introduction to Running Behavioral Experiments Online. Brain Sci. 2020, 10, 251. https://doi.org/10.3390/brainsci10040251
