What Makes a Social Robot Good at Interacting with Humans?

Abstract

1. Introduction

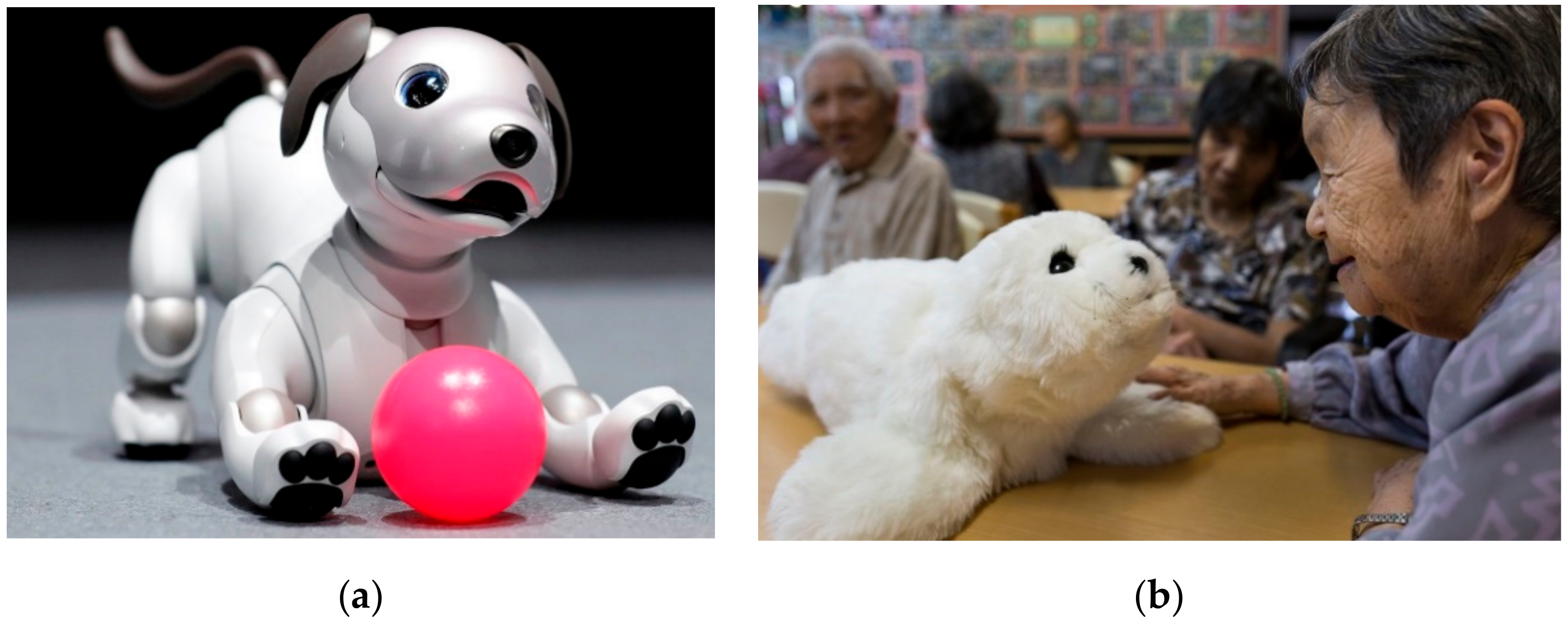

2. Effects of Physical Appearance of Robots

3. Verbal and Non-Verbal Communication

3.1. Nao Humanoid Robot

3.2. Pepper Humanoid Robot

3.3. Kismet Humanoid Robot

3.4. iCub Humanoid Robot

4. Conclusions

Author Contributions

Funding

Conflicts of Interest

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Onyeulo, E.B.; Gandhi, V. What Makes a Social Robot Good at Interacting with Humans? Information 2020, 11, 43. https://0-doi-org.brum.beds.ac.uk/10.3390/info11010043