Hybrid Feedback Control for Exponential Stability and Robust H∞ Control of a Class of Uncertain Neural Network with Mixed Interval and Distributed Time-Varying Delays

Abstract

1. Introduction

- This research is the first to study hybrid feedback control for exponential stability and robust H∞ control of a class of uncertain neural networks with mixed interval and distributed time-varying delays.

- A novel Lyapunov–Krasovskii functional (LKF) is proposed to analyze the robust H∞ control problem for uncertain neural networks with mixed time-varying delays. In contrast to [23], the augmented Lyapunov matrices of the chosen LKF need not be positive definite.

- The robust H∞ control problem is addressed for uncertain neural networks whose mixed time-varying delays comprise interval and distributed time-varying delays, and these delays are not necessarily differentiable.
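To make the delay assumptions in the last item concrete, the following minimal sketch builds a bounded but non-differentiable interval delay and approximates a distributed-delay integral numerically. The function names, the bounds `h1` and `h2`, and the choice of `|sin t|` are illustrative assumptions, not taken from the paper.

```python
import math


def interval_delay(t, h1=0.1, h2=0.5):
    """Hypothetical interval delay satisfying h1 <= h(t) <= h2.

    |sin t| has corners at t = k*pi, so h is continuous but has no
    derivative at those points.
    """
    return h1 + (h2 - h1) * abs(math.sin(t))


def one_sided_slopes(f, t, eps=1e-6):
    """Finite-difference slopes just left and just right of t."""
    left = (f(t) - f(t - eps)) / eps
    right = (f(t + eps) - f(t)) / eps
    return left, right


def distributed_term(x, t, k, n=1000):
    """Trapezoidal approximation of the integral of x(s) over [t - k, t]."""
    ds = k / n
    total = 0.5 * (x(t - k) + x(t))
    total += sum(x(t - k + i * ds) for i in range(1, n))
    return total * ds


# Sample the delay on [0, 20) and inspect the corner at t = pi.
samples = [interval_delay(0.01 * j) for j in range(2000)]
left, right = one_sided_slopes(interval_delay, math.pi)
print(min(samples), max(samples))  # stays inside [h1, h2]
print(left, right)                 # slopes of opposite sign at the corner
```

The one-sided slopes disagree at `t = pi`, which is why a stability criterion that requires a bound on the delay derivative would not apply to a delay of this kind.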

2. Model Description and Mathematical Preliminaries

- (i) The zero solution of the closed-loop system, where ,, is stable.

- (ii) There is a number such that where the supremum is taken over all and the non-zero uncertainty .
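The formulas in conditions (i) and (ii) did not survive in this copy. For orientation only, H∞ performance conditions of this type are commonly written in the time-delay literature in the form below. Every symbol here is an assumption rather than the paper's own notation: $w(t)$ is the disturbance input, $z(t)$ the observed output, $\phi$ the initial function, and $c_0 > 0$, $\gamma > 0$ are constants.

```latex
% Assumed notation (not recovered from the source):
%   w(t): disturbance, z(t): output, \phi: initial function,
%   c_0 > 0: constant, \gamma > 0: prescribed attenuation level.
% (i)  with w(t) = 0, the zero solution of the closed-loop system is stable;
% (ii) there is a number c_0 > 0 such that
\sup \frac{\int_{0}^{\infty} \| z(t) \|^{2} \, dt}
          {c_0 \| \phi \|^{2} + \int_{0}^{\infty} \| w(t) \|^{2} \, dt}
\le \gamma ,
% where the supremum is taken over all \phi and all non-zero w.
```

Read this way, $\gamma$ bounds the worst-case ratio of output energy to the combined energy of the initial condition and the disturbance.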

3. Stability Analysis

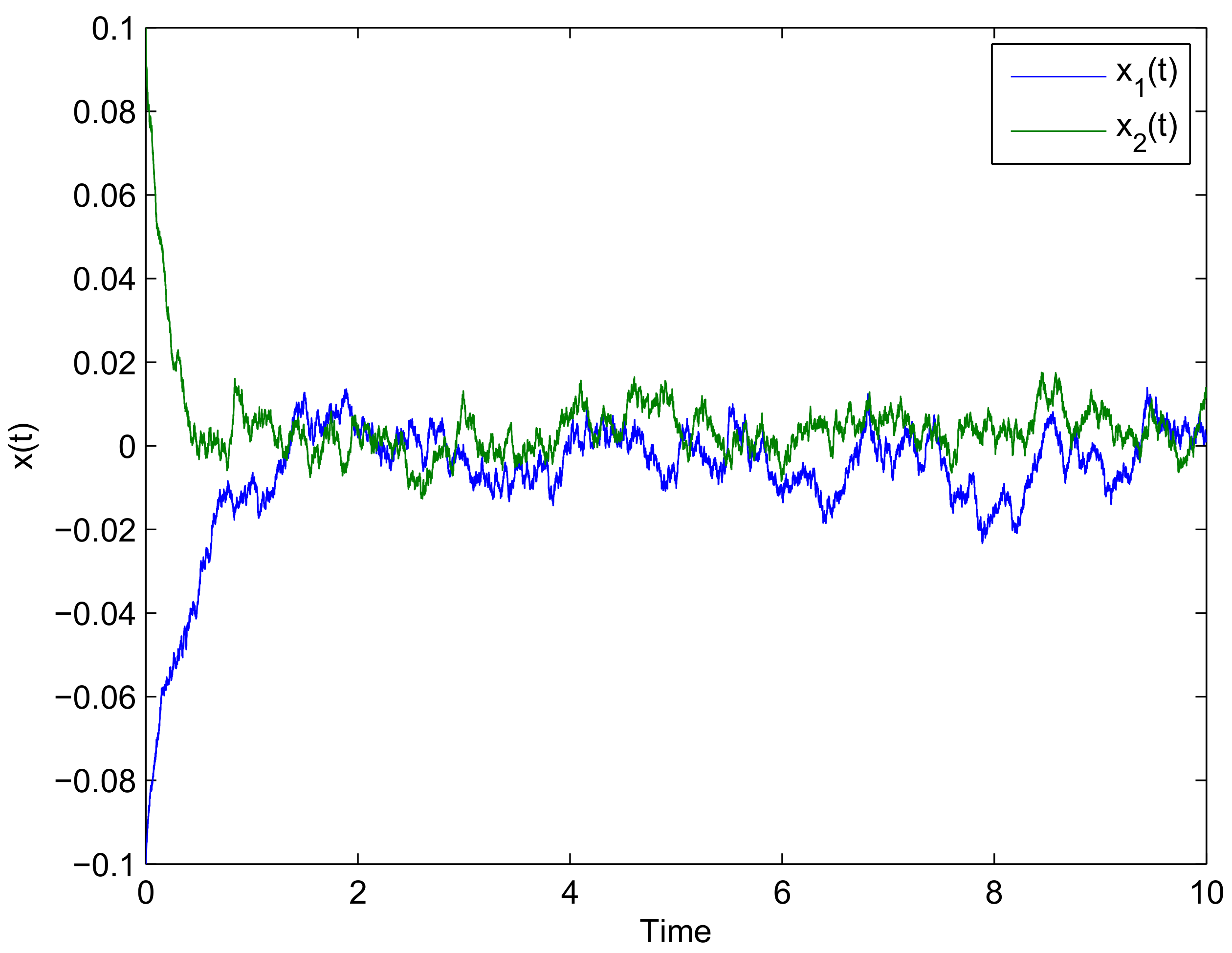

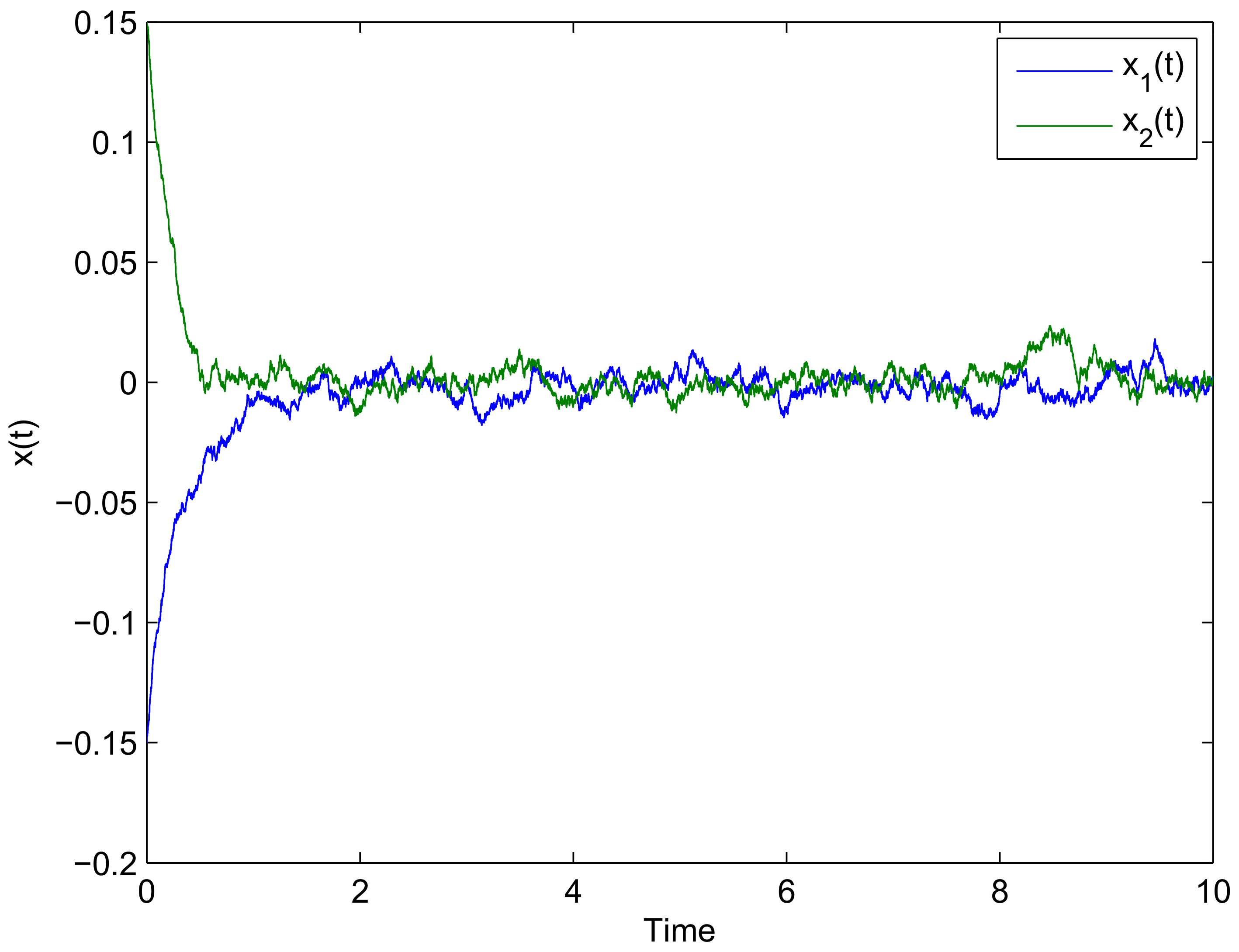

4. Numerical Examples

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

- Cheng, X.M.; Gui, W.H.; Gan, Z.J. Robust reliable control for a class of time-varying uncertain impulsive systems. J. Cent. South Univ. Technol. 2005, 12, 199–202.

- Gao, J.; Huang, B.; Wang, Z.; Fisher, D.G. Robust reliable control for a class of uncertain nonlinear systems with time-varying multi-state time delays. Int. J. Syst. Sci. 2001, 32, 817–824.

- Veillette, R.J.; Medanic, J.V.; Perkins, W.R. Design of reliable control systems. IEEE Trans. Autom. Control 1992, 37, 290–304.

- Wang, Z.; Huang, B.; Unbehauen, H. Robust reliable control for a class of uncertain nonlinear state-delayed systems. Automatica 1999, 35, 955–963.

- Alwan, M.S.; Liu, X.Z.; Xie, W.C. On design of robust reliable H∞ control and input-to-state stabilization of uncertain stochastic systems with state delay. Commun. Nonlinear Sci. Numer. Simul. 2013, 18, 1047–1056.

- Yue, D.; Lam, J.; Ho, D.W.C. Reliable H∞ control of uncertain descriptor systems with multiple delays. IEE Proc. Control Theory Appl. 2003, 150, 557–564.

- Xiang, Z.G.; Chen, Q.W. Robust reliable control for uncertain switched nonlinear systems with time delay under asynchronous switching. Appl. Math. Comput. 2010, 216, 800–811.

- Wang, Z.D.; Wei, G.L.; Feng, G. Reliable H∞ control for discrete-time piecewise linear systems with infinite distributed delays. Automatica 2009, 45, 2991–2994.

- Mahmoud, M.S. Reliable decentralized control of interconnected discrete delay systems. Automatica 2012, 48, 986–990.

- Suebcharoen, T.; Rojsiraphisal, T.; Mouktonglang, T. Controlled current quality improvement by multi-target linear quadratic regulator for the grid integrated renewable energy system. J. Anal. Appl. 2021, 19, 47–66.

- Faybusovich, L.; Mouktonglang, T.; Tsuchiya, T. Implementation of infinite-dimensional interior-point method for solving multi-criteria linear-quadratic control problem. Optim. Methods Softw. 2006, 21, 315–341.

- Haykin, S. Neural Networks; Prentice-Hall: Englewood Cliffs, NJ, USA, 1994.

- Yang, X.; Song, Q.; Liang, J.; He, B. Finite-time synchronization of coupled discontinuous neural networks with mixed delays and nonidentical perturbations. J. Franklin Inst. 2015, 352, 4382–4406.

- Zhang, H.; Wang, Z. New delay-dependent criterion for the stability of recurrent neural networks with time-varying delay. Sci. China Inf. Sci. 2009, 52, 942–948.

- Li, X.; Souza, C.E.D. Delay-dependent robust stability and stabilization of uncertain linear delay systems: A linear matrix inequality approach. IEEE Trans. Autom. Control 1997, 42, 1144–1148.

- Ali, M.S.; Balasubramaniam, P. Exponential stability of time delay systems with nonlinear uncertainties. Int. J. Comput. Math. 2010, 87, 1363–1373.

- Ali, M.S. On exponential stability of neutral delay differential system with nonlinear uncertainties. Commun. Nonlinear Sci. Numer. Simul. 2012, 17, 2595–2601.

- Yoneyama, J. Robust H∞ control of uncertain fuzzy systems under time-varying sampling. Fuzzy Sets Syst. 2010, 161, 859–871.

- Yoneyama, J. Robust H∞ filtering for sampled-data fuzzy systems. Fuzzy Sets Syst. 2013, 217, 110–129.

- Francis, B.A. A Course in H∞ Control Theory; Springer: Berlin, Germany, 1987.

- Keulen, B.V. H∞ Control for Distributed Parameter Systems: A State-Space Approach; Birkhäuser: Boston, MA, USA, 1993.

- Petersen, I.R.; Ugrinovskii, V.A.; Savkin, A.V. Robust Control Design Using H∞ Methods; Springer: London, UK, 2000.

- Du, Y.; Liu, X.; Zhong, S. Robust reliable H∞ control for neural networks with mixed time delays. Chaos Solitons Fractals 2016, 91, 1–8.

- Lakshmanan, S.; Mathiyalagan, K.; Park, J.H.; Sakthivel, R.; Rihan, F.A. Delay-dependent H∞ state estimation of neural networks with mixed time-varying delays. Neurocomputing 2014, 129, 392–400.

- Tian, E.; Yue, D.; Zhang, Y. On improved delay-dependent robust H∞ control for systems with interval time-varying delay. J. Franklin Inst. 2011, 348, 555–567.

- Duan, Q.; Su, H.; Wu, Z.G. H∞ state estimation of static neural networks with time-varying delay. Neurocomputing 2012, 97, 16–21.

- Liu, Y.; Lee, S.; Kwon, O.; Park, J. A study on H∞ state estimation of static neural networks with time-varying delays. Appl. Math. Comput. 2014, 226, 589–597.

- Ali, M.S.; Saravanakumar, R.; Arik, S. Novel H∞ state estimation of static neural networks with interval time-varying delays via augmented Lyapunov–Krasovskii functional. Neurocomputing 2016, 171, 949–954.

- Thanh, N.T.; Phat, V.N. H∞ control for nonlinear systems with interval non-differentiable time-varying delay. Eur. J. Control 2013, 19, 190–198.

- Gu, K.; Kharitonov, V.L.; Chen, J. Stability of Time-Delay Systems; Birkhäuser: Berlin, Germany, 2003.

- Sun, J.; Liu, G.P.; Chen, J. Delay-dependent stability and stabilization of neutral time-delay systems. Int. J. Robust Nonlinear Control 2009, 19, 1364–1375.

- Wang, Z.; Liu, Y.; Fraser, K.; Liu, X. Stochastic stability of uncertain Hopfield neural networks with discrete and distributed delays. Phys. Lett. A 2006, 354, 288–297.

- Qian, W.; Cong, S.; Sun, Y.; Fei, S. Novel robust stability criteria for uncertain systems with time-varying delay. Appl. Math. Comput. 2009, 215, 866–872.

- Xu, S.; Lam, J.; Zou, Y. New results on delay-dependent robust H∞ control for systems with time-varying delay. Automatica 2006, 42, 343–348.

- Peng, C.; Tian, Y.C. Delay-dependent robust H∞ control for uncertain systems with time-varying delay. Inf. Sci. 2009, 179, 3187–3197.

- Yan, H.C.; Zhang, H.; Meng, M.Q. Delay-range-dependent robust H∞ control for uncertain systems with interval time-varying delays. Neurocomputing 2010, 73, 1235–1243.

- Wang, C.; Shen, Y. Improved delay-dependent robust stability criteria for uncertain time delay systems. Appl. Math. Comput. 2011, 218, 2880–2888.

- Wu, Z.G.; Park, J.H.; Su, H.; Chu, J. New results on exponential passivity of neural networks with time-varying delays. Nonlinear Anal. Real World Appl. 2012, 13, 1593–1599.

- Manivannan, R.; Samidurai, R.; Cao, J.; Alsaedi, A.; Alsaadi, F.E. Delay-dependent stability criteria for neutral-type neural networks with interval time-varying delay signals under the effects of leakage delay. Adv. Differ. Equ. 2018, 2018, 1–25.

- Saravanakumar, R.; Mukaidani, H.; Muthukumar, P. Extended dissipative state estimation of delayed stochastic neural networks. Neurocomputing 2020, 406, 244–252.

- Shanmugam, S.; Muhammed, S.A.; Lee, G.M. Finite-time extended dissipativity of delayed Takagi-Sugeno fuzzy neural networks using a free-matrix-based double integral inequality. Neural Comput. Appl. 2019, 32, 8517–8528.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Chantawat, C.; Botmart, T.; Supama, R.; Weera, W.; Noinang, S. Hybrid Feedback Control for Exponential Stability and Robust H∞ Control of a Class of Uncertain Neural Network with Mixed Interval and Distributed Time-Varying Delays. Computation 2021, 9, 62. https://0-doi-org.brum.beds.ac.uk/10.3390/computation9060062