On the Locus of the Practice Effect in Sustained Attention Tests

Abstract

1. Introduction

1.1. Practice Effects in Sustained Attention Tests

1.2. A Process Model of Sustained Attention Tests

1.3. The Present Study

2. Method

2.1. Participants

2.2. Measures

2.2.1. Perceptual Speed—The Inspection Time Task

2.2.2. Motor Speed—The Simple Reaction Time Task

2.2.3. The Modified d2

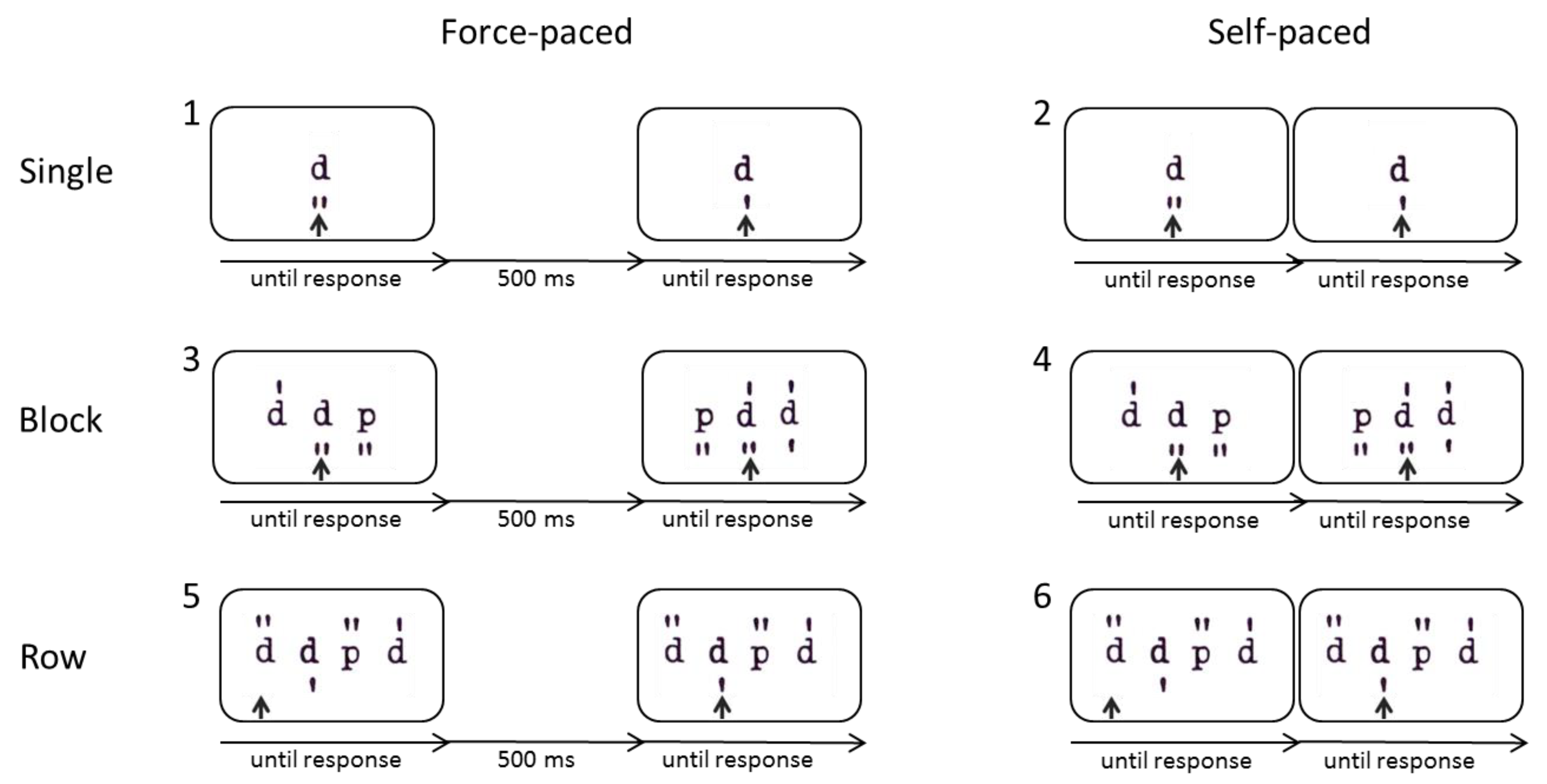

2.2.4. Test d2-R Electronic Version [15]

2.3. Procedure

2.4. Data Preprocessing

2.5. Analysis Strategy

3. Results

3.1. Descriptive Statistics and Practice Effects

3.2. Effects of Practice on Item Shifting and Preprocessing

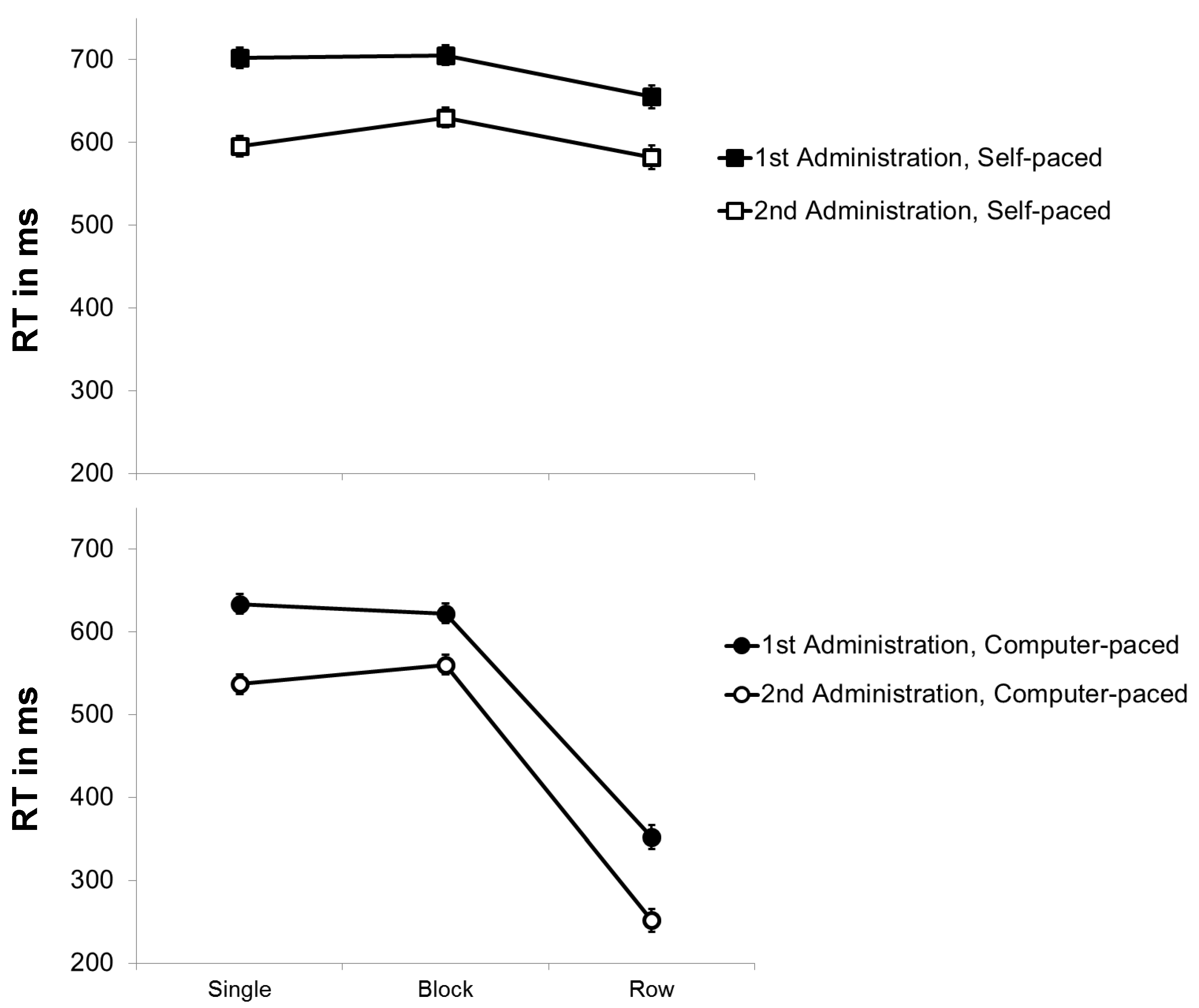

3.2.1. RT Analysis

3.2.2. Error Rates

4. Discussion

4.1. Effects of Practice on the Sub-Components

4.2. Effects of Practice on Preprocessing and Item Shifting

4.3. Limitations and Strengths

4.4. Conclusions

Author Contributions

Funding

Conflicts of Interest

Appendix A

| Predictors | 1st Administration: β | 1st Administration: R² | 1st Administration: adj. R² | 1st Administration: ΔR² | 2nd Administration: β | 2nd Administration: R² | 2nd Administration: adj. R² | 2nd Administration: ΔR² |
|---|---|---|---|---|---|---|---|---|
| Model 1 | | | | | | | | |
| Inspection Time Task (perceptual speed) | .26* | .07* | .06 | | .31** | .09** | .09 | |
| Model 2 | | | | | | | | |
| Inspection Time Task (perceptual speed) | .23* | | | | .26* | | | |
| Simple Reaction Time Task (motor speed) | .26* | .13** | .11 | .07* | .18* | .13** | .11 | .03* |
| Model 3 | | | | | | | | |
| Inspection Time Task (perceptual speed) | .08 | | | | .09 | | | |
| Simple Reaction Time Task (motor speed) | .02 | | | | .02 | | | |
| Simple Modified d2 (mental operation speed) | .67** | .47** | .46 | .34** | .60** | .41** | .40 | .29** |

References

1. Westhoff, K.; Hagemeister, C. Konzentrationsdiagnostik; Pabst: Lengerich, Germany, 2005.
2. Brickenkamp, R.; Schmidt-Atzert, L.; Liepmann, D. Test D2-Revision. Aufmerksamkeits-Und Konzentrationstest (D2-R); Hogrefe: Göttingen, Germany, 2010.
3. Mirsky, A.F.; Anthony, B.J.; Duncan, C.C.; Ahearn, M.B.; Kellam, S.G. Analysis of the Elements of Attention: A Neuropsychological Approach. Neuropsychol. Rev. 1991, 2, 109–145.
4. Schmidt-Atzert, L.; Bühner, M. Aufmerksamkeit Und Intelligenz. In Intelligenz und Kognition: Die Kognitiv biologische Perspektive der Intelligenz; Schweizer, K., Ed.; Verlag Empirische Pädagogik: Landau, Germany, 2000; pp. 125–151.
5. Schmidt-Atzert, L.; Bühner, M.; Enders, P. Messen Konzentrationstests Konzentration? Eine Analyse Der Komponenten von Konzentrationsleistungen. Diagnostica 2006, 52, 33–44.
6. Büttner, G.; Schmidt-Atzert, L. Diagnostik von Konzentration Und Aufmerksamkeit; Hogrefe: Göttingen, Germany, 2004.
7. Bühner, M.; Ziegler, M.; Bohnes, B.; Lauterbach, K. Übungseffekte in Den TAP Untertests Test Go/Nogo Und Geteilte Aufmerksamkeit Sowie Dem Aufmerksamkeits-Belastungstest (D2). Z. Für Neuropsychol. 2006, 17, 191–199.
8. Lievens, F.; Reeve, C.L.; Heggestad, E.D. An Examination of Psychometric Bias Due to Retesting on Cognitive Ability Tests in Selection Settings. J. Appl. Psychol. 2007, 92, 1672–1682.
9. Randall, J.G.; Villado, A.J. Take Two: Sources and Deterrents of Score Change in Employment Retesting. Hum. Resour. Manag. Rev. 2017, 27, 536–553.
10. Scharfen, J.; Peters, J.M.; Holling, H. Retest Effects in Cognitive Ability Tests: A Meta-Analysis. Intelligence 2018, 67, 44–66.
11. Hausknecht, J.P.; Halpert, J.A.; Di Paolo, N.T.; Moriarty Gerrard, M.O. Retesting in Selection: A Meta-Analysis of Coaching and Practice Effects for Tests of Cognitive Ability. J. Appl. Psychol. 2007, 92, 373–385.
12. Krumm, S.; Schmidt-Atzert, L.; Michalczyk, K.; Danthiir, V. Speeded Paper-Pencil Sustained Attention and Mental Speed Tests. J. Individ. Differ. 2008, 29, 205–216.
13. Westhoff, K.; Dewald, D. Effekte Der Übung in Der Bearbeitung von Konzentrationstests. Diagnostica 1990, 36, 1–15.
14. Steinborn, M.B.; Langner, R.; Flehmig, H.C.; Huestegge, L. Methodology of Performance Scoring in the D2 Sustained-Attention Test: Cumulative-Reliability Functions and Practical Guidelines. Psychol. Assess. 2017. Advance online publication.
15. Schmidt-Atzert, L.; Brickenkamp, R. Test D2-R-Elektronische Fassung Des Aufmerksamkeits-Und Konzentrationstests D2-R; Hogrefe: Göttingen, Germany, 2017.
16. Scharfen, J.; Blum, D.; Holling, H. Response Time Reduction Due to Retesting in Mental Speed Tests: A Meta-Analysis. J. Intell. 2018, 6, 6.
17. Te Nijenhuis, J.; van Vianen, A.E.M.; van der Flier, H. Score Gains on G-Loaded Tests: No G. Intelligence 2007, 35, 283–300.
18. Lievens, F.; Buyse, T.; Sackett, P.R. Retest Effects in Operational Selection Settings: Development and Test of a Framework. Pers. Psychol. 2005, 58, 981–1007.
19. Westhoff, K.; Graubner, J. Konstruktion Eines Komplexen Konzentrationstests. Diagnostica 2003, 49, 110–119.
20. Krumm, S.; Schmidt-Atzert, L.; Schmidt, S.; Zenses, E.M.; Stenzel, N. Attention Tests in Different Stimulus Presentation Modes. J. Individ. Differ. 2012, 33, 146–159.
21. Krumm, S.; Schmidt-Atzert, L.; Eschert, S. Investigating the Structure of Attention: How Do Test Characteristics of Paper-Pencil Sustained Attention Tests Influence Their Relationship with Other Attention Tests? Eur. J. Psychol. Assess. 2008, 24, 108–116.
22. Blotenberg, I.; Schmidt-Atzert, L. Towards a Process Model of Sustained Attention Tests. J. Intell. 2019, 7, 3.
23. Blotenberg, I.; Schmidt-Atzert, L. On the Characteristics of Sustained Attention Test Performance—The Role of the Preview Benefit. Eur. J. Psychol. Assess. 2019, in press.
24. Schweizer, K.; Moosbrugger, H. Attention and Working Memory as Predictors of Intelligence. Intelligence 2004, 32, 329–347.
25. Van Breukelen, G.J.P.; Roskam, E.E.C.I.; Eling, P.A.T.M.; Jansen, R.W.T.L.; Souren, D.A.P.B.; Ickenroth, J.G.M. A Model and Diagnostic Measures for Response Time Series on Tests of Concentration: Historical Background, Conceptual Framework, and Some Applications. Brain Cognit. 1995, 27, 147–179.
26. Eriksen, C.W. The Flankers Task and Response Competition: A Useful Tool for Investigating a Variety of Cognitive Problems. Vis. Cognit. 1995, 2, 101–118.
27. Friedman, N.P.; Miyake, A. The Relations Among Inhibition and Interference Control Functions: A Latent-Variable Analysis. J. Exp. Psychol. Gen. 2004, 133, 101–135.
28. Rayner, K. Eye Movements in Reading and Information Processing: 20 Years of Research. Psychol. Bull. 1998, 124, 372–422.
29. Schotter, E.R.; Angele, B.; Rayner, K. Parafoveal Processing in Reading. Atten. Percept. Psychophys. 2012, 74, 5–35.
30. Brand, C.R.; Deary, I.J. Intelligence and “Inspection Time.” In A Model of Intelligence; Eysenck, H., Ed.; Springer: New York, NY, USA, 1982; pp. 133–148.
31. Schweizer, K.; Koch, W. Perceptual Processes and Cognitive Ability. Intelligence 2003, 31, 211–235.
32. Vickers, D.; Nettelbeck, T.; Willson, R.J. Perceptual Indices of Performance: The Measurement of ‘Inspection Time’ and ‘Noise’ in the Visual System. Perception 1972, 1, 263–295.
33. Ackerman, P.L. Determinants of Individual Differences during Skill Acquisition: Cognitive Abilities and Information Processing. J. Exp. Psychol. Gen. 1988, 117, 288–318.
34. Fleishman, E.A.; Hempel, W.E. Changes in Factor Structure of a Complex Psychomotor Test as a Function of Practice. Psychometrika 1954, 19, 239–252.
35. Sternberg, S.; Monsell, S.; Knoll, R.L.; Wright, C.E. The Latency and Duration of Rapid Movement Sequences: Comparisons of Speech and Typewriting. In Information Processing in Motor Control and Learning; Stelmach, G.E., Ed.; Academic Press: New York, NY, USA, 1978; pp. 117–152.
36. Neubauer, A.C.; Knorr, E. Elementary Cognitive Processes in Choice Reaction Time Tasks and Their Correlations with Intelligence. Pers. Individ. Dif. 1997, 23, 715–728.
37. Vickers, D.; Smith, P.L. The Rationale for the Inspection Time Index. Pers. Individ. Dif. 1986, 7, 609–623.
38. Schneider, W.; Eschman, A.; Zuccolotto, A. E-Prime 2.0 Software; Psychology Software Tools Inc.: Pittsburgh, PA, USA, 2002.
39. McGaugh, J.L. Time-Dependent Processes in Memory Storage. Science 1966, 153, 1351–1358.
40. Düker, H. Leistungsfähigkeit Und Keimdrüsenhormone; J.A. Barth: München, Germany, 1957.
41. Krumm, S.; Schmidt-Atzert, L.; Bracht, M.; Ochs, L. Coordination as a Crucial Component of Performance on a Sustained Attention Test. J. Individ. Differ. 2011, 32, 117–128.
42. Townsend, J.T. Serial vs. Parallel Processing: Sometimes They Look like Tweedledum and Tweedledee but They Can (and Should) Be Distinguished. Psychol. Sci. 1990, 1, 46–54.
43. Schubert, A.-L.; Hagemann, D.; Voss, A.; Schankin, A.; Bergmann, K. Decomposing the Relationship between Mental Speed and Mental Abilities. Intelligence 2015, 51, 28–46.
44. Stafford, T.; Gurney, K.N. Additive Factors Do Not Imply Discrete Processing Stages: A Worked Example Using Models of the Stroop Task. Front. Psychol. 2011, 2.
45. Bornstein, R.F. Toward a Process-Focused Model of Test Score Validity: Improving Psychological Assessment in Science and Practice. Psychol. Assess. 2011, 23, 532–544.
46. Borsboom, D.; Mellenbergh, G.J.; Van Heerden, J. The Concept of Validity. Psychol. Rev. 2004, 111, 1061–1071.

| 1 | An outlier analysis based on logarithmized RT yielded very similar results. |

| 2 | Mauchly’s sphericity test indicated a violation of the sphericity assumption for the variable task (χ²(2) = 61.158, p < .001) and for the interaction of practice and task (χ²(2) = 50.237, p < .001). Therefore, the Greenhouse–Geisser correction was used to adjust degrees of freedom for the main effect of task (ε = 0.670) and for the interaction of practice and task (ε = 0.700). |

| 3 | Additional analyses revealed that practice effects within sessions were less systematic than practice effects between sessions, which is most likely due to the role of memory consolidation for practice effects [39]. |

| Tasks (Sub-Components) | 1st Administration: M (SD) | 2nd Administration: M (SD) | Practice Effect: T | Practice Effect: p | Practice Effect: M (SD) | Practice Effect: dz | Reliability: rtt |
|---|---|---|---|---|---|---|---|
| Inspection Time Task (perceptual speed) | 53.73 (27.76) | 47.35 (31.83) | 3.14 | <.01 | 6.38 (26.00) | 0.32 | .58 |
| Simple Reaction Time Task (motor speed) | 239.60 (26.27) | 227.43 (26.15) | 5.01 | <.001 | 12.17 (24.30) | 0.50 | .57 |
| Simple modified d2 (mental operation speed) | 634.00 (95.54) | 536.95 (72.52) | 16.88 | <.001 | 97.05 (61.28) | 1.71 | .68 |

© 2019 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Blotenberg, I.; Schmidt-Atzert, L. On the Locus of the Practice Effect in Sustained Attention Tests. J. Intell. 2019, 7, 12. https://0-doi-org.brum.beds.ac.uk/10.3390/jintelligence7020012