Facial Imitation Improves Emotion Recognition in Adults with Different Levels of Sub-Clinical Autistic Traits

Abstract

1. Introduction

1.1. Role of Imitation

1.2. Autism Spectrum Conditions

1.3. Assessment of Imitation and Scope of the Study

2. Materials and Methods

2.1. Participants

2.2. Measures

2.2.1. Autism Spectrum Quotient

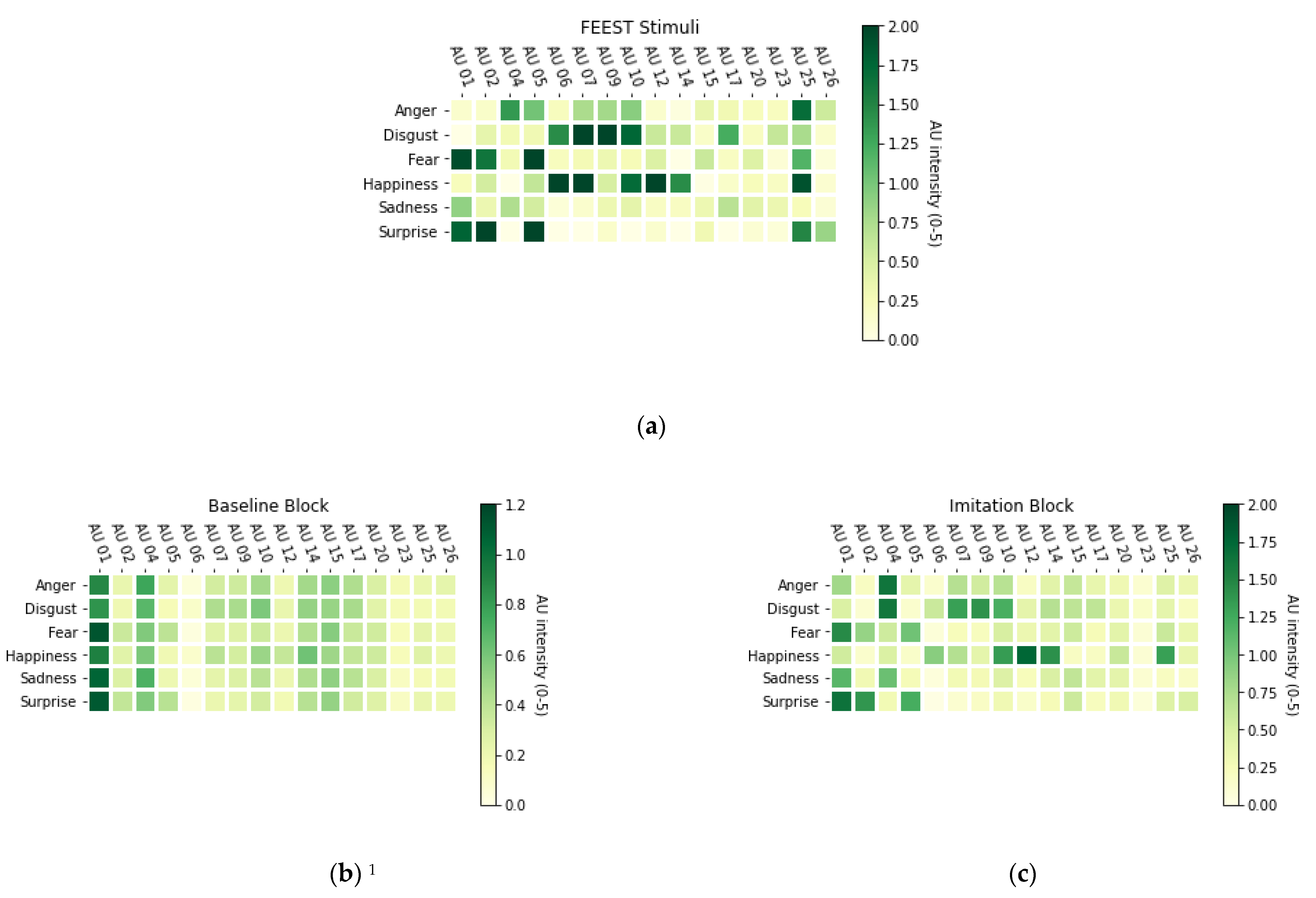

2.2.2. Stimuli

2.3. Research Design

2.4. Procedure

2.5. Data Preprocessing and Analysis

3. Results

3.1. Descriptives

3.1.1. Autistic Traits

3.1.2. Baseline Block

3.1.3. Intervention Effects

3.2. Between-Subjects Effects

3.2.1. Repeated Measures ANCOVAs

3.2.2. Regression with AQ

3.3. Within-Subjects Effects

4. Discussion

4.1. Replication Aspects

4.2. New and Technical Aspects

4.3. Future Perspectives

Supplementary Materials

Author Contributions

Funding

Acknowledgments

Conflicts of Interest

| AU Number | Facial Action Code Name ¹ | Muscular Basis ¹ | Associated Emotional Expressions ² |
|---|---|---|---|
| 01 | Inner Brow Raiser | Frontalis, Pars medialis | Fear, Sadness, Surprise |
| 02 | Outer Brow Raiser | Frontalis, Pars lateralis | Surprise |
| 04 | Brow Lowerer | Depressor glabellae, Depressor supercilii, Corrugator | Fear, Sadness, Disgust, Anger |
| 05 | Upper Lid Raiser | Levator palpebrae superioris | Surprise, Fear |
| 06 | Cheek Raiser | Orbicularis oculi, Pars orbitalis | Happiness |
| 07 | Lid Tightener | Orbicularis oculi, Pars palpebralis | Anger, Disgust |
| 09 | Nose Wrinkler | Levator labii superioris alaeque nasi | Disgust |
| 10 | Upper Lip Raiser | Levator labii superioris, Caput infraorbitalis | Happiness |
| 12 | Lip Corner Puller | Zygomaticus major | Happiness |
| 14 | Dimpler | Buccinator | |
| 15 | Lip Corner Depressor | Depressor anguli oris (Triangularis) | Sadness |
| 17 | Chin Raiser | Mentalis | Anger, Sadness, Disgust |
| 20 | Lip Stretcher | Risorius with Platysma | Fear |
| 23 | Lip Tightener | Orbicularis oris | Anger |
| 25 | Lips Part | Depressor labii inferioris, or relaxation of Mentalis or Orbicularis oris | |
| 26 | Jaw Drop | Masseter; relaxed Temporalis and internal Pterygoid | Happiness |
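
The action unit table above can be read as a simple lookup structure. The following is a minimal sketch, assuming only the AU-to-emotion associations listed in the table; the names `AU_EMOTIONS` and `aus_for_emotion` are hypothetical and are not part of OpenFace or of the authors' analysis pipeline.

```python
# AU number -> emotional expressions associated with it in the table above.
# AUs 14 and 25 have no associated expression listed, so they map to empty sets.
AU_EMOTIONS = {
    1: {"Fear", "Sadness", "Surprise"},
    2: {"Surprise"},
    4: {"Fear", "Sadness", "Disgust", "Anger"},
    5: {"Surprise", "Fear"},
    6: {"Happiness"},
    7: {"Anger", "Disgust"},
    9: {"Disgust"},
    10: {"Happiness"},
    12: {"Happiness"},
    14: set(),
    15: {"Sadness"},
    17: {"Anger", "Sadness", "Disgust"},
    20: {"Fear"},
    23: {"Anger"},
    25: set(),
    26: {"Happiness"},
}


def aus_for_emotion(emotion):
    """Return the sorted AU numbers that the table associates with `emotion`."""
    return sorted(au for au, emotions in AU_EMOTIONS.items() if emotion in emotions)


print(aus_for_emotion("Happiness"))  # [6, 10, 12, 26]
print(aus_for_emotion("Surprise"))   # [1, 2, 5]
```

Inverting the table this way shows which AUs one would inspect for a given target expression. It says nothing about how AU intensities were weighted or aggregated in the study.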

| Measure | Baseline Block: M | Baseline Block: SD | Imitation Block: M | Imitation Block: SD | Change: M | Change: Cohen’s d ¹ | Change: 95% CI ² |
|---|---|---|---|---|---|---|---|
| FER accuracy ³ | 0.663 | 0.067 | 0.675 | 0.066 | 0.012 | 0.177 | [−0.158; 0.512] |
| Imitation ⁴ | 0.108 | 0.09 | 0.279 | 0.09 | 0.171 | 1.963 | [1.535; 2.337] |

| Effect | Model 1 | Model 2 | Model 3 |
|---|---|---|---|
| Fixed effects | | | |
| Intercept | 1.10 *** (0.16) | 1.04 *** (0.17) | 1.04 *** (0.16) |
| Level-1 | | | |
| Block (=1) | | 0.07 (0.04) | 0.06 (0.04) |
| Imitation | | −0.07 (0.11) | −0.05 (0.11) |
| Imitation × Block (=1) ² | | 0.40 ** (0.15) | 0.40 ** (0.15) |
| Level-2 | | | |
| AQ | | −0.01 (0.01) | −0.02 † (0.01) |
| Cross-level | | | |
| AQ × Imitation | | | −0.01 (0.01) |
| AQ × Block (=1) ² | | | 0.01 ** (0.01) |
| Random effects | | | |
| Variance component | | | |
| Level-1 (stimulus) | 3.318 *** (0.425) | 3.271 *** (0.419) | 3.232 *** (0.419) |
| Level-2 (participant) | 0.176 *** (0.038) | 0.177 *** (0.039) | 0.177 *** (0.039) |
| Goodness of fit | | | |
| Deviance ¹ | 78,927.384 | 77,992.939 | 77,999.512 |
| Δχ² | | 934.445 | 927.863 |
| Δdf | | 4 | 6 |
| p | | < 0.001 | < 0.001 |
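
The goodness-of-fit rows can be checked with a deviance difference test against Model 1. The sketch below assumes that Δχ² is the difference in deviance between Model 1 and the larger model, and that Δdf is the number of added parameters. The helper `chi2_sf_even_df` is a hypothetical name; it uses a closed form that holds only for even degrees of freedom, which covers the 4 and 6 reported here. For arbitrary df, `scipy.stats.chi2.sf` would be the usual choice.

```python
import math


def chi2_sf_even_df(x, df):
    """Upper-tail probability P(X > x) for a chi-square variable.

    Closed form, valid only for positive even df:
    exp(-x/2) * sum_{i=0}^{df/2 - 1} (x/2)^i / i!
    """
    if df <= 0 or df % 2 != 0:
        raise ValueError("closed form requires a positive even df")
    half = x / 2.0
    term, total = 1.0, 1.0
    for i in range(1, df // 2):
        term *= half / i  # builds (x/2)^i / i! incrementally
        total += term
    return math.exp(-half) * total


# Deviances and added parameters as reported in the table.
deviance = {"Model 1": 78927.384, "Model 2": 77992.939, "Model 3": 77999.512}
delta_df = {"Model 2": 4, "Model 3": 6}

results = {}
for name, df in delta_df.items():
    delta_chi2 = deviance["Model 1"] - deviance[name]
    results[name] = (round(delta_chi2, 3), chi2_sf_even_df(delta_chi2, df))

for name, (delta_chi2, p) in results.items():
    print(f"{name}: delta chi2 = {delta_chi2}, p < 0.001: {p < 0.001}")
```

Recomputing from the rounded deviances reproduces 934.445 for Model 2 and gives 927.872 for Model 3, close to the reported 927.863; the small gap may reflect rounding in the published values. Both p-values are far below 0.001.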

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Share and Cite

Kowallik, A.E.; Pohl, M.; Schweinberger, S.R. Facial Imitation Improves Emotion Recognition in Adults with Different Levels of Sub-Clinical Autistic Traits. J. Intell. 2021, 9, 4. https://0-doi-org.brum.beds.ac.uk/10.3390/jintelligence9010004
