Bridging Brain and Cognition: A Multilayer Network Analysis of Brain Structural Covariance and General Intelligence in a Developmental Sample of Struggling Learners

Abstract

1. Introduction

2. Materials and Methods

2.1. Participants

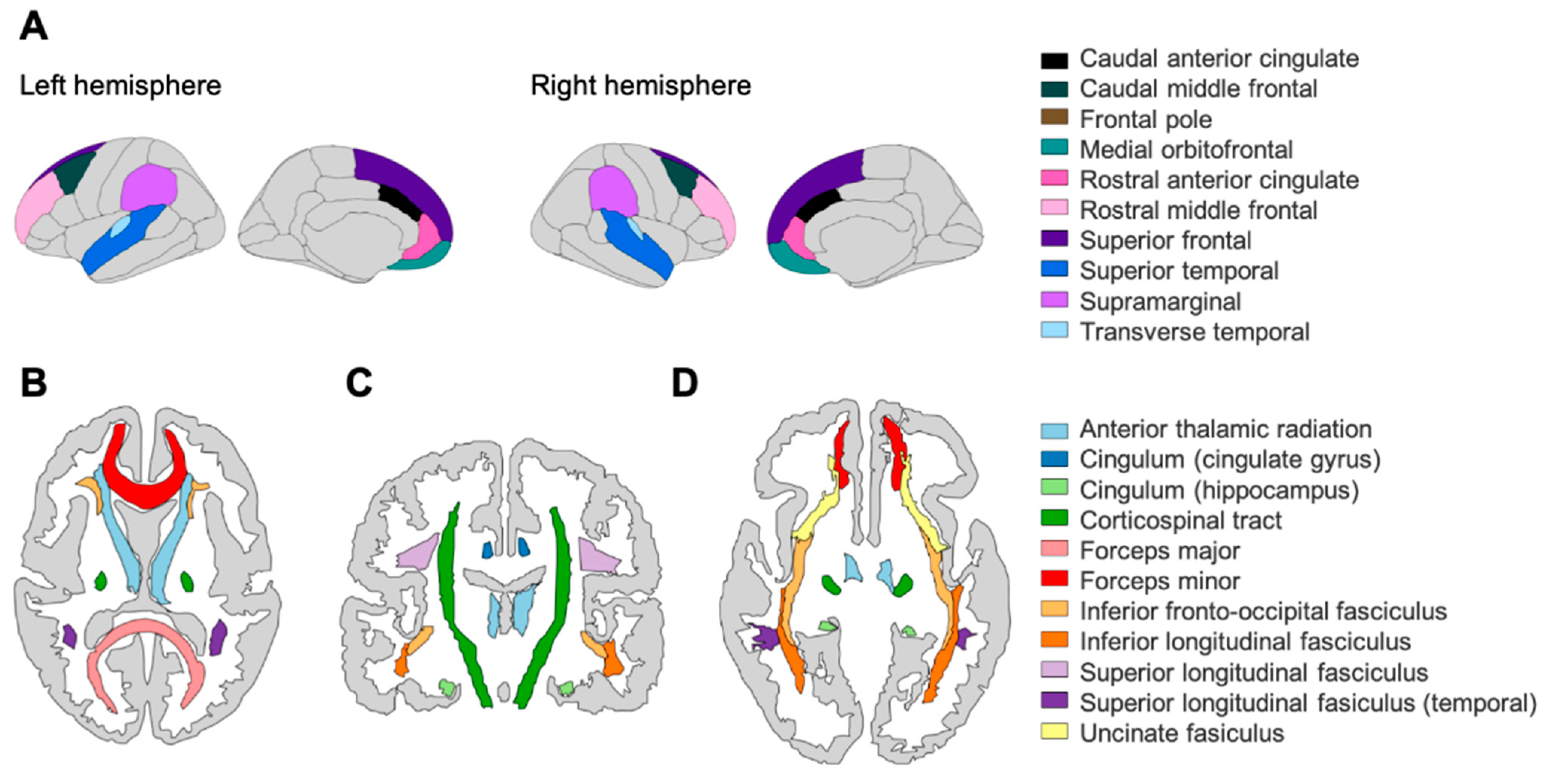

2.2. Structural Neuroimaging: Cortical Volume (CV) and Fractional Anisotropy (FA)

2.3. Network Estimation Methods

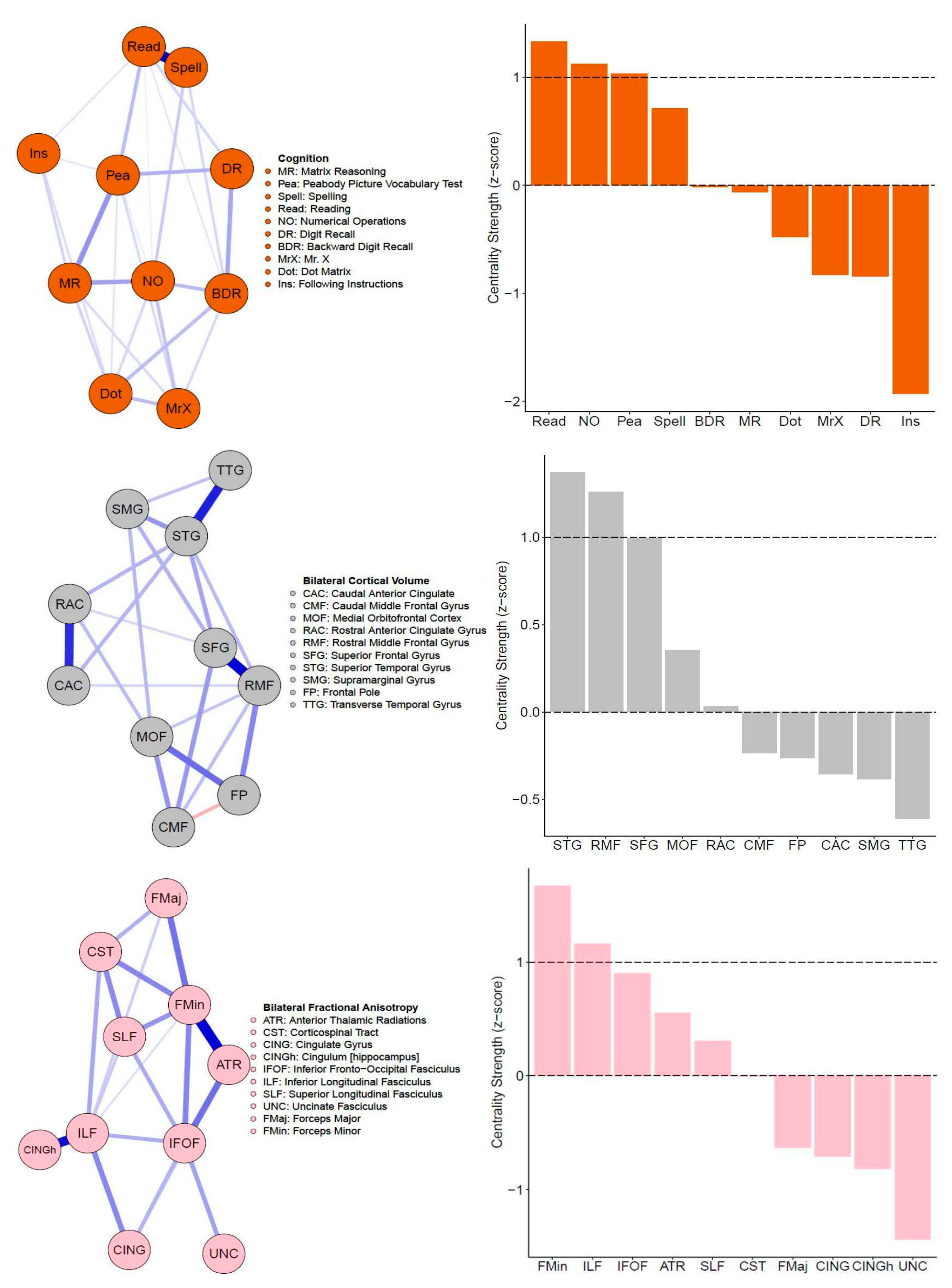

2.4. Node Strength Centrality (Single-Layer Networks)
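Node strength is commonly defined for a weighted, undirected network as the sum of the absolute weights of all edges attached to a node. The sketch below illustrates only that definition. It assumes the network is stored as a symmetric matrix of edge weights (for example, regularized partial correlations) with zeros on the diagonal. The function name and example matrix are hypothetical and are not the study's code or data.

```python
from typing import List


def node_strength(weights: List[List[float]]) -> List[float]:
    """Return the sum of absolute edge weights for each node.

    Assumes `weights` is a symmetric adjacency matrix of a weighted,
    undirected network (e.g. partial correlations) with a zero diagonal.
    """
    n = len(weights)
    return [
        # Absolute values, so negative edges also contribute to strength
        sum(abs(weights[i][j]) for j in range(n) if j != i)
        for i in range(n)
    ]


# Hypothetical 3-node network; illustrative values only, not study data
W = [
    [0.0, 0.3, -0.2],
    [0.3, 0.0, 0.1],
    [-0.2, 0.1, 0.0],
]
print(node_strength(W))
```

Taking absolute values treats a strong negative partial correlation as just as "central" as a strong positive one. This is a common convention in psychometric network analysis, but it is a modeling choice.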

2.5. Community Detection and Bridge Strength Centrality (Multilayer Networks)
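Bridge strength, as described by Jones et al. (2019), is the sum of the absolute weights of the edges linking a node to nodes in *other* communities. A minimal sketch of that definition follows. It assumes community labels are already known (in practice they would come from a community detection step). It also assumes the same symmetric, zero-diagonal weight matrix as a plain node strength computation. The function, labels and values are hypothetical.

```python
from typing import List, Sequence


def bridge_strength(weights: List[List[float]], communities: Sequence[str]) -> List[float]:
    """Sum of absolute edge weights from each node to nodes outside its own community.

    Assumes `weights` is symmetric with a zero diagonal and that
    `communities[i]` gives the (pre-computed) community label of node i.
    """
    n = len(weights)
    return [
        sum(
            abs(weights[i][j])
            for j in range(n)
            # Only edges that cross a community boundary count
            if j != i and communities[j] != communities[i]
        )
        for i in range(n)
    ]


# Hypothetical two-community network: two cognitive nodes and two brain nodes
W = [
    [0.0, 0.4, 0.1, 0.0],
    [0.4, 0.0, 0.0, -0.2],
    [0.1, 0.0, 0.0, 0.5],
    [0.0, -0.2, 0.5, 0.0],
]
labels = ["cog", "cog", "brain", "brain"]
print(bridge_strength(W, labels))
```

Within-community edges (here 0.4 and 0.5) are ignored, so a node can have high overall strength but low bridge strength.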

2.6. Node Centrality Stability (Single and Multilayer Networks)

3. Results

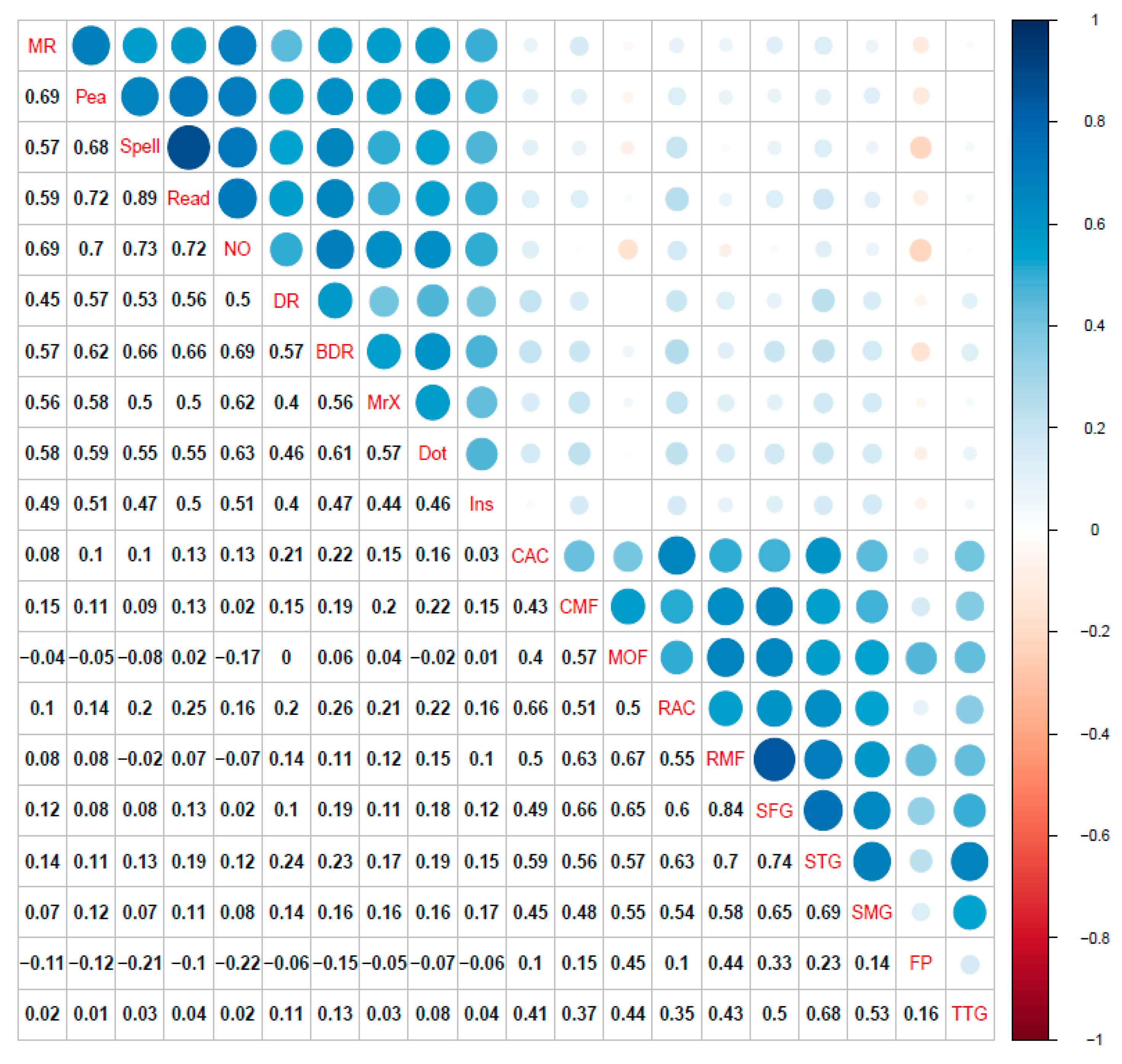

3.1. Single-Layer Network Models (Cognitive, Cortical Volume, and Fractional Anisotropy)

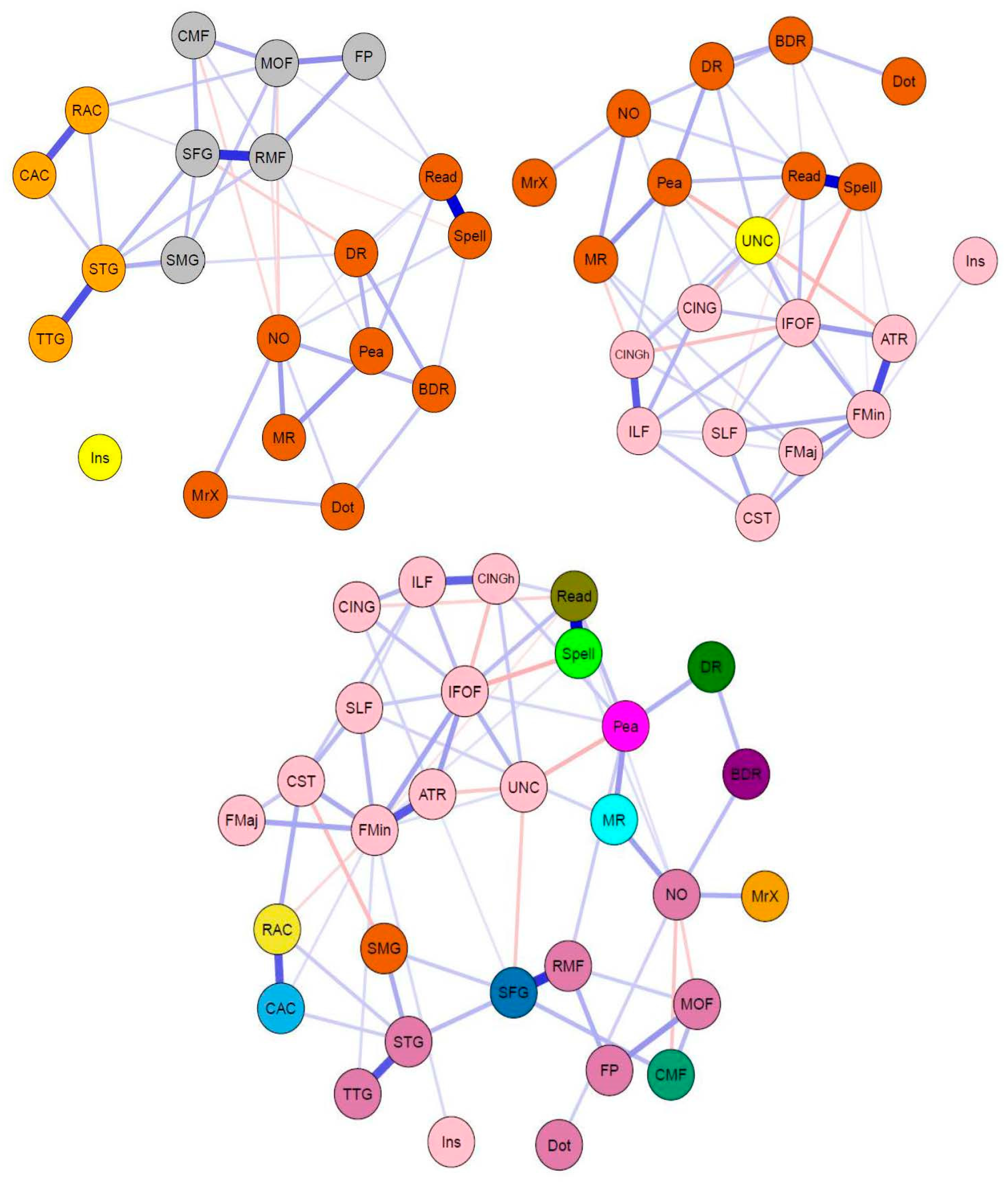

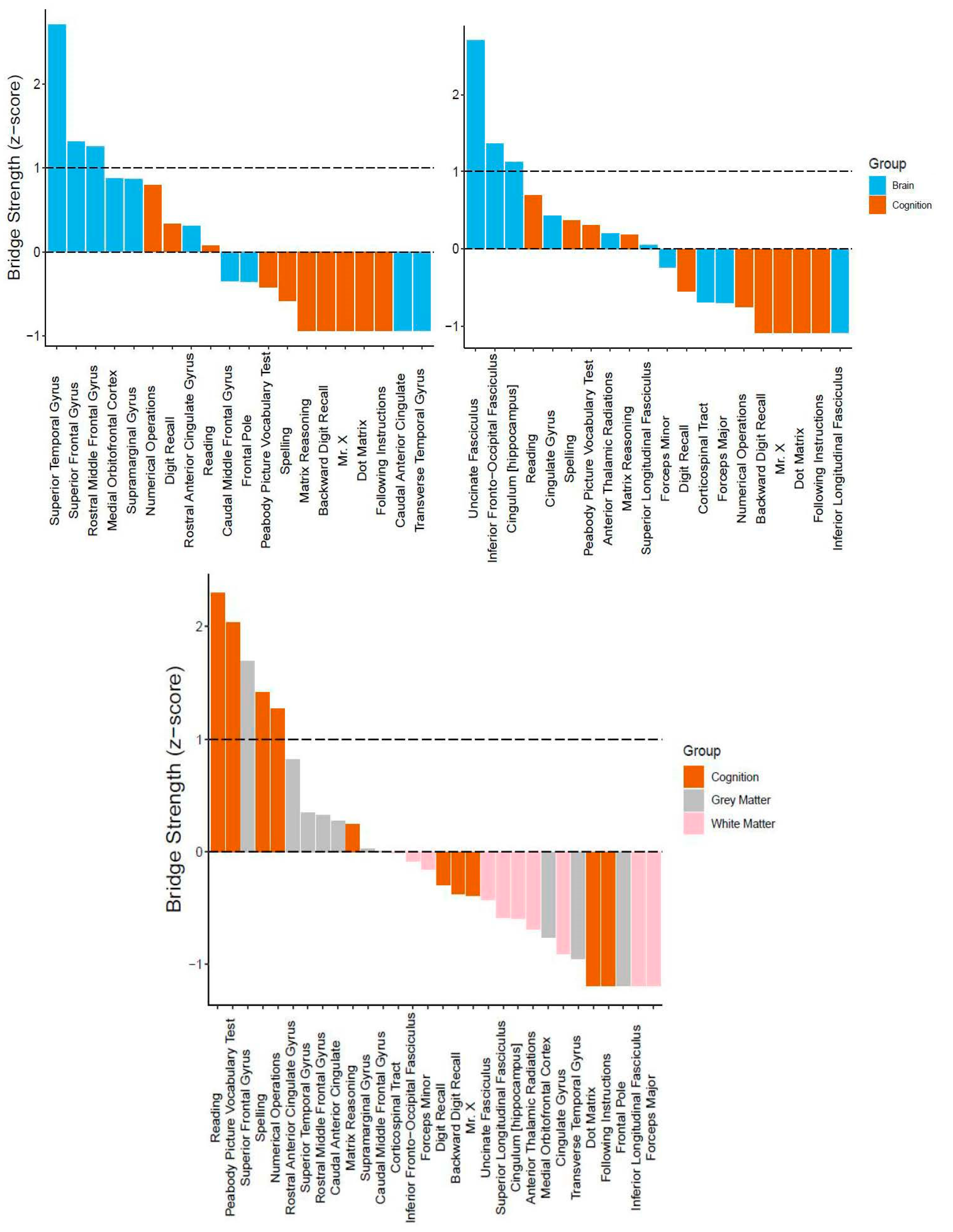

3.2. Bridging the Gap: Multilayer Networks

4. Discussion

4.1. Summary of Main Findings

4.2. Interpretation of Network Models and Community Detection Analyses

4.3. Limitations of the Current Study

4.4. Future Directions toward Theory Building in Cognitive Neuroscience

Supplementary Materials

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

- Akarca, Danyal, Petra E. Vértes, Edward T. Bullmore, the CALM Team, and Duncan E. Astle. 2020. A Generative Network Model of Neurodevelopment. BioRxiv.

- Alexander-Bloch, Aaron, Jay N. Giedd, and Ed Bullmore. 2013. Imaging Structural Co-Variance between Human Brain Regions. Nature Reviews Neuroscience 14: 322–36.

- Alloway, Tracy P. 2007. Automated Working Memory Assessment (AWMA). London: Harcourt Assessment.

- Amestoy, Patrick R., Adelchi Azzalini, Tamas Badics, Gregory Benison, Adrian Bowman, Walter Böhm, Keith Briggs, Jeroen Bruggeman, Juergen Buchmueller, Carter T. Butts, and et al. 2020. Igraph: Network Analysis and Visualization (Version 1.2.6). Available online: https://CRAN.R-project.org/package=igraph (accessed on 11 June 2021).

- Avants, Brian B., Charles L. Epstein, Murray Grossman, and James C. Gee. 2008. Symmetric Diffeomorphic Image Registration with Cross-Correlation: Evaluating Automated Labeling of Elderly and Neurodegenerative Brain. Medical Image Analysis 12: 26–41.

- Barabási, Albert-László. 2016. Network Science. Cambridge: Cambridge University Press, Available online: http://networksciencebook.com/ (accessed on 11 June 2021).

- Barbey, Aron K. 2018. Network Neuroscience Theory of Human Intelligence. Trends in Cognitive Sciences 22: 8–20.

- Bassett, Danielle Smith, and Ed Bullmore. 2006. Small-World Brain Networks. The Neuroscientist 12: 512–23.

- Bassett, Danielle S., and Edward T. Bullmore. 2017. Small-World Brain Networks Revisited. The Neuroscientist 23: 499–516.

- Bassett, Danielle S., and Olaf Sporns. 2017. Network Neuroscience. Nature Neuroscience 20: 353–64.

- Basten, Ulrike, Kirsten Hilger, and Christian J. Fiebach. 2015. Where Smart Brains Are Different: A Quantitative Meta-Analysis of Functional and Structural Brain Imaging Studies on Intelligence. Intelligence 51: 10–27.

- Bathelt, Joe, Hilde M. Geurts, and Denny Borsboom. 2020. More than the Sum of Its Parts: Merging Network Psychometrics and Network Neuroscience with Application in Autism. BioRxiv.

- Bertolero, Max A., B. T. Thomas Yeo, and Mark D’Esposito. 2017. The Diverse Club. Nature Communications 8: 1277.

- Bertolero, Maxwell A., B. T. Thomas Yeo, Danielle S. Bassett, and Mark D’Esposito. 2018. A Mechanistic Model of Connector Hubs, Modularity and Cognition. Nature Human Behaviour 2: 765–77.

- Bianconi, Ginestra. 2018. Multilayer Networks: Structure and Function. Oxford: Oxford University Press.

- Borsboom, Denny. 2017. A Network Theory of Mental Disorders. World Psychiatry 16: 5–13.

- Bullmore, Ed, and Olaf Sporns. 2012. The Economy of Brain Network Organization. Nature Reviews Neuroscience 13: 336–49.

- Calvin, Catherine M., Ian J. Deary, Candida Fenton, Beverly A. Roberts, Geoff Der, Nicola Leckenby, and G. David Batty. 2011. Intelligence in Youth and All-Cause-Mortality: Systematic Review with Meta-Analysis. International Journal of Epidemiology 40: 626–44.

- Casey, B. J., Tariq Cannonier, May I. Conley, Alexandra O. Cohen, Deanna M. Barch, Mary M. Heitzeg, Mary E. Soules, Theresa Teslovich, Danielle V. Dellarco, Hugh Garavan, and et al. 2018. The Adolescent Brain Cognitive Development (ABCD) Study: Imaging Acquisition across 21 Sites. Developmental Cognitive Neuroscience 32: 43–54.

- Cattell, Raymond B. 1971. Abilities: Their Structure, Growth, and Action. Oxford: Houghton Mifflin.

- Dablander, Fabian, and Max Hinne. 2019. Node Centrality Measures Are a Poor Substitute for Causal Inference. Scientific Reports 9: 1–13.

- Dale, Anders M., Bruce Fischl, and Martin I. Sereno. 1999. Cortical Surface-Based Analysis: I. Segmentation and Surface Reconstruction. NeuroImage 9: 179–94.

- de Mooij, Susanne M. M., Richard N. A. Henson, Lourens J. Waldorp, and Rogier A. Kievit. 2018. Age Differentiation within Gray Matter, White Matter, and between Memory and White Matter in an Adult Life Span Cohort. The Journal of Neuroscience 38: 5826–36.

- Desikan, Rahul S., Florent Ségonne, Bruce Fischl, Brian T. Quinn, Bradford C. Dickerson, Deborah Blacker, Randy L. Buckner, Anders M. Dale, R. Paul Maguire, Bradley T. Hyman, and et al. 2006. An Automated Labeling System for Subdividing the Human Cerebral Cortex on MRI Scans into Gyral Based Regions of Interest. NeuroImage 31: 968–80.

- Dunn, Lloyd M., and Douglas M. Dunn. 2007. PPVT-4: Peabody Picture Vocabulary Test. London and San Antonio: Pearson Assessments.

- Epskamp, Sacha, and Eiko I. Fried. 2018. A Tutorial on Regularized Partial Correlation Networks. Psychological Methods 23: 617–34.

- Epskamp, Sacha, and Eiko I. Fried. 2020. Bootnet: Bootstrap Methods for Various Network Estimation Routines (Version 1.4.3). Available online: https://CRAN.R-project.org/package=bootnet (accessed on 11 June 2021).

- Epskamp, Sacha, Denny Borsboom, and Eiko I. Fried. 2018. Estimating Psychological Networks and Their Accuracy: A Tutorial Paper. Behavior Research Methods 50: 195–212.

- Epskamp, Sacha, Giulio Costantini, Jonas Haslbeck, Adela Isvoranu, Angelique O. J. Cramer, Lourens J. Waldorp, Verena D. Schmittmann, and Denny Borsboom. 2020. Qgraph: Graph Plotting Methods, Psychometric Data Visualization and Graphical Model Estimation (Version 1.6.5). Available online: https://CRAN.R-project.org/package=qgraph (accessed on 11 June 2021).

- Fischl, Bruce, and Anders M. Dale. 2000. Measuring the Thickness of the Human Cerebral Cortex from Magnetic Resonance Images. Proceedings of the National Academy of Sciences 97: 11050–55.

- Fischl, Bruce, Martin I. Sereno, and Anders M. Dale. 1999. Cortical Surface-Based Analysis. II: Inflation, Flattening, and a Surface-Based Coordinate System. NeuroImage 9: 195–207.

- Fischl, Bruce, David H. Salat, Evelina Busa, Marilyn Albert, Megan Dieterich, Christian Haselgrove, and Andre van der Kouwe. 2002. Whole Brain Segmentation: Automated Labeling of Neuroanatomical Structures in the Human Brain. Neuron 33: 341–55.

- Fornito, Alex, and Edward T. Bullmore. 2012. Connectomic Intermediate Phenotypes for Psychiatric Disorders. Frontiers in Psychiatry 3: 1–15.

- Fornito, Alex, Andrew Zalesky, and Edward Bullmore. 2016. Fundamentals of Brain Network Analysis. London, San Diego, Cambridge, and Oxford: Academic Press.

- Fried, Eiko I. 2020. Lack of Theory Building and Testing Impedes Progress in The Factor and Network Literature. Psychological Inquiry 31: 271–88.

- Fried, Eiko I., and Angélique O. J. Cramer. 2017. Moving Forward: Challenges and Directions for Psychopathological Network Theory and Methodology. Perspectives on Psychological Science 12: 999–1020.

- Fried, Eiko I., and Donald J. Robinaugh. 2020. Systems All the Way down: Embracing Complexity in Mental Health Research. BMC Medicine 18: 205.

- Friedman, Jerome, Trevor Hastie, and Robert Tibshirani. 2008. Sparse Inverse Covariance Estimation with the Graphical Lasso. Biostatistics 9: 432–41.

- Gates, Kathleen M., Teague Henry, Doug Steinley, and Damien A. Fair. 2016. A Monte Carlo Evaluation of Weighted Community Detection Algorithms. Frontiers in Neuroinformatics 10: 45.

- Gathercole, Susan E., Elisabet Service, Graham J. Hitch, Anne-Marie Adams, and Amanda J. Martin. 1999. Phonological Short-Term Memory and Vocabulary Development: Further Evidence on the Nature of the Relationship. Applied Cognitive Psychology 13: 65–77.

- Gathercole, Susan E., Emily Durling, Matthew Evans, Sarah Jeffcock, and Sarah Stone. 2008. Working Memory Abilities and Children’s Performance in Laboratory Analogues of Classroom Activities. Applied Cognitive Psychology 22: 1019–37.

- Girn, Manesh, Caitlin Mills, and Kalina Christoff. 2019. Linking Brain Network Reconfiguration and Intelligence: Are We There Yet? Trends in Neuroscience and Education 15: 62–70.

- Graham, Mark S., Ivana Drobnjak, and Hui Zhang. 2016. Realistic Simulation of Artefacts in Diffusion MRI for Validating Post-Processing Correction Techniques. NeuroImage 125: 1079–94.

- Gu, Shi, Fabio Pasqualetti, Matthew Cieslak, Qawi K. Telesford, Alfred B. Yu, Ari E. Kahn, John D. Medaglia, Jean M. Vettel, Michael B. Miller, Scott T. Grafton, and et al. 2015. Controllability of Structural Brain Networks. Nature Communications 6: 1–10.

- Hegelund, Emilie Rune, Trine Flensborg-Madsen, Jesper Dammeyer, and Erik Lykke Mortensen. 2018. Low IQ as a Predictor of Unsuccessful Educational and Occupational Achievement: A Register-Based Study of 1,098,742 Men in Denmark 1968–2016. Intelligence 71: 46–53.

- Henry, Teague R., Donald Robinaugh, and Eiko I. Fried. 2020. On the Control of Psychological Networks. PsyArXiv.

- Hilland, Eva, Nils I. Landrø, Brage Kraft, Christian K. Tamnes, Eiko I. Fried, Luigi A. Maglanoc, and Rune Jonassen. 2020. Exploring the Links between Specific Depression Symptoms and Brain Structure: A Network Study. Psychiatry and Clinical Neurosciences 74: 220–21.

- Holmes, Joni, Annie Bryant, Susan Elizabeth Gathercole, and the CALM Team. 2019. Protocol for a Transdiagnostic Study of Children with Problems of Attention, Learning and Memory (CALM). BMC Pediatrics 19: 1–11.

- Hua, Kegang, Jiangyang Zhang, Setsu Wakana, Hangyi Jiang, Xin Li, Daniel S. Reich, Peter A. Calabresi, James J. Pekar, Peter C.M. van Zijl, and Susumu Mori. 2008. Tract Probability Maps in Stereotaxic Spaces: Analyses of White Matter Anatomy and Tract-Specific Quantification. NeuroImage 39: 336–47.

- Jones, Payton. 2020. Networktools: Tools for Identifying Important Nodes in Networks (Version 1.2.3). Available online: https://CRAN.R-project.org/package=networktools (accessed on 11 June 2021).

- Jones, Payton J., Ruofan Ma, and Richard J. McNally. 2019. Bridge Centrality: A Network Approach to Understanding Comorbidity. Multivariate Behavioral Research, 1–15.

- Jung, Rex E., and Richard J. Haier. 2007. The Parieto-Frontal Integration Theory (P-FIT) of Intelligence: Converging Neuroimaging Evidence. Behavioral and Brain Sciences 30: 135.

- Kan, Kees-Jan, Han L. J. van der Maas, and Stephen Z. Levine. 2019. Extending Psychometric Network Analysis: Empirical Evidence against g in Favor of Mutualism? Intelligence 73: 52–62.

- Khundrakpam, Budhachandra S., John D. Lewis, Andrew Reid, Sherif Karama, Lu Zhao, Francois Chouinard-Decorte, and Alan C. Evans. 2017. Imaging Structural Covariance in the Development of Intelligence. NeuroImage 144: 227–40.

- Kievit, Rogier A., and Ivan L. Simpson-Kent. 2021. It’s About Time: Towards a Longitudinal Cognitive Neuroscience of Intelligence. In The Cambridge Handbook of Intelligence and Cognitive Neuroscience. Cambridge: Cambridge University Press.

- Kievit, Rogier A., Simon W. Davis, John Griffiths, Marta M. Correia, Cam-CAN, and Richard N. Henson. 2016. A Watershed Model of Individual Differences in Fluid Intelligence. Neuropsychologia 91: 186–98.

- Kievit, Rogier A., Ulman Lindenberger, Ian M. Goodyer, Peter B. Jones, Peter Fonagy, Edward T. Bullmore, and Raymond J. Dolan. 2017. Mutualistic Coupling Between Vocabulary and Reasoning Supports Cognitive Development During Late Adolescence and Early Adulthood. Psychological Science 28: 1419–31.

- Kievit, Rogier A., Delia Fuhrmann, Gesa Sophia Borgeest, Ivan L. Simpson-Kent, and Richard N. A. Henson. 2018. The Neural Determinants of Age-Related Changes in Fluid Intelligence: A Pre-Registered, Longitudinal Analysis in UK Biobank. Wellcome Open Research 3: 38.

- Kievit, Rogier A., Abe D. Hofman, and Kate Nation. 2019. Mutualistic Coupling between Vocabulary and Reasoning in Young Children: A Replication and Extension of the Study by Kievit et al. (2017). Psychological Science 30: 1245–52.

- Kline, Rex B. 2015. Principles and Practice of Structural Equation Modeling, 4th ed. New York: Guilford Press.

- Krogsrud, Stine K., Anders M. Fjell, Christian K. Tamnes, Håkon Grydeland, Paulina Due-Tønnessen, Atle Bjørnerud, Cassandra Sampaio-Baptista, Jesper Andersson, Heidi Johansen-Berg, and Kristine B. Walhovd. 2018. Development of White Matter Microstructure in Relation to Verbal and Visuospatial Working Memory—A Longitudinal Study. PLoS ONE 13: e0195540.

- Levenstein, Daniel, Veronica A. Alvarez, Asohan Amarasingham, Habiba Azab, Richard C. Gerkin, Andrea Hasenstaub, and Ramakrishnan Iyer. 2020. On the Role of Theory and Modeling in Neuroscience. arXiv arXiv:2003.13825. Available online: http://arxiv.org/abs/2003.13825 (accessed on 11 June 2021).

- Levine, Stephen Z., and Stefan Leucht. 2016. Identifying a System of Predominant Negative Symptoms: Network Analysis of Three Randomized Clinical Trials. Schizophrenia Research 178: 17–22.

- Mareva, Silvana, and Joni Holmes. 2020. Network Models of Learning and Cognition in Typical and Atypical Learners. September. OSF Preprints.

- Marr, David, and Tomaso Poggio. 1976. From Understanding Computation to Understanding Neural Circuitry. May. Available online: https://dspace.mit.edu/handle/1721.1/5782 (accessed on 11 June 2021).

- Meunier, David, Renaud Lambiotte, and Edward T. Bullmore. 2010. Modular and Hierarchically Modular Organization of Brain Networks. Frontiers in Neuroscience 4: 200.

- Navas-Sánchez, Francisco J., Yasser Alemán-Gómez, Javier Sánchez-Gonzalez, Juan A. Guzmán-De-Villoria, Carolina Franco, Olalla Robles, Celso Arango, and Manuel Desco. 2014. White Matter Microstructure Correlates of Mathematical Giftedness and Intelligence Quotient: White Matter Microstructure. Human Brain Mapping 35: 2619–31.

- Newman, Mark E. J. 2006. Modularity and Community Structure in Networks. Proceedings of the National Academy of Sciences 103: 8577–82.

- Nooner, Kate Brody, Stanley J. Colcombe, Russell H. Tobe, Maarten Mennes, Melissa M. Benedict, Alexis L. Moreno, and Laura J. Panek. 2012. The NKI-Rockland Sample: A Model for Accelerating the Pace of Discovery Science in Psychiatry. Frontiers in Neuroscience 6: 152.

- Peng, Peng, Marcia Barnes, CuiCui Wang, Wei Wang, Shan Li, H. Lee Swanson, William Dardick, and Sha Tao. 2018. A Meta-Analysis on the Relation between Reading and Working Memory. Psychological Bulletin 144: 48–76.

- Poldrack, Russell A., Chris I. Baker, Joke Durnez, Krzysztof J. Gorgolewski, Paul M. Matthews, Marcus R. Munafò, Thomas E. Nichols, Jean-Baptiste Poline, Edward Vul, and Tal Yarkoni. 2017. Scanning the Horizon: Towards Transparent and Reproducible Neuroimaging Research. Nature Reviews Neuroscience 18: 115–26.

- Pons, Pascal, and Matthieu Latapy. 2005. Computing Communities in Large Networks Using Random Walks. In Computer and Information Sciences—ISCIS 2005. Edited by Pinar Yolum, Tunga Güngör, Fikret Gürgen and Can Özturan. Lecture Notes in Computer Science. Berlin/Heidelberg: Springer, pp. 284–93.

- R Core Team. 2020. R: A Language and Environment for Statistical Computing. Vienna: R Foundation for Statistical Computing, Available online: https://www.R-project.org (accessed on 11 June 2021).

- Robinaugh, Donald J., Ria H. A. Hoekstra, Emma R. Toner, and Denny Borsboom. 2019. The Network Approach to Psychopathology: A Review of the Literature 2008–2018 and an Agenda for Future Research. Psychological Medicine 50: 353–66.

- Rohrer, Julia M. 2018. Thinking Clearly About Correlations and Causation: Graphical Causal Models for Observational Data. Advances in Methods and Practices in Psychological Science 1: 27–42.

- Schmank, Christopher J., Sara Anne Goring, Kristof Kovacs, and Andrew R. A. Conway. 2019. Psychometric Network Analysis of the Hungarian WAIS. Journal of Intelligence 7: 21.

- Schmank, Christopher J., Sara Anne Goring, Kristof Kovacs, and Andrew R. A. Conway. 2021. Investigating the Structure of Intelligence Using Latent Variable and Psychometric Network Modeling: A Commentary and Reanalysis. Journal of Intelligence 9: 8.

- Schmiedek, Florian, Martin Lövdén, Timo von Oertzen, and Ulman Lindenberger. 2020. Within-Person Structures of Daily Cognitive Performance Differ from between-Person Structures of Cognitive Abilities. PeerJ 8: e9290.

- Seidlitz, Jakob, František Váša, Maxwell Shinn, Rafael Romero-Garcia, Kirstie J. Whitaker, Petra E. Vértes, Konrad Wagstyl, Paul Kirkpatrick Reardon, Liv Clasen, Siyuan Liu, and et al. 2018. Morphometric Similarity Networks Detect Microscale Cortical Organization and Predict Inter-Individual Cognitive Variation. Neuron 97: 231–47.

- Simpson-Kent, Ivan L., Delia Fuhrmann, Joe Bathelt, Jascha Achterberg, Gesa Sophia Borgeest, and Rogier A. Kievit. 2020. Neurocognitive Reorganization between Crystallized Intelligence, Fluid Intelligence and White Matter Microstructure in Two Age-Heterogeneous Developmental Cohorts. Developmental Cognitive Neuroscience 41: 100743.

- Smith, Stephen M. 2002. Fast Robust Automated Brain Extraction. Human Brain Mapping 17: 143–55.

- Solé-Casals, Jordi, Josep M. Serra-Grabulosa, Rafael Romero-Garcia, Gemma Vilaseca, Ana Adan, Núria Vilaró, Núria Bargalló, and Edward T. Bullmore. 2019. Structural Brain Network of Gifted Children Has a More Integrated and Versatile Topology. Brain Structure and Function 224: 2373–83.

- Spearman, Charles. 1904. ‘General Intelligence’, Objectively Determined and Measured. The American Journal of Psychology 15: 201–92.

- Sporns, Olaf, and Richard F. Betzel. 2016. Modular Brain Networks. Annual Review of Psychology 67: 613–40.

- Sporns, Olaf, Christopher J. Honey, and Rolf Kötter. 2007. Identification and Classification of Hubs in Brain Networks. PLoS ONE 2: e1049.

- van Bork, Riet, Mijke Rhemtulla, Lourens J. Waldorp, Joost Kruis, Shirin Rezvanifar, and Denny Borsboom. 2019. Latent Variable Models and Networks: Statistical Equivalence and Testability. Multivariate Behavioral Research, 1–24.

- van den Heuvel, Martijn P., and Olaf Sporns. 2011. Rich-Club Organization of the Human Connectome. Journal of Neuroscience 31: 15775–86.

- van den Heuvel, Martijn P., and Olaf Sporns. 2013. Network Hubs in the Human Brain. Trends in Cognitive Sciences 17: 683–96.

- van der Maas, Han L. J., Conor V. Dolan, Raoul P. P. P. Grasman, Jelte M. Wicherts, Hilde M. Huizenga, and Maartje E. J. Raijmakers. 2006. A Dynamical Model of General Intelligence: The Positive Manifold of Intelligence by Mutualism. Psychological Review 113: 842–61.

- van der Maas, Han L. J., Kees-Jan Kan, Maarten Marsman, and Claire E. Stevenson. 2017. Network Models for Cognitive Development and Intelligence. Journal of Intelligence 5: 16.

- Wandell, Brian A. 2016. Clarifying Human White Matter. Annual Review of Neuroscience 39: 103–28.

- Wechsler, David. 2005. Wechsler Individual Achievement Test, 2nd ed. London: Pearson.

- Wechsler, David. 2008. Wechsler Adult Intelligence Scale, 4th ed. San Antonio: The Psychological Corporation.

- Wechsler, David. 2011. Wechsler Abbreviated Scales of Intelligence, 2nd ed. London: Pearson.

- Zhang, Shuai, and R. Malatesha Joshi. 2020. Longitudinal Relations between Verbal Working Memory and Reading in Students from Diverse Linguistic Backgrounds. Journal of Experimental Child Psychology 190: 104727.

| Cognitive Domain | Task Descriptions | Mean (SD) [Range] | Missing Data | Reference |
|---|---|---|---|---|
| Crystallized Ability (gc) | Numerical Operations (NO): Participants answered written mathematical problems that increased in difficulty. | 16.1 (8.4) [0, 64] | 9.94% | Wechsler (2005) |
| | Single-Word Reading (Read): Participants read aloud first a list of letters and then a list of words of gradually increasing complexity. Responses were scored as correct only if they were both accurate and fluent. | 83.2 (24.8) [1, 130] | 2.48% | |
| | Spelling (Spell): Participants spelled words of increasing difficulty, spoken one at a time by an examiner. | 22 (9.2) [0, 49] | 3.35% | |
| | Peabody Picture Vocabulary Test (Pea): Participants chose the picture (out of four multiple-choice options) showing the meaning of a word spoken by an examiner. | 136.8 (31.6) [8, 215] | 1.12% | Dunn and Dunn (2007) |
| Fluid Ability (gf) | Matrix Reasoning (MR): Participants saw a series of incomplete matrices and, for each, selected the response option that best completed it. | 11.2 (5.6) [0, 28] | 0.12% | Wechsler (2011) |
| Working Memory (WM) | Digit Recall (DR): Participants recalled sequences of single-digit numbers presented aurally. | 24.6 (5.4) [7, 47] | 0.5% | Alloway (2007) |
| | Backward Digit Recall (BDR): As in Digit Recall, but participants recalled each sequence in reverse order. | 9.7 (4.4) [0, 25] | 3.11% | |
| | Dot Matrix (Dot): Participants were shown the location of a red dot in a sequence of 4 × 4 matrices and then recalled the locations in the order presented. | 18 (5.7) [2, 43] | 0.75% | |
| | Mr. X (MrX): Participants recalled spatial sequences of the locations of a ball held by a cartoon man rotated into one of seven positions. | 9.3 (5.1) [0, 32] | 1.24% | |
| | Following Instructions (FI): Participants carried out sequences of actions (touch and/or pick up) on props (a box, an eraser, a folder, a pencil, or a ruler) placed in front of them. Instructions chained actions sequentially (do X “then” do Y), and difficulty increased with sequence length. Scores denote the total number of correct responses. | 11.2 (4) [1, 33] | 6.83% | Gathercole et al. (2008) |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Simpson-Kent, I.L.; Fried, E.I.; Akarca, D.; Mareva, S.; Bullmore, E.T.; the CALM Team; Kievit, R.A. Bridging Brain and Cognition: A Multilayer Network Analysis of Brain Structural Covariance and General Intelligence in a Developmental Sample of Struggling Learners. J. Intell. 2021, 9, 32. https://doi.org/10.3390/jintelligence9020032
