Designing Feedback Systems: Examining a Feedback Approach to Facilitation in an Online Asynchronous Professional Development Course for High School Science Teachers

Abstract

1. Introduction

1.1. Learning as a Complex System of Feedback

1.1.1. Learning through Feedback Loops

1.1.2. Understanding Online Facilitation

1.1.3. Understanding the Implementation of Online Facilitation Systems of Feedback

1.2. Complex Facilitation System Framework

2. Methodology

2.1. Context

2.2. Pedagogical Method

2.3. Participants

2.4. Data Sources and Analysis

3. Results

3.1. Negative and Positive Impacts of the Structural Dimension of Feedback

3.1.1. Negative Responses to the Structural Dimension of Feedback

I did not use the office hours. I did not need them. I think I got everything I needed via email.

I didn’t feel like it would sort of add too much. I felt pretty comfortable with the progress that I was making already. If I felt like I was really struggling with something, that would have been an impetus for me to join, but I didn’t feel like it was necessary, so I didn’t do it.

She did tell in an email. I recall she did mention who all was in her groups, all the names of the people and that that would be people to look for in the discussion boards. And when I first saw that I was like, okay. And then I went into the discussion boards, and then I felt like it was very cumbersome to try to find names and look back and forth. I lost interest in that pretty quickly. But maybe again, if it was her with our cohort or a smaller group in a more isolated setting, I feel like that a lot more interaction, exchange would probably happen with the people in her cohort and probably with her too.

And I would hope that we would have more [synchronous meetups], rather than just having two or three. And maybe not make it mandatory, but at least if somebody wanted to join and interact with people, it’ll definitely be held.

3.1.2. Positive Responses to the Structural Dimension of Feedback

I don’t feel like it needs to be improved. The only one question I had, I posted. And I like the idea that you could post a comment or a question, so that those questions were easily seen by the facilitators.

So those synchronous meetings really kind of give us the feel of the safety net, that if we have any questions or anything, there is somebody we can ask and we will definitely get our answers. So that’s the good aspect, I think.

And so [through the synchronous meetups] we did get a chance to experience all of you, in a way. And that, I think, was very helpful.

So, from a participant’s perspective, having multiple tracks is awesome because, again, I was sort of thrown into the middle of pandemic teaching in the spring, and that’s essentially why I forgot that I had signed up for this thing. So having the ability to go back and sort of restart on the third track there was really great.

I thought it was helpful. In fact, I felt it was extremely helpful because when I started off, I could tell that there were participants that really did have the time to get into it. And I just wasn’t able to put in the effort that I should have been able to put into it. When I shifted into that second track, I felt that I was able to make better connections with those participants in that track because I was able to focus on it more.

3.2. Negative and Positive Impacts of the Cognitive Dimension of Feedback

3.2.1. Negative Responses to the Cognitive Dimension of Feedback

Honestly, I feel like I did get feedback. Honestly, I don’t remember very well. I feel like if I did, it must’ve been just a little more generic, just a little more general comments about things. It’s nothing that I felt like I needed to change or alter what I did, or nothing that really added to what I had already planned. I guess nothing’s really, to be honest, nothing’s really sticking out in terms of what the feedback was on anything like that.

Well, like I said, it was hit or miss, you never know who was going to respond. It felt bad because I posted something late and nobody had answered me.

One of my friends is enrolled in the course, and when I asked her about the feedback she received, she told me that she didn’t receive any. I was surprised, so I went and checked, and it turns out that she did receive feedback; it’s just that she didn’t get an email notifying her of its existence.

3.2.2. Positive Responses to the Cognitive Dimension of Feedback

[My facilitator], she was great. I don’t really have anything in particular. Like I’m trying to think about like how I would, if it were me in those shoes, like what would I do differently? And I really feel like she, she nailed it. Again, the quality of the ideas that she was sort of putting out there was excellent. Her pace in terms of when she was responding to the things that I put out there was really good.

I think those [the facilitator’s feedback] just inherently were probably better because they’ve done it before, and they know how to give good constructive feedback. Good feedback, real feedback. I think most of those were really good; they definitely had more information.

I think she’s very willing to share and elaborate on the stuff that she does in her classroom and willing to offer it up to the population of participants out there that are trying to do that…and she definitely has a lot of valuable resources and ideas that I can tap into that if I need to. I’ll definitely take that moving forward into the school year.

Yes. So, I’m thinking about that one class participant that I mentioned to you that he and I had gone back and forth with some feedback on one of my capstones, and my facilitator actually jumped in on that. And it was just a really nice discussion among the three of us.

3.3. Negative and Positive Impacts of the Social-Affective Dimension of Feedback

3.3.1. Negative Responses to the Social-Affective Dimension of Feedback

I want to say I know one of the facilitators had commented on one of my posts. But I know that I’ve made a connection with another person in Ohio that was teaching using the modeling method too. But I don’t remember much beyond that. I know that there was two or three people, I just don’t know if they were part of my group, my section two group, or not.

So, there was feedback, but I have no idea who those people were.

I guess the only thing I sort of wished was the email I got that said who was in my group. That came probably like week three. To have that information sooner would have been helpful. I might’ve made a bigger attempt to connect to that group.

So, my priority in completing the course in what I was sort of looking for was definitely, I wouldn’t say completing the course, but I was trying to get sort of pedagogical ideas out of it. So, resources and ideas for how to teach this type of thing for my students, I didn’t put a priority on community building. So that was definitely not something that was like top of my list in terms of what I was doing.

Yeah, I’ve received some emails from my facilitator, but they seem to be sort of automatically generated. They might be personal, but they certainly didn’t seem so anyways.

3.3.2. Positive Responses to the Social-Affective Dimension of Feedback

Like I said, the way it was this year, I definitely knew that I had a contact person I could reach out to and that there was a group of people who were giving me feedback and who were answering questions. So, I felt well-supported.

He reached out once. I’d been on vacation and he just sent a reminder, not mean or anything just saying, hey, here’s our timeline. And he did it in an effort to kind of maintain that community within our session.

4. Discussion

5. Contributions and Implications for Practice

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

Appendix A

| # | DBIR Element | How It Guided Our Design and Implementation of the Facilitation System |

|---|---|---|

| 1 | A focus on understanding persistent problems of practice facing the various stakeholders engaging with the education system | The facilitation system was guided by feedback from: (1) The facilitation team, which included expert participants who were enrolled in the course in previous years; (2) Our participants during the duration of the course; and (3) The instructional team, which is the team responsible for curating the course content and structure. |

| 2 | A commitment to an iterative and inclusive process of collaborative design | The facilitation system went through a process of continuous iteration throughout the course. In this process, we relied on feedback from our facilitators and participants to adjust the facilitation system to meet their needs. For example, we decided to include more details in the participant engagement monitoring report based on facilitators’ feedback. |

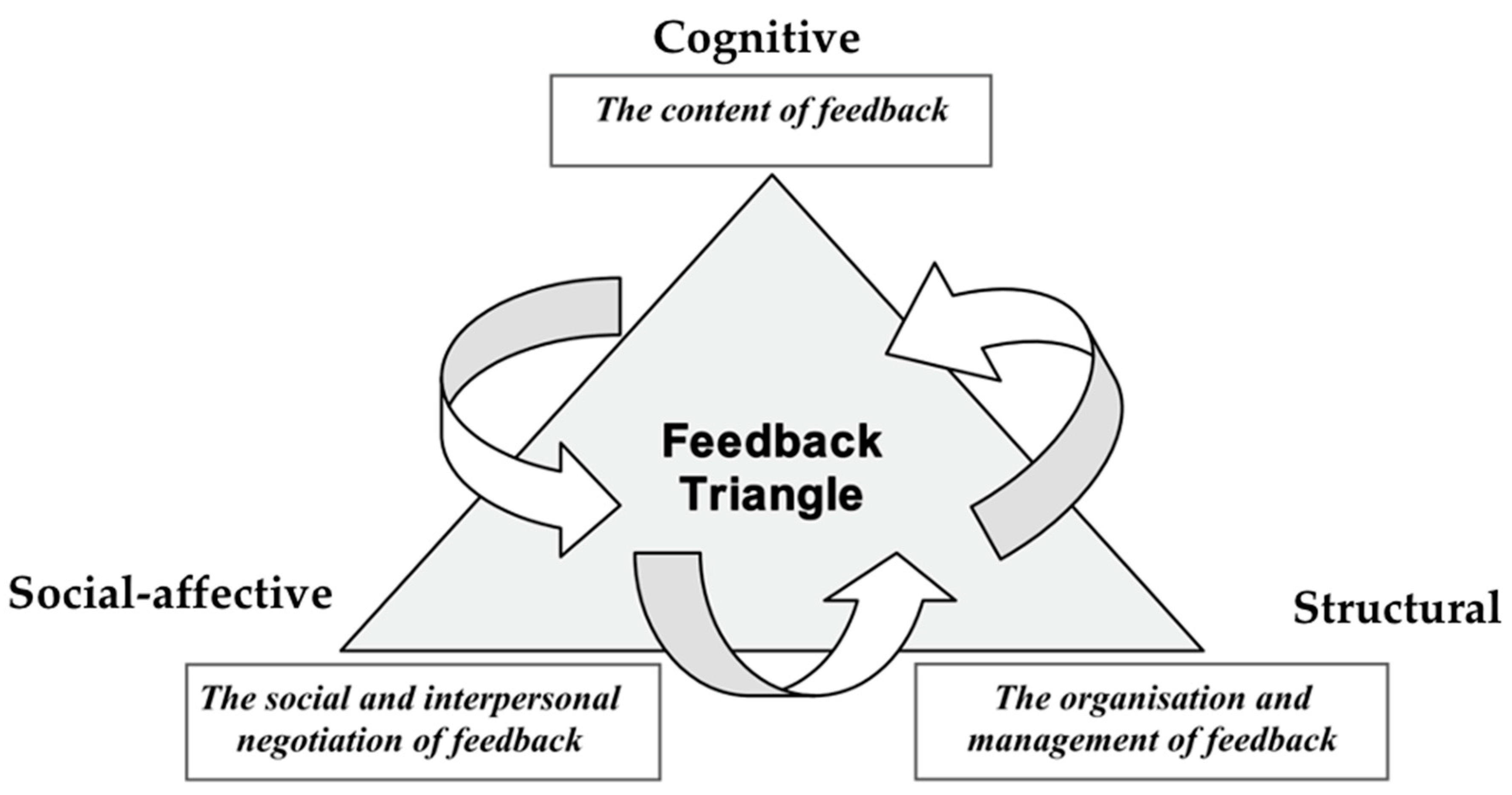

| 3 | A concern for using systematic inquiry to develop theories and knowledge related to implementation processes and student learning outcomes | Our facilitation system was guided by the feedback triangle framework, where we viewed learning as a process of closing feedback loops. Throughout the course, we offered participants different features and multiple modes for engaging with the course to support their different needs and preferences. |

| 4 | A concern for developing the capabilities of researchers, practitioners, and policymakers to influence sustainable change within systems | The facilitation system was supported by a dedicated facilitation team that followed a structured process. The team had its own dedicated infrastructural resources and tools to guide its goals. |

References

- Jeong, H.; Hmelo-Silver, C.E. Seven affordances of computer-supported collaborative learning: How to support collaborative learning? How can technologies help? Educ. Psychol. 2016, 51, 247–265.

- Chen, J.; Wang, M.; Kirschner, P.A.; Tsai, C.C. The role of collaboration, computer use, learning environments, and supporting strategies in CSCL: A meta-analysis. Rev. Educ. Res. 2018, 88, 799–843.

- Scardamalia, M.; Bereiter, C. Smart technology for self-organizing processes. Smart Learn. Environ. 2014, 1.

- Ludvigsen, S.; Steier, R. Reflections and looking ahead for CSCL: Digital infrastructures, digital tools, and collaborative learning. Int. J. Comput. Collab. Learn. 2019, 14, 415–423.

- Matuk, C.; Tissenbaum, M.; Schneider, B. Real-time orchestrational technologies in computer-supported collaborative learning: An introduction to the special issue. Int. J. Comput. Supported Collab. Learn. 2019, 14, 251–260.

- Cabrera, D.; Cabrera, L.; Powers, E.; Solin, J.; Kushner, J. Applying systems thinking models of organizational design and change in community operational research. Eur. J. Oper. Res. 2018, 268, 932–945.

- Zhou, J.; Dawson, P.; Tai, J.H.; Bearman, M. How conceptualising respect can inform feedback pedagogies. Assess. Eval. High. Educ. 2020, 46, 68–79.

- Firmin, R.; Schiorring, E.; Whitmer, J.; Willett, T.; Collins, E.D.; Sujitparapitaya, S. Case study: Using MOOCs for conventional college coursework. Distance Educ. 2014, 35, 178–201.

- Gayoung, L.E.; Sunyoung, K.E.; Myungsun, K.I.; Yoomi, C.H.; Ilju, R.H. A study on the development of a MOOC design model. Educ. Technol. Int. 2016, 17, 1–37.

- Jung, Y.; Lee, J. Learning engagement and persistence in massive open online courses (MOOCS). Comput. Educ. 2018, 122, 9–22.

- Siemens, G.; Downes, S.; Cormier, D.; Kop, R. PLENK 2010–Personal Learning Environments, Networks and Knowledge. 2010. Available online: https://www.islandscholar.ca/islandora/object/ir%3A20500 (accessed on 17 October 2020).

- Kasch, J.; Van Rosmalen, P.; Kalz, M. A Framework towards educational scalability of open online courses. J. Univers. Comput. Sci. 2017, 23, 845–867.

- Blum-Smith, S.; Yurkofsky, M.M.; Brennan, K. Stepping back and stepping in: Facilitating learner-centered experiences in MOOCs. Comput. Educ. 2020, 160, 104042.

- Salter, S.; Douglas, T.; Kember, D. Comparing face-to-face and asynchronous online communication as mechanisms for critical reflective dialogue. Educ. Action Res. 2017, 25, 790–805.

- Parsons, S.A.; Hutchison, A.C.; Hall, L.A.; Parsons, A.W.; Ives, S.T.; Leggett, A.B. US teachers’ perceptions of online professional development. Teach. Teach. Educ. Int. J. Res. Stud. 2019, 82, 33–42.

- Webb, D.C.; Nickerson, H.; Bush, J.B. A comparative analysis of online and face-to-face professional development models for CS education. In Proceedings of the SIGCSE ’17: The 48th ACM Technical Symposium on Computer Science Education, Seattle, WA, USA, 8–11 March 2017; pp. 621–626.

- Yoon, S.A.; Miller, K.; Richman, T.; Wendel, D.; Schoenfeld, I.; Anderson, E.; Shim, J. Encouraging collaboration and building community in online asynchronous professional development: Designing for social capital. Int. J. Comput. Supported Collab. Learn. 2020, 15, 351–371.

- Turvey, K.; Pachler, N. Design principles for fostering pedagogical provenance through research in technology supported learning. Comput. Educ. 2020, 146, 103736.

- Sterman, J.D. Learning from evidence in a complex world. Am. J. Public Health 2006, 96, 505–514.

- Wang, Z.; Gong, S.Y.; Xu, S.; Hu, X.E. Elaborated feedback and learning: Examining cognitive and motivational influences. Comput. Educ. 2019, 136, 130–140.

- Hokayem, H.; Gotwals, A.W. Early elementary students’ understanding of complex ecosystems: A learning progression approach. J. Res. Sci. Teach. 2016, 53, 1524–1545.

- Scott, W.R.; Davis, G.F. Organizations and Organizing: Rational, Natural and Open Systems Perspectives, 1st ed.; Routledge: Abingdon, UK, 2015.

- Lizzio, A.; Wilson, K. Feedback on assessment: Students’ perceptions of quality and effectiveness. Assess. Eval. High. Educ. 2008, 33, 263–275.

- Henderson, M.; Ryan, T.; Phillips, M. The challenges of feedback in higher education. Assess. Eval. High. Educ. 2019, 44, 1237–1252.

- Tarrant, S.P.; Thiele, L.P. Practice makes pedagogy–John Dewey and skills-based sustainability education. Int. J. Sustain. High. Educ. 2016, 17, 54–67.

- Pardo, A. A feedback model for data-rich learning experiences. Assess. Eval. High. Educ. 2018, 43, 428–438.

- Schön, D.; Argyris, C. Organizational learning II: Theory, Method and Practice; Addison-Wesley Publishing Company: Boston, MA, USA, 1996.

- Cheng, M.T.; Rosenheck, L.; Lin, C.Y.; Klopfer, E. Analyzing gameplay data to inform feedback loops in The Radix Endeavor. Comput. Educ. 2017, 111, 60–73.

- Wiliam, D. Feedback: Part of a system. Educ. Leadersh. 2012, 70, 30–34.

- Sterman, J.; Oliva, R.; Linderman, K.W.; Bendoly, E. System dynamics perspectives and modeling opportunities for research in operations management. J. Oper. Manag. 2015, 39, 40.

- Carless, D. Feedback loops and the longer-term: Towards feedback spirals. Assess. Eval. High. Educ. 2019, 44, 705–714.

- Sterman, J.D. Learning in and about complex systems. Syst. Dyn. Rev. 1994, 10, 291–330.

- Scott, G.; Danley-Scott, J. Two loops that need closing: Contingent faculty perceptions of outcomes assessment. J. Gen. Educ. 2015, 64, 30–55.

- Ramani, S.; Könings, K.D.; Ginsburg, S.; van der Vleuten, C.P. Feedback redefined: Principles and practice. J. Gen. Intern. Med. 2019, 34, 744–749.

- Martin, F.; Wang, C.; Sadaf, A. Student perception of helpfulness of facilitation strategies that enhance instructor presence, connectedness, engagement and learning in online courses. Internet High. Educ. 2018, 37, 52–65.

- Davis, D.; Jivet, I.; Kizilcec, R.F.; Chen, G.; Hauff, C.; Houben, G.J. Follow the successful crowd: Raising MOOC completion rates through social comparison at scale. In Proceedings of the LAK’17 conference proceeding: The Seventh International Learning Analytics & Knowledge Conference, Simon Fraser University, Vancouver, BC, Canada, 13–17 March 2017; pp. 454–463.

- An, Y. The effects of an online professional development course on teachers’ perceptions, attitudes, self-efficacy, and behavioral intentions regarding digital game-based learning. Educ. Technol. Res. Dev. 2018, 66, 1505–1527.

- Phirangee, K.; Epp, C.D.; Hewitt, J. Exploring the relationships between facilitation methods, students’ sense of community, and their online behaviors. Online Learn. 2016, 20, 134–154.

- Zhu, M.; Bonk, C.J.; Sari, A.R. Instructor experiences designing MOOCs in higher education: Pedagogical, resource, and logistical considerations and challenges. Online Learn. 2018, 22, 203–241.

- Hew, K.F. Student perceptions of peer versus instructor facilitation of asynchronous online discussions: Further findings from three cases. Instr. Sci. 2015, 43, 19–38.

- Van Popta, E.; Kral, M.; Camp, G.; Martens, R.L.; Simons, P.R. Exploring the value of peer feedback in online learning for the provider. Educ. Res. Rev. 2017, 20, 24–34.

- Yang, M.; Carless, D. The feedback triangle and the enhancement of dialogic feedback processes. Teach. High. Educ. 2013, 18, 285–297.

- Barker, M.; Pinard, M. Closing the feedback loop? Iterative feedback between tutor and student in coursework assessments. Assess. Eval. High. Educ. 2014, 39, 899–915.

- Gerard, L.F.; Varma, K.; Corliss, S.B.; Linn, M. Professional development for technology-enhanced inquiry science. Rev. Educ. Res. 2011, 81, 408–448.

- Yoon, S.A.; Anderson, E.; Koehler-Yom, J.; Evans, C.; Park, M.; Sheldon, J.; Schoenfeld, I.; Wendel, D.; Scheintaub, H.; Klopfer, E. Teaching about complex systems is no simple matter: Building effective professional development for computer-supported complex systems instruction. Instr. Sci. 2017, 45, 99–121.

- Fishman, B.J.; Penuel, W.R.; Allen, A.R.; Cheng, B.H.; Sabelli, N.O. Design-based implementation research: An emerging model for transforming the relationship of research and practice. Natl. Soc. Study Educ. 2013, 112, 136–156.

- Fishman, B.J.; Penuel, W.R. Design-Based Implementation Research. In International Handbook of the Learning Sciences; Routledge: Abingdon, UK, 2018; pp. 393–400.

- Hew, K.F.; Qiao, C.; Tang, Y. Understanding student engagement in large-scale open online courses: A machine learning facilitated analysis of student’s reflections in 18 highly rated MOOCs. Int. Rev. Res. Open Distrib. Learn. 2018, 19.

- Nicol, D. From monologue to dialogue: Improving written feedback processes in mass higher education. Assess. Eval. High. Educ. 2010, 35, 501–517.

- Resendes, M.; Scardamalia, M.; Bereiter, C.; Chen, B.; Halewood, C. Group-level formative feedback and metadiscourse. Int. J. Comput. Supported Collab. Learn. 2015, 10, 309–336.

- Kizilcec, R.F.; Halawa, S. Attrition and achievement gaps in online learning. In Proceedings of the Second (2015) ACM Conference on Learning@ Scale, Vancouver, BC, Canada, 14–18 March 2015; pp. 57–66.

- Kizilcec, R.F.; Pérez-Sanagustín, M.; Maldonado, J.J. Recommending self-regulated learning strategies does not improve performance in a MOOC. In Proceedings of the third (2016) ACM conference on learning@ scale, Edinburgh, Scotland, UK, 25–26 April 2016; pp. 101–104.

- Yeşilyurt, E.; Ulaş, A.H.; Akan, D. Teacher self-efficacy, academic self-efficacy, and computer self-efficacy as predictors of attitude toward applying computer-supported education. Comput. Hum. Behav. 2016, 64, 591–601.

- Darling-Hammond, L.; Hyler, M.E.; Gardner, M. Effective Teacher Professional Development; Learning Policy Institute: Palo Alto, CA, USA, 2017.

- Baroody, A.J.; Dowker, A. The Development of Arithmetic Concepts and Skills: Constructive Adaptive Expertise; Routledge: Abingdon, UK, 2013.

- Anthony, G.; Hunter, J.; Hunter, R. Prospective teachers development of adaptive expertise. Teach. Teach. Educ. 2015, 49, 108–117.

- Sweller, J. Cognitive load theory and educational technology. Educ. Technol. Res. Dev. 2020, 68, 1–6.

- Goldie, J.G. Connectivism: A knowledge learning theory for the digital age? Med. Teach. 2016, 38, 1064–1069.

- Chaker, R.; Impedovo, M.A. The moderating effect of social capital on co-regulated learning for MOOC achievement. Educ. Inf. Technol. 2020, 5, 1–21.

- Li, Y.; Chen, H.; Liu, Y.; Peng, M.W. Managerial ties, organizational learning, and opportunity capture: A social capital perspective. Asia Pac. J. Manag. 2014, 31, 271–291.

- Adamopoulos, P. What makes a great MOOC? An interdisciplinary analysis of student retention in online courses. In Proceedings of the International Conference on Information Systems, ICIS 2013, Milano, Italy, 15–18 December 2013.

- Chang, R.I.; Hung, Y.H.; Lin, C.F. Survey of learning experiences and influence of learning style preferences on user intentions regarding MOOCs. Br. J. Educ. Technol. 2015, 46, 528–541.

- Rosé, C.P.; Ferschke, O. Technology support for discussion based learning: From computer supported collaborative learning to the future of massive open online courses. Int. J. Artif. Intell. Educ. 2016, 26, 660–678.

- Glaser, B.G.; Strauss, A.L. Discovery of Grounded Theory: Strategies for Qualitative Research; Routledge: Abingdon, UK, 2017.

- Campbell, J.L.; Quincy, C.; Osserman, J.; Pedersen, O.K. Coding in-depth semistructured interviews: Problems of unitization and intercoder reliability and agreement. Sociol. Methods Res. 2013, 42, 294–320.

- Putnam, R. Bowling Alone; Simon & Schuster: New York, NY, USA, 2000.

- Lowenthal, P.R.; Dunlap, J.C. Investigating students’ perceptions of instructional strategies to establish social presence. Distance Educ. 2018, 39, 281–298.

- Ulker, U. Reading activities in blended learning: Recommendations for university language preparatory course teachers. Int. J. Soc. Sci. Educ. Stud. 2019, 5, 83.

- Gurley, L.E. Educators’ preparation to teach, perceived teaching presence, and perceived teaching presence behaviors in blended and online learning environments. Online Learn. 2018, 22, 197–220.

- Hollands, F.M.; Tirthali, D. MOOCs: Expectations and reality. Center for Benefit-Cost Studies of Education; Teachers College, Columbia University: New York, NY, USA, 2014.

- Watson, S.L.; Loizzo, J.; Watson, W.R.; Mueller, C.; Lim, J.; Ertmer, P.A. Instructional design, facilitation, and perceived learning outcomes: An exploratory case study of a human trafficking MOOC for attitudinal change. Educ. Technol. Res. Dev. 2016, 64, 1273–1300.

- Wang, H.Y.; Duh, H.B.; Li, N.; Lin, T.J.; Tsai, C.C. An investigation of university students’ collaborative inquiry learning behaviors in an augmented reality simulation and a traditional simulation. J. Sci. Educ. Technol. 2014, 23, 682–691.

| Participant Demographics | |
|---|---|
| Ethnicity | |
| White | 49 |
| Black | 1 |
| Hispanic | 4 |
| Asian or Pacific Islander | 12 |
| Multiethnic | 2 |
| Other | 5 |
| Prefer not to say | 1 |

| Participant ID | Facilitator | Satisfaction Category | School Resource Level | School Location |
|---|---|---|---|---|
| 1 | A | High | Middle Resource | Suburban |
| 2 | A | Neutral | High Resource | Suburban |
| 3 | B | High | High Resource | Suburban |
| 4 | B | Low | High Resource | Suburban |
| 5 | B | Low | High Resource | Suburban |
| 6 | C | High | High Resource | Suburban |
| 7 | C | Neutral | High Resource | Suburban |
| 8 | D | Neutral | Middle Resource | Rural |
| 9 | D | High | High Resource | Rural |
| 10 | D | Low | High Resource | Suburban |

| Category | Definition |
|---|---|
| Positive feedback | Positive feedback occurs when the feedback gives the participant an opportunity to close the feedback loop and develop an understanding of the course content. Within a facilitation system, examples of positive feedback include (1) a facilitator offering a participant feedback on the content of their post, which incentivizes the participant to learn more about the course content, and (2) a facilitator sharing with a participant the contact information of another participant, after which the two connect or interact within or beyond the course. |
| Negative feedback | Negative feedback occurs when the feedback causes the output of the participant to decrease or prevents the participant from closing the feedback loop. Examples of negative feedback include (1) a feature of the facilitation system being disregarded by the participant and (2) a facilitator’s feedback limiting the participant’s ability to engage with the course content and community. |
| Other | Participants’ feedback is categorized as “other” if they refer to the facilitation system in a way that doesn’t fall into either of the other two categories. |

| Subcategory | Definition | Example |
|---|---|---|
| Positive Structural Dimension | The structural dimension refers to disciplinary practices and institutional policies that determine how the feedback process is arranged and what resources are mobilized in providing feedback [42]. This dimension is deemed as facilitating positive feedback when participants favorably refer to a structural aspect of the facilitation system as the main factor supporting their judgment of this system. In these instances, the facilitator’s role in supporting the participants’ experiences is not highlighted by the participant beyond their performance of a structural practice. | “I think the synchronous meetups were helpful because I think that’s where you all talked to us more about different things and when we split off into those little small groups it wasn’t necessarily with our specific cohort person.” |
| Positive Cognitive Dimension | The cognitive dimension of facilitators’ feedback refers to the facilitators’ specific contribution to a participant’s understanding of the course content and its related activities beyond the structural dimensions of the facilitation system. This dimension is deemed as facilitating positive feedback when participants favorably refer to a facilitator’s contribution as valuable to their cognitive processes. The cognitive dimension of feedback can influence a participant’s understanding of course content, task completion, and implementation strategies. | “And there were a couple of things that my facilitator had responded to in some of my discussion posts that I actually, I would save them to use. One of the ones that she provided me with was “How science works” I believe, was the document. Most of it was through discussion posts and email. She did provide me with some, a lot of good resources that applied to what my response was in the discussion posts.” |
| Positive Social Dimension | The social dimension of the facilitators’ feedback refers to the facilitators’ specific contribution to a participant’s ability to connect with other members of the learning community and build relationships beyond the impact of the structural dimensions of the facilitation system. The social dimension also includes a participant’s ability to nurture their social capital through the support and presence of the facilitator. This dimension is deemed as facilitating positive feedback when participants favorably refer to a facilitator’s specific contribution to their ability to interact and/or build social relationships with other members of the learning community. | “All my interactions were positive. I think she was just reaching out and it was clear that she wanted to make sure that everyone in the group got through it and at least knew each other’s emails. We all for sure knew from her that that was a group that we could contact and communicate with if we were struggling.” |
| Negative Structural Dimension | This dimension is deemed as promoting negative feedback when participants refer to a structural aspect of the facilitation system as the main factor influencing their unfavorable judgment of this system. In these instances, the facilitator’s role in hindering the participants’ learning experience is not highlighted by the participant beyond their performance of a structural practice. | “I was just thinking that the other day, like yesterday. How come I have to go back in there and search for that topic and try to remember where I posted it, you know, get the answer to that question.” |
| Negative Cognitive Dimension | This dimension is deemed as promoting negative feedback when participants refer to a facilitator’s contribution as a reason for hindering their cognitive processes. The cognitive dimension of feedback can influence a participant’s understanding of course content, task completion, and implementation strategies. | “She did respond to one or two that I recall. But that was about it. I didn’t feel like it was real significant or anything. But yeah, she did. I think it was one or two that she responded to” |
| Negative Social Dimension | This dimension is deemed as promoting negative feedback when participants refer to a facilitator’s specific contribution as a reason for their inability to interact and/or build social relationships with other members of the learning community. | “Yeah. I would not say that I interacted much, if any with my facilitator. I certainly would not know [who] my facilitator was. I did get two emails that seem to be sort of automatically generated. They might be personal, but they certainly didn’t seem so anyways.” |
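The coding scheme above pairs each of three dimensions (structural, cognitive, social) with a positive or negative valence. As a minimal sketch of how coded interview excerpts could be recorded and tallied under that scheme, the following Python uses a hypothetical `CodedExcerpt` record and `tally_codes` helper; neither name comes from the study, the participant IDs are made up, and the "other" category is left out for brevity.

```python
from collections import Counter
from dataclasses import dataclass

# Dimension and valence labels taken from the coding scheme above.
DIMENSIONS = ("structural", "cognitive", "social")
VALENCES = ("positive", "negative")


@dataclass(frozen=True)
class CodedExcerpt:
    """One interview excerpt with its assigned codes (hypothetical structure)."""
    participant_id: int
    dimension: str
    valence: str
    text: str


def tally_codes(excerpts):
    """Count excerpts per (dimension, valence) pair, rejecting unknown labels."""
    counts = Counter()
    for excerpt in excerpts:
        if excerpt.dimension not in DIMENSIONS:
            raise ValueError(f"unknown dimension: {excerpt.dimension}")
        if excerpt.valence not in VALENCES:
            raise ValueError(f"unknown valence: {excerpt.valence}")
        counts[(excerpt.dimension, excerpt.valence)] += 1
    return counts


# Illustrative records only; the participant IDs are invented.
sample = [
    CodedExcerpt(1, "structural", "positive", "I think the synchronous meetups were helpful..."),
    CodedExcerpt(2, "cognitive", "negative", "I didn't feel like it was real significant..."),
    CodedExcerpt(3, "structural", "positive", "having multiple tracks is awesome..."),
]
code_counts = tally_codes(sample)
print(code_counts[("structural", "positive")])
```

Keeping the dimension and valence as separate fields, rather than one combined label, makes it straightforward to compare valence within a dimension or dimensions within a valence.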

| Descriptive | Q1-My Facilitator Helped Me Develop a Better Understanding of the Course Content | Q2-My Facilitator Was Accessible and Available for Me Whenever I Needed Support | Q3-I Was Able to Benefit from the Facilitators’ Office Hours | Q4-I Am Satisfied with the Relationship I Developed with My Facilitator | Q5-My Facilitator Connected Me with Other Participants | Q6-I Would Recommend My Facilitator to Future Participants |
|---|---|---|---|---|---|---|
| Mean | 3.88 | 4.08 | 3.38 | 3.78 | 3.54 | 3.99 |
| SD | 1.03 | 4.00 | 0.87 | 1.02 | 1.12 | 0.99 |
| Median | 4.00 | 4.00 | 3.00 | 4.00 | 3.00 | 4.00 |
| Mode | 5.00 | 5.00 | 3.00 | 3.00 | 3.00 | 5.00 |
| Range | 4.00 | 4.00 | 4.00 | 4.00 | 4.00 | 4.00 |
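The rows of this table (mean, SD, median, mode, range) are standard descriptives for Likert-type items. The sketch below shows one way to compute them with Python's standard `statistics` module. The response list is hypothetical and is not the study's data, the function name `describe_likert` is illustrative, and the use of the sample standard deviation is an assumption, since the source does not say which form was reported.

```python
from statistics import mean, median, mode, stdev


def describe_likert(responses):
    """Summarize a list of 1-5 Likert responses with the table's five descriptives."""
    return {
        "mean": round(mean(responses), 2),
        "sd": round(stdev(responses), 2),  # sample SD (n - 1 denominator); an assumption
        "median": median(responses),
        "mode": mode(responses),
        "range": max(responses) - min(responses),
    }


# Hypothetical responses for illustration only; not the study's data.
example_responses = [5, 4, 3, 5, 2, 4, 5, 1, 3, 5]
summary = describe_likert(example_responses)
print(summary)
```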

| Descriptive (Tracks 1&2) | Q1 | Q2 | Q3 | Q4 | Q5 | Q6 |
|---|---|---|---|---|---|---|
| Mean | 3.60 | 3.93 | 3.27 | 3.64 | 3.31 | 3.78 |
| SD | 1.07 | 1.07 | 0.89 | 1.09 | 1.16 | 1.02 |
| Median | 4.00 | 4.00 | 3.00 | 3.00 | 3.00 | 4.00 |
| Mode | 3.00 | 5.00 | 3.00 | 3.00 | 3.00 | 4.00 |
| Range | 4.00 | 4.00 | 4.00 | 4.00 | 4.00 | 4.00 |

| Descriptive (Track 3) | Q1 | Q2 | Q3 | Q4 | Q5 | Q6 |
|---|---|---|---|---|---|---|
| Mean | 4.31 | 4.31 | 3.55 | 4.00 | 3.90 | 4.31 |
| SD | 0.81 | 0.81 | 0.83 | 0.89 | 0.98 | 0.85 |
| Median | 5 | 5 | 3 | 4 | 4 | 5 |
| Mode | 5 | 5 | 3 | 3 | 3 | 5 |
| Range | 2 | 2 | 2 | 2 | 3 | 2 |

| Question | Mean (Tracks 1&2) | Mean (Track 3) | SD (Tracks 1&2) | SD (Track 3) | Range (Tracks 1&2) | Range (Track 3) | t-test | p-value | Cohen’s d |
|---|---|---|---|---|---|---|---|---|---|
| Q1 | 3.6 | 4.31 | 1.07 | 0.81 | 4 | 2 | 3.23 | 0.002 * | 0.689 |
| Q2 | 3.93 | 4.31 | 1.07 | 0.81 | 4 | 2 | 1.71 | 0.09 * | 0.095 |
| Q3 | 3.27 | 3.55 | 0.89 | 0.83 | 4 | 2 | 1.41 | 0.165 | 0.322 |
| Q4 | 3.64 | 4 | 1.09 | 0.89 | 4 | 2 | 1.53 | 0.129 | 0.353 |
| Q5 | 3.31 | 3.9 | 1.16 | 0.98 | 4 | 3 | 2.33 | 0.023 * | 0.527 |
| Q6 | 3.78 | 4.31 | 1.02 | 0.85 | 4 | 2 | 2.43 | 0.018 * | 0.535 |
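The comparison between tracks reports a t statistic and Cohen's d for each question. The sketch below shows how both can be computed from group means, standard deviations, and sample sizes. The per-track sample sizes are not given in this section, so `n_tracks_12` and `n_track_3` are hypothetical placeholders, and the choice of Welch's unequal-variance t and a pooled-SD Cohen's d is an assumption rather than the authors' documented procedure; the results should not be expected to reproduce the table exactly.

```python
from math import sqrt


def cohens_d(mean1, sd1, n1, mean2, sd2, n2):
    """Cohen's d using the pooled standard deviation of two groups."""
    pooled_var = ((n1 - 1) * sd1**2 + (n2 - 1) * sd2**2) / (n1 + n2 - 2)
    return (mean2 - mean1) / sqrt(pooled_var)


def welch_t(mean1, sd1, n1, mean2, sd2, n2):
    """Welch's t statistic (unequal variances) from summary statistics."""
    standard_error = sqrt(sd1**2 / n1 + sd2**2 / n2)
    return (mean2 - mean1) / standard_error


# Q1 means and SDs are taken from the table; the group sizes are
# hypothetical placeholders because the section does not report them.
n_tracks_12, n_track_3 = 45, 29
d_q1 = cohens_d(3.60, 1.07, n_tracks_12, 4.31, 0.81, n_track_3)
t_q1 = welch_t(3.60, 1.07, n_tracks_12, 4.31, 0.81, n_track_3)
print(f"Cohen's d = {d_q1:.3f}, Welch t = {t_q1:.2f}")
```

Because both statistics depend on the group sizes, rerunning the sketch with the actual per-track counts is the only way to check the reported values.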

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Share and Cite

Marei, A.; Yoon, S.A.; Yoo, J.-U.; Richman, T.; Noushad, N.; Miller, K.; Shim, J. Designing Feedback Systems: Examining a Feedback Approach to Facilitation in an Online Asynchronous Professional Development Course for High School Science Teachers. Systems 2021, 9, 10. https://0-doi-org.brum.beds.ac.uk/10.3390/systems9010010
