Building System Capacity with a Modeling-Based Inquiry Program for Elementary Students: A Case Study

Abstract

1. Introduction

1.1. Data Literacy Skills and Model-Based Learning as Important Components of Early STEM Education

1.2. System-Level Considerations for Establishing Elementary Science Reform

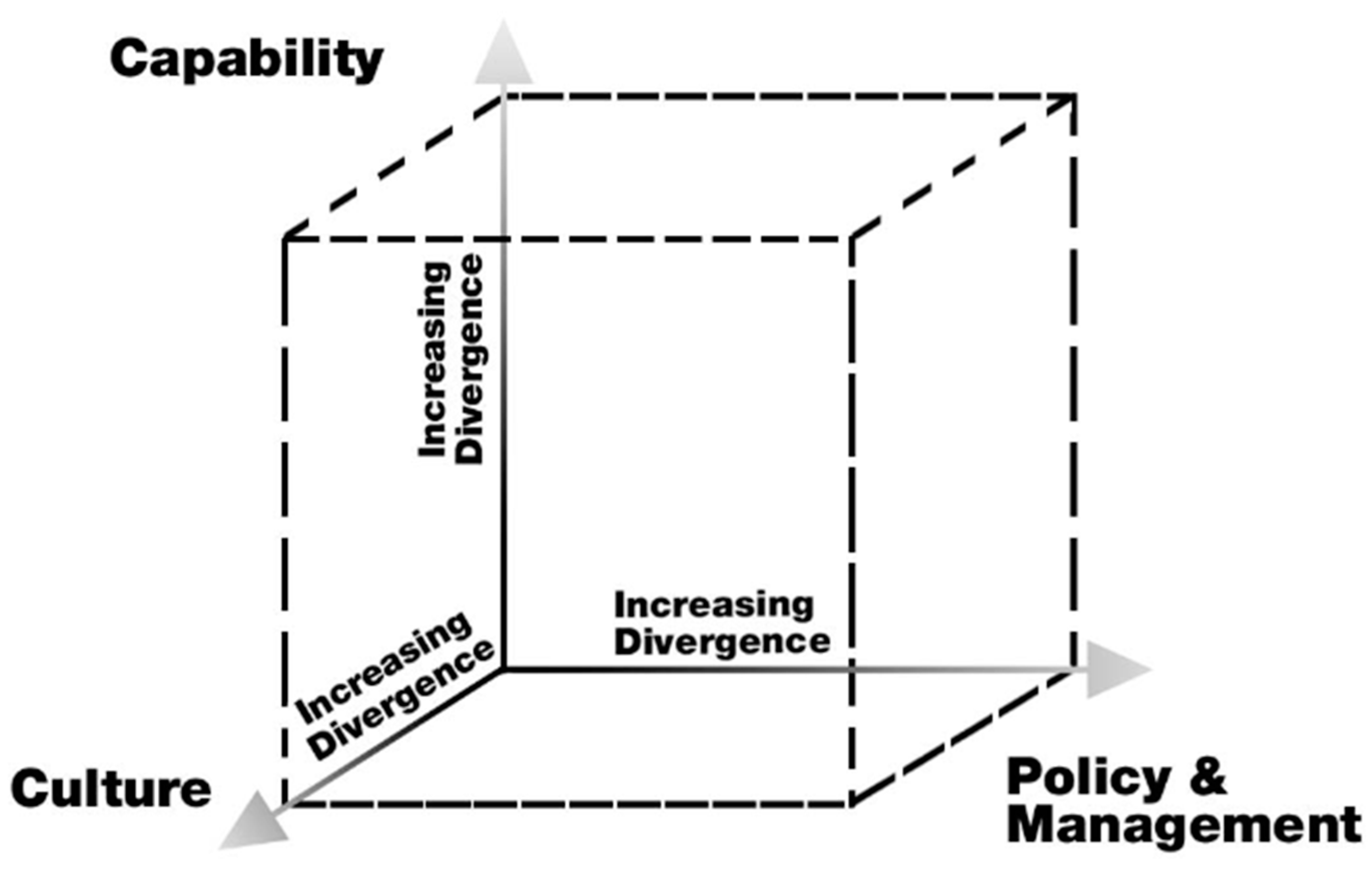

1.3. Model-Based Inquiry Reform through the Usability Cube Framework

2. Methodology

2.1. Context

2.2. Participants

2.3. Data Sources and Analysis

3. Results

3.1. Capability

It really encompasses all the skills and concepts that [the students are] learning, and they get to put it to use…The visual model, it helps them take the concepts and the vocabulary words and everything that we’re learning and putting it to use and seeing how it is actually used in real life…This really amped up [student] knowledge and really I think it checked off all of the markers of the standards we are teaching right now.

Everything was pretty much laid out for me. And then you have everything in the Google drive shared with us…I don’t have to go searching for things and piece it all together…after the summer [PD] you kind of forget everything. And once I went back through the binder and then went back through the lesson plans, it just all comes back and everything is laid out exactly what to do. So that was really helpful.

3.2. School Culture

We were hoping that [these four teachers] were going to be the ones that were going to help this continue once I had to pull out once the grant was over. And it just never took off…we talked with the administration about…pay[ing] these teachers a little bit of a stipend so that they could be ambassadors and they could go to the other school buildings and help train other teachers who were interested, but it just never happened. I think that would have made a big difference too. That could have helped it take off.

3.3. District-Level Policy and Management

I knew the superintendent really well because he was my principal when I first started in the district…And I had a good relationship with the people who were in charge of the curriculum as well…the head of curriculum, he really tried to get all of his principals on board with this.

3.4. Affordances to Students

Teachers…realized, ‘this is really cool, students are helping each other…’ [so] the students were doing some of the teaching as well. They were working together… And pairs would work together, but then you’d hear somebody over in the corner asking a question, this kid would yell across to her, ‘Hey, well do this.’ It was great because the teachers weren’t saying, ‘Shh. Shh. Get quiet.’ They just let them engage.

I really like to see [the students] cooperate with each other, that is something that, it’s definitely hard in this classroom, especially because there’s a lot of kids that have difficulty with that. However because they’re very interested in the StarLogo [Nova]…modeling, the manipulation of it all. They share really well…And there were some students who don’t normally do well with partner work, but they did really well when we did the partner work with the [modeling curriculum].

Modeling helps with their hands-on approach…They see in the instant…as they change something, something happens immediately they have that immediate reinforcement or negative of changing something and it not working anymore…and being able to tinker with it…being able to see immediately what their choices have done, what their impact is [helps students learning].

4. Discussion

4.1. Features that Increased Program Usability

4.2. Features that Decreased Program Usability

4.3. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

Appendix A


| School | School Enrollment | Black | White | Hispanic | Two or More Races | Asian | American Indian or Pacific Islander |
|---|---|---|---|---|---|---|---|
| School A | 537 | 42.8% | 27.7% | 18.2% | 9.5% | 1.3% | 0.4% |
| School B | 504 | 39.5% | 31.5% | 16.7% | 11.5% | 0.6% | 0.2% |
| School C | 500 | 36.8% | 23.4% | 27.4% | 11.4% | 1% | — |
| School D | 515 | 48.5% | 17.3% | 22.5% | 10.3% | 0.8% | 0.6% |
| School E | 363 | 29.2% | 55.9% | 12.1% | 2.8% | — | — |
| School F | 494 | 36.8% | 30.2% | 21.5% | 8.1% | 3.2% | 0.2% |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Cottone, A.M.; Yoon, S.A.; Coulter, B.; Shim, J.; Carman, S. Building System Capacity with a Modeling-Based Inquiry Program for Elementary Students: A Case Study. Systems 2021, 9, 9. https://doi.org/10.3390/systems9010009
