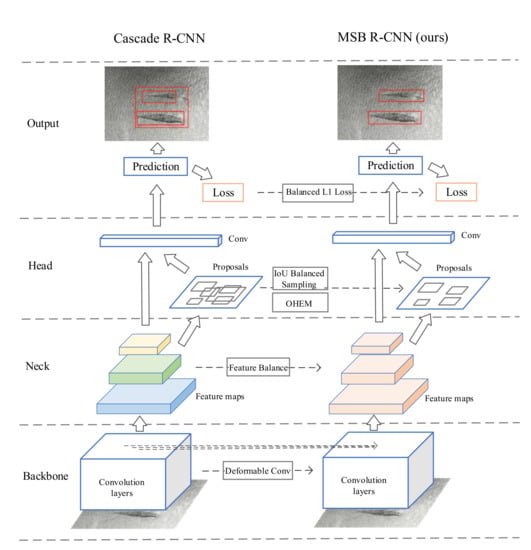

Figure 1.

Multi-stage balanced R-CNN (MSB R-CNN) network framework. The backbone of the network incorporates deformable convolution to balance high-level and low-level features. The balanced features then pass through the three-stage detector. Loss balancing is carried out in each stage, and different sampling methods are adopted at different stages.

Figure 2.

Regular convolution (left) and deformable convolution (right) for defect images. Unlike regular convolution, which uses a fixed-shape convolution kernel, deformable convolution calculates offsets and the orientation for sampling points, which makes the shape of the convolution kernel variable, thereby improving the ability to extract shape features.
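The difference between the two sampling schemes can be sketched for a single output location. This is a minimal illustration, not the paper's implementation: the function names `bilinear_sample` and `deformable_conv_at` are hypothetical, and the offsets are passed in by hand, whereas in a real deformable convolution layer they would be predicted from the input features.

```python
import math

def bilinear_sample(image, y, x):
    """Bilinearly interpolate a 2-D list at fractional (y, x); pixels outside the image read as 0."""
    h, w = len(image), len(image[0])
    y0, x0 = math.floor(y), math.floor(x)
    value = 0.0
    for yi in (y0, y0 + 1):
        for xi in (x0, x0 + 1):
            if 0 <= yi < h and 0 <= xi < w:
                weight = (1 - abs(y - yi)) * (1 - abs(x - xi))
                value += weight * image[yi][xi]
    return value

def deformable_conv_at(image, kernel, offsets, cy, cx):
    """Response of a 3x3 kernel at (cy, cx).

    offsets[k] = (dy, dx) shifts the k-th sampling point (row-major order).
    All-zero offsets reproduce a regular 3x3 convolution (no kernel flip).
    Assumption: offsets are supplied by the caller for illustration only.
    """
    out = 0.0
    k = 0
    for i in (-1, 0, 1):
        for j in (-1, 0, 1):
            dy, dx = offsets[k]
            out += kernel[i + 1][j + 1] * bilinear_sample(image, cy + i + dy, cx + j + dx)
            k += 1
    return out

# Usage: the same kernel evaluated on a fixed grid and on a shifted grid.
image = [[float(5 * r + c) for c in range(5)] for r in range(5)]
kernel = [[1.0] * 3 for _ in range(3)]
regular = deformable_conv_at(image, kernel, [(0.0, 0.0)] * 9, 2, 2)
deformed = deformable_conv_at(image, kernel, [(0.5, 0.5)] * 9, 2, 2)
```

Because the sampling points are fractional, bilinear interpolation is needed to read the feature map between pixel centers.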

Figure 3.

Pipeline and heat map visualization of the balanced feature pyramid. C1–C5 represent different levels of feature maps output from the backbone network, and C2–C5 are used for feature integration. Multi-scale feature integration and refinement yield the balanced feature pyramid. Finally, an identity connection is applied, that is, the original features are added to the output.
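The integrate, refine, and identity-connect steps in this caption can be sketched on single-channel feature maps. This is a simplified illustration under stated assumptions: nearest-neighbour resizing is used in both directions, the `refine` step defaults to a pass-through placeholder, and the names `resize_nearest` and `balanced_feature_pyramid` are hypothetical rather than taken from the paper.

```python
def resize_nearest(fmap, out_h, out_w):
    """Resize a 2-D list to (out_h, out_w) with nearest-neighbour sampling."""
    in_h, in_w = len(fmap), len(fmap[0])
    return [[fmap[r * in_h // out_h][c * in_w // out_w] for c in range(out_w)]
            for r in range(out_h)]

def balanced_feature_pyramid(levels, refine=lambda f: f, ref_index=1):
    """levels: list of 2-D maps (e.g. C2..C5), finest first.

    1. Integrate: resize every level to the reference size and average.
    2. Refine: apply `refine` (placeholder; identity by default).
    3. Identity connection: resize back and add to each original level.
    """
    ref_h, ref_w = len(levels[ref_index]), len(levels[ref_index][0])
    resized = [resize_nearest(f, ref_h, ref_w) for f in levels]
    n = len(levels)
    integrated = [[sum(f[r][c] for f in resized) / n for c in range(ref_w)]
                  for r in range(ref_h)]
    refined = refine(integrated)
    outputs = []
    for f in levels:
        h, w = len(f), len(f[0])
        back = resize_nearest(refined, h, w)
        outputs.append([[f[r][c] + back[r][c] for c in range(w)] for r in range(h)])
    return outputs

# Usage: four constant maps standing in for C2..C5.
c2 = [[1.0] * 8 for _ in range(8)]
c3 = [[2.0] * 4 for _ in range(4)]
c4 = [[3.0] * 2 for _ in range(2)]
c5 = [[6.0]]
balanced = balanced_feature_pyramid([c2, c3, c4, c5])
```

Every output level keeps its original resolution and receives the same averaged signal, so no single level dominates the fused representation.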

Figure 4.

The class distribution of samples in the training set of (a) DAGM2007 and (b) GC10.

Figure 5.

Examples of detection results on the DAGM2007 data set.

Figure 6.

Examples of detection results on the GC10 data set.

Figure 7.

Feature comparison of deformable convolution and standard convolution. Judging from the features of the four stages extracted from the backbone network, the deformable convolution fits the shape of the defect better than the original convolution in the last stage.

Figure 8.

Comparison of the balanced feature and the FPN feature. After feature balancing, the features are strengthened and the defect area is more pronounced.

Table 1.

Detection accuracy on DAGM2007 (%). mAP is AP (IoU = 0.5:0.95, AR = all), AP50 is AP (IoU = 0.5, AR = all), AP75 is AP (IoU = 0.75, AR = all), APS is AP (IoU = 0.5:0.95, AR = S), APM is AP (IoU = 0.5:0.95, AR = M), and APL is AP (IoU = 0.5:0.95, AR = L). AR is the average recall for objects, AR = S is AR for small objects (area < 32² pixels), AR = M is AR for medium objects (32² < area < 96² pixels), and AR = L is AR for large objects (area > 96² pixels).

| AP | Faster R-CNN [22] | Grid R-CNN [9] | RetinaNet [28] | Cascade R-CNN [7] | SSD [25] | Libra R-CNN [14] | Ours |
|---|---|---|---|---|---|---|---|
| mAP | 64.6 | 66.5 | 65.2 | 66.0 | 63.2 | 66.7 | 67.5 |
| AP50 | 99.1 | 98.7 | 99.4 | 99.2 | 98.6 | 98.9 | 98.9 |
| AP75 | 74.5 | 78.9 | 75.5 | 77.6 | 73.3 | 77.7 | 79.8 |
| APS | 54.7 | 56.7 | 57.1 | 60.7 | 55.9 | 61.6 | 60.1 |
| APM | 63.2 | 65.8 | 63.5 | 64.7 | 62.8 | 65.6 | 65.8 |
| APL | 66.0 | 67.4 | 65.2 | 66.8 | 65.5 | 66.1 | 69.0 |
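
The IoU thresholds and object-size groups behind these metrics can be illustrated with a short sketch. It assumes the COCO evaluation convention (ten IoU thresholds from 0.50 to 0.95 in steps of 0.05, and size boundaries at 32² and 96² pixels); the helper names `box_iou`, `thresholds_passed`, and `size_bucket` are hypothetical and are not part of any evaluation toolkit.

```python
def box_iou(a, b):
    """IoU of two axis-aligned boxes given as (x1, y1, x2, y2)."""
    iw = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    ih = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = iw * ih
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0

# IoU = 0.5:0.95 means averaging AP over these ten thresholds
# (assumed COCO convention).
IOU_THRESHOLDS = [t / 100 for t in range(50, 100, 5)]

def thresholds_passed(iou):
    """Number of IoU thresholds at which a detection would count as a match."""
    return sum(iou >= t for t in IOU_THRESHOLDS)

def size_bucket(box, small_max=32 ** 2, medium_max=96 ** 2):
    """Assign a box to S, M, or L by pixel area (assumed COCO boundaries)."""
    area = (box[2] - box[0]) * (box[3] - box[1])
    if area < small_max:
        return "S"
    if area < medium_max:
        return "M"
    return "L"

# Usage: a prediction shifted by half its width against a ground-truth box.
iou = box_iou((0, 0, 10, 10), (5, 0, 15, 10))
bucket = size_bucket((0, 0, 50, 50))
```

AP50 counts a detection as correct at the loosest threshold only, which is why it is much higher than mAP in the table: mAP also averages over the strict thresholds up to 0.95.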

Table 2.

Detection accuracy (%) for each category of DAGM2007 (mAP).

| Class | Faster R-CNN [22] | Grid R-CNN [9] | RetinaNet [28] | Cascade R-CNN [7] | SSD [25] | Libra R-CNN [14] | Ours |
|---|---|---|---|---|---|---|---|
| Class1 | 60.5 | 63.2 | 62.5 | 63.1 | 52.6 | 63.5 | 62.4 |
| Class2 | 64.4 | 68.3 | 66.9 | 66.2 | 67.3 | 70.1 | 70.8 |
| Class3 | 56.5 | 55.0 | 56.0 | 56.4 | 56.1 | 57.0 | 54.3 |
| Class4 | 69.2 | 72.2 | 70.2 | 66.5 | 68.5 | 68.3 | 70.9 |
| Class5 | 69.6 | 73.6 | 72.8 | 72.0 | 71.7 | 71.5 | 74.2 |
| Class6 | 70.6 | 70.7 | 71.7 | 75.4 | 65.8 | 73.9 | 75.1 |
| Class7 | 58.8 | 63.4 | 60.9 | 62.8 | 59.5 | 62.8 | 65.1 |
| Class8 | 51.9 | 54.9 | 53.7 | 53.0 | 48.2 | 54.9 | 53.9 |
| Class9 | 72.2 | 70.7 | 69.8 | 69.8 | 69.7 | 71.0 | 71.2 |
| Class10 | 72.0 | 74.1 | 71.9 | 73.4 | 72.6 | 74.7 | 76.7 |

Table 3.

Detection accuracy on GC10 (%).

| AP | Faster R-CNN [22] | Grid R-CNN [9] | RetinaNet [28] | Cascade R-CNN [7] | SSD [25] | Libra R-CNN [14] | Ours |
|---|---|---|---|---|---|---|---|
| mAP | 29.7 | 27.9 | 25.6 | 33.4 | 24.7 | 29.1 | 34.0 |
| AP50 | 64.5 | 61.4 | 53.9 | 66.6 | 55.9 | 62.3 | 67.2 |
| AP75 | 25.8 | 20.3 | 21.3 | 30.7 | 19.7 | 23.1 | 32.7 |
| APS | - | - | - | - | - | - | - |
| APM | 18.2 | 18.2 | 16.7 | 18.3 | 14.7 | 17.5 | 17.5 |
| APL | 29.3 | 27.1 | 24.2 | 32.5 | 23.0 | 28.4 | 34.0 |

Table 4.

Detection accuracy (%) for each category of GC10 (mAP).

| Class | Faster R-CNN [22] | Grid R-CNN [9] | RetinaNet [28] | Cascade R-CNN [7] | SSD [25] | Libra R-CNN [14] | Ours |
|---|---|---|---|---|---|---|---|
| Crease | 7.0 | 9.1 | 3.0 | 13.3 | 5.0 | 4.2 | 9.6 |
| Crescent_gap | 59.4 | 54.9 | 54.5 | 61.3 | 47.7 | 60.3 | 62.2 |
| Inclusion | 10.0 | 8.6 | 7.1 | 9.7 | 4.4 | 9.9 | 9.4 |
| Oil_spot | 25.4 | 22.2 | 22.9 | 24.2 | 16.5 | 22.6 | 26.6 |
| Punching | 54.0 | 54.3 | 53.5 | 54.2 | 52.0 | 54.5 | 54.8 |
| Rolled_pit | 16.0 | 16.5 | 2.6 | 15.9 | 6.0 | 17.7 | 19.6 |
| Silk_spot | 23.1 | 21.7 | 22.4 | 25.0 | 15.6 | 21.8 | 22.9 |
| Waist_folding | 34.6 | 35.5 | 30.6 | 36.2 | 22.0 | 30.8 | 41.8 |
| Water_spot | 41.2 | 40.5 | 40.1 | 44.0 | 33.5 | 41.5 | 42.9 |
| Welding_line | 26.6 | 16.0 | 22.2 | 49.9 | 44.7 | 27.9 | 50.4 |

Table 5.

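Results of ablation experiments (%). dcn means deformable convolution, bf means feature balance, bl means balanced L1 loss, and sam means the stage-wise sampling methods.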

| AP | Baseline | Baseline + dcn | Baseline + dcn + bf | Baseline + dcn + bf + bl | Baseline + dcn + bf + bl + sam |
|---|---|---|---|---|---|
| mAP | 66.0 | 66.5 | 66.7 | 67.0 | 67.5 |
| AP50 | 99.2 | 99.1 | 98.7 | 98.6 | 98.9 |
| AP75 | 77.6 | 77.8 | 79.0 | 78.6 | 79.8 |
| APS | 60.7 | 62.6 | 59.1 | 59.7 | 60.1 |
| APM | 64.7 | 65.4 | 64.9 | 66.0 | 65.8 |
| APL | 66.8 | 66.4 | 68.3 | 65.5 | 69.0 |

Table 6.

Impact of deformable convolution being added at different stages (check symbol √ means deformable convolution being applied).

| Experiment | Stage 1 | Stage 2 | Stage 3 | mAP@IoU = 0.5:0.95 (%) | Params (M) |
|---|---|---|---|---|---|
| 1 | | | | 66.0 | 68.96 |
| 2 | √ | | | 66.5 | 69.04 |
| 3 | | √ | | 66.4 | 69.04 |
| 4 | | | √ | 66.5 | 69.04 |
| 5 | | √ | √ | 66.3 | 69.29 |
| 6 | √ | | √ | 66.6 | 69.29 |
| 7 | √ | √ | | 66.1 | 69.29 |
| 8 | √ | √ | √ | 66.5 | 69.54 |

Table 7.

Feature balance result (bf means feature balance).

| AP | Baseline | Baseline + bf |
|---|---|---|
| mAP | 66.0 | 66.3 |
| AP50 | 99.2 | 99.0 |
| AP75 | 77.6 | 77.9 |
| APS | 60.7 | 63.0 |
| APM | 64.7 | 65.1 |
| APL | 66.8 | 67.8 |

Table 8.

Experimental results of adding balanced L1 loss function at different stages (check symbol √ means applying balanced L1 loss function).

| Experiment | Stage 1 | Stage 2 | Stage 3 | mAP@IoU = 0.5:0.95 (%) |
|---|---|---|---|---|
| 1 | | | | 66.0 |
| 2 | √ | | | 66.5 |
| 3 | | √ | | 66.5 |
| 4 | | | √ | 66.2 |
| 5 | | √ | √ | 66.5 |
| 6 | √ | | √ | 66.6 |
| 7 | √ | √ | | 66.7 |
| 8 | √ | √ | √ | 65.9 |

Table 9.

Experimental results of adding OHEM sampler in different stages (check symbol √ indicates OHEM is applied).

| Experiment | Stage 1 | Stage 2 | Stage 3 | mAP@IoU = 0.5:0.95 (%) |
|---|---|---|---|---|
| 1 | | | | 66.0 |
| 2 | √ | | | 66.7 |
| 3 | | √ | | 66.5 |
| 4 | | | √ | 66.2 |
| 5 | | √ | √ | 66.5 |
| 6 | √ | | √ | 66.7 |
| 7 | √ | √ | | 66.1 |
| 8 | √ | √ | √ | 66.4 |

Table 10.

Experimental results of IoU balanced sampler in different stages (check symbol √ indicates IoU balanced sampling is applied).

| Experiment | Stage 1 | Stage 2 | Stage 3 | mAP@IoU = 0.5:0.95 (%) |
|---|---|---|---|---|
| 1 | | | | 66.0 |
| 2 | √ | | | 66.3 |
| 3 | | √ | | 66.0 |
| 4 | | | √ | 66.3 |
| 5 | | √ | √ | 66.4 |
| 6 | √ | | √ | 66.6 |
| 7 | √ | √ | | 66.6 |
| 8 | √ | √ | √ | 66.5 |

Table 11.

Experimental results of adding IoU balance and OHEM sampler combination in different stages (check symbol √ indicates IoU balanced sampling is applied; otherwise, OHEM sampler is used).

| Experiment | Stage 1 | Stage 2 | Stage 3 | mAP@IoU = 0.5:0.95 (%) |
|---|---|---|---|---|
| 1 | | | | 66.5 |
| 2 | √ | | | 66.4 |
| 3 | | √ | | 66.3 |
| 4 | | | √ | 66.3 |
| 5 | | √ | √ | 66.2 |
| 6 | √ | | √ | 66.4 |
| 7 | √ | √ | | 66.8 |
| 8 | √ | √ | √ | 66.6 |