1. Introduction

Archaeological prospection using geophysical approaches is essential to enhance scientific understanding of archaeological areas and to detect potential remains [1,2]. While the study of hidden features has been a major focus of archaeologists using excavation methods [3,4,5,6], developments in geophysics and remote sensing (RS) (e.g., ground-penetrating radar, drone-based photogrammetry, and laser scanning) have led to an evolution in archaeological studies. Scientists, engineers, and archaeologists can now apply RS approaches to inspect and survey areas of interest, thus avoiding the often-destructive process of excavation [7,8]. These non-invasive methods are significantly more sustainable for archaeological sites than traditional excavation and should be the preferred approaches [9,10].

RS techniques, including Light Detection and Ranging (LiDAR) and aerial photography, can be applied to identify archaeological topographic features both automatically and manually [11,12,13]. LiDAR- and photogrammetry-derived digital models have been applied in several archaeological projects to demonstrate how RS approaches can be used to identify, interpret, and assess the characteristics of archaeological sites [13,14,15,16,17]. For example, in [18], Digital Surface Models (DSMs) were derived from LiDAR data and with the Leica Photogrammetric Suite (LPS) and used to generate orthoimages for feature detection in Vaihingen, Germany. The authors found that buildings (e.g., in the Vaihingen block) are easier to differentiate when LiDAR and photogrammetry are applied together than when LiDAR data are used alone. Airborne Laser Scanning (ALS) was also used to create Digital Terrain Models (DTMs) of the southern part of Devil’s Furrow (a prehistoric pathway) in the Czech Republic, which highlighted the smallest terrain discontinuities at the study site (e.g., erosion furrows and tracks) [11]. In [19], a digital elevation model (DEM) was combined with an orthomosaic created from Unmanned Aerial Vehicle (UAV) RGB (red, green, and blue) images of a university campus (the Sains Malaysia campus in Malaysia) to determine whether fused DSMs provide distinctive results for land cover classification. The study also improved the classification accuracy by using convolutional neural networks, and the UAV images were accurately classified into grassland, buildings, trees, paved roads, water bodies, shadow, and bare land [19]. Recently, [12] showed that a LiDAR-derived DSM and Google Earth imagery can identify hidden sites (e.g., ancient forts), demonstrating the potential of RS tools for mapping a Roman-period study site in the Wadi El-Melah Valley in Gafsa, Tunisia, a series of plains surrounded by mountains (maximum altitude around 1480 m). Using RS data, they detected two sites in southwest Tunisia suspected to be Roman forts, confirmed by the discovery of brick fragments and several pottery sherds at the forts, and delineated a Roman boundary in southern Tunisia. Taken together, these studies show that RS is a robust tool for archaeological prospection.

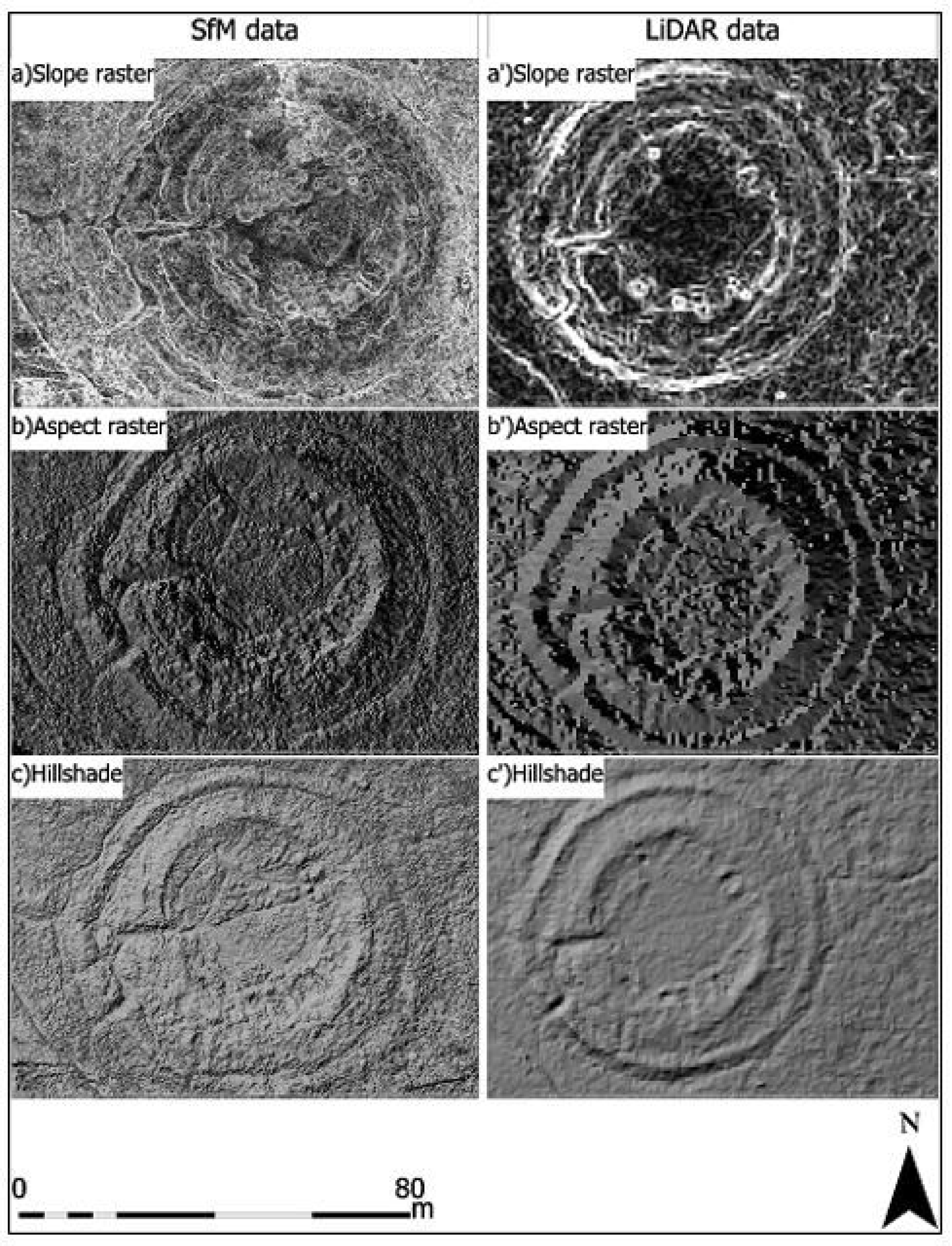

In addition, several visualization methods, such as slope and aspect images derived from digital models, can support the successful detection of archaeological features [1,11,14,15,16]. Specifically, slope images display the steepness of elevation change in models derived from LiDAR DTMs, while aspect images show the direction in which each slope faces across the study site [20]. In [20], two mounds and a possible new shell ring were discovered. Additionally, [6] used a hillshade visualization of LiDAR data with a point density of 1 point/m² to provide topographic details of Barwhill (north of Gatehouse of Fleet in Scotland) and detected several archaeological remains, such as linear features that signify old water drainage and another feature that corresponds to the Roman road. However, in some cases features could not be extracted from the hillshade images because the illumination model creates distortions that hide some archaeological features. Similarly, [20] found that the lighting in hillshade images could obscure topographic features, so the authors created Red Relief Image Maps (RRIMs) from LiDAR data to detect and digitize mounds. RRIM is another visualization method, suitable for representing and interpreting monuments and other features on various terrains, such as land surfaces, the seafloor, and celestial bodies [14]. RRIM overcomes limitations of other visualization methods such as hillshade (e.g., dependence on light direction, filtering, and weakness in scaling). In [15], different visualization methods were applied and evaluated under various conditions; the authors found that RRIM gives the end user a considerable visualization advantage over other methods, as it can reveal subtle archaeological remains in the raster. Moreover, visualization techniques such as hillshade, slope, and positive openness can be computed with the Relief Visualization Toolbox (RVT) for the discovery and recognition of small-scale features [16,21].
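
The slope, aspect, and hillshade rasters discussed above are all simple functions of the elevation gradient. The following is a minimal NumPy sketch, not the implementation used in RVT or in the cited studies; it assumes a north-up DTM stored as a NumPy array with square cells of known size, and the function names `slope_aspect` and `hillshade` are hypothetical. The hillshade uses the common single-light-source Lambertian formula, with an assumed default light from azimuth 315° at 45° altitude.

```python
import numpy as np


def slope_aspect(dtm: np.ndarray, cell_size: float) -> tuple[np.ndarray, np.ndarray]:
    """Return slope (degrees) and aspect (compass degrees, clockwise from north).

    Assumes row 0 is the northern edge of the raster and columns increase eastward.
    Aspect is meaningless on perfectly flat cells.
    """
    # Derivatives along axis 0 (rows, pointing south) and axis 1 (columns, pointing east)
    dz_drow, dz_dcol = np.gradient(dtm.astype(float), cell_size)
    dz_dx = dz_dcol   # elevation change per metre towards the east
    dz_dy = -dz_drow  # elevation change per metre towards the north
    slope = np.degrees(np.arctan(np.hypot(dz_dx, dz_dy)))
    # Aspect is the bearing of the steepest downhill direction, i.e. of the negative gradient
    aspect = np.degrees(np.arctan2(-dz_dx, -dz_dy)) % 360.0
    return slope, aspect


def hillshade(dtm: np.ndarray, cell_size: float,
              sun_azimuth: float = 315.0, sun_altitude: float = 45.0) -> np.ndarray:
    """Single-light-source Lambertian hillshade scaled to 0..255."""
    slope, aspect = slope_aspect(dtm, cell_size)
    s = np.radians(slope)
    zenith = np.radians(90.0 - sun_altitude)
    rel_azimuth = np.radians(sun_azimuth - aspect)
    shade = np.cos(zenith) * np.cos(s) + np.sin(zenith) * np.sin(s) * np.cos(rel_azimuth)
    # Slopes turned away from the light get no direct illumination
    return 255.0 * np.clip(shade, 0.0, 1.0)


if __name__ == "__main__":
    # Hypothetical 10 x 10 m DTM rising 1 m per metre towards the east
    dtm = np.tile(np.arange(10.0), (10, 1))
    slope, aspect = slope_aspect(dtm, cell_size=1.0)
    print("slope:", slope.mean(), "aspect:", aspect.mean())  # about 45 and 270 (west-facing)
    for az in (315.0, 135.0):
        print("sun azimuth", az, "-> mean hillshade", hillshade(dtm, 1.0, sun_azimuth=az).mean())
```

Running the example illustrates the light-direction dependence noted above: the slope raster of the surface is the same whatever the light source, whereas its hillshade brightness changes strongly with `sun_azimuth`, so a feature can be brightened or washed out purely by the choice of illumination.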

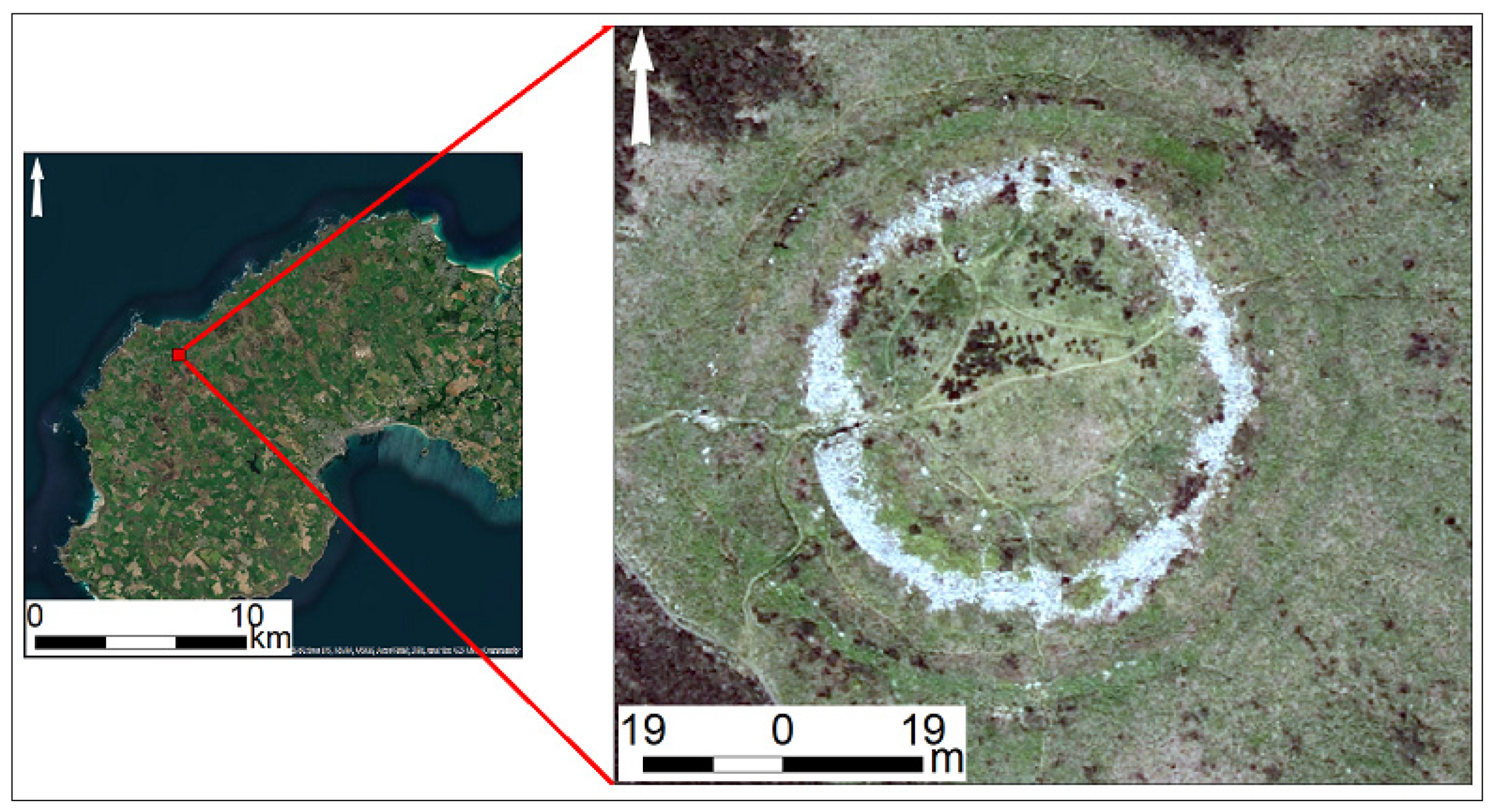

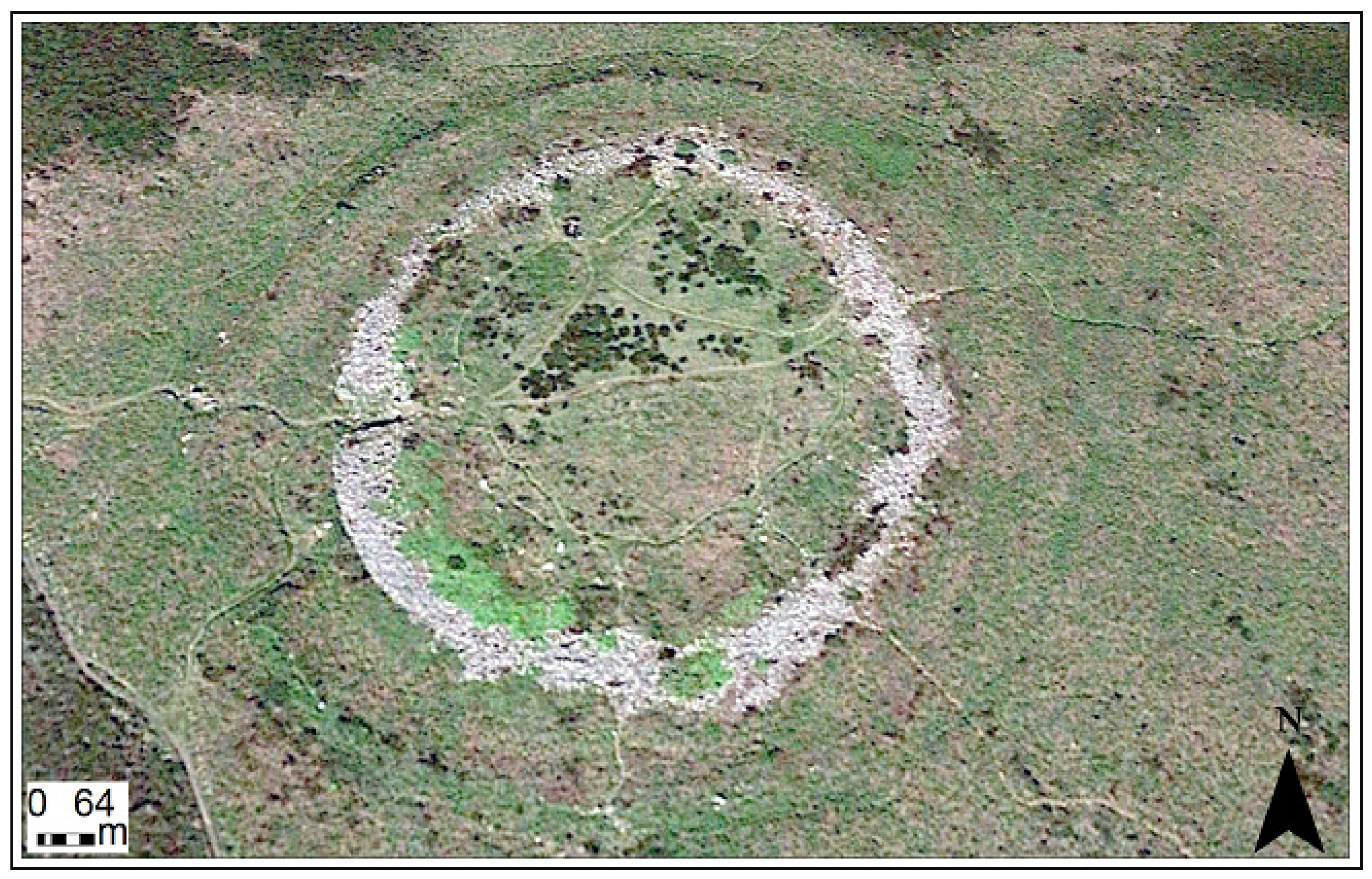

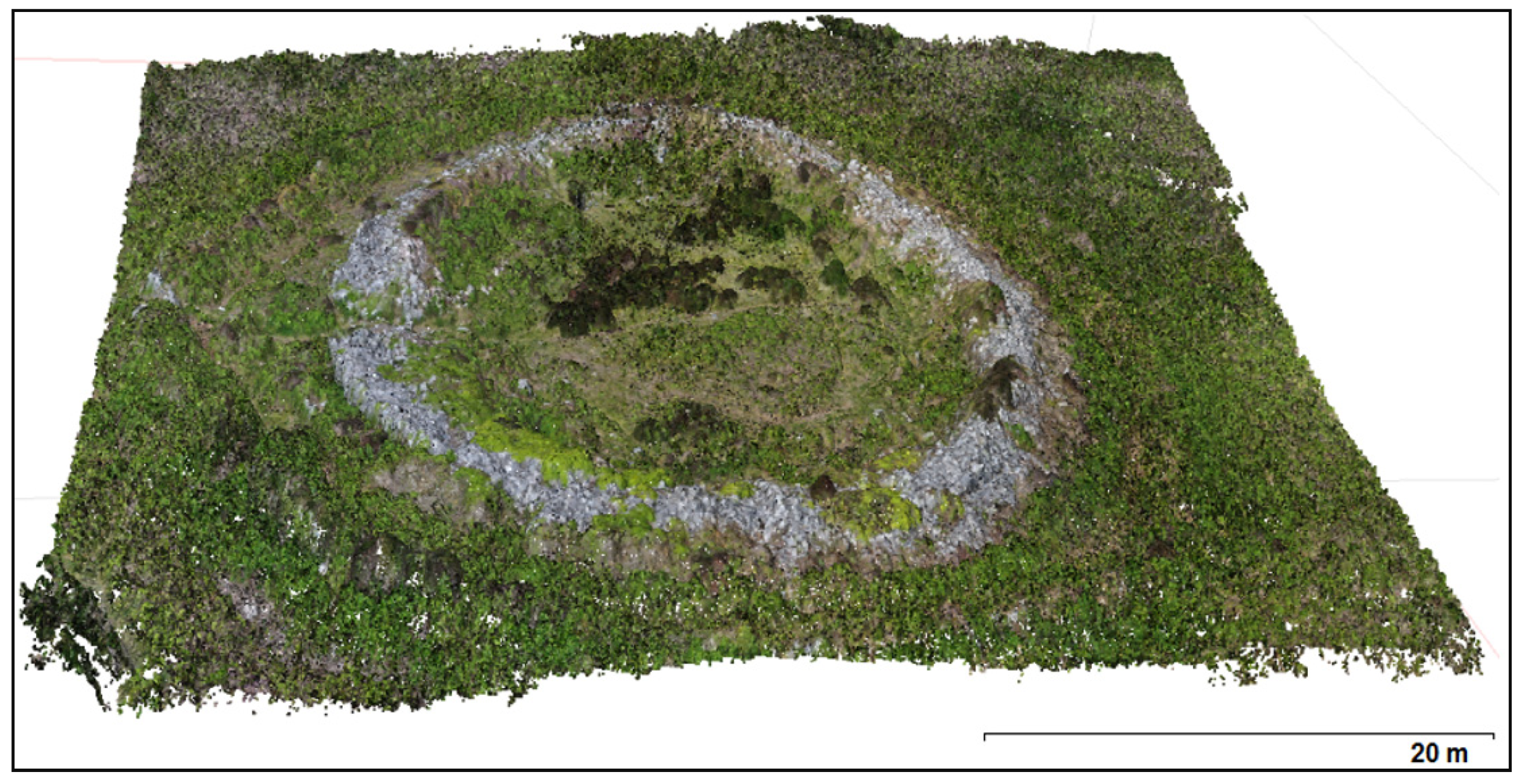

Many studies have employed RS technologies in archaeology and cultural heritage. Some focused on discovering and recording ancient features or sites for the first time [7,15,17]; others highlighted known archaeological features [6,11,13]. These studies are related to our research to some extent, although some target larger areas (e.g., to discover new sites). They indicate that UAV-based photogrammetry and LiDAR can make substantial further contributions to archaeological management outcomes, providing secure detection and adequate characterization of the archaeological record. The aim of this study is to demonstrate a workflow for identifying and recording archaeological features using fine-scale RS approaches (i.e., Structure from Motion-Multi-View Stereo (SfM-MVS) photogrammetry with drone data, and LiDAR data) and to critically compare their capabilities to identify archaeological features (possible remains). Aerial image and LiDAR data processing, classification tools, and visualization methods are used to detect and digitize potential archaeological features, including those that are hard to observe from ground level but relatively easy to distinguish from above.

4. Discussion

Studies [3,4] were the first to identify archaeological monuments at Chun Castle, using excavation methods. Work to date has concentrated on specific approaches for identifying archaeological landscapes (e.g., [10,11,18,20,59]) and has not yet critically compared different non-invasive (i.e., RS) methods for detecting archaeological structures. This research demonstrates interpretation and analysis methods applied to products derived from two RS approaches (i.e., LiDAR and UAV-SfM datasets) and highlights the differences in their capabilities to detect new archaeological features (potential monuments) at the study site (Table 6 and Table 7).

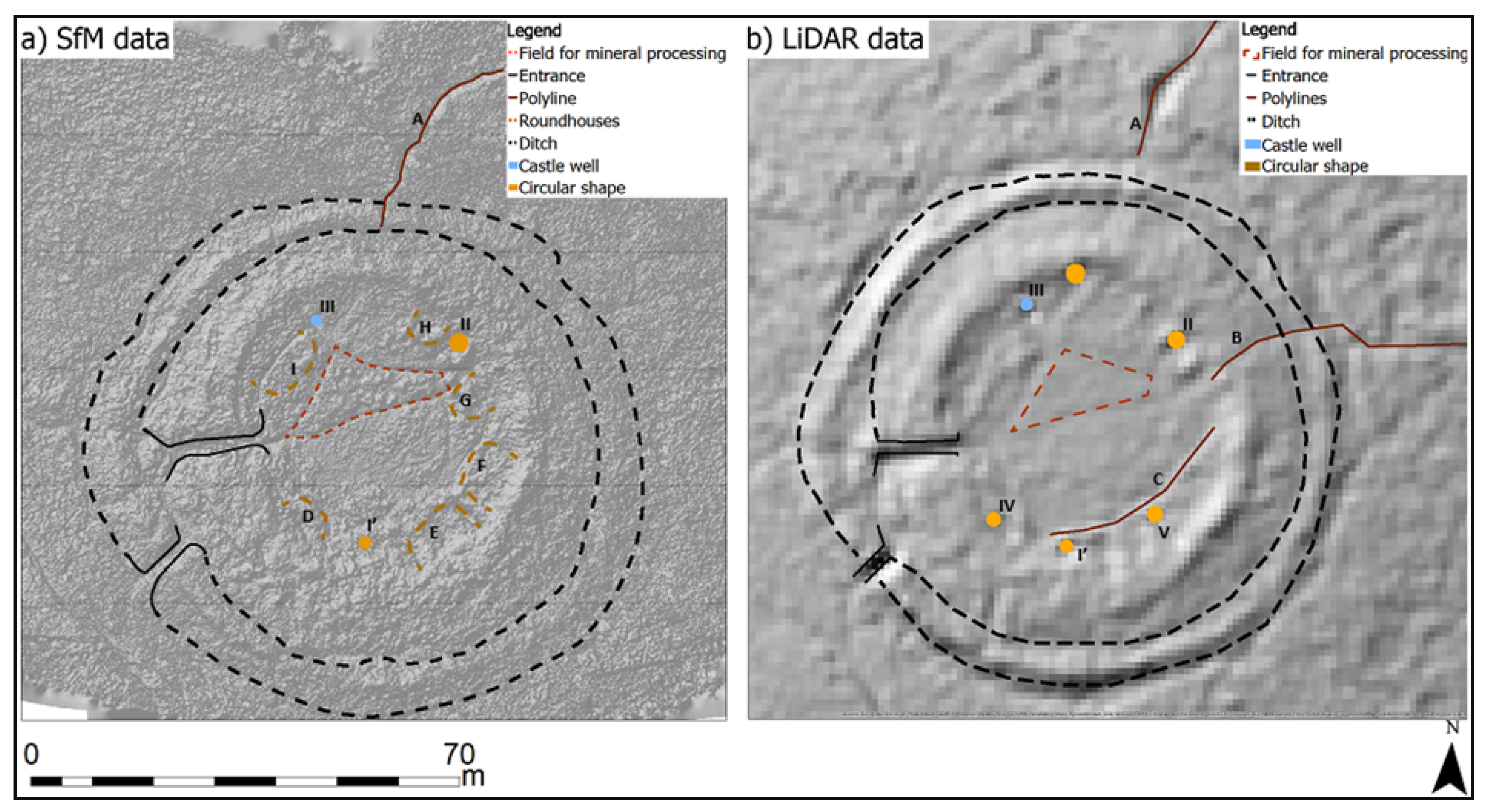

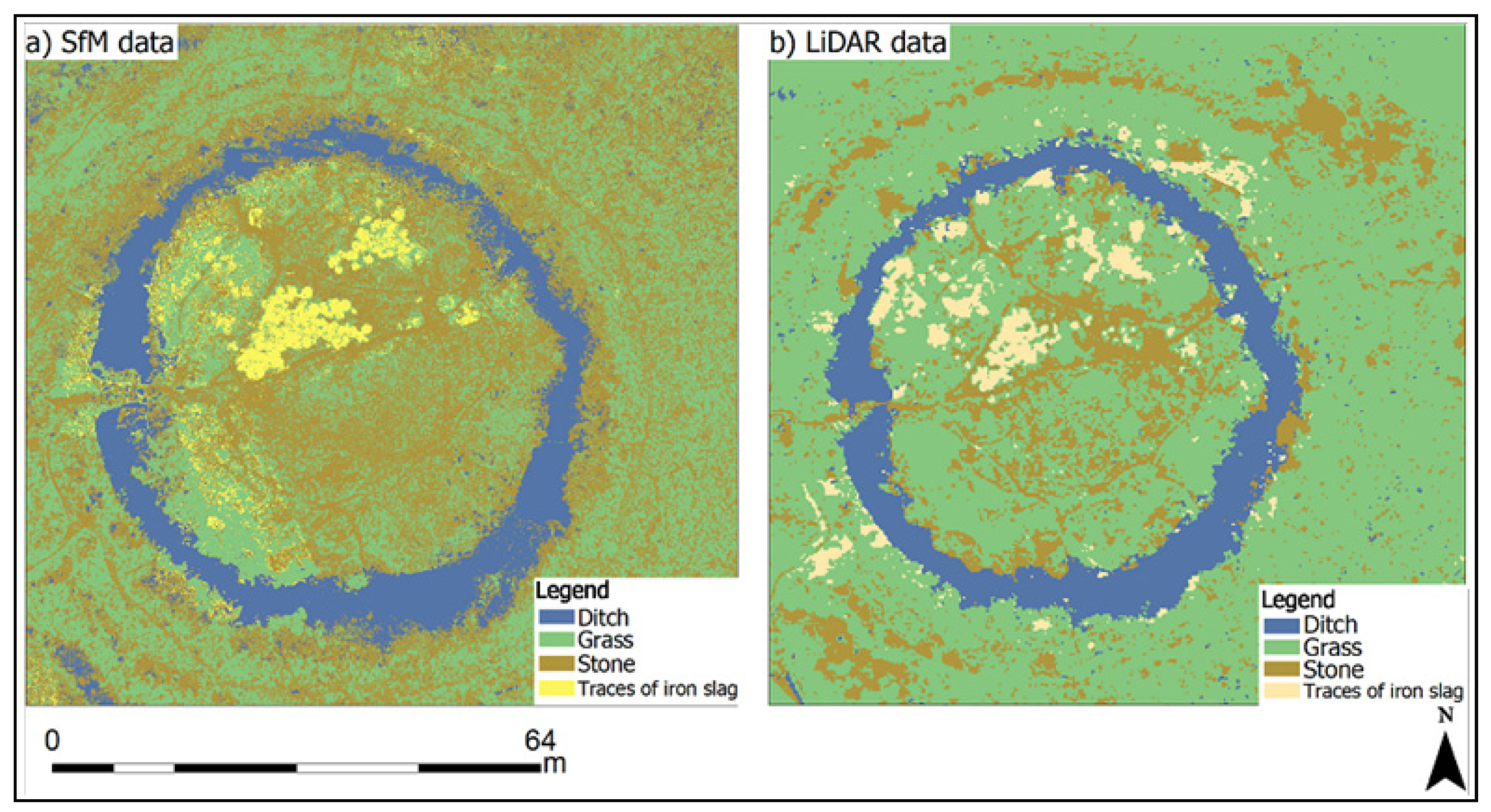

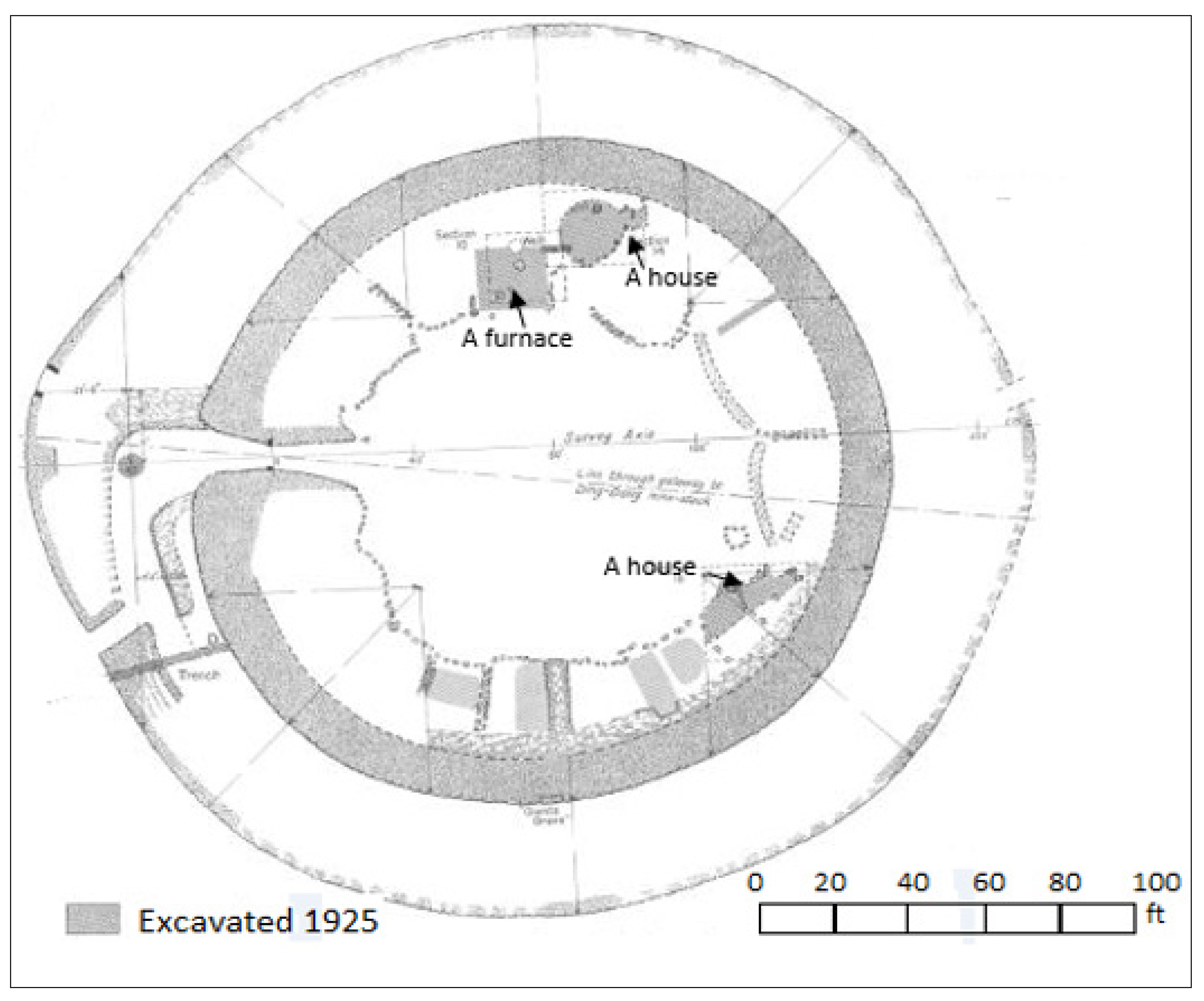

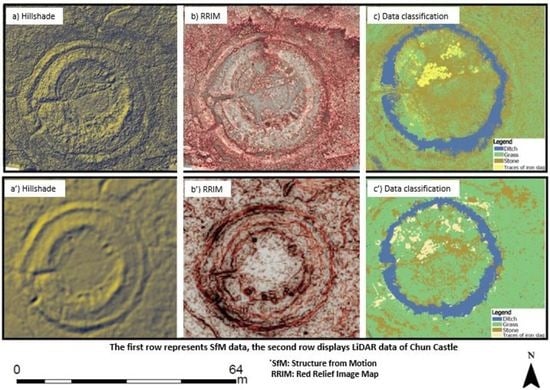

Chun Castle is in an extremely ruinous state, and the number of loose stones covering the site frequently made it challenging to identify archaeological objects from the RS data. In this study, various visualization methods were applied; the combination of the DSM, slope raster, and RRIMs delivered more detailed, less distorted, and clearer raster images than other visualization methods (e.g., hillshade), allowing more information to be extracted about the archaeological landscape (Figure 6). Using non-destructive methods, this study presents new findings at the site, including possible traces of huts/houses, linear monuments, and circular structures. In [27], possible archaeological remains, such as the castle well (one of the circular structures detected in this work), some pottery, huts, and a furnace, were identified and interpreted using excavation methods alone. A furnace in the fort (Figure 9), containing traces of iron slag and tin, indicates that the fort became a place for the blending, smelting, and production of minerals in the 16th century [3]. Additionally, according to the excavations reported in [4], there were Iron Age huts in the inner courtyard that no longer exist. This might be due to the 18th-century plundering of the site to construct houses and pave roads in Penzance.

The RRIM and hillshade raster images derived from the SfM DSMs also show possible construction remains of roundhouses/chambers, which were interpreted and digitized (Figure 6 and Figure A1 in Appendix A). Circular huts are, in general, a common form in Iron Age forts and have been revealed in most Iron Age castles [4]. Traces of six potential archaeological huts were found in this study; some of them have been reported in previous literature (Figure 9), as illustrated in Section 2. Furthermore, there was a castle well that supplied water [27]. In general, wells are valuable elements in castles, and castles sometimes had more than one well [61]. Cartwright [61] further states that around 80% of castles were supplied with one well and 20% had two or more wells. In this research, three circular features were detected, and one of them was identified from its spatial position as the castle well, based on earlier identifications in [3,27].

In this work, archaeological features were detected, quantified, and digitized at a fine landscape scale from RS datasets. Several features were detected by SfM-MVS photogrammetry with UAV data but not by LiDAR (and vice versa), although the same processing and analysis methods were applied to both datasets. This is most likely due to the difference in spatial resolution between the two datasets: for this particular site, the SfM data have a higher spatial resolution than the LiDAR DSMs. In this study, the spatial resolution of the LiDAR data was 1 m, which sets a limit to the amount of information that can be extracted from this dataset. The raw point data (if available) could be gridded at a higher resolution (e.g., 0.5 m), but cells without returns would then have to be gap-filled by interpolation algorithms; consequently, increasing the resolution in this particular case would likely not provide more useful information about the study site. Additionally, the RS data were acquired on different dates: the LiDAR data were collected in July and August 2013 and the aerial photography in June 2019. Several factors can directly or indirectly affect topographic features and cause changes in an area, such as environmental damage (e.g., flood, earthquake, and fire) and human activities (e.g., vandalism, war, development, and excavation) [62]. The study site has not been exposed to these factors or to any destructive tools, particularly between 2013 and 2019 [61], so the archaeological area itself did not change between the two acquisitions. Instead, different archaeological features were likely obtained from the two approaches because of the different settings and conditions (e.g., cameras, sensors, and resolution) under which each dataset was collected. This work thus advances our understanding of this particular archaeological landscape, which belongs to the Iron Age and the Roman period. The newly discovered possible huts and circular shapes in the castle helped to answer an archaeological question about how different methodological approaches (i.e., visualization methods and classification algorithms) can be applied to detect archaeological landscapes. The merit of identifying archaeological structures here therefore lies in understanding the capability of RS methods to interpret and measure structures/objects that might otherwise remain hidden.
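
The gridding argument can be made concrete with a toy example. The sketch below bins a hypothetical point cloud with one return per square metre (jittered inside each cell of a 1 m lattice, an idealized spacing assumed only for illustration) into rasters at 1 m and 0.5 m. The helper `grid_points` and the local coordinate extent are hypothetical; real LiDAR processing would use dedicated software and a proper interpolation scheme.

```python
import numpy as np


def grid_points(x, y, z, cell_size, extent):
    """Bin LiDAR-style points into a mean-elevation raster.

    extent = (xmin, ymin, xmax, ymax) in metres; row 0 is the northern edge.
    Cells that receive no return are left as NaN (they would need gap-filling).
    """
    xmin, ymin, xmax, ymax = extent
    ncols = int(np.ceil((xmax - xmin) / cell_size))
    nrows = int(np.ceil((ymax - ymin) / cell_size))
    col = np.clip(np.floor((x - xmin) / cell_size).astype(int), 0, ncols - 1)
    row = np.clip(np.floor((ymax - y) / cell_size).astype(int), 0, nrows - 1)
    total = np.zeros((nrows, ncols))
    count = np.zeros((nrows, ncols))
    np.add.at(total, (row, col), z)    # unbuffered sum, so points in the same cell accumulate
    np.add.at(count, (row, col), 1.0)
    mean = np.full((nrows, ncols), np.nan)
    occupied = count > 0
    mean[occupied] = total[occupied] / count[occupied]
    return mean


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    # Hypothetical 20 x 20 m patch: one return per square metre, jittered inside each 1 m cell
    ii, jj = np.meshgrid(np.arange(20), np.arange(20))
    x = (ii + rng.uniform(0.05, 0.95, ii.shape)).ravel()
    y = (jj + rng.uniform(0.05, 0.95, jj.shape)).ravel()
    z = 100.0 + 0.1 * x  # gently sloping surface, illustrative only
    for cell in (1.0, 0.5):
        dsm = grid_points(x, y, z, cell, (0.0, 0.0, 20.0, 20.0))
        print(f"{cell} m grid: {dsm.shape}, empty cells: {np.isnan(dsm).mean():.0%}")
```

With this idealized spacing every 1 m cell receives a return, whereas three quarters of the 0.5 m cells are empty and would have to be filled by interpolation from neighbouring measurements rather than observed, which is the limitation described above.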

5. Conclusions

In this paper, a non-destructive routine was presented to identify potential archaeological structures at the Chun Castle site using LiDAR and UAV photogrammetry methods. The RS technologies allowed us to verify and understand the merits of the archaeological study site. Some features were identified and manually digitized using the adopted visualization methods (e.g., RRIMs), which resulted in a reliable identification of several potential hut monuments in the castle. ISO cluster and support vector machine (SVM) classification algorithms were applied to automatically detect archaeological objects across the site. Using various visualization approaches and classification tools at one archaeological site proved to be an adequate method for detecting hidden features, and the adopted algorithms enhanced the recognition of various suspected structures (e.g., roundhouses). The outcomes of the geospatial analysis allowed us to (1) detect and digitize new archaeological objects, (2) map the shape of the fort, and (3) find unknown monuments (lines, circles) in the area of interest (AOI). SfM photogrammetric data helped to detect hidden monuments in the archaeological landscape where LiDAR data provided lower levels of detail; these differences were due to the different spatial resolutions of the two datasets. The resulting DSMs and visualization raster images, together with the classification algorithms used, allowed us to digitize the topographic features of the study site and detect possible monuments. This study investigated the potential of stand-alone RS methods (LiDAR and UAV photogrammetry) for generating 3D models and identifying archaeological features of an ancient site. Our results indicate that UAV-SfM and LiDAR are valuable data sources that could be applied in archaeological projects to improve the potential for new findings.
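
The automatic classification step can be illustrated with a per-pixel clustering of a raster stack (e.g., a DSM and a slope raster). The sketch below is plain k-means, a simpler relative of the isodata clustering that, according to Esri's documentation, underlies the ArcGIS Iso Cluster tool; it omits isodata's splitting and merging of clusters and is not the implementation used in this study. The function `kmeans_classify`, the band standardization, and the farthest-first initialization are assumptions made for illustration. The supervised SVM step is not shown; it would additionally need digitized training samples (for example with scikit-learn's `sklearn.svm.SVC`).

```python
import numpy as np


def kmeans_classify(bands: np.ndarray, k: int, n_iter: int = 50, seed: int = 0) -> np.ndarray:
    """Unsupervised per-pixel clustering of a raster stack.

    bands: array of shape (n_bands, rows, cols), e.g. a DSM and a slope raster.
    Returns an integer class raster of shape (rows, cols); class numbers are arbitrary.
    """
    n_bands, rows, cols = bands.shape
    X = bands.reshape(n_bands, -1).T.astype(float)          # one feature vector per pixel
    X = (X - X.mean(axis=0)) / (X.std(axis=0) + 1e-12)      # put bands on a common scale
    rng = np.random.default_rng(seed)
    # Farthest-first initialization: a random pixel, then the pixel farthest from the chosen centres
    centres = [X[rng.integers(len(X))]]
    for _ in range(1, k):
        dist2 = ((X[:, None, :] - np.array(centres)[None, :, :]) ** 2).sum(axis=2).min(axis=1)
        centres.append(X[dist2.argmax()])
    centres = np.array(centres)
    for _ in range(n_iter):
        # Assign each pixel to its nearest centre (squared Euclidean distance)
        dist2 = ((X[:, None, :] - centres[None, :, :]) ** 2).sum(axis=2)
        labels = dist2.argmin(axis=1)
        # Move each centre to the mean of its pixels; keep it in place if the cluster is empty
        new_centres = np.array([X[labels == c].mean(axis=0) if np.any(labels == c) else centres[c]
                                for c in range(k)])
        if np.allclose(new_centres, centres):
            break
        centres = new_centres
    return labels.reshape(rows, cols)


if __name__ == "__main__":
    # Hypothetical 40 x 40 DSM: flat ground with a 0.5 m high ring, like a hut wall
    yy, xx = np.mgrid[0:40, 0:40]
    r = np.hypot(xx - 20, yy - 20)
    ring = (r > 6) & (r < 8)
    dsm = np.where(ring, 100.5, 100.0)
    classes = kmeans_classify(dsm[None], k=2)
    print("classes on ring:", np.unique(classes[ring]), "off ring:", np.unique(classes[~ring]))
```

On this synthetic DSM the ring pixels and the ground pixels end up in two different classes. On real data the classes would only be candidate features that still need archaeological interpretation, as in the manual digitization described above.
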
For future work, we recommend applying RS data-fusion approaches, which may yield more information about archaeological sites. We therefore expect that fused RS methods are likely to improve the interpretation of the RS source data and to deliver more archaeological information than stand-alone RS approaches.