Detection and Analysis of Degree of Maize Lodging Using UAV-RGB Image Multi-Feature Factors and Various Classification Methods

Abstract

1. Introduction

2. Materials and Methods

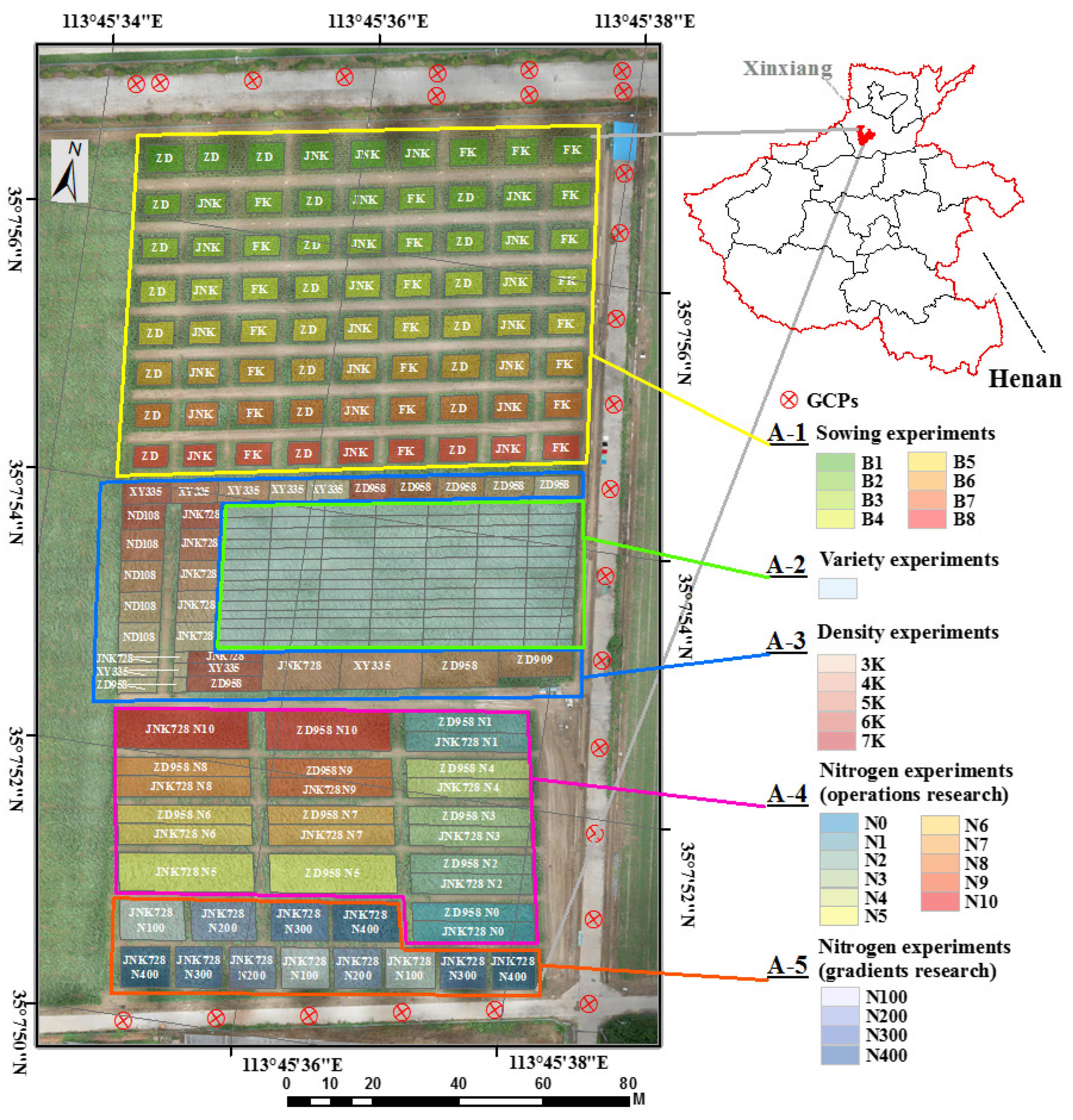

2.1. Study Area and Design of Field Experiments

2.2. UAV-RGB Image Acquisition

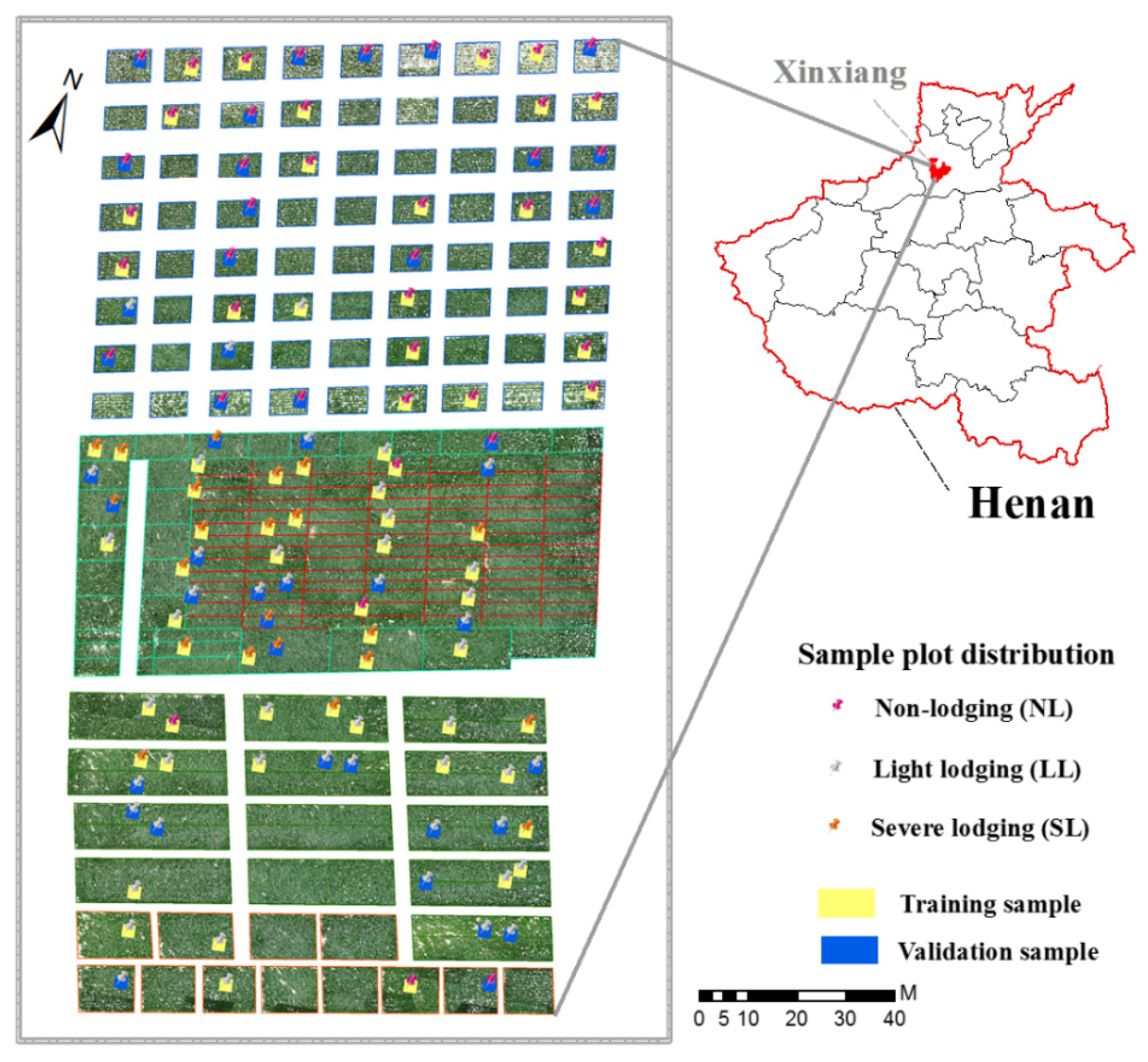

2.3. Field-Survey Data Acquisition and Lodging Type

2.4. UAV-RGB Image Preprocessing

- (1) Image filtering: Poor-quality images, such as those acquired during UAV takeoff and landing, were deleted to reduce the number of images and ensure good image quality.

- (2) Image mosaic: To obtain a sparse point cloud of the scene structure, the GPS coordinates and the inertial navigation system attitude parameters recorded by the drone flight control system were combined with the images, and Agisoft PhotoScan (Agisoft LLC, Saint Petersburg, Russia, version 1.4.5) was used to match the input images with the structure-from-motion (SfM) algorithm. Dense reconstruction then produced a dense point cloud. Next, surface reconstruction connected the discrete three-dimensional points into a polygonal mesh surface, and texture was mapped onto this surface to generate a realistic three-dimensional model. The final digital image served as the digital surface model (DSM) [21,22,23]. The ground control points obtained as described in Section 2.2 were then used for fine geometric correction of the image of the study area. The stitched RGB image used GCS_WGS_1984 as its geographic coordinate system and consisted of red, green, and blue channels, each stored with 8 bits (values from 0 to 255). The spatial resolution was 0.8 cm, and the image was stored in TIFF format.

- (3)

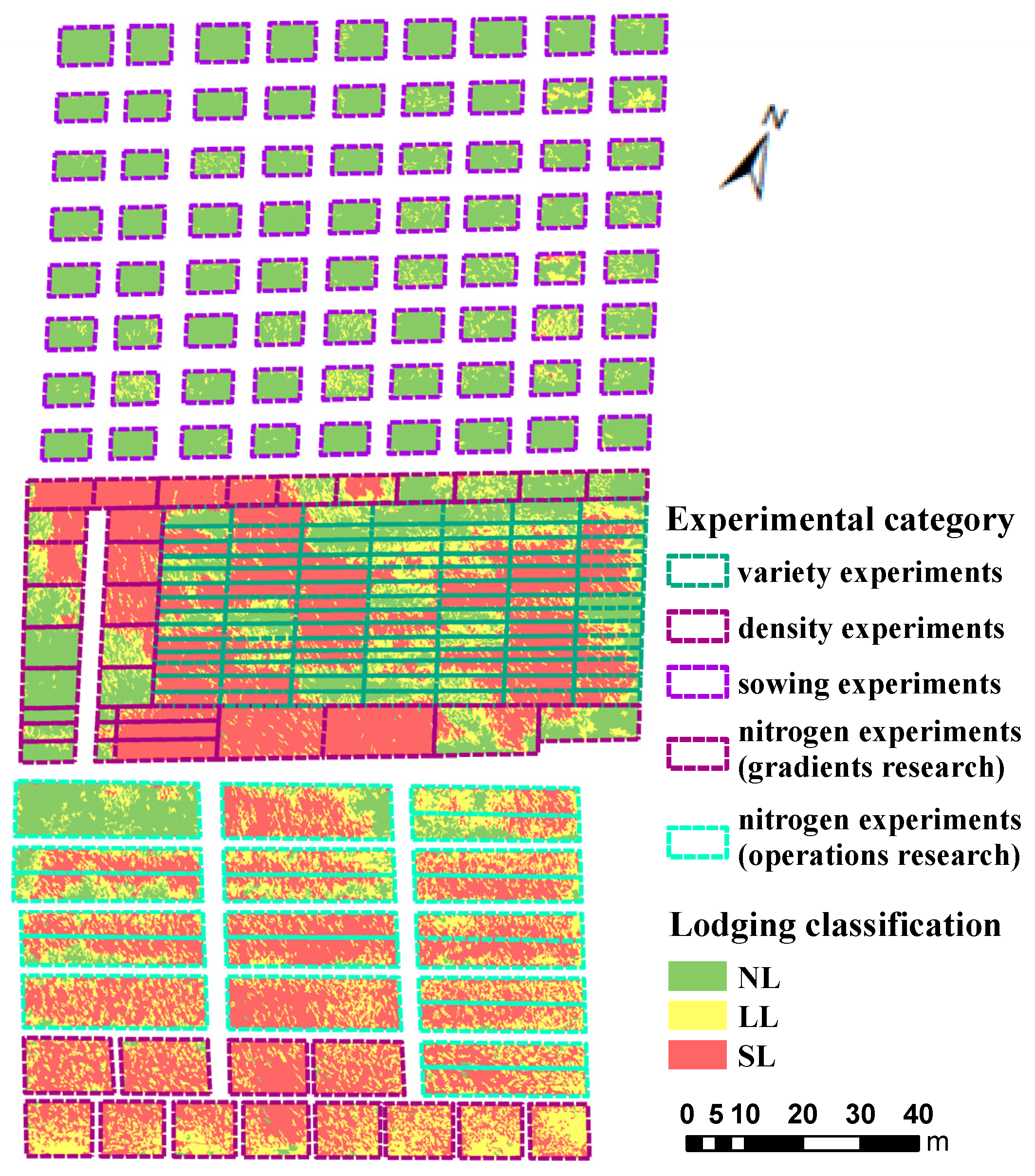

- Image clipping: ArcGIS 10.3 (Esri, USA) was combined with images and field-planting maps to outline the experimental types on the images, and cutting tools were used to remove parts that fell outside the study area, only the part of maize coverage is reserved, such as the base map in Figure 3.
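The DSM produced in step (2) records the elevation of the canopy surface, while the classification results later in the paper use a height layer written ∆DSM. A minimal pure-Python sketch is shown below, assuming that ∆DSM is the DSM minus a ground-level digital elevation model (DEM) on the same co-registered grid. The function names, the sample elevations, and the two height thresholds passed to `classify_by_height` are hypothetical and are not the values used in the study.

```python
from statistics import mean
from typing import List

Grid = List[List[float]]


def delta_dsm(dsm: Grid, dem: Grid) -> Grid:
    """Per-pixel height above ground: DSM minus DEM on co-registered grids."""
    if len(dsm) != len(dem) or any(len(a) != len(b) for a, b in zip(dsm, dem)):
        raise ValueError("DSM and DEM grids must have the same shape")
    return [[s - d for s, d in zip(srow, drow)] for srow, drow in zip(dsm, dem)]


def mean_height(grid: Grid) -> float:
    """Mean height over all cells, e.g. over one clipped plot."""
    return mean(v for row in grid for v in row)


def classify_by_height(height: float, severe_below: float, light_below: float) -> str:
    """Hypothetical rule: the lower the canopy, the heavier the lodging."""
    if severe_below >= light_below:
        raise ValueError("severe_below must be smaller than light_below")
    if height < severe_below:
        return "SL"  # severe lodging
    if height < light_below:
        return "LL"  # light lodging
    return "NL"      # non-lodging


# Hypothetical 2 x 2 plot: surface and ground elevations in metres.
dsm = [[52.10, 52.30], [51.20, 51.00]]
dem = [[50.00, 50.05], [50.00, 50.00]]
heights = delta_dsm(dsm, dem)
print(classify_by_height(mean_height(heights), severe_below=1.0, light_below=1.8))  # LL
```

Working with height above ground rather than raw elevation removes the effect of terrain slope, so plots at different ground levels can share one set of thresholds.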

2.5. Technical Process of Study

2.6. Extraction of Maize Lodging

2.6.1. Extraction of Characteristic Factors for Different Degrees of Lodging of Maize

- (1)

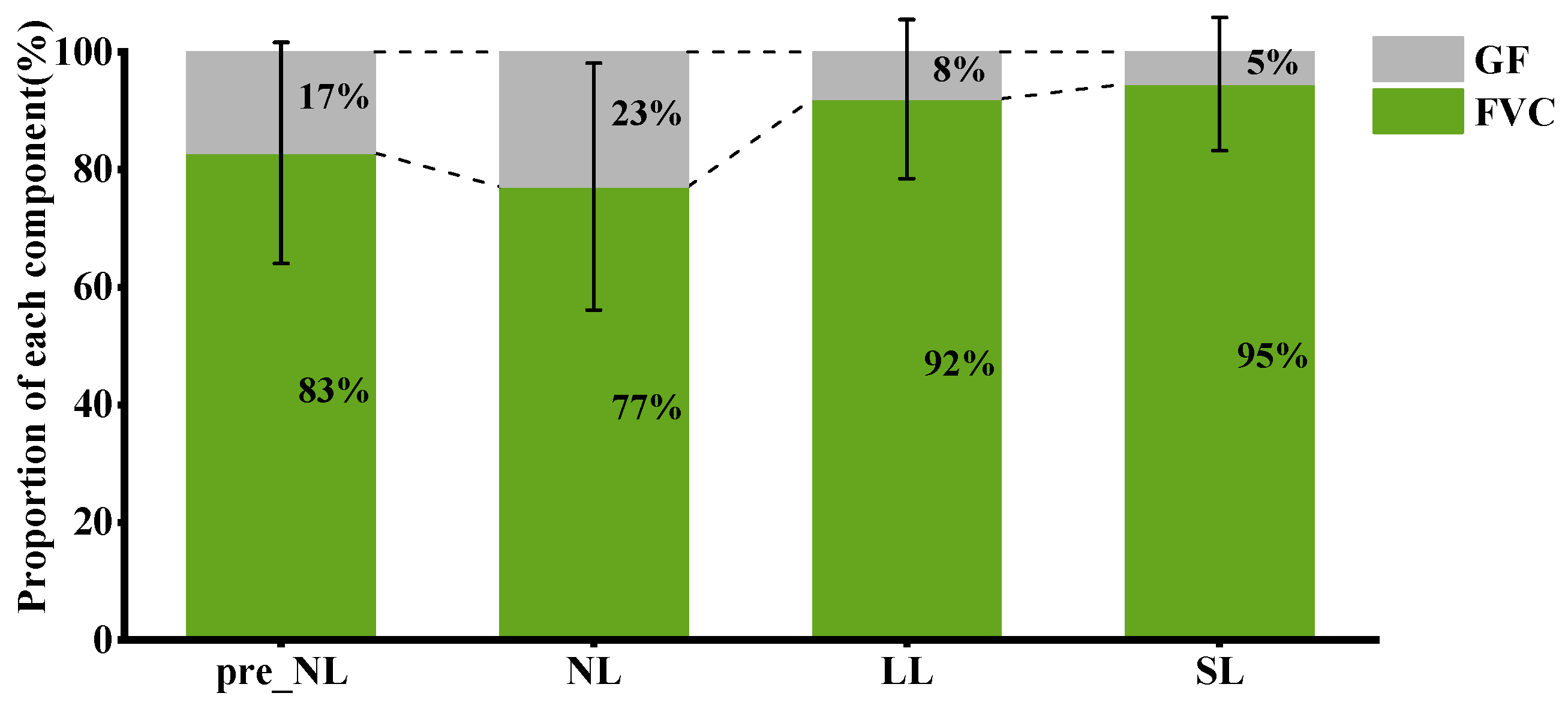

- Separation of soil background and extraction of canopy coverage

- (2)

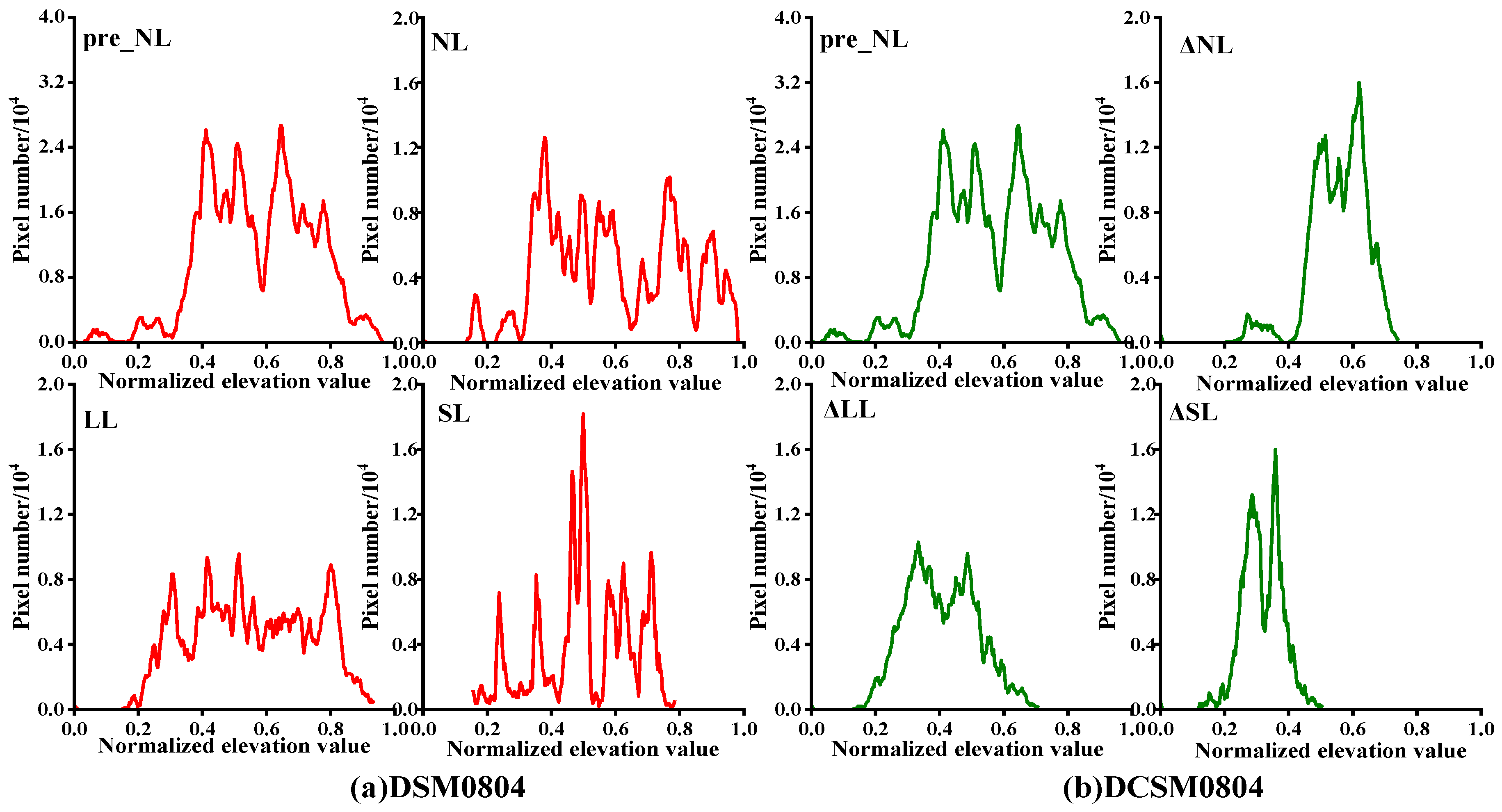

- Construction of digital elevation model and generation of digital surface model

- (3) Construction of vegetation indices

- (4) Statistics and analysis of texture features
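Item (4) refers to texture statistics such as green_homogeneity or vari_contrast (Appendix A). These names match the eight standard grey-level co-occurrence matrix (GLCM) measures of Haralick et al. [38]. The sketch below computes them for a single window of one band in pure Python. The number of grey levels, the pixel offset (one pixel to the right), and the sample window are assumptions; the study's actual window size and software settings are not given in this section.

```python
import math
from typing import Dict, List


def quantize(band: List[List[int]], levels: int = 8, max_value: int = 255) -> List[List[int]]:
    """Map digital numbers 0..max_value onto grey levels 0..levels-1."""
    return [[min(v * levels // (max_value + 1), levels - 1) for v in row] for row in band]


def glcm(q: List[List[int]], levels: int, dx: int = 1, dy: int = 0) -> List[List[float]]:
    """Symmetric, normalised grey-level co-occurrence matrix for offset (dx, dy)."""
    counts = [[0] * levels for _ in range(levels)]
    rows, cols = len(q), len(q[0])
    for y in range(rows):
        for x in range(cols):
            y2, x2 = y + dy, x + dx
            if 0 <= y2 < rows and 0 <= x2 < cols:
                a, b = q[y][x], q[y2][x2]
                counts[a][b] += 1
                counts[b][a] += 1  # count each pair in both directions
    total = sum(sum(row) for row in counts)
    if total == 0:
        raise ValueError("window has no pixel pairs for this offset")
    return [[c / total for c in row] for row in counts]


def glcm_features(p: List[List[float]]) -> Dict[str, float]:
    """The eight co-occurrence statistics whose names appear in Appendix A."""
    cells = [(i, j, v) for i, row in enumerate(p) for j, v in enumerate(row)]
    mu = sum(i * v for i, _, v in cells)  # equals the column mean for a symmetric GLCM
    var = sum((i - mu) ** 2 * v for i, _, v in cells)
    cov = sum((i - mu) * (j - mu) * v for i, j, v in cells)
    return {
        "mean": mu,
        "variance": var,
        "homogeneity": sum(v / (1 + (i - j) ** 2) for i, j, v in cells),
        "contrast": sum((i - j) ** 2 * v for i, j, v in cells),
        "dissimilarity": sum(abs(i - j) * v for i, j, v in cells),
        "entropy": -sum(v * math.log(v) for _, _, v in cells if v > 0),
        "second_moment": sum(v * v for _, _, v in cells),
        "correlation": cov / var if var > 0 else 0.0,
    }


window = [[0, 200], [200, 0]]  # tiny hypothetical window of one band
features = glcm_features(glcm(quantize(window, levels=2), levels=2))
print(features["contrast"], features["correlation"])  # 1.0 -1.0
```

In a full workflow the same computation would slide over every pixel of each band or index layer, and the per-plot averages of the resulting texture images would give rows like those in Appendix A.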

2.6.2. Extraction of Lodging from Maize Fields with Different Degrees of Lodging

- (1) Classification of Maize Lodging by Pixel-Level Supervised Classification

- (2) Classification of Maize Lodging by Object-Oriented Classification
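The practical difference between these two approaches is the unit that receives a label. The sketch below assumes a segmentation is already available as an integer grid of object IDs (the study's segmentation parameters are not given here). `object_means` turns a per-pixel layer into per-object attributes of the kind an object-oriented classifier consumes; in the study those attributes are classified with a random forest, which is not reproduced here. As a simple stand-in, `object_majority` gives each object its most frequent pixel label, which shows how labeling whole objects removes isolated misclassified pixels. All names and data are hypothetical.

```python
from collections import Counter, defaultdict
from statistics import mean
from typing import Dict, List


def object_means(feature: List[List[float]], segments: List[List[int]]) -> Dict[int, float]:
    """Average a per-pixel feature layer within each segment (an object attribute)."""
    values: Dict[int, List[float]] = defaultdict(list)
    for frow, srow in zip(feature, segments):
        for v, seg in zip(frow, srow):
            values[seg].append(v)
    return {seg: mean(vs) for seg, vs in values.items()}


def object_majority(labels: List[List[str]], segments: List[List[int]]) -> Dict[int, str]:
    """Most frequent pixel label inside each segment."""
    votes: Dict[int, Counter] = defaultdict(Counter)
    for lrow, srow in zip(labels, segments):
        for lab, seg in zip(lrow, srow):
            votes[seg][lab] += 1
    return {seg: c.most_common(1)[0][0] for seg, c in votes.items()}


def paint(segments: List[List[int]], object_labels: Dict[int, str]) -> List[List[str]]:
    """Write one label per object back onto the pixel grid."""
    return [[object_labels[seg] for seg in row] for row in segments]


segments = [[1, 1, 2], [1, 1, 2], [3, 3, 2]]  # hypothetical segmentation
pixel_labels = [["LL", "SL", "NL"], ["LL", "LL", "NL"], ["SL", "SL", "NL"]]
print(paint(segments, object_majority(pixel_labels, segments)))
# [['LL', 'LL', 'NL'], ['LL', 'LL', 'NL'], ['SL', 'SL', 'NL']]
```

The single "SL" pixel inside object 1 disappears because the object, not the pixel, carries the label.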

3. Results

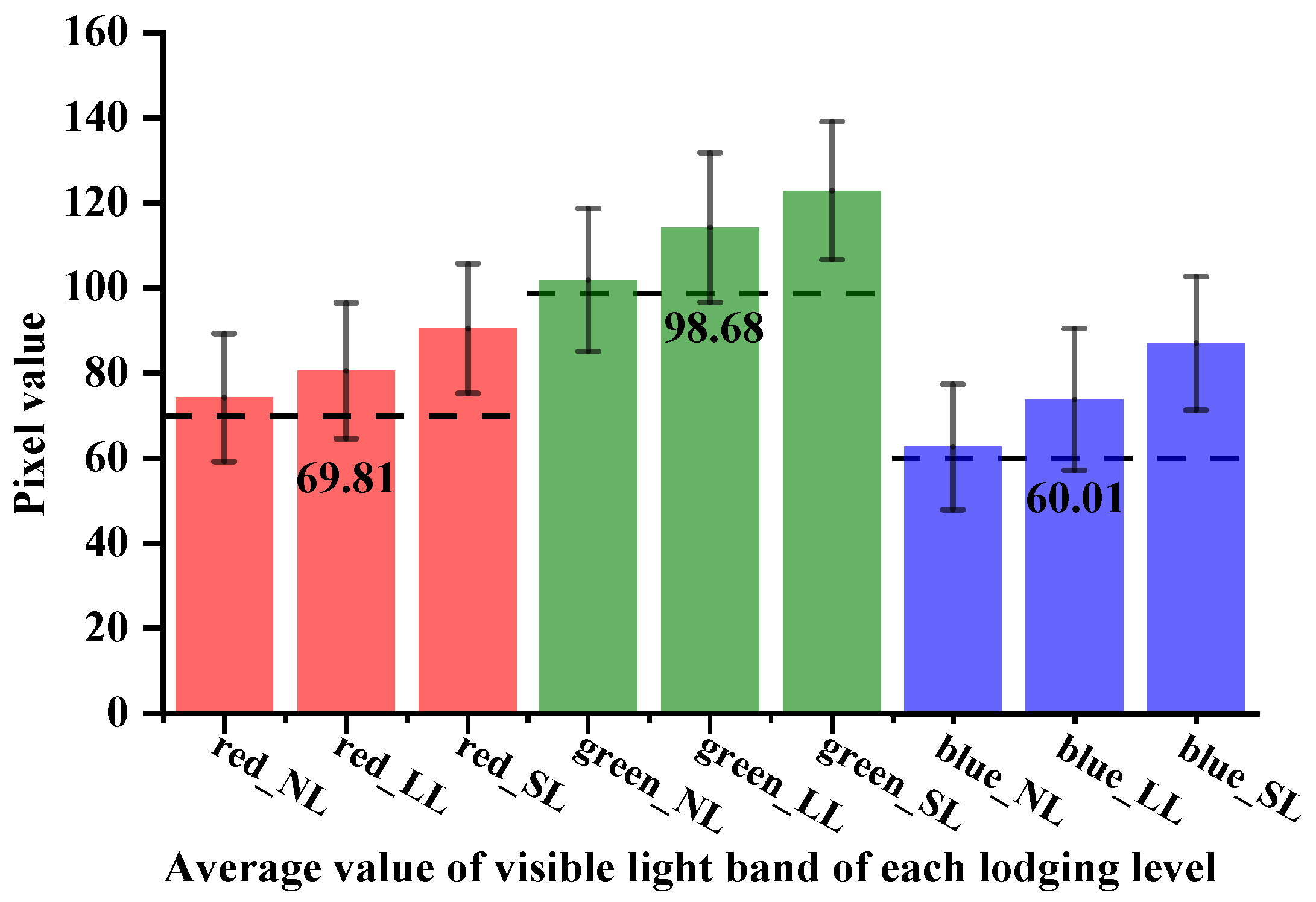

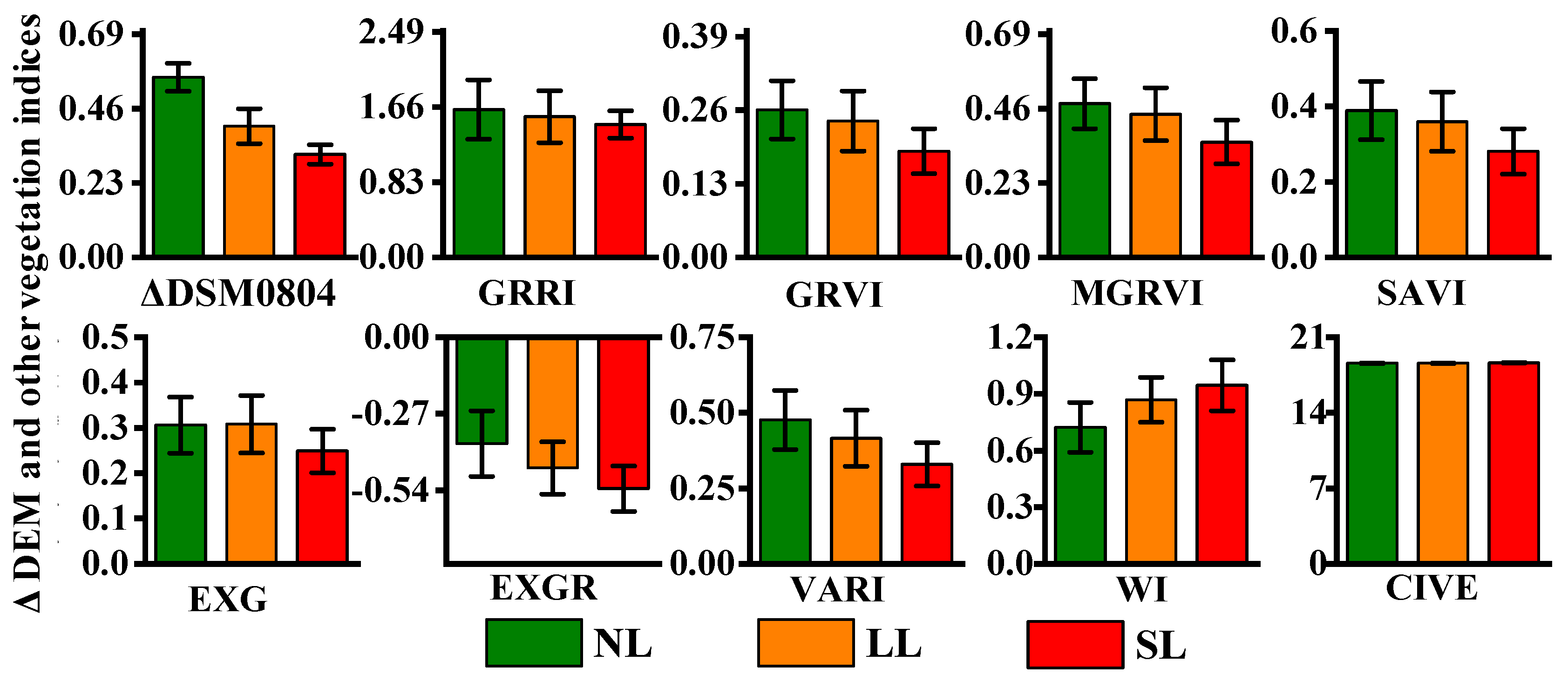

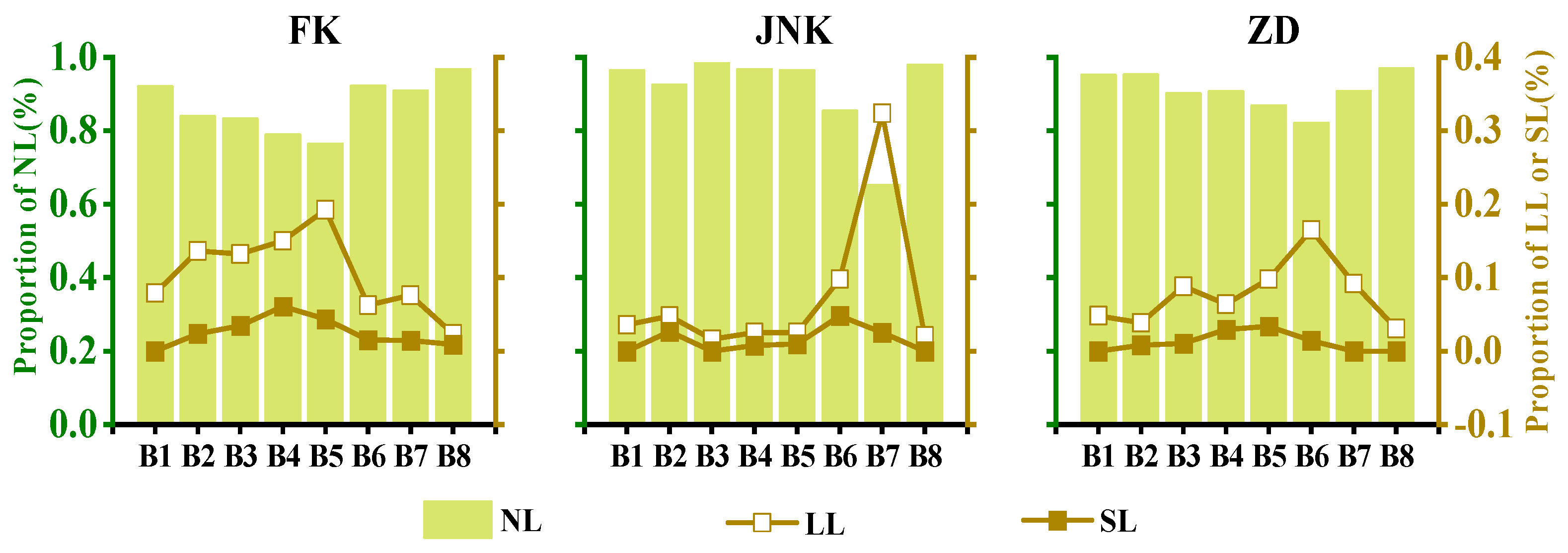

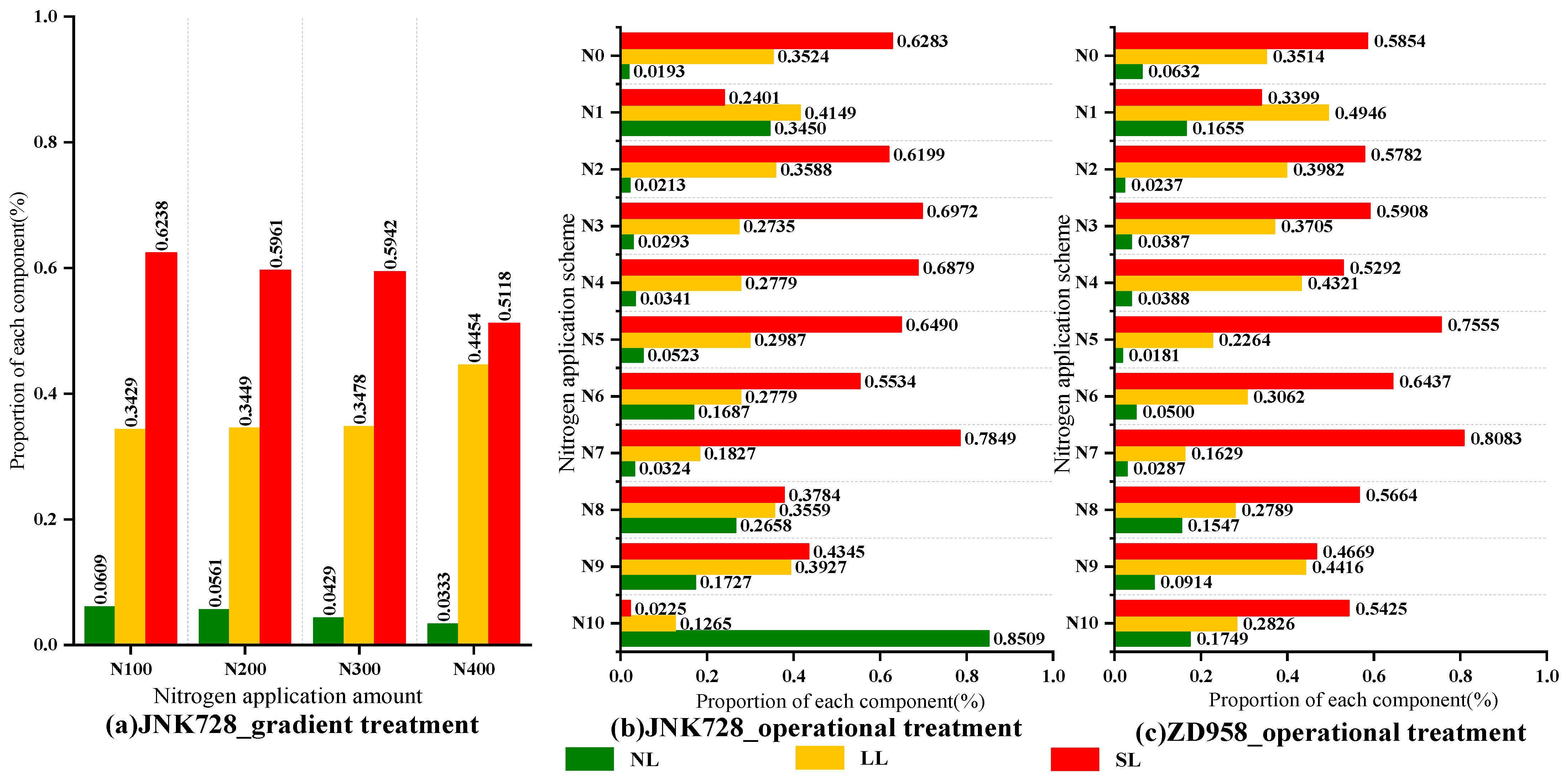

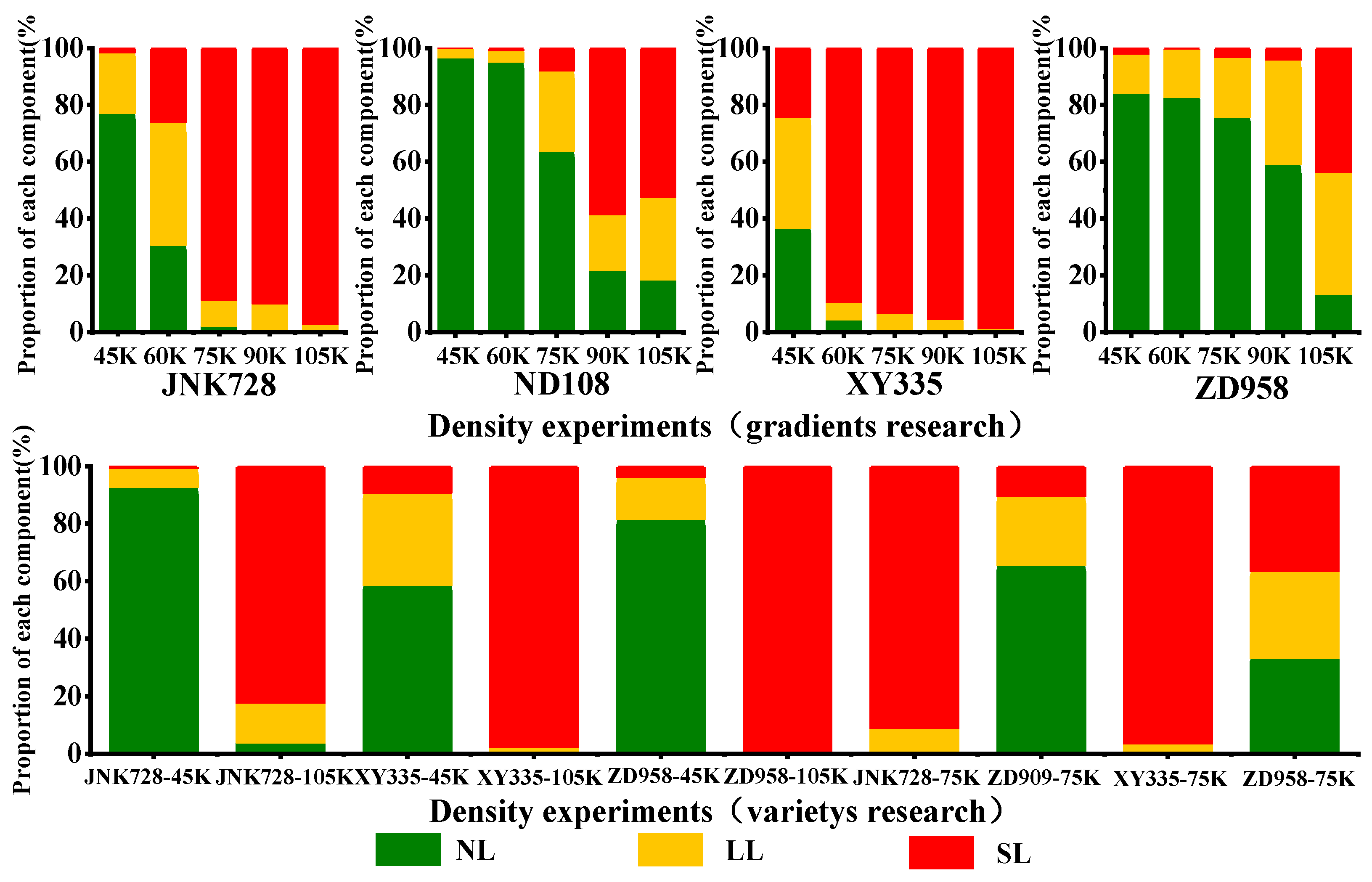

3.1. Extraction of Multi-Feature Factors from RGB Images

3.2. Classification of Each Degree of Lodging by the Various Classification Methods

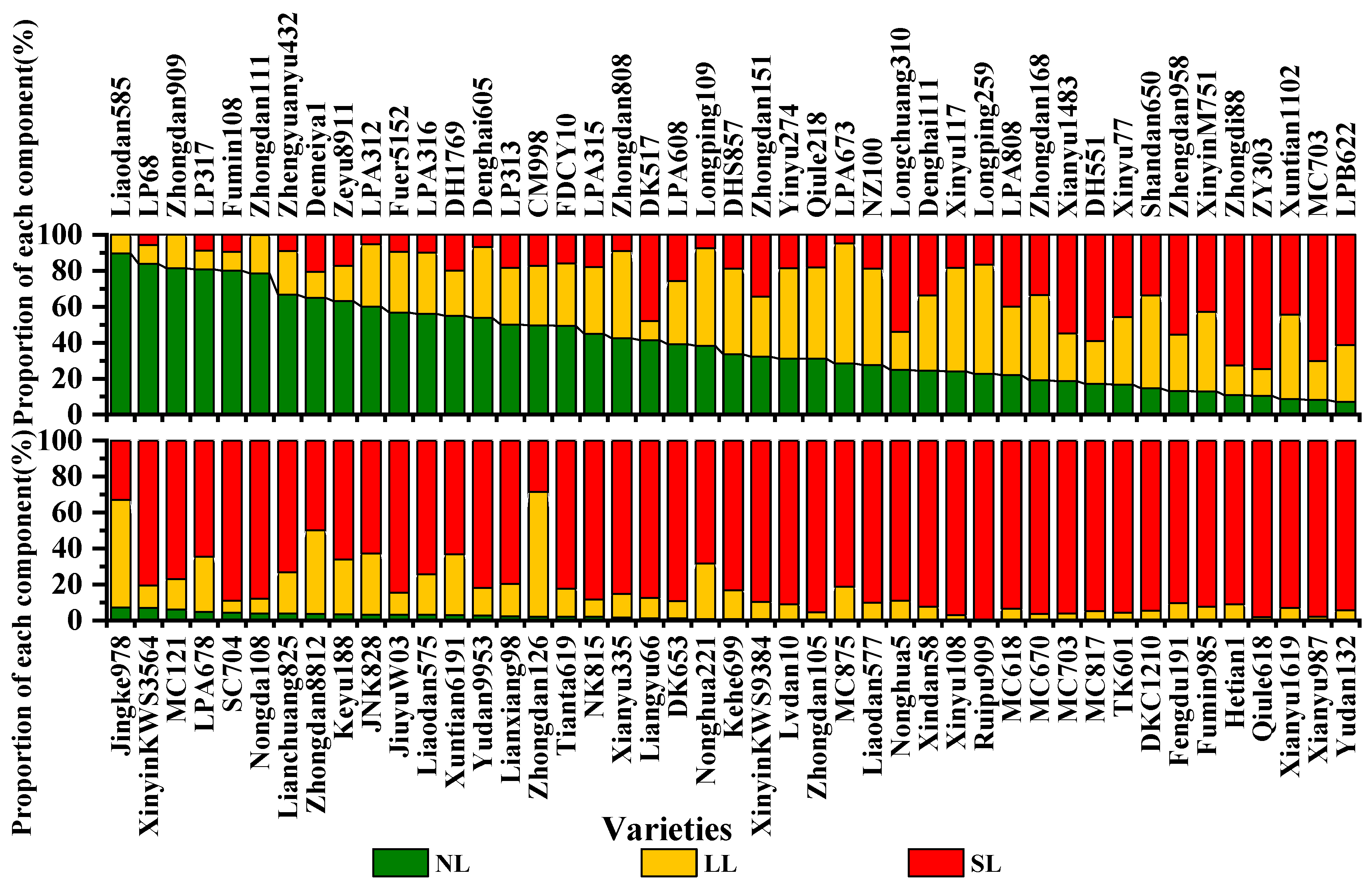

3.3. Mechanism behind Degree of the Maize Lodging

4. Discussion

5. Conclusions

- (1) Maize lodging alters, to varying degrees, the original reflectance spectrum, vegetation indices, texture characteristics, canopy coverage, digital surface model, and other characteristic factors. Statistical analysis of these factors allowed the optimal vegetation index to be selected from GRVI, MGRVI, SAVI, and VARI, and the best texture feature from green_mean, green_homogeneity, mgrvi_variance, vari_variance, and vari_contrast.

- (2) When pixel-level supervised classification is used to classify the degree of maize lodging, the samples are relatively independent of each other, but lodged maize is poorly discriminated at the pixel scale and the sample areas contain many non-green pixels. This leads to pixel-level misclassification and a pronounced “salt-and-pepper” effect, and removing small patches after classification does not significantly improve the results. Supervised classification also gives poor accuracy, which suggests that, for high-spatial-resolution images in which categories differ markedly in structure but are similar in color, pixel-level supervised methods are suitable only for general classification schemes.

- (3) In object-oriented classification, the input layers are first segmented, a step that makes full use of the spatial information in the image. The segmented objects are then classified by a random forest classifier on the basis of their attributes. Because each object is labeled as a whole, this avoids the pixel-by-pixel misclassification of homogeneous ground objects and reduces the salt-and-pepper effect. The result is clearer boundaries between the different degrees of lodging and higher classification accuracy.

- (4) With the original image, the digital surface model, and texture features as inputs, object-oriented classification combined with a random forest classifier achieved an overall accuracy of 86.96% and a kappa coefficient of 0.7931, the highest values among the classification methods compared. These results show that extracting the degree of maize lodging from UAV-RGB images combined with derived feature factors is feasible and yields higher classification accuracy.
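Conclusion (2) mentions removing small patches after pixel-level classification. A common way to do this, assumed here rather than taken from the study, is a moving-window majority (mode) filter in which each pixel takes the most frequent label in its neighbourhood. The function name, the 3 × 3 window (`radius=1`), and the tie rule (keep the original label when it is among the most frequent) are illustrative choices.

```python
from collections import Counter
from typing import List


def majority_filter(labels: List[List[str]], radius: int = 1) -> List[List[str]]:
    """Replace each pixel by the most frequent label in its (2r+1) x (2r+1) window.

    Windows are clipped at the image edges. If the pixel's own label ties for
    the highest count, it is kept unchanged.
    """
    rows, cols = len(labels), len(labels[0])
    out = [row[:] for row in labels]
    for y in range(rows):
        for x in range(cols):
            window = Counter(
                labels[yy][xx]
                for yy in range(max(0, y - radius), min(rows, y + radius + 1))
                for xx in range(max(0, x - radius), min(cols, x + radius + 1))
            )
            ranked = window.most_common()
            best_count = ranked[0][1]
            winners = {lab for lab, count in ranked if count == best_count}
            if labels[y][x] not in winners:
                out[y][x] = ranked[0][0]
    return out


noisy = [["LL", "LL", "LL"], ["LL", "SL", "LL"], ["LL", "LL", "LL"]]
print(majority_filter(noisy)[1][1])  # LL
```

Such a filter only smooths isolated pixels after the fact; it cannot recover boundaries that the pixel classifier got wrong, which is consistent with the limited improvement reported above.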

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

Appendix A

Non-lodging (NL), light lodging (LL), and severe lodging (SL).

| Characteristic Index | NL AVG | NL SD | NL CV | LL AVG | LL SD | LL CV | LL CDNL | SL AVG | SL SD | SL CV | SL CDNL |
|---|---|---|---|---|---|---|---|---|---|---|---|

| red_mean | 15.13 | 6.49 | 0.43 | 19.60 | 5.74 | 0.29 | 0.30 | 22.68 | 4.81 | 0.21 | 0.50 |

| red_variance | 77.16 | 52.51 | 0.68 | 69.36 | 42.16 | 0.61 | 0.10 | 71.36 | 45.08 | 0.63 | 0.08 |

| red_homogeneity | 0.31 | 0.20 | 0.63 | 0.23 | 0.10 | 0.42 | 0.27 | 0.22 | 0.09 | 0.41 | 0.31 |

| red_contrast | 80.42 | 62.14 | 0.77 | 63.61 | 47.39 | 0.75 | 0.21 | 74.50 | 60.38 | 0.81 | 0.07 |

| red_dissimilarity | 5.79 | 2.36 | 0.41 | 5.56 | 1.92 | 0.35 | 0.04 | 5.89 | 2.20 | 0.37 | 0.02 |

| red_entropy | 3.27 | 0.86 | 0.26 | 3.67 | 0.32 | 0.09 | 0.12 | 3.72 | 0.27 | 0.07 | 0.14 |

| red_secondmoment | 0.11 | 0.19 | 1.79 | 0.04 | 0.05 | 1.52 | 0.67 | 0.03 | 0.05 | 1.50 | 0.71 |

| red_correlation | 0.49 | 0.23 | 0.47 | 0.54 | 0.21 | 0.39 | 0.10 | 0.48 | 0.26 | 0.53 | 0.02 |

| green_mean | 19.80 | 8.49 | 0.43 | 26.54 | 7.00 | 0.26 | 0.34 | 29.35 | 5.66 | 0.19 | 0.48 |

| green_variance | 101.70 | 66.72 | 0.66 | 87.66 | 58.55 | 0.67 | 0.14 | 83.93 | 60.37 | 0.72 | 0.17 |

| green_homogeneity | 0.31 | 0.20 | 0.64 | 0.23 | 0.10 | 0.41 | 0.25 | 0.22 | 0.09 | 0.41 | 0.28 |

| green_contrast | 98.45 | 72.09 | 0.73 | 77.86 | 61.45 | 0.79 | 0.21 | 86.84 | 76.82 | 0.88 | 0.12 |

| green_dissimilarity | 6.35 | 2.53 | 0.40 | 5.96 | 2.21 | 0.37 | 0.06 | 6.10 | 2.47 | 0.41 | 0.04 |

| green_entropy | 3.28 | 0.86 | 0.26 | 3.67 | 0.32 | 0.09 | 0.12 | 3.71 | 0.27 | 0.07 | 0.13 |

| green_secondmoment | 0.11 | 0.19 | 1.80 | 0.04 | 0.05 | 1.51 | 0.66 | 0.03 | 0.05 | 1.49 | 0.70 |

| green_correlation | 0.53 | 0.23 | 0.43 | 0.56 | 0.21 | 0.38 | 0.06 | 0.48 | 0.26 | 0.54 | 0.08 |

| blue_mean | 14.91 | 6.89 | 0.46 | 21.05 | 6.89 | 0.33 | 0.41 | 25.57 | 5.96 | 0.23 | 0.71 |

| blue_variance | 85.72 | 58.03 | 0.68 | 93.50 | 56.15 | 0.60 | 0.09 | 96.54 | 54.38 | 0.56 | 0.13 |

| blue_homogeneity | 0.30 | 0.20 | 0.68 | 0.20 | 0.09 | 0.46 | 0.31 | 0.19 | 0.09 | 0.46 | 0.37 |

| blue_contrast | 87.70 | 65.65 | 0.75 | 84.10 | 62.55 | 0.74 | 0.04 | 99.03 | 77.20 | 0.78 | 0.13 |

| blue_dissimilarity | 6.21 | 2.56 | 0.41 | 6.45 | 2.21 | 0.34 | 0.04 | 6.90 | 2.46 | 0.36 | 0.11 |

| blue_entropy | 3.29 | 0.86 | 0.26 | 3.70 | 0.32 | 0.09 | 0.12 | 3.75 | 0.26 | 0.07 | 0.14 |

| blue_secondmoment | 0.10 | 0.19 | 1.81 | 0.03 | 0.05 | 1.56 | 0.67 | 0.03 | 0.05 | 1.56 | 0.71 |

| blue_correlation | 0.49 | 0.23 | 0.47 | 0.55 | 0.22 | 0.39 | 0.11 | 0.49 | 0.25 | 0.51 | 0.00 |

| vari_mean | 5.43 | 2.00 | 0.37 | 5.61 | 1.55 | 0.28 | 0.03 | 4.44 | 1.02 | 0.23 | 0.18 |

| vari_variance | 12.01 | 7.76 | 0.65 | 8.20 | 5.46 | 0.67 | 0.32 | 4.98 | 3.08 | 0.62 | 0.59 |

| vari_homogeneity | 0.40 | 0.13 | 0.32 | 0.40 | 0.09 | 0.23 | 0.02 | 0.46 | 0.10 | 0.21 | 0.14 |

| vari_contrast | 17.67 | 11.93 | 0.68 | 12.16 | 9.02 | 0.74 | 0.31 | 7.33 | 5.14 | 0.70 | 0.59 |

| vari_dissimilarity | 2.81 | 0.96 | 0.34 | 2.39 | 0.82 | 0.34 | 0.15 | 1.84 | 0.63 | 0.34 | 0.35 |

| vari_entropy | 3.15 | 0.60 | 0.19 | 3.34 | 0.26 | 0.08 | 0.06 | 3.13 | 0.31 | 0.10 | 0.00 |

| vari_secondmoment | 0.09 | 0.13 | 1.50 | 0.05 | 0.03 | 0.61 | 0.48 | 0.06 | 0.03 | 0.55 | 0.33 |

| vari_correlation | 0.27 | 0.21 | 0.79 | 0.28 | 0.20 | 0.72 | 0.05 | 0.28 | 0.22 | 0.76 | 0.06 |

| mgrvi_mean | 23.18 | 8.14 | 0.35 | 25.71 | 5.72 | 0.22 | 0.11 | 21.23 | 3.99 | 0.19 | 0.08 |

| mgrvi_variance | 169.15 | 82.47 | 0.49 | 125.70 | 64.31 | 0.51 | 0.26 | 80.55 | 39.26 | 0.49 | 0.52 |

| mgrvi_homogeneity | 0.23 | 0.17 | 0.74 | 0.15 | 0.07 | 0.43 | 0.33 | 0.17 | 0.07 | 0.40 | 0.26 |

| mgrvi_contrast | 239.50 | 117.66 | 0.49 | 186.71 | 107.09 | 0.57 | 0.22 | 118.68 | 67.19 | 0.57 | 0.50 |

| mgrvi_dissimilarity | 10.54 | 2.97 | 0.28 | 9.59 | 2.80 | 0.29 | 0.09 | 7.70 | 2.21 | 0.29 | 0.27 |

| mgrvi_entropy | 3.47 | 0.67 | 0.19 | 3.79 | 0.18 | 0.05 | 0.09 | 3.79 | 0.18 | 0.05 | 0.09 |

| mgrvi_secondmoment | 0.07 | 0.13 | 1.78 | 0.03 | 0.03 | 0.98 | 0.65 | 0.03 | 0.03 | 0.98 | 0.65 |

| mgrvi_correlation | 0.29 | 0.22 | 0.75 | 0.27 | 0.21 | 0.75 | 0.04 | 0.28 | 0.22 | 0.80 | 0.04 |

| savi_mean | 12.59 | 4.77 | 0.38 | 13.86 | 3.54 | 0.26 | 0.10 | 11.02 | 2.29 | 0.21 | 0.12 |

| savi_variance | 57.66 | 31.07 | 0.54 | 46.37 | 25.24 | 0.54 | 0.20 | 26.72 | 13.86 | 0.52 | 0.54 |

| savi_homogeneity | 0.28 | 0.16 | 0.57 | 0.22 | 0.07 | 0.34 | 0.20 | 0.26 | 0.08 | 0.30 | 0.06 |

| savi_contrast | 82.43 | 44.09 | 0.53 | 69.12 | 41.02 | 0.59 | 0.16 | 39.72 | 23.56 | 0.59 | 0.52 |

| savi_dissimilarity | 6.25 | 1.91 | 0.30 | 5.83 | 1.79 | 0.31 | 0.07 | 4.39 | 1.33 | 0.30 | 0.30 |

| savi_entropy | 3.41 | 0.66 | 0.19 | 3.71 | 0.19 | 0.05 | 0.09 | 3.66 | 0.20 | 0.05 | 0.07 |

| savi_secondmoment | 0.08 | 0.13 | 1.73 | 0.03 | 0.03 | 0.89 | 0.62 | 0.03 | 0.03 | 0.83 | 0.60 |

| savi_correlation | 0.28 | 0.21 | 0.76 | 0.27 | 0.20 | 0.75 | 0.04 | 0.27 | 0.22 | 0.80 | 0.04 |

| grvi_mean | 12.55 | 4.75 | 0.38 | 13.82 | 3.54 | 0.26 | 0.10 | 10.98 | 2.28 | 0.21 | 0.13 |

| grvi_variance | 57.53 | 31.01 | 0.54 | 46.33 | 25.24 | 0.54 | 0.19 | 26.67 | 13.86 | 0.52 | 0.54 |

| grvi_homogeneity | 0.28 | 0.16 | 0.57 | 0.22 | 0.07 | 0.34 | 0.20 | 0.26 | 0.08 | 0.30 | 0.05 |

| grvi_contrast | 82.33 | 44.11 | 0.54 | 69.11 | 41.07 | 0.59 | 0.16 | 39.68 | 23.58 | 0.59 | 0.52 |

| grvi_dissimilarity | 6.25 | 1.91 | 0.31 | 5.82 | 1.80 | 0.31 | 0.07 | 4.39 | 1.33 | 0.30 | 0.30 |

| grvi_entropy | 3.41 | 0.66 | 0.19 | 3.71 | 0.19 | 0.05 | 0.09 | 3.65 | 0.20 | 0.05 | 0.07 |

| grvi_secondmoment | 0.08 | 0.13 | 1.73 | 0.03 | 0.03 | 0.89 | 0.62 | 0.03 | 0.03 | 0.83 | 0.60 |

| grvi_correlation | 0.28 | 0.21 | 0.76 | 0.27 | 0.20 | 0.75 | 0.04 | 0.27 | 0.22 | 0.80 | 0.04 |
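The summary statistics in this table can be recomputed as follows. Assuming AVG and SD are the mean and standard deviation of each factor over the sampled plots, CV is SD/AVG (for example 6.49/15.13 ≈ 0.43 for red_mean under NL). The CDNL column is not defined in this section, but its values are reproduced to two decimals by the relative difference |AVG − AVG_NL| / AVG_NL. That formula is inferred from the tabulated numbers, and the function names below are hypothetical.

```python
def coefficient_of_variation(avg: float, sd: float) -> float:
    """CV = SD / AVG."""
    if avg == 0:
        raise ValueError("CV is undefined for a zero mean")
    return sd / avg


def relative_difference_from_nl(avg: float, avg_nl: float) -> float:
    """|AVG - AVG_NL| / AVG_NL, a form inferred to match the CDNL column."""
    if avg_nl == 0:
        raise ValueError("undefined for a zero NL mean")
    return abs(avg - avg_nl) / avg_nl


# red_mean row: NL, LL and SL averages, and the NL standard deviation.
nl, ll, sl, sd_nl = 15.13, 19.60, 22.68, 6.49
print(round(coefficient_of_variation(nl, sd_nl), 2))  # 0.43
print(round(relative_difference_from_nl(ll, nl), 2))  # 0.3
print(round(relative_difference_from_nl(sl, nl), 2))  # 0.5
```

By this measure vari_variance and vari_contrast both change by about 59% between NL and SL, which is consistent with their selection as candidate texture features in the Conclusions.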

References

- Li, S.; Zhao, J.; Dong, S.; Zhao, M.; Li, C.; Cui, Y.; Liu, Y.; Gao, J.; Xue, J.; Wang, L.; et al. Research progress and Prospect of Maize Cultivation in China. Sci. Agric. Sin. 2017, 50, 1941–1959.

- Li, S.; Ma, W.; Peng, J.; Chen, Z. Study on Yield Loss of Summer Maize Due to Lodging at the Big Flare Stage and Grain Filling Stage. Sci. Agric. Sin. 2015, 48, 3952–3964.

- Berry, P.M.; Sterling, M.; Spink, J.H.; Baker, C.J.; Sylvester-Bradley, R.; Mooney, S.J.; Tams, A.R.; Ennos, A.R. Understanding and Reducing Lodging in Cereals. Adv. Agron. 2004, 84, 217–271.

- Chauhan, S.; Darvishzadeh, R.; Boschetti, M.; Pepe, M.; Nelson, A. Remote sensing-based crop lodging assessment: Current status and perspectives. ISPRS J. Photogramm. 2019, 151, 124–140.

- Yang, M.; Tseng, H.; Hsu, Y.; Tsai, H.P. Semantic Segmentation Using Deep Learning with Vegetation Indices for Rice Lodging Identification in Multi-date UAV Visible Images. Remote Sens. 2020, 12, 633.

- Salamí, E.; Barrado, C.; Pastor, E. UAV Flight Experiments Applied to the Remote Sensing of Vegetated Areas. Remote Sens. 2014, 6, 1051.

- Pádua, L.; Vanko, J.; Hruška, J.; Adão, T.; Sousa, J.J.; Peres, E.; Morais, R. UAS, sensors, and data processing in agroforestry: A review towards practical applications. Int. J. Remote Sens. 2017, 38, 2349–2391.

- Li, Z.; Chen, Z.; Wang, L.; Liu, J.; Zhou, Q. Area extraction of maize lodging based on remote sensing by small unmanned aerial vehicle. J. Agric. Eng. 2014, 30, 207–213.

- Wang, M.; Sui, X.; Liang, S.; Wang, Y.; Yao, H.; Hou, X. Simulation test and remote sensing monitoring of summer corn lodging. Sci. Surv. Mapp. 2017, 42, 137–141.

- Wang, L.; Gu, X.; Hu, S.; Yang, G.; Wang, L.; Fan, Y.; Wang, Y. Remote Sensing Monitoring of Maize Lodging Disaster with Multi-Temporal HJ-1B CCD Image. Sci. Agric. Sin. 2016, 49, 4120–4129.

- Liu, T.; Li, R.; Zhong, X.; Jiang, M.; Jin, X.; Zhou, P.; Liu, S.; Sun, C.; Guo, W. Estimates of rice lodging using indices derived from UAV visible and thermal infrared images. Agric. For. Meteorol. 2018, 252, 144–154.

- Han, L.; Yang, G.; Feng, H.; Zhou, C.; Yang, H.; Xu, B.; Li, Z.; Yang, X. Quantitative Identification of Maize Lodging-Causing Feature Factors Using Unmanned Aerial Vehicle Images and a Nomogram Computation. Remote Sens. 2018, 10, 1528.

- Chu, T.; Starek, M.J.; Brewer, M.J.; Murray, S.C.; Pruter, L.S. Assessing Lodging Severity over an Experimental Maize (Zea mays L.) Field Using UAS Images. Remote Sens. 2017, 9, 923.

- Sun, Q.; Sun, L.; Shu, M.; Gu, X.; Yang, G.; Zhou, L. Monitoring Maize Lodging Grades via Unmanned Aerial Vehicle Multispectral Image. Plant Phenomics 2019, 2019, 5704154.

- Guo, P.; Wu, F.; Dai, J.; Wang, H.; Xu, L.; Zhang, G. Comparison of farmland crop classification methods based on visible light images of unmanned aerial vehicles. J. Agric. Eng. 2017, 33, 112–119.

- Wang, X.; Wang, M.; Wang, S.; Wu, Y. Extraction of vegetation information from visible unmanned aerial vehicle images. J. Agric. Eng. 2015, 31, 152–157.

- Li, N.; Zhou, D.; Zhao, K. Marsh classification mapping at a community scale using high-resolution imagery. Acta Ecol. Sin. 2011, 31, 6717–6726.

- Ma, L.; Li, M.; Ma, X.; Cheng, L.; Du, P.; Liu, Y. A review of supervised object-based land-cover image classification. ISPRS J. Photogramm. 2017, 130, 277–293.

- Jing, R.; Deng, L.; Zhao, W.; Gong, Z. Object-oriented aquatic vegetation extracting approach based on visible vegetation indices. J. Appl. Ecol. 2016, 27, 1427–1436.

- Tian, B.; Yang, G. Crop lodging and its evaluation method. Chin. Agric. Sci. Bull. 2005, 21, 111.

- Verhoeven, G. Taking computer vision aloft—Archaeological three-dimensional reconstructions from aerial photographs with photoscan. Archaeol. Prospect. 2011, 18, 67–73.

- Li, Y. Research on Structure from Motion Based on UAV Image and Video. Master’s Thesis, Harbin University of Science and Technology, Harbin, China, 2017. Available online: http://cdmd.cnki.com.cn/Article/CDMD-10214-1017074785.htm (accessed on 1 March 2021).

- Han, C. SfM Algorithm of 3D Reconstruction from UAV Aerial Images. Master’s Thesis, Inner Mongolia University of Technology, Hohhot, China, 2019. Available online: http://cdmd.cnki.com.cn/Article/CDMD-10128-1019619445.htm (accessed on 1 March 2021).

- Hamuda, E.; Mc Ginley, B.; Glavin, M.; Jones, E. Automatic crop detection under field conditions using the HSV colour space and morphological operations. Comput. Electron. Agric. 2017, 133, 97–107.

- Wang, M.; Yang, J.; Sun, Y.; Wang, G.; Xie, Y. UAV remote sensing rapid extraction technology for vegetation coverage in abandoned mines. China Soil Water Conserv. Sci. 2020, 18, 130–139.

- Liu, C.; Hu, L.; Yang, K.; Wu, X. Aerial rape flower image segmentation based on color space. J. Wuhan Univ. Light Ind. 2020, 39, 13–17, 21.

- Yoder, B.J.; Waring, R.H. The normalized difference vegetation index of small Douglas-fir canopies with varying chlorophyll concentrations. Remote Sens. Environ. 1994, 49, 81–91.

- Kazmi, W.; Garcia-Ruiz, F.J.; Nielsen, J.; Rasmussen, J.; Andersen, H.J. Detecting creeping thistle in sugar beet fields using vegetation indices. Comput. Electron. Agric. 2015, 112, 10–19.

- Kawashima, S.; Nakatani, M. An Algorithm for Estimating Chlorophyll Content in Leaves Using a Video Camera. Ann. Bot. 1998, 81, 49–54.

- Gamon, J.; Surfus, J. Assessing leaf pigment content and activity with a reflectometer. New Phytol. 1999, 143, 105–117.

- Tucker, C.J. Red and photographic infrared linear combinations for monitoring vegetation. Remote Sens. Environ. 1979, 8, 127–150.

- Li, Y.; Chen, D.; Walker, C.N.; Angus, J.F. Estimating the nitrogen status of crops using a digital camera. Field Crop Res. 2010, 118, 221–227.

- Bendig, J.; Yu, K.; Aasen, H.; Bolten, A.; Bennertz, S.; Broscheit, J.; Gnyp, M.L.; Bareth, G. Combining UAV-based plant height from crop surface models, visible, and near infrared vegetation indices for biomass monitoring in barley. Int. J. Appl. Earth Obs. Geoinf. 2015, 39, 79–87.

- Gitelson, A.A.; Kaufman, Y.J.; Stark, R.; Rundquist, D. Novel algorithms for remote estimation of vegetation fraction. Remote Sens. Environ. 2002, 80, 76–87.

- Woebbecke, D.M.; Meyer, G.E.; Von Bargen, K.; Mortensen, D.A. Color Indices for Weed Identification Under Various Soil, Residue, and Lighting Conditions. Trans. ASAE 1995, 38, 259–269.

- Kataoka, T.; Kaneko, T.; Okamoto, H.; Hata, S. Crop growth estimation system using machine vision. In Proceedings of the IEEE/ASME International Conference on Advanced Intelligent Mechatronics (AIM 2003), Kobe, Japan, 20–24 July 2003.

- Guijarro, M.; Pajares, G.; Riomoros, I.; Herrera, P.J.; Burgos-Artizzu, X.P.; Ribeiro, A. Automatic segmentation of relevant textures in agricultural images. Comput. Electron. Agric. 2011, 75, 75–83.

- Haralick, R.M.; Shanmugam, K.; Dinstein, I. Textural Features for Image Classification. IEEE Trans. Syst. Man Cybern. 1973.

- Li, X.; Tan, B.; Li, Z.; Zhang, Q. Comparative Study on Forest Type Classification Methods of CHRIS Hyperspectral Images. Remote Sens. Technol. Appl. 2010, 25, 227–234.

- Tu, B.; Zhang, X.; Zhang, G.; Wang, J.; Zhou, Y. Classification method of hyperspectral remote sensing image based on recursive filtering and KNN. Remote Sens. Land Resour. 2019, 31, 22–32.

- Zhang, X.; Li, F.; Zhen, Z.; Zhao, Y. -8 remote sensing image forest vegetation classification based on random forest model. J. N. For. Univ. 2016, 44, 53–57.

- Van der Linden, S.; Rabe, A.; Held, M.; Jakimow, B.; Leitão, P.J.; Okujeni, A.; Schwieder, M.; Suess, S.; Hostert, P. The EnMAP-Box—A Toolbox and Application Programming Interface for EnMAP Data Processing. Remote Sens. 2015, 7, 1249.

- Drăguţ, L.; Csillik, O.; Eisank, C.; Tiede, D. Automated parameterisation for multi-scale image segmentation on multiple layers. ISPRS J. Photogramm. 2014, 88, 119–127.

- Wan, L.; Li, Y.; Cen, H.; Zhu, J.; Yin, W.; Wu, W.; Zhu, H.; Sun, D.; Zhou, W.; He, Y. Combining UAV-Based Vegetation Indices and Image Classification to Estimate Flower Number in Oilseed Rape. Remote Sens. 2018, 10, 1484.

- Wilke, N.; Siegmann, B.; Klingbeil, L.; Burkart, A.; Kraska, T.; Muller, O.; van Doorn, A.; Heinemann, S.; Rascher, U. Quantifying Lodging Percentage and Lodging Severity Using a UAV-Based Canopy Height Model Combined with an Objective Threshold Approach. Remote Sens. 2019, 11, 515.

- Wen, W.; Gu, S.; Xiao, B.; Wang, C.; Guo, X. In situ evaluation of stalk lodging resistance for different maize (Zea mays L.) cultivars using a mobile wind machine. Plant Methods 2019, 15.

1A A-4 Nitrogen Operations Research

| Nitrogen fertilization (kg/hm²) | CK | N1 | N2 | N3 | N4 | N5 | N6 | N7 | N8 | N9 | N10 |

| Base fertilizer | 150 | 150 | 150 | 150 | 150 | 150 | 150 | ||||

| V5–V6 | 100 | 100 | 100 | 150 | 150 | 150 | |||||

| V11–V12 | 100 | 100 | 100 | 100 | 100 | ||||||

| R1 silking | 100 | 100 | 100 | 100 | 100 | ||||||

1B A-5 Nitrogen Gradients Research

| Nitrogen fertilization (kg/hm²) | CK | N100 | N200 | N300 | N400 |
|---|---|---|---|---|---|
| Base fertilizer | 0 | 60 | 120 | 180 | 240 |
| V11–V12 | 0 | 40 | 80 | 120 | 160 |

| Experimental Category | Sowing Date | Growth Stage |
|---|---|---|
| Sowing experiments | Sowing date 1 | R5 |
| | Sowing date 2 | R4 |
| | Sowing date 3 | R3 |
| | Sowing date 4 | R2 |
| | Sowing date 5 | R1 |
| | Sowing date 6 | VT |
| | Sowing date 7 | V14 |
| | Sowing date 8 | V10 |
| Variety experiments | | VT |
| Density experiments | | V14 |
| Nitrogen experiments (operations research) | | VT |
| Nitrogen experiments (gradients research) | | VT |

| Parameter | Version or Information |
|---|---|
| UAV type | DJI Matrice 210 RTK V2 |
| Flying area | 3.43 ha |
| Total number of photos | 770 |
| Route planning | DJI GS Pro (Version 2.0.13) |
| Height above ground | 30 m |
| Flight speed | 2 m/s |
| Flight time | 30 min each time |
| Camera orientation | Vertically downward (nadir) |
| Front overlap ratio | 85% |
| Side overlap ratio | 75% |
| Camera type | Zenmuse X4S (pan-tilt camera) |
| Ground resolution | 0.8 cm |
| Image resolution | 5472 × 3648 |
| Focal length | 24 mm |
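The 0.8 cm ground resolution in this table can be checked with the standard nadir ground-sample-distance relation GSD = H × w / (f × N), where H is the flight height, w the sensor width, f the focal length, and N the image width in pixels. This sketch assumes that the listed 24 mm is a 35 mm-equivalent focal length, so it pairs f = 24 mm with the 36 mm width of a full-frame format; under that assumption the result agrees with the table.

```python
def ground_sample_distance(height_m: float, focal_mm: float,
                           sensor_width_mm: float, image_width_px: int) -> float:
    """Ground sample distance in metres per pixel for a nadir pinhole camera."""
    return height_m * sensor_width_mm / (focal_mm * image_width_px)


# Assumption: 24 mm is a 35 mm-equivalent focal length, paired with the
# 36 mm width of a full-frame (35 mm format) frame.
gsd = ground_sample_distance(30, 24, 36, 5472)
print(f"{gsd * 100:.2f} cm")  # 0.82 cm
```

Because GSD scales linearly with H, the same camera flown at 15 m would give roughly 0.41 cm per pixel.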

| Abbreviation | Full Name | Formula | Reference |
|---|---|---|---|
| | Normalized red intensity | | Kawashima et al., (1998) [29] |
| | Normalized green intensity | | Kawashima et al., (1998) [29] |
| | Normalized blue intensity | | Kawashima et al., (1998) [29] |
| GRRI | Green-red ratio index | | Gamon et al., (1999) [30] |
| GRVI | Green ratio vegetation index | | Tucker (1979) [31] |
| SAVI | Soil adjusted vegetation index | | Li et al., (2010) [32] |
| MGRVI | Modified green red vegetation index | | Bendig et al., (2015) [33] |
| VARI | Visible atmospherically resistant index | | Gitelson et al., (2002) [34] |
| WI | Woebbecke index | | Woebbecke et al., (1995) [35] |
| CIVE | Color index of vegetation extraction | | Kataoka et al., (2003) [36] |
| ExG | Excess green index | | Woebbecke et al., (1995) [35] |
| ExGR | Excess green minus excess red | | Guijarro et al., (2011) [37] |
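The formula column of this table is empty in this version. For three of the listed indices the standard published forms are VARI = (G − R)/(G + R − B) [34], MGRVI = (G² − R²)/(G² + R²) [33], and ExG = 2g − r − b on the normalized intensities r, g, b [35]. The sketch below implements only these three, plus an ExG-threshold estimate of canopy cover as one common way to separate the soil background (item (1) of Section 2.6.1). Whether the study used exactly these variants, and the threshold of 0, are assumptions; the helper `_ratio`, which maps zero denominators to 0, is hypothetical.

```python
from typing import Dict, Iterable, Tuple

RGB = Tuple[float, float, float]


def _ratio(num: float, den: float) -> float:
    """Division returning 0.0 for a zero denominator (a convention assumed here)."""
    return num / den if den != 0 else 0.0


def chromatic(rgb: RGB) -> RGB:
    """Normalised intensities r = R/(R+G+B), g = G/(R+G+B), b = B/(R+G+B)."""
    red, green, blue = rgb
    total = red + green + blue
    return (_ratio(red, total), _ratio(green, total), _ratio(blue, total))


def rgb_indices(rgb: RGB) -> Dict[str, float]:
    """Published forms of three visible-band indices for one pixel."""
    red, green, blue = rgb
    r, g, b = chromatic(rgb)
    return {
        "VARI": _ratio(green - red, green + red - blue),                # Gitelson et al. (2002)
        "MGRVI": _ratio(green ** 2 - red ** 2, green ** 2 + red ** 2),  # Bendig et al. (2015)
        "ExG": 2 * g - r - b,                                           # Woebbecke et al. (1995)
    }


def canopy_fraction(pixels: Iterable[RGB], exg_threshold: float = 0.0) -> float:
    """Share of pixels classed as vegetation by an ExG threshold (hypothetical default)."""
    flags = [rgb_indices(p)["ExG"] > exg_threshold for p in pixels]
    if not flags:
        raise ValueError("no pixels given")
    return sum(flags) / len(flags)


pixels = [(60, 120, 40), (120, 100, 90), (50, 110, 45), (130, 110, 100)]  # hypothetical
print(rgb_indices(pixels[0])["MGRVI"])  # 0.6
print(canopy_fraction(pixels))          # 0.5
```

VARI becomes unstable when G + R − B approaches zero, so it may be safer to mask such pixels before computing plot statistics.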

| Classification Method | Feature Type | Characteristic Band Combination | Overall Accuracy | Kappa Coefficient |
|---|---|---|---|---|
| Threshold segmentation | | ∆DSM | 78.26% | 0.63 |
| Pixel-level supervised classification | | RGB (maximum likelihood classification) | 60.87% | 0.43 |
| | | RGB (RFC) | 78.26% | 0.63 |
| | | RGB (K nearest neighbor) | 56.52% | 0.33 |
| Object-oriented classification | Based on spectral data | RGB | 45.65% | 0.28 |
| | | RGB+∆DSM | 82.61% | 0.72 |
| | Based on canopy structure data | ∆DSM+FVC | 80.43% | 0.68 |
| | Based on vegetation index | RGB+∆DSM+GRVI | 82.61% | 0.72 |
| | | RGB+∆DSM+MGRVI | 80.43% | 0.69 |
| | | RGB+∆DSM+SAVI | 65.22% | 0.47 |
| | | RGB+∆DSM+VARI | 86.96% | 0.79 |
| | Based on texture features | RGB+∆DSM+green_homogeneity | 80.43% | 0.68 |
| | | RGB+∆DSM+green_mean | 86.96% | 0.79 |
| | | RGB+∆DSM+w_mgrvi_variance | 84.78% | 0.75 |
| | | RGB+∆DSM+vari_contrast | 80.43% | 0.68 |
| | | RGB+∆DSM+vari_variance | 86.96% | 0.78 |
| | Without original RGB image | ∆DSM+Best_VI | 86.96% | 0.78 |
| | | ∆DSM+Best_Texture | 84.78% | 0.74 |
| | | ∆DSM+Best_VI+Best_Texture | 84.78% | 0.75 |
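Overall accuracy and the kappa coefficient in this table are both derived from a confusion matrix. The sketch below computes them with the standard definitions, OA = (sum of the diagonal)/N and κ = (p_o − p_e)/(1 − p_e), where p_e is the chance agreement from the row and column totals. The matrix is hypothetical, chosen so that 40 of 46 samples are correct: every overall accuracy in the table is a multiple of 1/46 (for example 86.96% ≈ 40/46), which is consistent with, but does not confirm, a validation set of 46 samples.

```python
from typing import List, Tuple


def accuracy_and_kappa(cm: List[List[int]]) -> Tuple[float, float]:
    """Overall accuracy and Cohen's kappa from a square confusion matrix.

    Rows are reference classes, columns are predicted classes.
    """
    k = len(cm)
    n = sum(sum(row) for row in cm)
    if n == 0 or any(len(row) != k for row in cm):
        raise ValueError("need a non-empty square matrix")
    p_o = sum(cm[i][i] for i in range(k)) / n
    row_totals = [sum(row) for row in cm]
    col_totals = [sum(cm[i][j] for i in range(k)) for j in range(k)]
    p_e = sum(r * c for r, c in zip(row_totals, col_totals)) / (n * n)
    if p_e == 1:
        raise ValueError("kappa is undefined when chance agreement is 1")
    return p_o, (p_o - p_e) / (1 - p_e)


# Hypothetical NL / LL / SL validation counts (not the study's matrix).
cm = [[15, 2, 0], [1, 12, 2], [0, 1, 13]]
oa, kappa = accuracy_and_kappa(cm)
print(f"OA = {oa:.2%}, kappa = {kappa:.4f}")  # OA = 86.96%, kappa = 0.8041
```

The kappa of this made-up matrix (about 0.80) differs slightly from the tabulated 0.79 at the same overall accuracy, because kappa also depends on how the errors are spread across classes.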

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Wang, Z.; Nie, C.; Wang, H.; Ao, Y.; Jin, X.; Yu, X.; Bai, Y.; Liu, Y.; Shao, M.; Cheng, M.; et al. Detection and Analysis of Degree of Maize Lodging Using UAV-RGB Image Multi-Feature Factors and Various Classification Methods. ISPRS Int. J. Geo-Inf. 2021, 10, 309. https://doi.org/10.3390/ijgi10050309
