Field Motion Estimation with a Geosensor Network †

Abstract

1. Introduction

- A priori knowledge of motion properties: For the motion of environmental phenomena, a priori knowledge of the field motion properties can be assumed. For example, wind speed statistics or information on the advection of rain clouds may be available. This domain knowledge can be used to specify the required parameters of the proposed algorithm.

- Temporal continuity and spatial uniformity of motion: In arbitrary images, moving objects such as cars can change their direction, stop or accelerate rather quickly, e.g., within a couple of frames. Therefore, temporal continuity usually holds only for very short time periods [10]. For atmospheric or oceanographic fields, the motion is likely to be rather consistent over long sampling periods; therefore, integrating motion information over time gains importance. In addition, motion in images often exhibits sharp discontinuities, e.g., at the boundaries of moving objects, such as cars with opposing motion passing each other. For atmospheric or oceanographic fields, sharp motion discontinuities are unlikely: they might arise only at the boundaries of the field, e.g., at the boundaries of rain clouds. Even there, they do not exist in reality (i.e., the atmosphere surrounding the rain cloud also moves), but they do affect the motion estimation algorithm, which is based on field measurements. For example, while the atmosphere still moves where there is no rain, the motion can only be estimated where there is rain and where the rain field is not uniform (the problem of uniform field values is commonly known as the “blank wall” problem in the optical flow literature).

- Controlled node deployment, sampling rate and irregular data: In image-based optical flow, the pixels determine the sampling locations, and the observed motion speed and direction are influenced by numerous factors, such as the spatial resolution and orientation of the image, the sampling rate, the distance between the camera and the moving object, and the speed and direction of the moving object. When motion is to be estimated with a GSN deployed within (i.e., in situ) and sensing the field, the node deployment and sampling rate can be controlled to a certain extent, for example, by choosing the node spacing relative to the assumed field motion. However, while image-based optical flow approaches usually rely on regular grids (i.e., images), a grid-like deployment of the nodes might not be possible.

- Decentralized estimation: Image-based optical flow usually relies on a single computer that estimates the motion field from the images. With a GSN, decentralized estimation of motion by each node is possible and potentially desirable for several reasons, which are described in the next section.

2. Contributions of This Work and Differences from Previous Work

- Previous work included a rather ad hoc error model for the individual observations. Here, a more sophisticated, probabilistic error model is introduced, and extensive evaluations illustrate the usefulness of the model.

- A more generic formalization of the optical flow algorithm is provided, which accounts for possible changes in motion over time.

- The algorithm is evaluated using two error measures: the differences in motion speed and in motion direction between the true and the estimated motion. The angular difference is a common error measure for optical flow approaches [15]. Besides direction, motion speed is considered the most important property of motion.

- More comprehensive simulations illustrate the performance of the algorithm under different conditions. Further, the algorithm is applied to GSN sampling data simulated from weather radar images collected during a precipitation event, and the motion estimation performance of the GSN is compared to that of a state-of-the-art image-based optical flow algorithm applied directly to the weather radar images, which is a common approach in nowcasting [5]. In this way, the most complete information available, from the radar images, can be compared with the sparse information from the nodes.
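The two error measures named in the list above can be sketched as follows. This is a minimal sketch under stated assumptions: motion is given as a 2D vector (u, v), the speed error is the absolute (unsigned) difference of the vector magnitudes, and the direction error is the smallest planar angle between the true and the estimated vector. The function names are hypothetical. Note that the angular error of Barron et al. [15] is defined on 3D space-time direction vectors, so it is not identical to the planar difference used here.

```python
import math


def speed_error(true_uv, est_uv):
    """Absolute difference of motion speeds (assumption: unsigned error)."""
    return abs(math.hypot(*est_uv) - math.hypot(*true_uv))


def direction_error_deg(true_uv, est_uv):
    """Smallest angle in degrees (0..180) between two planar motion directions.

    Assumption: planar direction difference, not the 3D space-time angular
    error of Barron et al.
    """
    a_true = math.atan2(true_uv[1], true_uv[0])
    a_est = math.atan2(est_uv[1], est_uv[0])
    diff = abs(math.degrees(a_est - a_true)) % 360.0
    # wrap so that, e.g., 170 deg vs. -170 deg gives 20 deg, not 340 deg
    return min(diff, 360.0 - diff)
```

Wrapping the difference into the range 0 to 180 degrees avoids penalizing directions that lie close together across the ±180 degree discontinuity of `atan2`.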

3. Background and Related Work

3.1. Related Work

3.2. Network and Field Model

3.3. Optical Flow: Basics

4. Methodology

- Gradient constraint estimation: Estimates of the gradient constraint (GC) at a node at time t, i.e., of the spatial and temporal partial derivatives of the field, are calculated from the sensor measurements of neighboring nodes. The error of a GC is derived from the spatial configuration of the node neighborhood. Details are given in Section 4.1.

- Motion estimation: A set of estimates of gradient constraints is integrated at each time step t by each node over its direct 1-hop neighborhood to solve for the motion components. Further details are provided in Section 4.2.
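The motion-estimation step above can be sketched as a weighted least-squares solution of the stacked GCs, in the spirit of Lucas and Kanade [12]. This is a minimal sketch, not the paper's exact formulation: the input format (one tuple `(fx, fy, ft, var)` per GC), the weighting by inverse error variance and the singularity threshold `min_det` are assumptions.

```python
def estimate_motion(gcs, min_det=1e-12):
    """Weighted least-squares motion (u, v) from gradient constraints.

    gcs: iterable of (fx, fy, ft, var) tuples (hypothetical format); each tuple
         is one GC fx*u + fy*v + ft = 0 with error variance var > 0.
    Returns (u, v), or None if the 2x2 normal matrix is (nearly) singular,
    e.g. when all GCs are parallel (aperture problem) or flat (blank wall).
    """
    a11 = a12 = a22 = b1 = b2 = 0.0
    for fx, fy, ft, var in gcs:
        w = 1.0 / var  # assumption: weight = inverse GC error variance
        a11 += w * fx * fx
        a12 += w * fx * fy
        a22 += w * fy * fy
        b1 -= w * fx * ft
        b2 -= w * fy * ft
    det = a11 * a22 - a12 * a12
    if abs(det) < min_det:
        return None
    # Cramer's rule for [[a11, a12], [a12, a22]] @ [u, v] = [b1, b2]
    u = (a22 * b1 - a12 * b2) / det
    v = (a11 * b2 - a12 * b1) / det
    return u, v


# usage: three exact GCs generated from a true motion of (2, -1)
print(estimate_motion([(1.0, 0.0, -2.0, 1.0), (0.0, 1.0, 1.0, 1.0), (1.0, 1.0, -1.0, 1.0)]))
```

Returning `None` instead of a number makes the ill-posed cases explicit, so a node can simply report that no motion estimate is available for this time step.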

4.1. Gradient Constraint Estimation

4.1.1. Stationarity of Nodes

4.1.2. Error in Derivative Calculation

4.1.3. Gradient Constraint Error Estimation

- Zero-field values: When all field values used for derivative calculation are zero and, hence, all derivatives are zero, the GC is not estimated at all. Measurements of spatio-temporal fields such as precipitation are usually zero-inflated, meaning that the majority of samples are zero. In such cases, no motion can be estimated, and node energy can be saved by skipping the computation.

- Node neighborhood extending over the field boundary: When at least one, but not all, of the sensor samples used for estimating the GC is zero, the node neighborhood extends over the field boundary, and the GC is not estimated either. While the GC could still be estimated from the remaining sensor samples, this is not done, because the least-squares matrices for estimating the partial derivatives are pre-computed for the complete neighborhood (see [14] for details).

- Non-zero but equal field values: When the field is completely flat, corresponding to the “blank wall” problem described previously, the GC is not estimated at all. This case can be recognized when all of the neighboring sensor samples at a time step t are larger than zero but equal. Then, the spatial derivatives are zero, and the GC does not contribute to the motion estimation.
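The three cases above can be expressed as a simple check that a node might run before estimating a GC. This is a minimal sketch: the function name, the input (the field values of the node and its neighbors at one time step) and the use of exact equality are assumptions; for floating-point samples, a small tolerance may be preferable in practice.

```python
def gc_skip_reason(samples):
    """Return why a GC should not be estimated from these samples, or None.

    samples: field values of the node and its neighbors at one time step
    (hypothetical input format). The order of the checks matters: an all-zero
    neighborhood would also satisfy the boundary test.
    """
    if all(s == 0 for s in samples):
        return "zero-field"       # no field present: nothing to estimate, save energy
    if any(s == 0 for s in samples):
        return "field-boundary"   # neighborhood extends over the field boundary
    if all(s == samples[0] for s in samples):
        return "blank-wall"       # non-zero but flat: spatial derivatives vanish
    return None                   # GC can be estimated


print(gc_skip_reason([0.0, 1.2, 3.0]))
```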

4.2. Temporal Coherence: Kalman Filter for Motion Estimation
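Temporal coherence of the motion is obtained with a Kalman filter [13]. A minimal sketch of such a filter for the motion components at a single node follows. It assumes a random-walk (constant-motion) state model with state (u, v), process noise q·I, and each GC treated as a scalar measurement z = -ft with observation row (fx, fy) and noise variance r. These modelling choices and all function names are assumptions of this sketch, not the paper's exact filter.

```python
def kf_predict(x, P, q):
    """Prediction under an assumed random-walk model: (u, v) is carried over
    unchanged and its covariance grows by q * I (q is a hypothetical tuning value)."""
    return [x[0], x[1]], [[P[0][0] + q, P[0][1]], [P[1][0], P[1][1] + q]]


def kf_update_gc(x, P, fx, fy, ft, r):
    """Scalar measurement update with one gradient constraint.

    The GC fx*u + fy*v + ft = 0 is treated as measurement z = -ft with
    observation row H = [fx, fy] and noise variance r (assumed known).
    """
    ph0 = P[0][0] * fx + P[0][1] * fy          # (P H^T)[0]
    ph1 = P[1][0] * fx + P[1][1] * fy          # (P H^T)[1]
    s = fx * ph0 + fy * ph1 + r                # innovation variance H P H^T + r
    k0, k1 = ph0 / s, ph1 / s                  # Kalman gain K = P H^T / s
    innov = -ft - (fx * x[0] + fy * x[1])      # innovation z - H x
    x_new = [x[0] + k0 * innov, x[1] + k1 * innov]
    hp0 = fx * P[0][0] + fy * P[1][0]          # (H P)[0]
    hp1 = fx * P[0][1] + fy * P[1][1]          # (H P)[1]
    P_new = [[P[0][0] - k0 * hp0, P[0][1] - k0 * hp1],   # P - K (H P)
             [P[1][0] - k1 * hp0, P[1][1] - k1 * hp1]]
    return x_new, P_new


def kf_step(x, P, gcs, q):
    """One time step at a node: predict, then fuse every GC (fx, fy, ft, r)."""
    x, P = kf_predict(x, P, q)
    for fx, fy, ft, r in gcs:
        x, P = kf_update_gc(x, P, fx, fy, ft, r)
    return x, P
```

Processing the GCs one at a time as scalar updates gives the same result as a joint update when their errors are uncorrelated (diagonal measurement covariance), and it avoids a matrix inversion on the node.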

5. Empirical Evaluation

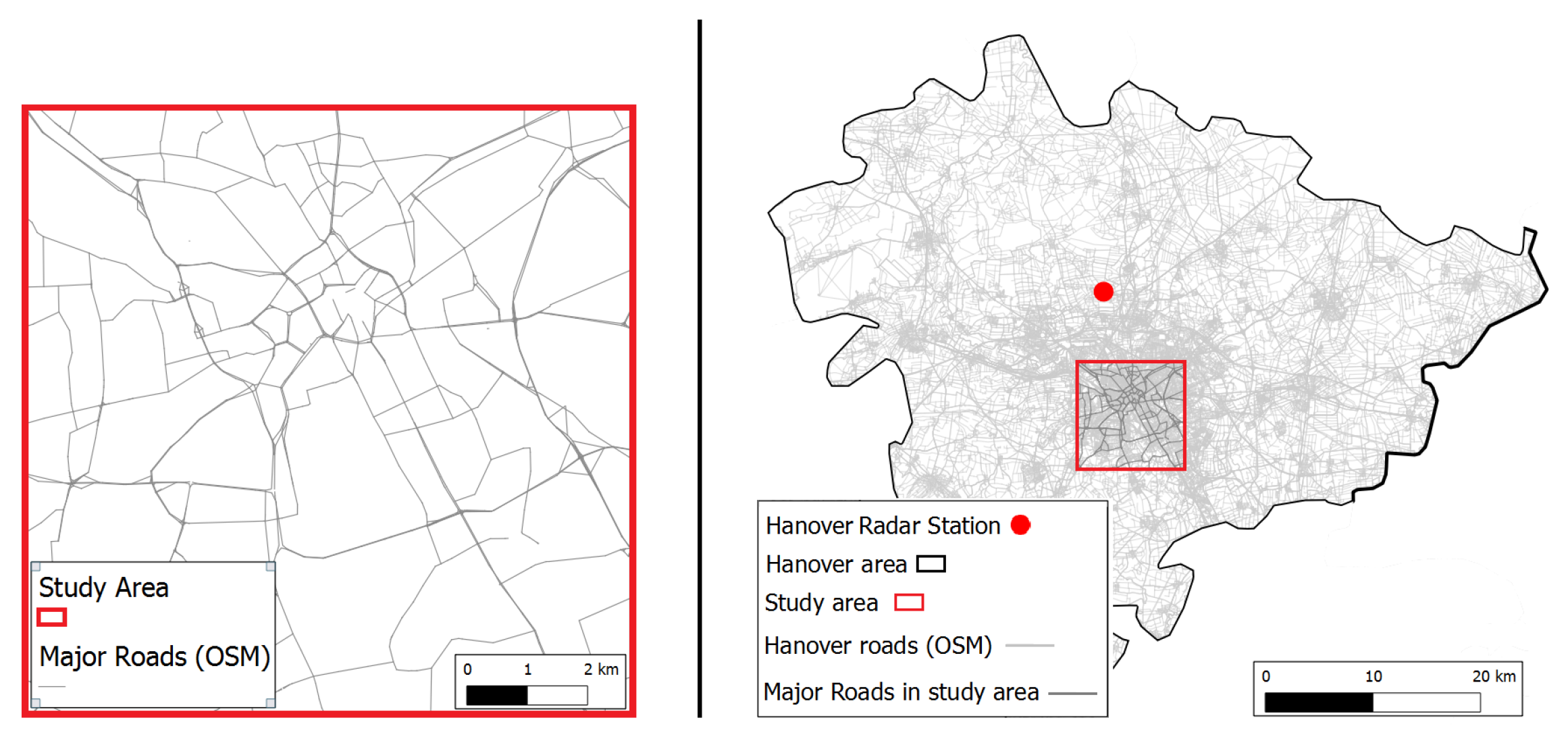

5.1. Study Area, Sensor Network and Deployment Strategy

5.2. Error Measures

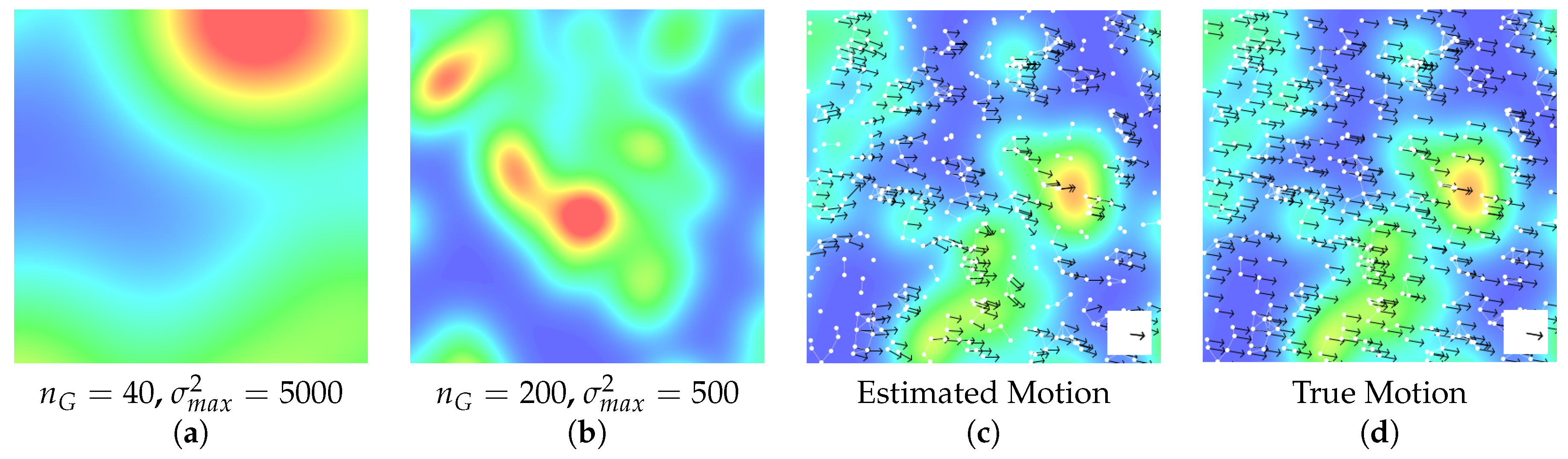

5.3. Setting the Filter Parameters

5.4. Results: Simulated Field

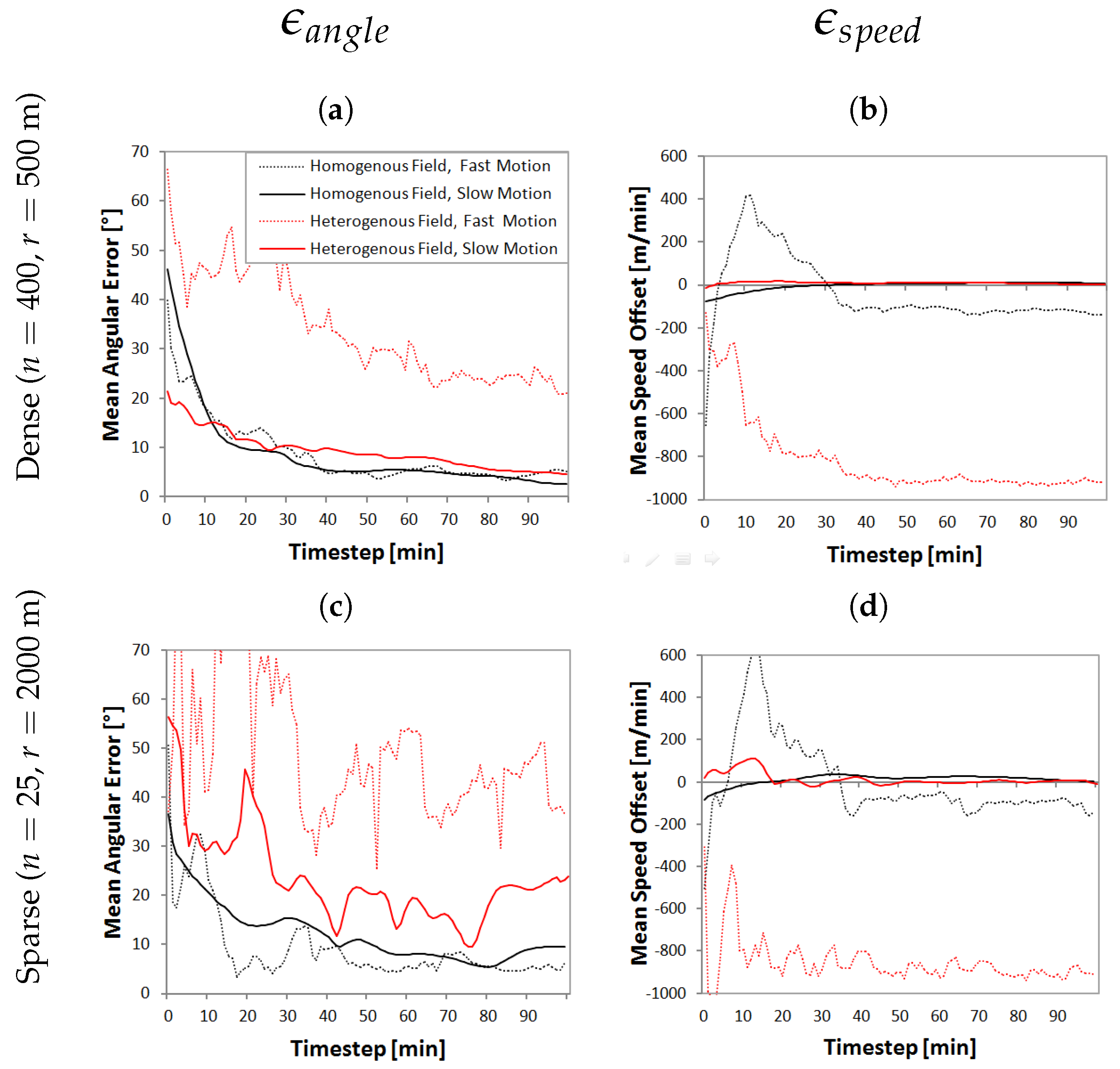

5.4.1. Influence of Field Linearity, Field Speed and Node Density

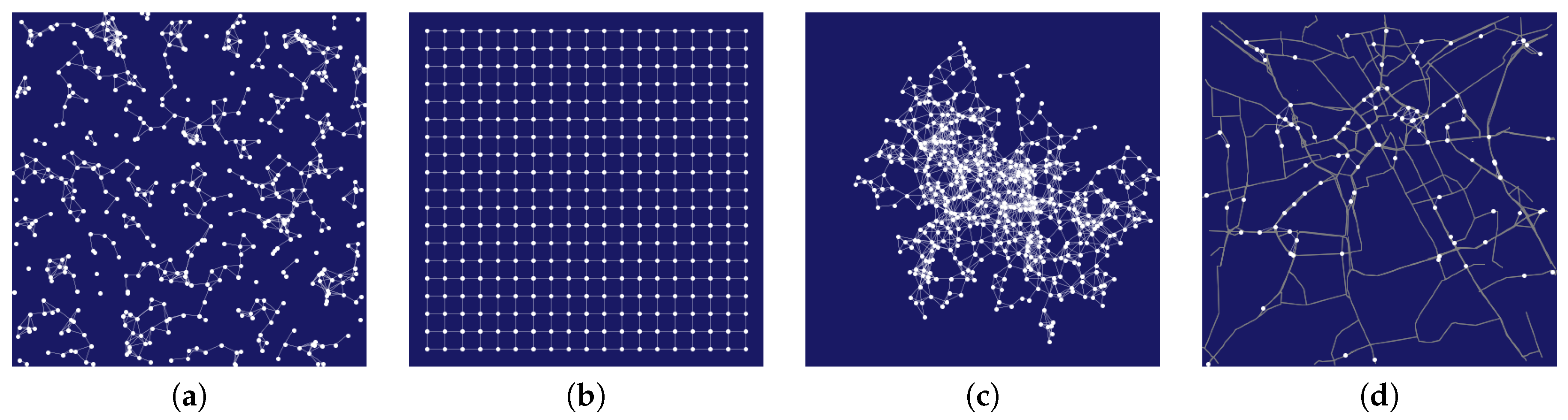

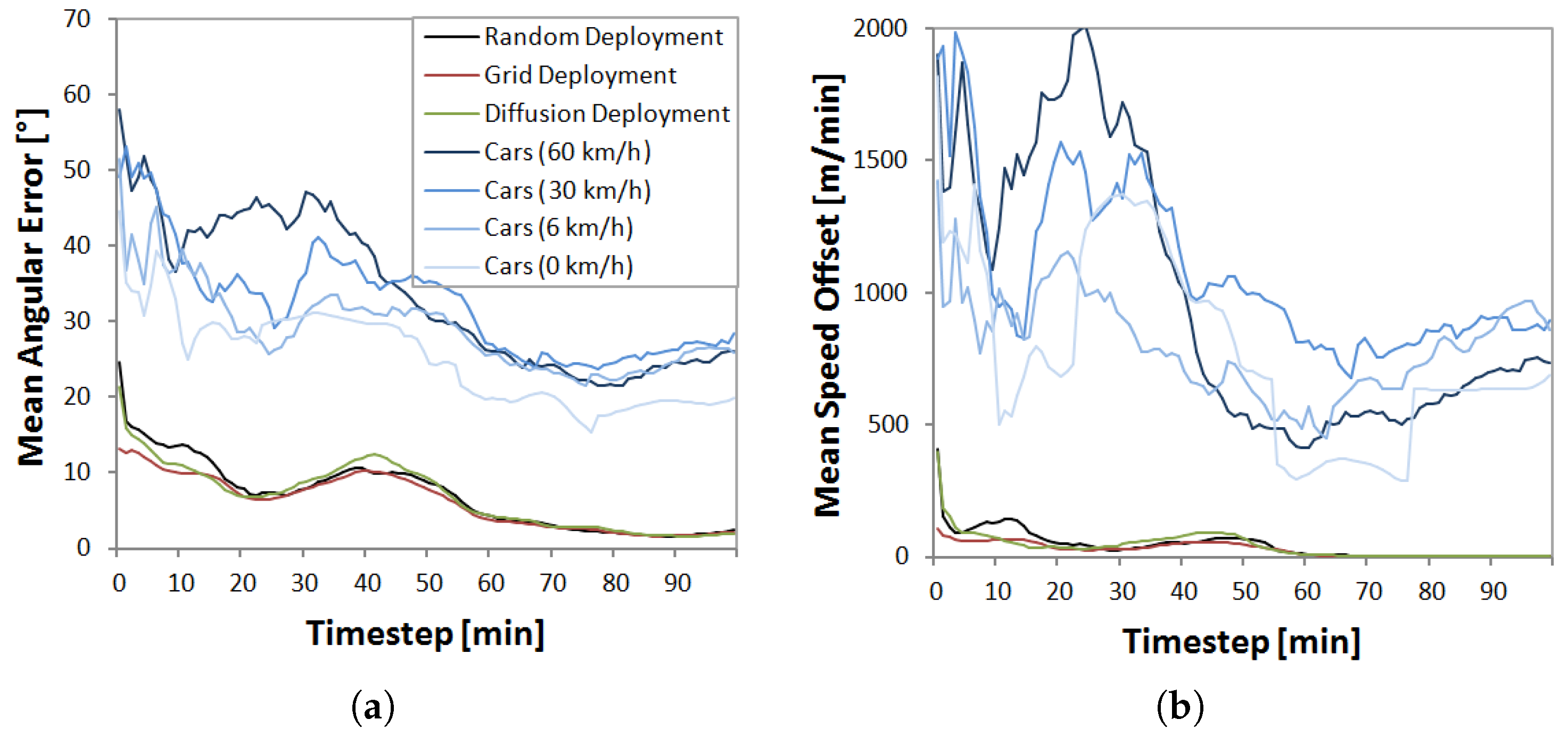

5.4.2. Influence of Deployment Strategy

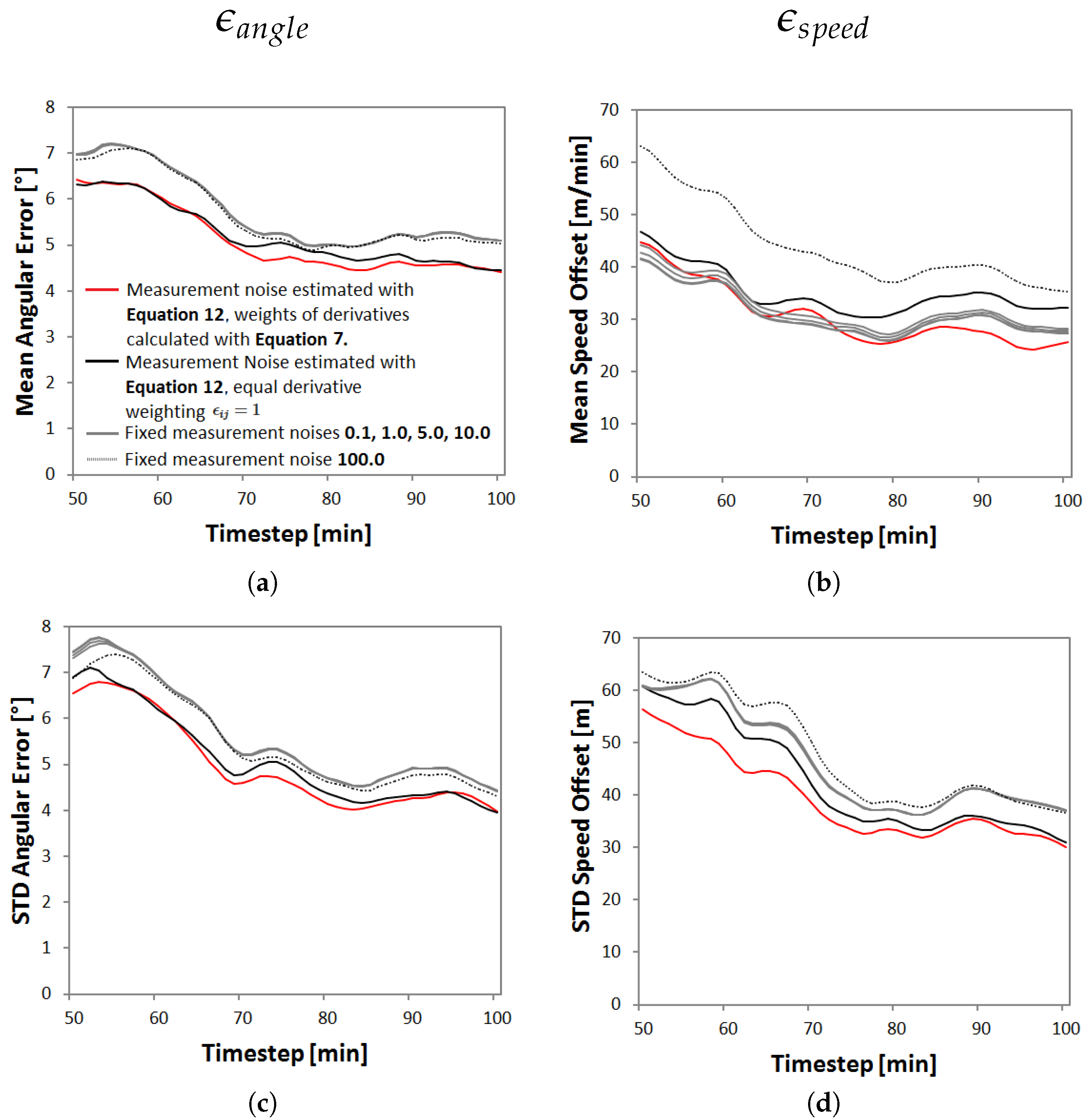

5.4.3. Influence of Kalman Measurement Noise

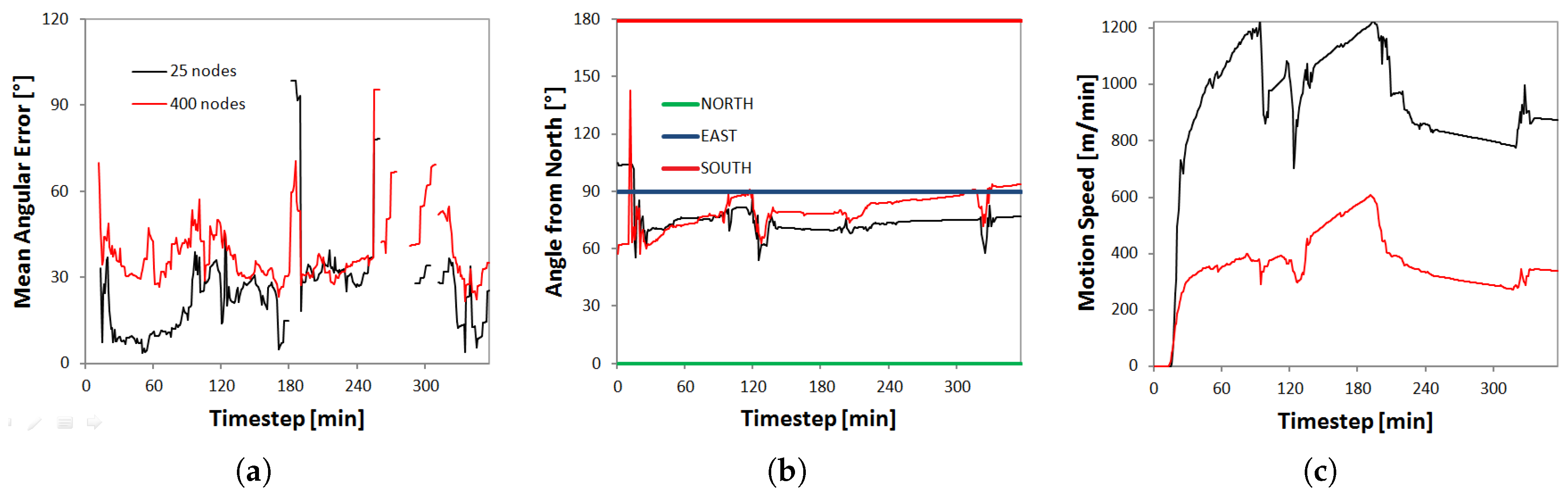

5.5. Results: Radar Field

6. Discussion and Conclusions

- Field properties and deployment density: The simulations have shown that the degree of field linearity, in conjunction with the motion speed, is an important factor: the achievable accuracy decreases with increasing non-linearity and motion speed (Figure 5). This is known from work on image-based optical flow, but it has direct implications in a GSN setting, where the deployment and sampling rate of the nodes can be controlled to a certain extent. Since the Taylor expansion of Equation (2) assumes small displacements, it is important that the field is sampled at a high rate. In addition, the nodes should be deployed rather densely, close to each other (which is also beneficial in terms of power consumption), since the accuracy of estimating partial derivatives decreases with increasing node distance.

- Deployment and stationarity of nodes: When the number of nodes and the communication distance are held fixed, the deployment strategy of stationary nodes does not have a large influence on the motion estimation results (Figure 6). However, with decreasing node density, the performance of a random deployment will decrease, since some nodes will be disconnected. Further, the algorithm has been developed for stationary nodes. Nonetheless, it can also be applied in a non-stationary setting, e.g., with cars as nodes. In that case, however, the motion estimation accuracy decreases (Figure 6) due to the increased error in the temporal derivative estimate and the linear alignment of the cars along roads.
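The role of motion speed, sampling interval and field non-linearity can be made explicit with the standard first-order derivation of the gradient constraint (cf. Horn and Schunck [22]). The notation below (f for the field, subscripts for partial derivatives, (u, v) for the motion and δt for the sampling interval) is assumed for illustration and may differ from that of Equation (2).

```latex
% field-value constancy along the motion over one sampling interval
f(x + u\,\delta t,\; y + v\,\delta t,\; t + \delta t) = f(x, y, t)

% second-order Taylor expansion of the left-hand side around (x, y, t)
f(x + u\,\delta t,\; y + v\,\delta t,\; t + \delta t)
  = f + (f_x u + f_y v + f_t)\,\delta t
  + \tfrac{1}{2}\,(f_{xx} u^2 + 2 f_{xy} u v + f_{yy} v^2 + 2 f_{xt} u + 2 f_{yt} v + f_{tt})\,\delta t^2
  + \mathcal{O}(\delta t^3)

% resulting gradient constraint and its truncation error
f_x u + f_y v + f_t
  = -\tfrac{1}{2}\,(f_{xx} u^2 + 2 f_{xy} u v + f_{yy} v^2 + 2 f_{xt} u + 2 f_{yt} v + f_{tt})\,\delta t
  + \mathcal{O}(\delta t^2)
```

The right-hand side vanishes for a field that is linear in space and time, and it grows with the displacement per sampling interval (u δt, v δt) and with the second derivatives, i.e., with the non-linearity of the field.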

Appendix A. Algorithm Protocol

Protocol 1: Field motion estimation with a node

INIT: broadcast …; if …, become PROCESSING.

Receiving neighboring node position (…): if …, compute … and unit vector …; add … as new row to A_i; add … as new diagonal entry to …; if |rows(A_i)| = |nbr>1(n_i)|, compute normal equations, compute … (Equation (12)), add (i, …) to …, and broadcast … to nbr>1(n_i).

Receiving … of neighboring node: add (j, …) to …; if …, become PROCESSING.

PROCESSING at time step t
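One possible reading of the INIT steps of Protocol 1 is sketched below: for each neighbor, a unit vector is added as a new row of A_i and a weight as a new diagonal entry; once all neighbor positions are known, the normal equations are formed and a gradient operator is pre-computed, which is then applied to the measurements at every time step. This is a hypothetical sketch, not the paper's Equation (12): the directional-difference observations (f_j - f_i)/d_ij, the weights 1/d_ij² and the function names are all assumptions.

```python
import math


def precompute_gradient_operator(pos_i, neighbor_pos):
    """INIT phase (sketch): M = (A^T W A)^{-1} A^T W from neighbor positions.

    Rows of A are unit vectors from node i to each neighbor j; W is diagonal.
    The weights w_j = 1 / d_ij**2 are a hypothetical choice for illustration.
    Returns (M, dists), where M is a 2 x k list of lists.
    """
    rows, weights, dists = [], [], []
    for xj, yj in neighbor_pos:
        dx, dy = xj - pos_i[0], yj - pos_i[1]
        d = math.hypot(dx, dy)
        rows.append((dx / d, dy / d))   # new row of A_i: unit vector e_ij
        weights.append(1.0 / d ** 2)    # new diagonal entry of W (hypothetical)
        dists.append(d)
    # normal matrix N = A^T W A (2 x 2, symmetric)
    n11 = sum(w * ex * ex for (ex, _), w in zip(rows, weights))
    n12 = sum(w * ex * ey for (ex, ey), w in zip(rows, weights))
    n22 = sum(w * ey * ey for (_, ey), w in zip(rows, weights))
    det = n11 * n22 - n12 * n12
    if abs(det) < 1e-12:
        raise ValueError("collinear neighborhood: gradient cannot be determined")
    inv = ((n22 / det, -n12 / det), (-n12 / det, n11 / det))
    # column j of M = N^{-1} (w_j e_j)
    M = [[(inv[r][0] * ex + inv[r][1] * ey) * w for (ex, ey), w in zip(rows, weights)]
         for r in range(2)]
    return M, dists


def apply_gradient_operator(M, dists, f_i, neighbor_vals):
    """Per time step: spatial gradient (fx, fy) from directional differences."""
    l = [(fj - f_i) / d for fj, d in zip(neighbor_vals, dists)]
    return tuple(sum(m * lj for m, lj in zip(row, l)) for row in M)
```

Because the operator depends only on node positions, it can be computed once during initialization, which matches the statement in Section 4.1.3 that the least-squares matrices are pre-computed. For a field that is linear in space, the sketch recovers the gradient exactly.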

Acknowledgments

Author Contributions

Conflicts of Interest

References

- Govindaraju, R.S. Artificial neural networks in hydrology. I: Preliminary concepts. J. Hydrol. Eng. 2000, 5, 115–123.

- Taormina, R.; Chau, K.W. Data-driven input variable selection for rainfall-runoff modeling using binary-coded particle swarm optimization and Extreme Learning Machines. J. Hydrol. 2015, 529 Pt 3, 1617–1632.

- Farnebäck, G. Two-Frame motion estimation based on polynomial expansion. In Image Analysis; Number 2749 in Lecture Notes in Computer Science; Bigun, J., Gustavsson, T., Eds.; Springer: Berlin/Heidelberg, Germany, 2003; pp. 363–370.

- Lin, C.; Vasić, S.; Kilambi, A.; Turner, B.; Zawadzki, I. Precipitation forecast skill of numerical weather prediction models and radar nowcasts. Geophys. Res. Lett. 2005, 32, L14801.

- Bowler, N.E.; Pierce, C.E.; Seed, A. Development of a precipitation nowcasting algorithm based upon optical flow techniques. J. Hydrol. 2004, 288, 74–91.

- Fitzner, D.; Sester, M. Estimation of precipitation fields from 1-minute rain gauge time series–comparison of spatial and spatio-temporal interpolation methods. Int. J. Geogr. Inf. Sci. 2015, 29, 1668–1693.

- Cohen, I.; Herlin, I. Optical flow and phase portrait methods for environmental satellite image sequences. In Computer Vision—ECCV ’96; Number 1065 in Lecture Notes in Computer Science; Buxton, B., Cipolla, R., Eds.; Springer: Berlin/Heidelberg, Germany, 1996; pp. 141–150.

- Haberlandt, U.; Sester, M. Areal rainfall estimation using moving cars as rain gauges—A modelling study. Hydrol. Earth Syst. Sci. 2010, 14, 1139–1151.

- Fitzner, D.; Sester, M.; Haberlandt, U.; Rabiei, E. Rainfall estimation with a geosensor network of cars–theoretical considerations and first results. Photogramm. Fernerkund. Geoinform. 2013, 2013, 93–103.

- Black, M.J. Robust Incremental Optical Flow. Ph.D. Thesis, Yale University, New Haven, CT, USA, 1992.

- Duckham, M. Decentralized Spatial Computing: Foundations of Geosensor Networks; Springer: Berlin/Heidelberg, Germany, 2012.

- Lucas, B.D.; Kanade, T. An iterative image registration technique with an application to stereo vision. In Proceedings of the 7th International Joint Conference on Artificial Intelligence (IJCAI), Vancouver, BC, Canada, 24–28 August 1981.

- Kalman, R. A new approach to linear filtering and prediction problems. J. Basic Eng. 1960, 82, 35–45.

- Fitzner, D.; Sester, M. Decentralized gradient-based field motion estimation with a wireless sensor network. In Proceedings of the 5th International Conference on Sensor Networks, Rome, Italy, 19–21 February 2016; pp. 13–24.

- Barron, J.L.; Fleet, D.J.; Beauchemin, S.S. Performance of optical flow techniques. Int. J. Comput. Vis. 1994, 12, 43–77.

- Sester, M. Cooperative boundary detection in a geosensor network using a SOM. In Proceedings of the International Cartographic Conference, Santiago, Chile, 15–21 November 2009.

- Jeong, M.H.; Duckham, M.; Kealy, A.; Miller, H.J.; Peisker, A. Decentralized and coordinate-free computation of critical points and surface networks in a discretized scalar field. Int. J. Geogr. Inf. Sci. 2014, 28, 1–21.

- Umer, M.; Kulik, L.; Tanin, E. Spatial interpolation in wireless sensor networks: Localized algorithms for variogram modeling and Kriging. GeoInformatica 2010, 14, 101–134.

- Brink, J.; Pebesma, E. Plume tracking with a mobile sensor based on incomplete and imprecise information. Trans. GIS 2014, 18, 740–766.

- Das, J.; Py, F.; Maughan, T.; O’Reilly, T.; Messié, M.; Ryan, J.; Sukhatme, G.S.; Rajan, K. Coordinated sampling of dynamic oceanographic features with underwater vehicles and drifters. Int. J. Robot. Res. 2012, 31, 626–646.

- Tsai, H.W.; Chu, C.P.; Chen, T.S. Mobile object tracking in wireless sensor networks. Comput. Commun. 2007, 30, 1811–1825.

- Horn, B.K.; Schunck, B.G. Determining optical flow. Artif. Intell. 1981, 17, 185–203.

- Langley, R.B. Dilution of precision. GPS World 1999, 10, 52–59.

- Niemeier, W. Ausgleichungsrechnung: Eine Einführung für Studierende und Praktiker des Vermessungs- und Geoinformationswesens; de Gruyter: Berlin, Germany; New York, NY, USA, 2002.

- Simoncelli, E.P. Distributed Representation and Analysis of Visual Motion. Ph.D. Thesis, Massachusetts Institute of Technology, Department of Electrical Engineering and Computer Science, Cambridge, MA, USA, 1993.

- Simoncelli, E.P.; Adelson, E.H.; Heeger, D.J. Probability distributions of optical flow. In Proceedings of the 1991 IEEE Computer Society Conference on Computer Vision and Pattern Recognition, Los Alamos, CA, USA, 3–6 June 1991.

- Särkkä, S. Bayesian Filtering and Smoothing; Cambridge University Press: Cambridge, UK, 2013.

- Watson, P.K. Kalman filtering as an alternative to Ordinary Least Squares—Some theoretical considerations and empirical results. Empir. Econ. 1983, 8, 71–85.

- Berndt, C.; Rabiei, E.; Haberlandt, U. Geostatistical merging of rain gauge and radar data for high temporal resolutions and various station density scenarios. J. Hydrol. 2014, 508, 88–101.

- Schulz, F. Modeling sensor and ad hoc networks. In Algorithms for Sensor and Ad Hoc Networks; Springer: Heidelberg, Germany, 2007; pp. 21–36.

- Golub, G.H.; Van Loan, C.F. Matrix Computations; JHU Press: Baltimore, MD, USA, 1996.

© 2016 by the authors; licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC-BY) license (http://creativecommons.org/licenses/by/4.0/).


Fitzner, D.; Sester, M. Field Motion Estimation with a Geosensor Network. ISPRS Int. J. Geo-Inf. 2016, 5, 175. https://doi.org/10.3390/ijgi5100175
