1. Introduction

Land-use (LU) and land-cover (LC) change, together referred to as LULC change, occurs at varying rates across the components of the Earth’s surface. With increasing human activity, the Earth’s surface has been significantly modified in recent years by various kinds of land-cover change [1,2]. Knowledge of LULC change is needed in many fields because of its many practical applications. These include monitoring deforestation and agricultural expansion and intensification, damage assessment, disaster monitoring, urban expansion monitoring, city planning, and land-resource management [1,3]. Satellite images have long been the primary source of data for studying land-cover change, because consistent measurements are available over long periods and the imagery has high spatial resolution [4,5]. With the rapid development of remote sensing technology and the increasing variety of Earth observation satellites, the volume of satellite image archives is growing exponentially [6]. However, the organization and management of these massive data lag far behind the explosive growth in remote sensing imagery, because they are constrained by the capacity of available data processing and analysis. Therefore, one of the most challenging emerging problems is how to retrieve change information from such archives efficiently and precisely, according to users’ needs.

State-of-the-art systems for accessing change information from original images still rely on keywords or metadata, together with prior knowledge of the target change events [7]. Such metadata include geographical coordinates, data acquisition time, and sensor type. The performance of keyword-matching retrieval approaches depends heavily on the completeness of this prior knowledge. New data service systems have been proposed to provide consistent data for two purposes: deriving land-cover information and geophysical and biophysical products for regional assessment of surface dynamics, and studying the functioning of Earth systems. These systems include the following:

- The NASA-funded Web-enabled Landsat Data project [8], which systematically generated 30-m composited Landsat Enhanced Thematic Mapper Plus (ETM+) mosaics of the conterminous United States and Alaska from 2002 to 2012.
- The Australian Geoscience DATA CUBE framework [9,10].
- ChangeMatters [11], built on Esri’s ArcGIS Server Image Extension, which provides access to the 34,000 Global Land Survey (GLS) Landsat scenes created by the USGS and NASA, covering the world for the 1970s, 1990s, 2000, and 2005.

However, the geographical area covered by the imagery used by these services is small, and the available products are limited by the generality of the algorithms used to produce them. Moreover, because these services extend the keyword/metadata approach, they cannot be applied accurately to all the different change applications that users actually require.

In contrast to the keyword-based approach, content-based image retrieval (CBIR) is a major advance. It searches for images whose visual features are similar to those of a query image submitted by the user. The technique describes each image by automatically extracted visual features such as color, texture, and shape. After a user submits one or more query images, the images in the database are ranked by their similarity to the query images, and the most similar images are returned [12,13,14]. Because CBIR manages and exploits the information in an image database on the basis of image content, it offers a new opportunity to solve the problem of information management in large remote sensing image databases [7,15].

Content-based remote sensing image retrieval (CBRSIR) has therefore attracted the attention of scholars around the world. It is expected to become particularly important in the next decade, when the number of acquired remote sensing images will again increase dramatically. Feature extraction is fundamental to content-based image retrieval. The remote sensing literature presents several primitive features for characterizing and describing images for retrieval, including:

- the fuzzy color histogram [16];
- the integrated color histogram [17];
- the Gray Level Co-occurrence Matrix (GLCM) [18];
- the fast wavelet [19];
- visual salient point features [20].

Most studies have focused on methods that use different visual features and on the effects of those features on CBRSIR [20,21,22]. However, a single type of feature cannot always express image content precisely [23], and it is hard to obtain satisfactory retrieval results with only one feature. Therefore, to improve image retrieval, this paper proposes a multi-feature integrated retrieval model that incorporates the main color and texture features of remote sensing images proposed in previous research.
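The sketch below illustrates query-by-example ranking with an integrated feature vector. It normalizes each feature type, concatenates the types, and ranks database images by Euclidean distance to the query. The following choices are assumptions for illustration: per-dimension min-max normalization, equal weighting, and the names `combine_features` and `rank_images`. This passage does not say how the proposed model actually fuses its color and texture features.

```python
import math
from typing import List, Sequence, Tuple

Vector = List[float]


def min_max_normalize(vectors: List[Vector]) -> List[Vector]:
    """Scale every dimension to [0, 1] across all images (illustrative choice)."""
    dims = len(vectors[0])
    lo = [min(v[d] for v in vectors) for d in range(dims)]
    hi = [max(v[d] for v in vectors) for d in range(dims)]
    return [
        [(v[d] - lo[d]) / (hi[d] - lo[d]) if hi[d] > lo[d] else 0.0 for d in range(dims)]
        for v in vectors
    ]


def combine_features(feature_sets: Sequence[List[Vector]]) -> List[Vector]:
    """Normalize each feature type separately, then concatenate them per image."""
    normalized = [min_max_normalize(fs) for fs in feature_sets]
    n_images = len(feature_sets[0])
    return [[x for fs in normalized for x in fs[idx]] for idx in range(n_images)]


def rank_images(query: Vector, database: List[Vector], top_k: int) -> List[Tuple[int, float]]:
    """Return (index, distance) for the top_k database images nearest to the query."""
    scored = [(idx, math.dist(query, vec)) for idx, vec in enumerate(database)]
    scored.sort(key=lambda item: item[1])
    return scored[:top_k]


# Usage with toy values: two color dimensions and one texture dimension per image.
color = [[0.2, 0.5], [0.8, 0.1], [0.25, 0.45]]
texture = [[10.0], [50.0], [12.0]]
combined = combine_features([color, texture])
print(rank_images(combined[0], combined, top_k=2))
```

Each feature type is normalized on its own before concatenation. Without that step, a feature with large raw values, such as a texture statistic, would dominate the Euclidean distance.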

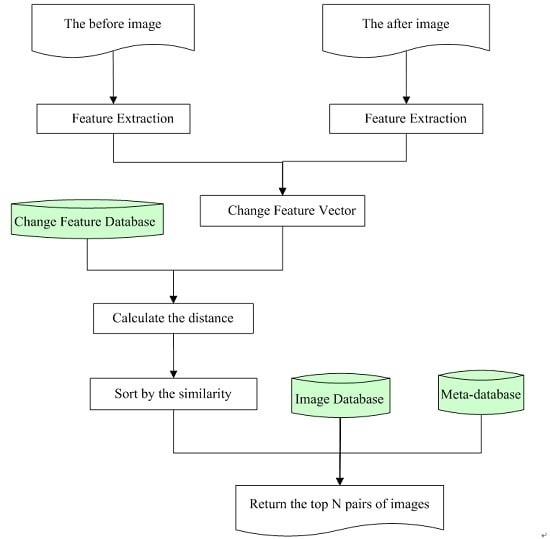

Very few studies describe the use of CBIR techniques for change detection [14]. Classical change-detection techniques rely on image differencing or ratioing, post-classification comparison, classification of multi-temporal datasets, or change vector analysis [1,2,3,4,5]. Moreover, pixel-based methods require sub-pixel registration accuracy, because mis-registrations greater than one pixel produce numerous errors when images are compared [1,2,3,4,5]. To overcome these problems, content-based remote sensing image retrieval can be applied to accessing and detecting change information in remote sensing imagery.

Accessing and detecting change information in remote sensing images can be treated as the retrieval of similar image pairs, that is, images of the same geographical location acquired at different times. On this basis, this paper proposes a new content-based remote sensing image change information retrieval (CBRSICIR) model for retrieving change information from remote sensing imagery. The new model makes two general improvements to existing content-based remote sensing image retrieval models:

1. The structure and framework for content-based retrieval of remote sensing image change information are built, and an experimental prototype system is set up to demonstrate the validity and practicability of the model.
2. A multiple-feature integrated retrieval model is proposed. It uses three types of color feature and four types of texture feature to improve the retrieval of change information from remote sensing imagery.

The new model offers a new way of acquiring change information from remote sensing imagery and can reduce the need for image pre-processing. It can also overcome problems caused by seasonal changes and other factors that affect change detection, and can thereby meet the needs of many different kinds of users. In addition, the new model has important implications for improving remote sensing image management and autonomous information retrieval.
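One simple reading of "retrieval of similar image pairs" is to score each archived pair by how closely both its earlier and its later image match the corresponding images of the query pair. The sketch below adds the two per-date Euclidean distances. The additive pair distance, the `ImagePair` type, and the function names are hypothetical. They are not the model's actual matching rule, which Section 3 describes.

```python
import math
from dataclasses import dataclass
from typing import List, Tuple


@dataclass
class ImagePair:
    """Feature vectors of one location observed at two dates (hypothetical structure)."""
    pair_id: str
    before: List[float]
    after: List[float]


def pair_distance(query: ImagePair, candidate: ImagePair) -> float:
    # Both the pre-change and the post-change image must resemble the query pair,
    # so the two per-date distances are added (an assumed combination rule).
    return math.dist(query.before, candidate.before) + math.dist(query.after, candidate.after)


def retrieve_change_pairs(query: ImagePair, archive: List[ImagePair], top_k: int) -> List[Tuple[str, float]]:
    """Rank archived pairs by pair distance and return the top_k (id, distance) tuples."""
    ranked = sorted(archive, key=lambda cand: pair_distance(query, cand))
    return [(cand.pair_id, pair_distance(query, cand)) for cand in ranked[:top_k]]


# Toy two-dimensional features; the values are made up for illustration.
query = ImagePair("q", [0.9, 0.1], [0.2, 0.8])
archive = [
    ImagePair("a", [0.85, 0.15], [0.25, 0.75]),  # similar change
    ImagePair("b", [0.2, 0.8], [0.9, 0.1]),      # reverse change
    ImagePair("c", [0.9, 0.1], [0.9, 0.1]),      # unchanged location
]
print(retrieve_change_pairs(query, archive, top_k=3))
```

Consider a pair whose earlier image matches the query but whose later image does not, such as the unchanged location "c". The rule ranks it below a genuine change match, which is the behaviour a change query needs.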

The remainder of this article is organized as follows. Section 2 describes the study data and the main data-processing steps. Section 3 describes the content-based remote sensing image change information retrieval model in detail. Section 4 presents the experimental results obtained using a remote sensing imagery dataset. Section 5 draws conclusions and gives recommendations for future research.

4. Results

Our model was implemented in MATLAB R2015a, and the empirical evaluation was performed on a Dell3G PC running Windows 7. To analyze the effectiveness of the new multiple-feature model, the experiments were divided into three groups:

- Group 1 (Section 4.1) described the remote sensing images using nine kinds of features and compared the change information retrieval performance of the multiple features with that of the other features.
- Group 2 (Section 4.2) compared the retrieval performance of different features for different types of ground object change.
- Group 3 (Section 4.3) presented change information retrieval examples obtained with the content-based remote sensing image change information retrieval model using different features.

To evaluate the performance of the proposed algorithm, the 14 Landsat images were sliced into 128 × 128 pixel tiles. These tiles were then manually categorized into four classes: agricultural land/vegetation (AGR), bare land (BAR), built-up land (BUI), and water (WAT). A total of 1192 image pairs covering nine classes of ground object change were also selected, and these pairs formed the experimental remote sensing image database. The change classes and the number of image pairs in each are listed below.

| Class | Change | Image pairs |
|---|---|---|
| AGR2BAR | Agricultural Land/Vegetation to Bare Land | 161 |
| AGR2BUI | Agricultural Land/Vegetation to Built-up Land | 295 |
| AGR2WAT | Agricultural Land/Vegetation to Water | 67 |
| BAR2AGR | Bare Land to Agricultural Land/Vegetation | 194 |
| BAR2BUI | Bare Land to Built-up Land | 44 |
| BAR2WAT | Bare Land to Water | 42 |
| WAT2AGR | Water to Agricultural Land/Vegetation | 149 |
| WAT2BAR | Water to Bare Land | 103 |
| WAT2BUI | Water to Built-up Land | 137 |
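The tiling step is sketched below for a single band stored as a list of pixel rows. A multi-band Landsat scene would be tiled band by band using the same offsets. The following are all assumptions for illustration, not details stated in the paper:

- the function names `slice_into_tiles` and `pair_tiles`;
- the choice to drop incomplete edge tiles;
- the matching of tiles from two co-registered dates by their pixel offsets.

```python
from typing import List, Tuple

Tile = List[List[int]]
PlacedTile = Tuple[int, int, Tile]  # (row offset, column offset, tile pixels)


def slice_into_tiles(band: List[List[int]], tile_size: int = 128) -> List[PlacedTile]:
    """Cut one image band into non-overlapping tile_size x tile_size tiles.

    Edge strips that cannot fill a whole tile are discarded (an assumption).
    """
    height, width = len(band), len(band[0])
    tiles: List[PlacedTile] = []
    for r in range(0, height - tile_size + 1, tile_size):
        for c in range(0, width - tile_size + 1, tile_size):
            tile = [row[c:c + tile_size] for row in band[r:r + tile_size]]
            tiles.append((r, c, tile))
    return tiles


def pair_tiles(earlier: List[PlacedTile], later: List[PlacedTile]) -> List[Tuple[int, int, Tile, Tile]]:
    """Pair tiles that share the same offsets in two co-registered images (hypothetical helper)."""
    later_by_offset = {(r, c): t for r, c, t in later}
    return [(r, c, t, later_by_offset[(r, c)]) for r, c, t in earlier if (r, c) in later_by_offset]


# A 300 x 260 band yields a 2 x 2 grid of full 128 x 128 tiles.
band_t1 = [[0] * 260 for _ in range(300)]
band_t2 = [[1] * 260 for _ in range(300)]
pairs = pair_tiles(slice_into_tiles(band_t1), slice_into_tiles(band_t2))
print(len(pairs))
```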

Figure 5 shows three sample image pairs for each of the nine change classes. Beijing has undergone unprecedented urban growth over the past three decades, and the interval between the demolition of buildings and the construction of new ones is very short. In addition, the spatial resolution of Landsat imagery is relatively low. Consequently, changes from built-up land to other ground objects are difficult to capture. The database therefore contains no pairs for the classes BUI2AGR (Built-up Land to Agricultural Land/Vegetation), BUI2BAR (Built-up Land to Bare Land), and BUI2WAT (Built-up Land to Water).

4.1. Comparison of Different Features

Different feature combinations were tested within the unified framework of the proposed content-based remote sensing image change information retrieval method. The aim was to demonstrate the effectiveness of combining color and texture features. In this experiment, the change content was represented by the following features:

- color features: Color Correlogram, Color Moments, and HSV-HIST;
- texture features: Fast Wavelet, In-moments, GLCM, and Texture Spectrum.

Multi-Color, Multi-Texture, and Multi-all are hybrid features. They combine the three color features, the four texture features, and all seven color and texture features, respectively. Altogether, nine comparison tests were performed.
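Color Moments performs strongly in the tests that follow. For reference, the sketch below shows the common three-moment definition: per channel, the mean, the standard deviation, and the signed cube root of the third central moment. This passage does not state which color space the paper uses, or whether its Color Moments feature follows exactly this definition. The function and its input layout (a list of per-pixel channel tuples) are assumptions.

```python
import math
from typing import List, Sequence


def _signed_cube_root(x: float) -> float:
    # Keep the sign so that a negative third moment gives a negative skew value.
    return math.copysign(abs(x) ** (1.0 / 3.0), x)


def color_moments(pixels: Sequence[Sequence[float]]) -> List[float]:
    """Return [mean, std, skew] for each channel, concatenated channel by channel."""
    n = len(pixels)
    n_channels = len(pixels[0])
    features: List[float] = []
    for ch in range(n_channels):
        values = [p[ch] for p in pixels]
        mean = sum(values) / n
        second = sum((v - mean) ** 2 for v in values) / n   # population variance
        third = sum((v - mean) ** 3 for v in values) / n    # third central moment
        features.extend([mean, math.sqrt(second), _signed_cube_root(third)])
    return features


# Three-channel toy tile with two pixels; yields a 9-dimensional feature vector.
print(color_moments([(0, 0, 0), (2, 2, 2)]))
```

A three-channel image gives a 9-dimensional vector. The feature is compact, which is one reason color moments are popular in retrieval over large archives.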

Table 3 and Table 4 show the coverage ratios and mean average precision values obtained when i was set to {1, 2, 3, 4, 5, 10, 20}. These values come from 20 trials per category, that is, 180 trials on the remote sensing image database. The two tables show the following:

- Among the features tested, change information retrieval based on the Color Moments feature produced the highest coverage ratios and mean average precisions.
- Multi-Texture achieved higher coverage ratios and mean average precisions than any single texture feature.
- Multi-Color outperformed every single color feature except Color Moments.
- Multi-all scored higher than both Multi-Color and Multi-Texture.

Both the coverage ratio (Table 3) and the mean average precision (Table 4) decrease as i increases. This indicates that CBIR-based change information retrieval is effective and tends to rank the most relevant change image pairs at the top.
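Mean average precision at a cutoff i can be computed as sketched below, using one common convention. Average precision at i is the mean of precision@j over the ranks j ≤ i that hold a relevant pair (same change class as the query). Mean average precision is then the average of that value over all query trials. The normalization, the function names, and the input format (one list of relevance flags per trial) are assumptions. The paper's exact definition may differ, and the coverage ratio is not sketched here.

```python
from typing import List, Sequence


def precision_at(relevance: Sequence[bool], i: int) -> float:
    """Fraction of the top-i retrieved pairs that belong to the query's change class."""
    return sum(relevance[:i]) / i


def average_precision_at(relevance: Sequence[bool], i: int) -> float:
    """Mean of precision@j over relevant ranks j <= i (relevance must hold >= i entries)."""
    hits = [precision_at(relevance, j) for j in range(1, i + 1) if relevance[j - 1]]
    return sum(hits) / len(hits) if hits else 0.0


def mean_average_precision_at(trials: List[Sequence[bool]], i: int) -> float:
    """Average of AP@i over all query trials (e.g. 20 trials per change class)."""
    return sum(average_precision_at(r, i) for r in trials) / len(trials)


# Two toy trials: relevant results at ranks 1 and 3, and no relevant results at all.
trials = [[True, False, True, False], [False, False, False, False]]
print(mean_average_precision_at(trials, 4))
```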

Figure 6 shows the precision-recall graphs obtained for the different methods, using 20 trials per category (a total of 180 trials), when i was set to {1, 2, 3, ..., 20}. As Figure 6 shows, change information retrieval based on Color Moments again outperformed the other methods in terms of precision-recall performance. The results based on Multi-all were better than those of every other method except Color Moments.
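A precision-recall curve over cutoffs i = 1, ..., 20 can be built as sketched below. After each cutoff, recall is the number of relevant pairs retrieved divided by the number of relevant pairs in the database. Precision is that same count divided by i. The per-trial curves are then averaged point by point. Two things are assumptions: that the graphs were averaged this way over the 180 trials, and the function names.

```python
from typing import List, Sequence, Tuple

Curve = List[Tuple[float, float]]  # (recall, precision) for i = 1 .. max_rank


def precision_recall_curve(relevance: Sequence[bool], n_relevant: int, max_rank: int = 20) -> Curve:
    """(recall, precision) after each of the first max_rank results of one query."""
    points: Curve = []
    hits = 0
    for i in range(1, max_rank + 1):
        hits += relevance[i - 1]  # True counts as 1
        points.append((hits / n_relevant, hits / i))
    return points


def average_curves(curves: List[Curve]) -> Curve:
    """Point-wise mean over trials at each cutoff i."""
    n = len(curves)
    return [
        (sum(c[k][0] for c in curves) / n, sum(c[k][1] for c in curves) / n)
        for k in range(len(curves[0]))
    ]


# One toy query with 4 relevant pairs in the database, evaluated up to rank 4.
curve = precision_recall_curve([True, True, False, True], n_relevant=4, max_rank=4)
print(curve)
```

Averaging at fixed cutoffs, rather than at fixed recall levels, matches the way i is varied in the text. Interpolated precision at standard recall levels would be an equally valid alternative.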

The Color Moments method achieved the highest coverage ratios and mean average precision values, as well as the best precision-recall performance. This does not mean, however, that it will necessarily produce good results on other databases, because a single feature cannot always express image content precisely. The time needed for feature extraction was 2.13 s. The retrieval time depends on the scale of the data; in this study, it was about 3.5 s. These times were achieved without big data processing methods such as parallel computing.

4.2. Comparative Performance of Different Features for Different Retrieval Cases

The change information retrieval performance of the different features was evaluated for each ground object change class. Table 5 and Table 6 show the coverage ratios and mean average precisions obtained when i was set to 2, using 20 trials for each change class in the remote sensing image database. According to Table 5 and Table 6, the retrieval results of the different features varied across change classes:

- Color Moments still achieved good coverage ratios and mean average precisions in most cases.
- For the AGR2WAT class, HSV-HIST, Multi-Color, and Multi-all all outperformed Color Moments.
- For the BAR2WAT class, the Fast Wavelet method gave the highest coverage ratio and mean average precision.
- Multi-all achieved higher coverage ratios and mean average precisions than any other single or combined feature except Color Moments.

Figure 7 shows the precision-recall graphs obtained for the different ground-change classes using the Multi-all method. These use 20 trials per category with i set to {1, 2, 3, ..., 20}, giving a total of 180 trials on the remote sensing image database. As Figure 7 shows, this method gave the best change information retrieval results for the AGR2BAR class. Good results were also obtained for WAT2BUI, BAR2AGR, AGR2WAT, and WAT2BAR, and performance was worst for the BAR2BUI class. These results show that it is hard to obtain satisfactory retrieval results for all change classes with a single combined-feature model. In future work, pre-learning mechanisms and relevance feedback (RF) methods should be introduced to bridge the semantic gap between low-level visual features and high-level semantic content. These methods should improve retrieval performance for the different ground-change classes.

4.3. Search Examples

To illustrate the effectiveness of our approach for querying target pairs of remote sensing images, we provide screenshots from our new data service system operating on the remote sensing image archive.

Figure 8 shows a typical query pair of “WAT2BUI” images (Figure 8a) and the corresponding image pairs retrieved by the proposed method using the Multi-all feature. Figure 8b shows the retrieved pairs in the order of retrieval. Figure 9 shows a typical query pair of “BAR2BUI” images (Figure 9a) and the corresponding image pairs retrieved by the proposed method using Color Moments. These figures show that, by using multiple features, the new method produces promising results.