Complying with Privacy Legislation: From Legal Text to Implementation of Privacy-Aware Location-Based Services

Abstract

1. Introduction

2. Related Work

2.1. Location Privacy: Definitions and Concepts

2.2. Location Data Privacy Issues in LBS and the Role of the General Data Protection Regulation (GDPR)

2.3. Summary

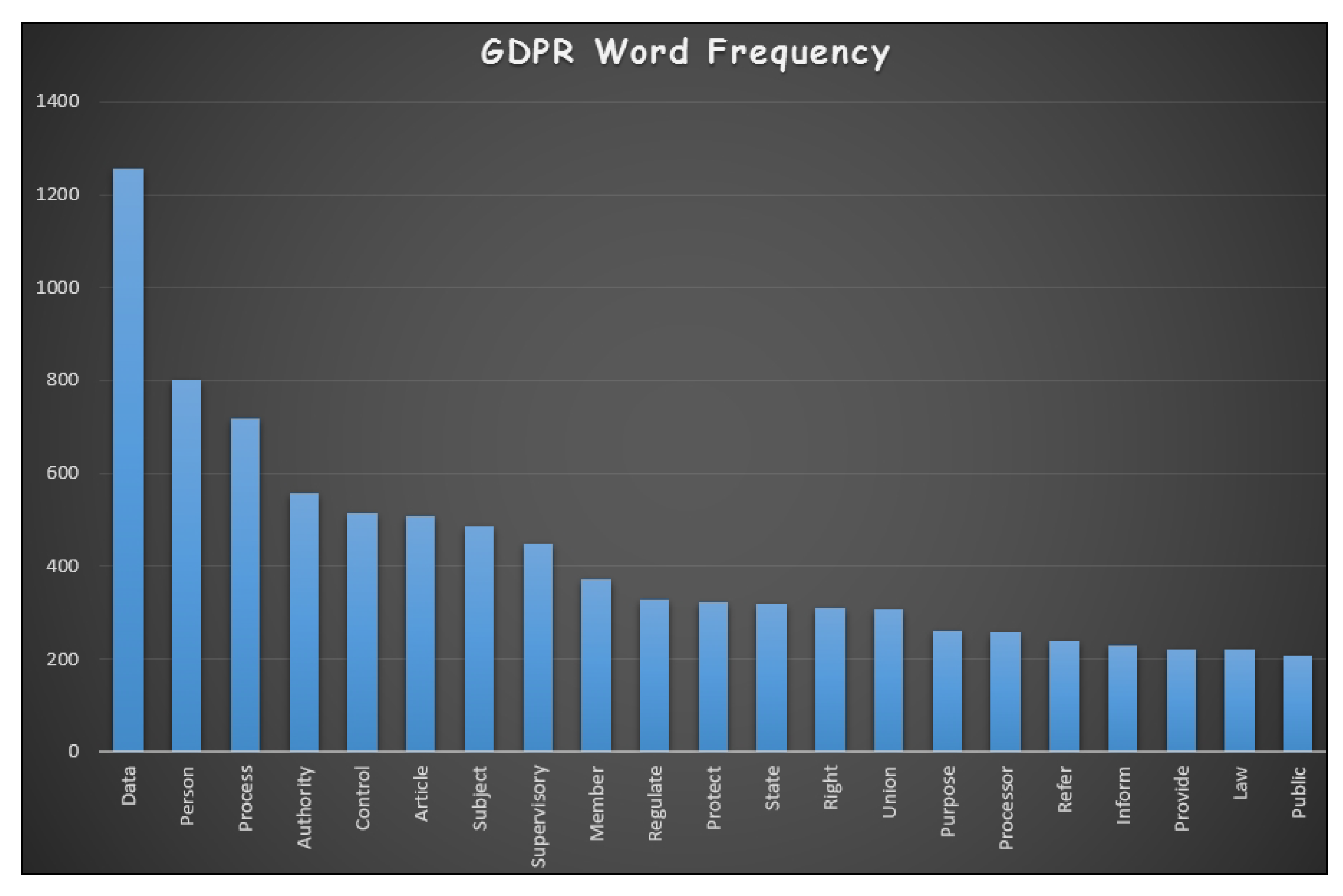

3. Analysis of the GDPR

- “Personal data” means any information relating to an identified or identifiable natural person.

- “Data subject” is a natural person who can be identified, directly or indirectly, in particular by reference to an identifier such as a name, an identification number, location data, an online identifier or to one or more factors specific to the physical, physiological, genetic, mental, economic, cultural or social identity of that natural person.

- “Controller” means the natural or legal person, public authority, agency or other body which, alone or jointly with others, determines the purposes and means of the processing of personal data; where the purposes and means of such processing are determined by Union or Member State law, the controller or the specific criteria for its nomination may be provided for by Union or Member State law.

- “Processor” means a natural or legal person, public authority, agency or other body which processes personal data on behalf of the controller.

- “Processing” means any operation or set of operations which is performed on personal data or on sets of personal data, whether or not by automated means, such as collection, recording, organisation, structuring, storage, adaptation or alteration, retrieval, consultation, use, disclosure by transmission, dissemination or otherwise making available, alignment or combination, restriction, erasure or destruction.

- “Profiling” means any form of automated processing of personal data consisting of the use of personal data to evaluate certain personal aspects relating to a natural person, in particular to analyse or predict aspects concerning that natural person’s performance at work, economic situation, health, personal preferences, interests, reliability, behaviour, location or movements.

- “Personal data breach” means a breach of security leading to the accidental or unlawful destruction, loss, alteration, unauthorised disclosure of, or access to, personal data transmitted, stored or otherwise processed.

3.1. Notice

3.2. Consent

3.3. Control

- Access—according to Art 15: The data subject shall have the right to obtain from the controller confirmation as to whether or not personal data concerning him or her are being processed (see Appendix A for the listed conditions according to Art 15).

- Rectification—Art 16: The data subject shall have the right to obtain from the controller without undue delay the rectification of inaccurate personal data concerning him or her.

- Erasure (right to be forgotten)—Art 17: The controller shall have the obligation to erase personal data without undue delay where one of the grounds listed in Appendix A applies.

- Restriction of processing—Art 18: The data subject shall have the right to obtain from the controller restriction of processing where one of the following grounds listed in Appendix A applies.

- Data portability—Art 20: The data subject shall have the right to receive the personal data concerning him or her, which he or she has provided to a controller, in a structured, commonly used and machine-readable format and have the right to transmit those data to another controller without hindrance from the controller to which the personal data have been provided.

- Object—Art 21: The data subject shall have the right to object, on grounds relating to his or her particular situation, at any time to processing of personal data concerning him or her which is based on point (e) or (f) of Article 6(1). See Appendix A for the details.
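The six control rights above can be read as a small set of operations that a location-based service exposes to its users. The following is a minimal sketch of that reading, not a compliance tool: the class `LocationStore`, its method names, and the record schema are all hypothetical, and the legal tests are heavily simplified (for instance, an objection here simply stops further collection, whereas the regulation lets a controller demonstrate compelling legitimate grounds).

```python
from dataclasses import dataclass, field, asdict
import json


@dataclass
class LocationRecord:
    """One stored position fix for a data subject (hypothetical schema)."""
    lat: float
    lon: float
    recorded_at: str  # ISO 8601 timestamp


@dataclass
class SubjectData:
    records: list = field(default_factory=list)
    restricted: bool = False  # restriction of processing requested (Art 18)
    objected: bool = False    # subject objected to processing (Art 21)


class LocationStore:
    """Toy in-memory, controller-side store illustrating the control rights."""

    def __init__(self):
        self._subjects = {}

    def add(self, subject_id, lat, lon, recorded_at):
        data = self._subjects.setdefault(subject_id, SubjectData())
        # Simplification: refuse new records while a restriction or
        # objection is active.
        if data.restricted or data.objected:
            return False
        data.records.append(LocationRecord(lat, lon, recorded_at))
        return True

    def access(self, subject_id):
        """Access (Art 15): confirm whether data are held and return a copy."""
        data = self._subjects.get(subject_id)
        records = [asdict(r) for r in data.records] if data else []
        return {"processed": bool(records), "records": records}

    def rectify(self, subject_id, index, lat, lon):
        """Rectification (Art 16): correct an inaccurate record."""
        record = self._subjects[subject_id].records[index]
        record.lat, record.lon = lat, lon

    def erase(self, subject_id):
        """Erasure: delete everything held about the subject."""
        self._subjects.pop(subject_id, None)

    def restrict(self, subject_id, on=True):
        """Restriction of processing (Art 18)."""
        self._subjects.setdefault(subject_id, SubjectData()).restricted = on

    def export(self, subject_id):
        """Data portability: structured, machine-readable export (JSON here)."""
        return json.dumps(self.access(subject_id)["records"], indent=2)

    def object_to_processing(self, subject_id):
        """Object (Art 21): record the objection."""
        self._subjects.setdefault(subject_id, SubjectData()).objected = True
```

JSON is used for the export only because it is one common example of a structured, machine-readable format; the regulation does not prescribe a particular one.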

3.4. Summary

4. Expert Interviews

4.1. Participants

4.2. Procedure

4.3. Results

4.3.1. Challenges Regarding the Implementation of GDPR

- User-friendliness: Not surprisingly, participants mentioned implementing GDPR without placing an unmanageable burden on users as an issue. E1 and E6 pointed to the difficulty of implementing GDPR while avoiding complex interaction or an overwhelming number of alerts and notifications. In addition, E1, E2 and E5 stressed the importance of communicating privacy-related issues to end users in a simple way.

- Awareness: Another challenge that experts considered important was raising awareness about the need to think about data protection early during application development. E4 believed that start-ups and some businesses do not have enough resources to consider privacy-related issues from the beginning stages of system development. E1’s thoughts on prioritising privacy-related issues were in line with E4’s.

- Technical considerations: Technically realising the requirements of the regulation is a further challenge. According to E5 and E6, guaranteeing anonymisation is difficult with the currently available technology options.

- Lack of specific guidelines: E5, who is a founder of a company developing IoT devices, said, “In my company, we have developers and we are trying to develop software solutions and we have not found any guidelines that we can use”. A similar concern was raised by all other participants.

- Reasons for compliance with GDPR: This was another challenge, mentioned from different perspectives. Participants raised concerns about the need for proper education regarding the importance of complying with GDPR (similar statements by E1, E3 and E5). E1, for example, stated that “designers need to be told clearly … about compliance with GDPR…, designers ought to know the purpose of compliance, designers should know the context and reasons”. E3 also said that “we can explain to designers what kind of value they think they should include or how to include those values” to comply with GDPR. The overall difficulty was to find a way to provide developers and designers with explanations and reasons beyond avoiding fines and punishment.

4.3.2. Approaches to Address Implementation Challenges

- A group of experts: Building a group consisting of legal and technical experts was suggested by E3 and E4. Both argued that addressing GDPR-related challenges is not simple and therefore cannot be left to single individuals. E4 suggested including “lawyers, data processors, those who are aware about how information flows in a company, IT people, data ethics experts, those with humanistic training, or philosophers, or those who have legal ethics as their main concern, so this should be a multidisciplinary discussion”. Communication among members of such a group can also be challenging, and E3 suggested that assigning one individual with knowledge of both the legal and technical aspects of the development process could facilitate it.

- Customised guidelines: All experts agreed on the importance of developing specific guidelines for each company or service provider. E1 said: “there has to be a guideline for designing interactive systems, that is fundamental, this is technical, then it is the discussion of system requirements that must be designed with features supporting the implementation of GDPR, then you need certain visual and graphical palettes based on a company’s visual brand, that is related to the company’s design guidelines”.

- Contextualisation: The best way to communicate privacy-related concepts to users is through simplified but tangible methods (mentioned by E1, E2, E3 and E5). E1 called this “wise notification”, and E3 believed that if consent is not fully understandable for users, it can turn into a tool for service providers to obtain permission for processing user data without protecting the users’ privacy.

4.3.3. Integrating GDPR Considerations into the Development Process

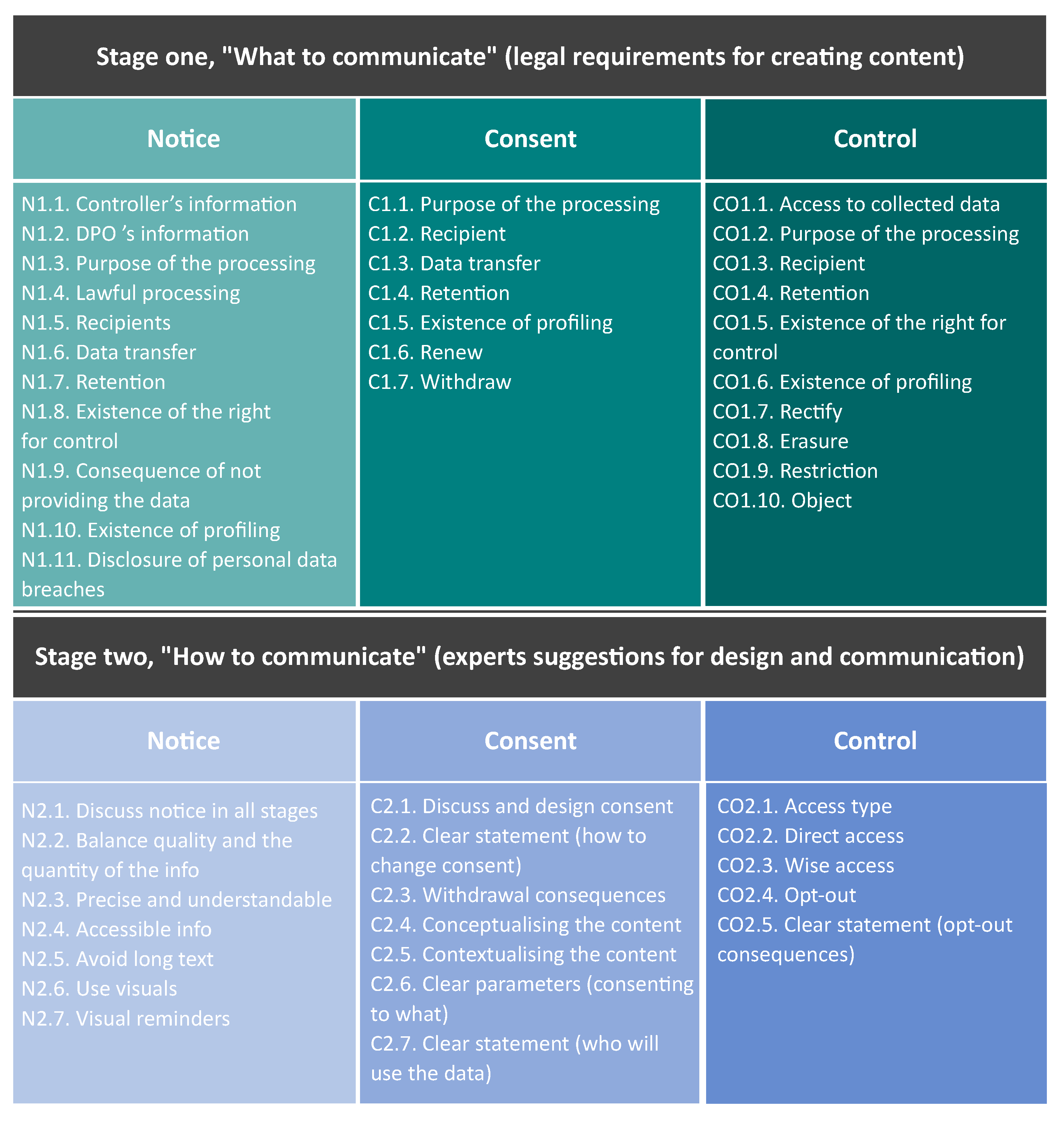

5. Guidelines for Realising GDPR-Compliant Implementations

5.1. Notice

5.1.1. Content Stage—Notice (N1)

5.1.2. Communication Stage—Notice (N2)

5.2. Consent

5.2.1. Content Stage—Consent (C1)

5.2.2. Communication Stage—Consent (C2)

5.3. Control

5.3.1. Content Stage—Control (CO1)

5.3.2. Communication Stage—Control (CO2)

5.4. Applying the Guidelines during Development

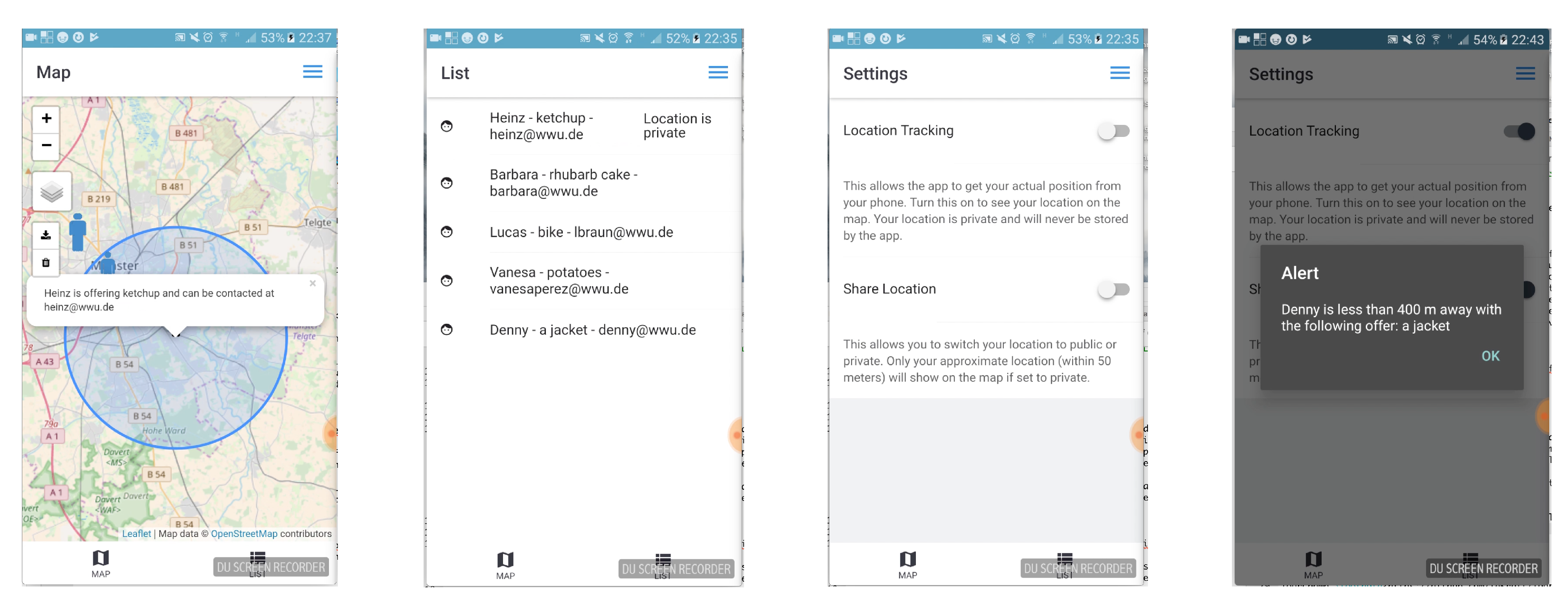

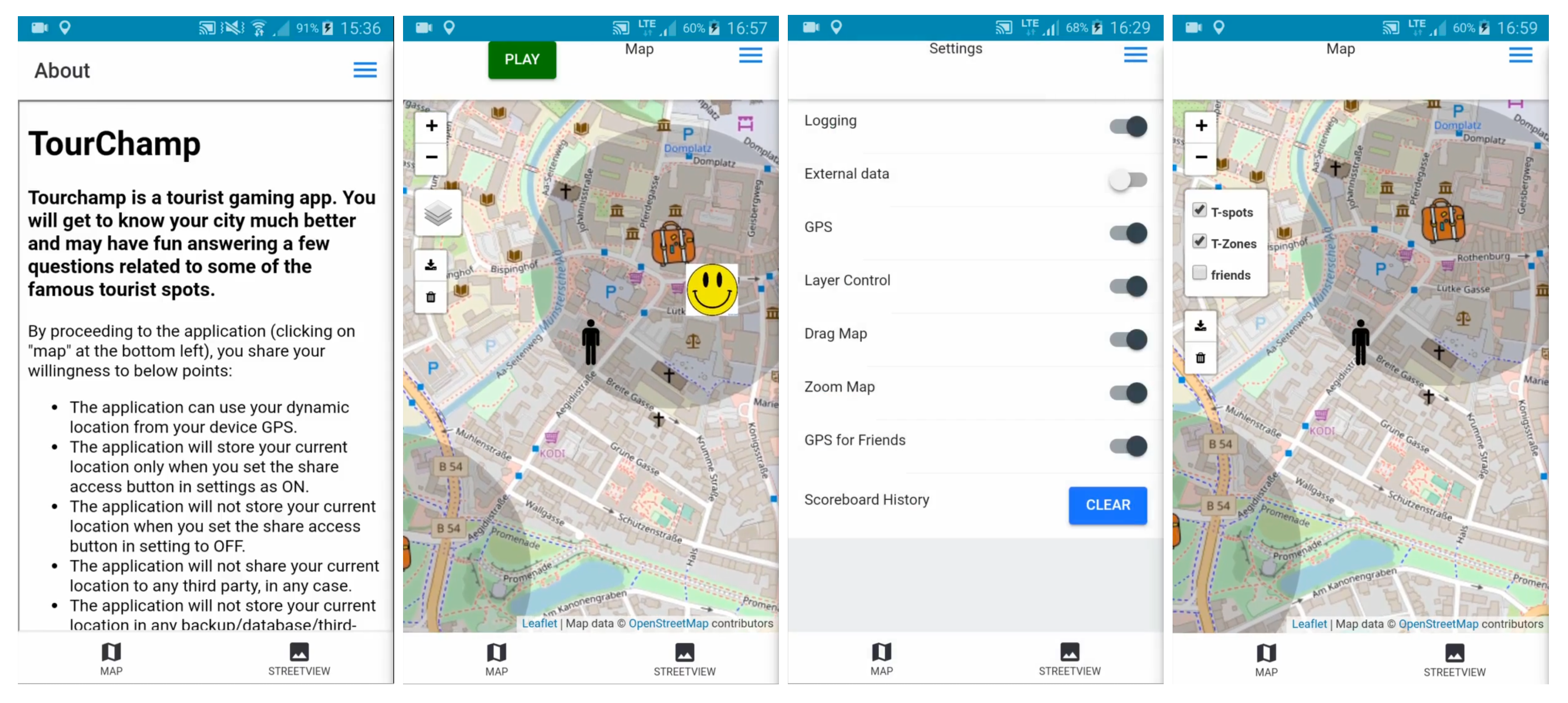

6. Guidelines in Practice: A Take-Home Study

6.1. Participants

6.2. Materials and Procedure

6.3. Results

6.3.1. Implementations

6.3.2. Location Privacy Features

6.3.3. Usability Guidelines

6.3.4. Limitations and Challenges

7. Discussion

7.1. Implications and Observations

7.2. Future Work

7.3. Limitations

8. Conclusions

Author Contributions

Funding

Acknowledgments

Conflicts of Interest

Abbreviations

| Abbreviation | Meaning |
|---|---|
| GDPR | General Data Protection Regulation |
| IS | Information Systems |
| LBS | Location-Based Services |
| UI | User Interface |
| NCC | Notice, Consent, Control |
| DPO | Data Protection Officer |

Appendix A. Summarised Analysis of NCC Factors

Appendix A.1. Notice

Appendix A.2. Consent

Appendix A.3. Control

- Access—according to Art. 15: The data subject shall have the right to obtain from the controller confirmation as to whether or not personal data concerning him or her are being processed, and where that is the case, access to the personal data and the following information: (a) the purposes of the processing; (b) the categories of personal data concerned; (c) the recipients or categories of recipient to whom the personal data have been or will be disclosed, in particular recipients in third countries or international organisations; (d) where possible, the envisaged period for which the personal data will be stored, or, if not possible, the criteria used to determine that period; (e) the existence of the right to request from the controller rectification or erasure of personal data or restriction of processing of personal data concerning the data subject or to object to such processing; (f) the right to lodge a complaint with a supervisory authority; (g) where the personal data are not collected from the data subject, any available information as to their source; (h) the existence of automated decision-making, including profiling, referred to in Article 22(1) and (4) and, at least in those cases, meaningful information about the logic involved, as well as the significance and the envisaged consequences of such processing for the data subject.

- Rectification—Art. 16: The data subject shall have the right to obtain from the controller without undue delay the rectification of inaccurate personal data concerning him or her. Taking into account the purposes of the processing, the data subject shall have the right to have incomplete personal data completed, including by means of providing a supplementary statement.

- Erasure (“right to be forgotten”)—Art. 17: The data subject shall have the right to obtain from the controller the erasure of personal data concerning him or her without undue delay and the controller shall have the obligation to erase personal data without undue delay where one of the following grounds applies: (a) the personal data are no longer necessary in relation to the purposes for which they were collected or otherwise processed; (b) the data subject withdraws consent on which the processing is based according to point (a) of Article 6(1), or point (a) of Article 9(2), and where there is no other legal ground for the processing; (c) the data subject objects to the processing pursuant to Article 21(1) and there are no overriding legitimate grounds for the processing, or the data subject objects to the processing pursuant to Article 21(2); (d) the personal data have been unlawfully processed; (e) the personal data have to be erased for compliance with a legal obligation in Union or Member State law to which the controller is subject; (f) the personal data have been collected in relation to the offer of information society services referred to in Article 8(1).

- Restriction of processing—Art 18: The data subject shall have the right to obtain from the controller restriction of processing where one of the following applies: (a) the accuracy of the personal data is contested by the data subject, for a period enabling the controller to verify the accuracy of the personal data; (b) the processing is unlawful and the data subject opposes the erasure of the personal data and requests the restriction of their use instead; (c) the controller no longer needs the personal data for the purposes of the processing, but they are required by the data subject for the establishment, exercise or defence of legal claims; (d) the data subject has objected to processing pursuant to Article 21(1) pending the verification whether the legitimate grounds of the controller override those of the data subject.

- Data portability—Art. 20: The data subject shall have the right to receive the personal data concerning him or her, which he or she has provided to a controller, in a structured, commonly used and machine-readable format and have the right to transmit those data to another controller without hindrance from the controller to which the personal data have been provided.

- Object—Art. 21: The data subject shall have the right to object, on grounds relating to his or her particular situation, at any time to processing of personal data concerning him or her which is based on point (e) or (f) of Article 6(1), including profiling based on those provisions. The controller shall no longer process the personal data unless the controller demonstrates compelling legitimate grounds for the processing which override the interests, rights and freedoms of the data subject or for the establishment, exercise or defence of legal claims. Where personal data are processed for direct marketing purposes, the data subject shall have the right to object at any time to processing of personal data concerning him or her for such marketing, which includes profiling to the extent that it is related to such direct marketing.
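The erasure grounds (a) to (f) in the list above lend themselves to a checklist. The sketch below encodes them as boolean inputs and returns the grounds that apply. All names (`ErasureGround`, `ErasureRequest`, `applicable_grounds`, `must_erase`) are hypothetical, each flag stands in for a legal assessment that a person would have to make, and the sketch ignores the exceptions that the regulation also provides.

```python
from dataclasses import dataclass
from enum import Enum


class ErasureGround(Enum):
    """Grounds (a) to (f) as listed under Erasure above."""
    NO_LONGER_NECESSARY = "a"
    CONSENT_WITHDRAWN = "b"
    OBJECTION = "c"
    UNLAWFUL_PROCESSING = "d"
    LEGAL_OBLIGATION = "e"
    INFORMATION_SOCIETY_SERVICES = "f"


@dataclass
class ErasureRequest:
    """Facts about a request; every field is an assumed, pre-assessed input."""
    no_longer_necessary: bool = False
    consent_withdrawn: bool = False
    other_legal_ground: bool = False             # relevant to ground (b)
    objected: bool = False                       # objection under Art 21(1)
    overriding_legitimate_grounds: bool = False  # relevant to ground (c)
    objected_to_direct_marketing: bool = False   # objection under Art 21(2)
    unlawfully_processed: bool = False
    erasure_legally_required: bool = False
    collected_via_art8_service: bool = False


def applicable_grounds(req: ErasureRequest) -> list:
    """Return the erasure grounds that apply, in order (a) to (f)."""
    grounds = []
    if req.no_longer_necessary:
        grounds.append(ErasureGround.NO_LONGER_NECESSARY)
    # (b) applies only when no other legal ground supports the processing.
    if req.consent_withdrawn and not req.other_legal_ground:
        grounds.append(ErasureGround.CONSENT_WITHDRAWN)
    # (c) a general objection can be overridden; a marketing objection cannot.
    if (req.objected and not req.overriding_legitimate_grounds) \
            or req.objected_to_direct_marketing:
        grounds.append(ErasureGround.OBJECTION)
    if req.unlawfully_processed:
        grounds.append(ErasureGround.UNLAWFUL_PROCESSING)
    if req.erasure_legally_required:
        grounds.append(ErasureGround.LEGAL_OBLIGATION)
    if req.collected_via_art8_service:
        grounds.append(ErasureGround.INFORMATION_SOCIETY_SERVICES)
    return grounds


def must_erase(req: ErasureRequest) -> bool:
    """Erasure is owed as soon as at least one ground applies."""
    return bool(applicable_grounds(req))
```

Returning the list of grounds rather than a bare boolean makes it possible to log why data were erased, which may help when documenting decisions.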

Appendix B. Expert Interviews’ Scripts

| Participant ID | Brief Biography |
|---|---|
| E1 | Professor and consultant working on design ethics |
| E2 | E-Governance evangelist, currently part of an expert group advising a European city council on matters related to the implementation of GDPR in relation to government and public services |
| E3 | Assistant professor working on projects related to data justice, and in particular data ethics |
| E4 | Lawyer, currently advises companies in different sectors such as banking, insurance and transportation on how to implement GDPR |
| E5 | Professor and co-founder of an IoT (Internet of Things) company, which develops solutions for smart cities |
| E6 | Professor, currently working closely with municipalities on the implementation of smart city initiatives |

© 2018 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Ataei, M.; Degbelo, A.; Kray, C.; Santos, V. Complying with Privacy Legislation: From Legal Text to Implementation of Privacy-Aware Location-Based Services. ISPRS Int. J. Geo-Inf. 2018, 7, 442. https://doi.org/10.3390/ijgi7110442
