1. Introduction

A 360° panoramic walkthrough system is a kind of virtual reality technology based on sequenced panoramic images. The image-based method enables walking through a space much more easily than 3D modeling and rendering does. It is widely used in 3D visualization, virtual reality and augmented reality because of its easy data acquisition and processing, low bandwidth requirements, high resolution, strong authenticity and good navigation on cheap hardware display devices [1]. Panoramic view is a new type of map service that displays 360° panoramic images taken in cities, streets, museums and other places in the form of a 3D street view based on 360° panorama technology. Users can use street view services to obtain an immersive experience by roaming around the 360° 3D simulated scene without visiting the place. These services overcome the shortcoming of a traditional map, which is incapable of delivering a real scene. Thus, street view services are highly significant for applications research [2].

Since panoramic image acquisition occurs at a limited number of discontinuous sites, a 360° panoramic walkthrough system has two main problems compared to a general virtual reality system. First, the observation is limited to a few specific roaming points. Users cannot move freely in the scene, and wandering is possible only around a single viewpoint at a time. Second, skipping occurs between roaming points, and the transition is unnatural, which seriously affects the walkthrough experience [3,4]. It is difficult to solve the former problem without increasing the density of panorama stations or building a true 3D model. The latter problem can be mitigated by the visual effect of a smooth transition between the viewpoints, which is the subject of this paper.

The smooth transition between the viewpoints can be used in compressing video sequences with high compression rates, virtual tourism and entertainment, 3D scene displays of real estate and public security, etc. The smooth transition algorithm for adjacent panoramic viewpoints is crucial for improving the user experience of the 360° panoramic roaming system, the practicability of indoor panoramic exhibition applications, and the usability of indoor panoramic navigation applications.

A survey of current panoramic systems shows several kinds of visual smoothing methods used in panoramic site transitions:

- (1) Skipping directly from one panorama site to another severs the relationships between the panoramic sites. The large flicker in the scenes can disorient the user. Unfortunately, most of the existing panoramic walkthrough systems use this skip mode to transition between adjacent sites without any strategies to mitigate this poor visual experience [5]. To achieve a smooth transition between adjacent panorama sites, four common transition methods have been studied in previous research: the texture transparent gradient, noise interference, model stretching and parallax.

- (2) The texture transparent gradient method reduces the flickering by linearly interpolating a series of panoramic images and fusing the colors of the two panorama sites [6]. This method is simple and easy to implement, and it has steady visual effects at the beginning and end of the transition. However, obvious ghosting occurs when the transition approaches the halfway point. In some game scenarios, noise interference is a common method of scene transition. The method generates some “noise” in the field of vision to distract the user’s attention from the unnatural scene transition; the “noise” is usually jitter, distortion, or a change in the hue of the pixels on the screen [7]. The method is essentially a visual deception, and its effect is limited.

- (3) The model stretching method is based on the simple assumption that a feeling of “going in” can be produced by stretching the scene from the center of the view to the periphery, since objects in the visible area are magnified to different degrees as they get closer to the viewpoint during the transition between sites. It can give the user a sense of moving forward using a simple image stretching process. This method can be applied to outdoor scenes with a broad perspective and relatively large distances from objects to the viewpoint, especially street views with a layout arranged along the road [8,9]. However, because indoor spaces have more occlusion and smaller distances from objects to the viewpoint, the stretching treatment gives an exaggerated sense of spatial displacement that is unnatural and unrealistic.

- (4) In recent years, parallax based on human eye perception has been introduced into panoramic image roaming to improve the transitional effect, which includes full parallax, tour into the picture and fake parallax [10,11]. The full parallax method requires the support of fine 3D models, but the general panoramic walkthrough system does not have the necessary data. The fake parallax method only extracts the rough geometric information and the color layer of the background, and it simulates the parallax effect using a simple translation and zooming. Parallax is used mainly to smooth the observations from moving around while keeping eyes on an object. There are some marked differences in the direction and scale of motion between moving around and panorama scene transition.

To solve the problems of the destroyed spatial relationships among the panorama stations, and the disorientation caused by the inability of the user to perceive the change in position during panorama scene transition, a dynamic panoramic image rendering algorithm for smooth transitions between adjacent viewpoints in indoor scenes is proposed in this paper. Based on the principle of using homonymous points to guide graphics deformation, pairs of matched triangular patches are formed by homonymous points; then, a smooth interpolation of shape and texture is made with triangular patches as units to dynamically generate a panorama for each frame. This method dynamically constructs the sequence panorama with motion sensing during the transition. This makes the scene transition as real as walking in a three-dimensional scene, and effectively avoids the spatial disorientation.

Experiments are performed using indoor panoramic images collected by different equipment and at different distances between stations. The results show that the proposed method can realize adaptive smooth transitions between different scenes with a rendering efficiency of nearly 50 fps (frames per second), which effectively enhances the interactivity and immersion of the panoramic roaming system. Compared with similar research work, the distinguishing contributions of this paper are as follows:

- (1) In the indoor space with a relatively regular layout but serious occlusion, instead of drawing matching points manually [12], we construct matched triangular patches by using the method of obtaining feature points and lines after panoramic orthophoto projection.

- (2) We use barycentric coordinates to perform the panoramic transition algorithm directly on the spherical panoramic model, instead of the transition of the cylindrical panoramic image [13] and the cube panoramic image [14].

- (3) The idea of our panoramic transition algorithm is similar to [15], but we constructed a panoramic system with a panoramic deformation layer, and analyzed its visual effect, practicability and operation performance in detail.

To illustrate the problem of the unnatural panoramic transition and to help readers better understand the dynamic panorama transition method, the videos named “problem of panoramic transition.mp4” (https://youtu.be/R_lW1Xz8QNc) and “smooth panoramic transition using matched triangular patches.mp4” (https://youtu.be/xQyqiUuLPCI) are provided as attachments for readers.

2. Methods

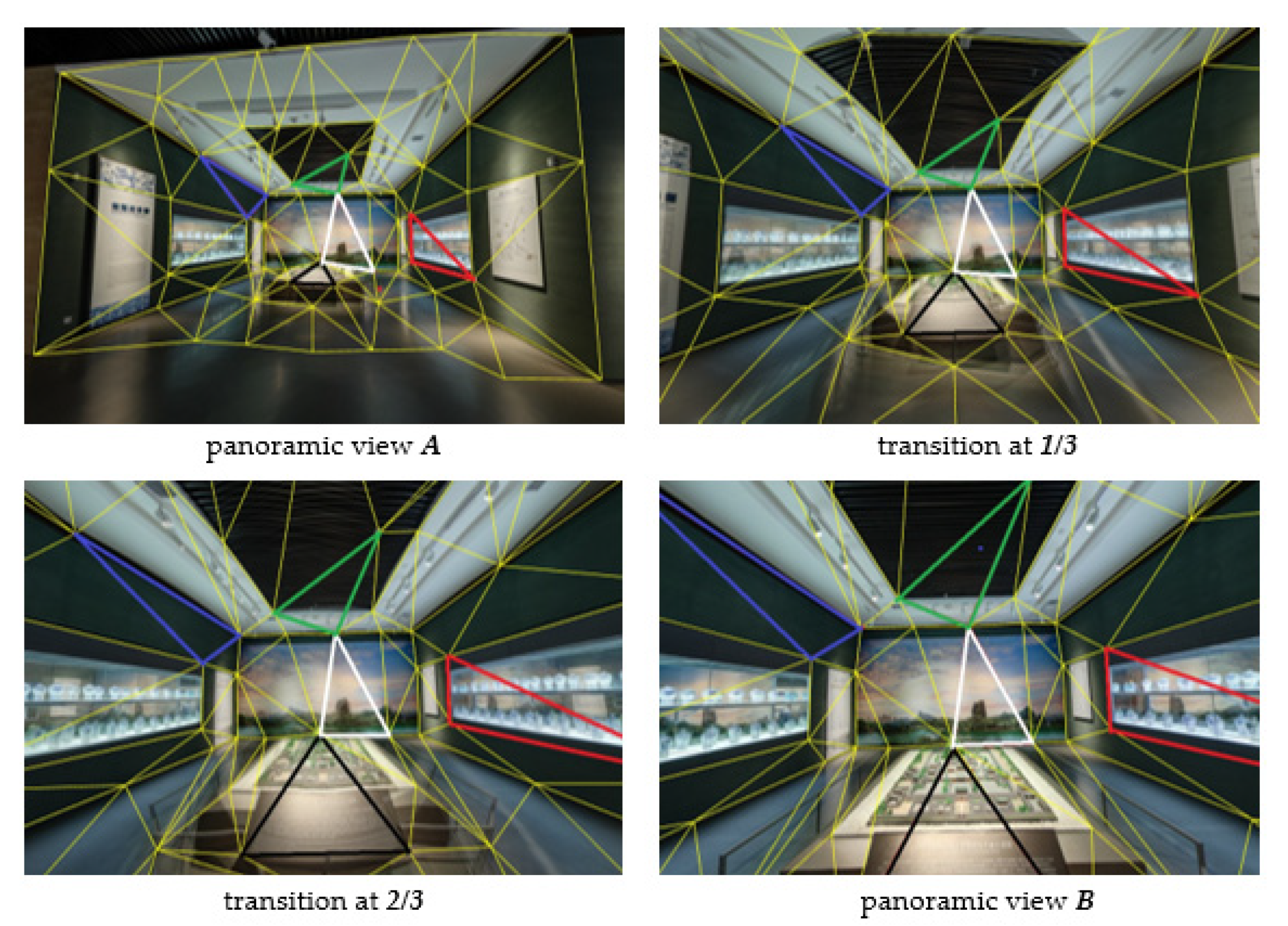

To overcome the jumping between the panoramic images of adjacent viewpoints, the graphics deformation principle is applied to the matched triangles in the adjacent panoramic images. The panoramic images are divided into many matched triangular patches, which are then deformed to realize smooth transitions between the panoramic images of adjacent viewpoints. The schematic diagram of the basic principle is shown in Figure 1. Transitional frames are generated by the smooth interpolation of the shapes and textures of all matched triangular patches.

As Figure 1 shows, the panorama scene transition from the current site to the next site is controlled by the transition of the yellow triangular patches, whose blended texture is formed by fading out the current panorama A and fading in the next panorama B. The white triangle in the center of the view is enlarged with a slight deformation, but the red, blue, green, and black triangles are obviously stretched because of the transverse Mercator projection.

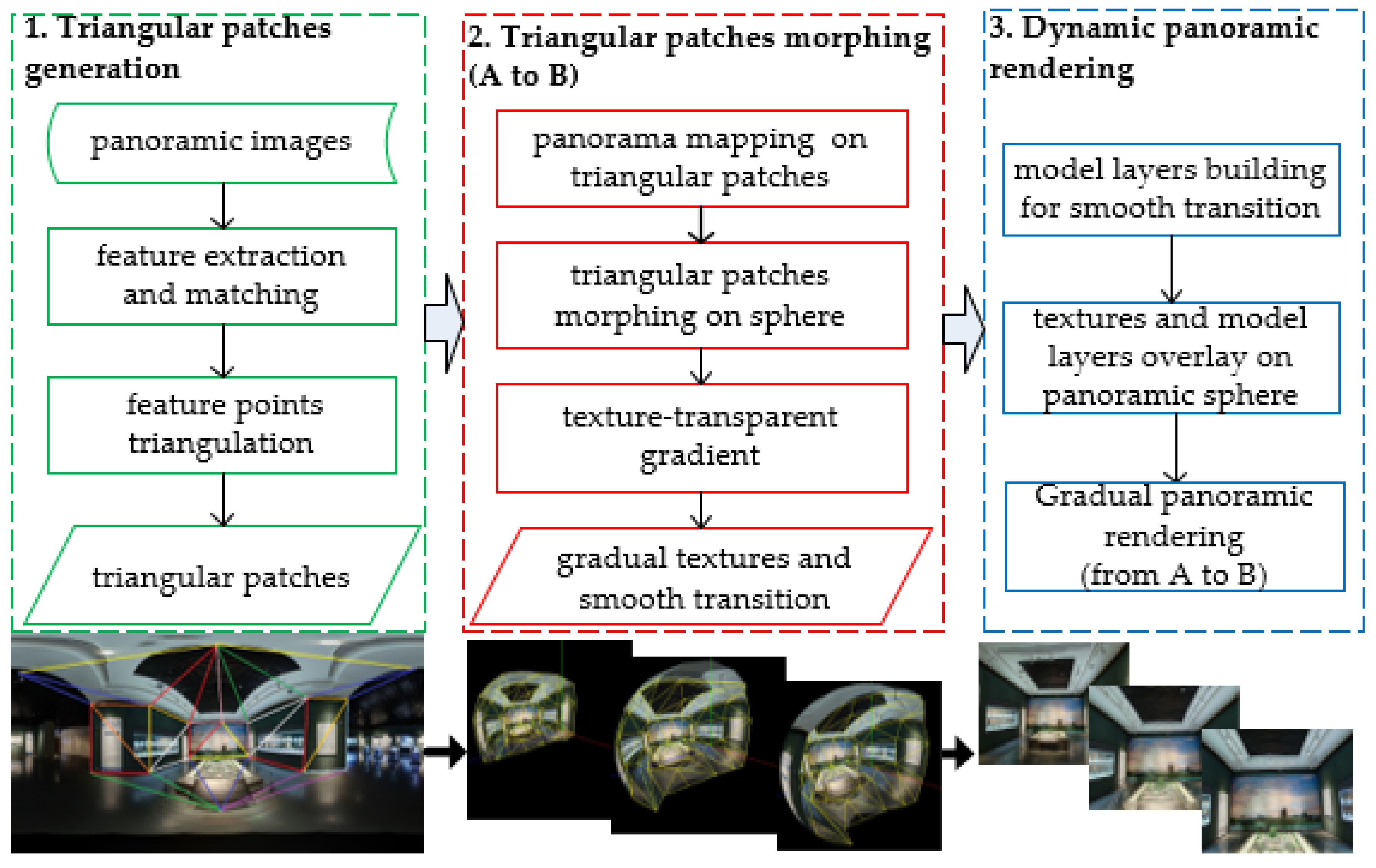

To achieve a smooth panorama transition between adjacent viewpoints with frame rates no lower than what human visual perception requires, the production workflow is designed as shown in Figure 2. The proposed method is divided into three parts. First, the triangular patches for controlling transitions are generated from the feature points of the panoramas by a triangulation algorithm that maximizes the minimum angle. This work is detailed in Section 2.1. Then, we morph the triangular patches on the surface of a 3D panorama sphere while fading out texture A and fading in texture B. The principles and algorithms involved are presented in Section 2.2 and Section 2.3. Finally, a strategy for implementing the panoramic walkthrough system, which is equipped with a dynamic panoramic image rendering algorithm for smooth transitions between adjacent viewpoints by overlaying the morph model layer, is described in Section 2.4.

2.1. Generation of Matched Delaunay Triangular Patches

The solutions to the panoramic image matching problem can be roughly divided into three categories. First, Bay et al. [16] directly applied the existing techniques for general frame image processing to panoramic images. The algorithm is simple, but the special geometric deformation of the panoramic image has a profound influence on the matching results. Second, the feature extraction and various operations are performed directly in the spherical scale space; the matching accuracy is high, but the model is complex and difficult to implement. In [17], a rigorous spherical geometry model was adopted to map the panoramic image onto the sphere, the image function was expressed in the form of a spherical harmonic function, and the operations were carried out in the spherical scale space. Third, Mauthner et al. [18] proposed a virtual imaging surface for matching that is generated by resampling the panoramic image according to the perspective projection model, but the obtained feature matching is relatively limited.

In this paper, homonymous points are obtained with reference to the virtual imaging surface method (the third solution above), as shown in Figure 3.

First, the panoramic image is projected onto a cube to form six local planes, and the homonymous feature points are extracted by the classical SIFT (scale-invariant feature transform) on all local planes. Then, a back projection converts these points back into the image coordinates. Finally, based on the preferred homonymous points, the Delaunay triangulation algorithm is used to generate the triangular patches [19]. It tends to avoid sliver triangles because it maximizes the minimum angle of all the angles of the triangles in the triangulation. Moreover, a Delaunay triangulation for a given set P of discrete points in a plane is a triangulation DT(P) such that no point in P is inside the circumcircle of any triangle in DT(P). This ensures that no control faces intersect.
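The empty-circumcircle property quoted above can be checked directly. The following is a minimal sketch in plain Python, not the triangulation code used in the paper; the function names `circumcircle_contains` and `is_delaunay` and the sample points are illustrative assumptions.

```python
def circumcircle_contains(a, b, c, p):
    """Return True if p lies strictly inside the circumcircle of triangle abc."""
    # Sign of the triangle's orientation (positive when a, b, c are counter-clockwise).
    orient = (b[0] - a[0]) * (c[1] - a[1]) - (b[1] - a[1]) * (c[0] - a[0])
    ax, ay = a[0] - p[0], a[1] - p[1]
    bx, by = b[0] - p[0], b[1] - p[1]
    cx, cy = c[0] - p[0], c[1] - p[1]
    # Standard in-circle determinant, expanded along its last column.
    det = ((ax * ax + ay * ay) * (bx * cy - by * cx)
           - (bx * bx + by * by) * (ax * cy - ay * cx)
           + (cx * cx + cy * cy) * (ax * by - ay * bx))
    return det * orient > 0


def is_delaunay(points, triangles):
    """Check that no point falls inside the circumcircle of any triangle.

    points: list of (x, y); triangles: list of index triples into points.
    """
    for i, j, k in triangles:
        for m, p in enumerate(points):
            if m in (i, j, k):
                continue
            if circumcircle_contains(points[i], points[j], points[k], p):
                return False
    return True


# Hypothetical matched feature points forming a kite-shaped quadrilateral.
pts = [(0, 0), (4, 0), (2, 1), (2, -1)]
short_diagonal = [(0, 3, 2), (3, 1, 2)]  # split along the short diagonal
long_diagonal = [(0, 1, 2), (0, 3, 1)]   # split along the long diagonal
print(is_delaunay(pts, short_diagonal), is_delaunay(pts, long_diagonal))
```

Splitting the kite along its long diagonal produces two thin triangles whose circumcircles each contain the opposite point, which is the kind of sliver configuration the Delaunay criterion rejects.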

2.2. Synchronous Morphing of the Shape and Texture

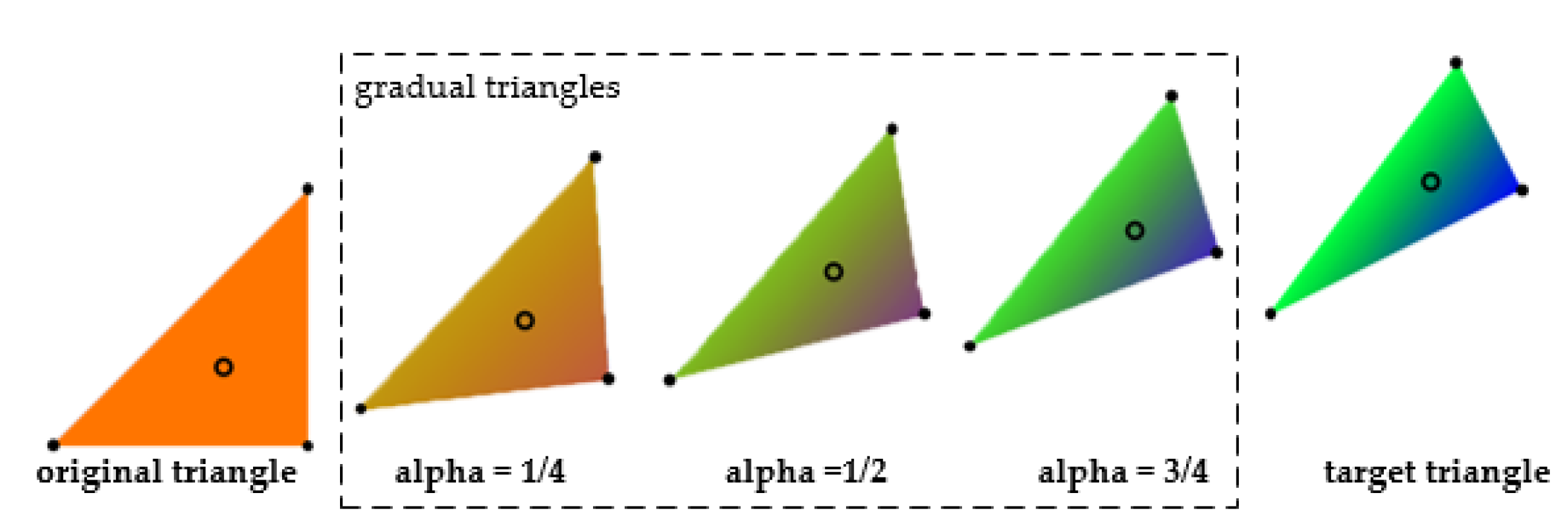

Based on the idea of the transition guided by control points, we use triangular patches as the basic control unit to transform the adjacent panorama. As the simplest surface element, a triangle’s transition is shown in Figure 4. As the progress $t$ increases from 0 to 1, a triangle’s deformation is accomplished by simultaneously changing the triangle shape and the interior point texture. The original triangle (red) is displayed on the left, the target triangle (blue and green) is displayed on the right, and the gradual triangles (from 1/4 to 1/2 to 3/4) become narrower and greener. The solid black spots indicate the movement of the triangle’s vertices, and the hollow spots express the textural interpolation of interior points.

When a triangular surface is deformed, the positions and textures of its interior points need to be changed. In the Cartesian coordinate system, this operation requires a large number of interpolation calculations. However, in the barycentric coordinate system, this operation becomes easy due to an important property: the barycentric coordinates of each point in the triangle remain unchanged during a linear deformation [20].

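According to the definition of barycentric coordinates, consider a triangle defined by its three vertices $A$, $B$, and $C$. Any interior point $P$ in ΔABC can be expressed as a linear combination of the three vertex coordinates, $P = \lambda_1 A + \lambda_2 B + \lambda_3 C$, where $\lambda_1 + \lambda_2 + \lambda_3 = 1$ and $\lambda_1, \lambda_2, \lambda_3 \geq 0$; the combination of parameters $(\lambda_1, \lambda_2, \lambda_3)$ is regarded as the barycentric coordinates of point $P$. In particular, the vertices themselves have the coordinates $(1, 0, 0)$, $(0, 1, 0)$, and $(0, 0, 1)$.

Setting an interior point $P$ with the Cartesian coordinates $(x, y)$ and the barycentric coordinates $(\lambda_1, \lambda_2, \lambda_3)$, and writing the vertices as $A = (x_A, y_A)$, $B = (x_B, y_B)$ and $C = (x_C, y_C)$, there is the following deduction:

- (1) The transformation equation from barycentric coordinates to Cartesian coordinates can be described as follows:

$$x = \lambda_1 x_A + \lambda_2 x_B + \lambda_3 x_C, \qquad y = \lambda_1 y_A + \lambda_2 y_B + \lambda_3 y_C \tag{1}$$

- (2) The transformation equation from Cartesian coordinates to barycentric coordinates can be described as follows:

$$\lambda_1 = \frac{(y_B - y_C)(x - x_C) + (x_C - x_B)(y - y_C)}{(y_B - y_C)(x_A - x_C) + (x_C - x_B)(y_A - y_C)}, \quad \lambda_2 = \frac{(y_C - y_A)(x - x_C) + (x_A - x_C)(y - y_C)}{(y_B - y_C)(x_A - x_C) + (x_C - x_B)(y_A - y_C)}, \quad \lambda_3 = 1 - \lambda_1 - \lambda_2 \tag{2}$$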
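The two conversions between barycentric and Cartesian coordinates can be sketched in a few lines of Python. This is an illustrative sketch using the standard planar formulas; the function names and the sample triangles are assumptions, not part of the paper.

```python
def cartesian_to_barycentric(p, a, b, c):
    """Barycentric coordinates (l1, l2, l3) of point p in triangle abc."""
    det = (b[1] - c[1]) * (a[0] - c[0]) + (c[0] - b[0]) * (a[1] - c[1])
    if det == 0:
        raise ValueError("degenerate triangle")
    l1 = ((b[1] - c[1]) * (p[0] - c[0]) + (c[0] - b[0]) * (p[1] - c[1])) / det
    l2 = ((c[1] - a[1]) * (p[0] - c[0]) + (a[0] - c[0]) * (p[1] - c[1])) / det
    return l1, l2, 1.0 - l1 - l2


def barycentric_to_cartesian(lam, a, b, c):
    """Cartesian point with barycentric coordinates lam in triangle abc."""
    l1, l2, l3 = lam
    return (l1 * a[0] + l2 * b[0] + l3 * c[0],
            l1 * a[1] + l2 * b[1] + l3 * c[1])


# Hypothetical origin triangle and a deformed copy of it.
tri_before = ((0.0, 0.0), (4.0, 0.0), (0.0, 4.0))
tri_after = ((10.0, 10.0), (12.0, 10.0), (10.0, 13.0))

lam = cartesian_to_barycentric((1.0, 1.0), *tri_before)
moved = barycentric_to_cartesian(lam, *tri_after)
print(lam, moved)
```

Because the barycentric triple is unchanged by the deformation, the same `lam` locates the corresponding point in the deformed triangle without any per-pixel search.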

The synchronous shape morph and the texture interpolation of a triangle can be realized by Algorithm 1 with the following steps.

- 1. At moment $t$ ($0 \leq t \leq 1$), the origin triangle $T_0$ transitions to a new position $T_t$ on the way to the target triangle $T_1$. The coordinates of its three vertices are the linear interpolation of the beginning and end positions in the Cartesian coordinate system, as in Equation (3):

$$V_t = (1 - t)\,V_0 + t\,V_1 \tag{3}$$

where $V_0$ is the beginning coordinate, $V_1$ is the end coordinate, and $V_t$ is the coordinate at moment $t$.

- 2. For each interior point $p_t$ in $T_t$, a blended texture should be calculated. To blend the texture of the origin triangle $T_0$ with the texture of the target triangle $T_1$, the corresponding positions of $p_t$ in $T_0$ and $T_1$ should be calculated by Equations (1) and (2). After we obtain the barycentric coordinates of $p_t$ in $T_t$, the Cartesian coordinates of the corresponding points $p_0$ in $T_0$ and $p_1$ in $T_1$ can then be obtained.

- 3. The texture $c_t$ of the interior point $p_t$ can be interpolated according to the corresponding textures $c_0$ and $c_1$ of $p_0$ and $p_1$. The color interpolation follows Equation (4):

$$c_t = (1 - t)\,c_0 + t\,c_1 \tag{4}$$

- 4. A temporary triangle is meshed by the new shape $T_t$ and the blended texture at moment $t$.

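Algorithm 1. Synchronously Morph the Shape and Interpolate the Texture of a Triangle

Input: the origin triangle $T_0$, the target triangle $T_1$, and the progress $t$

Begin

interpolate the three vertices of triangle $T_t$ using Equation (3)

for each interior point $p_t$ in $T_t$ do

$(x, y)$ is the Cartesian coordinate of $p_t$

$(\lambda_1, \lambda_2, \lambda_3)$ = Equation (2) applied to $(x, y)$, in $T_t$

$p_0$, $p_1$ = Equation (1) applied to $(\lambda_1, \lambda_2, \lambda_3)$, in $T_0$ and $T_1$

$c_t$ = Equation (4) applied to the textures at $p_0$ and $p_1$, the texture at $p_t$

end

build a new mesh from the shape $T_t$ and the textures $c_t$

End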
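The steps of Algorithm 1 can be sketched for a single interior point as follows. This is a minimal illustration under stated assumptions, not the authors' implementation: the names `morph_point`, `sample0` and `sample1` are hypothetical, triangles are planar vertex triples, and textures are modeled as plain functions from a position to a color tuple.

```python
def lerp(u, v, t):
    """Component-wise linear interpolation between tuples u and v."""
    return tuple((1.0 - t) * ui + t * vi for ui, vi in zip(u, v))


def to_barycentric(p, tri):
    a, b, c = tri
    det = (b[1] - c[1]) * (a[0] - c[0]) + (c[0] - b[0]) * (a[1] - c[1])
    l1 = ((b[1] - c[1]) * (p[0] - c[0]) + (c[0] - b[0]) * (p[1] - c[1])) / det
    l2 = ((c[1] - a[1]) * (p[0] - c[0]) + (a[0] - c[0]) * (p[1] - c[1])) / det
    return l1, l2, 1.0 - l1 - l2


def from_barycentric(lam, tri):
    return tuple(sum(l * v[i] for l, v in zip(lam, tri)) for i in range(2))


def morph_point(p_t, tri0, tri1, t, sample0, sample1):
    """Blended color at interior point p_t of the in-between triangle."""
    # Step 1: in-between triangle from linearly interpolated vertices.
    tri_t = tuple(lerp(v0, v1, t) for v0, v1 in zip(tri0, tri1))
    # Step 2: barycentric coordinates in tri_t give the matching points
    # in the origin and target triangles.
    lam = to_barycentric(p_t, tri_t)
    p0 = from_barycentric(lam, tri0)
    p1 = from_barycentric(lam, tri1)
    # Step 3: cross-fade the two sampled colors.
    return lerp(sample0(p0), sample1(p1), t)


# Hypothetical triangles and flat-colored textures (red fading to blue).
tri0 = ((0.0, 0.0), (4.0, 0.0), (0.0, 4.0))
tri1 = ((2.0, 0.0), (8.0, 0.0), (2.0, 8.0))
color = morph_point((8.0 / 3.0, 2.0), tri0, tri1, 0.5,
                    lambda p: (255.0, 0.0, 0.0),
                    lambda p: (0.0, 0.0, 255.0))
print(color)
```

In a real renderer this per-point work would normally be delegated to the GPU's texture interpolation; the explicit loop body is shown only to make the data flow visible.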

2.3. Transition between Adjacent Panoramic Viewpoints

The transition from panoramic site A to panoramic site B can be accomplished by dividing panoramic scenes into corresponding patches (Section 2.1), and then performing morphing operations (Section 2.2) on these patches. The process of transition from panoramic site A to B follows Algorithm 2.

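Algorithm 2. Transition the Panorama from A to B

Input: two site panorama images $I_A$, $I_B$, and the control triangular patches $M$

Begin

map $I_A$ and $I_B$ to $M$

$t = 0$: initial state

Loop: progress $t$ from 0.0 to 1.0

for each triangle $T$ in $M$ do

$T_t$ = Algorithm 1 applied to $T$ at progress $t$, on spherical coordinates

end for

$I_t$ = the collection of all $T_t$

end loop

$t = 1$: final state

End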

- 1. Initially, the panoramas of the current station and the next station, $I_A$ and $I_B$, are each mapped to the control triangular patches $M$ to initialize them. The current view shows the panoramic site A, meaning that $I_A$ is fully visible and $I_B$ is completely transparent.

- 2. At each moment $t$ during the transition (more than 50 frames), each triangle in the control triangular patches transitions to a new status $T_t$ using Algorithm 1. Stitching all new-status triangles together forms the current frame, which updates the panoramic view.

- 3. The end state shows the panoramic site B, with $I_B$ fully visible and $I_A$ completely transparent.

The middle textures $I_t$ are formed by collecting all $T_t$.
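The frame loop of Algorithm 2 can be outlined as a generator that emits one mesh state per frame. This is a hedged sketch: `transition_frames`, the dictionary keys and the default of 50 steps are illustrative assumptions, and texture blending is represented only by the two opacity values that a renderer would apply.

```python
def transition_frames(verts_a, verts_b, triangles, n_frames=50):
    """Yield one mesh state per frame of the A-to-B transition.

    verts_a, verts_b: matched vertex positions in panorama A and B.
    triangles: index triples shared by both meshes (matched patches).
    """
    for k in range(n_frames + 1):
        t = k / n_frames
        # Matched triangles share vertices, so interpolating each vertex
        # once keeps neighboring patches stitched together.
        verts_t = [tuple((1.0 - t) * a + t * b for a, b in zip(va, vb))
                   for va, vb in zip(verts_a, verts_b)]
        yield {
            "t": t,
            "vertices": verts_t,
            "triangles": triangles,
            "alpha_a": 1.0 - t,  # panorama A fades out
            "alpha_b": t,        # panorama B fades in
        }


# Hypothetical matched control points (image coordinates) and two patches.
verts_a = [(0.0, 0.0), (4.0, 0.0), (2.0, 1.0), (2.0, -1.0)]
verts_b = [(1.0, 0.0), (6.0, 0.0), (3.0, 2.0), (3.0, -2.0)]
tris = [(0, 3, 2), (3, 1, 2)]
frames = list(transition_frames(verts_a, verts_b, tris))
print(len(frames), frames[25]["vertices"][1])
```

The first and last frames reproduce the meshes of site A and site B exactly, matching the initial and final states described in the steps above.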

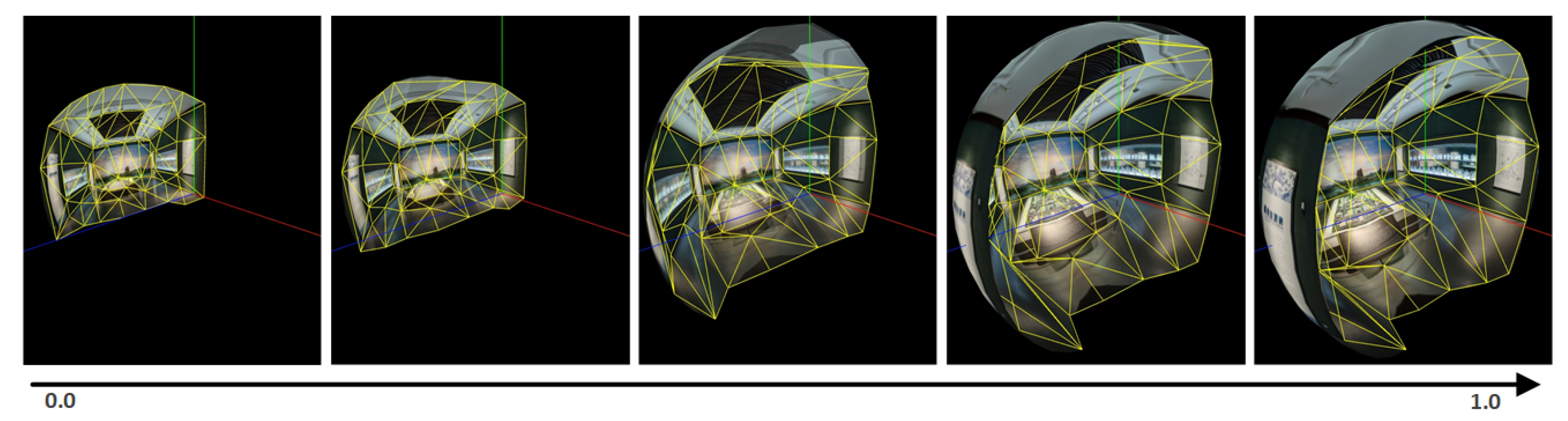

Figure 5 shows a sample of the middle textures generated by the smooth transition algorithm using the matched Delaunay triangular patches of two adjacent viewpoints.

Note that a spherical interpolation function is used instead of a linear interpolation function to morph the triangles in the spherical space. According to Liem [21], the linear interpolation on longitude and latitude coordinates is equivalent to the spherical interpolation on Cartesian coordinates when the pixel coordinates are proportional to the spherical longitude and latitude on a panorama with an aspect ratio of 2:1. Therefore, the transition of the morphing mesh can be performed entirely as described in Section 2.2.
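To make the relationship between pixel, longitude/latitude and sphere coordinates concrete, the sketch below interpolates a control vertex linearly in longitude and latitude and then places it on the unit sphere. The axis conventions (image center at longitude 0 and latitude 0, $z$ pointing up), the wrap-around handling and all function names are assumptions chosen for illustration, not taken from the paper.

```python
import math


def pixel_to_lonlat(u, v, width, height):
    """Map a pixel of a 2:1 panorama to (longitude, latitude) in radians."""
    lon = (u / width - 0.5) * 2.0 * math.pi
    lat = (0.5 - v / height) * math.pi
    return lon, lat


def lonlat_to_xyz(lon, lat):
    """Point on the unit sphere for the given longitude and latitude."""
    return (math.cos(lat) * math.cos(lon),
            math.cos(lat) * math.sin(lon),
            math.sin(lat))


def lerp_lonlat(p, q, t):
    """Linear interpolation in (lon, lat), taking the short way around in longitude."""
    dlon = (q[0] - p[0] + math.pi) % (2.0 * math.pi) - math.pi
    return p[0] + t * dlon, p[1] + t * (q[1] - p[1])


def vertex_on_sphere(pix_a, pix_b, t, width, height):
    """Position at progress t of a control vertex matched between two panoramas."""
    a = pixel_to_lonlat(pix_a[0], pix_a[1], width, height)
    b = pixel_to_lonlat(pix_b[0], pix_b[1], width, height)
    return lonlat_to_xyz(*lerp_lonlat(a, b, t))


# A hypothetical vertex that moves a quarter turn along the horizon.
mid = vertex_on_sphere((2048, 1024), (3072, 1024), 0.5, 4096, 2048)
print(mid)
```

Every interpolated vertex stays on the sphere by construction, so the morphing mesh never cuts through the panoramic ball during the transition.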

2.4. Dynamic Panoramic Image Rendering

According to the transitional method described in Section 2.3, a set of dynamic textures $I_t$ (one for each transition moment $t$) is collected. The natural transitional effect of the panoramic scene can therefore be achieved by “playing” the dynamic textures $I_t$ on the panoramic ball. This strategy is easy to implement but inefficient, because it requires the constant formation of new textures and repeated texture mapping, which for a large image consumes considerable CPU time and memory.

The dynamic panoramic ball display strategy is therefore proposed, as shown in Figure 6. To avoid repeated texture mapping, a morphing mesh is added between the viewer’s eyes and the regular panoramic ball as a model layer. The panorama of site A or B is displayed to users when roaming at a fixed site, and the morphing mesh is displayed to users when jumping between sites.

The implementation of the dynamic panoramic image rendering algorithm for smooth transitions between adjacent viewpoints based on matched Delaunay triangular patches is shown in Figure 7. More specifically, a spherical triangular mesh is generated by three-dimensional Delaunay triangulation. Then, the dynamic morph layer is implemented by transitioning between adjacent panorama sites on the sphere.

3. Experiments and Results

3.1. Experimental Environment and Dataset

To comprehensively and effectively test the effect of this method, two datasets were collected by different acquisition equipment in different scenarios. Table 1 shows the collected panoramas and the corresponding acquisition equipment. The experimental data and results involved in this article can be obtained at https://github.com/zpc-whu/panoramic-transition.

Dataset-1 consists of two panoramic pictures of a museum. These pictures were taken by a high-definition assembly camera with six lenses through traditional station measurement in a digital museum construction project. The resolution can reach 20,000 × 10,000. The visual effect and accuracy testing of the proposed method is executed using these two panoramas.

Dataset-2 consists of seven panoramic pictures of a library, taken by a camera with six cheap XiaoYi lenses carried on the latest efficient indoor mobile measurement trolley during an indoor mapping project. The resolution is 4096 × 2048. The robustness experiment of the proposed method is executed using these seven panoramas in six tests, with distances from the current site to the destination site of 1 m, 2 m, 3 m, 5 m, 10 m, and 15 m.

3.2. Visualization Performance

The visual effect and accuracy testing of the proposed method is executed using Dataset-1. The visualization performance is shown in Table 2. The first column in the table is the transition progress, which runs from 0 to 1 over the entire transitional process. In particular, views at 11 moments (1%, 10%, 20%, 30%, 40%, 50%, 60%, 70%, 80%, 90%, and 99%) are selected to show the visual effects of the transitional methods. For comparison, the second column shows the results of the commonly used texture transparent gradient method, and the third column shows the results of the proposed method. The fourth column shows the deformation motion of the control triangular patches during the transition to help explain the principle of the proposed method.

In Table 2, comparing the scenes in the third column with the scenes in the second column shows that the proposed method has obvious advantages over the existing texture transparent gradient method. With the proposed method, slight ghosting appears only at a progress of approximately 50%, whereas the transparent gradient method produces severe ghosting between 30% and 70%. Moreover, viewing the third column in sequence, the user can experience a distinct visual movement (going in) without the perceptible visual distortion introduced by the existing model stretching method.

More specifically, the proposed transitional method can guarantee a smooth process on the cabinets on both sides, the front display screen and the simulated sand table on the floor, without ghosting and distortion. Therefore, the proposed method of panoramic transitions guided by the triangular patches is an effective seamless transitional solution for the indoor panoramic scene. Compared with the transparent gradient and model stretching methods, this method can achieve very natural scene transitions when only panoramic data are available.

3.3. Applicability Performance

To determine the robustness, six experiments (jumping from different distances to the same site) were performed using Dataset-2 to verify the sensitivity of the proposed method to the distance between sites. The robustness results are listed in Table 3. The first column is the distance from the start site to the target site of a transition; six panoramic sites at different distances (1 m, 2 m, 3 m, 5 m, 10 m, and 15 m) from the target site are selected to show the robustness of the proposed transitional method. The second column shows the control triangular patches extracted from each start site. The third column shows a snapshot of the user’s view when the transition reaches 50%. The fourth column shows the deformation introduced by the morphing mesh near the end of the transition. The first row shows the real target site for all six experiments.

In Table 3, the second column shows that the control area of the homonymous triangle patches shrinks as the distance between the two panorama sites increases, because the image overlap decreases. More specifically, it is easy to extract the control triangle patches on the left side of the homonymous scene because of the spacious layout, with objects farther away from the shooting site. However, it is challenging to extract the control triangle patches on the right side of the homonymous scene because of the large angular variations in the perspective caused by narrow spaces and occlusions of the objects in space. The third column shows that, as the distance between the two panorama sites increases, the deformation of the generated panoramic scene increases and the accuracy of the transitional model guided by the triangle patches decreases. In particular, the right side of the scene is significantly compressed, resulting in greater distortion.

In general, as the distance between the two sites increases from 1 m to 15 m, the overlap of the space scene decreases. This shrinks the control area that guides the transition, which increases the distortion of the generated scenes and worsens the transitional effect. However, the visual distortions resulting from the transitions within the 10 m range are acceptable in most application scenarios. The visual sense of movement is still maintained, and there is still an advantage over other transitional methods. Therefore, the proposed method can basically satisfy the smooth transition between two indoor panorama sites with a station spacing less than or equal to 10 m.

3.4. Efficiency Performance

To test the feasibility and efficiency of the proposed method, a variety of browser platforms on personal computers and mobile devices were used to run the single page application. Table 4 records the frame rate under different platforms and datasets when the scene is roaming and transitioning. The first column lists the four common browsers used for testing, and the second and third columns are the frame rates in units of fps (frames per second).

As Table 4 shows, different platforms maintain different frame rates due to their different rendering capabilities, but there is only a slight difference in frame rates between roaming and transitioning on the same platform. This confirms that the proposed panorama transitional scheme does not slow down the rendering of panoramic scenes, and that the frame rate exceeds the requirement of human visual observation (24 fps). Therefore, it is feasible and efficient.

4. Discussion

The seamless transition of the panoramic scene is achieved under the image morphing guidance of the triangular mesh generated by extracting the homonymous control points. From the extraction of homonymous feature points and the generation of triangular patches, to the calculation principles of triangular patch deformation and texture interpolation, to the design of the panoramic site transition algorithm and the implementation of the panoramic walkthrough system equipped with a dynamic morph layer, the proposed algorithm is described in detail. The further development of this methodology is the robust and smart matching of panoramic images.

The method is based on feature points, but it is difficult to find feature points with practical significance in two panoramic images. Furthermore, the conventional line extraction and methods for eliminating mismatched points (such as distance anomaly and direction consistency) are also difficult to apply to panoramic images directly. Therefore, the development of feature extraction and matching between panoramic images is very important for the natural transition of panoramic scenes. The work of Carufel and Laganiere [22] and Kim and Park [23] can be a further reference regarding the matching of panoramic images.

As the transitional units, the control triangular patches play a vital role. For instance, a narrow triangle spans a larger angle of view, resulting in larger distortions when we use linear interpolation to simulate the continuous transition in space. An object appears broken when it is divided among different triangles, so it is necessary to make a reasonable division of the panoramic scene before doing a transition. In this regard, the latest research on restoring the depth and layout of houses from single panoramic images [24,25] is an inspiration for dividing the panoramic space reasonably. Of course, the use of some auxiliary data can improve the transition, including planes in the scene extracted from the point cloud.

As the distance between stations increases, the shooting angle varies greatly. The control points will centrally appear in one cluster on the image or will be scattered over the upper and lower edges of the image. Such control triangular patches do not have strong reliability, frequently lead to greater deformation or confusion, and do not have good transitional visual effects. The analysis found that three major causes led to the above poor performance:

- (1) the large distance between the sites with narrow space;

- (2) objects are closer to the point of exposure and produce large occlusions;

- (3) the depth distribution of the objects in space is diverse.

In view of the above problems, a shooting route with a relatively wide view should be a better choice.

The natural, smooth visual browsing experience of the proposed method is superior to that of the existing methods of panoramic site transition. Through the comprehensive test and analysis of its application scenarios and operational efficiency, the method is shown to be able to smoothly transition between adjacent panoramic sites collected by traditional station measurement or up-to-date mobile measurements. It also meets the needs of different applications, including panoramic space position navigation and high-resolution panoramic browsing. However, it should be noted that the method is not suitable for scenes without obvious texture features or for narrow and complicated scenes, because scarce feature points and small overlap invalidate the morph constraint.

5. Conclusions

In this paper, a dynamic panoramic image rendering algorithm for smooth transitions between adjacent viewpoints based on matched Delaunay triangular patches is proposed. The experiments show that the proposed method produces a visual sense of motion through the movement of vertices and the texture transparency changes in the morphing mesh. This work improves the user experience of the 360° panoramic roaming system and greatly improves the usability of indoor panoramic exhibition applications and indoor panoramic navigation applications. Furthermore, this technology can also be used in compressing video sequences with high compression rates, virtual tourism and entertainment, 3D scene displays of real estate and public security, etc.

Author Contributions

Conceptualization, Qingwu Hu and Mingyao Ai; methodology, Zhixiong Tang and Pengcheng Zhao; software, Zhixiong Tang and Pengcheng Zhao; validation, Zhixiong Tang and Pengcheng Zhao; formal analysis, Zhixiong Tang; investigation, Pengcheng Zhao; resources, Pengcheng Zhao; data curation, Pengcheng Zhao; writing—original draft preparation, Pengcheng Zhao; writing—review and editing, Qingwu Hu and Mingyao Ai; visualization, Zhixiong Tang; supervision, Qingwu Hu; Project administration, Qingwu Hu; funding acquisition, Mingyao Ai. All authors have read and agreed to the published version of the manuscript.

Funding

This work was partially supported by grants from the National Science Foundation of China (Grant No. 41701528), the Science and Technology Planning Project of Guangdong, China (Grant No. 2017B020218001) and the Fundamental Research Funds for the Central Universities (Grant No. 2042017kf0235).

Conflicts of Interest

The authors declare no conflict of interest.

References

- Koeva, M.; Luleva, M.; Maldjanski, P. Integrating spherical panoramas and maps for visualization of cultural heritage objects using virtual reality technology. Sensors 2017, 17, 829. [Google Scholar] [CrossRef] [PubMed] [Green Version]

- Anguelov, D.; Dulong, C.; Filip, D.; Frueh, C.; Lafon, S.; Lyon, R.; Ogale, A.; Vincent, L.; Weaver, J. Google street view: Capturing the world at street level. Computer 2010, 43, 32–38. [Google Scholar] [CrossRef]

- Quartly, M. An Evaluation of the User Interface for Presenting Virtual Tours Online; University of Canterbury: Christchurch, New Zealand, 2015. [Google Scholar]

- Pasewaldt, S.; Semmo, A.; Trapp, M.; Döllner, J. Multi-perspective 3d panoramas. Int. J. Geogr. Inf. Sci. 2014, 28, 2030–2051. [Google Scholar] [CrossRef]

- Vangorp, P.; Chaurasia, G.; Laffont, P.; Fleming, R.W.; Drettakis, G. Perception of visual artifacts in image-based rendering of façades. Comput. Graph. Forum 2011, 30, 1241–1250. [Google Scholar] [CrossRef]

- Chen, K.; Lorenz, D.A. Image sequence interpolation based on optical flow, segmentation, and optimal control. IEEE Trans. Image Process. 2012, 21, 1020.

- Egan, K.; Holzschuch, N.; Ramamoorthi, R. Frequency analysis and sheared reconstruction for rendering motion blur. ACM SIGGRAPH 2009, 28, 93.

- Liu, J.; Cui, D. Walkthrough technology in panorama-based multi-viewpoint VR environment. Comput. Eng. 2004, 30, 153–154.

- Zhang, Y.W.; Dai, Q.; Shao, J. Research and implementation on smooth-browser in multi-viewpoint VR environment. Comput. Eng. Des. 2011, 32, 2768–2800.

- Stich, T.; Linz, C.; Wallraven, C.; Cunningham, D.; Magnor, M. Perception-motivated interpolation of image sequences. In Proceedings of the Symposium on Applied Perception in Graphics and Visualization, Los Angeles, CA, USA, 9–10 August 2008; Volume 8, pp. 97–106.

- Morvan, Y.; O’Sullivan, C. Handling occluders in transitions from panoramic images: A perceptual study. ACM Trans. Appl. Percept. 2009, 6, 1–15.

- Kawai, N.; Audras, C.; Tabata, S.; Matsubara, T. Panorama image interpolation for real-time walkthrough. ACM SIGGRAPH 2016 Posters 2016, 1–2.

- Cahill, N.D.; Ray, L.A. Method and System for Panoramic Image Morphing. U.S. Patent 6,795,090, 21 September 2004.

- Zhao, Q.; Wan, L.; Feng, W.; Zhang, J.; Wong, T. Cube2video: Navigate between cubic panoramas in real-time. IEEE Trans. Multimed. 2013, 15, 1745–1754.

- Wang, S.; Zhu, Z.P.; Yang, J.Y.; Wang, Y.P. Research on panoramic smooth roaming system based on image morphing. J. Lanzhou Jiaotong Univ. 2014, 03, 82–86.

- Bay, H.; Tuytelaars, T.; Gool, L.V. Surf: Speeded up robust features. Comput. Vis. Image Underst. 2006, 110, 404–417.

- Cruz-Mota, J.; Bogdanova, I.; Paquier, B.; Bierlaire, M.; Thiran, J.P. Scale invariant feature transform on the sphere: Theory and applications. Int. J. Comput. Vis. 2012, 98, 217–241.

- Mauthner, T.; Fraundorfer, F.; Bischof, H. Region matching for omnidirectional images using virtual camera planes. In Proceedings of the 11th Computer Vision Winter Workshop, Telc, Czech Republic, 2–8 February 2006; pp. 93–98.

- Du, Q.; Faber, V.; Gunzburger, M. Centroidal voronoi tessellations: Applications and algorithms. SIAM Rev. 1999, 41, 637–676.

- Floater, M.S. Generalized barycentric coordinates and applications. Acta Numer. 2015, 24, 161–214.

- Liem, J. Real-time raster projection for web maps. Int. J. Digit. Earth 2016, 9, 215–229.

- Carufel, J.L.D.; Laganière, R. Matching cylindrical panorama sequences using planar reprojections. In Proceedings of the IEEE International Conference on Computer Vision Workshops 2012, Providence, RI, USA, 16–21 June 2012; Volume 28, pp. 320–327.

- Kim, B.S.; Park, J.S. Estimating deformation factors of planar patterns in spherical panoramic images. Multimed. Syst. 2016, 23, 1–19.

- Xu, J.; Stenger, B.; Kerola, T.; Tung, T. Pano2CAD: Room Layout from a Single Panorama Image. In Proceedings of the IEEE Winter Conference on Applications of Computer Vision (WACV), Santa Rosa, CA, USA, 27–29 March 2017; pp. 354–362.

- Yang, Y.; Jin, S.; Liu, R.; Kang, S.B.; Yu, J. Automatic 3D Indoor Scene Modeling from Single Panorama. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Salt Lake City, UT, USA, 18–23 June 2018.

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).