Spatial and Temporal Patterns in Volunteer Data Contribution Activities: A Case Study of eBird

Abstract

1. Introduction

2. Materials and Methods

2.1. eBird Data

2.2. Visualizing Spatial and Temporal Patterns

2.3. Modeling Sampling Efforts

2.3.1. Sampling Efforts

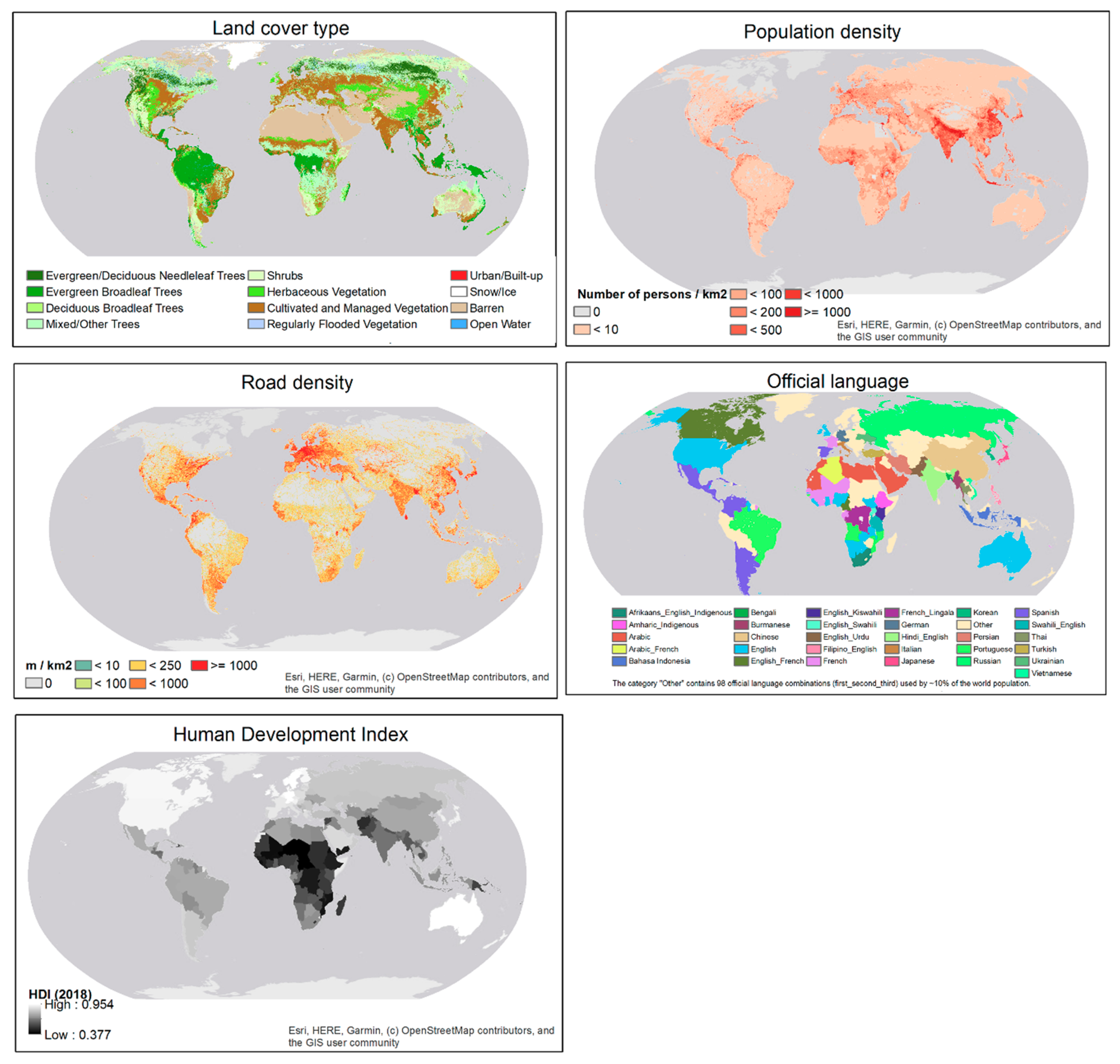

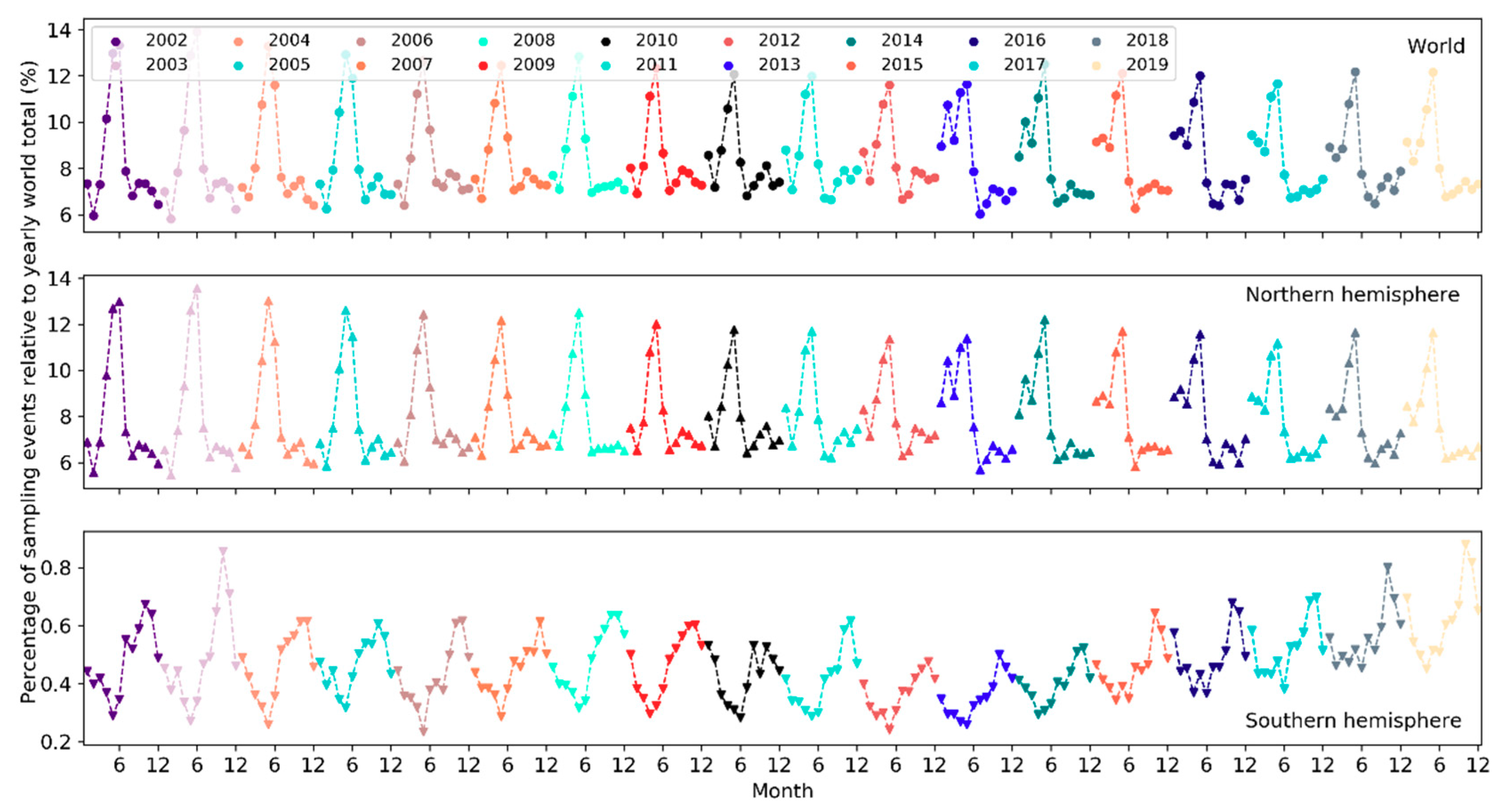

2.3.2. Covariates

2.3.3. Modeling Method

3. Results

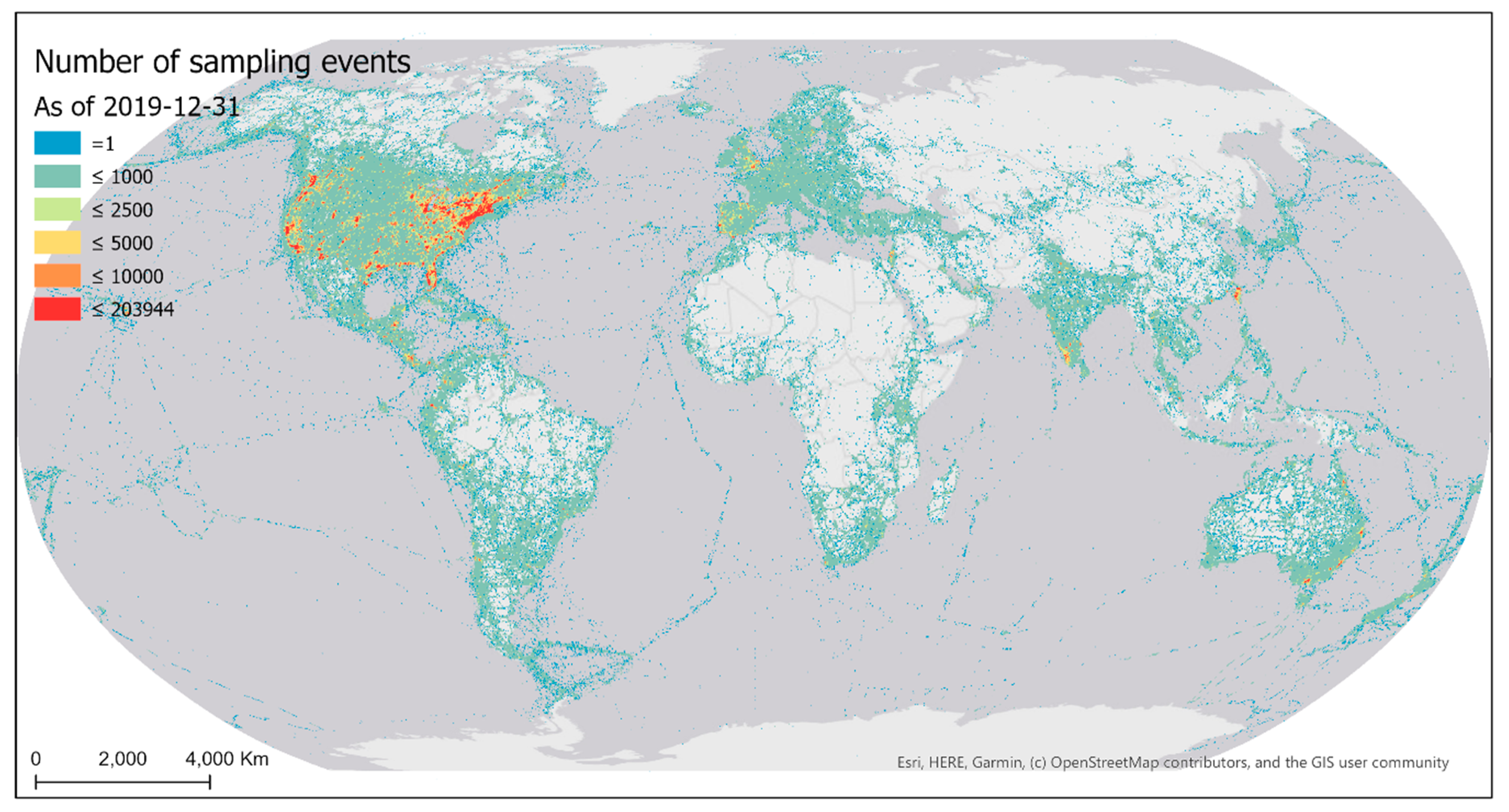

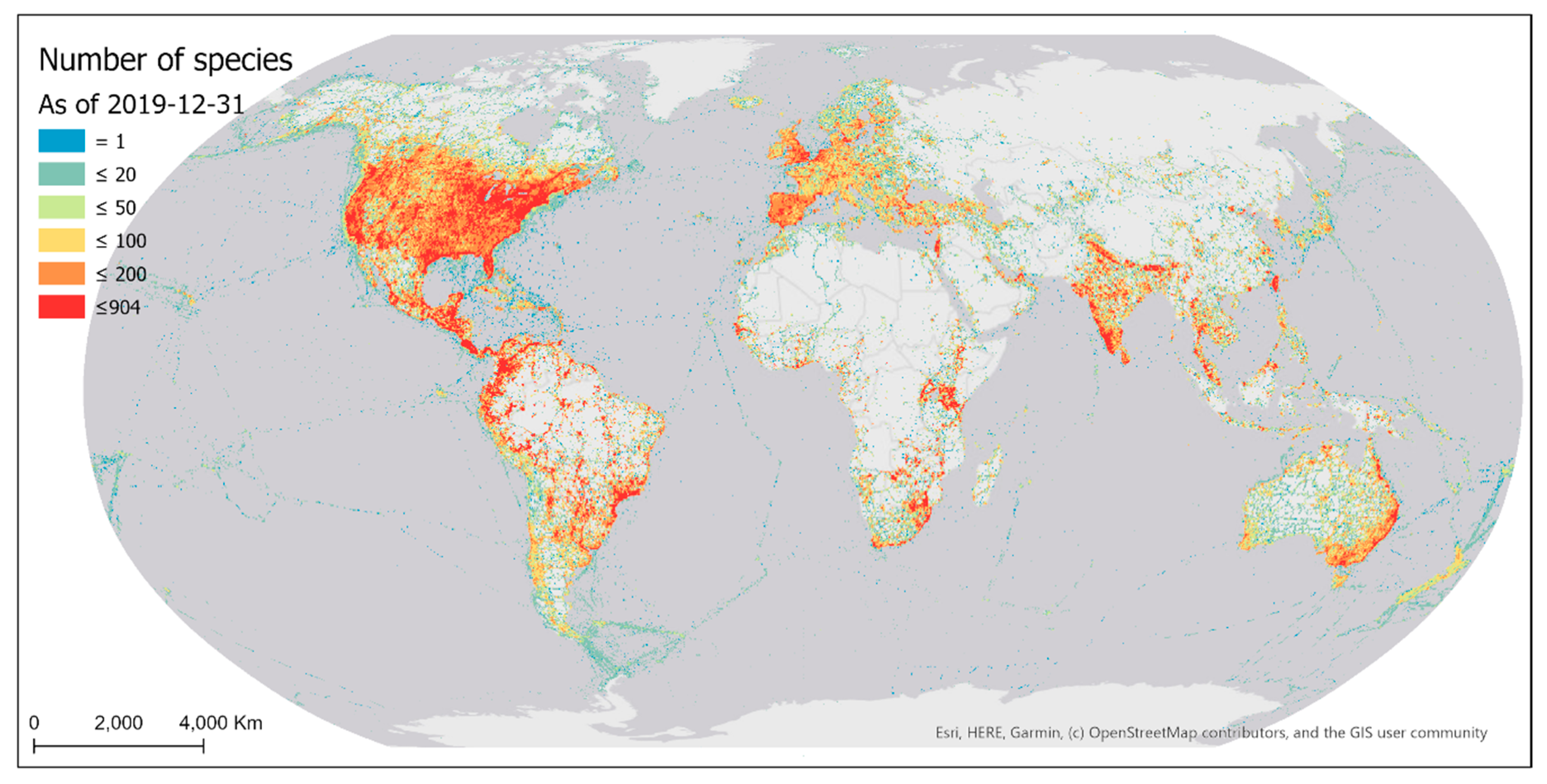

3.1. Spatial and Temporal Patterns

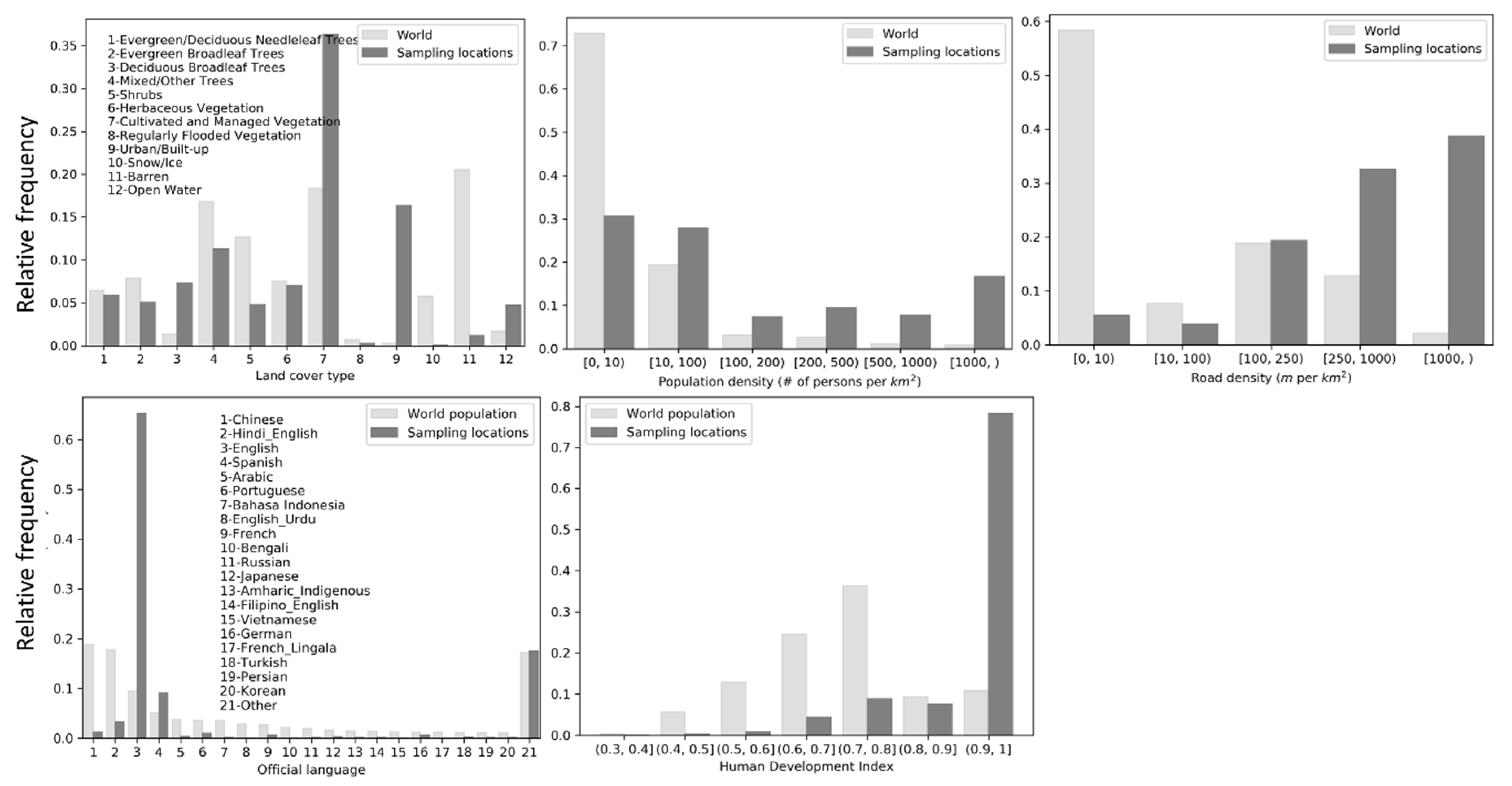

3.1.1. Sampling Events

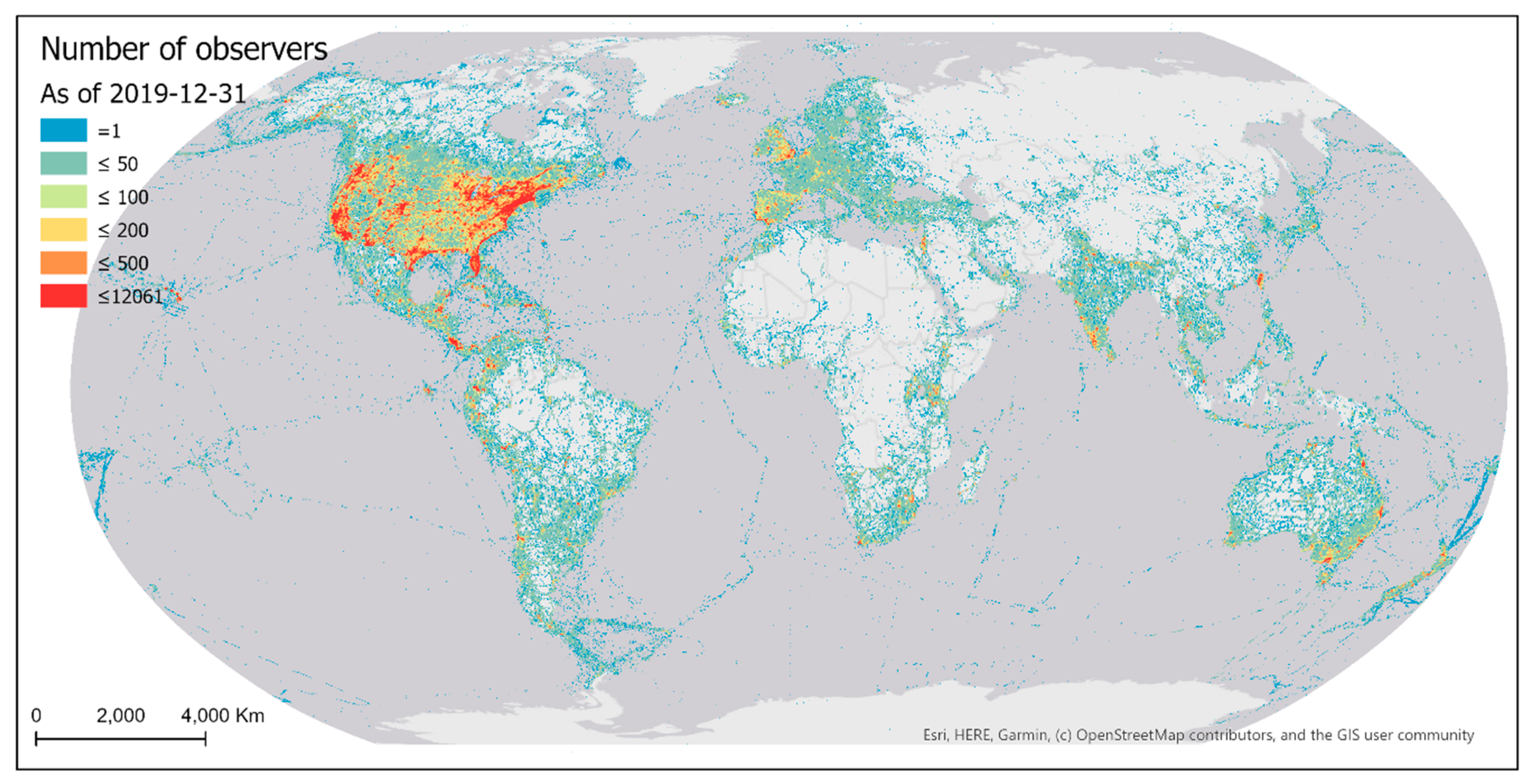

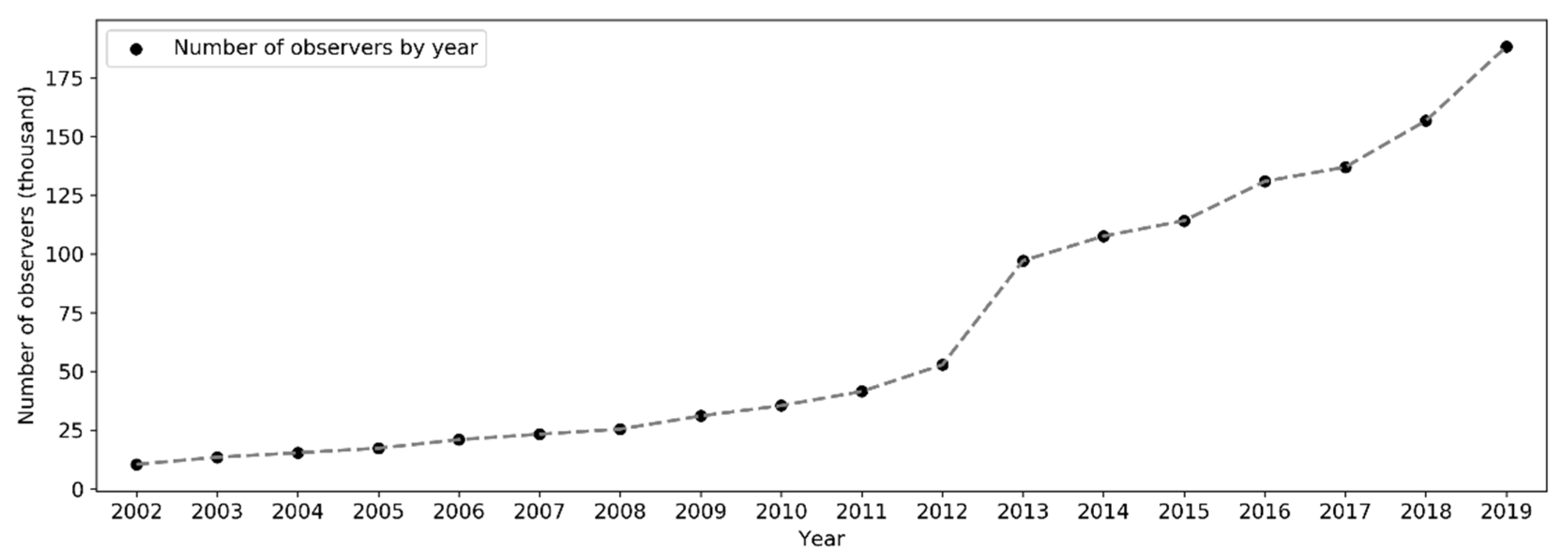

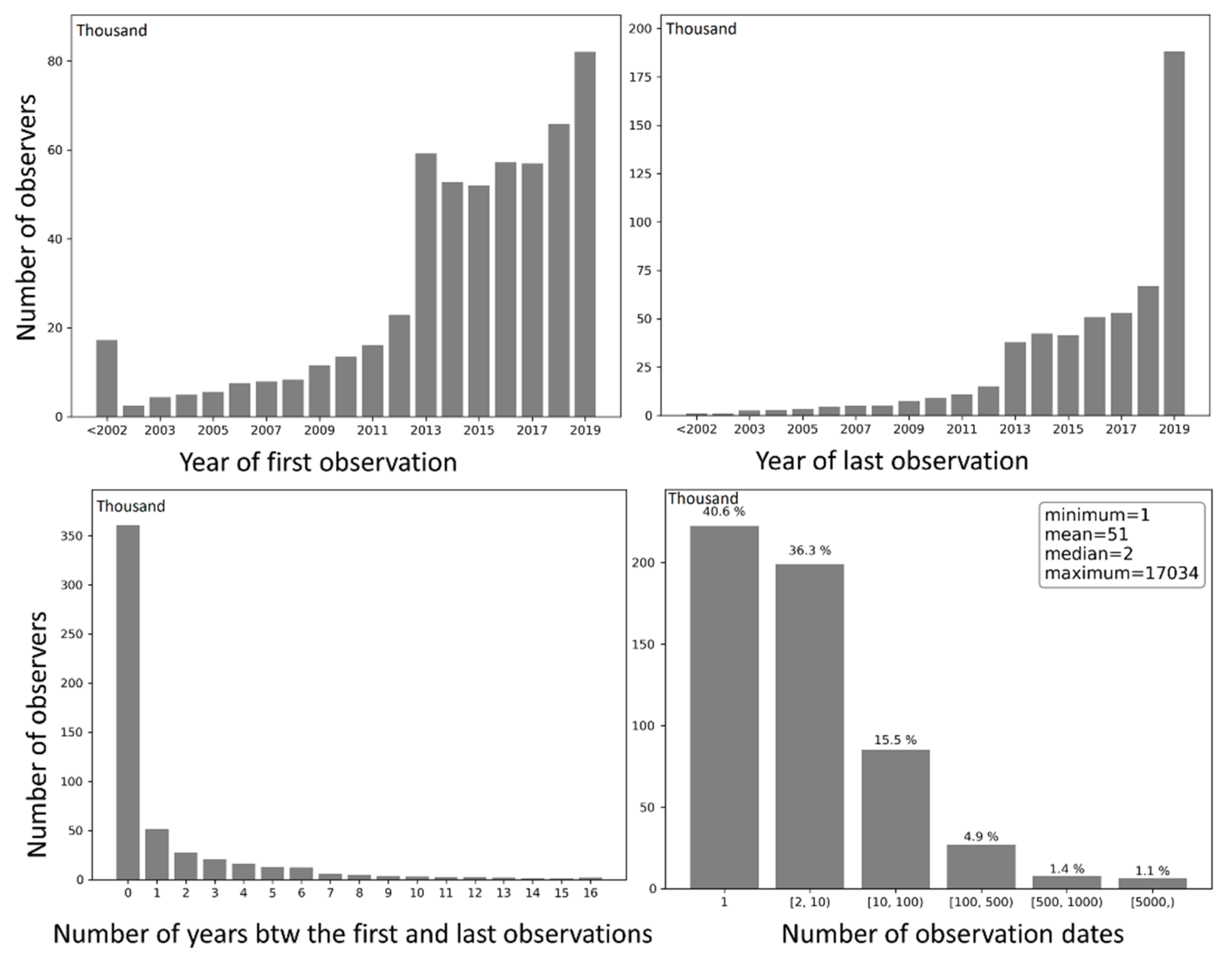

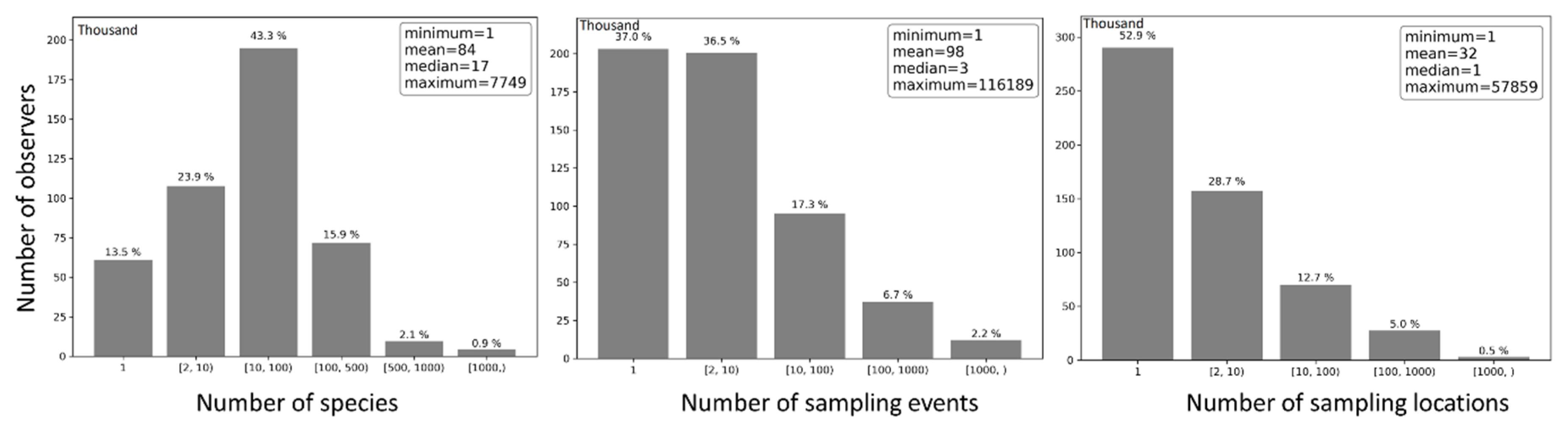

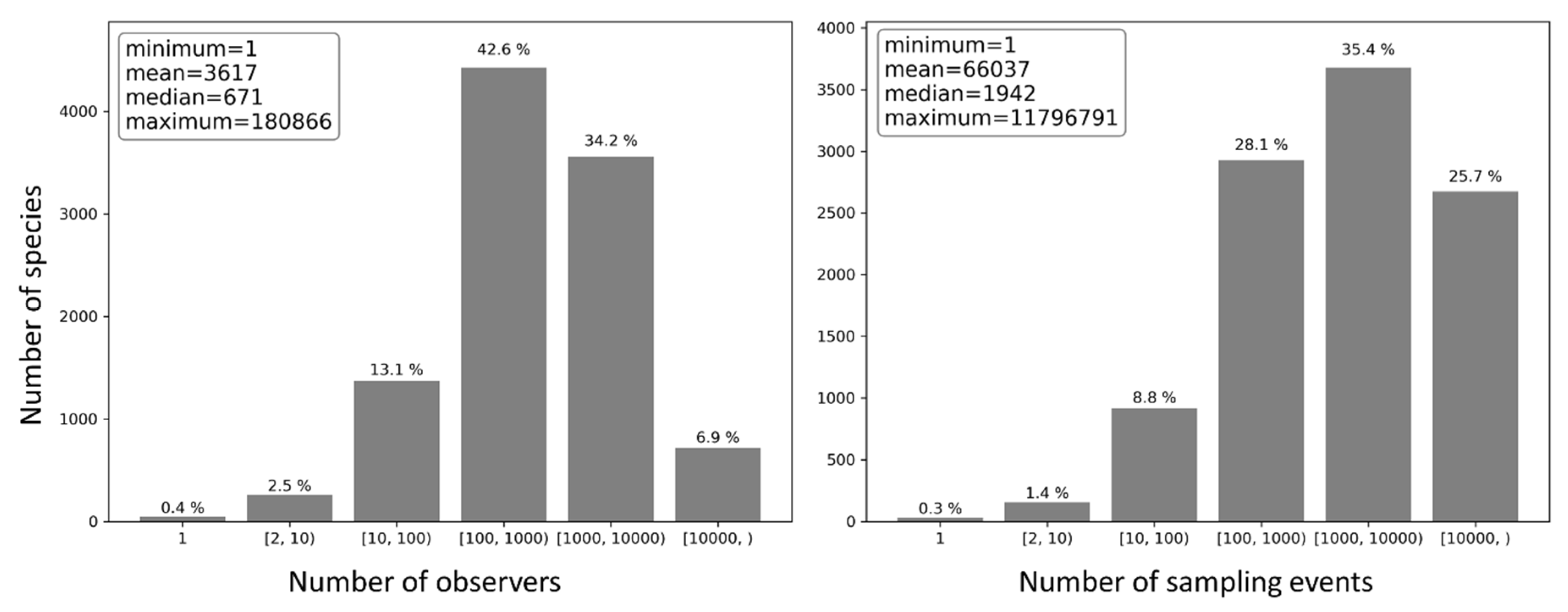

3.1.2. Observers

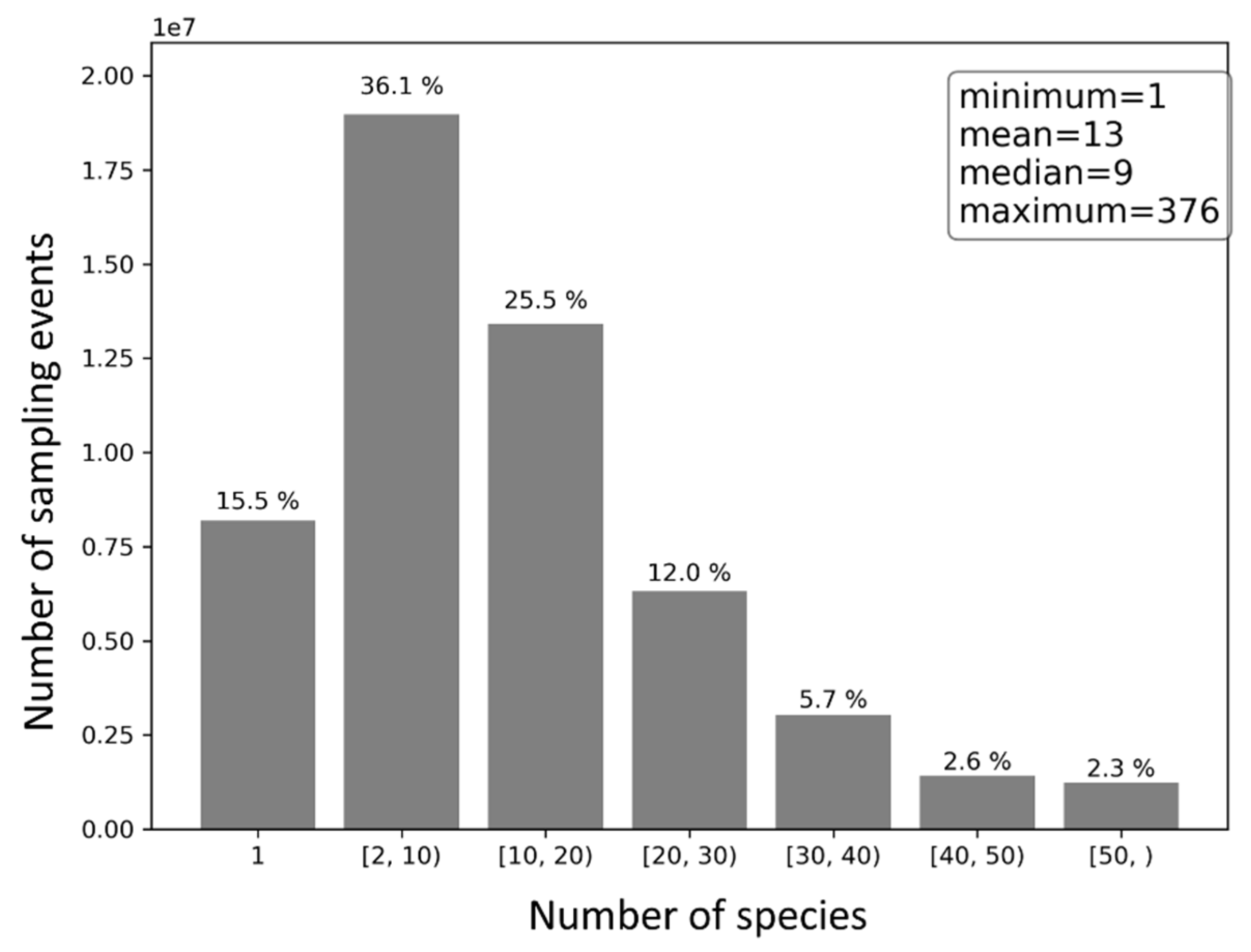

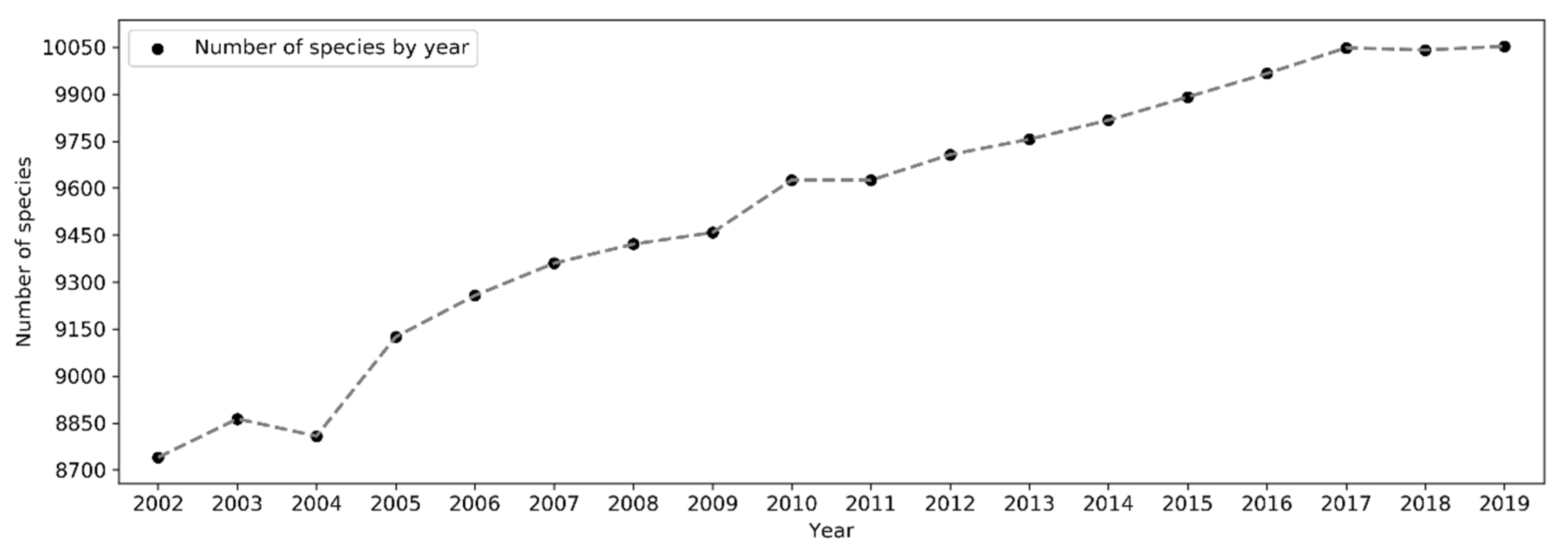

3.1.3. Bird Species

3.2. Modeling Sampling Efforts

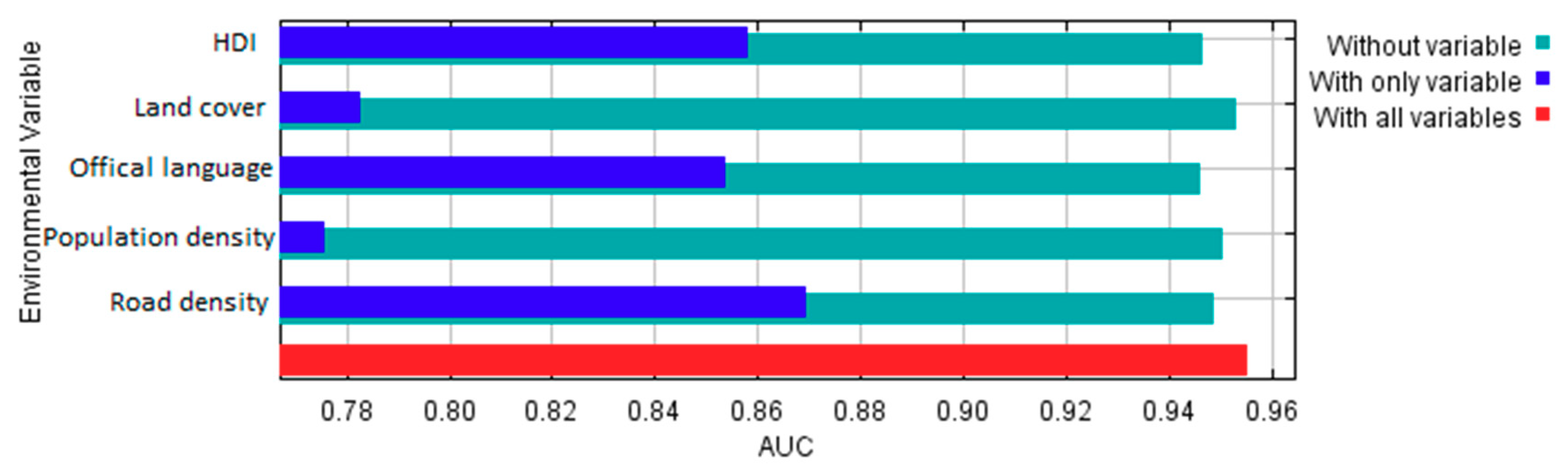

3.2.1. Analysis of Variable Importance

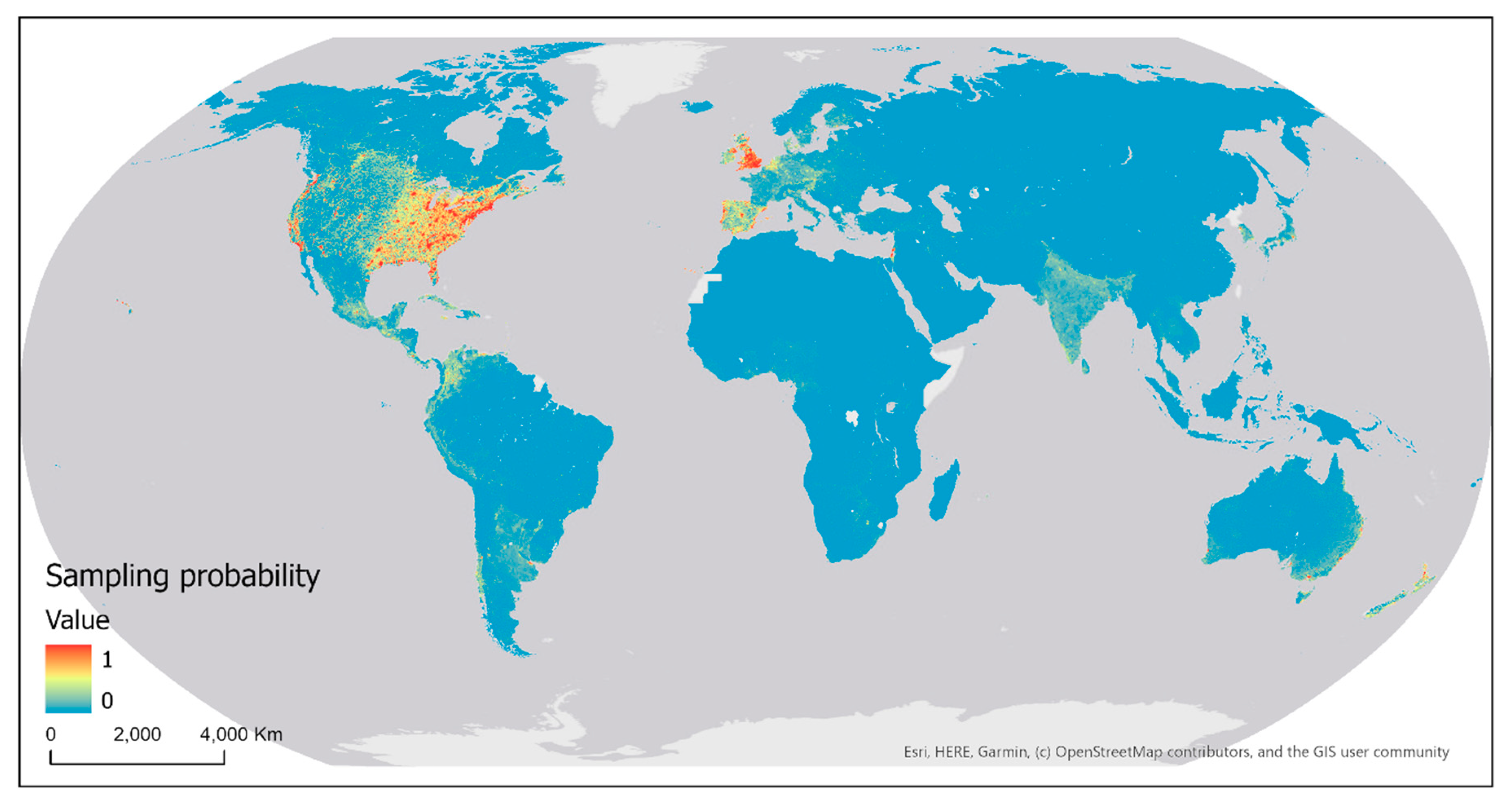

3.2.2. Modeled Sampling Probability

4. Discussion

4.1. Spatial Patterns in eBird Data

4.2. Temporal Patterns in eBird Data

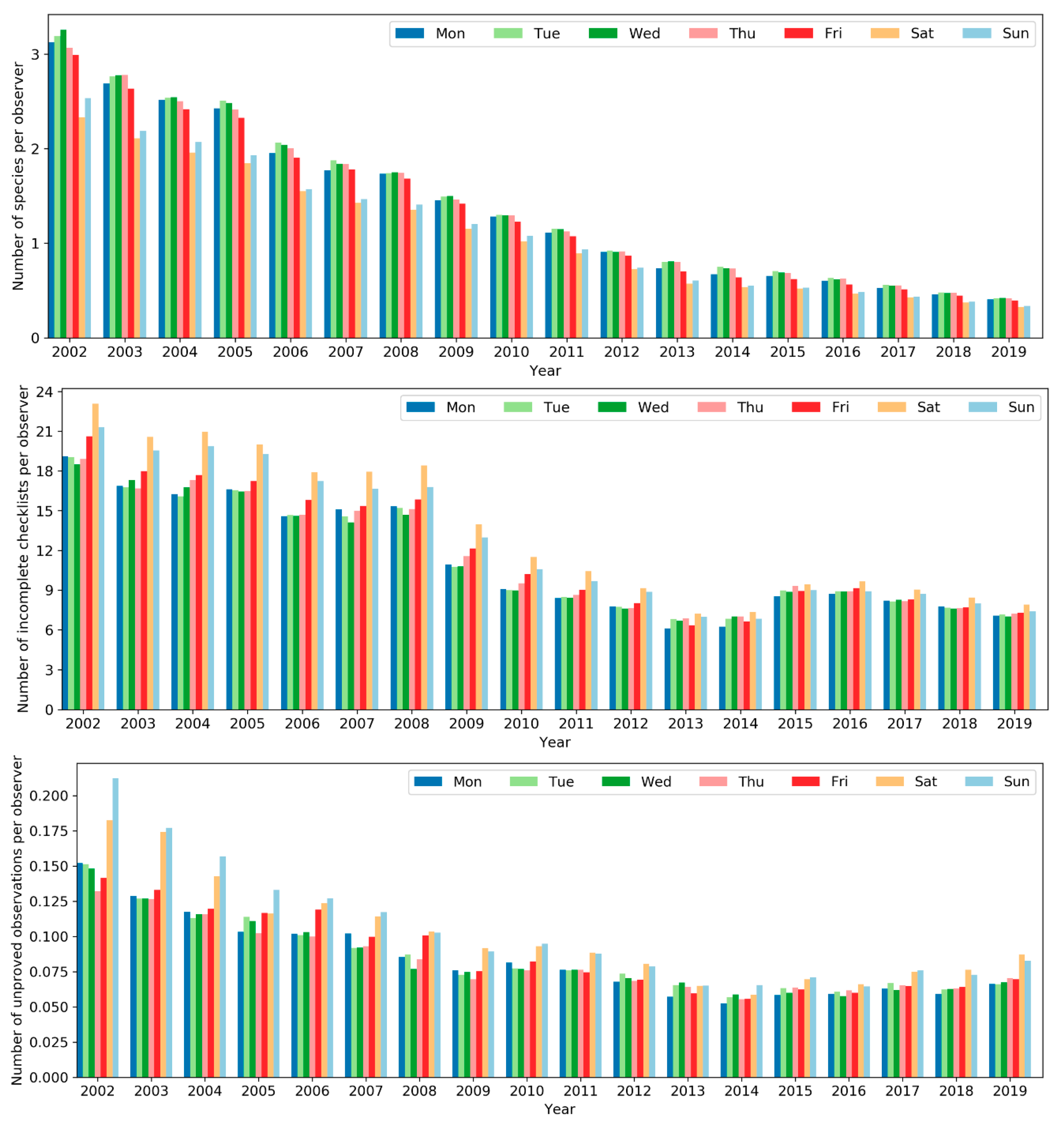

4.2.1. Patterns across the Years

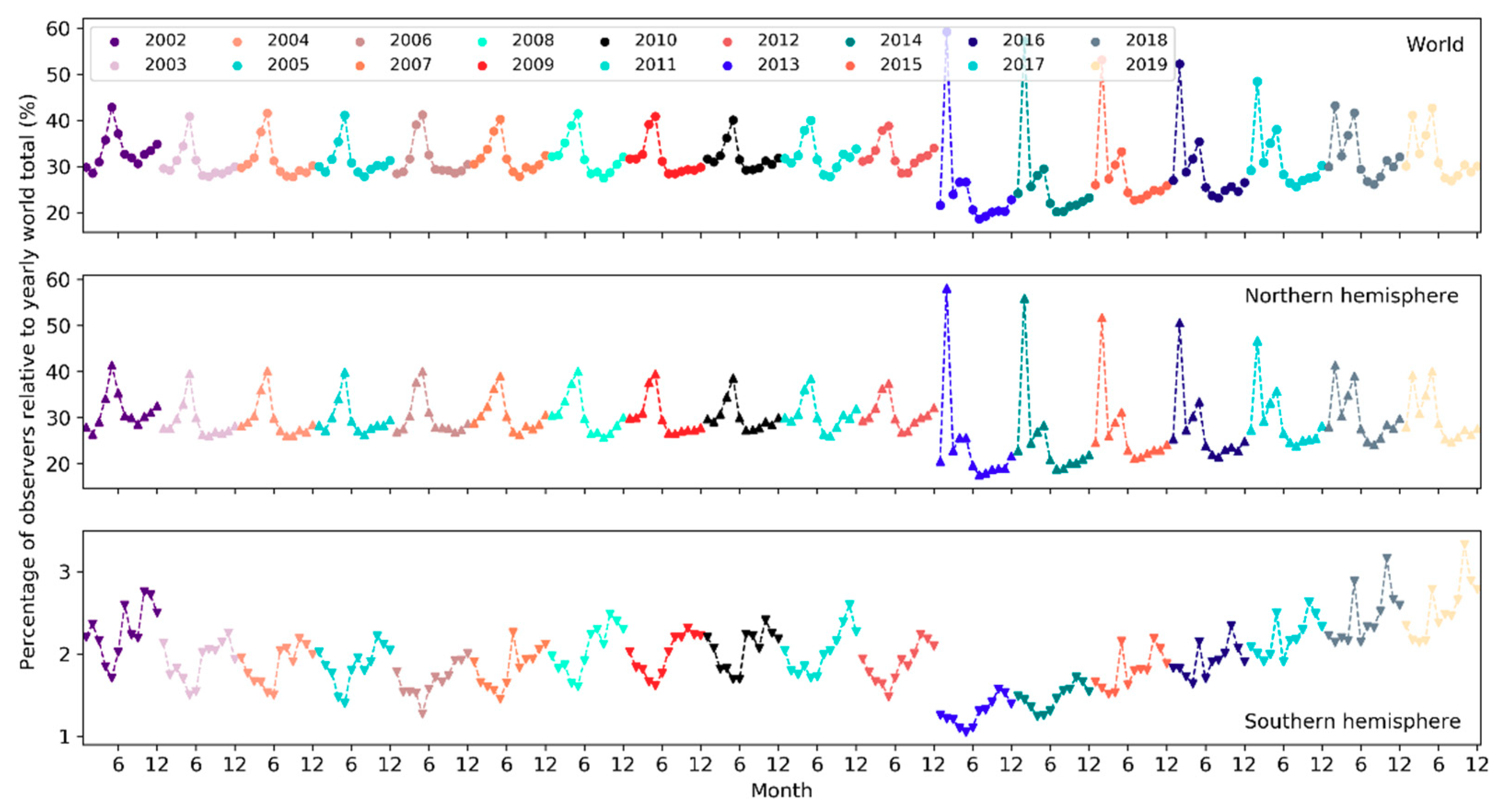

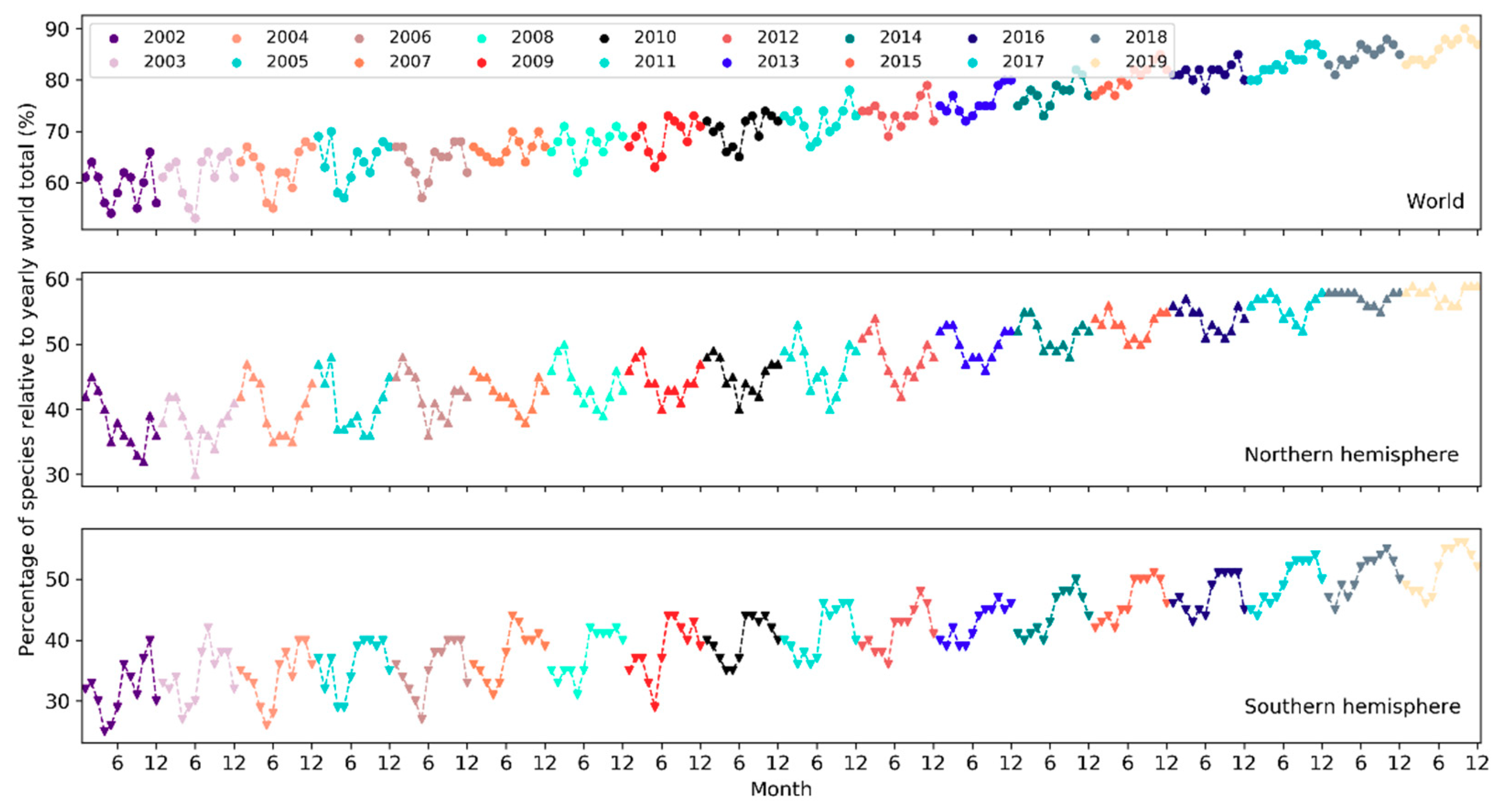

4.2.2. Patterns across the Months

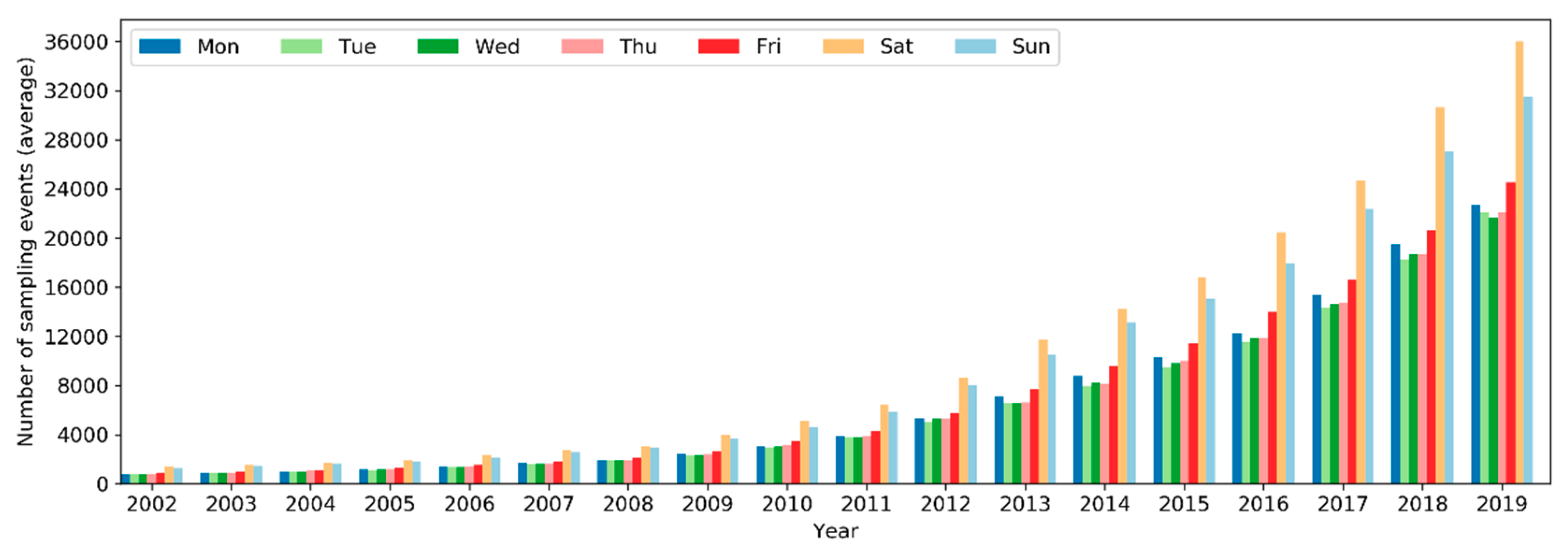

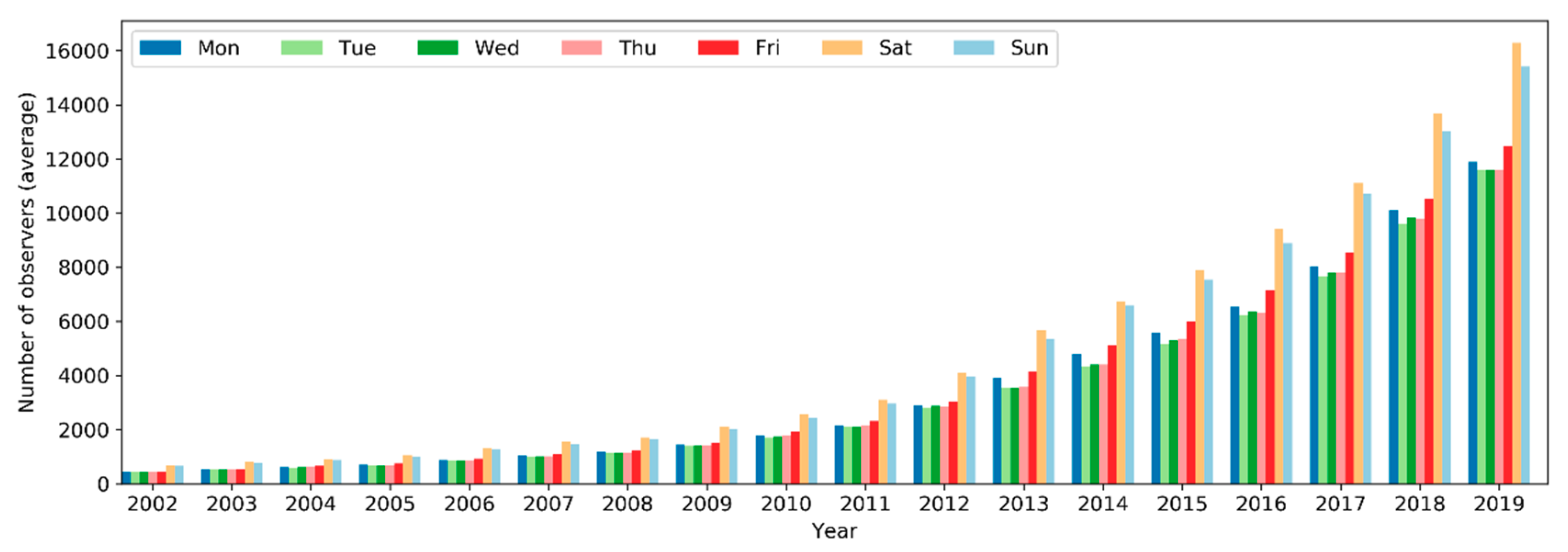

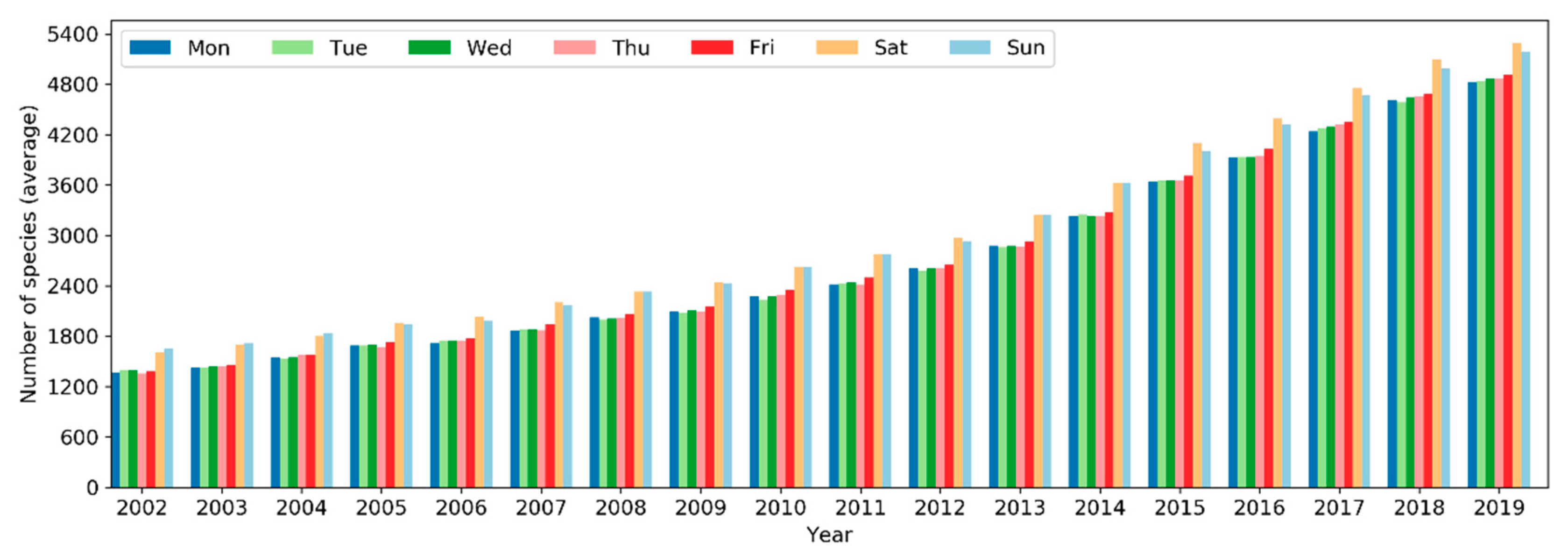

4.2.3. Patterns across the Days of the Week

4.3. Biases in VGI and Their Implications

4.3.1. Spatial Bias

4.3.2. Temporal Bias

4.3.3. Contributor Bias

4.3.4. Observation Bias

5. Conclusions

Funding

Acknowledgments

Conflicts of Interest

| Country/Territory | Sampling Events (n) | Sampling Events (%) | Sampling Locations (n) | Sampling Locations (%) | Observers | Species |
|---|---|---|---|---|---|---|
| United States | 36,540,720 | 67.9% | 5,166,510 | 61.6% | 417,288 | 1444 |
| Canada | 6,304,830 | 11.7% | 915,075 | 10.9% | 59,733 | 761 |
| Australia | 1,392,746 | 2.6% | 218,756 | 2.6% | 11,176 | 876 |
| India | 1,005,541 | 1.9% | 219,430 | 2.6% | 18,824 | 1536 |
| Spain | 757,638 | 1.4% | 148,401 | 1.8% | 9484 | 687 |
| United Kingdom | 714,712 | 1.3% | 123,621 | 1.5% | 11,898 | 756 |
| Mexico | 488,476 | 0.9% | 108,411 | 1.3% | 15,813 | 1140 |
| Taiwan | 453,852 | 0.8% | 75,321 | 0.9% | 3421 | 742 |
| Costa Rica | 383,191 | 0.7% | 68,171 | 0.8% | 12,855 | 930 |
| Portugal | 369,303 | 0.7% | 59,118 | 0.7% | 4170 | 620 |

| Variable | Percent Contribution (%) | Permutation Importance (%) |
|---|---|---|
| Road density | 45.8 | 0 |
| Official language | 31.5 | 31 |
| Land cover | 9.6 | 0 |
| HDI | 9.4 | 69 |
| Population density | 3.8 | 0 |

© 2020 by the author. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Share and Cite

Zhang, G. Spatial and Temporal Patterns in Volunteer Data Contribution Activities: A Case Study of eBird. ISPRS Int. J. Geo-Inf. 2020, 9, 597. https://0-doi-org.brum.beds.ac.uk/10.3390/ijgi9100597
