Privacy-Aware Visualization of Volunteered Geographic Information (VGI) to Analyze Spatial Activity: A Benchmark Implementation

Abstract

1. Introduction

2. Previous Work

3. Concept

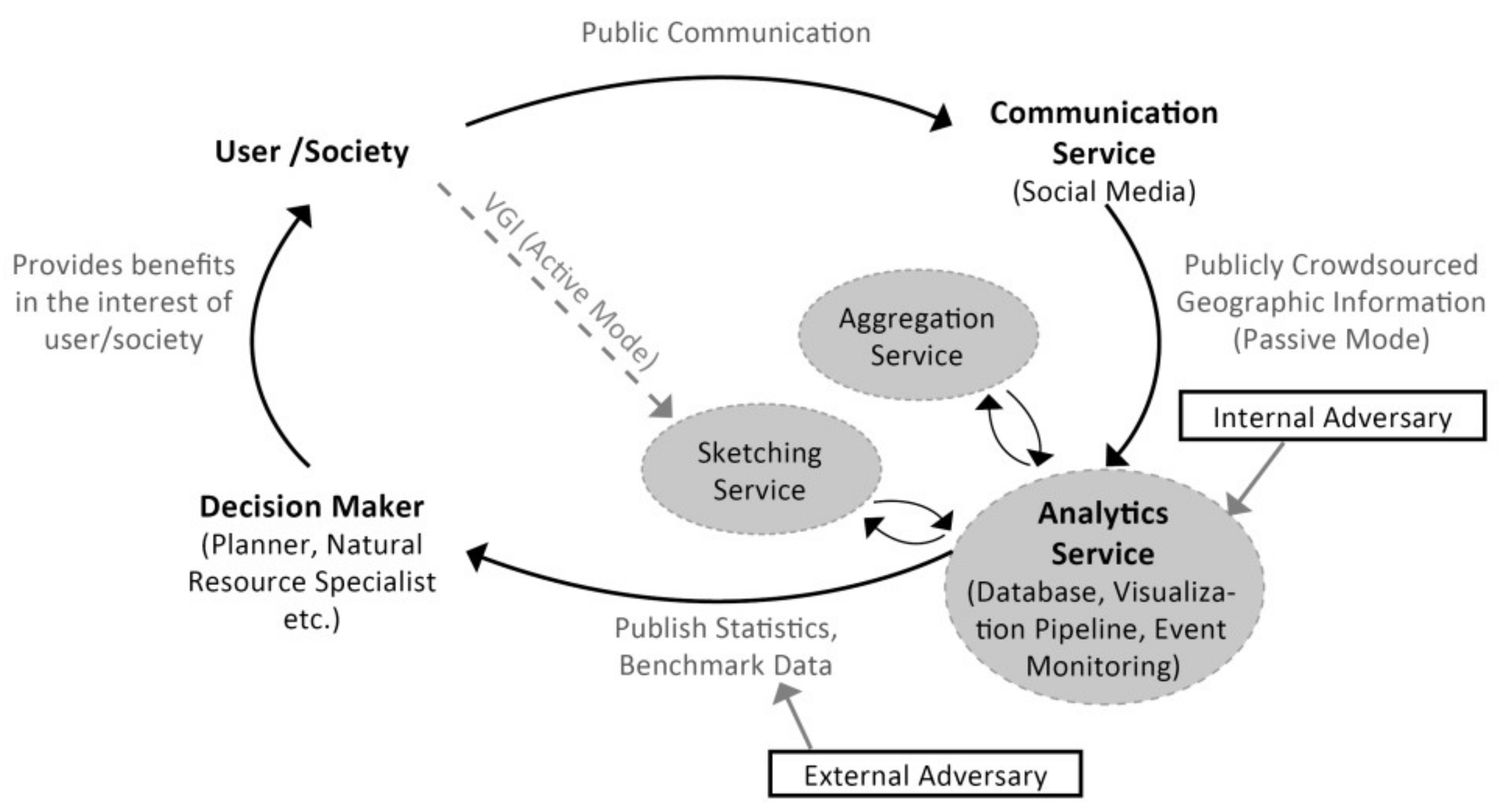

3.1. System Model

3.2. Analytics Service

4. Material and Methods

4.1. Dataset

4.2. Software Architecture

4.3. First Component: HyperLogLog (HLL)

4.4. Second Component: Location

5. Case Study: Alex, “Sandy”, and “Robert”

6. Results

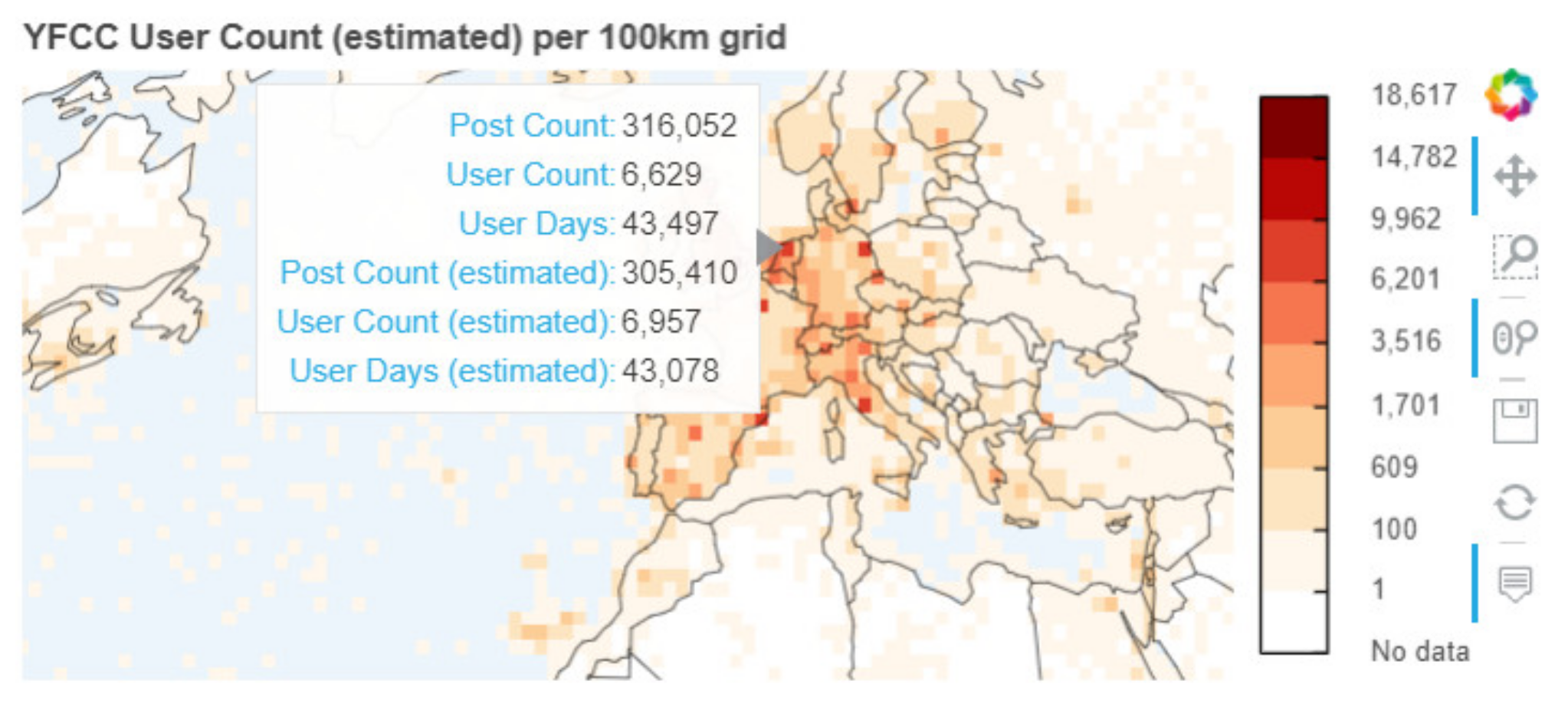

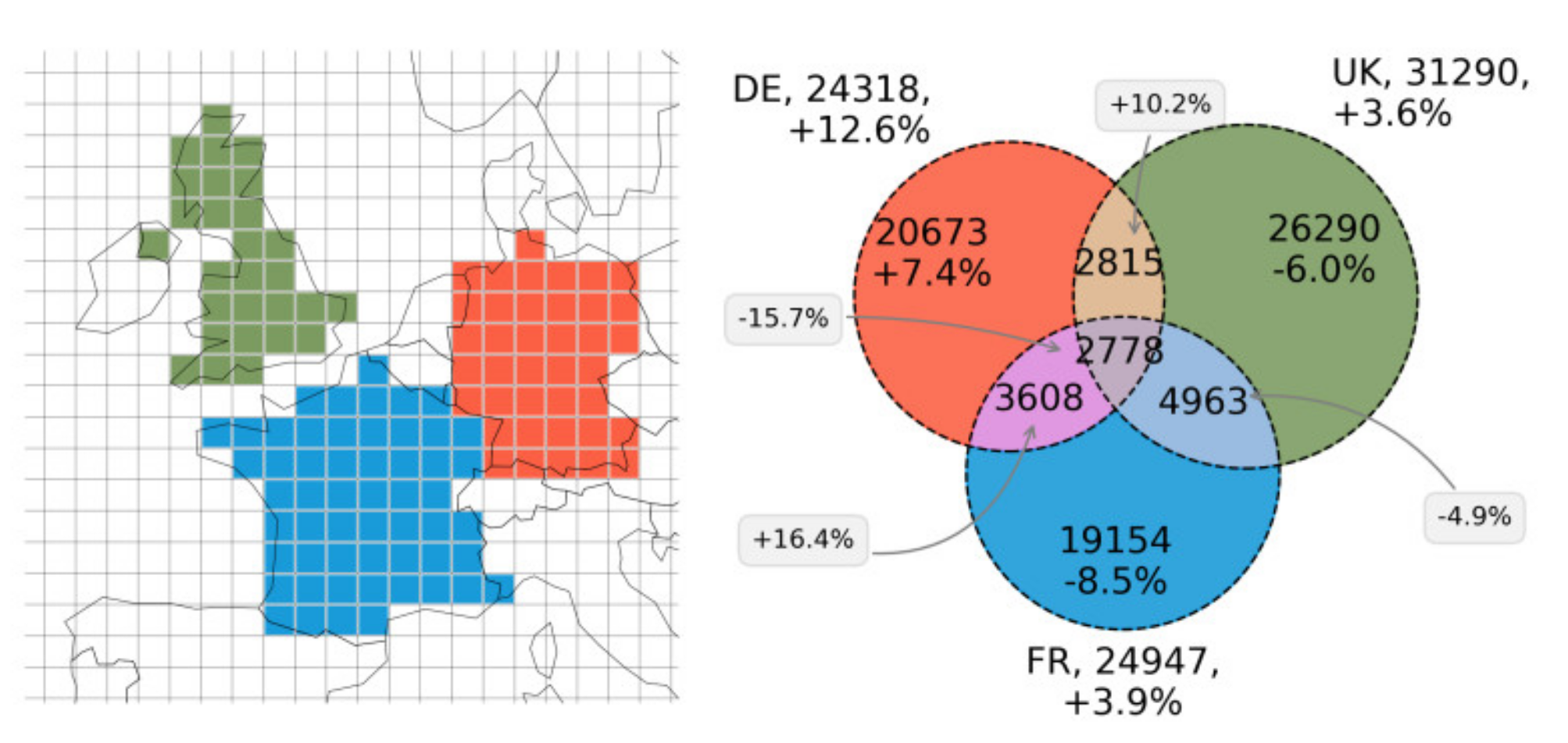

6.1. Worldwide Visitation Patterns

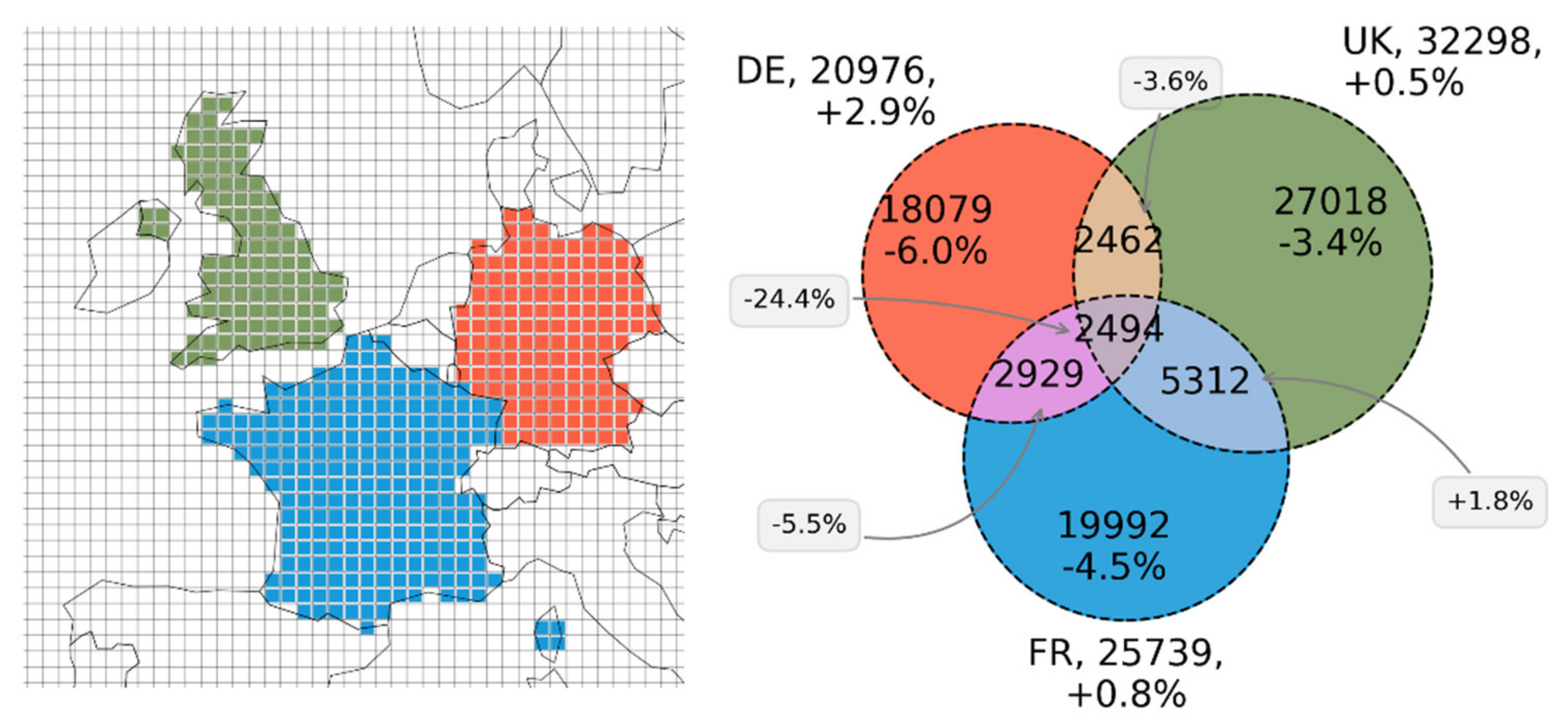

6.2. Utility of Published Benchmark Data

6.3. Privacy Trade-Off

7. Discussion

8. Conclusions

Supplementary Materials

Author Contributions

Funding

Conflicts of Interest

Appendix A


| Metric | Exact (Raw) | Estimated (HLL) |
|---|---|---|
| Coordinate count | 12,764,268 | 12,756,691 |
| User count | 581,099 | 589,475 |
| Post count | 100,000,000 | 98,553,392 |
| User days | 17,662,780 | 17,678,373 |
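The gap between the exact and the HLL-estimated counts in the table above can be expressed as a signed relative error. The following is a minimal sketch of that arithmetic, not part of the published benchmark code; the dictionary and the helper name `relative_error` are illustrative assumptions, and the numbers are copied from the table.

```python
# Values copied from the table above: metric -> (exact count, HLL estimate).
counts = {
    "Coordinate count": (12_764_268, 12_756_691),
    "User count": (581_099, 589_475),
    "Post count": (100_000_000, 98_553_392),
    "User days": (17_662_780, 17_678_373),
}


def relative_error(exact: int, estimate: int) -> float:
    """Signed relative error of the estimate, as a fraction of the exact value."""
    return (estimate - exact) / exact


# Hypothetical helper structure: one relative error per metric.
errors = {name: relative_error(exact, est) for name, (exact, est) in counts.items()}

for name, err in errors.items():
    # A negative sign means the HLL estimate undercounts the exact value.
    print(f"{name:<18} {err:+.2%}")
```

For these four metrics, the absolute deviation stays below 1.5 % of the exact value, with the post count and user count showing the largest differences.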

| Context | Raw Data | HLL Data |
|---|---|---|
| Input data size of comma-separated values (CSV) | 2.5 GB | Explicit: 281 MB; Sparse: 134 MB; Full: 3.3 GB |
| Output data size, 100 km grid (CSV) | 182.46 MB | 19.80 MB |
| Processing time (Worldmap) | Post count: 7 min 13 s; User count: 8 min 55 s; User days: 12 min 8 s | 54.1 s (Post count, user count, user days) |
| Memory peak (Worldmap) | Post count: 15.4 GB; User count: 15.5 GB; User days: 19.3 GB | 1.4 GB (Post count, user count, user days) |
| Benchmark data size (CSV) | / | 10.61 MB (bins with user count ≥ 100) |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Dunkel, A.; Löchner, M.; Burghardt, D. Privacy-Aware Visualization of Volunteered Geographic Information (VGI) to Analyze Spatial Activity: A Benchmark Implementation. ISPRS Int. J. Geo-Inf. 2020, 9, 607. https://doi.org/10.3390/ijgi9100607
