Hierarchical Point Matching Method Based on Triangulation Constraint and Propagation

Abstract

1. Introduction

2. Proposed Method

2.1. Point Matching Based on Descriptors with Overlapping Subregions

2.1.1. Descriptor Construction

2.1.2. Dissimilarity Measure

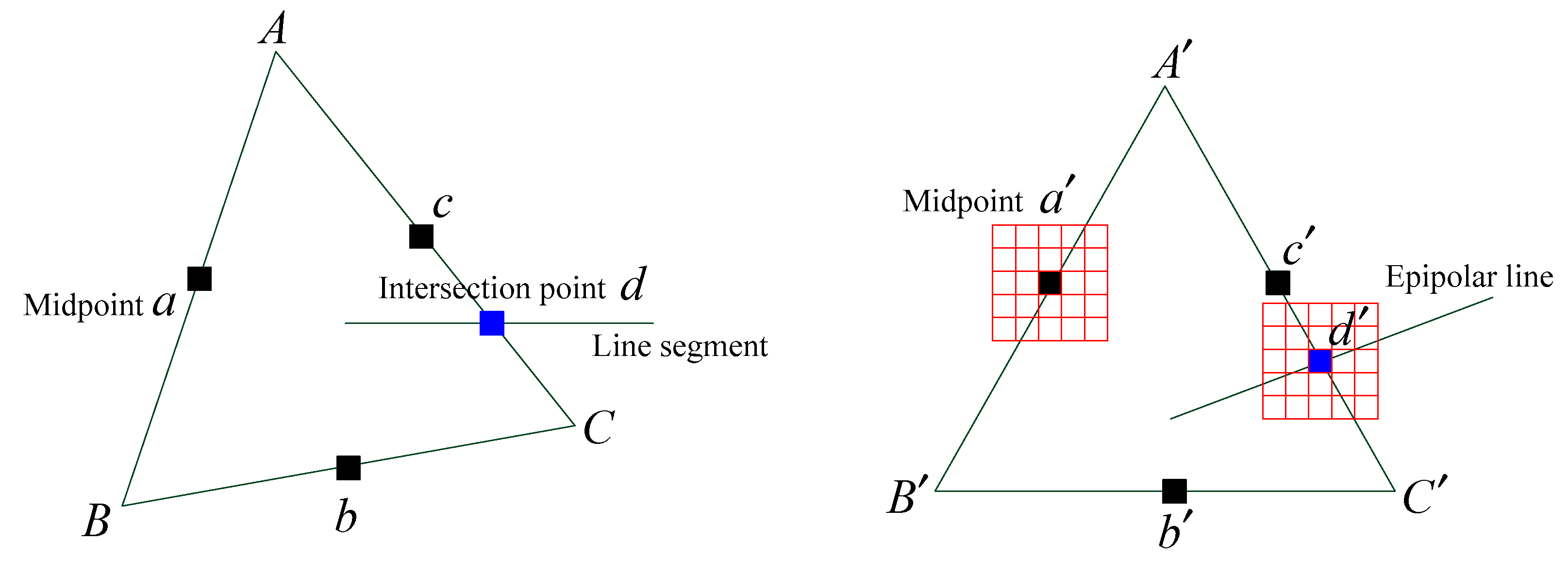

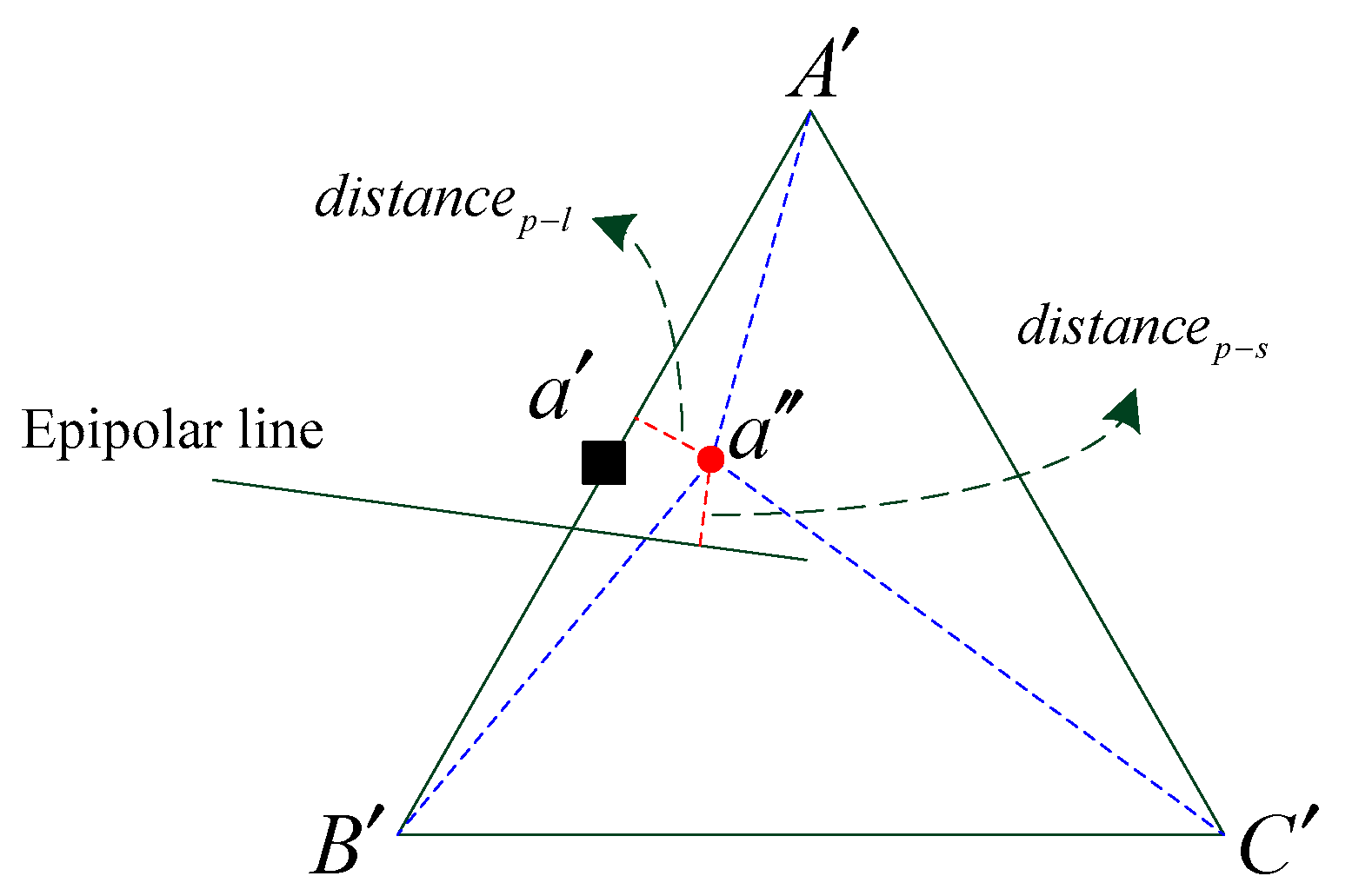

2.2. Point Matching with Multiple Constraints

2.3. Matching Propagation

| Algorithm 1 Matching propagation strategy to obtain quasi-dense matching |
|---|
| Input: Reference image and search image; initial reliable corresponding points Pt(Pt1, Pt2); line segments Le extracted from the reference image |
| Output: Corresponding points |
| Return |

3. Further Experiments and Analysis

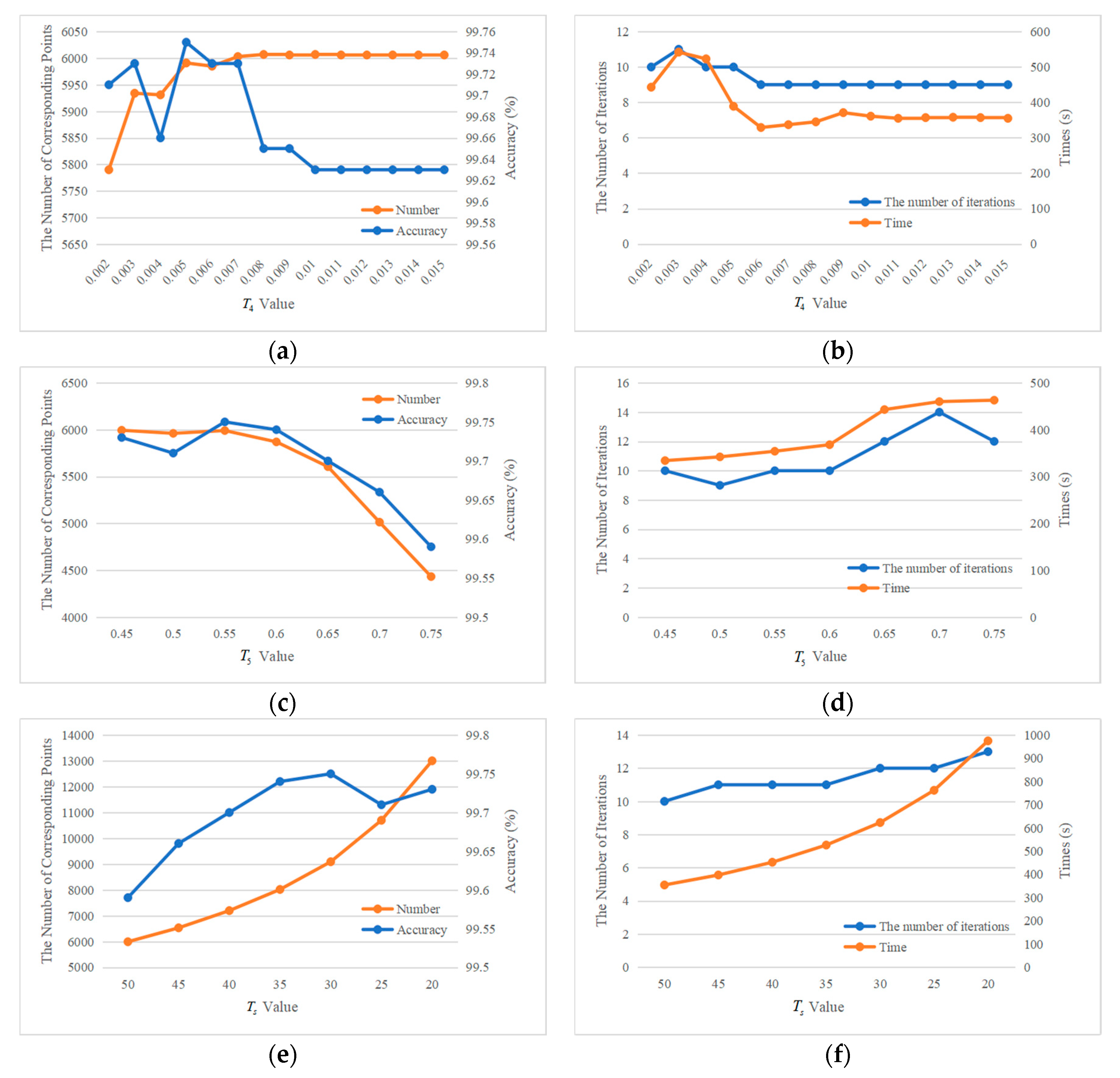

3.1. Parameter Selection

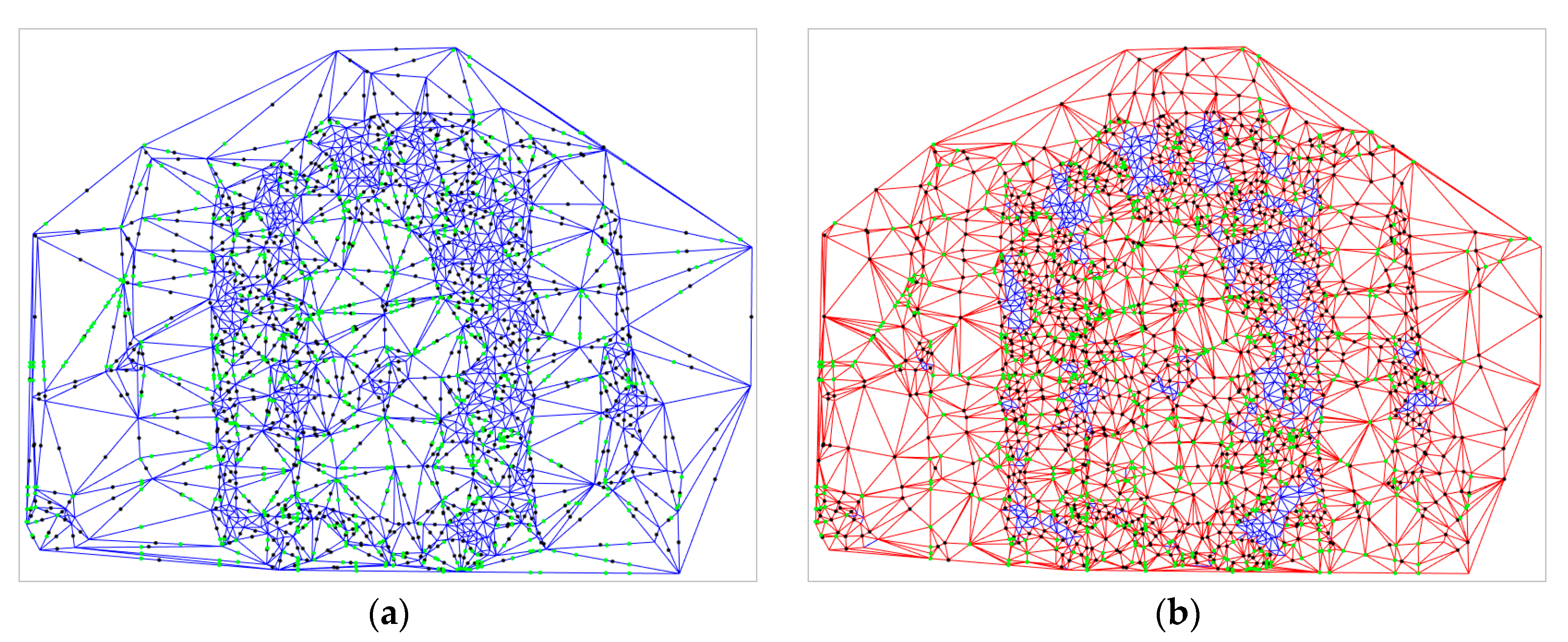

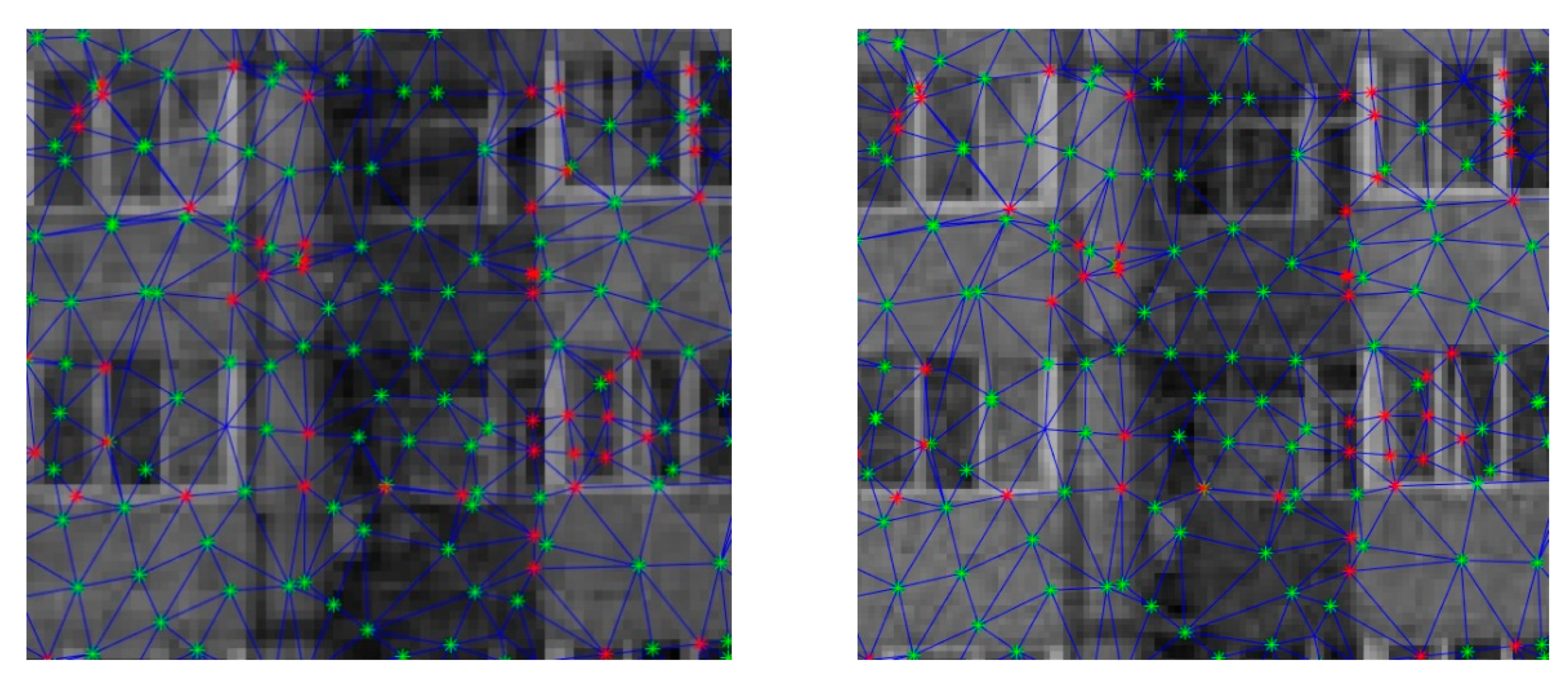

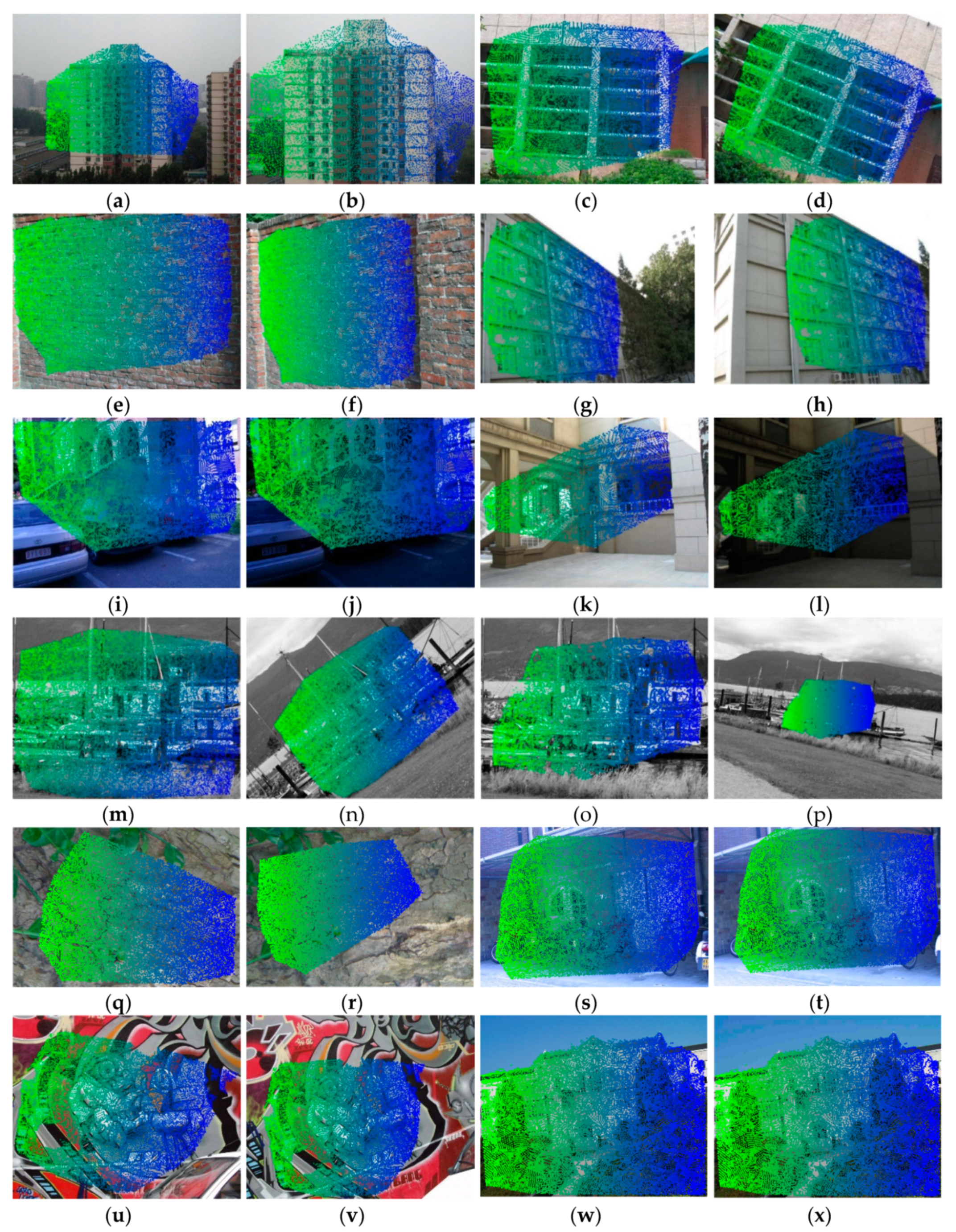

3.2. Different Matching Stages

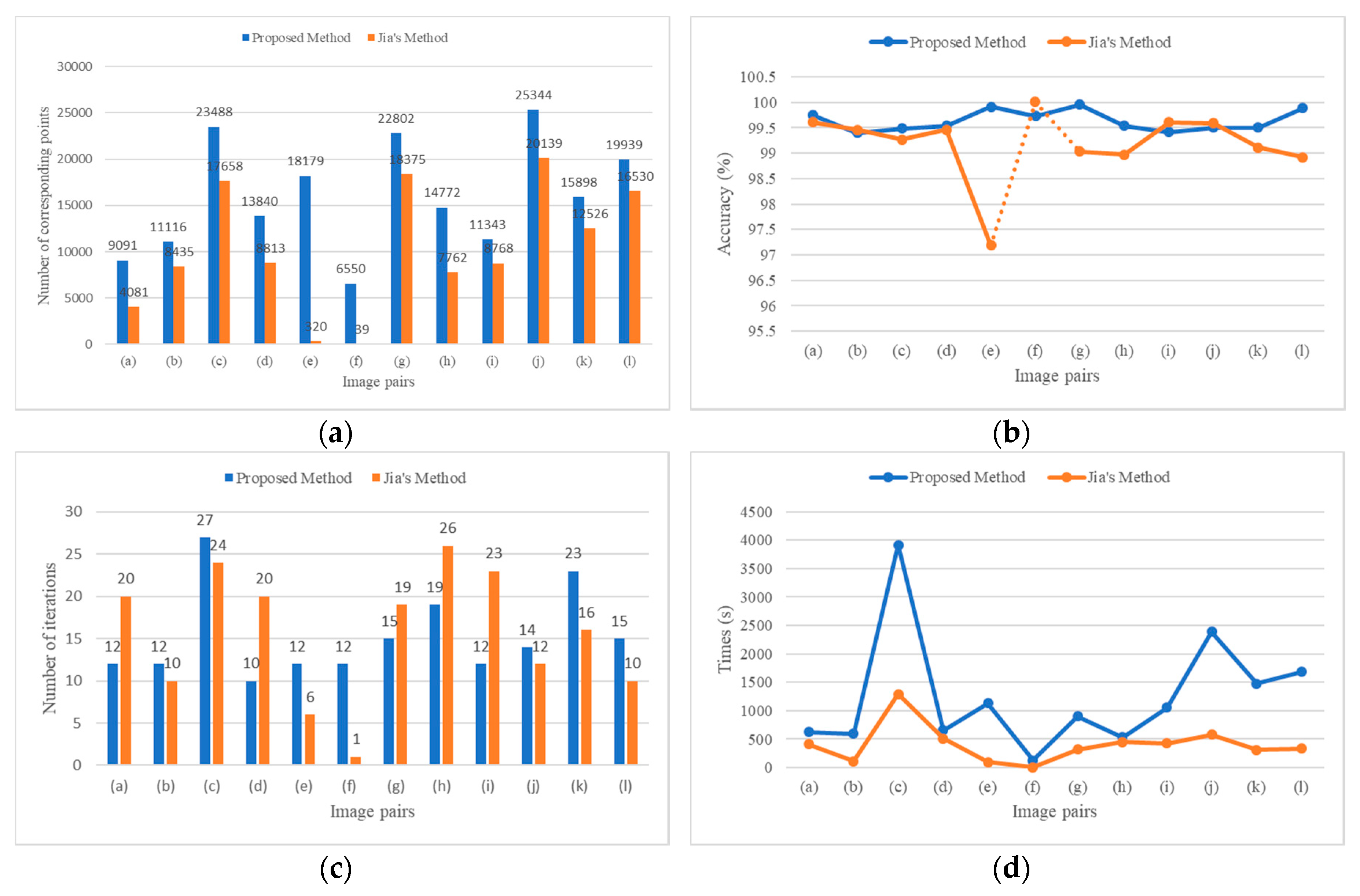

3.3. Comparison with Jia’s Method

4. Conclusions

Author Contributions

Funding

Acknowledgments

Conflicts of Interest

References

- Wang, D.; Liu, H.; Cheng, X. A miniature binocular endoscope with local feature matching and stereo matching for 3d measurement and 3d reconstruction. Sensors 2018, 18, 2243.

- Yang, W.; Li, X.; Yang, B.; Fu, Y. A novel stereo matching algorithm for digital surface model (DSM) generation in water areas. Remote Sens. 2020, 12, 870.

- Su, N.; Yan, Y.; Qiu, M.; Zhao, C.; Wang, L. Object-based dense matching method for maintaining structure characteristics of linear buildings. Sensors 2018, 18, 1035.

- Lhuillier, M.; Quan, L. Match propagation for image-based modeling and rendering. IEEE Trans. Pattern Anal. Mach. Intell. 2002, 24, 1140–1146.

- Tola, E.; Lepetit, V.; Fua, P. A fast local descriptor for dense matching. In Proceedings of the 2008 26th IEEE Conference on Computer Vision and Pattern Recognition, Anchorage, AK, USA, 23–28 June 2008; pp. 1–15.

- Gruen, A. Development and Status of Image Matching in Photogrammetry. Photogramm. Rec. 2012, 27, 36–57.

- Yu, Z.; Guo, X.; Ling, H.; Lumsdaine, A.; Yu, J. Line assisted light field triangulation and stereo matching. In Proceedings of the IEEE International Conference on Computer Vision, Sydney, Australia, 1–8 December 2013; pp. 2792–2799.

- Revaud, J.; Weinzaepfel, P.; Harchaoui, Z.; Schmid, C. DeepMatching: Hierarchical Deformable Dense Matching. Int. J. Comput. Vis. 2016, 120, 300–323.

- Dong, X.; Shen, J.; Shao, L. Hierarchical Superpixel-to-Pixel Dense Matching. IEEE Trans. Circuits Syst. Video Technol. 2017, 27, 2518–2526.

- Jia, D.; Zhao, M.; Cao, J. FDM: Fast dense matching based on sparse matching. Signal Image Video Process. 2020, 14, 295–303.

- Zhang, K.; Sheng, Y.; Ye, C. Stereo image matching for vehicle-borne mobile mapping system based on digital parallax model. Int. J. Veh. Technol. 2011, 2011, 1–11.

- Laraqui, M.; Saaidi, A.; Mouhib, A.; Abarkan, M. Images Matching Using Voronoï Regions Propagation. 3D Res. 2015, 6, 1–16.

- Khazaei, H.; Mohades, A. Fingerprint matching algorithm based on voronoi diagram. In Proceedings of the 2008 International Conference on Computational Sciences and Its Applications, Perugia, Italy, 30 June–3 July 2008; pp. 433–440.

- Wu, J.; Wan, Y.; Chiang, Y.Y.; Fu, Z.; Deng, M. A Matching Algorithm Based on Voronoi Diagram for Multi-Scale Polygonal Residential Areas. IEEE Access 2018, 6, 4904–4915.

- Soleymani, R.; Chehel Amirani, M. A hybrid fingerprint matching algorithm using Delaunay triangulation and Voronoi diagram. In Proceedings of the 20th Iranian Conference on Electrical Engineering (ICEE2012), Tehran, Iran, 15–17 May 2012; pp. 752–757.

- Jiang, S.; Jiang, W. Reliable image matching via photometric and geometric constraints structured by Delaunay triangulation. ISPRS J. Photogramm. Remote Sens. 2019, 153, 1–20.

- Zhu, Q.; Wu, B.; Tian, Y. Propagation strategies for stereo image matching based on the dynamic triangle constraint. ISPRS J. Photogramm. Remote Sens. 2007, 62, 295–308.

- Li, T.; Sui, H.T.; Wu, C.K. Dense Stereo Matching Based on Propagation with a Voronoi Diagram. In Proceedings of the Third Indian Conference on Computer Vision, Graphics & Image Processing, Ahmadabad, India, 16–18 December 2002.

- Finch, A.M.; Wilson, R.C.; Hancock, E.R. Matching delaunay triangulations by probabilistic relaxation. In International Conference on Computer Analysis of Images and Patterns; Springer: Berlin/Heidelberg, Germany, 1995; Volume 970, pp. 350–358.

- Lhuillier, M.; Quan, L. Image interpolation by joint view triangulation. In Proceedings of the 1999 IEEE Computer Society Conference on Computer Vision and Pattern Recognition, Fort Collins, CO, USA, 23–25 June 1999; Volume 2, pp. 139–145.

- Sedaghat, A.; Ebadi, H.; Mokhtarzade, M. Image Matching of Satellite Data Based on Quadrilateral Control Networks. Photogramm. Rec. 2012, 27, 423–442.

- Liu, Z.; An, J.; Jing, Y. A simple and robust feature point matching algorithm based on restricted spatial order constraints for aerial image registration. IEEE Trans. Geosci. Remote Sens. 2012, 50, 514–527.

- Zhao, M.; An, B.; Wu, Y.; Chen, B.; Sun, S. A Robust delaunay triangulation matching for multispectral/multidate remote sensing image registration. IEEE Geosci. Remote Sens. Lett. 2015, 12, 711–715.

- Ma, J.; Ahuja, N. Region Correspondence by Global Configuration Matching and Progressive Delaunay Triangulation. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Hilton Head Island, SC, USA, 13–15 June 2000; Volume 2, pp. 637–642.

- Guo, X.; Cao, X. Good match exploration using triangle constraint. Pattern Recognit. Lett. 2012, 33, 872–881.

- Zhu, Q.; Zhao, J.; Lin, H.; Gong, J. Triangulation of well-defined points as a constraint for reliable image matching. Photogramm. Eng. Remote Sens. 2005, 71, 1063–1069.

- Zhu, Q.; Zhang, Y.; Wu, B.; Zhang, Y. Multiple close-range image matching based on a self-adaptive triangle constraint. Photogramm. Rec. 2010, 25, 437–453.

- Wu, B.; Zhang, Y.; Zhu, Q. Integrated point and edge matching on poor textural images constrained by self-adaptive triangulations. ISPRS J. Photogramm. Remote Sens. 2012, 68, 40–55.

- Jia, D.; Wu, S.; Zhao, M. Dense matching for wide baseline images based on equal proportion of triangulation. Electron. Lett. 2019, 55, 380–382.

- Lowe, D.G. Distinctive image features from scale-invariant keypoints. Int. J. Comput. Vis. 2004, 60, 91–110.

- Fischler, M.A.; Bolles, R.C. Random sample consensus: A paradigm for model fitting with applications to image analysis and automated cartography. Commun. ACM 1981, 24, 381–395.

- Lowe, D.G. Object recognition from local scale-invariant features. In Proceedings of the Seventh IEEE International Conference on Computer Vision, Kerkyra, Greece, 20–27 September 1999; Volume 2, pp. 1150–1157.

- Sun, Y.; Zhao, L.; Huang, S.; Yan, L.; Dissanayake, G. L2-SIFT: SIFT feature extraction and matching for large images in large-scale aerial photogrammetry. ISPRS J. Photogramm. Remote Sens. 2014, 91, 1–16.

- Wang, E.; Jiao, J.; Yang, J.; Liang, D.; Tian, J. Tri-SIFT: A triangulation-based detection and matching algorithm for fish-eye images. Information 2018, 9, 299.

- Morel, J.M.; Yu, G. ASIFT: A new framework for fully affine invariant image comparison. SIAM J. Imaging Sci. 2009, 2, 438–469.

- Tola, E.; Lepetit, V.; Fua, P. DAISY: An efficient dense descriptor applied to wide-baseline stereo. IEEE Trans. Pattern Anal. Mach. Intell. 2010, 32, 815–830.

- Wang, Z.; Wu, F.; Hu, Z. MSLD: A robust descriptor for line matching. Pattern Recognit. 2009, 42, 941–953.

- Moisan, L.; Stival, B. A Probabilistic Criterion to Detect Rigid Point Matches Between Two Images and Estimate the Fundamental Matrix. Int. J. Comput. Vis. 2004, 57, 201–218.

| Parameters | | | | | | |
|---|---|---|---|---|---|---|
| Value | | 1.8 | 0.009 | 0.005 | 0.55 | 50 |
| Range | 0.5–1.5 | 1.2–2 | 0.006–0.015 | 0.002–0.015 | 0.45–0.75 | 50–20 |
| Step | 0.1 | 0.1 | 0.001 | 0.001 | 0.05 | −5 |

| Parameters | | | | | | | | | | |
|---|---|---|---|---|---|---|---|---|---|---|
| Value | 0.8 | 1.8 | 0.011 | 0.005 | 0.55 | 30 | 0.45 | 0.25 | 0.15 | 0.15 |

| Images | Seed Points | Stage | Number of Iterations | Number of Midpoints | Number of Intersections | Total | Wrong | Accuracy (%) | Time (s) |
|---|---|---|---|---|---|---|---|---|---|
| (a) | 911 | 1 | 13 | 2760 | 1767 | 5436 | 23 | 99.58 | 83 |
| | | 2 | 12 | 6016 | 2185 | 9091 | 23 | 99.75 | 623 |
| (b) | 213 | 1 | 14 | 4749 | 2674 | 7634 | 24 | 99.69 | 64 |
| | | 2 | 12 | 8014 | 2905 | 11,116 | 67 | 99.40 | 591 |
| (c) | 635 | 1 | 24 | 10,592 | 2863 | 14,088 | 60 | 99.57 | 852 |
| | | 2 | 27 | 19,069 | 3826 | 23,488 | 119 | 99.49 | 3916 |
| (d) | 463 | 1 | 13 | 6794 | 4613 | 11,868 | 56 | 99.53 | 211 |
| | | 2 | 10 | 8530 | 4865 | 13,840 | 63 | 99.54 | 655 |
| (e) | 302 | 1 | 18 | 10,028 | 3952 | 14,280 | 40 | 99.72 | 336 |
| | | 2 | 12 | 13,838 | 4060 | 18,179 | 17 | 99.91 | 1134 |
| (f) | 39 | 1 | 21 | 3415 | 1855 | 5307 | 24 | 99.55 | 27 |
| | | 2 | 12 | 4581 | 1937 | 6550 | 18 | 99.73 | 125 |
| (g) | 750 | 1 | 37 | 2351 | 2119 | 5218 | 58 | 98.89 | 117 |
| | | 2 | 15 | 16,468 | 5690 | 22,802 | 8 | 99.96 | 904 |
| (h) | 190 | 1 | 14 | 2988 | 1920 | 5096 | 46 | 99.09 | 80 |
| | | 2 | 19 | 10,139 | 4516 | 14,772 | 67 | 99.54 | 536 |
| (i) | 89 | 1 | 18 | 1047 | 1226 | 2360 | 15 | 99.36 | 108 |
| | | 2 | 12 | 9090 | 2195 | 11,343 | 66 | 99.42 | 1058 |
| (j) | 192 | 1 | 21 | 12,994 | 5620 | 18,804 | 133 | 99.29 | 1232 |
| | | 2 | 14 | 19,509 | 5684 | 25,344 | 127 | 99.50 | 2392 |
| (k) | 233 | 1 | 14 | 6265 | 3729 | 10,225 | 53 | 99.48 | 404 |
| | | 2 | 23 | 11,533 | 4162 | 15,898 | 81 | 99.50 | 1479 |
| (l) | 724 | 1 | 18 | 10,958 | 3667 | 15,347 | 42 | 99.79 | 649 |
| | | 2 | 15 | 15,591 | 3640 | 19,939 | 23 | 99.89 | 1683 |
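The Accuracy (%) column in the table above appears consistent with the share of correct matches, (Total − Wrong) / Total, expressed as a percentage. The short Python sketch below recomputes it for a few rows under that assumption; the function name `accuracy_percent` and the rounding to two decimals are illustrative choices, not taken from the article.

```python
def accuracy_percent(total: int, wrong: int) -> float:
    """Percentage of correct matches, rounded to two decimals.

    Assumes accuracy = (total - wrong) / total, which is inferred
    from the table values rather than stated by the authors.
    """
    if total <= 0:
        raise ValueError("total must be positive")
    return round(100.0 * (total - wrong) / total, 2)


# (total, wrong, reported accuracy) copied from rows of the table.
rows = {
    "(a) stage 1": (5436, 23, 99.58),
    "(g) stage 1": (5218, 58, 98.89),
    "(g) stage 2": (22802, 8, 99.96),
}

for name, (total, wrong, reported) in rows.items():
    print(f"{name}: computed {accuracy_percent(total, wrong):.2f}, reported {reported:.2f}")
```

For these three rows the recomputed values agree with the reported ones to two decimals.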

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Share and Cite

Wang, J.; Zhang, N.; Wu, X.; Wang, W. Hierarchical Point Matching Method Based on Triangulation Constraint and Propagation. ISPRS Int. J. Geo-Inf. 2020, 9, 347. https://0-doi-org.brum.beds.ac.uk/10.3390/ijgi9060347
