Mission Flight Planning of RPAS for Photogrammetric Studies in Complex Scenes

Abstract

1. Introduction

- Conventional aerial photogrammetry usually uses metric cameras mounted on planes and assisted by high-quality positioning and orientation systems based on Global Navigation Satellite Systems (GNSS) and Inertial Navigation Systems (INS). In this case, the flight configuration is usually defined by flight lines or strips with predefined longitudinal and lateral overlaps. Several studies [17,18,19] describe in more detail the parameters to be considered and how they are calculated. The project requirements set by institutions or customers usually establish ranges of values for these parameters (overlaps, scale variability, etc.) that must be taken into account in flight planning.

- Close-range photogrammetry [20,21,22] involves acquiring photographs at short distances from the object and combines the stereoscopic normal case (parallel axes perpendicular to the object plane) with convergent images (convergent axes). This acquisition mode is the most widely used in terrestrial photogrammetry and in RPAS, where lightweight, nonmetric cameras are common. For example, two studies [23,24] describe the recommendations of the International Committee of Architectural Photogrammetry (CIPA), known as the 3 × 3 rules, for planning architectural photogrammetric projects with nonmetric cameras. The geometric rules recommend multiple all-around photographic coverage, that is, a ring of images around the object that overlap each other by more than 50%, together with normal stereoscopic images for 3D restitution.

- In the normal case, the tendency is to use high overlaps (both longitudinal and lateral) because of their advantages in photogrammetric processing: a geometrically more robust block that gives a better estimation of the self-calibration parameters, fewer occlusions, and the possibility of using only the central part of each image, which has less radial distortion and relief displacement. A typical feature of mission plans for UAS photogrammetry, for instance, is the large forward (80%) and cross (60–80%) overlap used to compensate for aircraft instability [1]. In any case, a photogrammetric project should balance the number of images against the cost of processing.

- In the case of SfM, a large number of convergent photographs is used to cover the object redundantly from several points of view.

- Variation of the distance to the object (height differences in vertical shots) can cause problems in stereoscopic coverage (normal case) or large differences in ground sample distance (GSD). This variation also affects SfM, because large scale differences between images make feature matching less reliable.

- Image motion during capture: this issue is especially important when using cruising acquisition mode or fixed-wing RPAS.

- The presence of obstacles and restricted areas.

- The distance and height of the take-off point.
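
The relation between overlaps, camera-object distance, and GSD mentioned above can be illustrated with a short sketch. It assumes a nadir-looking frame camera over flat terrain and the usual pinhole relations. The camera values, class, and function names are hypothetical and are not taken from any of the applications or studies cited here.

```python
from dataclasses import dataclass


@dataclass
class Camera:
    """Hypothetical frame camera; all values used with it are illustrative."""
    focal_mm: float      # focal length
    sensor_w_mm: float   # sensor width (across the flight direction)
    sensor_h_mm: float   # sensor height (along the flight direction)
    image_w_px: int      # image width in pixels


def gsd_m(cam, distance_m):
    """Ground sample distance (m/pixel) at a given camera-object distance."""
    pixel_mm = cam.sensor_w_mm / cam.image_w_px
    return pixel_mm * distance_m / cam.focal_mm


def footprint_m(cam, distance_m):
    """Ground footprint (across, along) in metres, assuming flat terrain."""
    scale = distance_m / cam.focal_mm
    return cam.sensor_w_mm * scale, cam.sensor_h_mm * scale


def spacing_m(cam, distance_m, forward_overlap, cross_overlap):
    """Distance between exposures (base) and between strips."""
    across, along = footprint_m(cam, distance_m)
    base = along * (1.0 - forward_overlap)
    strip = across * (1.0 - cross_overlap)
    return base, strip


def effective_overlap(planned_overlap, planned_height_m, terrain_rise_m):
    """Forward overlap obtained where the terrain rises under a level strip.

    The base stays fixed while the footprint shrinks in proportion to the
    remaining height above ground, so the overlap drops.
    """
    actual_height_m = planned_height_m - terrain_rise_m
    return 1.0 - (1.0 - planned_overlap) * planned_height_m / actual_height_m


# Illustrative values only (assumed camera, 100 m above ground).
cam = Camera(focal_mm=8.8, sensor_w_mm=13.2, sensor_h_mm=8.8, image_w_px=5472)
base, strip = spacing_m(cam, 100.0, forward_overlap=0.80, cross_overlap=0.60)
reduced = effective_overlap(0.80, 100.0, terrain_rise_m=40.0)
```

With these assumed values, an 80% planned forward overlap falls to roughly 67% where the terrain rises 40 m under the flight line, which is the kind of effect that motivates considering height variation during planning.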

2. Mission Planning Applications

- Integrated map: Provides cartographic information to be used as a base map. Depending on the system, the base map can be a vector map, such as OpenStreetMap (e.g., AscTec Navigator) (Figure 1a), with the possibility of uploading a georeferenced image for offline use. However, most applications use georeferenced images downloaded from Internet map servers (e.g., Google or Bing) as base maps (Figure 1b). An Internet connection is required to download these images, but once the images of the study zone have been downloaded the applications can run offline. Compared with vector maps, georeferenced image maps provide additional information for planning (e.g., identification of obstacles such as trees and electricity lines).

- 3D visualization: Provides 3D views of the study zone (Figure 1c). This feature is useful for analyzing the terrain of the flight zone using a previously available Digital Elevation Model (DEM). For example, UgCS allows 3D building models to be imported so that their volume can be considered and possible collisions avoided (mainly in cities).

- Block flight planning: This is the common planning mode, implemented in all systems. The study zone is defined by a rectangle or a polygon (Figure 1d), and the flight parameters can be derived from these limits. In addition, some tools use DEMs to plan flights (e.g., eMotion X and UgCS) (Figure 1c). Another important feature is the calculation of image footprints: the application calculates and displays the projection of the photographs onto the ground, which provides information about coverage, overlaps, etc. This projection is usually computed either in 2D, assuming flat terrain, or as a true 3D projection onto the terrain using only the four corners of each image.

- Corridor or linear flight planning: This feature allows users to plan flights covering study areas following a specific linear element (roads, rivers, etc.) (Figure 1e).

- Circular or cylindrical flight planning: Circular flights are commonly implemented in all applications while cylindrical flights are simulated by adding several circular flights at different heights. This type of flight allows objects to be covered by convergent photographs (Figure 1f).

- VIM: Vector Integrated Map.

- OIM: Orthoimages Integrated Map.

- 3DV: 3D Visualization.

- RBP: Block flight Planning (defined by a rectangle).

- PBP: Block flight Planning (defined by a polygon).

- DEM: Digital Elevation Model.

- CFP: Corridor Flight Planning.

- CCFP: Circular-Cylindrical Flight Planning.
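
The footprint calculation described under block flight planning (projection of the four image corners onto flat terrain) can be sketched as follows. This is a minimal illustration, not the method of any listed application. It assumes a pinhole camera, a local east/north frame, a tilt applied in the flight direction, and a heading measured clockwise from north; the function name and parameters are hypothetical.

```python
import math


def footprint_flat(e0, n0, height_agl_m, focal_mm, sensor_w_mm, sensor_h_mm,
                   tilt_deg=0.0, heading_deg=0.0):
    """Project the four image corners onto flat ground.

    Returns a list of (east, north) tuples. The camera frame has x to the
    right, y forward, and the optical axis pointing down; tilt_deg rotates
    the optical axis forward, away from nadir.
    """
    t = math.radians(tilt_deg)
    a = math.radians(heading_deg)
    half_w, half_h = sensor_w_mm / 2.0, sensor_h_mm / 2.0
    corners = []
    for x, y in [(-half_w, -half_h), (half_w, -half_h),
                 (half_w, half_h), (-half_w, half_h)]:
        # Ray through the corner after tilting about the camera x axis.
        forward = y * math.cos(t) + focal_mm * math.sin(t)
        down = focal_mm * math.cos(t) - y * math.sin(t)
        if down <= 0.0:
            raise ValueError("corner ray points at or above the horizon")
        scale = height_agl_m / down
        right_m, forward_m = x * scale, forward * scale
        # Rotate from the flight frame into east/north using the heading.
        east = e0 + right_m * math.cos(a) + forward_m * math.sin(a)
        north = n0 - right_m * math.sin(a) + forward_m * math.cos(a)
        corners.append((east, north))
    return corners


# Illustrative values: nadir image and a 30-degree forward tilt.
nadir = footprint_flat(0.0, 0.0, 100.0, 8.8, 13.2, 8.8)
tilted = footprint_flat(0.0, 0.0, 100.0, 8.8, 13.2, 8.8, tilt_deg=30.0)
```

For the nadir case the footprint is a rectangle; with a forward tilt it becomes a trapezoid whose far edge is wider than its near edge. A projection over real terrain would replace the flat-ground intersection with an intersection against a DEM.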

3. Method

- Great variability of camera-object distances (differences in height in the case of vertical flights and depth in vertical elements such as buildings).

- Presence of buildings or vertical objects to be surveyed.

- Presence of occlusions or obstacles that block the direct view of the object (need for oblique images).

- Requirement of stereo restitution.

- Objects to be studied that are elevated above the ground (not included in the DEM).

- Base map using a service of orthoimages and/or DEM.

- 3D visualization.

- Block and corridor flights considering a DEM.

- Use of tilted images in planning.

- Use of linear elements as real elements with physical characteristics (area, direction, inclination, etc.).

- Circular and cylindrical flight planning considering CIPA recommendations for architectural surveys.

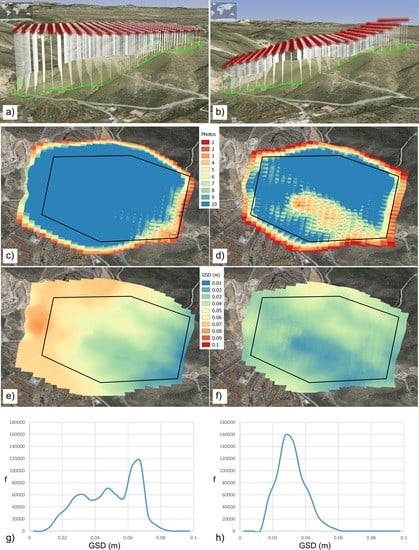

- Quality control by determining photograph coverage maps and the minimum, mean, and maximum GSD (considering the DEM) before and after flight execution.
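
The circular and cylindrical planning item above can be illustrated with a small sketch that places camera stations on rings around an object so that neighbouring images overlap by more than a chosen fraction, in the spirit of the CIPA recommendation of more than 50%. The overlap model is a simplification assumed for this example: the image footprint width on the object surface is given as an input, and overlap is measured along the object circumference. The function name and parameters are hypothetical.

```python
import math


def ring_waypoints(cx, cy, object_radius_m, standoff_m, heights_m,
                   footprint_width_m, min_overlap=0.5):
    """Return (east, north, height, heading_deg) camera stations.

    One ring is generated per entry in heights_m, so several heights give a
    cylindrical flight. Headings are clockwise from north and point at the
    object axis.
    """
    if not 0.0 <= min_overlap < 1.0:
        raise ValueError("min_overlap must be in [0, 1)")
    # Largest arc between image centres that still keeps the overlap.
    max_arc_m = footprint_width_m * (1.0 - min_overlap)
    circumference_m = 2.0 * math.pi * object_radius_m
    # floor + 1 keeps the arc strictly below the limit (overlap strictly above).
    n_images = max(3, math.floor(circumference_m / max_arc_m) + 1)
    flight_radius_m = object_radius_m + standoff_m
    waypoints = []
    for height in heights_m:
        for k in range(n_images):
            azimuth = 2.0 * math.pi * k / n_images
            east = cx + flight_radius_m * math.sin(azimuth)
            north = cy + flight_radius_m * math.cos(azimuth)
            heading = (math.degrees(azimuth) + 180.0) % 360.0
            waypoints.append((east, north, height, heading))
    return waypoints


# Illustrative values: 10 m radius object, 15 m standoff, two ring heights.
plan = ring_waypoints(0.0, 0.0, 10.0, 15.0, [5.0, 10.0], footprint_width_m=20.0)
```

With these assumed values each ring holds seven stations, giving an arc of about 9 m between image centres against a 20 m footprint, that is, an overlap slightly above 50%.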

4. Software Development

- Compatibility with all operating systems where Java is available.

- A basic DEM obtained from the Shuttle Radar Topography Mission (SRTM), along with procedures for calculating intersections with the terrain (ray casting).

- Import and export tools for the main geospatial data formats (e.g., GeoTIFF, Shapefile).

- Connection to Web Map Service (WMS), Web Feature Service (WFS), and Web Coverage Service (WCS) servers, and to maps from Bing Maps and OpenStreetMap.

- Use of the WGS84 reference system (system used in RPAS).

- Use of UTM projection in area and distance calculations.

- Use of the Earth Gravitational Model 1996 (EGM96) to determine orthometric heights.
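
The ray casting mentioned in the list above (intersecting an image ray with the terrain) can be sketched in a few lines. The following is a generic ray-marching illustration with bisection refinement over a small gridded DEM. It is not the procedure of any particular library; the grid layout, function names, step size, and range limit are assumptions made for this example, and a toy grid stands in for SRTM data.

```python
import math


def dem_height(dem, cell, x, y):
    """Bilinear terrain height at (x, y) for dem[row][col] with origin (0, 0).

    Rows run along y and columns along x. Points outside the grid are
    clamped to the border cells (an assumption of this sketch).
    """
    col, row = x / cell, y / cell
    c0 = min(max(math.floor(col), 0), len(dem[0]) - 2)
    r0 = min(max(math.floor(row), 0), len(dem) - 2)
    fc = min(max(col - c0, 0.0), 1.0)
    fr = min(max(row - r0, 0.0), 1.0)
    near = dem[r0][c0] * (1 - fc) + dem[r0][c0 + 1] * fc
    far = dem[r0 + 1][c0] * (1 - fc) + dem[r0 + 1][c0 + 1] * fc
    return near * (1 - fr) + far * fr


def cast_ray(dem, cell, origin, direction, step=1.0, max_range=5000.0):
    """Return the first (x, y, z) where the ray meets the terrain, or None."""
    ox, oy, oz = origin
    norm = math.sqrt(sum(d * d for d in direction))
    dx, dy, dz = (d / norm for d in direction)

    def below(t):
        # True when the ray point at distance t is on or under the terrain.
        x, y, z = ox + t * dx, oy + t * dy, oz + t * dz
        return z <= dem_height(dem, cell, x, y)

    if below(0.0):
        return None  # the camera itself is under the terrain
    previous, t = 0.0, step
    while t <= max_range:
        if below(t):
            lo, hi = previous, t
            for _ in range(40):  # bisection inside the bracketing step
                mid = 0.5 * (lo + hi)
                if below(mid):
                    hi = mid
                else:
                    lo = mid
            t_hit = 0.5 * (lo + hi)
            return (ox + t_hit * dx, oy + t_hit * dy, oz + t_hit * dz)
        previous, t = t, t + step
    return None


# Toy terrain: a plane rising 1 m per metre towards +x (10 m cells).
slope = [[0.0, 10.0, 20.0, 30.0, 40.0] for _ in range(3)]
hit = cast_ray(slope, 10.0, origin=(0.0, 10.0, 50.0), direction=(1.0, 0.0, -1.0))
```

A fixed marching step can miss terrain features narrower than the step, so the step would normally be chosen in relation to the DEM cell size.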

4.1. Conventional or Block Flight

4.2. Corridor Flight

4.3. Combined Flight

4.4. Polygon Extrusion Flight

5. Applications and Results

5.1. Block Flight Project

5.2. Corridor Flight Project

5.3. Vertical Object Case

5.4. Terrestrial Photogrammetry Using Masts

5.5. Quality Control of the RPAS Project

6. Discussion

- Flight adjusted to DEM by strips (DEM-S).

- Corridor Flight Planning by Objects (considering interest trajectories) (CFP-O).

- Block and Corridor Combined Planning (B+C-CP).

- Polygon Extrusion Planning (PEP).

- Calculation of Coverage Maps using real Footprints projected onto the terrain (CM-RF).

- Calculation of GSD value at the center of the photographs (C-GSD).
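
The last two items (coverage maps from projected footprints and the GSD at the photograph centers) can be illustrated with a minimal sketch. It assumes the footprints are already available as ground polygons and that the terrain height under each photograph center is known, for example from a DEM. The function names, grid layout, and sample values are hypothetical.

```python
def point_in_polygon(x, y, polygon):
    """Even-odd (ray crossing) test for a point against a simple polygon."""
    inside = False
    n = len(polygon)
    for i in range(n):
        x1, y1 = polygon[i]
        x2, y2 = polygon[(i + 1) % n]
        if (y1 > y) != (y2 > y):
            x_cross = x1 + (y - y1) * (x2 - x1) / (y2 - y1)
            if x < x_cross:
                inside = not inside
    return inside


def coverage_map(footprints, x_min, y_min, cell, n_cols, n_rows):
    """Count, for each grid cell centre, how many footprints contain it."""
    grid = [[0] * n_cols for _ in range(n_rows)]
    for r in range(n_rows):
        yc = y_min + (r + 0.5) * cell
        for c in range(n_cols):
            xc = x_min + (c + 0.5) * cell
            grid[r][c] = sum(point_in_polygon(xc, yc, fp) for fp in footprints)
    return grid


def centre_gsd_stats(camera_heights_m, ground_heights_m, focal_mm, pixel_um):
    """Minimum, mean, and maximum GSD (m) at the photograph centres."""
    gsds = [pixel_um * 1e-6 * (hc - hg) / (focal_mm * 1e-3)
            for hc, hg in zip(camera_heights_m, ground_heights_m)]
    return min(gsds), sum(gsds) / len(gsds), max(gsds)


# Two overlapping square footprints sampled on a 5 m grid (toy values).
footprints = [
    [(0, 0), (10, 0), (10, 10), (0, 10)],
    [(5, 0), (15, 0), (15, 10), (5, 10)],
]
cover = coverage_map(footprints, 0.0, 0.0, 5.0, n_cols=3, n_rows=2)
stats = centre_gsd_stats([200.0, 200.0], [100.0, 120.0], focal_mm=8.8, pixel_um=2.4)
```

In this toy case the middle column of the grid is seen by both photographs and the outer columns by one each. Running the same functions with planned and then with executed camera positions gives a simple before-and-after comparison.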

7. Conclusions

Author Contributions

Funding

Conflicts of Interest

References

1. Colomina, I.; Molina, P. Unmanned aerial systems for photogrammetry and remote sensing: A review. ISPRS J. Photogramm. Remote Sens. 2014, 92, 79–97.

2. Nex, F.; Remondino, F. UAV for 3D mapping applications: A review. Appl. Geomat. 2014, 6, 1–15.

3. Pajares, G. Overview and current status of remote sensing applications based on unmanned aerial vehicles (UAVs). Photogramm. Eng. Remote Sens. 2015, 81, 281–330.

4. Xiang, T.; Xia, G.; Zhang, L. Mini-unmanned aerial vehicle-based remote sensing: Techniques, applications, and prospects. IEEE Geosci. Remote Sens. Mag. 2019, 7, 29–63.

5. Sharma, J.B. (Ed.) Applications of Small Unmanned Aircraft Systems: Best Practices and Case Studies; CRC Press: New York, NY, USA, 2019.

6. Yao, H.; Qin, R.; Chen, X. Unmanned aerial vehicle for remote sensing applications—A review. Remote Sens. 2019, 11, 1443.

7. Weibel, R.; Hansman, R.J. Safety considerations for operation of different classes of UAVs in the NAS. In AIAA 4th Aviation Technology, Integration and Operations (ATIO) Forum, 6421; American Institute of Aeronautics and Astronautics: Chicago, IL, USA, 2004.

8. Arjomandi, M.; Agostino, S.; Mammone, M.; Nelson, M.; Zhou, T. Classification of unmanned aerial vehicles. Mech. Eng. 2006, 3016, 1–48.

9. Gupta, S.G.; Ghonge, M.M.; Jawandhiya, P.M. Review of unmanned aircraft system (UAS). Int. J. Adv. Res. Comput. Eng. Technol. (IJARCET) 2013, 2, 1646–1658.

10. Ren, L.; Castillo-Effen, M.; Yu, H.; Johnson, E.; Yoon, Y.; Nakamura, T.; Ippolito, C.A. Small Unmanned Aircraft System (sUAS) categorization framework for low altitude traffic services. In Proceedings of the IEEE/AIAA 36th Digital Avionics Systems Conference (DASC), St. Petersburg, FL, USA, 17–21 September 2017.

11. Boletín Oficial del Estado (BOE). Real decreto 1036/2017 de 15 de diciembre. Bol. Estado 2017, 316, 129609–129641.

12. Hassanalian, M.; Abdelkefi, A. Classifications, applications, and design challenges of drones: A review. Prog. Aerosp. Sci. 2017, 91, 99–131.

13. Toth, C.; Jóźków, G. Remote sensing platforms and sensors: A survey. ISPRS J. Photogramm. Remote Sens. 2016, 115, 22–36.

14. Global Drone Regulations Database. Available online: https://www.droneregulations.info (accessed on 5 May 2020).

15. Gandor, F.; Rehak, M.; Skaloud, J. Photogrammetric mission planner for RPAS. ISPRS Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci. 2015, XL-1/W4, 61–65.

16. Karara, H.M. Close-range photogrammetry: Where are we and where are we heading? Photogramm. Eng. Remote Sens. 1985, 51, 537–544.

17. Schwidefsky, K.; Ackermann, F. Photogrammetrie; BG Teubner: Stuttgart, Germany, 1976.

18. Warner, W.S.; Graham, R.W.; Read, R.E. Small format aerial photography. ISPRS J. Photogramm. Remote Sens. 1996, 51, 316–317.

19. Kraus, K. Photogrammetry: Geometry from Images and Laser Scans; Walter de Gruyter: Berlin, Germany, 2011.

20. Atkinson, K.B. Close Range Photogrammetry and Machine Vision; Whittles Publishing: Caithness, Scotland, 1996.

21. Luhmann, T.; Robson, S.; Kyle, S.; Harley, I. Close Range Photogrammetry: Principles, Techniques and Applications; John Wiley & Sons: New York, NY, USA, 2006.

22. Fraser, C. Advances in close-range photogrammetry. In Photogrammetric Week; University of Stuttgart: Stuttgart, Germany, 2015; pp. 257–268.

23. Waldhäusl, P.; Ogleby, C.L. 3 × 3 rules for simple photogrammetric documentation of architecture. ISPRS Int. Arch. Photogramm. Remote Sens. 1994, 30, 426–429.

24. CIPA. Photogrammetric Capture, the ‘3 × 3’ Rules. Available online: https://www.cipaheritagedocumentation.org/wp-content/uploads/2017/02/CIPA__3x3_rules__20131018.pdf (accessed on 5 May 2020).

25. Ullman, S. The interpretation of structure from motion. Proc. R. Soc. Lond. Ser. B 1979, 203, 405–426.

26. Koenderink, J.J.; Van Doorn, A.J. Affine structure from motion. J. Opt. Soc. Am. A 1991, 8, 377–385.

27. Lowe, D.G. Distinctive image features from scale-invariant keypoints. Int. J. Comput. Vis. 2004, 60, 91–110.

28. Brutto, M.L.; Meli, P. Computer vision tools for 3D modelling in archaeology. In Proceedings of the International Conference on Cultural Heritage, Lemesos, Cyprus, 29 October–3 November 2012.

29. Szeliski, R. Computer Vision: Algorithms and Applications. Texts in Computer Science; Springer: London, UK, 2010.

30. Westoby, M.J.; Brasington, J.; Glasser, N.F.; Hambrey, M.J.; Reynolds, J.M. ‘Structure-from-motion’ photogrammetry: A low-cost, effective tool for geoscience applications. Geomorphology 2012, 179, 300–314.

31. Damilano, L.; Guglieri, G.; Quagliotti, F.; Sale, I.; Lunghi, A. Ground control station embedded mission planning for UAS. J. Intell. Robot. Syst. 2013, 69, 241–256.

32. Pepe, M.; Fregonese, L.; Scaioni, M. Planning airborne photogrammetry and remote-sensing missions with modern platforms and sensors. Eur. J. Remote Sens. 2018, 51, 412–435.

33. Intel Asctec Navigator. Available online: https://downloadcenter.intel.com/download/26931/Downloads-for-Intel-Falcon-8-UAS (accessed on 5 May 2020).

34. Mikrokopter Tool. Available online: https://wiki.mikrokopter.de/en/Software (accessed on 5 May 2020).

35. Drone Deploy. Available online: https://www.dronedeploy.com/ (accessed on 5 May 2020).

36. ArduPilot Mission Planner. Available online: https://ardupilot.org/planner/ (accessed on 5 May 2020).

37. UgCS. Available online: https://www.ugcs.com/ (accessed on 5 May 2020).

38. Hernandez-Lopez, D.; Felipe-Garcia, B.; Gonzalez-Aguilera, D.; Arias-Perez, B. An automatic approach to UAV flight planning and control for photogrammetric applications. Photogramm. Eng. Remote Sens. 2013, 79, 87–98.

39. Israel, M.; Mende, M.; Keim, S. UAVRC, a generic MAV flight assistance software. ISPRS Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci. 2015, 40, 287–291.

40. Mera Trujillo, M.; Darrah, M.; Speransky, K.; DeRoos, B.; Wathen, M. Optimized flight path for 3D mapping of an area with structures using a multirotor. In Proceedings of the International Conference on Unmanned Aircraft Systems (ICUAS), Arlington, VA, USA, 7–10 June 2016; pp. 905–910.

41. Martin, R.A.; Blackburn, L.; Pulsipher, J.; Franke, K.; Hedengren, J.D. Potential benefits of combining anomaly detection with view planning for UAV infrastructure modeling. Remote Sens. 2017, 9, 434.

42. DJI Ground Station Pro. Available online: https://www.dji.com/es/ground-station-pro (accessed on 5 May 2020).

43. Sensefly eMotion. Available online: https://www.sensefly.com/software/emotion/ (accessed on 5 May 2020).

44. Pix4D Capture. Available online: https://www.pix4d.com/product/pix4dcapture/ (accessed on 5 May 2020).

45. Map Pilot for DJI. Available online: https://support.dronesmadeeasy.com/ (accessed on 5 May 2020).

46. Wolf, P.R.; Dewitt, B.A.; Wilkinson, B.E. Elements of Photogrammetry with Application in GIS, 4th ed.; McGraw-Hill: Boston, MA, USA, 2014.

47. Mikhail, E.M.; Bethel, J.S.; McGlone, J.C. Introduction to Modern Photogrammetry; John Wiley & Sons: New York, NY, USA, 2001.

48. McGlone, J.C. Manual of Photogrammetry, 6th ed.; American Society of Photogrammetry and Remote Sensing: Bethesda, MD, USA, 2013.

49. Appel, A. Some techniques for shading machine renderings of solids. In Proceedings of the AFIPS Spring Joint Computer Conference, Atlantic City, NJ, USA, 30 April–2 May 1968; pp. 37–45.

50. Whitted, T. An improved illumination model for shaded display. In Proceedings of the 6th Annual Conference on Computer Graphics and Interactive Techniques, Seattle, WA, USA, 14–18 July 1980; Volume 23, pp. 343–349.

51. Foley, J.D.; van Dam, A.; Feiner, S.K.; Hughes, J.F. Computer Graphics: Principles and Practice; Addison-Wesley: Boston, MA, USA, 1995.

52. Fan, M.; Tang, M.; Dong, J. A review of real-time terrain rendering techniques. In Proceedings of the 8th International Conference on Computer Supported Cooperative Work in Design, Xiamen, China, 26–28 May 2004; pp. 685–691.

53. Höhle, J. Oblique aerial images and their use in cultural heritage documentation. ISPRS Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci. 2013, XL-5/W2, 349–354.

54. Tonkin, T.N.; Midgley, N.G. Ground-control networks for image based surface reconstruction: An investigation of optimum survey designs using UAV derived imagery and structure-from-motion photogrammetry. Remote Sens. 2016, 8, 786.

55. MFPlanner3D. Available online: https://github.com/jmgl0003/MFPlanner3D (accessed on 5 May 2020).

56. NASA Worldwind. Available online: http://github.com/NASAWorldWind (accessed on 5 May 2020).

57. Trajkovski, K.K.; Grigillo, D.; Petrovic, D. Optimization of UAV flight missions in steep terrain. Remote Sens. 2020, 12, 1293.

58. Cardenal, J.; Pérez, J.L.; Mata, E.; Delgado, J.; Gómez-López, J.M.; Colomo, C.; Mozas, A. Recording and modeling of fortresses and castles with UAS. Some study cases in Jaén (Southern Spain). ISPRS Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci. 2016, XLI-B5, 207–214.

59. Pérez-García, J.L.; Mozas-Calvache, A.T.; Gómez-López, J.M.; Jiménez-Serrano, A. 3D modelling of large archaeological sites using images obtained from masts. Application to Qubbet el-Hawa site (Aswan, Egypt). Archaeol. Prospect. 2019, 26, 121–135.

60. Yang, Y.; Lin, Z.; Liu, F. Stable imaging and accuracy issues of low-altitude unmanned aerial vehicle photogrammetry systems. Remote Sens. 2016, 8, 316.

61. Sanz-Ablanedo, E.; Chandler, J.H.; Rodríguez-Pérez, J.R.; Ordóñez, C. Accuracy of unmanned aerial vehicle (UAV) and SfM photogrammetry survey as a function of the number and location of ground control points used. Remote Sens. 2018, 10, 1606.

| Application | VIM | OIM | 3DV | RBP | PBP | DEM | CFP | CCFP |
|---|---|---|---|---|---|---|---|---|
| DJI GS Pro [42] | ✓ | ✓ | ✓ | ✓ | | | | |
| AscTec Navigator [33] | ✓ | * | ✓ | ✓ | ✓ | ✓ | | |
| eMotion X [43] | ✓ | ✓ | ✓ | ✓ | ✓ | *** | ✓ | |
| MikroKopter Tool [34] | ✓ | ✓ | *** | ✓ | | | | |
| Mission Planner [36] | ✓ | ✓ | ✓ | ✓ | ** | *** | ✓ | |
| DroneDeploy [35] | ✓ | ✓ | ✓ | | | | | |
| Pix4D Capture [44] | ✓ | ✓ | ✓ | ✓ | ✓ | | | |
| Map Pilot for DJI [45] | ✓ | ✓ | ✓ | ✓ | ✓ | | | |
| UgCS [37] | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | *** | ✓ |

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Gómez-López, J.M.; Pérez-García, J.L.; Mozas-Calvache, A.T.; Delgado-García, J. Mission Flight Planning of RPAS for Photogrammetric Studies in Complex Scenes. ISPRS Int. J. Geo-Inf. 2020, 9, 392. https://doi.org/10.3390/ijgi9060392
