A Real-Time Infrared Stereo Matching Algorithm for RGB-D Cameras’ Indoor 3D Perception

Abstract

1. Introduction

2. Related Work

3. Methodology

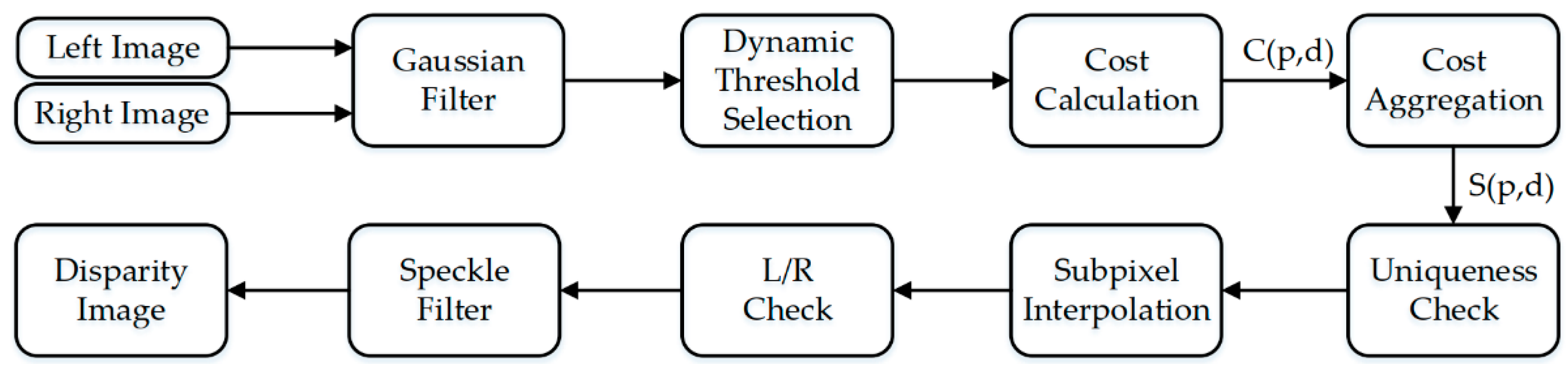

3.1. Stereo Matching Algorithm

3.1.1. Stereo Matching Algorithm of the R200

3.1.2. Block Matching Algorithm

3.1.3. Semi-Global Matching Algorithm

3.1.4. Our Infrared Semi-Global Stereo Matching Algorithm

- (1) Uniqueness test. The minimum computed cost value must be smaller than the second-best value by a certain margin; otherwise, the match is considered invalid.

- (2) Sub-pixel interpolation. Because the image is a discrete sampling of the real world, the integer disparity in the disparity image cannot exactly equal the disparity of the corresponding object point. This deviation makes it difficult to meet the needs of high-precision 3D perception and 3D reconstruction, so sub-pixel interpolation is needed to improve accuracy. The interpolation formulas are shown in Formulas (10) and (11). In essence, this is a parabolic interpolation: the refined disparity is the position of the minimum of the parabola. Here, d is the original estimated disparity at this point and Sp is the aggregated cost.

- (3) Left-Right Consistency (LRC) check, which eliminates erroneous matches.

- (4) Point cloud growth. The point cloud in object space can be restored from the disparity image. A hole in the disparity image means there is no depth data at the corresponding position in object space. The surrounding point cloud can be used to fill that position, and the filled points can then be projected back to the disparity image to repair the hole.
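Steps (1) to (3) above can be illustrated with a minimal NumPy sketch. It is not the authors' implementation. It assumes an aggregated cost volume of shape (H, W, D) with non-negative costs, and every function name, the `ratio` margin, and the `tol` threshold are hypothetical choices made for illustration. The sub-pixel step uses the standard three-point parabola fit, offset = (S(d-1) - S(d+1)) / (2 (S(d-1) - 2 S(d) + S(d+1))), which may differ in form from Formulas (10) and (11).

```python
import numpy as np


def winner_take_all(cost):
    """Integer disparity with the lowest aggregated cost at each pixel."""
    return np.argmin(cost, axis=2)


def _cost_at(cost, disp):
    """Pick cost[y, x, disp[y, x]] for every pixel."""
    return np.take_along_axis(cost, disp[..., None], axis=2)[..., 0]


def uniqueness_mask(cost, disp, ratio=0.95):
    """True where the best cost is clearly below the second-best cost.

    Assumption: disparities adjacent to the winner are excluded from the
    second-best search, because a smooth cost curve always has similar
    values next to its minimum.
    """
    d_idx = np.arange(cost.shape[2])[None, None, :]
    near = np.abs(d_idx - disp[..., None]) <= 1
    best = _cost_at(cost, disp)
    second = np.where(near, np.inf, cost).min(axis=2)
    return best < ratio * second


def subpixel_refine(cost, disp):
    """Refine integer disparities with a three-point parabola fit."""
    n = cost.shape[2]
    d = np.clip(disp, 1, n - 2)           # both neighbours must exist
    c0 = _cost_at(cost, d - 1)
    c1 = _cost_at(cost, d)
    c2 = _cost_at(cost, d + 1)
    denom = c0 - 2.0 * c1 + c2             # curvature of the parabola
    ok = (disp >= 1) & (disp <= n - 2) & (denom > 0)
    safe = np.where(denom > 0, denom, 1.0)  # avoid division by zero
    offset = np.where(ok, (c0 - c2) / (2.0 * safe), 0.0)
    return disp + offset


def left_right_check(disp_left, disp_right, tol=1.0):
    """True where left and right disparity maps agree within `tol`.

    Assumption: left pixel x corresponds to right pixel x - d.
    """
    w = disp_left.shape[1]
    cols = np.arange(w)[None, :]
    x_right = np.rint(cols - disp_left).astype(int)
    inside = (x_right >= 0) & (x_right < w)
    d_right = np.take_along_axis(disp_right, np.clip(x_right, 0, w - 1), axis=1)
    return inside & (np.abs(disp_left - d_right) <= tol)
```

In use, a pixel would keep its refined disparity only where both `uniqueness_mask` and `left_right_check` return true. The remaining pixels become the holes that step (4) repairs.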

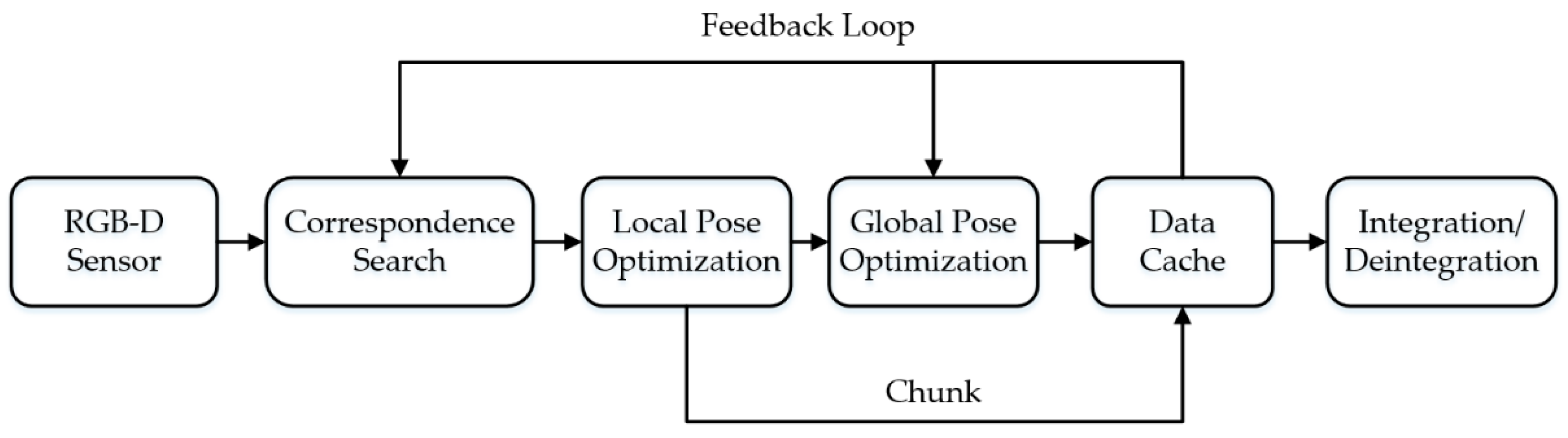

3.2. 3D Surface Model Reconstruction

4. Experiments Data and Results

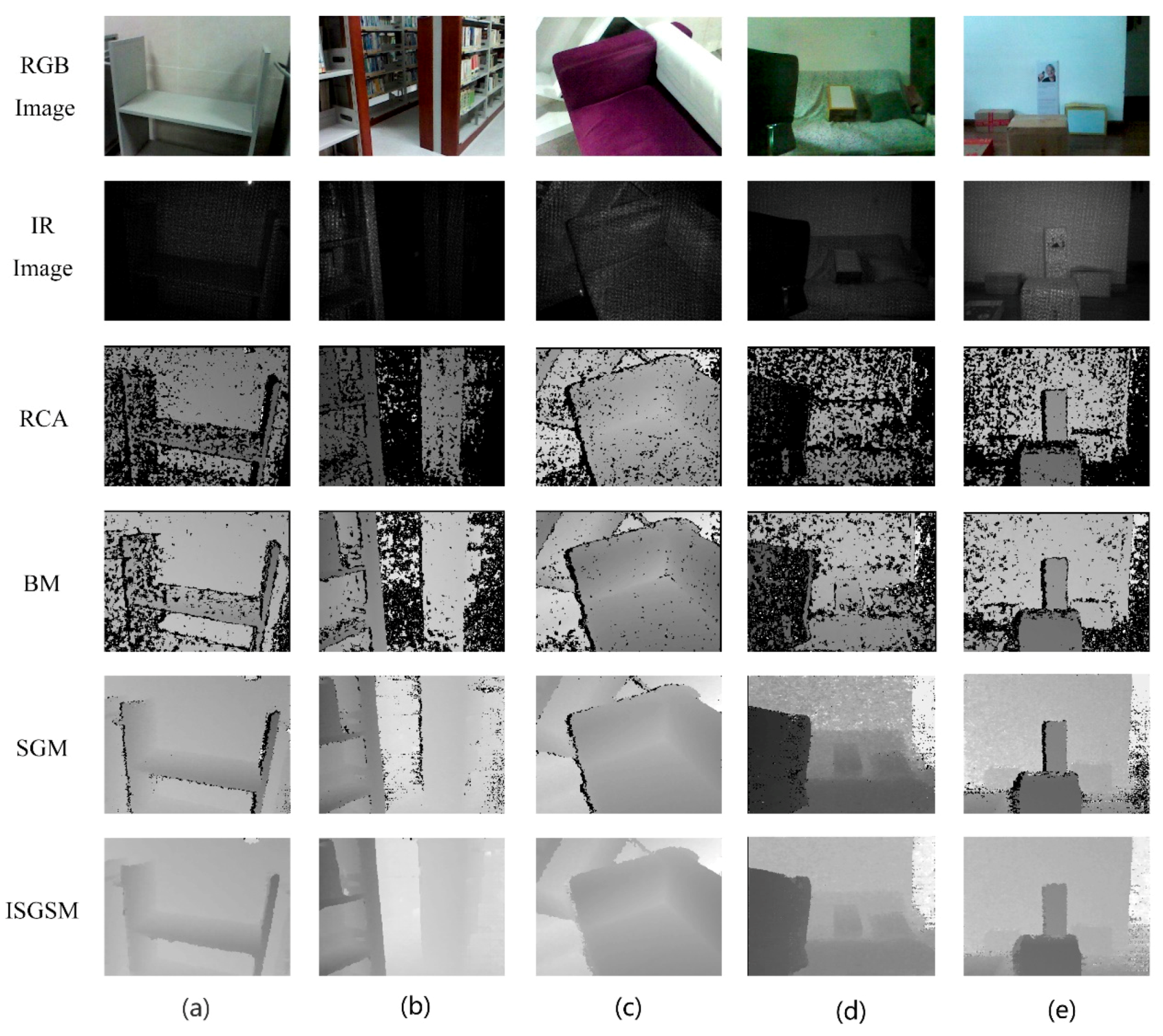

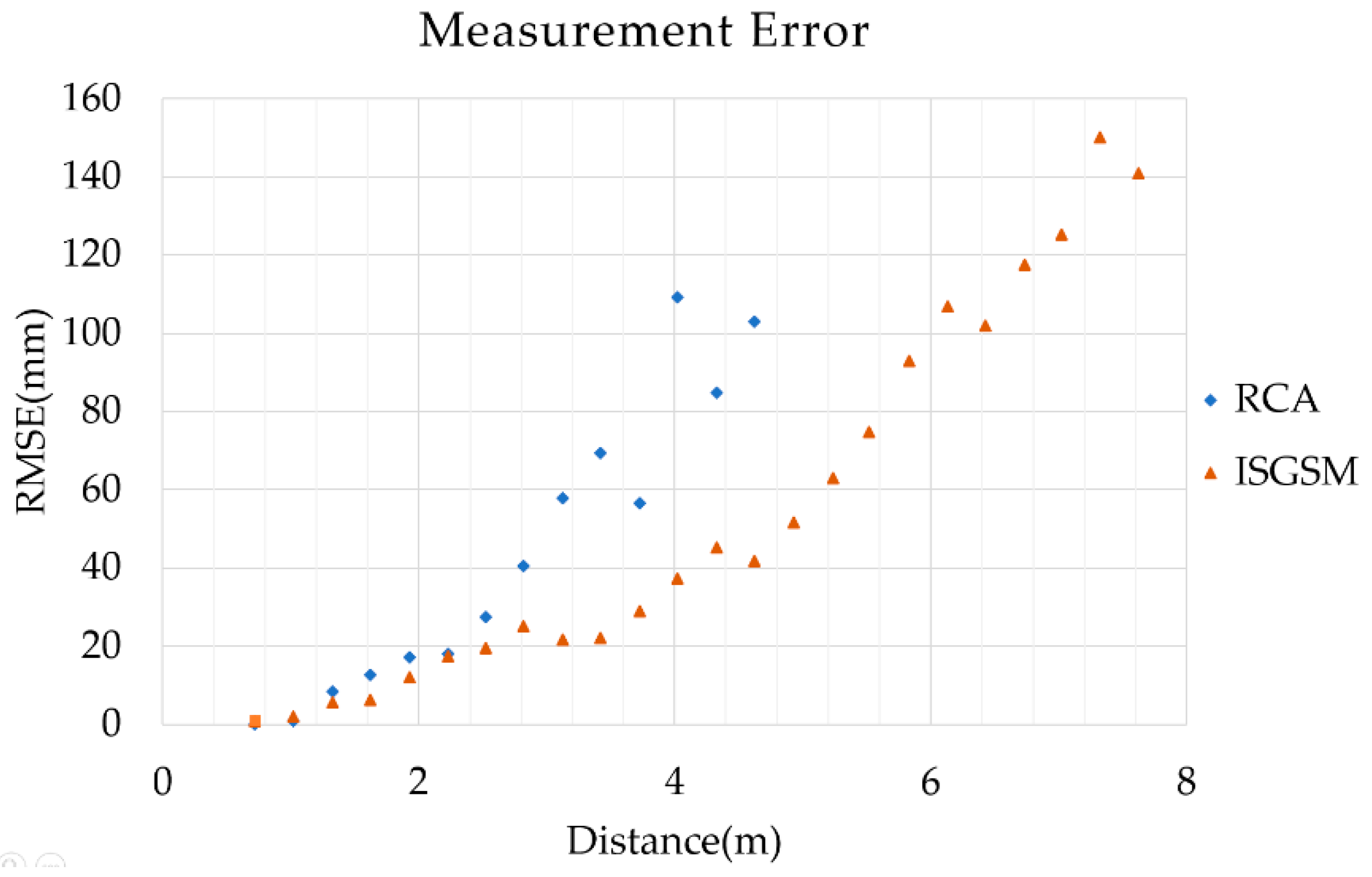

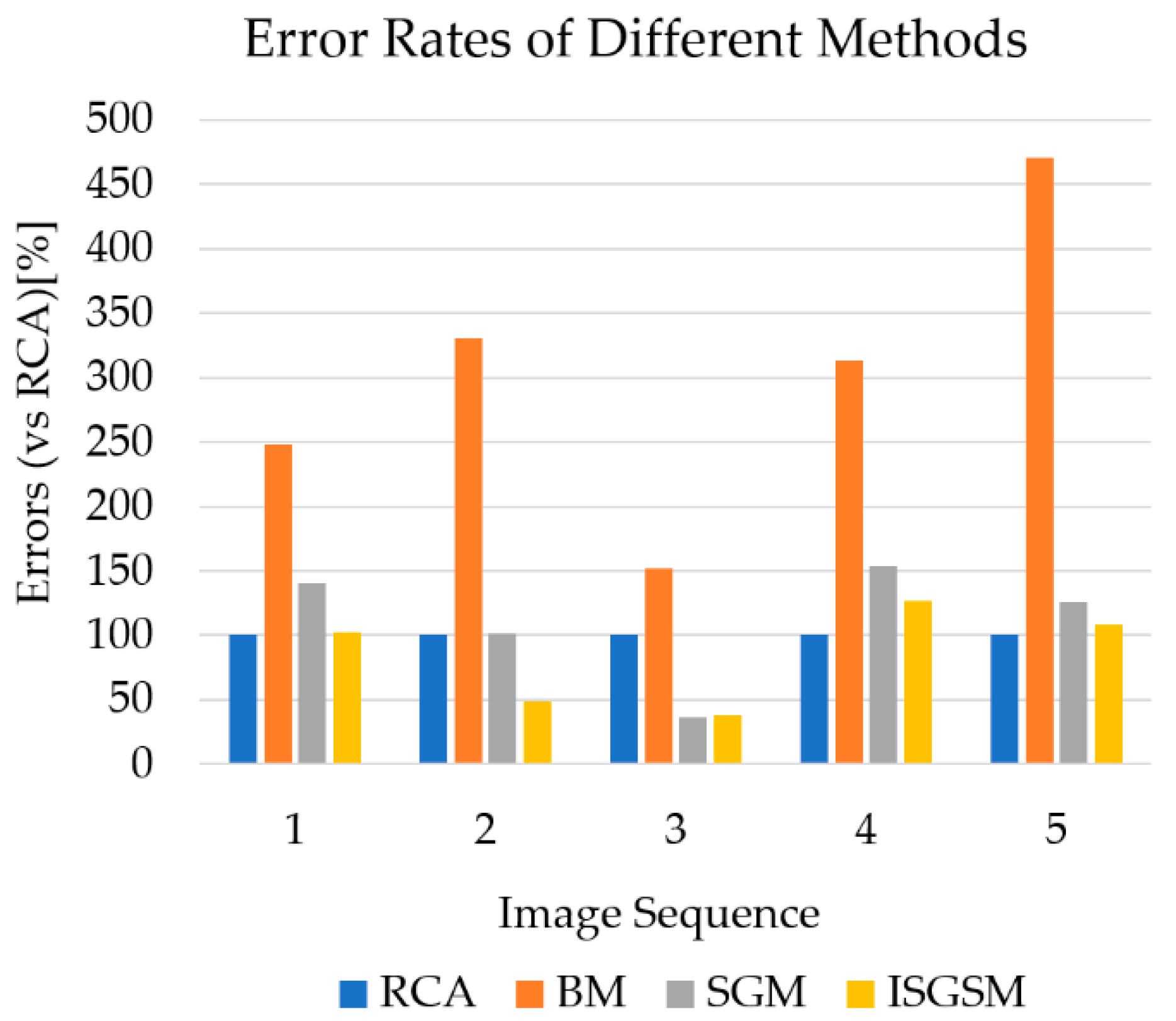

4.1. Experimental Results Comparison of Different Stereo Matching Algorithms

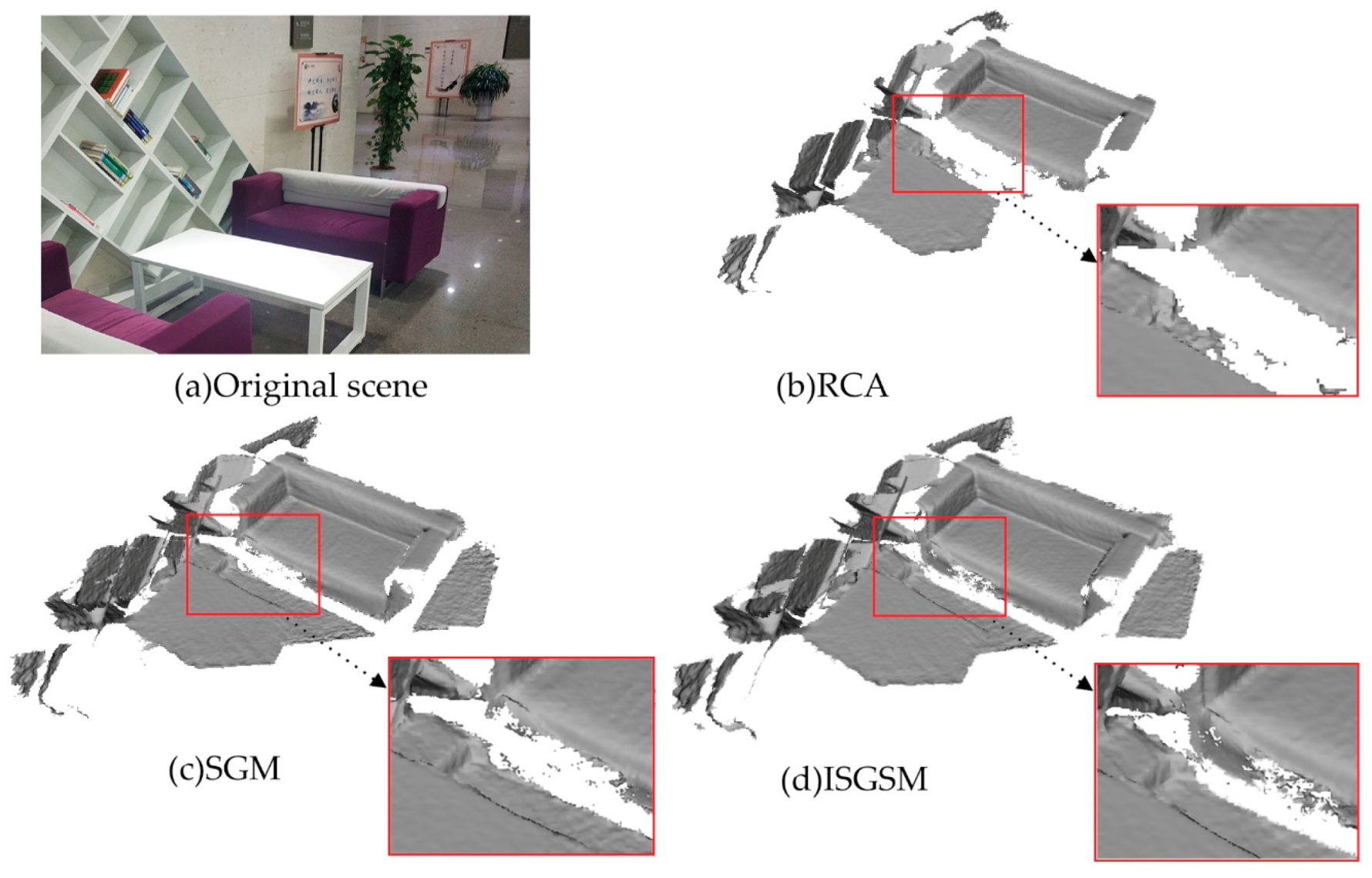

4.2. 3D Surface Modeling with Different Stereo Matching Algorithms

5. Discussion

6. Conclusions

Author Contributions

Funding

Acknowledgments

Conflicts of Interest

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Share and Cite

Zhong, J.; Li, M.; Liao, X.; Qin, J. A Real-Time Infrared Stereo Matching Algorithm for RGB-D Cameras’ Indoor 3D Perception. ISPRS Int. J. Geo-Inf. 2020, 9, 472. https://0-doi-org.brum.beds.ac.uk/10.3390/ijgi9080472