Leveraging OSM and GEOBIA to Create and Update Forest Type Maps

Abstract

1. Introduction

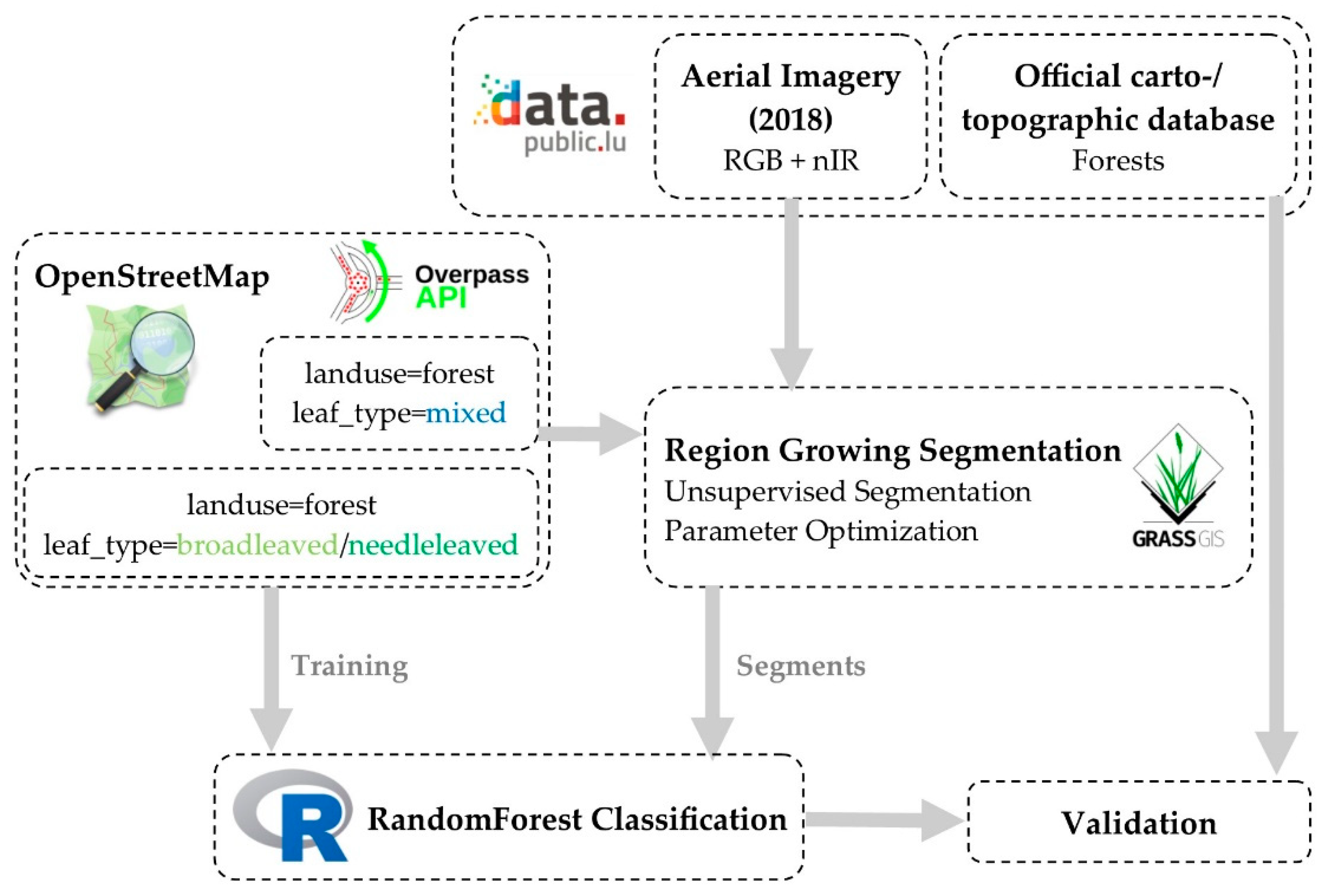

- Separation of forest types based on region growing segmentation and aerial imagery inside existing vector boundaries.

- Classification of derived segments.

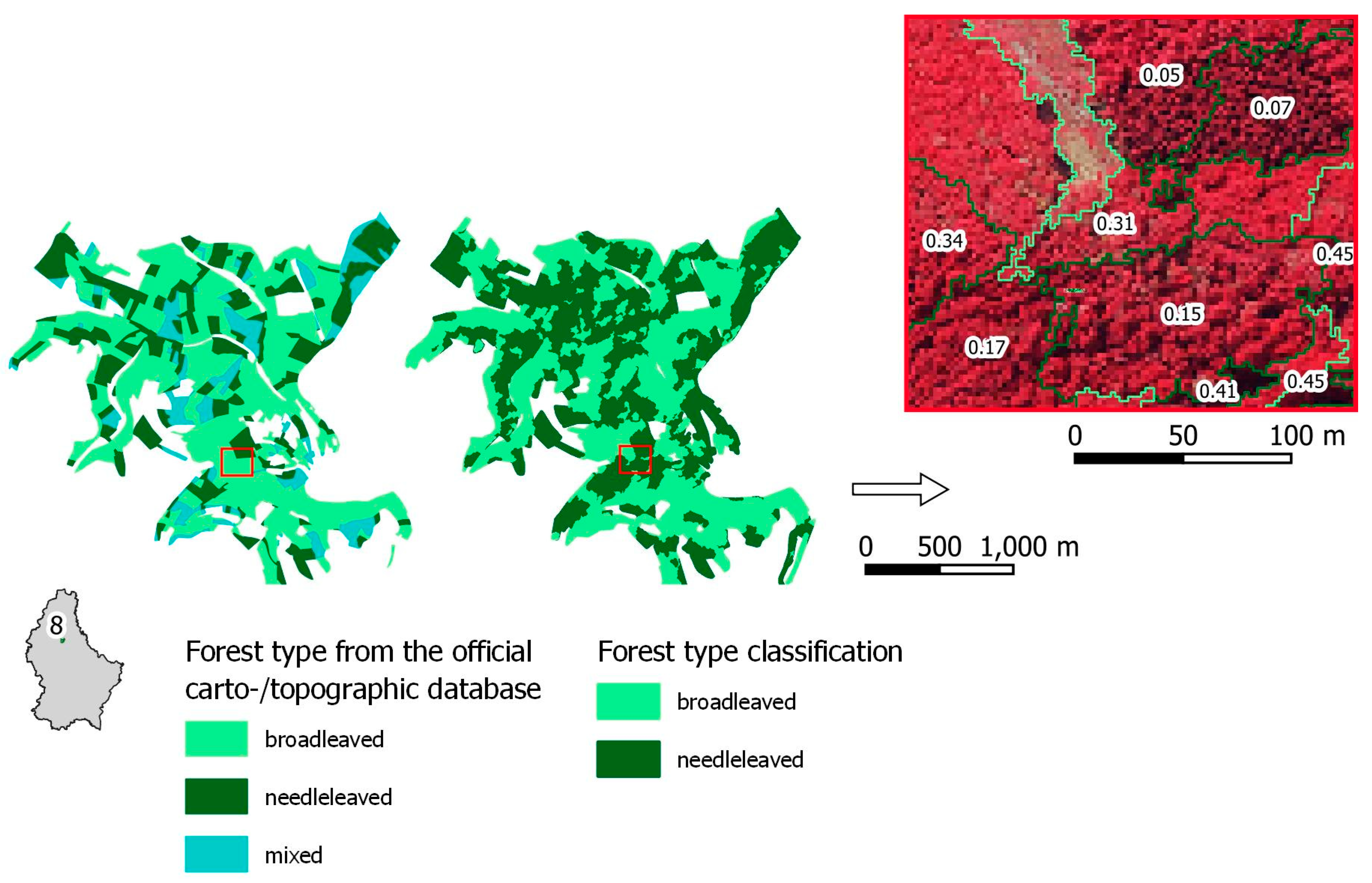

- Upgrade of OpenStreetMap geometries through spatial and thematic subdivisions of forest type.

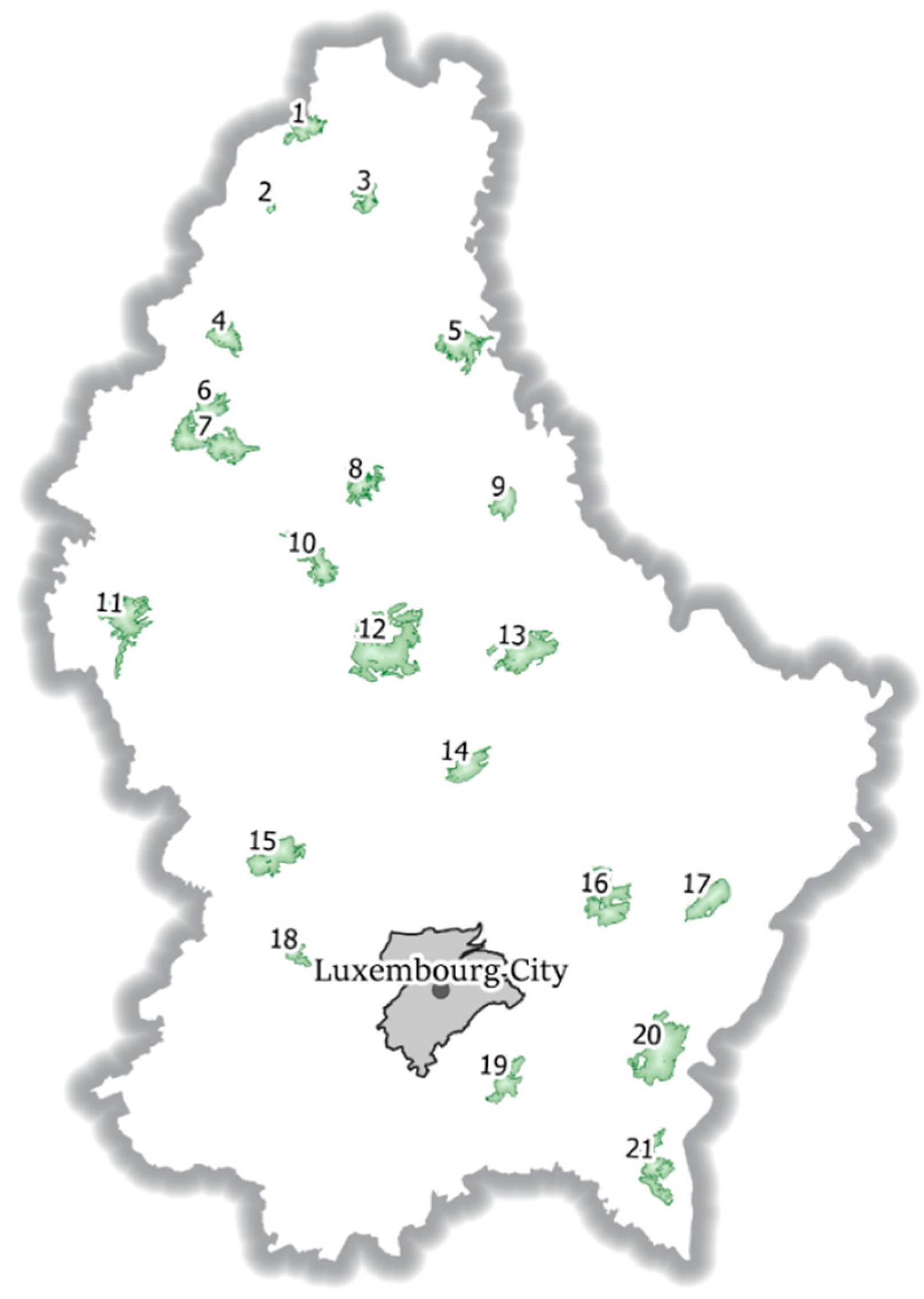

2. Study Area

3. Materials and Methods

3.1. Selection of OSM Relations

3.2. Image Segmentation inside OSM Forest Polygons
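The objectives describe separating forest types by region-growing segmentation of aerial imagery inside existing vector boundaries. The sketch below is a minimal, simplified illustration of that idea under stated assumptions, not the implementation used in the study. It assumes the OSM forest polygon has already been rasterized to a boolean `mask` aligned with a single-band `image`. It also uses a hypothetical `threshold` on the absolute difference between a candidate pixel and the running mean of the segment. The point it shows is that growth never crosses the mask edge, so every segment nests inside the OSM polygon.

```python
from collections import deque

import numpy as np


def region_grow_in_mask(image, mask, threshold):
    """Simplified seeded region growing restricted to a polygon mask.

    image: 2-D float array (assumed single band)
    mask: 2-D bool array, True inside the rasterized OSM forest polygon
    threshold: hypothetical max |pixel - segment mean| for a pixel to join
    Returns int labels: 0 outside the mask, 1..k segment ids inside it.
    """
    labels = np.zeros(image.shape, dtype=int)
    rows, cols = image.shape
    next_label = 1
    for r0 in range(rows):
        for c0 in range(cols):
            if not mask[r0, c0] or labels[r0, c0]:
                continue
            # Seed a new segment at the first unlabeled pixel in scan order.
            labels[r0, c0] = next_label
            total, count = float(image[r0, c0]), 1
            queue = deque([(r0, c0)])
            while queue:
                r, c = queue.popleft()
                for dr, dc in ((-1, 0), (1, 0), (0, -1), (0, 1)):
                    nr, nc = r + dr, c + dc
                    if not (0 <= nr < rows and 0 <= nc < cols):
                        continue
                    # Never grow across the vector boundary or into labeled pixels.
                    if not mask[nr, nc] or labels[nr, nc]:
                        continue
                    if abs(image[nr, nc] - total / count) <= threshold:
                        labels[nr, nc] = next_label
                        total += float(image[nr, nc])
                        count += 1
                        queue.append((nr, nc))
            next_label += 1
    return labels


# Toy example: a dark and a bright stand inside the polygon, last row outside it.
img = np.array([[10, 11, 50, 52],
                [10, 12, 51, 53],
                [0, 0, 0, 0]], dtype=float)
forest = np.array([[True, True, True, True],
                   [True, True, True, True],
                   [False, False, False, False]])
labels = region_grow_in_mask(img, forest, threshold=5)
print(labels)
```

Because the segment mean is updated as pixels join, this toy version depends on scan order. Region-growing implementations such as GRASS GIS `i.segment` instead merge mutually most similar neighboring segments and support a minimum segment size.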

3.3. Selection of Training Areas for Classification

3.4. Classification

3.5. Validation

4. Results

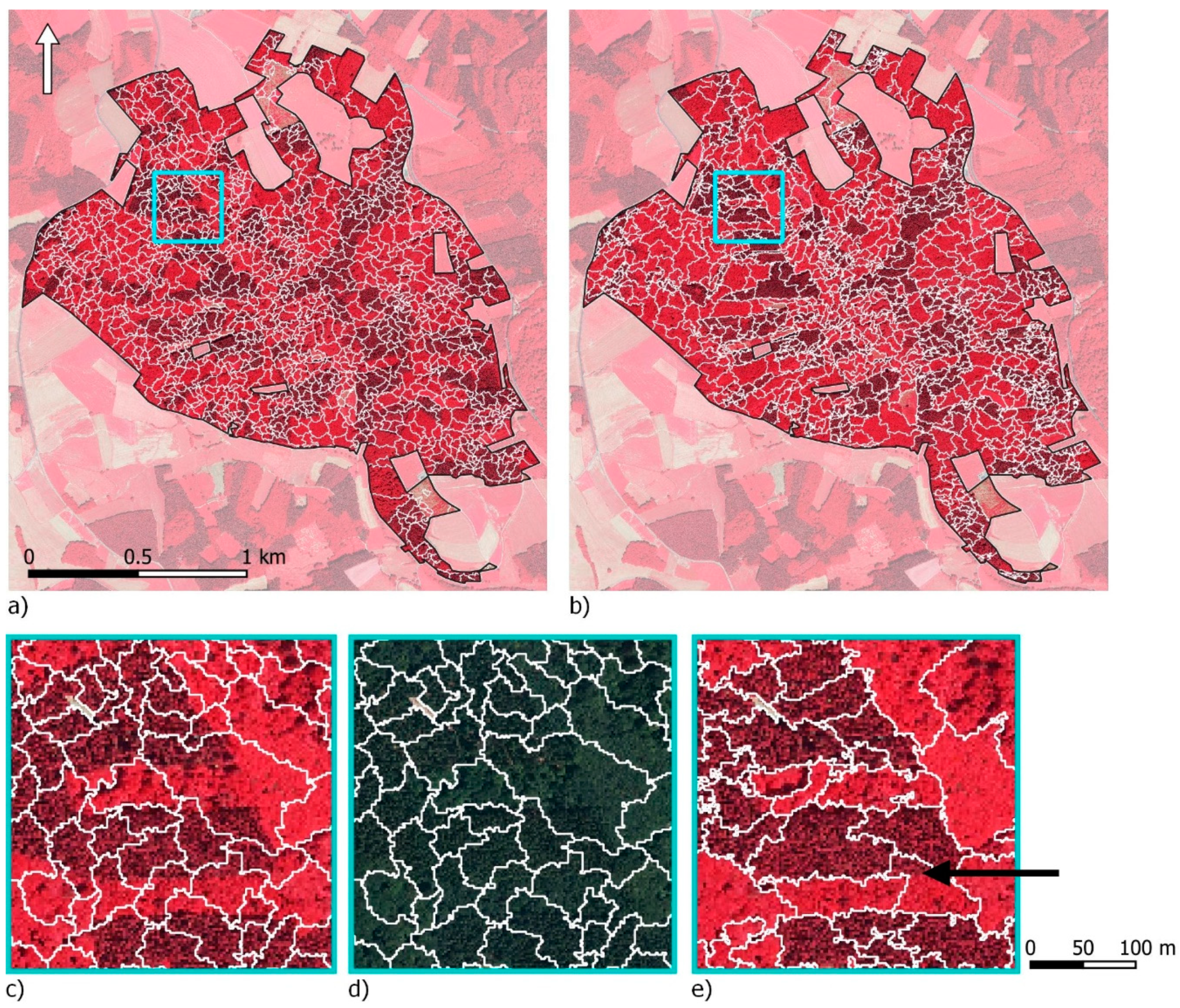

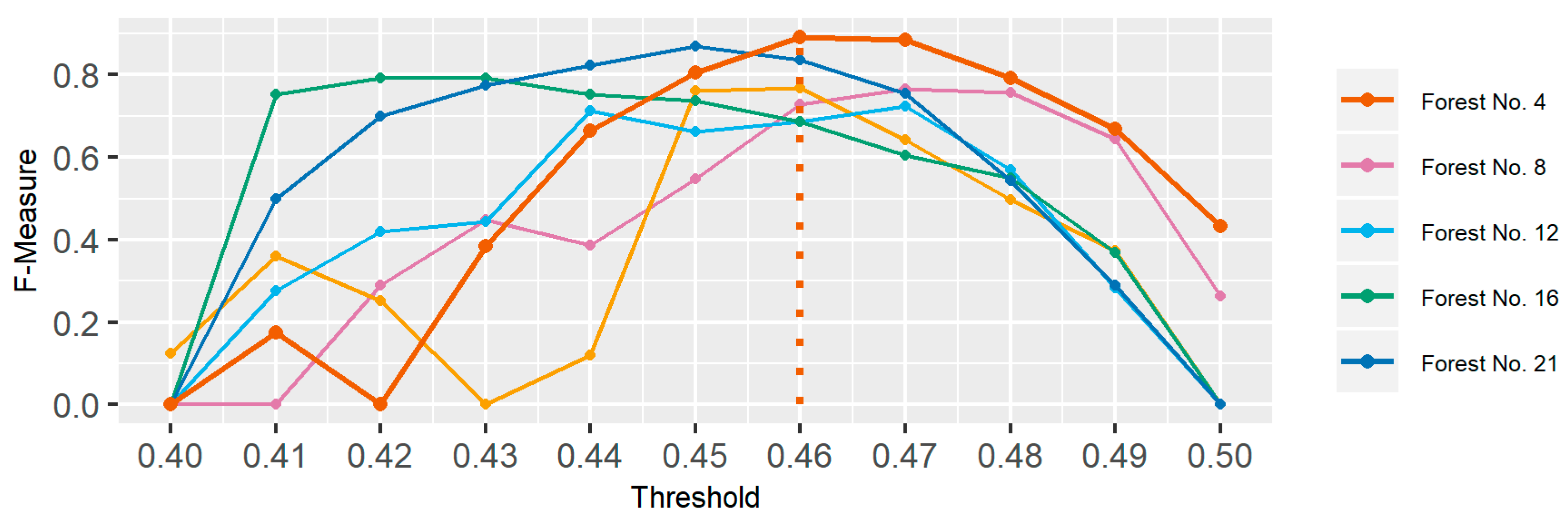

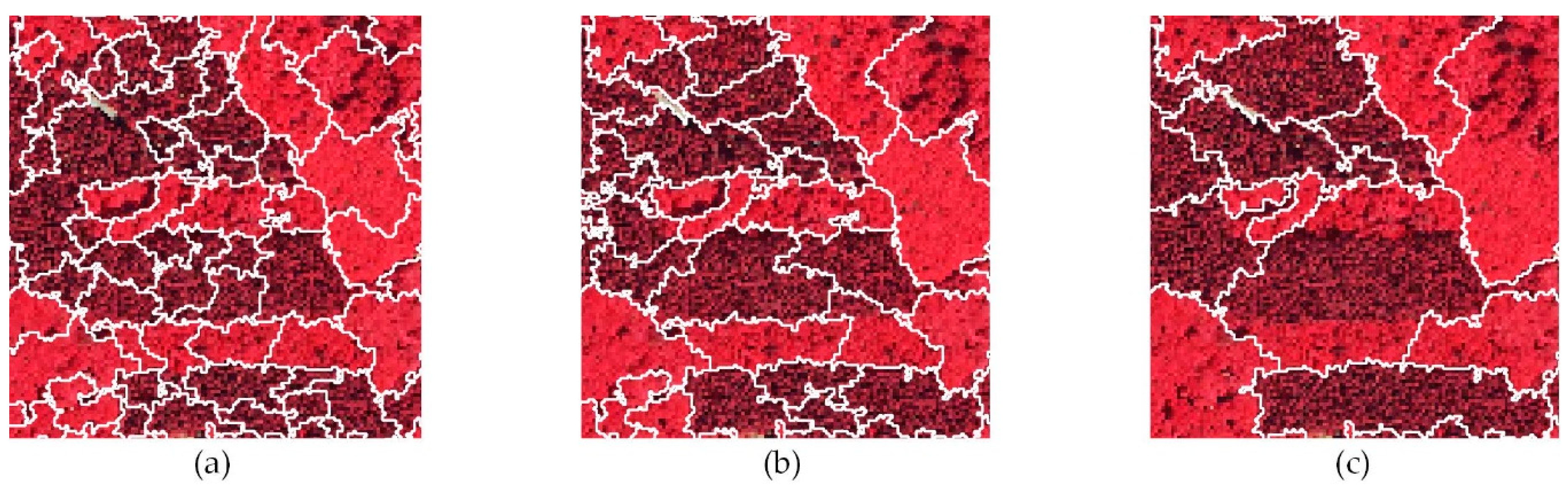

4.1. Image Segmentation inside OSM Forest Polygons

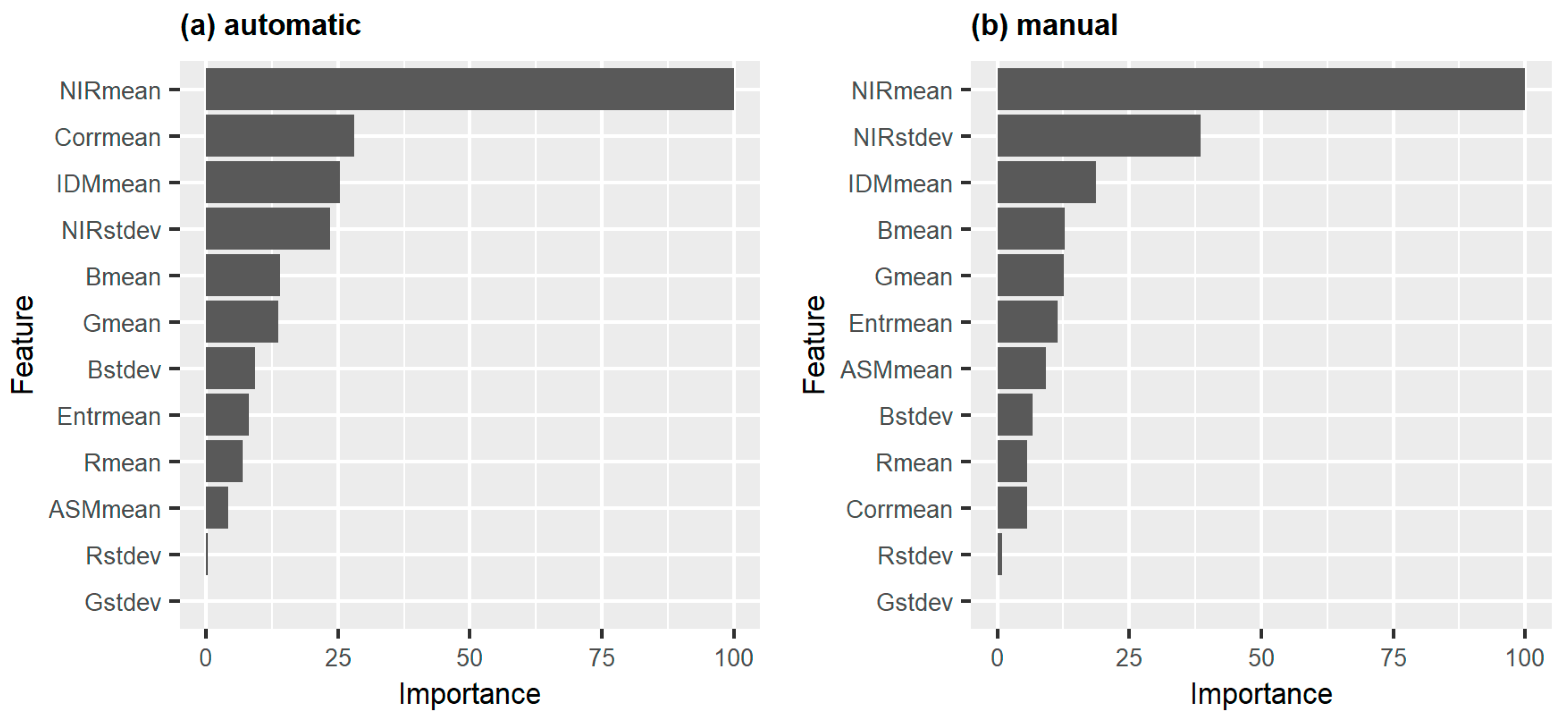

4.2. Classification and Validation

5. Discussion

5.1. Separation of Forest Types Based on Region Growing Segmentation and Aerial Imagery inside Existing Vector Boundaries

5.2. Classification of Derived Segments

- General stand border situations.

- Border situations with mixed forest.

- New-growth needleleaved forests.

- Under-/Oversegmentation of structurally rich forest areas.

5.3. Upgrade of OpenStreetMap Geometries through Spatial and Thematic Subdivisions of Leaf Type

6. Conclusions

Author Contributions

Funding

Acknowledgments

Conflicts of Interest


| Leaf Type | Automatic | Manual |
|---|---|---|
| broadleaved | 1205 | 522 |
| needleleaved | 771 | 453 |

| Training Setup | Metric | RGB segmentation (n = 19,925), input: RGB | RGB segmentation (n = 19,925), input: RGB + Texture | RGB + nIR segmentation (n = 36,008), input: RGB + nIR | RGB + nIR segmentation (n = 36,008), input: RGB + nIR + Texture |
|---|---|---|---|---|---|
| Automatic | Accuracy | 0.73 | 0.77 | 0.80 | 0.84 |
| Automatic | Kappa | 0.41 | 0.46 | 0.51 | 0.60 |
| Manual | Accuracy | 0.71 | 0.73 | 0.83 | 0.85 |
| Manual | Kappa | 0.38 | 0.41 | 0.57 | 0.62 |

| n = 33,742 | Reference: Broadleaved | Reference: Needleleaved |
|---|---|---|
| Classification: Broadleaved | 22,284 | 2136 |
| Classification: Needleleaved | 2899 | 6423 |

| PAB | PAN | UAB | UAN | OAA | κ | CAA | Q | A |
|---|---|---|---|---|---|---|---|---|
| 0.88 | 0.75 | 0.91 | 0.69 | 0.85 | 0.61 | 0.82 | 0.02 | 0.13 |

PAB, PAN: Producer's Accuracy for broadleaved and needleleaved; UAB, UAN: User's Accuracy for broadleaved and needleleaved; OAA: Overall Accuracy; κ: Cohen's Kappa; CAA: Class-Averaged Accuracy; Q: Quantity Disagreement; A: Allocation Disagreement.
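The accuracy measures reported with the broadleaved/needleleaved confusion matrix can be recomputed directly from its four counts. The following is a minimal sketch under a few assumptions. Rows are the classification and columns the reference, as in the table. CAA is taken as the mean of the per-class producer's accuracies, an assumption that reproduces the tabulated 0.82. Quantity and allocation disagreement follow Pontius and Millones (2011), with allocation obtained as total disagreement minus quantity disagreement.

```python
import numpy as np


def accuracy_metrics(cm):
    """Accuracy measures for a square confusion matrix.

    Assumes rows = classification and columns = reference.
    """
    cm = np.asarray(cm, dtype=float)
    n = cm.sum()
    diag = np.diag(cm)
    row = cm.sum(axis=1)  # totals per classified class
    col = cm.sum(axis=0)  # totals per reference class

    producers = diag / col               # producer's accuracy per class
    users = diag / row                   # user's accuracy per class
    oaa = diag.sum() / n                 # overall accuracy
    pe = (row * col).sum() / n ** 2      # expected chance agreement
    kappa = (oaa - pe) / (1 - pe)        # Cohen's kappa
    caa = producers.mean()               # class-averaged accuracy (assumed: mean PA)
    q = 0.5 * np.abs(row - col).sum() / n  # quantity disagreement
    a = (1 - oaa) - q                    # allocation = total disagreement - quantity
    return {"PA": producers, "UA": users, "OAA": float(oaa), "kappa": float(kappa),
            "CAA": float(caa), "Q": float(q), "A": float(a)}


# Rows: classified broadleaved / needleleaved; columns: reference broadleaved / needleleaved.
cm = [[22284, 2136],
      [2899, 6423]]
m = accuracy_metrics(cm)
for key in ("OAA", "kappa", "CAA", "Q", "A"):
    print(f"{key}: {m[key]:.3f}")
print("PA:", np.round(m["PA"], 2), "UA:", np.round(m["UA"], 2))
```

Rounded to two decimals, the recomputed PA, UA, OAA, CAA, Q and A values match the table. κ evaluates to about 0.617, so the reported 0.61 appears to be truncated rather than rounded.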

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Brauchler, M.; Stoffels, J. Leveraging OSM and GEOBIA to Create and Update Forest Type Maps. ISPRS Int. J. Geo-Inf. 2020, 9, 499. https://doi.org/10.3390/ijgi9090499
