CityJSON Building Generation from Airborne LiDAR 3D Point Clouds

Abstract

1. Introduction

2. Related Works

2.1. Building-Generation Methods

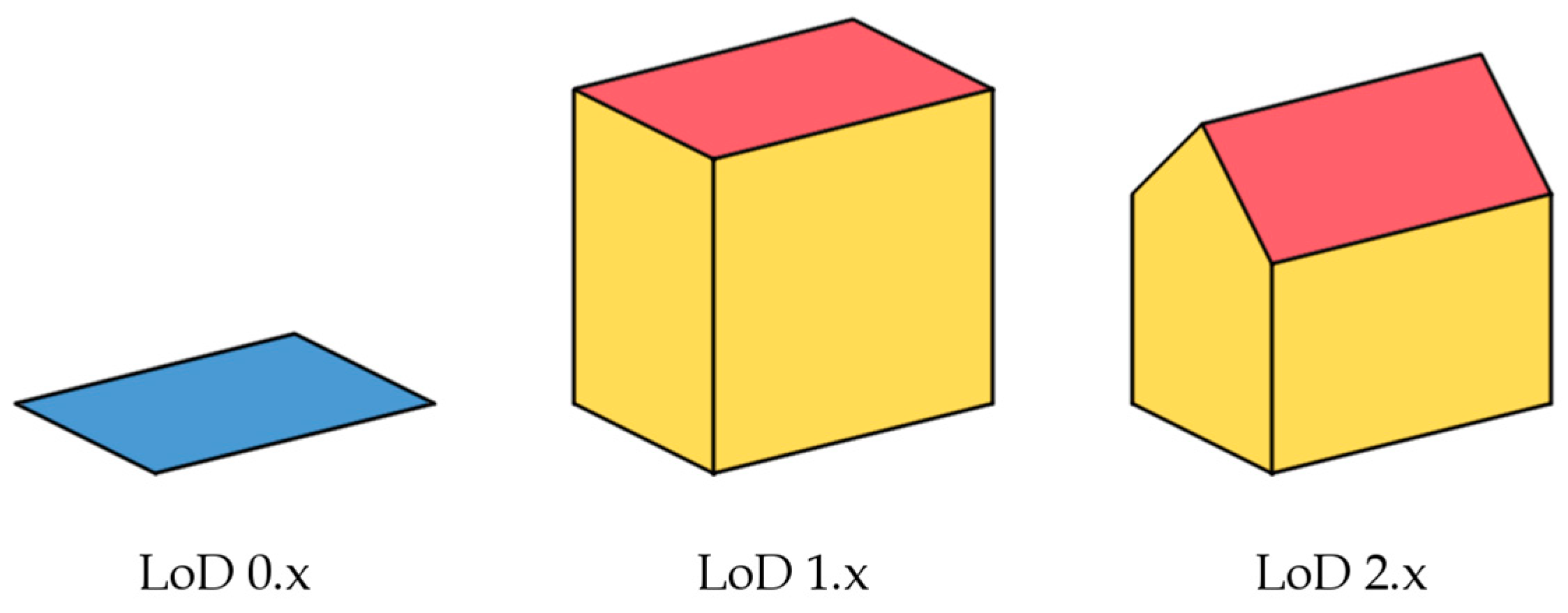

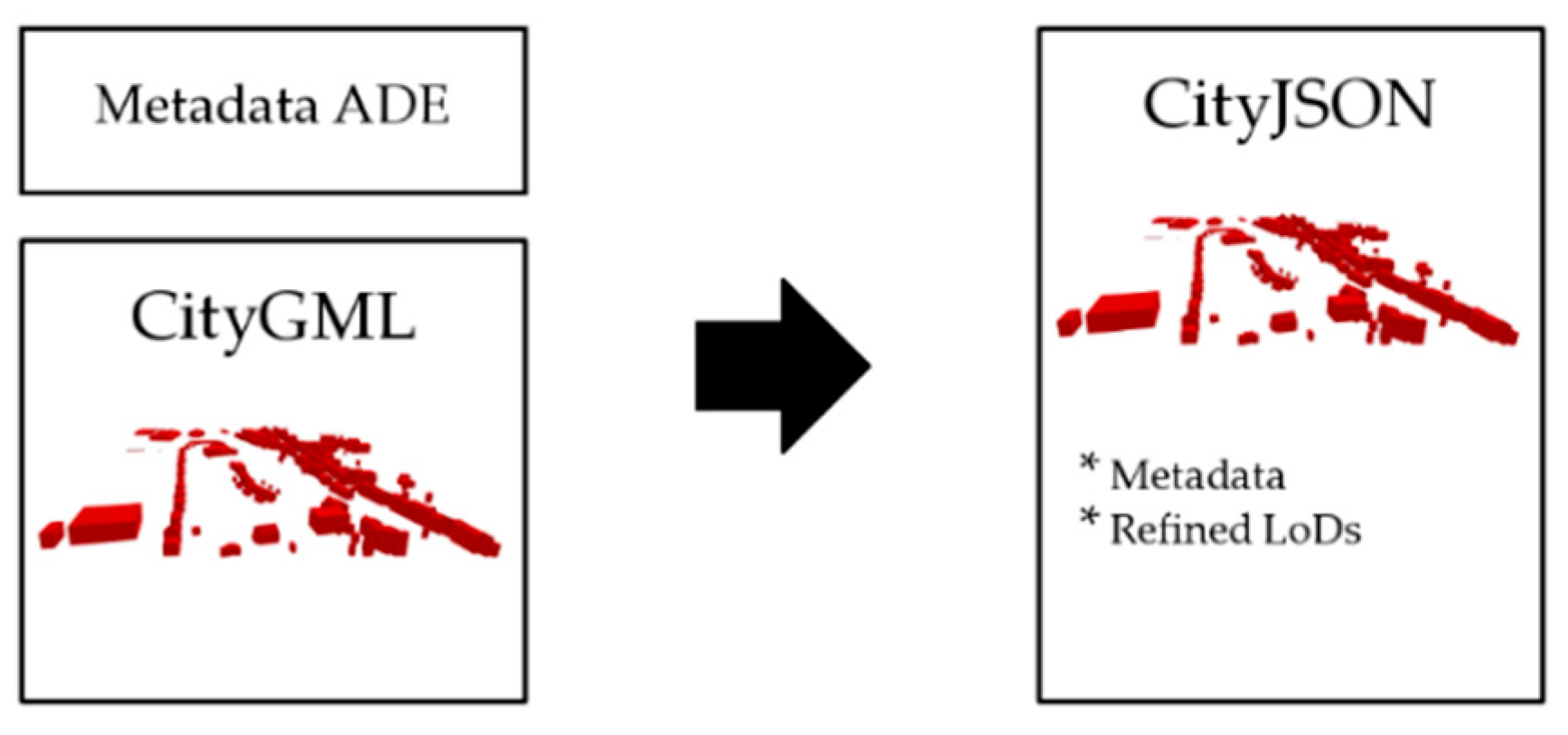

2.2. CityGML and CityJSON

3. Methodology

3.1. Introductory Comments

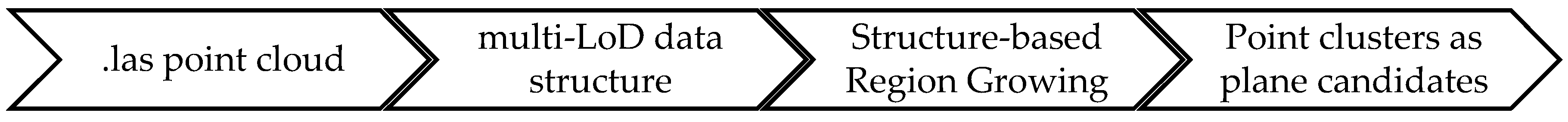

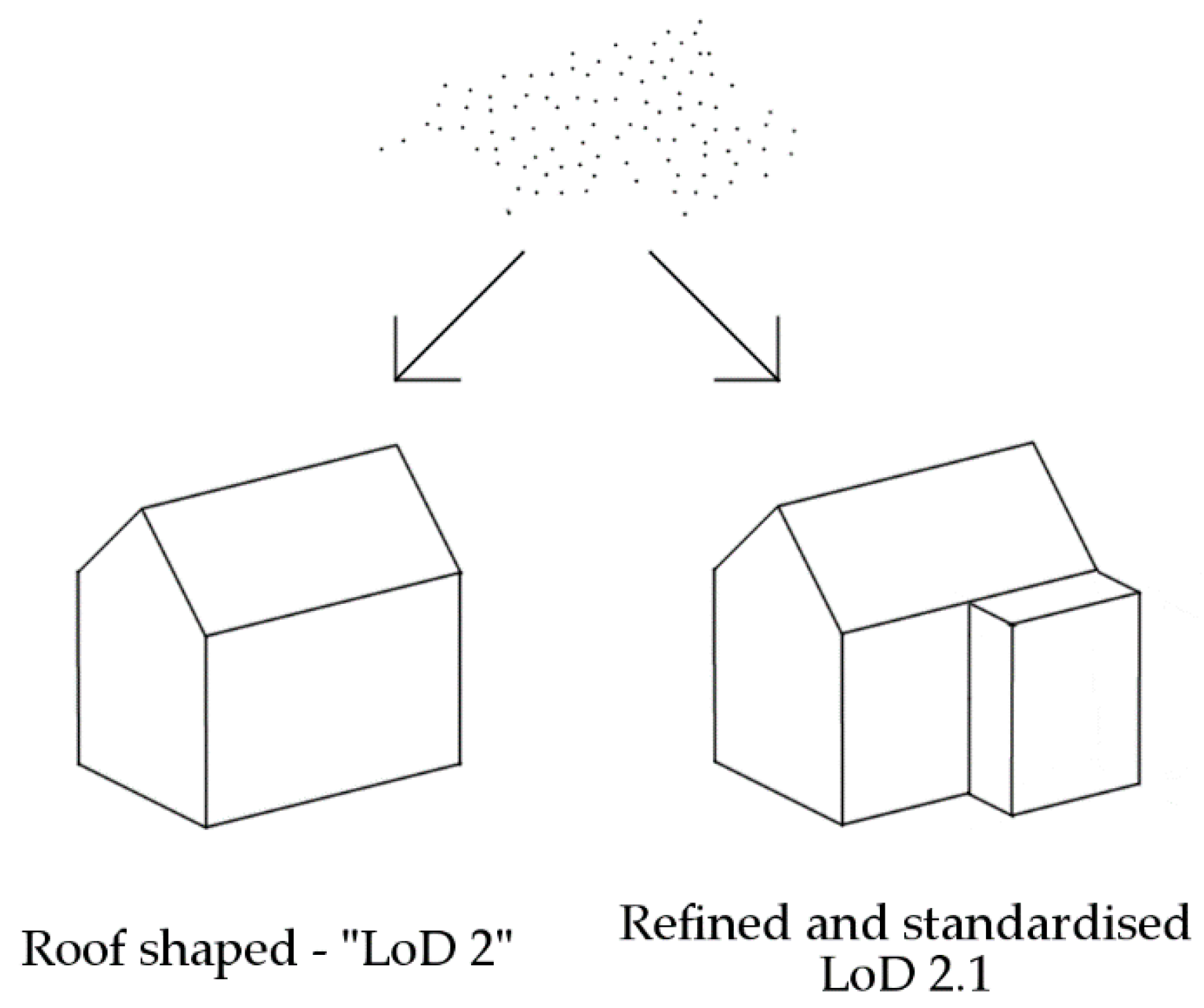

3.2. Point-Cloud Segmentation

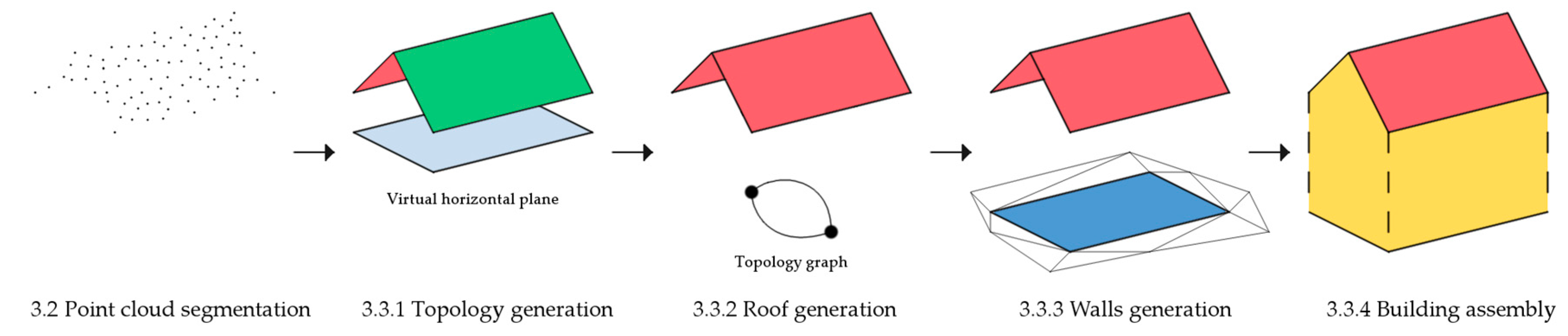

3.3. Step-By-Step Geometric Modeling

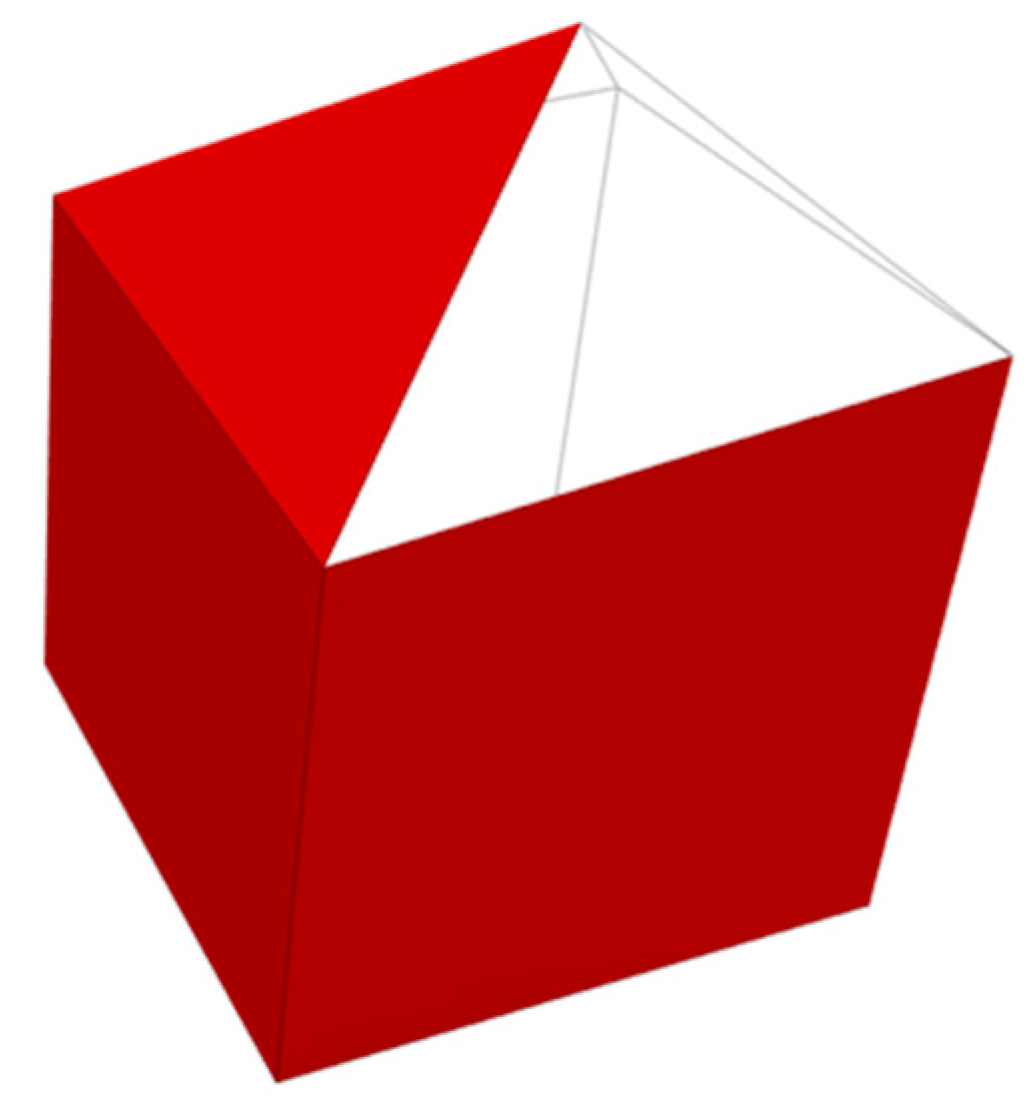

3.3.1. Topology Generation

- O+ planes have normal vectors that, when projected, are orthogonal and point away from each other.

- O- planes have normal vectors that, when projected, are orthogonal and point towards each other.

- S+ planes have normal vectors that, when projected, are parallel and point away from each other.

- N: no constraint.

- An adjacency matrix, whose row i contains the IDs of the planes j connected to plane i.

- A relationship matrix, which stores the type of connection (O+, O-, S+ or N) between planes i and j.
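The constraint types and the two matrices can be illustrated with a minimal Python sketch. It rests on assumptions that the text does not state: normals are projected onto the horizontal XY plane, "pointing away" is judged against the horizontal direction joining the two plane centroids, and the 10° angular tolerance `ANGLE_TOL_DEG` is hypothetical. The helper names (`relationship`, `build_matrices`) are illustrative only, not the authors' implementation.

```python
import numpy as np

ANGLE_TOL_DEG = 10.0  # hypothetical angular tolerance, not taken from the paper


def _unit_xy(v):
    """Project a 3D vector onto the horizontal XY plane and normalise it.
    Returns None when the projection is (near) zero, e.g. for a flat plane."""
    p = np.asarray(v, dtype=float)[:2]
    length = np.linalg.norm(p)
    return p / length if length > 1e-9 else None


def relationship(n_i, c_i, n_j, c_j, tol_deg=ANGLE_TOL_DEG):
    """Label two adjacent planes 'O+', 'O-', 'S+' or 'N' (assumed rule).

    n_i, n_j: upward-oriented plane normals; c_i, c_j: plane centroids.
    "Away" means each projected normal points away from the other plane.
    """
    a, b = _unit_xy(n_i), _unit_xy(n_j)
    d = _unit_xy(np.subtract(c_j, c_i))  # horizontal direction from plane i to plane j
    if a is None or b is None or d is None:
        return "N"  # flat plane or coincident centroids: no constraint
    angle = np.degrees(np.arccos(np.clip(a @ b, -1.0, 1.0)))
    away = a @ d < 0 and b @ d > 0
    towards = a @ d > 0 and b @ d < 0
    if abs(angle - 90.0) <= tol_deg and away:
        return "O+"
    if abs(angle - 90.0) <= tol_deg and towards:
        return "O-"
    if abs(angle - 180.0) <= tol_deg and away:
        return "S+"
    return "N"


def build_matrices(normals, centroids, adjacent_pairs):
    """Row i of `adjacency` lists the IDs of planes j connected to plane i;
    relation[i][j] holds the constraint label of that connection."""
    k = len(normals)
    adjacency = [[] for _ in range(k)]
    relation = [["" for _ in range(k)] for _ in range(k)]
    for i, j in adjacent_pairs:
        adjacency[i].append(j)
        adjacency[j].append(i)
        label = relationship(normals[i], centroids[i], normals[j], centroids[j])
        relation[i][j] = relation[j][i] = label  # the rule is symmetric in i, j
    return adjacency, relation


if __name__ == "__main__":
    # Two faces of a gable roof: normals point east and west, away from the ridge.
    adj, rel = build_matrices([[1, 0, 1], [-1, 0, 1]], [[1, 0, 3], [-1, 0, 3]], [(0, 1)])
    print(adj, rel[0][1])
```

Under these assumptions, a hip corner yields O+, a valley between wings of an L-shaped roof yields O-, and the two slopes of a gable yield S+.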

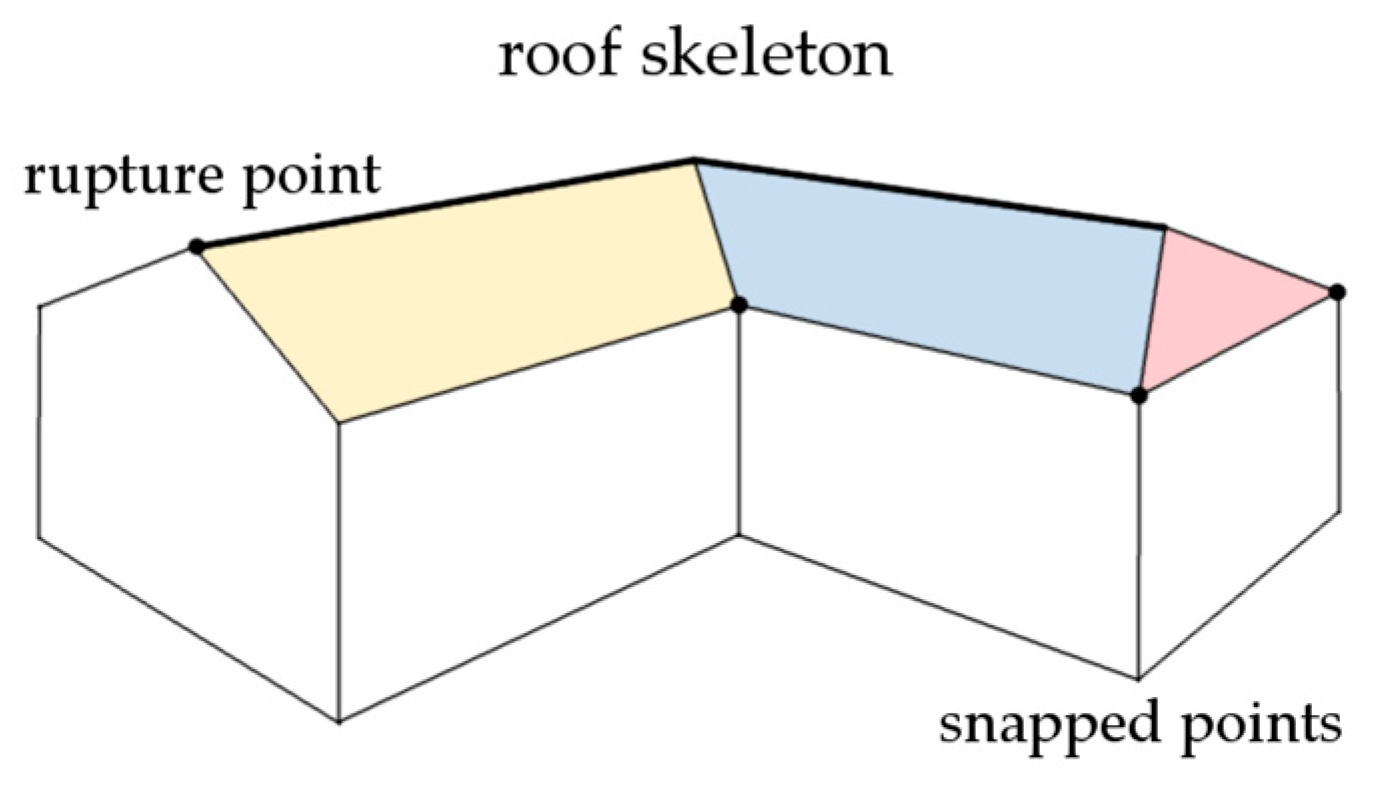

3.3.2. Roof Generation

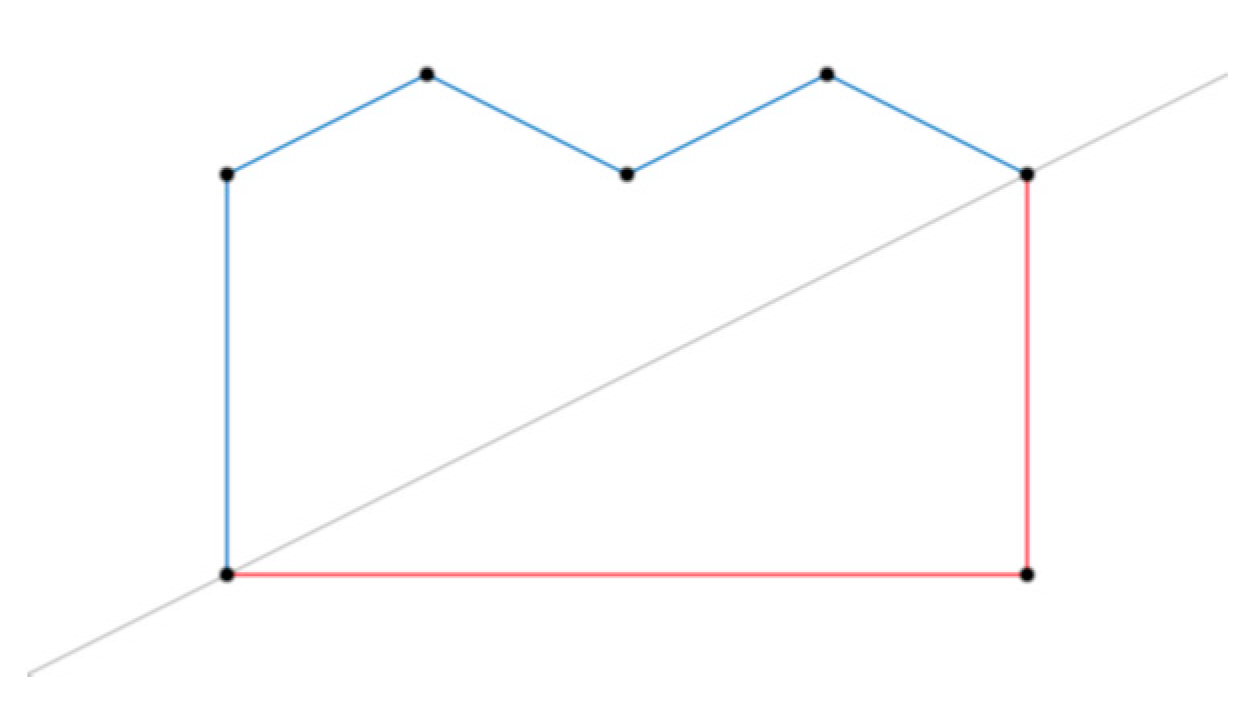

3.3.3. Wall Generation

3.3.4. Building Assembly

3.4. CityJSON Model Building
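To illustrate the target structure only, the following sketch writes a flat-roofed box building as a CityJSON document using the 1.0 layout (the version is an assumption): a `Building` city object whose LoD2 `Solid` geometry references a shared `vertices` list by index, with semantic surfaces attached through `semantics.values`. The building ID, dimensions and box shape are hypothetical; this is not the authors' generator, which builds roofs and walls from the segmented planes.

```python
import json


def box_building(building_id, w, d, h):
    """Return a CityJSON 1.0 dict for a w x d x h flat-roofed box (illustrative)."""
    vertices = [[0, 0, 0], [w, 0, 0], [w, d, 0], [0, d, 0],
                [0, 0, h], [w, 0, h], [w, d, h], [0, d, h]]
    # Rings ordered counter-clockwise seen from outside, so normals point outwards.
    faces = [[0, 3, 2, 1],   # ground
             [4, 5, 6, 7],   # roof
             [0, 1, 5, 4], [1, 2, 6, 5], [2, 3, 7, 6], [3, 0, 4, 7]]  # walls
    geometry = {
        "type": "Solid",
        "lod": 2,
        # Solid -> shells -> surfaces -> rings -> vertex indices
        "boundaries": [[[ring] for ring in faces]],
        "semantics": {
            "surfaces": [{"type": "GroundSurface"},
                         {"type": "RoofSurface"},
                         {"type": "WallSurface"}],
            "values": [[0, 1, 2, 2, 2, 2]],  # one array per shell, one entry per surface
        },
    }
    return {
        "type": "CityJSON",
        "version": "1.0",
        "CityObjects": {building_id: {"type": "Building", "geometry": [geometry]}},
        "vertices": vertices,
    }


if __name__ == "__main__":
    print(json.dumps(box_building("building_1", 10.0, 8.0, 6.0), indent=2))
```

Storing each vertex once and referencing it by index is what keeps CityJSON files compact compared with repeating coordinates per surface.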

4. Discussion

4.1. CityJSON Improvements

4.2. Format Compliance

4.3. Quality Control

4.3.1. Spatio-Semantic Evaluation

4.3.2. Geometric Evaluation

- Snap tolerance: 0.001 m. Two points closer than this value are considered identical.

- Planarity tolerance: 0.05 m. This is the maximum allowed distance between a point and the fitted plane.

- Overlap tolerance: 0.01 m. This tolerance is used to validate adjacency between different solids.
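As a rough illustration of what the first two tolerances mean, and not of how the validator used for the evaluation implements them, the sketch below greedily merges vertices closer than the snap tolerance and tests planarity by fitting a least-squares plane through the centroid (via SVD) and comparing the largest point-to-plane distance with 0.05 m. The function names are hypothetical, and the overlap tolerance, which concerns pairs of solids, is not covered.

```python
import numpy as np

SNAP_TOL = 0.001      # m, snap tolerance from the text
PLANARITY_TOL = 0.05  # m, planarity tolerance from the text


def snap_vertices(points, tol=SNAP_TOL):
    """Greedily merge vertices closer than `tol`.

    Returns the kept vertices and, for every input point, the index of the
    kept vertex it maps to. O(n^2), acceptable for a single face or solid.
    """
    pts = np.asarray(points, dtype=float)
    kept = []
    index = np.empty(len(pts), dtype=int)
    for k, p in enumerate(pts):
        for m, q in enumerate(kept):
            if np.linalg.norm(p - q) < tol:
                index[k] = m
                break
        else:  # no kept vertex within tol: p becomes a new vertex
            index[k] = len(kept)
            kept.append(p)
    return np.array(kept), index


def is_planar(points, tol=PLANARITY_TOL):
    """Fit a least-squares plane through the centroid (via SVD) and check that
    every point lies within `tol` of it. Returns (ok, max_distance)."""
    pts = np.asarray(points, dtype=float)
    centroid = pts.mean(axis=0)
    _, _, vt = np.linalg.svd(pts - centroid)
    normal = vt[-1]  # right singular vector of the smallest singular value
    distances = np.abs((pts - centroid) @ normal)
    return bool(distances.max() <= tol), float(distances.max())
```

For large datasets a spatial index such as a k-d tree would replace the quadratic loop in `snap_vertices`.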

5. Conclusions

Author Contributions

Funding

Acknowledgments

Conflicts of Interest


| City (Method) | Size | Buildings | Valid buildings | Primitives | Valid primitives |
|---|---|---|---|---|---|
| Berlin | 933 MB | 22,771 | 74% | 89,736 | 90% |
| DenHaag | 22 MB | 844 | 61% | 1,990 | 85% |
| Montréal | 125 MB | 581 | 76% | 1,744 | 88% |
| NRW | 16 MB | 797 | 83% | 928 | 77% |
| Theux (UR) | 689 KB | 420 | 92% | 1,198 | 97% |
| Theux (RG) | 656 KB | 400 | 93% | 1,053 | 96% |

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Nys, G.-A.; Poux, F.; Billen, R. CityJSON Building Generation from Airborne LiDAR 3D Point Clouds. ISPRS Int. J. Geo-Inf. 2020, 9, 521. https://doi.org/10.3390/ijgi9090521
