Correlation Plenoptic Imaging With Entangled Photons

Abstract

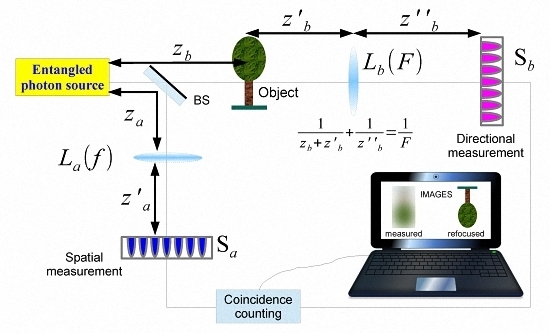

1. Introduction

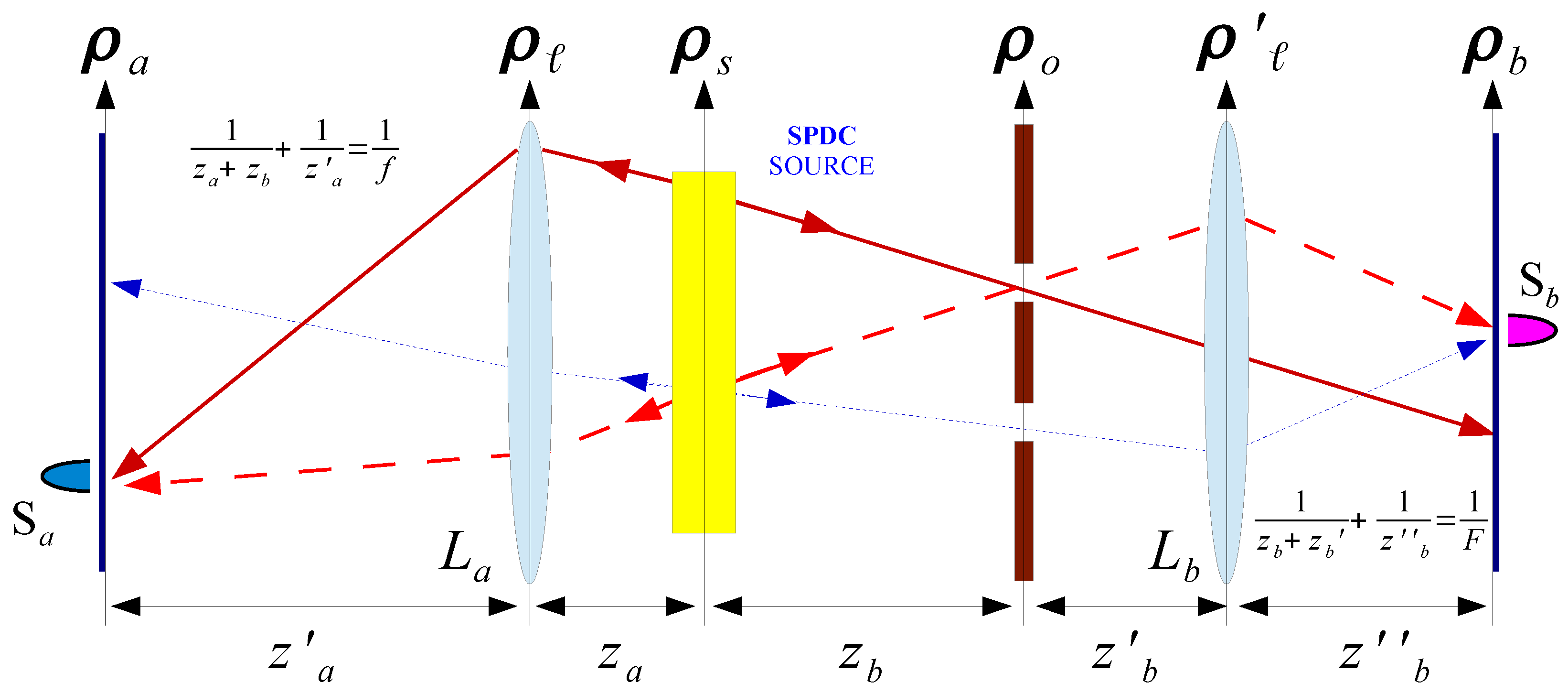

2. Theoretical Analysis

2.1. Background

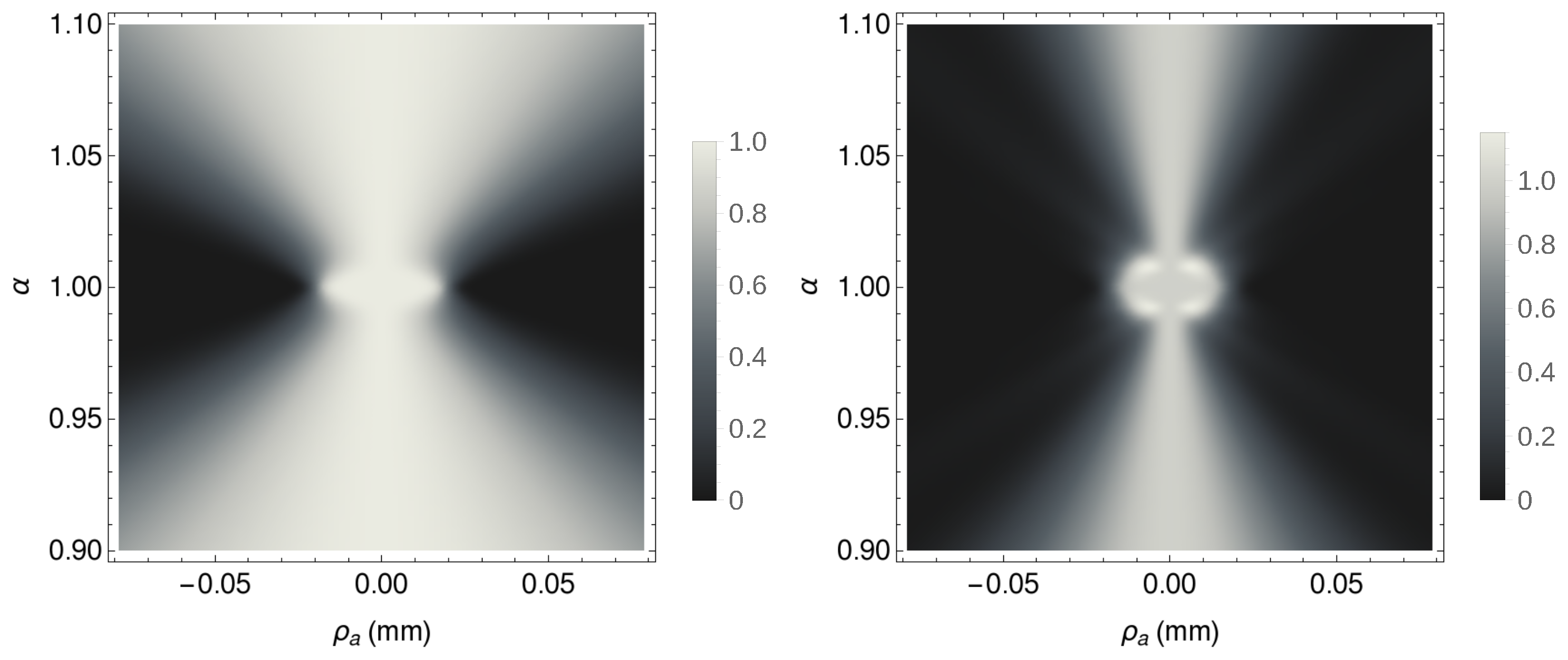

2.2. Plenoptic Properties of the Correlation Function and Refocusing Capability

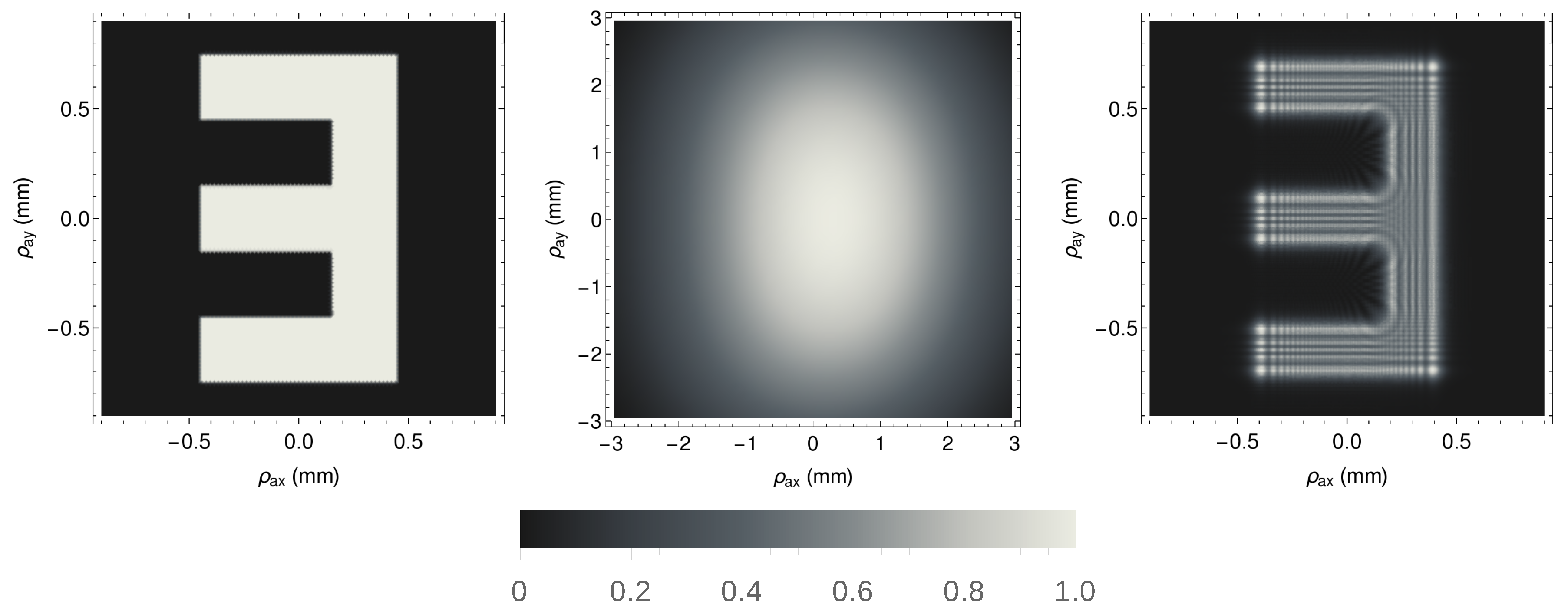

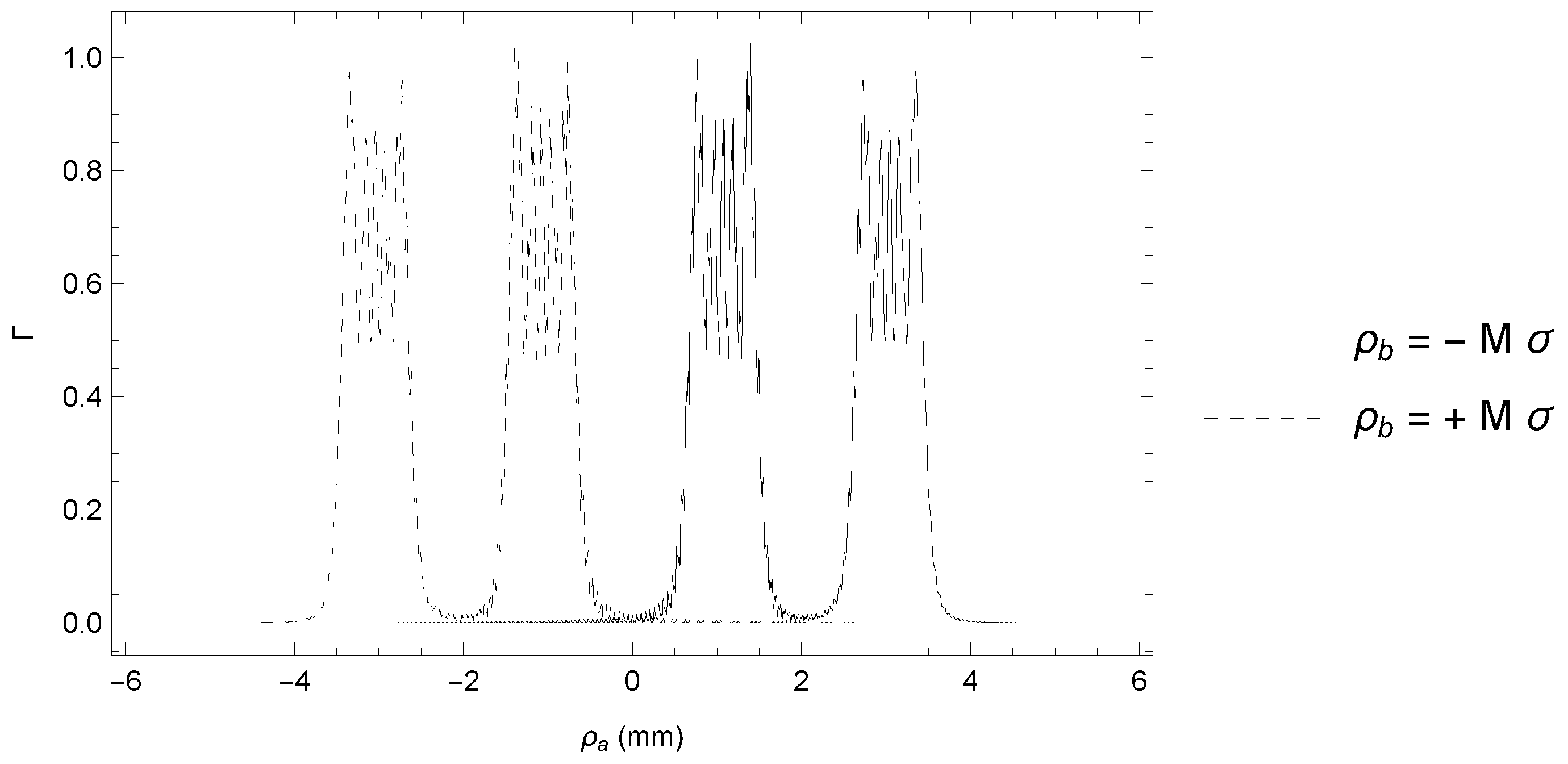

3. Simulation of CPI With Entangled Photons From SPDC

4. Discussion

5. Conclusions and Outlook

Acknowledgments

Author Contributions

Conflicts of Interest

References

- Adelson, E.H.; Wang, J.Y.A. Single Lens Stereo with a Plenoptic Camera. IEEE Trans. Pattern Anal. Mach. Intell. 1992, 14, 99–106.

- Xiao, X.; Javidi, B.; Martinez-Corral, M.; Stern, A. Advances in three-dimensional integral imaging: Sensing, display, and applications. Appl. Opt. 2013, 52, 546–560.

- Broxton, M.; Grosenick, L.; Yang, S.; Cohen, N.; Andalman, A.; Deisseroth, K.; Levoy, M. Wave optics theory and 3-D deconvolution for the light field microscope. Opt. Express 2013, 21, 25418–25439.

- Prevedel, R.; Yoon, Y.-G.; Hoffmann, M.; Pak, N.; Wetzstein, G.; Kato, S.; Schrödel, T.; Raskar, R.; Zimmer, M.; Boyden, E.S.; et al. Simultaneous whole-animal 3D imaging of neuronal activity using light-field microscopy. Nat. Methods 2014, 11, 727–730.

- Ng, R.; Levoy, M.; Brédif, M.; Duval, G.; Horowitz, M.; Hanrahan, P. Light Field Photography with a Hand-Held Plenoptic Camera; Tech Report CSTR 2005-02; Stanford University Computer Science: Stanford, CA, USA, 2005.

- Lytro ILLUM. Available online: https://www.lytro.com/illum (accessed on 2 June 2016).

- Raytrix. Available online: http://www.raytrix.de/ (accessed on 2 June 2016).

- 3D capture for the next generation. Available online: http://www.pelicanimaging.com (accessed on 2 June 2016).

- Liu, H.; Jonas, E.; Tian, L.; Jingshan, Z.; Recht, B.; Waller, L. 3D imaging in volumetric scattering media using phase-space measurements. Opt. Express 2015, 23, 14461–14471.

- Muenzel, S.; Fleischer, J.W. Enhancing layered 3D displays with a lens. Appl. Opt. 2013, 52, D97–D101.

- Levoy, M.; Hanrahan, P. Light field rendering. In Computer Graphics Annual Conference Series, Proceedings of the SIGGRAPH 1996, New Orleans, LA, USA, 4–9 August 1996; ACM SIGGRAPH: New York, NY, USA, 1996; pp. 31–42.

- Levoy, M.; Ng, R.; Adams, A.; Footer, M.; Horowitz, M. Light field microscopy. ACM Trans. Graph. 2006, 25, 924–934.

- Glastre, W.; Hugon, O.; Jacquin, O.; de Chatellus, H.G.; Lacot, E. Demonstration of a plenoptic microscope based on laser optical feedback imaging. Opt. Express 2013, 21, 7294–7303.

- Waller, L.; Situ, G.; Fleischer, J.W. Phase-space measurement and coherence synthesis of optical beams. Nat. Photonics 2012, 6, 474–479.

- Georgiev, T.; Zheng, K.C.; Curless, B.; Salesin, D.; Nayar, S.; Intwala, C. Spatio-Angular Resolution Tradeoff in Integral Photography. In Eurographics Symposium on Rendering (2006); Akenine-Möller, T., Heidrich, W., Eds.; The Eurographics Association: Geneva, Switzerland, 2006.

- Schroff, S.A.; Berkner, K. Image formation analysis and high resolution image reconstruction for plenoptic imaging systems. Appl. Opt. 2013, 52, D22–D31.

- Pérez, J.; Magdaleno, E.; Pérez, F.; Rodríguez, M.; Hernández, D.; Corrales, J. Super-Resolution in Plenoptic Cameras Using FPGAs. Sensors 2014, 14, 8669–8685.

- D’Angelo, M.; Pepe, F.V.; Garuccio, A.; Scarcelli, G. Correlation Plenoptic Imaging. Phys. Rev. Lett. 2016, 116, 223602.

- Pepe, F.V.; Scarcelli, G.; Garuccio, A.; D’Angelo, M. Plenoptic imaging with second-order correlations of light. Quantum Meas. Quantum Metrol. 2016, 3, 20–26.

- Ferri, F.; Magatti, D.; Gatti, A.; Bache, M.; Brambilla, E.; Lugiato, L.A. High-Resolution Ghost Image and Ghost Diffraction Experiments with Thermal Light. Phys. Rev. Lett. 2005, 94, 183602.

- Brida, G.; Chekhova, M.V.; Fornaro, G.A.; Genovese, M.; Lopaeva, L.; Ruo Berchera, I. Systematic analysis of signal-to-noise ratio in bipartite ghost imaging with classical and quantum light. Phys. Rev. A 2011, 83, 063807.

- Brida, G.; Genovese, M.; Ruo Berchera, I. Experimental realization of sub-shot-noise quantum imaging. Nat. Photonics 2010, 4, 227–230.

- Klyshko, D.N. Photons and Nonlinear Optics; CRC Press: Boca Raton, FL, USA, 1988.

- D’Angelo, M.; Valencia, A.; Rubin, M.H.; Shih, Y.H. Resolution of quantum and classical ghost imaging. Phys. Rev. A 2005, 72, 013810.

- D’Angelo, M.; Shih, Y.H. Quantum Imaging. Laser Phys. Lett. 2005, 2, 567–596.

- Scully, M.O.; Zubairy, M.S. Quantum Optics, 1st ed.; Cambridge University Press: Cambridge, UK, 1997.

- Rubin, M.H.; Klyshko, D.N.; Shih, Y.H.; Sergienko, A.V. Theory of two-photon entanglement in type-II optical parametric down-conversion. Phys. Rev. A 1994, 50.

- Rubin, M.H. Transverse correlation in optical spontaneous parametric down-conversion. Phys. Rev. A 1996, 54.

- Burlakov, A.V.; Chekhova, M.V.; Klyshko, D.N.; Kulik, S.P.; Penin, A.N.; Shih, Y.H.; Strekalov, D.V. Interference effects in spontaneous two-photon parametric scattering from two macroscopic regions. Phys. Rev. A 1997, 56.

- Kim, Y.-H. Measurement of one-photon and two-photon wave packets in spontaneous parametric downconversion. J. Opt. Soc. Am. B 2003, 20, 1959–1966.

- Baek, S.-Y.; Kim, Y.-H. Spectral properties of entangled photon pairs generated via frequency-degenerate type-I spontaneous parametric down-conversion. Phys. Rev. A 2008, 77, 043807.

- Rubin, M.H.; Shih, Y. Resolution of ghost imaging for nondegenerate spontaneous parametric down-conversion. Phys. Rev. A 2008, 78, 033836.

- Karmakar, S.; Shih, Y. Two-color ghost imaging with enhanced angular resolving power. Phys. Rev. A 2010, 81, 033845.

- Goodman, J.W. Introduction to Fourier Optics, 2nd ed.; McGraw-Hill Science/Engineering/Math: New York, NY, USA, 1996.

- Pittman, T.B.; Shih, Y.H.; Strekalov, D.V.; Sergienko, A.V. Optical imaging by means of two-photon quantum entanglement. Phys. Rev. A 1995, 52, R3429.

© 2016 by the authors; licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC-BY) license (http://creativecommons.org/licenses/by/4.0/).

Pepe, F.V.; Di Lena, F.; Garuccio, A.; Scarcelli, G.; D’Angelo, M. Correlation Plenoptic Imaging With Entangled Photons. Technologies 2016, 4, 17. https://doi.org/10.3390/technologies4020017