1. Introduction

The paper treats the simple linear regression

when the errors

are observations from a heavy tailed distribution, and the explanatory variables

Xi too.

In linear regression, the explanatory variables are often assumed to be equidistant on an interval. If the values are random, they may be uniformly distributed over an interval or normal or have some other distribution. In this paper, the explanatory variables are random. The Xi are inverse powers of uniform variables Ui in (0,1): . The variables Xi have a Pareto distribution with tail index ξ > 0. The tails become heavier as the index increases. For ξ ≥ 1, the expectation is infinite. We assume that the error variables have heavy tails too, with tail index η > 0. The aim of this paper is twofold:

The paper compares a number of estimators

E for the regression line in the case of heavy tails. The distribution of the error is Student or Pareto. The errors are scaled to have InterQuartile Distance IQD = 1. The tail index

ξ of the Pareto distribution of the explanatory variable varies between zero and three; the tail index

η of the error varies between zero and four. The performance of an estimator

E is measured by the loss function

L(

u) =

u2 applied to the difference between the slope

a of the regression line and its estimate

. Our approach is unorthodox. For various values of the tail indices

ξ and

η, we compute the average loss for ten batches of a hundred thousand simulations of a sample of size one hundred. Theorems and proofs are replaced by tables and programs. If the error has a symmetric distribution, the square root of the average loss is the empirical sd (standard deviation). From the tables in

Section 7, it may be seen that, for good estimators, this sd depends on the tail index of the explanatory variables rather than the tail index of the error. As a rule of thumb, the sd is of the order of

This crude approximation is also valid for errors with a Pareto distribution. It may be used to determine whether an estimator of the regression line performs well for heavy tails.

The paper introduces a new class of non-linear estimators. A weighted balance estimator of the regression line is a bisector of the sample. For even sample size half the points lie below the bisector, half above. There are many bisectors. A weight sequence is used to select a bisector which yields a good estimate of the regression line. Weighted balance estimators for linear regression may be likened to the median for univariate samples. The LAD (Least Absolute Deviation) estimator is a weighted balance estimator. However, there exist weighted balance estimators which perform better when the explanatory variable has heavy tails.

The results of our paper are exemplary rather than analytical. They describe the outcomes of an initial exploration on estimators for linear regression with heavy tails. The numerical results in the tables in

Section 7 may be regarded as benchmarks. They may be used to measure the performance of alternative estimators. Insight in the performance of estimators of the regression line for samples of size one hundred where the explanatory variable has a Pareto distribution and the error a Student or Pareto distribution may help to select a good estimator in the case of heavy tails.

The literature on the LAD(Least Absolute Deviation) estimator is extensive (see

Dielman (

2005)). The theory for the TS (Theil–Sen) estimator is less well developed, even though TS is widely used for data which may have heavy tails, as is apparent from a search on the Internet. A comparison of the performance of these two estimators is overdue.

When the tail indices

ξ and

η are positive, outliers occur naturally. Their effect on estimates has been studied in many papers. A major concern is whether an outlier should be accepted as a sample point. In simulations, contamination does not play a role. In this paper, outliers do not receive special attention. Robust statistics does not apply here. If a good fairy were to delete all outliers, that would incommode us. It is precisely the outliers which allow us to position the underlying distribution in the (

ξ,

η)-domain and select the appropriate estimator. Equation (

2) makes no sense in robust regression. Our procedure for comparing estimators by computing the average loss over several batches of a large number of simulations relies on uncontaminated samples. This does not mean that we ignore the literature on robust regression. Robust regression estimates may serve as initial estimates. (This approach does not do justice to the special nature of robust regression, which aims at providing good estimates of the regression line when working with contaminated data.) In our paper, we have chosen a small number of geometric estimators of the regression line, whose performance is then compared for a symmetric and an asymmetric error distribution at various points in the

ξ,

η-domain, see

Figure 1a. In robust regression, one distinguishes M-, R- and L-estimators. We treat the M-estimators LS and LAD. These minimize the

distance of the residuals for

p = 2 and

p = 1, respectively. We have not looked at other values of

p ∈ [1,∞). Tukey’s biweight and Huber’s Method are non-geometric M-estimators since the estimate depends on the scaling on the vertical axis. The R-estimators of Jaeckel and Jurečková are variations on the LAD estimator. They are less sensitive to the behaviour of the density at the median, as we show in

Section 3. They are related to the weighted balance estimators WB40, and are discussed in

Section 4. Least Trimmed Squares (LTS) was introduced in

Rousseeuw (

1984). It is a robust version of least squares. It is a geometric L estimator. Least Median Squares introduced in the same paper yields the central line of a closed strip containing fifty of the hundred sample points. It selects the strip with minimal vertical width. If the error has a symmetric unimodal density one may add the extra condition that there are twenty five sample points on either side of the strip. This estimator was investigated in a recent paper

Postnikov and Sokolov (

2015). Maximum Likelihood may be used if the error distribution is known. We are interested in estimators which do not depend on the error distribution, even though one has to specify a distribution for the error in order to measure the performance.

Nolan and Ojeda-Revah (

2013) uses Maximum Likelihood to estimate the regression line when the errors have a stable distribution and the explanatory variable (design matrix) is deterministic. The paper contains many references to applications. The authors write: “In these applications, outliers are not mistakes, but an essential part of the error distribution. We are interested in both estimating the regression coefficients and in fitting the error distribution.” These words also give a good description of the aim of our paper.

There is one recent paper which deserves special mention. It uses the same framework as our paper. In

Samorodnitsky et al. (

2007), the authors determined limit distributions for the difference

for certain linear estimators for the linear regression

. The error

Y* is assumed to have a symmetric distribution with power tails, and the absolute value of the explanatory variable also has a power tail. The tail indices are positive. The estimators are linear expressions in the error terms and functions of the absolute value of the explanatory variables (which in our paper are assumed to be positive):

The estimator E = Eθ depends on a parameter θ > 1 . The value θ = 2 yields LS. The paper distinguishes seven subregions in the positive (ξ,η)-quadrant with different rates of convergence. The paper is theoretical and focuses on the limit behaviour of the distribution of the estimator when the sample size tends to infinity. We look at the same range of values for the tail indices ξ and η, but our approach is empirical. We focus on estimators which perform well in terms of the quadratic loss function L(u) = u2. Such estimators are non-linear. We allow non-symmetric error distributions, and our regression line may have a non-zero abscissa. We only consider two classes of dfs for the error term, Student and Pareto, and our explanatory variables have a Pareto distribution. We restrict attention to the rectangle, (ξ,η) ∈ [0,3] × [0,4]. In our approach, the horizontal line η = 1/2 and the vertical line ξ = 1/2 turn out to be critical, but for ξ,η ≥ 1/2 the performance of the estimators depends continuously on the tail indices. There are no sharply defined subregions where some estimator is optimal. Our treatment of the behaviour for n → ∞ is cursory. The two papers present complementary descriptions of linear regression for heavy tails.

Let us give a brief overview of the contents. The exposition in

Section 2 gives some background and supplies the technical details for understanding the results announced above. The next four sections describe the estimators which are investigated in our paper. The first describes the three well-known estimators LS, LAD and RMP. Least Squares performs well for 0 ≤

η < 1/2 when the error has finite variance. Least Absolute Deviation performs well when

ξ is small. The estimator RMP (RightMost Point) selects the bisector which passes through the rightmost sample point. Its performance is poor, but its structure is simple. The next section treats the Weighted Balance estimators. The third treats Theil’s estimator which selects the line such that Kendall’s tau vanishes for the residuals, rather than the covariance as for Least Squares. It also introduces a weighted version of the Theil–Sen estimator. The last of these four sections introduces four estimators based on trimming: the Weighted Least Trimmed Squares estimator, WLTS, a weighted version of the estimator introduced by Rousseeuw and described above, and three estimators which select a bisector for which a certain state function is minimal when the 25 furthest points above the bisector are trimmed and the furthest 25 below. For these three estimators, trimming is related to the procedure RANSAC proposed in

Fischler and Bolles (

1981) for image analysis.

The heart of our paper is the set of tables in

Section 7 where for

ξ = 0, 1/2, 1, 3/2, 2, 3 we compare the performance of different estimators. The errors have a Student or Pareto distribution. The tail index of these distributions varies over 0, 1/3, 1/2, 2/3, 1, 3/2, 2, 3, 4. To make the results for different values of the tail index

η comparable, the errors are standardized so that their df

F* satisfies

This ensures that the InterQuartile Distance is IQD = 1. There are three sets of six tables corresponding to the six values of the tail index of the explanatory variable, ξ = 0, 1/2, 1, 3/2, 2, 3.

The first set of tables lists the empirical sd for LS, LAD, Power Corrected LAD, the Theil–Sen estimator and three estimators based on trimming, all for errors with a Student distribution. The estimators do not depend on the tail index of the error.

The second set of tables lists the empirical sd for the Hyperbolic Correction of LAD, the Right Median, RM, the Hyperbolic Balance estimators HB0 and HB40, Weighted Theil–Sen, and Weighted Least Trimmed Squares, WLTS, all for errors with a Student distribution. The estimators contain parameters which depend on the value of the tail indices, ξ and η. Thus, the Right Median, RM, depends on an odd positive integer which tells us how many of the rightmost points are used for the median. In WLTS, there are three parameters, the number of sample points which are trimmed, and two positive real valued parameters which determine a penalty for deleting sample points which lie far to the right.

The third set of tables does the same as the second, but here the errors have a Pareto distribution. Both the empirical sd and bias of the estimates are listed.

The estimators yielding the tables are simple functions of the 2

n real numbers which determine the sample. Apart from a choice of the parameters they do not depend on the form of the distribution of the error or the explanatory variable. The results show a continuous dependence of the empirical sd on the tail indices

ξ and

η both for Student and for Pareto errors. The sd and the value of the parameters are different in the third set of tables (Pareto errors) and the second (Student errors) but the similarity of the performance of the estimators for these two error distributions suggests that the empirical sds in these tables will apply to a wide range of error densities. The fourth table lists the optimal values of the parameters for various estimators. The example in

Section 7.3 shows how the techniques of the paper should be applied to a sample of size

n = 231 if neither the values of the tail indices

ξ and

η nor the distribution of the error are known.

The results in the three tables are for sample size

n = 100. The explanatory variables are independent observations from a Pareto distribution on (1,∞), with tail index

ξ > 0, arranged in decreasing order. One may replace these by the hundred largest points in a Poisson point process on (0,∞) with a Pareto mean measure with the same tail index. This is done in

Appendix B. A scaling argument shows that the slope of the estimate of the regression line for the Poisson point process is larger by a factor approximately 100

ξ compared to the iid sample. For the Poisson point process the rule of thumb in Equation (

2) for the sd of the slope for good estimators has to be replaced by

The performance decreases with ξ since the fluctuations in the rightmost point around the central value x = 1 increase and the remaining 99 sample points Xi, i > 1, with central value 1/iξ, tend to lie closer to the vertical axis as ξ increases and hence give less information about the slope of the regression line.

What happens if one uses more points of the point process in the estimate? For

ξ ≤ 1/2, the full sequence of the points of the Pareto point process together with the independent sequence of errors

determines the true regression line almost surely. The full sequence always determines the distribution of the error variable, but for errors with a Student distribution and

ξ > 1/2 it does not determine the slope of the regression line. For weighted balance estimators, the step from

n = 100 to ∞ is a small one. If

ξ is large, say

ξ ≥ 3/4, the crude Equation (

4) remains valid for sample size

n > 100.

Conclusions are formulated in

Section 8. The Appendix contains three sections:

Appendix A treats tails and shows when tails of Weighted Balance estimators

are comparable to those of the error

Y*, and when they have finite second moment;

Appendix B gives a brief exposition of the alternative Poisson point process model; and

Appendix C introduces EGBP distributions. These often give a surprisingly good fit to the distribution of the logarithm of the absolute value of

for symmetric errors.

2. Background

In this paper, both the explanatory variables

Xi and the errors

in the linear regression

have heavy tails. The vectors(

X1, …,

Xn) and

are independent; the

are iid; and the

Xi are a sample from a Pareto distribution on (1,∞) arranged in decreasing order:

The Pareto explanatory variables may be generated from the order statistics U1 < ⋯ < Un of a sample of uniform variables on (0,1) by setting . The parameter ξ > 0 is called the tail index of the Pareto distribution. Larger tail indices indicate heavier tails. The variables have tail index η. They typically have a symmetric Student t distribution or a Pareto distribution. For the Student distribution, the tail index η is the inverse of the degrees of freedom. At the boundary, η = 0 and the Student distribution becomes Gaussian, the Pareto distribution exponential.

The problem addressed in this paper is simple: What are good estimators of the regression line for a given pair (ξ,η) of positive power indices?

For

η < 1/2, the variable

Y* has finite variance and LS (Least Squares) is a good estimator. For

ξ < 1/2, the Pareto variable

X = 1/

Uξ has finite variance. In that case, the LAD (Least Absolute Deviation) often is a good estimator of the regression line. Asymptotically it has a (bivariate) normal distribution provided the density of

Y* is positive and continuous at the median, see

Van de Geer (

1988). What happens for (

ξ,

η) ∈ [1/2,∞)

2? In the tables in

Section 7, we compare the performance of several estimators at selected parameter values (

ξ,

η) for sample size

n = 100. See

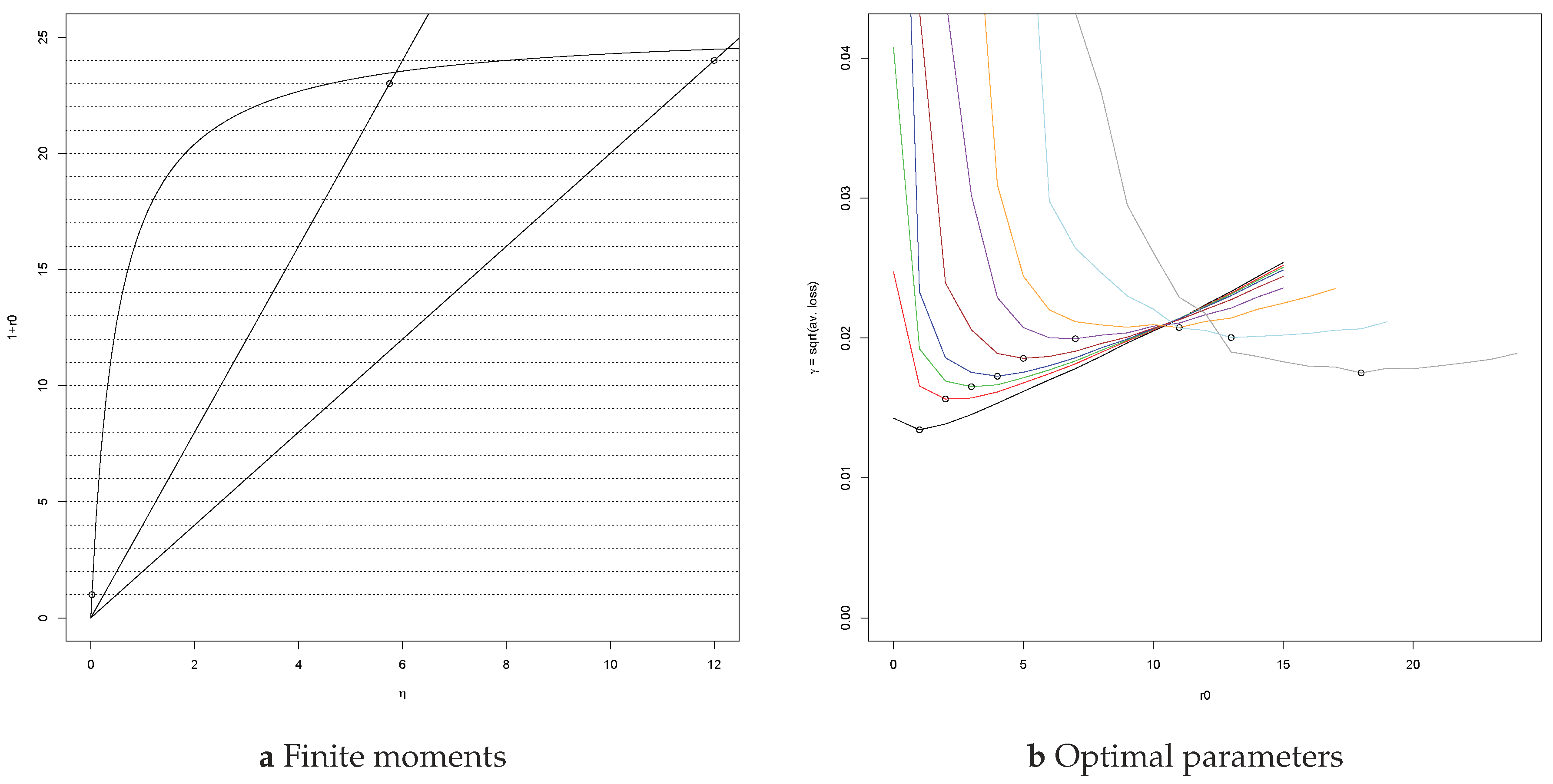

Figure 1a. First, we give an impression of the geometric structure of the samples which are considered in this paper, and describe how such samples may arise in practice.

For large

ξ, the distribution of the points

Xi along the positive horizontal axis becomes very skewed. For

ξ = 3 and a sample of a hundred

.

1 Exclude the rightmost point. The remaining 99 explanatory variables then all lie in an interval which occupies less than one percent of the range. The point (

X1,

Y1) is a pivot. It yields excellent estimates of the slope if the absolute error is small. The estimator RMP (RightMost Point) may be expected to yield good results. This estimator selects the bisector which passes through (

X1,

Y1).

Definition 1. A bisector of the sample is a line which divides the sample into two equal parts. For an even sample of size 2m, one may choose the line to pass through two sample points: m − 1 sample points then lie above the line and the same number below.

The estimator RMP will perform well most of the time but if

Y* has heavy tails RMP may be far off the mark occasionally, even when

η <

ξ. What is particularly frustrating are situations like

Figure 1b where RMP so obviously is a poor estimate.

Figure 1a shows the part of (

ξ,

η)-space to which we restrict attention in the present paper. For practical purposes, the square 0 ≤

ξ,

η ≤ 3/2 is of greatest interest. The results for other values of

ξ and

η may be regarded as the outcome of stress tests for the estimators.

Often, the variables are interpreted as iid errors. It then is the task of the statistician to recover the linear relation between the vertical and horizontal coordinate from the blurred data for Y. The errors are then usually assumed to have a symmetric distribution, normal or stable.

There is a different point of view. For a bivariate normal vector (X,Y), there exists a line, the regression line y = b + ax, such that conditional on X = x the residual Y* = Y − (ax + b) has a centred normal distribution independent of x. A good estimate of the regression line will help to obtain a good estimate of the distribution of (X,Y). This situation may also occur for heavy-tailed vectors.

Heffernan and Tawn (

2004) studies the distribution of a vector conditional on the horizontal component being large. In this

conditional extreme value model, the authors focus on the case where the conditional distribution of

Y given

X =

x is asymptotically independent of

x for

x → ∞. The vector

Z = (

X,

Y) conditioned to lie in a half plane

Ht = {

x >

t} is a

high risk scenario, denoted by

ZHt. Properly normalized, the high risk scenarios

ZHt may have a limit distribution for

t → ∞. From univariate extreme value theory, we know that the horizontal coordinate of the limit vector has a Pareto or exponential distribution. In the

Heffernan–Tawn model, the vertical coordinate of the limit vector is independent of the horizontal coordinate. Heffernan and Tawn in

Heffernan and Tawn (

2004) considered vectors with light tails. The results were extended to heavy tails by Heffernan and Resnick in

Heffernan and Resnick (

2007). See

Balkema and Embrechts (

2007) for a list of all limit distributions.

Given a sample of a few thousand observations from a heavy-tailed bivariate distribution, one will select a subsample of say the hundred points for which the horizontal coordinate is maximal. This yields a sequence

x1 > ⋯ >

x100. The choice of the horizontal axis determines the vertical coordinate in the points (

x1,

y1), …, (

x100,

y100). In the Heffernan–Tawn model, the vertical coordinate may be chosen to be asymptotically independent of

x for

x → ∞. To find this preferred vertical coordinate, one has to solve the linear regression Equation (

1). The

residuals,

, allow one to estimate the distribution of the error

and the tail index

η.

The model , where X1 > ⋯ > Xn are the extreme sample points and the vertical coordinates are asymptotically iid and independent of the values Xi, yields a nice bivariate extension of univariate Extreme Value Theory. In our analysis of the Heffernan-Tawn model, it became clear that good estimates of the slope of the regression line for heavy tails are essential if one wants to apply the model. The interpretation of the data should not effect the statistical analysis. Our interest in the Heffernan–Tawn model accounts for the Pareto distribution of the explanatory variable and the assumption of heavy tails for the error term. It also accounts for our focus on estimates of the slope.

We restrict attention to

geometric estimators of the regression line. Such estimators are called

contravariant in

Lehmann (

1983). A transformation of the coordinates has no effect on the estimate of the regression line

L. It is only the coordinates which are affected.

Definition 2. The group of affine transformations of the plane which preserve orientation and map right vertical half planes into right vertical half planes consists of the transformations An estimator of the regression line is geometric if the estimate is not affected by coordinate transformations in .

Simulations are used to compare the performance of different estimators. For geometric estimators, one may assume that the true regression line is the horizontal axis, that the Pareto distribution of the explanatory variables Xi is the standard Pareto distribution on (1,∞) with tail , and that the errors are scaled to have IQD = 1. Scaling by the InterQuartile Distance IQD allows us to compare the performance of an estimator for different error distributions.

The aim of this paper is to compare various estimators of the regression line for heavy tails. The heart of the paper is the set of tables in

Section 7. To measure the performance of an estimator

E we use the loss function

L(

a) =

a2. We focus on the slope of the estimated regression line

. For given tail indices (

ξ,

η), we choose

X = 1/

Uξ Pareto and errors

Y* with a Student or Pareto distribution with tail index

η, scaled to have IQD = 1. We then compute the average loss

Lr of the slope

for

r simulations of a sample of size

n = 100 from this distribution.

where the sum is taken over the outcomes

for

r simulations. We choose

r = 10

5. The square root

is our measure of performance. It is known as RMSE (Root Mean Square Error). We do not use this term. It is ambiguous. The mean may indicate the average, but also the expected value. Moreover, in this paper, the error is the variable

Y* rather than the difference

. If the df

F* of

Y* is symmetric, the square root

is the empirical sd of the sequence of

r outcomes of

. The quantity

γ is random. If one starts with a different seed, one obtains a different value

γ. Since

r is large. one may hope that the fluctuations in

γ for different batches of

r simulations is small. The fluctuations depend on the distribution of

γ, and this distribution is determined by the tail of the random variable

. The average loss

Lr is asymptotically normal if

L has a finite second moment. For this, the estimate

has to have a finite fourth moment. In

Section 3, the distribution of

γ is analyzed for

ξ =

η =

i/10,

i = 2, …, 7, for the estimator

E = LS and Student errors. We show how the distribution of

γ changes on passing the critical value

η = 1/2.

The fluctuations in

γ are perhaps even more important than the average value in determining the quality of an estimator. To quantify the fluctuations in

γ we perform ten batches of 10

5 simulations. This yields ten values

γi for the empirical sd. Compute the average

μ and the sd

. The two quantities

μ and

δ describe the performance of the estimator. Approximate

δ by one of

Now, approximate the pair (

μ,

δ) by (

m/10

k,

d/10

k) with

d ∈ {1, 2, 5} and

m an integer. For the representation (

m/10

k,

d/10

k), it suffices to know the integers

m,

k and the digit

d ∈ {1, 2, 5}. In our case,

δ typically is small, 0 <

δ < 1 for estimators with good performance. In that case,

k is non-negative and one can reconstruct

m and

k from the decimal fraction

m/10

k provided one writes out all

k digits after the decimal point, including the zeros. That allows one to reconstruct the pair (

m/10

k,

d/10

k) from

m/10

k[

d]. This lean notation is also possible if one writes

m/10

k as

me −

k, as in 17

e + 6, the standard way to express seventeen million in R. In this paper, we often write

m/10

k[

d] except when this notation leads to confusion. Here are some examples: For (

μ,

δ) = (0.01297, 0.000282), (136.731 × 10

−7, 1.437 × 10

−7), (221.386, 3.768), (221.386, 37.68) and (334567.89, 734567.89) the recipe gives:

Actually, we still have to say how we reduce

δ to

d/10

k. Take

d = 1; if 10

kδ lies in [0.7, 1.5],

d = 2 for 1.5 ≤ 10

kδ < 3 and

d = 5 for 3 ≤ 10

kδ < 7. Finally, define

. In Equation (

6), the reader sees at a glance the average of the outcomes

γi of the ten batches, and the magnitude of the fluctuations.

Let us mention two striking results of the paper. The first concerns LAD (Least Absolute Deviation), a widely used estimator which is more robust than LS (Least Squares) since it minimizes the sum of the absolute deviations rather than their squares. This makes the estimator less sensitive to outliers of the error. The LAD estimate of the regression line is a bisector of the sample. For

ξ > 1/2, the outliers of the explanatory variable affect the stability of the LAD estimate (see

Rousseeuw (

1991), p. 11).

Table 1 lists some results from

Section 7 for the empirical sd of the LAD-estimate.

The message from the table is clear. For errors with infinite second moment,

η ≥ 1/2, use LAD, but not when

ξ ≥ 1/2. Actually, the expected loss for

is infinite for

η ≥ 1/2 for all

ξ. In this respect LAD is no better than LS. If the upper tail of the error varies regularly with negative exponent, the quotient

is a bounded function on

. See Theorem 2.

The discrepancy between the empirical sd, based on simulations, and the theoretical value is disturbing. Should a risk manager feel free to use LAD in a situation where the explanatory variable is positive with a tail which decreases exponentially and the errors have a symmetric unimodal density? Conversely, should she base her decision on the mathematical result in the theorem? The answer is clear: One hundred batches of a quintillion simulations of a sample of size

n = 100 with

X standard exponential and

Y* Cauchy may well give outcomes which are very different from 0.0917[5]. Such outcomes are of no practical interest. The empirical sds computed in this paper and listed in the tables in

Section 7 may be used for risks of the order of one in ten thousand, but for risks of say one in ten million—risks related to catastrophic events—other techniques have to be developed.

A million simulations allow one to make frequency plots which give an accurate impression of the density when a density exists. Such plots give more information then the empirical sd; they suggest a shape for the underlying distribution. We plot the log frequencies in order to have a better view of the tails. Log frequencies of Gaussian distributions yield concave parabolas.

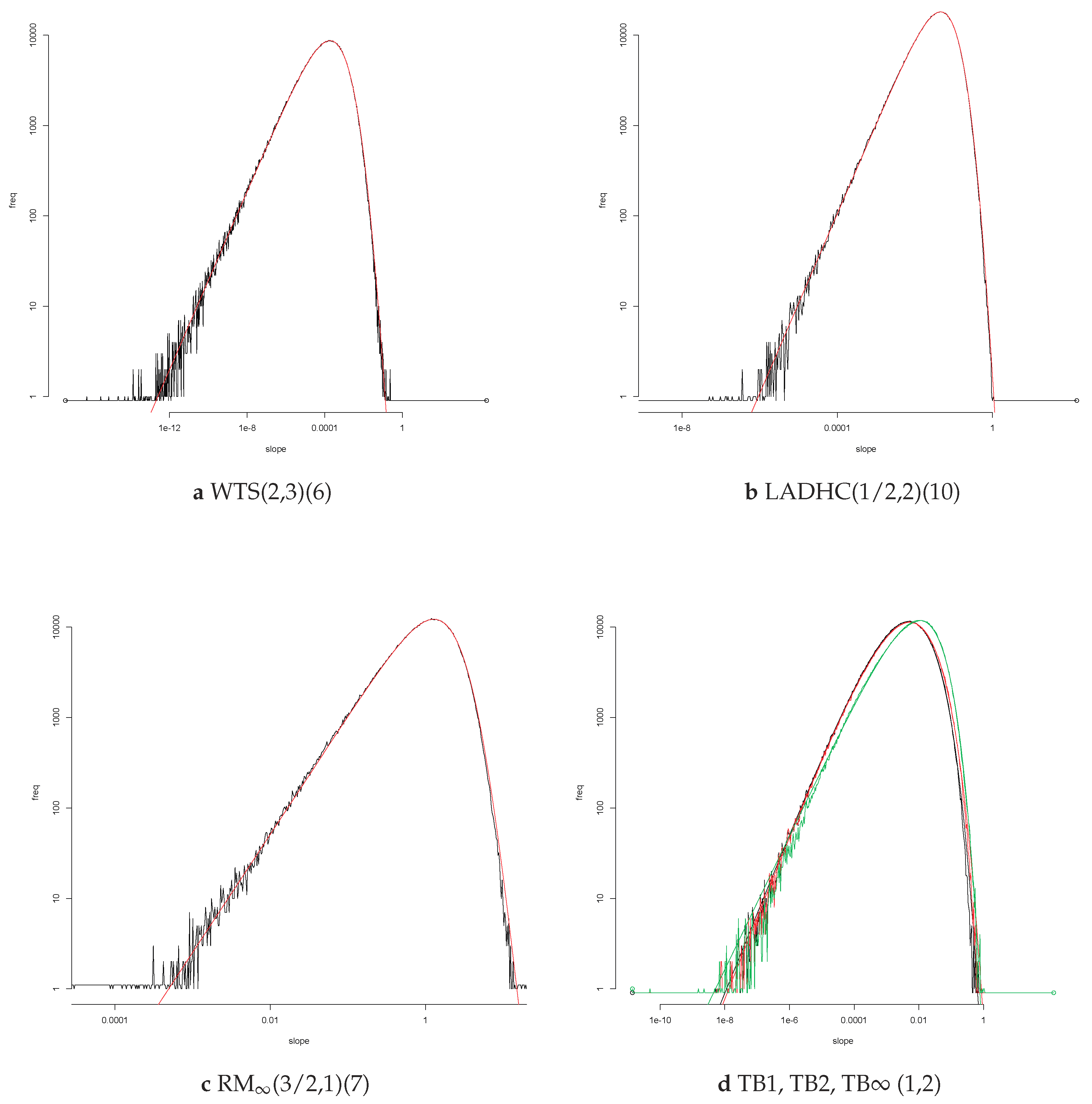

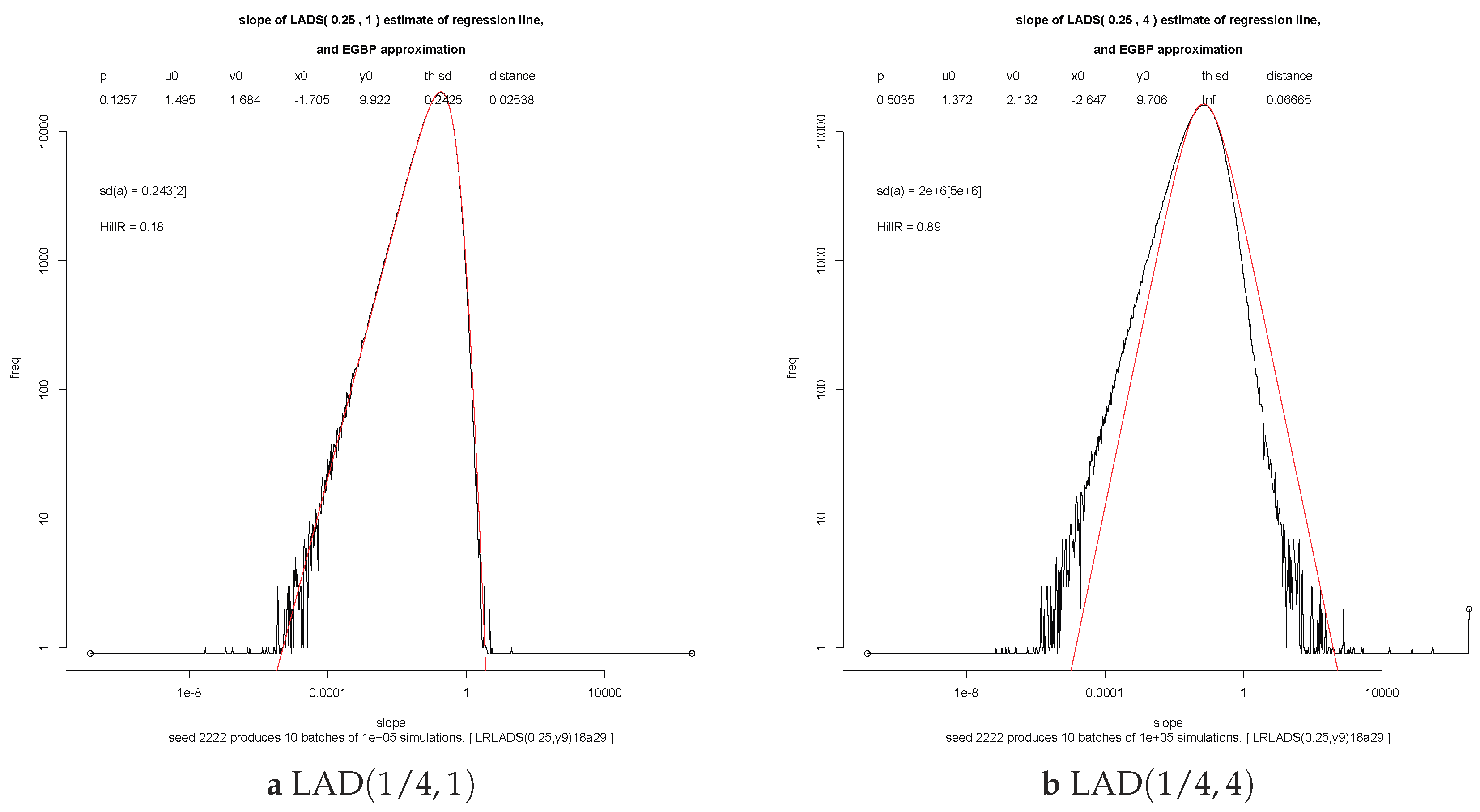

Figure 2 shows loglog frequency plots for two estimators of the regression line for errors with a symmetric Student distribution for (

ξ,

η) = (3,4). A loglog frequency plot of

plots the log of the frequency of

. It yields a plot with asymptotes with non-zero slopes if the df of

has power tails at zero and infinity.

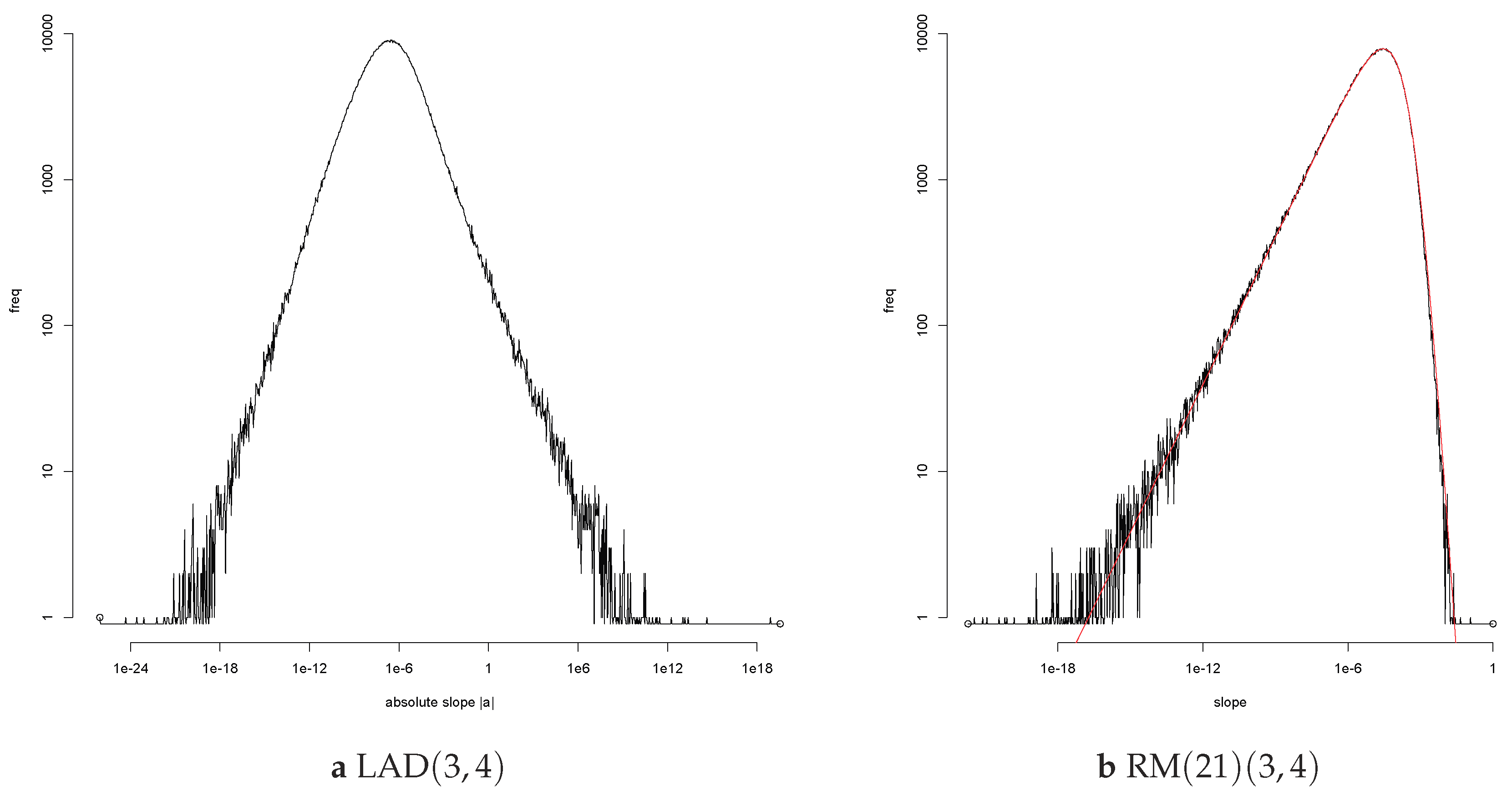

First, consider the loglog frequency plot of

on the left. The range of

in

Figure 2a is impressive. The smallest value of |

a| is of the order of 10

−24, the largest of the order of 10

20. A difference of more than forty orders of magnitude. Accurate values occur when

X1 is large and |

Y*| is small. The LAD estimate of the regression line is a bisector of the sample. In extreme cases it will pass through the rightmost point and agree with the RMP estimate. The value will then be of the order of 1/

X1. The minimum is determined by the minimal value of 10

8 simulations of a standard uniform variable. For

ξ = 3, this gives the rough value 10

−24 for the most accurate estimate. Large values of |

a| are due to large values of |

Y1|. Because of the tail index

η = 4, the largest value of |

Y1| will be of the order of 10

24. Then, |

Y1|/

X1 is of the order of 10

18. For the tail index pair (

ξ,

η) = (3,4), the limits of computational accuracy for R are breached.

The asymptotes in the loglog frequency plots for

correspond to power tails for

, at the origin and at infinity. The slope of the asymptote is the exponent of the tail. The plot on the right,

Figure 2b, shows that it is possible to increase the absolute slope of the right tail and thus reduce the inaccurate estimates. The value 0e+16[1] for the sd of the LAD estimate of the slope is reduced to 0.00027[5] for RM(21). The Right Median estimate RM(21) with parameter 21 is a variation on the Rightmost Point estimate RMP. Colour the 21 rightmost sample points red and then choose the bisector of the sample which also is a bisector of the red points. This is a discrete version of the cake cutting problem: “Cut a cake into two equal halves, such that the icing is also divided fairly.” The RM estimate passes through a red and a black point. Below the line are 49 sample points, ten of which are red; above the line too. The tail index of

is at most 2

η/20 = 0.4 by Theorem A2. The estimate

has finite sd even though the value 0.00027[5] shows that the fluctuations in the empirical sd for ten batches of a hundred thousand simulations are relatively large.

The smooth red curve in the right hand figure is the EGBP fit to the log frequency plot of

, see

Appendix C.

The tables in

Section 7 compare the performance of various estimators. It is not the value of the empirical sds listed in these tables which are important, but rather the induced ordering of the estimators. For any pair (

ξ,

η) and any estimator

E, the empirical sd and the value of the parameter will vary when the df

F* of the error

Y* is varied, but the relative order of the estimators is quite stable as one sees by comparing the results for Student and Pareto errors with the same tail indices.

The remaining part of this section treats the asymptotic behaviour of estimators when the sample size tends to ∞ via a Poisson point process approach. This part may be skipped on first reading.

Recall that our interest in the problem of linear regression for heavy tails was triggered by a model in extreme value theory. One is interested in the behaviour of a vector

X when a certain linear functional

is large. What happens to the distribution of the high risk scenario

for the half space

Ht = {

ζ ≥

t} for

t → ∞? We consider the bivariate situation and choose coordinates such that

ζ is the first coordinate. In the Heffernan–Tawn model, one can choose the second coordinate such that the two coordinates of the high risk scenario are asymptotically independent. More precisely there exist normalizations of the high risk scenarios, affine transformations mapping the vertical half plane

Ht onto

H1 such that the normalized high risk scenarios converge in distribution. The limit scenario lives on

H1 = {

x ≥ 1}. The first component of the limit scenario has a Pareto (or exponential) distribution and is independent of the second component. The normalizations which yield the limit scenario, applied to the samples, yield a limiting point process on {

x > 0} (by the Extension Theorem, Theorem 14.12, in

Balkema and Embrechts (

2007).) If the limit scenario has density

r(

x)

f*(

y) on

H1 with

r(

x) =

λ/

xλ + 1 on (1, ∞), the limit point process is a Poisson point process

N0 with intensity

r(

x)

f*(

y) where

r(

x) =

λ/

xλ + 1 on (0, ∞). It is natural to use this point process

N0 to model the hundred points with the maximal

x-values in a large sample from the vector

X.

For high risk scenarios, estimators may be evaluated by looking at their performance on the hundred rightmost points of this Pareto point process. For geometric estimators the normalizations linking the sample to the limit point process do not affect the regression line since the normalizations belong to the group used in the definition of geometric estimator above. The point process N0 actually is a more realistic model than an iid sample.

For geometric estimators, there is a simple relation between the slope

An of the regression line for the

n rightmost points

,

i = 1, …,

n, of the point process

N0 and the slope

of the regression line for a sample of

n independent observations

:

The first n points of the standard Poisson point process divided by the next point, Zn, are the order statistics from the uniform distribution on (0,1) and independent of the Gamma(n + 1) variable Zn with density xne−x/n! on (0,∞).) A simple calculation shows that ζn = nξ + c(ξ) + o(1) for n → ∞.

The point process Na with points , , has intensity r(x)f*(y − ax). The step from n to n + 1 points in estimating the linear regression means that one reveals the n + 1 st point of the point process Na. This point lies close to the vertical axis if n is large and very close if ξ > 0 is large too. The new point will give more accurate information about the abscissa b of the regression line than the previous points since the influence of the slope decreases. For the same reason, it will give little information on the value of the slope a. The point process approach allows us to step from sample size n = 100 to ∞ and ask the crucial question: Can one distinguish Na and N0 for a ≠ 0?

Almost every realization of

Na determines the probability distribution of the error but it need not determine the slope

a of the regression line. Just as one cannot distinguish a sequence of standard normal variables

Wn from the sequence of shifted variables

Wn + 1/

n with absolute certainty, one cannot distinguish the Poisson point process

Na from

N0 for

ξ > 1/2 and errors with a Student distribution. The distributions of the two point processes

Na and

N0 are equivalent. See

Appendix B for details.

The equivalence of the distributions of

Na and

N0 affects the asymptotic behaviour of the estimates

An of the slope of the regression line for the Poisson point process. There exist no estimators for which

An converges to the true slope. The limit, if it exists, is a random variable

A∞, and the loss (

A∞ −

a)

2 is almost surely positive. Because of the simple scaling relation in Equation (

7) between the estimate of the slope for iid samples and for

N, the limit relation

An ⇒

A∞ implies

.

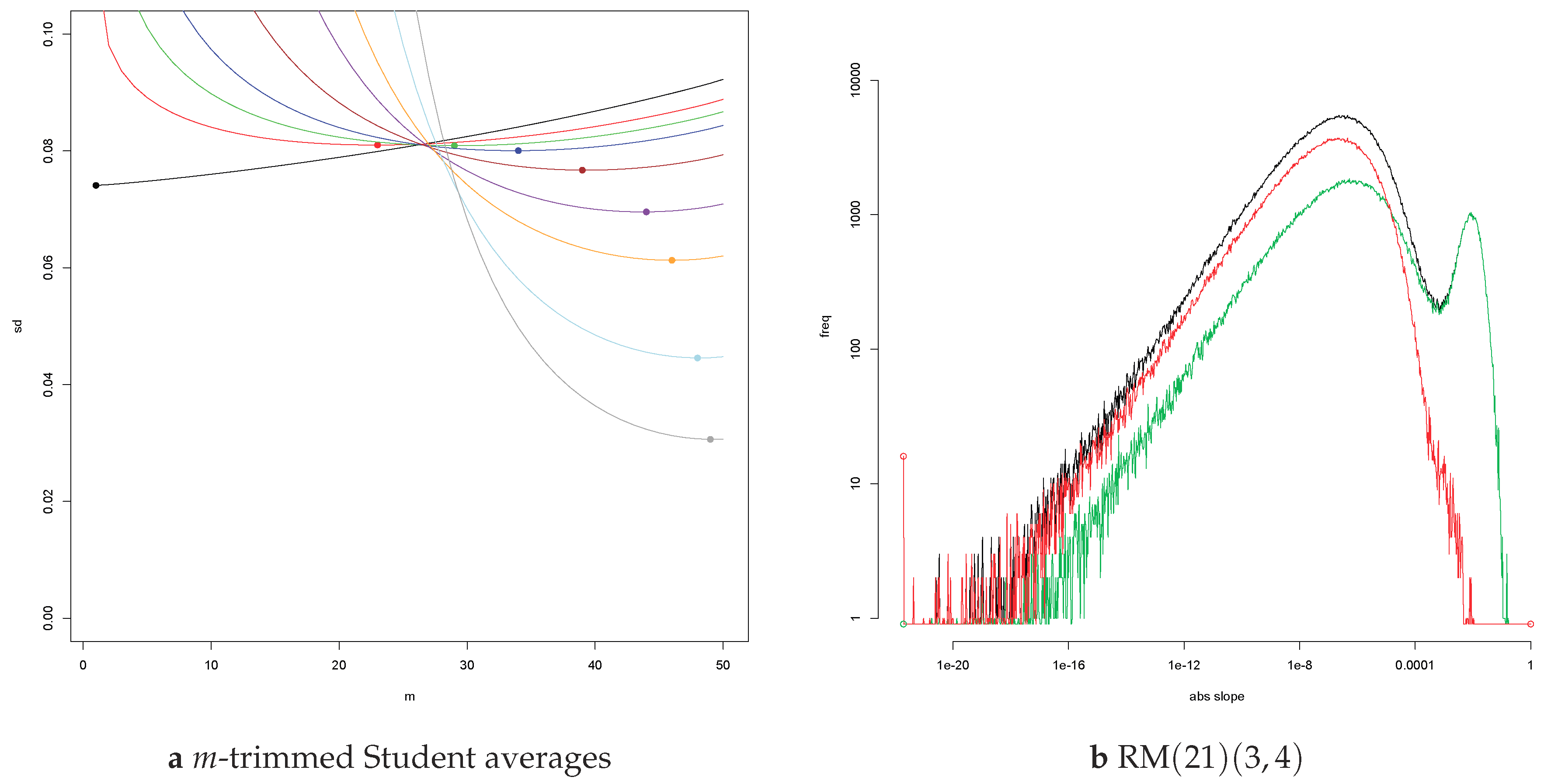

For errors with finite second moment, the sd

σn of the slope of the LS estimate

An of the regression line

y =

ax +

b based on the

n rightmost points of the Poisson point process has a simple expression in terms of the positions of the explanatory variables

x1 > ⋯ >

xn, as does the sd

of the slope of the LS estimate of the regression ray

y =

ax (with abscissa

b = 0). We assume standard normal errors and let R calculate the value of

σn =

σn(

ξ) and

for various values of

n and of

ξ ∈ (0,3]. Since the explanatory variables are random, we use a million simulations to approximate these deterministic values. We also compute approximations to

(see

Appendix B.5). The plots in

Figure 3 will answer questions such as the following:

How does σ∞ depend on ξ ∈ (0,3]?

How much does the sd σn decrease if we reveal the next n points of the point process N0?

What is the effect of the unknown abscissa on the estimates? For what n will σn equal ?

Figure A2 in

Appendix B.4 shows the plots on a logarithmic scale and similar plots for the empirical sd of the Right Median estimates for Cauchy errors.

3. Three Simple Estimators: LS, LAD and RMP

Least Squares (LS) and Least Absolute Deviation (LAD) are classic estimators which perform well if the tail index η of the error is small (LS), or when the tail index ξ of the explanatory variable is small (LAD). For ξ,η ≥ 1/2, one may use the bisector of the sample passing through the RightMost Point (RMP) as a simple but crude estimate of the regression line.

At the critical value

η = 1/2, the second moment of the error becomes infinite and the least squares estimator breaks down. Samples change gradually when

ξ and

η cross the critical value of 1/2. We investigate the break down of the LS estimator by looking at its behaviour for

ξ =

η =

i/10,

i = 2, …, 7, for errors with a Student distribution. It is shown that the notation in Equation (

6) nicely expresses the decrease in the performance of the estimator on passing the critical exponent. We also show that even for bounded errors there may exist estimators which perform better than Least Squares. The estimator LAD is more robust than Least Squares. Its performance declines for

ξ > 1/2, but even for

ξ = 0 (exponential distribution) or

ξ = −1 (uniform distribution) its good performance is not lasting. The RightMost Point estimate is quite accurate most of the time but may be far off occasionally. That raises the question whether an estimator which is far off 1% of the time is acceptable.

Least squares (LS) is simple to implement and gives good results for

η < 1/2. Given the two data vectors

x and

y, we look for the point

ax +

be in the two-dimensional linear subspace spanned by

x and

e = (1, …, 1) which gives the best approximation to

y. Set

z =

x −

me where

m is the mean value of the coordinates of

x. The vectors

e and

z are orthogonal. Choose

a0 and

b0 such that

y −

a0z ⊥

z and

y −

b0e ⊥

e. Explicitly:

The point we are looking for is

This point minimizes the sum of the squared residuals, where the

residuals are the components of the vector

y − (

ax +

be). Note that

m is the mean of the components of

x and

s2 = 〈

z,

z〉 the sample variance. Conditional on

X =

x, the estimate of the slope is

There is a simple relation between the standard deviation of

Y* and of the estimate

of the slope: (

ζi) is a unit vector; hence conditional on the configuration

x of

XIf the sd of Y* is infinite then so is the sd of the estimator . That by itself does not disqualify the LS estimator. What matters is that the expected loss is infinite.

Let us see what happens to the average loss

Lr when the number

r of simulations is large for distributions with tail index

ξ =

η =

τ as

τ crosses the critical value 0.5 where the second moment of

Y* becomes infinite.

Figure 4 shows the log frequency plots of

for

ξ =

η =

τ(

i) =

i/10 for

i = 2, …, 7 based on ten batches of a hundred thousand simulations. The variable

Y* has a Student

t distribution with 1/

η degrees of freedom and is scaled to have interquartile distance one. The most striking feature is the change in shape. The parabolic form associated with the normal density goes over into a vertex at zero for

τ = 0.5 suggesting a Laplace density, and a cusp for

τ > 0.5. The cusp will turn into a singularity

f(

x) ∼

c/

xτ with

τ = 1 − 1/

η > 0 when

Y* has a Student distribution with tail index

η > 1.

The change that really matters is not visible in

Figure 4. It occurs in the tails.

The distribution of the average loss

Lr depends on the tail behaviour of

. The Student variable

Y* with 1/

η degrees of freedom has tails

. This also holds for

which is a mixture of linear combinations of these variables by Equation (

9). For

η =

i/10,

i = 2, …, 7, the positive variable

has upper tail

. See Theorem 1.

First, consider the behaviour of the average

Zr(

i) of

r = 10

6 independent copies of the variable

Z(

i) = 1/

Ui/5 where

U is standard uniform. The Pareto variable

Z(

i) has tail 1/

z5/i on (1, ∞). Its expectation is 2/3, 3/2, 4, ∞, ∞, ∞ for

i = 2, 3, 4, 5, 6, 7. The average

Zr(

i) has the form

mr(

i) +

sr(

i)

Wr(

i) where one may choose

mr(

i) to be the mean of

Z(

i) if finite, and where

Wr(

i) converges in distribution to a centred normal variable for

i = 2, and for

i > 2 to a skew stable variable with index

α =

i/5 and

β = 1. The asymptotic expression for

Zr is:

i = 2: , , Wr(2) ⇒ c2W2;

i = 3: , sr(3) = r−2/5, Wr(3) ⇒ c3W5/3;

i = 4: , sr(4) = r−1/5, Wr(4) ⇒ c4W5/4;

i = 5: mr(5) = logr, sr(5) ≡ 1, Wr(5) ⇒ c5W1;

i = 6: mr(6) = sr(6) = r1/5, Wr(6) ⇒ c6W5/6; and

i = 7: mr(7) = sr(7) = r2/5, Wr(7) ⇒ c7W5/7.

For an appropriate constant

Ci > 0, the variable

has tails asymptotic to 1/

t5/i, and hence the averages

CiLr exhibit the asymptotic behaviour above. It is the relative size of the deterministic part

mr(

i) of

Lr compared to the size of the fluctuations

sr(

i)

Wr(

i) of the random part which changes as

i/10 passes the critical value 0.5. The quotients

sr(

i)/

mr(

i) do not change much if one replaces

Lr by

Lr, the batch sd. The theoretical results listed above are nicely reflected in the Hill estimate of the tail index, and the loss of precision in the empirical sds in

Table 2.

For individual samples, it may be difficult to decide whether the parameters are

ξ =

η = 4/10 or 6/10. The pairs (4,4)/10 and (6,6)/10 belong to different domains in the classification in

Samorodnitsky et al. (

2007) but that classification is based on the behaviour for

n → ∞. The relation between the estimates

for (4,4)/10 and (6,6)/10 for samples of size

n = 100 becomes apparent on looking at large ensembles of samples for parameter values (

i,

i)/10 when

i varies from two to seven. The slide show was created in an attempt to understand how the change in the parameters affects the behaviour of the LS estimator. The estimate has a distribution which depends on the parameter. The dependence is clearly expressed in the tails of the distribution. The Hill estimates reflect nicely the tail index

η of the error. A recent paper

Mikosch and Vries (

2013) gives similar results for samples with fixed size for LS in linear regression where the coefficient

b in Equation (

1) is random with heavy tails.

The simplicity of the LS estimator makes a detailed analysis of the behaviour of the average loss

Lr possible for

. The critical value is

η = 1/2. The relative size of the fluctuations rather than the absolute size of the sd signal the transition across the critical value. Note that the critical value

η = 1/2 is not due to the “square” in Least Squares but to the exponent 2 in the loss function. There is a simple relation between the tails of the error distribution and of

.

Appendix A.5 shows that

is bounded. Lemma 3.4 in

Mikosch and Vries (

2013) then gives a very precise description of the tail behaviour of

in terms of the tails of

Y*. We formulate this lemma as a Theorem below.

Theorem 1. (Mikosch and de Vries) Let denote the slope of the LS estimate of the regression line in Equation (1) for a sample of size n ≥ 4

when the true regression line is the horizontal axis. Suppose Y* has a continuous df and X a bounded density. Let vary regularly at infinity with exponent −λ < 0

and assume balance: there exists λ ∈ [−1,1]

such thatSet M = (

X1 + ⋯ +

Xn)/

n and Zi =

Xi −

M, . DefineIf λ < [

n/2]

, then Proof. Proposition A6 shows that there exists a constant

A > 1 such that

for

s > 0. Set

μ = ([

n/2] +

λ)/2. Then,

is finite for

. Lemma 3.4 in

Mikosch and Vries (

2013) gives the desired result with

and

. ☐

If one were to define the loss as the absolute value of the difference

rather than the square, the expected loss would be finite for

η < 1. In particular, the partial averages of

for an iid sequence of samples of fixed size

n converge almost surely to the true slope. In this respect, Least Squares is a good estimator for errors with tail index

η < 1. For

η = 1, a classic paper

Smith (

1973) shows that

has a Cauchy distribution if the errors have a Cauchy distribution and the explanatory variable is deterministic.

Least Absolute Deviations (LAD) also known as Least Absolute Value and Least Absolute Error is regarded as a good estimator of the regression line for errors with heavy tails. The LAD estimator has not achieved the popularity of the LS estimator in linear regression. However, LAD has always been seen as a serious alternative to the simpler procedure LS. A century ago, the astronomer Eddington in his book

Eddington (

1914) discussed the problem of measuring the velocity of the planets and wrote: “This [LAD] is probably a preferable procedure, since squaring the velocities exaggerates the effect of a few exceptional velocities; just as in calculating the mean error of a series of observations it is preferable to use the simple mean residual irrespective of sign rather than the mean square residual”.

2 In a footnote he added: “This is contrary to the advice of most textbooks; but it can be shown to be true.” Forty years earlier, Edgeworth had propagated the use of LAD for astronomical observations in a series of papers in the Philosophical Magazine (see

Koenker (

2000)).

The LAD (Least Absolute Deviations) estimate of the regression line minimizes the sum of the absolute deviations rather than the sum of their squares. It was introduced (by Boscovitch) half a century before Gauss introduced Least Squares in 1806. Computationally, it is less tractable, but nowadays there exist fast programs for computing the LAD regression coefficients even if there are a hundred or more explanatory variables.

Dielman (

2005) gives a detailed oversight of the literature on LAD.

The names “least squares” and “least absolute deviations” suggest that one needs finite variance of the variables

Y* for LS and a finite first moment for LAD. That is not the case. Bassett and Koenker in their paper

Bassett and Koenker (

1978) on the asymptotic normality of the LAD estimate for deterministic explanatory variables observed: “The result implies that for any error distribution for which the median is superior to the mean as an estimator of location, the LAE [LAD] estimator is preferable to the least squares estimator in the general linear model, in the sense of having strictly smaller asymptotic confidence ellipsoids.” The median of a variable

X is the value

t which minimizes the expectation of |

X −

t|, but a finite first moment is not necessary for the existence of the median. The median of an odd number of points on a line is the middle point. It does not change if the positions of the points to the left and the right is altered continuously provided the points do not cross the median. Similarly, the LAD-regression line for an even number of points is a bisector which passes through two sample points. The estimate does not change if the vertical coordinatesof the points above and below are altered continuously provided the points do not cross the line. Proofs follow in the next section.

Under appropriate conditions, the distribution of

is asymptotically normal. That is the case if the second moment of

X is finite and the density of

Y* is positive and continuous at the median, see

Van de Geer (

1988). The LAD estimator of the regression line is not very sensitive to the tails of

Y* but it is sensitive to the behaviour of the distribution of

Y* at the median

m0. The sd of the normal approximation is inversely proportional to the density of

Y* at the median. LAD will do better if the density peaks at

m0 and worse if the density vanishes at

m0.

To illustrate this, we consider the case where

X has a standard exponential distribution (

ξ = 0) and

Y* has a density

f* which is concentrated on (−1,1) and symmetric. We consider four situations

,

f* ≡ 1/2,

f*(

y) = |

y| and

f* ≡ 1 on the complement of [−1/2, 1/2].

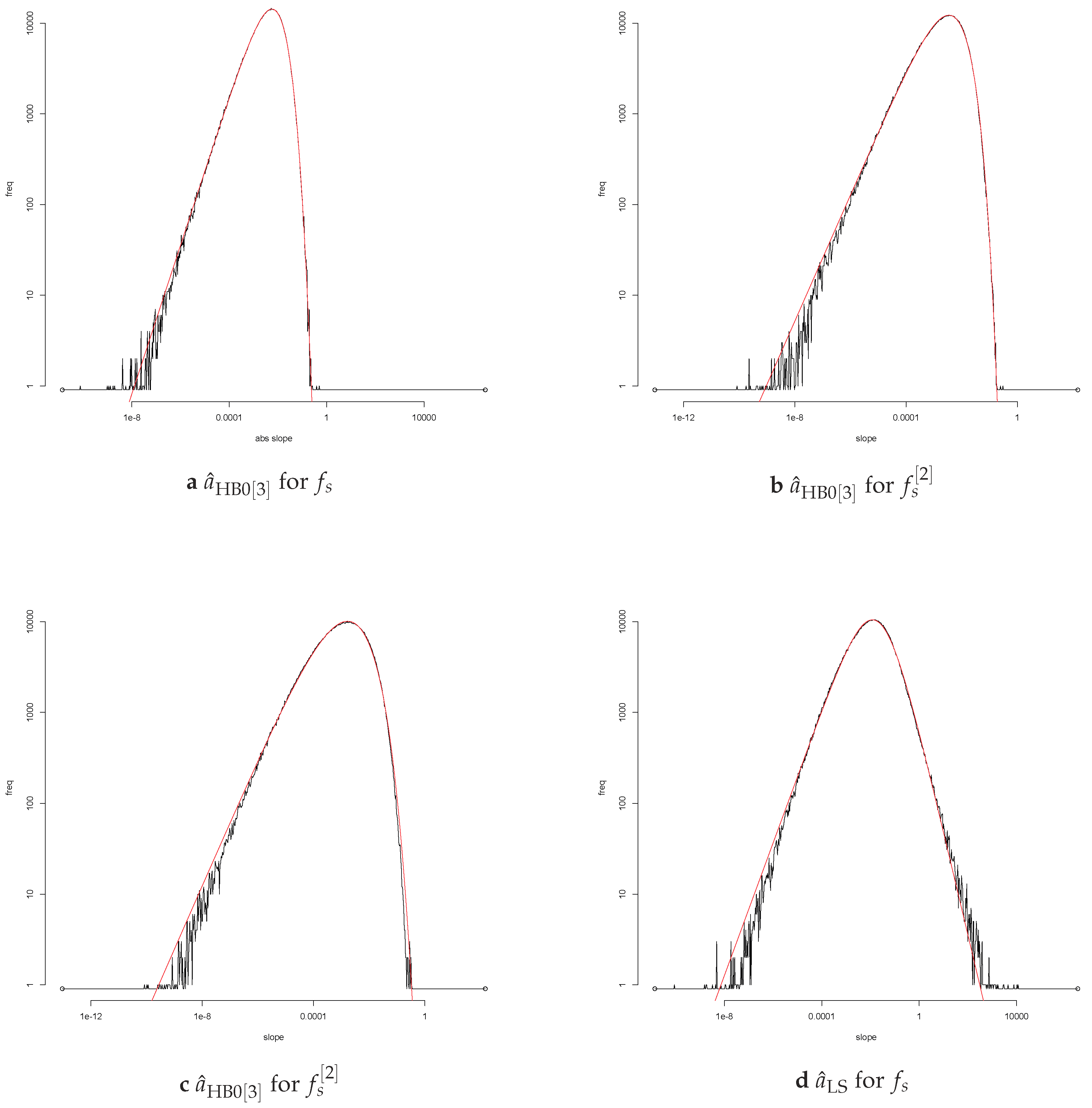

Figure 5 shows the log frequencies of the estimator

and

. Here, LAD40 is a variation on LAD which depends on the behaviour of the distribution of

Y* at the 0.4 and 0.6 quantiles rather than the median. Ten batches of a hundred thousand simulations yield the log frequencies in

Figure 5 and the given empirical sds.

The Gauss–Markov Theorem states that the least squares estimate

has the smallest sd among all estimates

of the slope which are linear combinations of the

yi. It clearly does not apply to LAD or LAD40, see

Table 3. The incidental improvement of the performance by ten or thirty per cent is not sufficient to lure the reader away from LS. Our paper is not about optimal estimators. A glance at the first table in

Section 7 shows that, for heavy tails, there exist estimators whose performance is abominable. The aim of our paper is to show that there also exist estimators which perform well.

Rightmost point (RMP or RM(1)) (similar to LAD, as we show below) is a weighted balance estimator. A balance estimate of the regression line is a bisector which passes through two of the hundred sample points. The regression line for RMP is the bisector which contains the rightmost sample point. The RMP estimate is accurate if X1 is large, except in those cases where is large too. In terms of the quadratic loss function employed in this paper, it is a poor estimator for η ≥ 1/2.

Table 4 lists the empirical sd of the estimate

of the slope for LS, LAD and RMP, based on ten batches of a hundred thousand simulations of a sample of size

n = 100, for various values of the tail indices

ξ and

η. The explanatory variable

X is Pareto with tail 1/

x1/ξ for

ξ > 0 and standard exponential for

ξ = 0; the dependent variable

Y* has a Student distribution with 1/

η degrees of freedom for

η > 0 and is normal for

η = 0. The error is scaled to have InterQuartile Distance IQD = 1.

Rousseeuw in

Rousseeuw (

1984) observed: “Unfortunately, [LAD] is only robust with respect to vertical outliers, but it does not protect against bad leverage points.” This agrees with the deterioration of the LAD-estimate for

η ≥ 1/2 when

ξ increases. The good performance of the LAD estimates for

ξ = 0 and the relatively small fluctuations reflect the robustness which is supported by the extensive literature on this estimator. It does not agree with the theoretical result below:

Theorem 2. In the linear regression in Equation (1), let X have a non-degenerate distribution and let Y*have an upper tail which varies regularly with non-positive exponent. Let the true regression line be the horizontal axis and let denote the slope of the LAD estimate of the regression line for a sample of size n. For each n > 1

, the quotientis a bounded function on . Proof. Let c1 < c2 be points of increase of the df of X. Choose δ1 and δ2 positive such that the intervals I1 = (c1 − δ1, c1 + δ1) and I2 = (c2 − δ2, c2 + δ2) are disjoint, and (c1 − nδ1, c1 + nδ1) and I2 too. Let E denote the event that X1 ∈ I2 and the remaining n − 1 values Xi lie in I1. The LAD regression line L passes through (X1,Y1). (If it does not, the line which passes through (X1,Y1) and intersects L in x = c1 has a smaller sum of absolute deviations: Let δ denote the absolute difference in the slope of these two lines. The gain for X1 is (c2 − δ2 − c1)δ, and exceeds the possible loss (n − 1)δδ1 for the the remaining n − 1 points.) It is known that the LAD estimate of the regression line is a bisector. We may choose the vertical coordinate so that y = 0 is a continuity point of F* and 0 < F*(0) < 1. A translation of Y* does not affect the result. The event E1 ⊂ E that Y1 is positive and more than half the points (Xi,Yi), i > 1, lie below the horizontal axis has probability where p > 0 depends on F*(0) and n. If E1 occurs the regression line L will intersect the vertical line x = c1 − δ1 below the horizontal axis. For Y1 = y > 0 the slope A of L then exceeds y/c where c = (c2 + δ2) − (c1 + δ1). Hence, for t > 0. Regular variation of the upper tail of Y* implies that for t ≥ t0 where λ ≤ 0 is the exponent of regular variation of 1 − F*. This yields the desired result for the quotient Qn. ☐

How should one interpret this result? The expected loss (MSE) is infinite for

η ≥ 1/2. In that respect, LAD is no better than LS. We introduce the notion of “light heavy” tails. Often heavy tails are obvious. If one mixes ten samples of ten observations each from a Cauchy distribution with ten samples of ten observations from a centred normal distribution, scaling each sample by the maximum of the ten absolute values to obtain point sets in the interval [−1,1], one will have no difficulty in selecting the ten samples which derive from the heavy-tailed Cauchy distribution, at least most of the time. In practice, one expects heavy tails to be visible in samples of a hundred points. However, heavy tails by definition describe the df far out. One can alter the density of a standard normal variable

Z to have the form

c/

z2 outside the interval (−12, 12) for an appropriate constant

c. If one takes samples from the variable

with the new density the effect of the heavy tails will be visible, but only in very large samples. For a sample of a trillion independent copies of

, the probability that one of the points lies outside the interval (−12, 12) is less than 0.000 000 000 000 01. Here, one may speak of “light heavy” tails. In the proof above, it is argued that under certain circumstances LAD will yield the same estimate of the regression line as RMP. The slope of the bisector passing through the rightmost point is comparable to

Y1 /

X1 and the upper tail of the df of this quotient is comparable to that of

Y1 . In our set-up a sufficient condition for LAD to agree with RMP is that

X1 > 100

X2. For a tail index

ξ = 3, the probability of this event exceeds 0.2, as shown in

Section 2. If

X has a standard exponential distribution, the probability is less. The event {

X1 > 100

X2} = {

U1 <

U2/

e100} for

Xi = −log(

Ui) has probability

e−100.

Here are two questions raised by the disparity between theory and simulations:

(1) Suppose the error has heavy tails,

η ≥ 1/2. Do there exist estimators

E of the regression line for which the slope

has finite second moment? In

Section 4. it is shown that, for the balance estimators RM(

m) (Right Median) and HB0(

d) (Hyperbolic Balance at the median), one may choose the parameters

m and

d, dependent on the tail indices

ξ and

η, such that the estimate of the slope has finite second moment.

(2) Is it safe to use LAD for

ξ < 1/2? Not really. For

ξ < 1/2, the estimate

is asymptotically normal as the sample size goes to infinity provided the error has a positive continuous density at the median. This does not say anything about the loss for samples of size

n = 100. The empirical sds for

ξ = 0,1/2 and

η = 0, 1/2, 1, 3/2, 2, 3, 4 are listed in

Table 1. For

ξ = 0, the performance of

is good; for

ξ = 1/2 the performance for

η ≥ 1 is bad, for

η ≥ 3 atrocious. The empirical sd varies continuously with the tail indices. Thus, what should one expect for

ξ = 1/4? Ten batches of a hundred thousand simulations yield the second row in

Table 5: For

η ≥ 3, the performance is atrocious. The next sections describe estimators which perform better than LAD, sometimes even for

ξ = 0. We construct an adapted version, LADGC, in which the effect of the large gap between

X1 and

X2 is mitigated by a gap correction (GC).

To obtain a continuous transition for

ξ → 0, one should replace

X = 1/

Uξ with

U uniformly distributed on (0,1) by

X = (

Uξ − 1)/

ξ +

ξ for

ξ ∈ (0,1). In the table above, the entries for

ξ = 1/4 and

ξ = 1/2 then have to be divided by 4 and 2, respectively. The rule of thumb (in Equation (

2)) is then valid for all

ξ ∈ (0,1). Since the situation

ξ ∈ (0,1/2) plays no role in this paper, we stick to the simple formula:

X = 1/

Uξ for

ξ > 0.

The words above might evoke the image of a regime switch in the far tails when LAD is contaminated by the pernicious influence of the RMP estimator due to configurations of the sample where the distribution of the horizontal coordinates exhibits large gaps. This image is supported by the loglog frequency plots. For small values of

η, the plots suggest a smooth concave graph with asymptotic slope on the left (due to a df of

asymptotic to

cx for

x → 0), and a steeper slope on the right suggesting a tail index <1 for the upper tail of

. The two plots for

ξ = 1/4 and

η = 1,4 in

Figure 6 have different shapes. The slope of the right leg of the right plot becomes less steep as one moves to the right. For two simulations,

lies beyond the boundary value 10

6. The maximal absolute value 5.3 × 10

9. This single estimate makes a significant contribution to the average loss for

η = 4. All this suggests that for

η = 4 the tail of the df of

becomes heavier as one moves out further to the right.

4. Weighted Balance Estimators

Recall that a bisector is a line which divides the sample into two equal parts. It may be likened to the median of a one-dimensional sample. For odd sample size, bisectors contain a sample point, for even sample size, a bisector contains two sample points or none. The latter are called

free bisectors. There are many bisectors, even if one restricts attention to bisectors through two points in a sample of size

n = 100. The question is:

For symmetrically distributed errors, balance is a good criterion for selecting a bisector. It is shown below how a decreasing sequence of non-negative weights allows one to define a bisector which is in balance. We give the intuitive background to the idea of a weighted balance estimator, some examples and the basic theory. The focus is on sample size

n = 100. The extension to samples with an even number of observations is obvious. For a detailed exposition of the general theory and complete proofs, the reader is referred to the companion paper

Balkema (

2019).

The intuition behind the weighted balance estimators is simple. Assume the true regression line is the horizontal axis. Consider a sample of size n = 2m and a free bisector L. If the slope of the bisector is negative, the rightmost sample points will tend to lie above L; if the slope is positive, the rightmost points tend to lie below L. Now, introduce a decreasing sequence of weights, w1 ≥ ⋯ ≥ wn ≥ 0. The weight of the m points below the bisector will tend to increase as one increases the slope of the bisector. We prove that the increase in weight is indeed monotone. As the slope of the bisector L increases the weight of the m points below the bisector increases. At a certain moment the weight of the m points below L will surpass half the total weight. That determines the line of balance. This line L is the weighted balance estimate WB0 for the weight sequence wi. For odd sample size, n = 2m + 1, the same argument works. We then consider bisectors which pass through one sample point to determine the WB0 estimate of the regression line for the given sample.

Strips may give more stable estimates. Consider a sample of size n = 100 and strips which contain twenty points such that of the remaining eighty points half lie above the strip and half below. Here, the weight w(B) of the set B of forty points below the strip will also increase if the slope of the strip increases and (by symmetry) the weight w(A) of the forty points above the strip will decrease. By monotonicity, as one increases the slope from −∞ to +∞, there is a moment when w(B) will surpass w(A). The centre line of that strip is the WB40 estimate of the regression line for the given weight sequence.

The monotonicity allows one to determine the slope of the estimates WB0 and WB40 by a series of bisections. The Weighted Balance estimator is fast. It is versatile. Both the RightMost Point estimator and Least Absolute Deviation are weighted balance estimators. RMP for the weight sequence 1,0, …, 0 and LAD for the random weight sequence wi = Xi as we show below.

We now first give some examples. Then, we prove the monotonicity mentioned above. We then show that LAD is the weighted balance estimator for the weight sequence

wi =

Xi.

Appendix A contains an analysis of the tail behaviour of weighted balance estimators.

4.1. Three Examples

In this paper, we use three basic weight sequences. Two are deterministic.

(1) Let

r = 2

r0 = 1 be a positive odd number less than

n = 100. The weight sequence for RM(

r) is (1, …, 1, 0, …, 0) with

r ones. Colour the rightmost

r sample points red and select a bisector

L passing though two sample points, one black and one red. The bisector

L0 is in balance if there are

r0 red points below

L0 and

r0 red points above

L0. This bisector is the Right Median RM(

r) estimate of the regression line. An infinitesimal anti-clock wise rotation of

L0 around the centre midway between the red and black point on

L0 yields a free bisector

L; the fifty points below

L weigh

r0 + 1 >

r/2. The Right Median estimator is a variation on RMP. It takes account of the position of the

r rightmost points and thus avoids the occasional erroneous choice of RMP. If

r = 1, then RM(

r) = RMP. For

r = [

n/2], RM(

r) agrees with the estimator SBLR introduced in

Nagya (

2018).

(2a) The weight sequence 1, 1/2, …, 1/100 gives the rightmost point the largest weight. It is overruled by the next three points: w2 + w3 + w4 > w1. If Y1 is very large, the bisector L through the rightmost point will have a large positive slope and the points z2, …, z9 will tend to lie below L. The weight of the 49 points below L, augmented with the left point on L, will then exceed half the total weight. For balance, the slope has to be decreased.

One can temper the influence of the rightmost point by choosing the weights to be the inverse of 2, …, 101 or 3, …, 102. In general, we define the

hyperbolic weight sequence by

The parameter

d is positive. If it is large, the weights decrease slowly. Suppose the bisector

L contains two points,

zL and

zR. The weight

wL of the left point is less than

wR, the weight of the right point. Let Ω denote the total weight and

w(

B) the weight of the points below

L. If

for the weight sequence in Equation (

12), then

L is the HB0(

d) estimate of the regression line.

(2b) Instead of a bisector, one may consider a strip

S which contains twenty points with forty points above

S and forty below. Assume that

S is closed and of minimal width. The boundary lines of

S each contain one of the twenty points. For certain slopes, one of the boundary lines will contain two points and the strip will contain 21 points. Suppose the upper boundary contains two points,

zL to the left of

zR, and the set above

S contains 39 points. Let

w(

B) be the weight of the forty points below

S and

w(

A) the weight of the 39 points above

S. If

then the centre line of the strip is the Hyperbolic Weight HB40(

d) estimate of the regression line.

(3) We shall see below that LAD is the weighted balance estimator for the random weight wi = Xi. Since the Xi form an ordered sample from a continuous Pareto distribution, one cannot split the hundred sample points into two sets of fifty points with the same weight. It follows that there is a unique bisector L passing through two sample points such that the balance tilts according as one assigns the heavier point on L to the set above or below L.

4.2. The Monotonicity Lemma

In this section, X and Y* are assumed to have continuous dfs. Almost all samples from (X,Y*) then have the following properties:

In particular, no line contains more than two sample points. Configurations which satisfy the two conditions above are called unexceptional. For unexceptional configurations, there is a set of lines which each contain exactly two sample points. The slopes γ of these lines are finite and distinct. They form a set of size .

Definition 3. A weight w is a sequence w1 ≥ ⋯ ≥ wn ≥ 0 with w1 > wn.

Take an unexceptional sample of n points from (X,Y*), a positive integer m < n and a line with slope . As one translates the line upwards the number of sample points below the line increases by steps of one since γ does not lie in Γ, and hence the lines contain at most one sample point. There is an open interval such that for all β in this interval the line y = β + γx contains no sample point and exactly m sample points lie below the line. The total weight of these m sample points is denoted by wm(γ). It depends only on m and γ. As long as the line L moves around without hitting a sample point, the set of m sample points below the line does not change and neither does its weight wm(γ). What happens when γ increases?

Lemma 1. For any positive integer m < n and any weight sequence w, the function γ ↦ wm(γ) is well defined for , increasing, and constant on the components of .

Proof. Consider lines which contain no sample points. Let and let L0 be a line with slope γ0 which contains no sample points such that there are precisely m sample points below L0. Let B denote the closed convex hull of these m sample points, and let A denote the closed convex hull of the n − m sample points above L0. One can move the line around continuously in a neighbourhood of L0 without hitting a sample point. For any such line between the convex sets A and B the weight of the m points below the line equals wm(γ0), the weight of B. If one tries to maximize the slope of this line then in the limit one obtains a line L with slope γ ∈ Γ. This line contains two sample points. The left point is a boundary point of B, the right one a boundary point of A. Consider lines which pass through the point z ∈ L midway between these two sample points. The line with slope γ − dγ < γ lies between the sets A and B and wm(γ − dγ) = wm(γ0). For the line with slope γ + dγ > γ, there are m points below the line but the left point on L has been exchanged for the heavier right point. Hence, wm(γ + dγ) ≥ wm(γ − dγ) with equality holding only if the two points on L have the same weight. ☐

This simple lemma is the crux of the theory of weighted balance estimators. An example will show how it is applied.

Example 1. Consider a sample of n = 100

points and take m = 40

. Let w1 ≥ ⋯ ≥

w100 be a weight sequence. We are looking for a closed strip S in balance: There are forty points below the strip and forty points above the strip, and the weights of these two sets of forty sample points should be in balance. The weight w40(

γ)

of the forty points below the strip depends on the slope γ. It increases as γ increases. The limit values for γ → ±∞

are Similarly, the weight w40(

γ)

of the forty points above the strip decreases from Ω

1 to Ω

0. Both functions are constant on the components of . Weight sequences are not constant. Hence, Ω

0 < Ω

1. It follows from the monotonicity that here are points γ0 ≤

γ1 in Γ such that If γ0 = γ1, there is a unique closed strip S of minimal height with slope γ0 = γ1 and we speak of strict balance. One of the boundary lines of S contains one sample point, the other two. This is the strip of balance. A slight change in the slope will cause one of the two points on the boundary line containing two points to fall outside the strip. Depending on which, the balance will tilt to one side or the other. Define the centre line of S to be the estimate of the regression line for the estimator WB40 for the given weight sequence. If γ0 < γ1 then for i = 0,1 let Li with slope γi be the centre line of the corresponding strip Si. Define the WB40 estimate as the line with slope (γ0 + γ1)/2 passing through the intersection of L0 and L0.

The example shows how to define for any sample size n and any non-constant weight w and any positive integer m < [n/2] for any unexceptional sample configuration a unique line, the WBm estimate of the regression line. Of particular interest is the case m = [n/2]. The strip then is a line and the estimator is denoted by WB0.

Definition 4. For a sample of size n and m < [

n/2]

, the Hyperbolic Balance estimator HB

m(

d)

for d > 0

is the weighted balance estimator as in the example above (where m = 40)

with the weight wi = 1/(

d − 1 +

i)

in Equation (12). If m = [

n/2]

we write HB0(

d).

The WBm estimate of the slope of the regression line for unexceptional sample configurations is determined by the intersection of two graphs of piecewise constant functions, one increasing and the other decreasing, and so is the WB0 estimate. There either is a unique horizontal coordinate where the graphs cross and balance is strict, or the graphs agree on an interval I = (γ0,γ1). In the latter case, for γ ∈ (γ0,γ1) ∖ Γ, there exist closed strips whose boundaries both contain one sample point and which have the property that the m points below the strip and the m above have the same weight. We say that exact balance holds in γ. This yields the following result:

Proposition 1. Let n be the sample size and m ≤ n/2 a positive integer, and let . Let S be a closed strip of minimal height of slope α such that m sample points lie below S and m above. If n is even and m = n/2 then S is a line which contains no sample points, a free bisector; if n = 2m + 1 then S is a line which contains one sample point; if m < [n/2] both boundary lines of S contain one sample point. Let w0 denote the weight of the m sample points below S and w0 the weight of the m points above S. Let denote the slope of the WB estimate of the regression line.

If w0 < w0, then ,

If w0 > w0, then ,

If w0 = w0, exact balance holds at α.

In the case of exact balance, there is a maximal interval J = (γ0,γ1) with γ0,γ1 ∈ Γ such that w0 = w0 for all strips S separating two sets of m sample points with slope γ ∈ J ∖ Γ. Both α and lie in J.

Strict balance holds almost surely for LAD if the conditions of this section, that

X and

Y* have continuous dfs, is satisfied. It also holds for RM0(

r) if the sample size is even and

r odd. We show below that, for sample size

n = 100, it holds for HB0(

d) for all integers

d ≤ 370261 and for HB40(

d) for all integers

d ≤ 1000. In the case of strict balance, we need two values

γ2 <

γ3 such that

w0 <

w0 in

γ2 and

w0 >

w0 in

γ3. A series of bisections then allows us to approximate

at an exponential rate. If strict balance does not hold one needs to do more work. The programs on which the results in the tables in

Section 7 are based determine for WB0 the indices of the two points on the bisector of balance, and for WB40 the indices of the two points on the one boundary and of the one point on the other boundary of the strip of balance. We then use the criteria in Equations (

13) and (

14) to check strict balance.

To show that exact balance does not occur for HB0 or HB40 for a particular value of the parameter d one has to solve a number theoretic puzzle: Can two finite disjoint sets of distinct inverse numbers 1/(d + i) have the same sum? We need R to obtain a solution.

Proposition 2. For sample size n = 100 exact balance is not possible for HB0 with the hyperbolic weight sequences wi = 1/(d − 1 + i) for parameter d ∈ {1, …, 370261} neither for HB40 for d ≤ 1000.

Proof. For HB0, the argument is simple. For d ≤ 370261, the set Jd = {d, …, d + 99} contains a prime power q = pr, which may be chosen such that 2q > d + 99. For exact balance, there exist two disjoint subsets A and B of Jd containing fifty elements each such that the inverses have the same sum. That implies that 1/q = s where s is a signed sum over the remaining 99 elements i with . Write s as an irreducible fraction s = k/m and observe that m is not divisible by q since none of the 99 integers i in the sum is. However, 1/q = k/m implies k = 1 and m = q.

For HB40, one needs more ingenuity to show that exact balance does not occur. One has to show that Jd does not contain two disjoint subsets A and B of forty elements each such that the sums over the inverse elements of A and of B are equal. We show that there exist at least 21 elements in Jd which cannot belong to A ∪ B. Thus, for d = 1, the elements 23i, i = 1,2,3,4, cannot belong to A ∪ B since, for any non-empty subset of these four elements, the sum of the signed inverses is an irreducible fraction whose denominator is divisible by 23. This has to be checked! It does not hold in general. The sum of 1/i over all i ≤ 100 which are divisible by 25 is 1/12. The sum 1/19 + 1/38 + 1/57 − 1/76 equals (12 + 6 + 4 − 3)/12/19 = 1/12. Primes and prime powers in the denominators may disappear. One can ask R to check whether this happens. For d = 1, the set Jd contains 32 elements (23, 29, 31, 37, 41, 43, 46, 47, 49, 53, 58, 59, 61, 62, 64, 67, 69, 71, 73, 74, 79, 81, 82, 83, 86, 87, 89, 92, 93, 94, 97, and 98) which cannot lie in A ∪ B. For d = 2, …, 1000, the number varies but is never less than 32. ☐

Different weight sequence may yield the same estimates.

Proposition 3. The weight sequences (wi) and (cwi + d) for c > 0 yield the same WB estimates.

Proof. The inequalities which define balance remain valid since there are m sample points on either side. ☐

The weight sequence (6, 1, …, 1) yields the RMP estimator, but so does any weight which is close to this weight, for instance (6, w2, …, w100) with 1 < w100 ≤ w2 ≤ 1.1. A weight sequence for which w1 is large and the remaining weights cluster together will perform poorly if the error has heavy tails.

Proposition 4. Let WB0

be the Weighted Balance estimator of the regression line for the weight sequence (

wi)

, i = 1, …,

n = 2

m. Ifthen WB0 = RMP.

Proof. Suppose z1 does not lie on the line L of balance. Then, the weight of z1 together with the m − 2 points on the same side of L and the lighter point on L is not greater than half the total weight. ☐

4.3. LAD as a Weighted Balance Estimator

We show that LAD = WB0 with weight

wi =

Xi for even sample size. The proof for odd sample size is similar (see

Balkema (

2019)).

Theorem 3. LAD = WB0 with weight wi = Xi for sample size n = 2m.